MIT Study Reveals Potential Cognitive Impacts of AI Assistance in Writing Tasks

2 Sources

[1]

This is your brain on ChatGPT

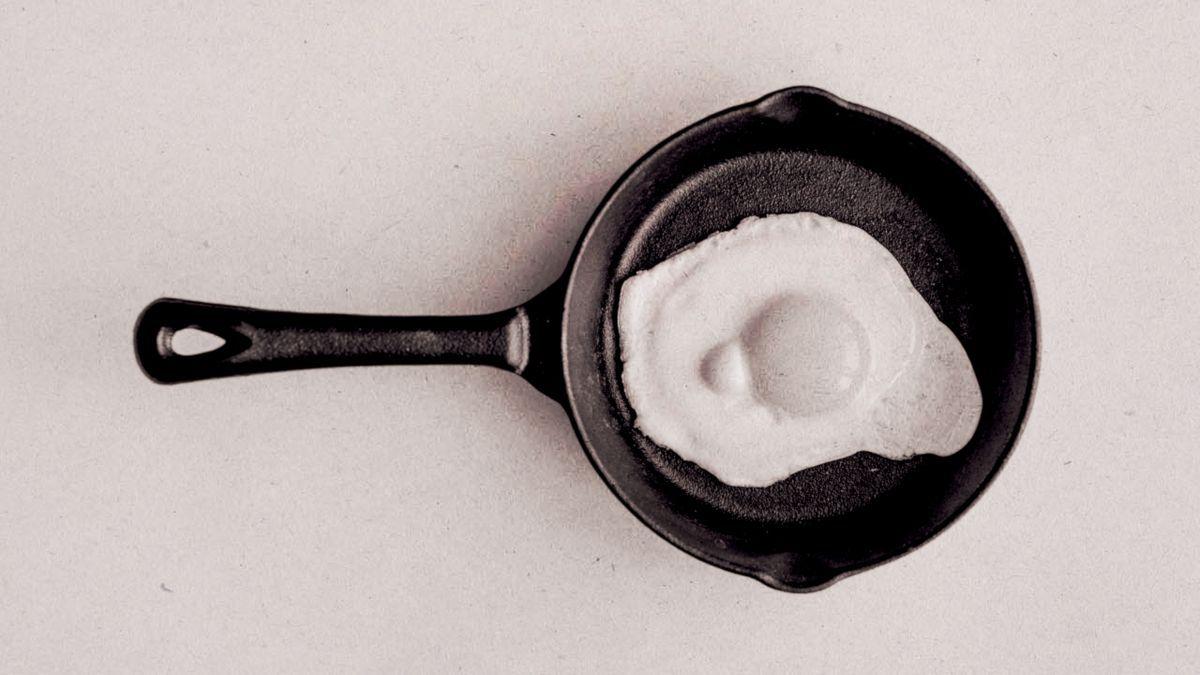

Sizzle. Sizzle. That's the sound of your neurons frying over the heat of a thousand GPUs as your generative AI tool of choice cheerfully churns through your workload. As it turns out, offloading all of that cognitive effort to a robot as you look on in luxury is turning your brain into a couch potato. That's what a recently published (and yet to be peer-reviewed) paper from some of MIT's brightest minds suggests, anyway. The study examines the "neural and behavioral consequences" of using LLMs (Large Language Models) like ChatGPT for, in this instance, essay writing. The findings raise serious questions about how long-term use of AI might affect learning, thinking, and memory. More worryingly, we recently witnessed it play out in real life.

The study, titled "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task," involved 54 participants split into three groups: an LLM group, a Search engine group, and a Brain-only group. Across three sessions, these groups were tasked with writing an essay about one of three changing topics. An example of the essay question for the topic of "Art" was: "Do works of art have the power to change people's lives?" Participants then had 20 minutes to answer the question related to their chosen topic in essay form, all while wearing an Enobio headset to collect EEG signals from their brain. In a fourth session, the LLM and Brain-only groups were swapped to measure any potential lasting impact of prior sessions.

The results? Across the first three tests, Brain-only writers had the most active, widespread brain engagement during the task, while LLM-assisted writers showed the lowest levels of brain activity across the board (although they routinely completed the task fastest). Search engine-assisted users generally fell somewhere in between the two.

In short, Brain-only writers were actively engaging with the assignment, producing more creative and unique writing while actually learning. They were able to quote their essays afterwards and felt strong ownership of their work. By contrast, LLM users engaged less over each session, began to uncritically rely on ChatGPT more as the study went on, and felt less ownership of the results. Their work was judged to be less unique, and participants often failed to accurately quote from their own work, suggesting reduced long-term memory formation. Researchers referred to this phenomenon as "metacognitive laziness" -- not just a great name for a Prog-Rock band, but also a perfect label for the hazy distance between autopilot and Copilot, where participants disengage and let the AI do the thinking for them.

But it was the fourth session that yielded the most worrying results. According to the study, when the LLM and Brain-only groups traded places, the group that previously relied on AI failed to bounce back to pre-LLM levels tested before the study. To put it simply, sustained use of AI tools like ChatGPT to "help" with tasks that require critical thinking, creativity, and cognitive engagement may erode our natural ability to access those processes in the future.

But we didn't need a 206-page study to tell us that. On June 10, an outage lasting over 10 hours saw ChatGPT users cut off from their AI assistant, and it provoked a disturbing trend of people openly admitting, sans any hint of awareness, that without access to OpenAI's chatbot, they'd suddenly forgotten how to work, write, or function. This study may have used EEG caps and grading algorithms to prove it, but most of us may already be living its findings.

When faced with an easy or hard path, many of us would assume that only a particularly smooth-brained individual would willingly take the more difficult, obtuse route. However, as this study claims, the so-called easy path may be quietly sanding down our frontal lobes in a lasting manner -- at least when it comes to our use of AI. That's especially frightening when you think of students, who are adopting these tools en masse, with OpenAI itself pushing for wider embrace of ChatGPT in education as part of its mission to build "an AI-Ready Workforce." A 2023 study conducted by Intelligent.com revealed that a third of U.S. college students surveyed used ChatGPT for schoolwork during the 2022/23 academic year. In 2024, a survey from the Digital Education Council claimed that 86% of students across 16 countries use artificial intelligence in their studies to some degree.

AI's big sell is productivity, the promise that we can get more done, faster. And yes, MIT researchers have previously concluded that AI tools can boost worker productivity by up to 15%, but the long-term impact suggests codependency over competency. And that sounds a lot like regression.

[2]

MIT researchers say using ChatGPT can rot your brain, truth is little more complicated - The Economic Times

Since ChatGPT appeared almost three years ago, the impact of artificial intelligence (AI) technologies on learning has been widely debated. Are they handy tools for personalised education, or gateways to academic dishonesty? Most importantly, there has been concern that using AI will lead to a widespread "dumbing down", or decline in the ability to think critically. If students use AI tools too early, the argument goes, they may not develop basic skills for critical thinking and problem-solving.

Is that really the case? According to a recent study by scientists from MIT, it appears so. Using ChatGPT to help write essays, the researchers say, can lead to "cognitive debt" and a "likely decrease in learning skills". So what did the study find?

The difference between using AI and the brain alone

Over the course of four months, the MIT team asked 54 adults to write a series of three essays using either AI (ChatGPT), a search engine, or their own brains ("brain-only" group). The team measured cognitive engagement by examining electrical activity in the brain and through linguistic analysis of the essays. The cognitive engagement of those who used AI was significantly lower than that of the other two groups. This group also had a harder time recalling quotes from their essays and felt a lower sense of ownership over them.

Interestingly, participants switched roles for a final, fourth essay (the brain-only group used AI and vice versa). The AI-to-brain group performed worse and had engagement that was only slightly better than the other group's during their first session, far below the engagement of the brain-only group in their third session. The authors claim this demonstrates how prolonged use of AI led to participants accumulating "cognitive debt". When they finally had the opportunity to use their brains, they were unable to replicate the engagement or perform as well as the other two groups.

Cautiously, the authors note that only 18 participants (six per condition) completed the fourth, final session. Therefore, the findings are preliminary and require further testing.

Does this really show AI makes us stupider?

These results do not necessarily mean that students who used AI accumulated "cognitive debt". In our view, the findings are due to the particular design of the study. The change in neural connectivity of the brain-only group over the first three sessions was likely the result of becoming more familiar with the study task, a phenomenon known as the familiarisation effect. As study participants repeat the task, they become more familiar and efficient, and their cognitive strategy adapts accordingly.

When the AI group finally got to "use their brains", they were only doing the task once. As a result, they were unable to match the other group's experience. They achieved only slightly better engagement than the brain-only group during the first session. To fully justify the researchers' claims, the AI-to-brain participants would also need to complete three writing sessions without AI.

Similarly, the fact the brain-to-AI group used ChatGPT more productively and strategically is likely due to the nature of the fourth writing task, which required writing an essay on one of the previous three topics. As writing without AI required more substantial engagement, they had a far better recall of what they had written in the past. Hence, they primarily used AI to search for new information and refine what they had previously written.

What are the implications of AI in assessment?

To understand the current situation with AI, we can look back to what happened when calculators first became available. Back in the 1970s, their impact was regulated by making exams much harder. Instead of doing calculations by hand, students were expected to use calculators and spend their cognitive efforts on more complex tasks. Effectively, the bar was significantly raised, which made students work as hard as (if not harder than) before calculators were available.

The challenge with AI is that, for the most part, educators have not raised the bar in a way that makes AI a necessary part of the process. Educators still require students to complete the same tasks and expect the same standard of work as they did five years ago. In such situations, AI can indeed be detrimental. Students can for the most part offload critical engagement with learning to AI, which results in "metacognitive laziness".

However, just like calculators, AI can and should help us accomplish tasks that were previously impossible - and still require significant engagement. For example, we might ask teaching students to use AI to produce a detailed lesson plan, which will then be evaluated for quality and pedagogical soundness in an oral examination.

In the MIT study, participants who used AI were producing the "same old" essays. They adjusted their engagement to deliver the standard of work expected of them. The same would happen if students were asked to perform complex calculations with or without a calculator. The group doing calculations by hand would sweat, while those with calculators would barely blink an eye.

Learning how to use AI

Current and future generations need to be able to think critically and creatively and solve problems. However, AI is changing what these things mean. Producing essays with pen and paper is no longer a demonstration of critical thinking ability, just as doing long division is no longer a demonstration of numeracy. Knowing when, where and how to use AI is the key to long-term success and skill development. Prioritising which tasks can be offloaded to an AI to reduce cognitive debt is just as important as understanding which tasks require genuine creativity and critical thinking.

A recent MIT study suggests that prolonged use of AI tools like ChatGPT for writing tasks may lead to reduced brain activity and cognitive engagement, raising concerns about long-term impacts on learning and critical thinking skills.

MIT Study Reveals Potential Cognitive Impacts of AI Assistance

A recent study conducted by researchers at the Massachusetts Institute of Technology (MIT) has shed light on the potential cognitive consequences of using AI tools like ChatGPT for writing tasks. The study, titled "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task," examined the neural and behavioral effects of using Large Language Models (LLMs) for essay writing [1].

Study Methodology and Findings

The research involved 54 participants divided into three groups: those using only their brains, those using search engines, and those using ChatGPT. Participants were tasked with writing essays on various topics while wearing an Enobio headset to collect EEG signals from their brains [1]. Key findings from the study include:

- Brain-only writers showed the highest levels of brain activity and engagement.

- LLM-assisted writers exhibited the lowest levels of brain activity across all sessions.

- Search engine users generally fell between the two extremes in terms of brain engagement.

The study revealed that LLM users tended to disengage more over time, relying increasingly on ChatGPT and feeling less ownership of their work. This phenomenon was termed "metacognitive laziness" by the researchers [1].

Long-term Implications and Concerns

One of the most concerning findings was observed in the fourth session when the LLM and brain-only groups switched roles. The group that previously relied on AI failed to return to pre-LLM levels of cognitive engagement, suggesting that sustained use of AI tools may erode natural abilities to access critical thinking and creative processes [1]. This raises significant concerns about the long-term impact of AI use, especially in educational settings. A 2023 study by Intelligent.com revealed that a third of U.S. college students used ChatGPT for schoolwork during the 2022/23 academic year, while a 2024 survey from the Digital Education Council claimed that 86% of students across 16 countries use AI in their studies to some degree [1].

Debate and Alternative Perspectives

However, some experts argue that the study's findings may be influenced by its design rather than conclusively demonstrating that AI use leads to cognitive decline. The familiarization effect, where participants become more efficient at a task through repetition, could explain some of the observed changes in neural connectivity [2]. Critics suggest that to fully justify the researchers' claims, the AI-to-brain participants would need to complete three writing sessions without AI, mirroring the experience of the brain-only group.

The Future of AI in Education and Assessment

The integration of AI in education draws parallels to the introduction of calculators in the 1970s. Just as calculators led to more complex mathematical tasks in exams, AI could potentially raise the bar for cognitive engagement if properly implemented.

Experts argue that the key to long-term success and skill development lies in knowing when, where, and how to use AI effectively. This includes understanding which tasks can be offloaded to AI and which require genuine creativity and critical thinking.

As AI continues to reshape the landscape of education and cognitive tasks, the challenge for educators and policymakers will be to adapt assessment methods and learning objectives to ensure that students develop the necessary skills for an AI-augmented future.

Summarized by Navi
