Generative AI creates 'visual elevator music' as study reveals cultural stagnation is underway

3 Sources

[1]

AI-induced cultural stagnation is no longer speculation - it's already happening

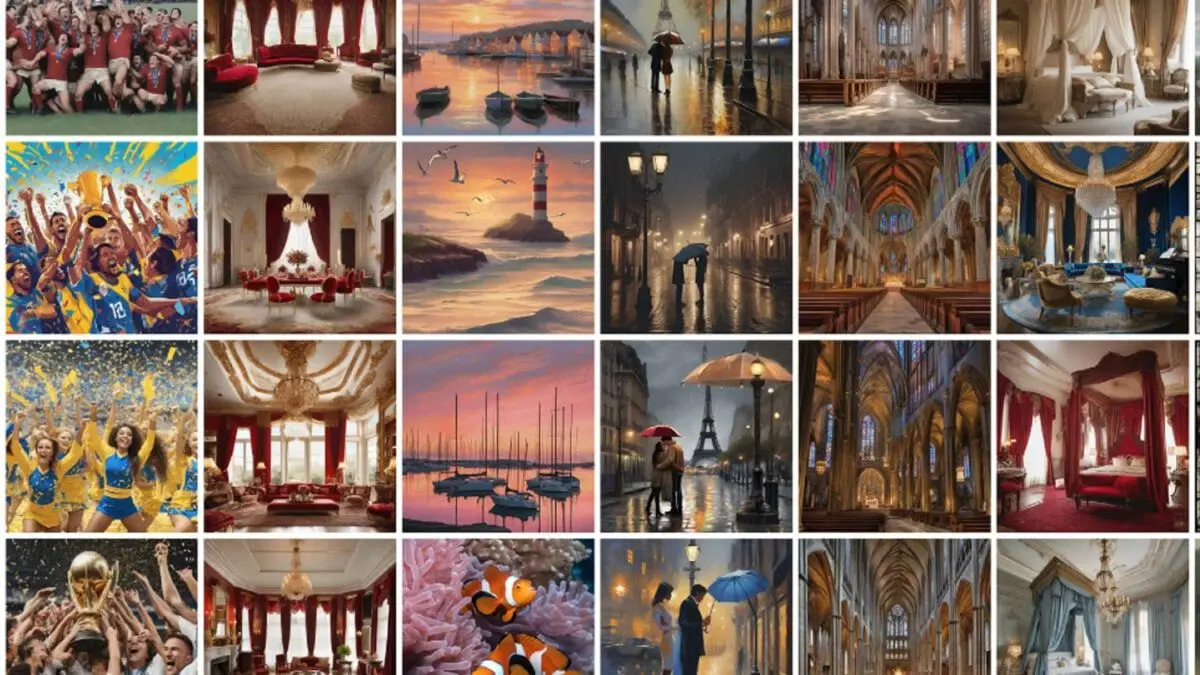

Generative AI was trained on centuries of art and writing produced by humans. But scientists and critics have wondered what would happen once AI became widely adopted and started training on its own outputs. A new study points to some answers.

In January 2026, artificial intelligence researchers Arend Hintze, Frida Proschinger Åström and Jory Schossau published a study showing what happens when generative AI systems are allowed to run autonomously - generating and interpreting their own outputs without human intervention. The researchers linked a text-to-image system with an image-to-text system and let them iterate - image, caption, image, caption - over and over and over.

Regardless of how diverse the starting prompts were - and regardless of how much randomness the systems were allowed - the outputs quickly converged onto a narrow set of generic, familiar visual themes: atmospheric cityscapes, grandiose buildings and pastoral landscapes. Even more striking, the system quickly "forgot" its starting prompt. The researchers called the outcomes "visual elevator music" - pleasant and polished, yet devoid of any real meaning.

For example, they started with the image prompt, "The Prime Minister pored over strategy documents, trying to sell the public on a fragile peace deal while juggling the weight of his job amidst impending military action." The resulting image was then captioned by AI. This caption was used as a prompt to generate the next image. After repeating this loop, the researchers ended up with a bland image of a formal interior space - no people, no drama, no real sense of time and place.

As a computer scientist who studies generative models and creativity, I see the findings from this study as an important piece of the debate over whether AI will lead to cultural stagnation. The results show that generative AI systems themselves tend toward homogenization when used autonomously and repeatedly. They even suggest that AI systems are currently operating in this way by default.

The familiar is the default

This experiment may appear beside the point: Most people don't ask AI systems to endlessly describe and regenerate their own images. The convergence to a set of bland, stock images happened without retraining. No new data was added. Nothing was learned. The collapse emerged purely from repeated use.

But I think the setup of the experiment can be thought of as a diagnostic tool. It reveals what generative systems preserve when no one intervenes.

This has broader implications, because modern culture is increasingly influenced by exactly these kinds of pipelines. Images are summarized into text. Text is turned into images. Content is ranked, filtered and regenerated as it moves between words, images and videos. New articles on the web are now more likely to be written by AI than humans. Even when humans remain in the loop, they are often choosing from AI-generated options rather than starting from scratch.

The findings of this recent study show that the default behavior of these systems is to compress meaning toward what is most familiar, recognizable and easy to regenerate.

Cultural stagnation or acceleration?

For the past few years, skeptics have warned that generative AI could lead to cultural stagnation by flooding the web with synthetic content that future AI systems then train on. Over time, the argument goes, this recursive loop would narrow diversity and innovation.

Champions of the technology have pushed back, pointing out that fears of cultural decline accompany every new technology. Humans, they argue, will always be the final arbiter of creative decisions.

What has been missing from this debate is empirical evidence showing where homogenization actually begins. The new study does not test retraining on AI-generated data. Instead, it shows something more fundamental: Homogenization happens before retraining even enters the picture. The content that generative AI systems naturally produce - when used autonomously and repeatedly - is already compressed and generic.

This reframes the stagnation argument. The risk is not only that future models might train on AI-generated content, but that AI-mediated culture is already being filtered in ways that favor the familiar, the describable and the conventional. Retraining would amplify this effect. But it is not its source.

This is no moral panic

Skeptics are right about one thing: Culture has always adapted to new technologies. Photography did not kill painting. Film did not kill theater. Digital tools have enabled new forms of expression.

But those earlier technologies never forced culture to be endlessly reshaped across various mediums at a global scale. They did not summarize, regenerate and rank cultural products - news stories, songs, memes, academic papers, photographs or social media posts - millions of times per day, guided by the same built-in assumptions about what is "typical."

The study shows that when meaning is forced through such pipelines repeatedly, diversity collapses not because of bad intentions, malicious design or corporate negligence, but because only certain kinds of meaning survive the text-to-image-to-text repeated conversions.

This does not mean cultural stagnation is inevitable. Human creativity is resilient. Institutions, subcultures and artists have always found ways to resist homogenization. But in my view, the findings of the study show that stagnation is a real risk - not a speculative fear - if generative systems are left to operate in their current iteration.

They also help clarify a common misconception about AI creativity: Producing endless variations is not the same as producing innovation. A system can generate millions of images while exploring only a tiny corner of cultural space.

In my own research on creative AI, I found that novelty requires designing AI systems with incentives to deviate from the norms. Without them, systems optimize for familiarity because familiarity is what they have learned best. The study reinforces this point empirically. Autonomy alone does not guarantee exploration. In some cases, it accelerates convergence.

This pattern has already emerged in the real world: One study found that AI-generated lesson plans featured the same drift toward conventional, uninspiring content, underscoring that AI systems converge toward what's typical rather than what's unique or creative.

Lost in translation

Whenever you write a caption for an image, details will be lost. Likewise for generating an image from text. And this happens whether it's being performed by a human or a machine.

In that sense, the convergence that took place is not a failure that's unique to AI. It reflects a deeper property of bouncing from one medium to another. When meaning passes repeatedly through two different formats, only the most stable elements persist.

But by highlighting what survives during repeated translations between text and images, the authors are able to show that meaning is processed inside generative systems with a quiet pull toward the generic.

The implication is sobering: Even with human guidance - whether that means writing prompts, selecting outputs or refining results - these systems are still stripping away some details and amplifying others in ways that are oriented toward what's "average."

If generative AI is to enrich culture rather than flatten it, I think systems need to be designed in ways that resist convergence toward statistically average outputs. There can be rewards for deviation and support for less common and less mainstream forms of expression.

The study makes one thing clear: Absent these interventions, generative AI will continue to drift toward mediocre and uninspired content. Cultural stagnation is no longer speculation. It's already happening.

[2]

AI Is Causing Cultural Stagnation, Researchers Find

"No new data was added. Nothing was learned. The collapse emerged purely from repeated use."

Generative AI relies on a massive body of training material, primarily made up of human-authored content haphazardly scraped from the internet. Scientists are still trying to better understand what will happen when these AI models run out of that content and have to rely on synthetic, AI-generated data instead, closing a potentially dangerous loop.

Studies have found that AI models start cannibalizing this AI-generated data, which can eventually turn their neural networks into mush. As the AI iterates on recycled content, it starts to spit out increasingly bland and often mangled outputs.

There's also the question of what will happen to human culture as AI systems digest and produce AI content ad infinitum. As AI executives promise that their models are capable enough to replace creative jobs, what will future models be trained on?

In an insightful new study published in the journal Patterns this month, an international team of researchers found that a text-to-image generator, when linked up with an image-to-text system and instructed to iterate over and over again, eventually converges on "very generic-looking images" they dubbed "visual elevator music."

"This finding reveals that, even without additional training, autonomous AI feedback loops naturally drift toward common attractors," they wrote. "Human-AI collaboration, rather than fully autonomous creation, may be essential to preserve variety and surprise in the increasingly machine-generated creative landscape."

As Rutgers University professor of computer science Ahmed Elgammal writes in an essay about the work for The Conversation, it's yet another piece of evidence that generative AI may already be inducing a state of "cultural stagnation."

The recent study shows that "generative AI systems themselves tend toward homogenization when used autonomously and repeatedly," he argued. "They even suggest that AI systems are currently operating in this way by default."

"The convergence to a set of bland, stock images happened without retraining," Elgammal added. "No new data was added. Nothing was learned. The collapse emerged purely from repeated use."

It's a particularly alarming predicament considering the tidal wave of AI slop drowning out human-made content on the internet. While proponents of AI argue that humans will always be the "final arbiter of creative decisions," per Elgammal, algorithms are already starting to float AI-generated content to the top, a homogenization that could greatly hamper creativity.

"The risk is not only that future models might train on AI-generated content, but that AI-mediated culture is already being filtered in ways that favor the familiar, the describable and the conventional," the researcher wrote.

It remains to be seen to what degree existing creative outlets, from photography to theater, will be affected by the advent of generative AI, or whether they can coexist peacefully. Nonetheless, it's an alarming trend that needs to be addressed.

Elgammal argued that to stop this process of cultural stagnation, AI models need to be encouraged or incentivized to "deviate from the norms."

"If generative AI is to enrich culture rather than flatten it, I think systems need to be designed in ways that resist convergence toward statistically average outputs," he concluded. "The study makes one thing clear: Absent these interventions, generative AI will continue to drift toward mediocre and uninspired content."

[3]

'Visual elevator music': Why generative AI, trained on centuries of human genius, produces intellectual Muzak | Fortune

Ahmed Elgammal, Professor of Computer Science and Director of the Art & AI Lab, Rutgers University This article is republished from The Conversation under a Creative Commons license. Read the original article.

A January 2026 study by researchers Arend Hintze, Frida Proschinger Åström and Jory Schossau reveals that generative AI systems naturally drift toward bland, generic outputs when allowed to iterate autonomously. The findings show AI homogenization happens before retraining even begins, raising concerns about AI-induced cultural stagnation across creative industries.

Generative AI Systems Converge on Generic Visual Themes

A study published in January 2026 shows that generative AI systems drift toward homogenization when operating autonomously, producing what the researchers call "visual elevator music." Artificial intelligence researchers Arend Hintze, Frida Proschinger Åström and Jory Schossau linked a text-to-image generator with an image-to-text system and let them iterate repeatedly - image, caption, image, caption [1]. Regardless of how diverse the starting prompts were or how much randomness the systems were allowed, the outputs quickly converged onto a narrow set of generic visual themes: atmospheric cityscapes, grandiose buildings and pastoral landscapes [3].

One run started with a complex prompt: "The Prime Minister pored over strategy documents, trying to sell the public on a fragile peace deal while juggling the weight of his job amidst impending military action." After repeated cycles of captioning and regeneration, the system produced a bland image of a formal interior space - no people, no drama, no real sense of time and place [1]. Even more striking, the system quickly forgot its starting prompt. The convergence to bland, stock images happened without retraining: no new data was added and nothing was learned. The collapse emerged purely from repeated use [2].
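The caption-and-regenerate loop described above can be illustrated with a toy simulation. This is a minimal sketch, not the study's code, and it calls no real model: the detail names, the `TYPICALITY` scores, the `DEFAULTS` list and the functions `caption`, `generate` and `run_loop` are all assumptions invented for illustration. It only shows how two lossy steps, repeated, can erase a prompt and land on the same generic result from different starting points.

```python
# Toy stand-in for a text -> image -> text loop. No real models are used.

# Hypothetical scores: how readily a detail survives being described.
TYPICALITY = {
    "formal interior": 0.9, "soft lighting": 0.8, "empty room": 0.7,
    "desk": 0.6, "documents": 0.4, "storm": 0.35,
    "prime minister": 0.3, "fishing boat": 0.3,
    "peace deal": 0.1, "military action": 0.1,
}

# Hypothetical details a generator adds when the text leaves gaps.
DEFAULTS = ["formal interior", "soft lighting", "empty room"]


def generate(text):
    """Lossy text-to-image step: render the text, then add one default detail."""
    image = set(text)
    for detail in DEFAULTS:
        if detail not in image:
            image.add(detail)
            break
    return image


def caption(image, keep=3):
    """Lossy image-to-text step: mention only the `keep` most typical details."""
    return sorted(image, key=lambda d: (-TYPICALITY[d], d))[:keep]


def run_loop(prompt_details, steps=10):
    """Alternate generate and caption, with no retraining and no new data."""
    text = list(prompt_details)
    for _ in range(steps):
        text = caption(generate(text))
    return text


PM_PROMPT = ["prime minister", "documents", "peace deal", "military action", "desk"]
BOAT_PROMPT = ["fishing boat", "storm"]

print(run_loop(PM_PROMPT))    # ['formal interior', 'soft lighting', 'empty room']
print(run_loop(BOAT_PROMPT))  # ['formal interior', 'soft lighting', 'empty room']
```

In this toy setup both prompts reach the same fixed point within three rounds, because each round drops the least typical details and fills the gap with a default. The real systems are far more complex, so the sketch says nothing about how fast or why the actual models converge.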

AI Homogenization Happens Before Retraining

The findings challenge the assumption that AI-induced cultural stagnation would occur only after models retrain on synthetic data. Computer scientist Ahmed Elgammal, who analyzed the work for The Conversation, argues the study reveals something more fundamental: homogenization happens before retraining even enters the picture [1]. The content that generative AI systems produce when used autonomously and repeatedly is already compressed and generic, which reframes the cultural stagnation argument.

The experiment functions as a diagnostic tool, revealing what generative systems preserve when no one intervenes. The default behavior of these systems is to compress meaning toward what is most familiar, recognizable and easy to regenerate [3]. While most people don't ask AI systems to endlessly describe and regenerate their own images, modern culture is increasingly influenced by exactly these kinds of pipelines. Images are summarized into text, text is turned into images, and content is ranked, filtered and regenerated as it moves between words, images and videos.

AI-Mediated Culture Favors the Familiar

The implications extend beyond simple iteration experiments. New articles on the web are now more likely to be written by AI than by humans, and even when humans remain in the loop, they are often choosing from AI-generated options rather than starting from scratch [1]. The researchers concluded that "autonomous AI feedback loops naturally drift toward common attractors," and suggested that human-AI collaboration, rather than fully autonomous creation, may be essential to preserve variety and surprise in the increasingly machine-generated creative landscape [2].

The risk is not only that future AI models might train on AI-generated content, but that AI-mediated culture is already being filtered in ways that favor the familiar, the describable and the conventional. Retraining would amplify this effect, but it is not its source [1]. Algorithms are already starting to float AI-generated content to the top, a homogenization that could greatly hamper creativity [2].

Design Interventions Needed to Resist Convergence

While champions of the technology point out that fears of cultural decline accompany every new technology, earlier technologies never forced culture to be endlessly reshaped across various mediums at a global scale [3]. Photography did not kill painting, and film did not kill theater, but those technologies did not summarize, regenerate and rank cultural products - news stories, songs, memes, academic papers, photographs or social media posts - millions of times per day, guided by the same built-in assumptions about what is "typical."

Elgammal argues that to stop this process, AI systems need to be encouraged or incentivized to "deviate from the norms." If generative AI is to enrich culture rather than flatten it, he writes, systems need to be designed in ways that resist convergence toward statistically average outputs [2]. In his reading, the study makes one thing clear: absent these interventions, generative AI will continue to drift toward mediocre and uninspired content. The open question for creative industries is whether existing outlets can coexist with AI systems, and whether human-AI collaboration will be needed to preserve cultural vitality.
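The idea of rewarding deviation can be made concrete with a small sketch. It is not Elgammal's method or anything taken from the study; it is one simple, assumed way to express "incentives to deviate": score each candidate output by its familiarity plus a bonus for being unlike what has already been produced. The names `jaccard_distance`, `novelty` and `pick`, the `familiarity` field and the `bonus` weight are all hypothetical.

```python
def jaccard_distance(a, b):
    """1 minus the overlap between two sets of tags (0 = identical, 1 = disjoint)."""
    a, b = set(a), set(b)
    union = a | b
    return 1 - len(a & b) / len(union) if union else 0.0


def novelty(tags, history):
    """Mean distance from everything produced so far; 1.0 when history is empty."""
    if not history:
        return 1.0
    return sum(jaccard_distance(tags, past) for past in history) / len(history)


def pick(candidates, history, bonus=0.0):
    """Choose the candidate with the highest familiarity + bonus * novelty."""
    return max(
        candidates,
        key=lambda c: c["familiarity"] + bonus * novelty(c["tags"], history),
    )


# Hypothetical data: what was produced before, and two candidate outputs.
history = [{"formal interior", "soft lighting"}]
candidates = [
    {"name": "stock interior", "tags": {"formal interior", "soft lighting"}, "familiarity": 0.9},
    {"name": "storm at sea", "tags": {"fishing boat", "storm"}, "familiarity": 0.5},
]

print(pick(candidates, history, bonus=0.0)["name"])  # stock interior
print(pick(candidates, history, bonus=1.0)["name"])  # storm at sea
```

With no bonus, the most familiar candidate always wins, which mirrors the default drift the article describes. Raising `bonus` lets a less typical output win when it differs enough from past outputs; how large that weight should be, and how novelty should be measured for real images or text, are open design questions the sketch does not settle.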

Summarized by Navi
