North Korean Hackers Exploit ChatGPT to Create Deepfake Military ID for Phishing Attack

9 Sources

[1]

This North Korean Phishing Attack Used ChatGPT's Image Generation

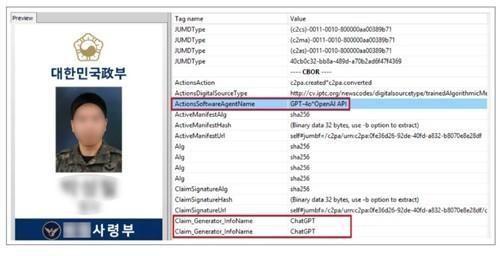

A North Korean hacking group appears to have used the technology behind OpenAI's ChatGPT to help develop a phishing attack. The scheme was traced to the Kimsuky group, which has been known to engage in cyber espionage for the North Korean government. On Monday, security vendor Genians published a report about phishing emails that impersonated "a South Korean defense-related institution" charged with issuing IDs for military-affiliated officials. Sent in July, the emails used a domain meant to "mimic the official domain of a South Korean military institution" and included a .zip file as an attachment, Genians said. The .zip file contained the recipient's real name, partially masked, which could lend the email some legitimacy and entice the target to open the attachment. The .zip file also contained a malicious shortcut meant to fool the recipient into manually running a PowerShell command on their PC. This command would secretly connect their computer to the hacker's server and download malware capable of acting as a backdoor. The same process also fetched a fake government military ID image to mask the intrusion and convince victims that nothing unusual had happened. Genians looked closely at one of the fake military ID images and uncovered evidence that it was traced to OpenAI's older GPT-4o model. The image's metadata referenced the words "GPT-4o*OpenAI API" along with the name ChatGPT. Genians also ran the fake military ID image through a deepfake detector and found a 98% probability that it was AI-generated. The report notes that OpenAI's systems already have built-in safeguards that prevent the company's chatbots from generating images of government IDs. Still, Genians suspects the Kimsuky group was able to develop a workaround, also known as a jailbreak, to trick ChatGPT into creating the IDs. 
"For example, it may respond to requests framed as creating a mock-up or sample design for legitimate purposes rather than reproducing an actual military ID," the vendor wrote. OpenAI didn't immediately respond to a request for comment. But the company has detected and prevented state-sponsored hackers from using its technology in the past. Still, the report from Genians suggests that North Korean hackers are continuing to find ways to access ChatGPT and leverage it for nefarious purposes. This comes as North Korea has increasingly been found using other AI technologies, including real-time deepfakes, to help them spread malware or even land remote jobs at US companies. Disclosure: Ziff Davis, PCMag's parent company, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

[2]

Nork snoops whip up fake military ID with help from ChatGPT

Kimsuky gang proves that with the right wording, you can turn generative AI into a counterfeit factory

North Korean spies used ChatGPT to generate a fake military ID for use in an espionage campaign against a South Korean defense-related institution, according to new research. Kimsuky, a notorious cybercrime squad believed to be sponsored by the North Korean government, used a deepfaked image of a military employee ID card in a July spear-phishing attack against a military-related organization, according to the Genians Security Center (GSC), a South Korean security institute. The file's metadata indicated it was generated with ChatGPT's image tools, according to Genians, despite OpenAI's efforts to block the creation of counterfeit IDs. According to Genians' threat intel team, the faked ID photo was based on publicly available headshots and composited into a template resembling a South Korean military employee card. The researchers say the attackers likely used prompt-engineering tricks, framing the request as the creation of a "sample design" or "mock-up" for legitimate use, to get around ChatGPT's built-in refusals to generate government ID replicas. "Since military government employee IDs are legally protected identification documents, producing copies in identical or similar form is illegal. As a result, when prompted to generate such an ID copy, ChatGPT returns a refusal," Genians said. "However, the model's response can vary depending on the prompt or persona role settings. For example, it may respond to requests framed as creating a mock-up or sample design for legitimate purposes rather than reproducing an actual military ID. The deepfake image used in this attack fell into this category. Because creating counterfeit IDs with AI services is technically straightforward, extra caution is required." Once crafted, the deepfake was distributed to targets in emails disguised as correspondence about ID issuance for military-affiliated officials. 
Targets included a defense-related institution in South Korea, though Genians stopped short of naming victims and didn't say how many organizations were targeted. Genians' findings are the latest example of suspected North Korean hackers adopting AI as part of their intelligence-gathering work. Last month, Anthropic said Pyongyang's keyboard warriors had been using its Claude Code tool to spin up fake personas, ace job interviews, and even ship code for Fortune 500 firms. Genians also sees Kimsuky, the espionage crew best known for targeting South Korea's military, government, and think tanks, once again shifting its tactics: this time, the group is moving away from its well-worn phishing lures and malicious Word docs and is now delivering its payloads via deepfake-based forgeries. OpenAI didn't immediately respond to The Register's questions. However, in February, the company said it had booted dozens of accounts it said were tied to North Korea's overseas IT worker schemes, adding that the crackdown was part of a broader effort to spot and disrupt state-backed misuse of its models.

[3]

North Korean Hackers Used ChatGPT to Help Forge Deepfake ID

A suspected North Korean state-sponsored hacking group used ChatGPT to create a deepfake of a military ID document to attack a target in South Korea, according to cybersecurity researchers. Attackers used the artificial intelligence tool to craft a fake draft of a South Korean military identification card in order to create a realistic-looking image meant to make a phishing attempt seem more credible, according to research published Sunday by Genians, a South Korean cybersecurity firm. Instead of including a real image, the email linked to malware capable of extracting data from recipients' devices, according to Genians.

[4]

North Korean hackers used ChatGPT to help forge deepfake ID

A suspected North Korean state-sponsored hacking group used ChatGPT to create a deepfake of a military ID document to attack a target in South Korea, according to cybersecurity researchers. Attackers used the artificial intelligence tool to craft a fake draft of a South Korean military identification card in order to create a realistic-looking image meant to make a phishing attempt seem more credible, according to research published Sunday by Genians, a South Korean cybersecurity firm. Instead of including a real image, the email linked to malware capable of extracting data from recipients' devices, according to Genians. The group responsible for the attack, which researchers have dubbed Kimsuky, is a suspected North Korea-sponsored cyber-espionage unit previously linked to other spying efforts against South Korean targets. The U.S. Department of Homeland Security said Kimsuky "is most likely tasked by the North Korean regime with a global intelligence-gathering mission," according to a 2020 advisory. The findings by Genians in July are the latest example of suspected North Korean operatives deploying AI as part of their intelligence-gathering work. Anthropic said in August it discovered North Korean hackers used the Claude Code tool to get hired and work remotely for U.S. Fortune 500 tech companies. In that case, Claude helped them build up elaborate fake identities, pass coding assessments and deliver actual technical work once hired. OpenAI representatives didn't immediately respond to a request for comment outside normal hours. The company said in February it had banned suspected North Korean accounts that had used the service to create fraudulent résumés, cover letters and social media posts to try recruiting people to aid their schemes. 
The trend shows that attackers can leverage emerging AI during the hacking process, including attack scenario planning, malware development, tool building and impersonating job recruiters, said Mun Chong-hyun, director at Genians. Phishing targets in this latest cybercrime spree included South Korean journalists and researchers and human rights activists focused on North Korea. The email was also sent from an address ending in .mli.kr, an impersonation of a South Korean military address. Exactly how many victims were breached wasn't immediately clear. Genians researchers experimented with ChatGPT while investigating the fake identification document. Because reproducing government IDs is illegal in South Korea, ChatGPT initially returned a refusal when asked to create an ID. But altering the prompt allowed them to bypass the restriction. American officials have alleged that North Korea is engaged in a long-running effort to use cyberattacks, cryptocurrency theft and IT contractors to gather information on behalf of the government in Pyongyang. Those tactics are also used to generate funds meant to help the regime subvert international sanctions and develop its nuclear weapons programs, according to the U.S. government. 2025 Bloomberg L.P. Distributed by Tribune Content Agency, LLC.

[5]

North Korean hackers used ChatGPT to help forge deepfake ID | Fortune

Attackers used the artificial intelligence tool to craft a fake draft of a South Korean military identification card in order to create a realistic-looking image meant to make a phishing attempt seem more credible, according to research published Sunday by Genians, a South Korean cybersecurity firm. Instead of including a real image, the email linked to malware capable of extracting data from recipients' devices, according to Genians. The group responsible for the attack, which researchers have dubbed Kimsuky, is a suspected North Korea-sponsored cyber-espionage unit previously linked to other spying efforts against South Korean targets. The US Department of Homeland Security said Kimsuky "is most likely tasked by the North Korean regime with a global intelligence-gathering mission," according to a 2020 advisory. The findings by Genians in July are the latest example of suspected North Korean operatives deploying AI as part of their intelligence-gathering work. Anthropic said in August it discovered North Korean hackers used the Claude Code tool to get hired and work remotely for US Fortune 500 tech companies. In that case, Claude helped them build up elaborate fake identities, pass coding assessments and deliver actual technical work once hired. OpenAI said in February it had banned suspected North Korean accounts that had used the service to create fraudulent résumés, cover letters and social media posts to try recruiting people to aid their schemes. The trend shows that attackers can leverage emerging AI during the hacking process, including attack scenario planning, malware development, tool building and impersonating job recruiters, said Mun Chong-hyun, director at Genians. Phishing targets in this latest cybercrime spree included South Korean journalists and researchers and human rights activists focused on North Korea. The email was also sent from an address ending in .mil.kr, an impersonation of a South Korean military address. 
Exactly how many victims were breached wasn't immediately clear. Genians researchers experimented with ChatGPT while investigating the fake identification document. Because reproducing government IDs is illegal in South Korea, ChatGPT initially returned a refusal when asked to create an ID. But altering the prompt allowed them to bypass the restriction. American officials have alleged that North Korea is engaged in a long-running effort to use cyberattacks, cryptocurrency theft and IT contractors to gather information on behalf of the government in Pyongyang. Those tactics are also used to generate funds meant to help the regime subvert international sanctions and develop its nuclear weapons programs, according to the US government.

[6]

North Korean hackers used ChatGPT to help forge deepfake - The Economic Times

A North Korea-linked hacking group used ChatGPT to create a fake South Korean military ID in a phishing attack, researchers at cybersecurity firm Genians revealed. The deepfake aimed to deceive targets, including journalists and activists. AI tools were also used to craft malware and fake identities in broader espionage and cybercrime efforts. A suspected North Korean state-sponsored hacking group used ChatGPT to create a deepfake of a military ID document to attack a target in South Korea, according to cybersecurity researchers. Attackers used the artificial intelligence tool to craft a fake draft of a South Korean military identification card in order to create a realistic-looking image meant to make a phishing attempt seem more credible, according to research published Sunday by Genians, a South Korean cybersecurity firm. Instead of including a real image, the email linked to malware capable of extracting data from recipients' devices, according to Genians. The group responsible for the attack, which researchers have dubbed Kimsuky, is a suspected North Korea-sponsored cyber-espionage unit previously linked to other spying efforts against South Korean targets. The US Department of Homeland Security said Kimsuky "is most likely tasked by the North Korean regime with a global intelligence-gathering mission," according to a 2020 advisory. The findings by Genians in July are the latest example of suspected North Korean operatives deploying AI as part of their intelligence-gathering work. Anthropic said in August it discovered North Korean hackers used the Claude Code tool to get hired and work remotely for US Fortune 500 tech companies. In that case, Claude helped them build up elaborate fake identities, pass coding assessments and deliver actual technical work once hired. OpenAI representatives didn't immediately respond to a request for comment outside normal hours. 
The company said in February it had banned suspected North Korean accounts that had used the service to create fraudulent résumés, cover letters and social media posts to try recruiting people to aid their schemes. The trend shows that attackers can leverage emerging AI during the hacking process, including attack scenario planning, malware development, tool building and impersonating job recruiters, said Mun Chong-hyun, director at Genians. Phishing targets in this latest cybercrime spree included South Korean journalists and researchers and human rights activists focused on North Korea. The email was also sent from an address ending in .mli.kr, an impersonation of a South Korean military address. Exactly how many victims were breached wasn't immediately clear. Genians researchers experimented with ChatGPT while investigating the fake identification document. Because reproducing government IDs is illegal in South Korea, ChatGPT initially returned a refusal when asked to create an ID. But altering the prompt allowed them to bypass the restriction. American officials have alleged that North Korea is engaged in a long-running effort to use cyberattacks, cryptocurrency theft and IT contractors to gather information on behalf of the government in Pyongyang. Those tactics are also used to generate funds meant to help the regime subvert international sanctions and develop its nuclear weapons programs, according to the US government.

[7]

North Korean hackers used ChatGPT to help forge deepfake ID

A suspected North Korean state-sponsored hacking group used ChatGPT to create a deepfake of a military ID document to attack a target in South Korea, according to cybersecurity researchers. Attackers used the artificial intelligence tool to craft a fake draft of a South Korean military identification card in order to create a realistic-looking image meant to make a phishing attempt seem more credible, according to research published Sunday by Genians, a South Korean cybersecurity firm. Instead of including a real image, the email linked to malware capable of extracting data from recipients' devices, according to Genians. The group responsible for the attack, which researchers have dubbed Kimsuky, is a suspected North Korea-sponsored cyber-espionage unit previously linked to other spying efforts against South Korean targets. The U.S. Department of Homeland Security said Kimsuky "is most likely tasked by the North Korean regime with a global intelligence-gathering mission," according to a 2020 advisory.

[8]

N. Korea-backed hacking group uses AI deepfake to target S. Korean institutions: report - The Korea Times

A North Korea-linked hacking group has carried out a cyberattack on South Korean organizations, including a defense-related institution, using artificial intelligence (AI)-generated deepfake images, a report showed Monday. The Kimsuky group, a hacking unit believed to be sponsored by the North Korean government, attempted a spear-phishing attack on a military-related organization in July, according to the report by the Genians Security Center (GSC), a South Korean security institute. Spear phishing is a targeted cyberattack, often conducted through personalized emails that impersonate trusted sources. The report said the attackers sent an email carrying malicious code, disguised as correspondence about ID issuance for military-affiliated officials. The ID card image used in the attempt was presumed to have been produced by a generative AI model, marking a case of the Kimsuky group applying deepfake technology. Typically, AI platforms such as ChatGPT reject requests to generate copies of military IDs on the grounds that government-issued identification documents are legally protected. However, the GSC report noted that the hackers appear to have bypassed restrictions by requesting mock-ups or sample designs for "legitimate" purposes, rather than direct reproductions of actual IDs. The findings follow a separate report published in August by U.S.-based Anthropic, developer of the AI service Claude, which detailed how North Korean IT workers have misused AI. That report said the workers generated manipulated virtual identities to undergo technical assessments during job applications, part of a broader scheme to circumvent international sanctions and secure foreign currency for the regime. GSC said such cases highlight North Korea's growing attempts to exploit AI services for increasingly sophisticated malicious activities. 
"While AI services are powerful tools for enhancing productivity, they also represent potential risks when misused as cyber threats at the level of national security," it said. "Therefore, organizations must proactively prepare for the possibility of AI misuse and maintain continuous security monitoring across recruitment, operations and business processes."

[9]

ChatGPT exploited by North Korea-linked group to target South Korean journalists and activists

Hackers even spoofed official South Korean military email addresses to enhance phishing credibility. In a recent phishing campaign, a hacker group with ties to North Korea allegedly created fake South Korean military ID cards using OpenAI's well-known AI chatbot, ChatGPT, according to new research from Genians. The group, known as Kimsuky, reportedly created a fake draft of a military ID to make malicious emails appear more authentic. According to Genians' report, the phishing message linked to malware that could steal data from victims' devices instead of to an authentic attachment. South Korean journalists, researchers, and human rights activists who focus on North Korea were among those targeted. The group is said to be a state-sponsored cyber-espionage unit that has previously been linked to intelligence operations against South Korea and other countries. According to a 2020 advisory from the US Department of Homeland Security, the group works on Pyongyang's behalf to conduct global surveillance activities. The researchers stated that ChatGPT initially rejected the prompts to generate a government ID image, but the attackers were able to get around the safeguard by rephrasing their requests. While the deepfake ID appeared realistic, its purpose was to add credibility to the phishing lure rather than to provide an actual document. The incident highlights a growing trend of North Korean operatives using artificial intelligence in cyber operations. Anthropic, an AI firm, reported in August that hackers from the country had used its Claude tool to pose as software developers and secure remote work with US technology companies. Earlier this year, OpenAI announced that it had banned North Korean accounts that attempted to use its platform to create fraudulent résumés and recruitment materials. According to Genians, AI tools are now being used at various stages of cyberattacks, ranging from planning and malware development to impersonation of trusted entities. 
In this latest case, hackers even spoofed an official South Korean military email address ending in .mil.kr to deceive recipients.

A North Korean hacking group, Kimsuky, used OpenAI's ChatGPT to generate a fake South Korean military ID for a sophisticated phishing attack. This incident highlights the growing use of AI tools by state-sponsored hackers for cyber espionage.

North Korean Hackers Leverage ChatGPT for Sophisticated Phishing Attack

In a concerning development at the intersection of artificial intelligence and cybersecurity, a North Korean hacking group known as Kimsuky has been found using OpenAI's ChatGPT to create deepfake military identification cards for a targeted phishing attack against South Korean defense institutions [1][2][3]. This incident, discovered by South Korean cybersecurity firm Genians, highlights the evolving tactics of state-sponsored hackers and the potential misuse of AI technologies in cyber espionage.

The Attack: Methodology and Targets

The phishing campaign, carried out in July 2025, involved emails impersonating a South Korean defense-related institution responsible for issuing IDs to military-affiliated officials [1]. The attackers used a domain mimicking that of an official South Korean military institution and attached a malicious .zip file [1]. The archive held a shortcut that ran a PowerShell command to download backdoor malware; the same process also fetched a fake government military ID image as a decoy, and that image's metadata tied it to OpenAI's GPT-4o model [1][2].
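Genians reported that the decoy image's metadata contained the strings "GPT-4o*OpenAI API" and "ChatGPT". A first-pass check for such strings can be a plain byte scan. The Python sketch below is a hypothetical triage helper, not Genians' method. It assumes the generator strings appear verbatim as ASCII somewhere in the file, and its marker list is an assumption taken from the strings cited in the report. Metadata is easy to strip or forge, so a hit is only a lead and an empty result proves nothing.

```python
from pathlib import Path

# Hypothetical marker list, based on the strings Genians reported in the
# decoy image's metadata. Real provenance data may encode them differently.
AI_PROVENANCE_MARKERS = (b"GPT-4o", b"OpenAI API", b"ChatGPT")


def find_ai_markers(data: bytes, markers=AI_PROVENANCE_MARKERS) -> list[str]:
    """Return, in list order, each marker string found anywhere in the raw bytes."""
    return [m.decode("ascii") for m in markers if m in data]


def scan_image(path: str) -> list[str]:
    """Read an image file from disk and report any AI-provenance markers it contains."""
    return find_ai_markers(Path(path).read_bytes())


if __name__ == "__main__":
    # Synthetic stand-in for an image whose metadata names the generator.
    sample = b"\x89PNG\r\n\x1a\n...tEXt...GPT-4o*OpenAI API...ChatGPT...IEND"
    print(find_ai_markers(sample))  # ['GPT-4o', 'OpenAI API', 'ChatGPT']
```

A production check would more likely parse structured provenance data (for example, a C2PA manifest) than search raw bytes. The byte search is used here only because it needs no third-party libraries.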

The targets of this attack included South Korean journalists, researchers, and human rights activists focused on North Korea [4]. The emails came from an address impersonating the South Korean military's .mil.kr domain; Bloomberg's report gives the sender's domain as .mli.kr, a lookalike that swaps two letters of the real suffix [4][5].

AI-Generated Deepfakes: A New Frontier in Cyber Attacks

Using ChatGPT to forge a convincing military ID marks a shift in Kimsuky's tactics, away from its well-worn phishing lures and malicious Word documents toward deepfake-based forgeries [2]. A deepfake detector that Genians ran on the fake ID image rated it 98% likely to be AI-generated [1]. This is particularly noteworthy because OpenAI has implemented safeguards to prevent the generation of government IDs [1][2].

However, the Kimsuky group appears to have found a workaround, possibly by framing its requests as mock-ups or sample designs for legitimate purposes [1][2]. Genians researchers tested this kind of prompt manipulation, often called a jailbreak: ChatGPT refused their direct request for an ID, but rewording the prompt got past the restriction [2][4].

Broader Implications and Growing Trends

This incident is not isolated but part of a broader trend of North Korean operatives leveraging AI for intelligence gathering and cyber attacks. In August 2025, Anthropic reported that North Korean hackers had used the Claude Code tool to create elaborate fake identities, pass coding assessments, and even secure remote work positions at U.S. Fortune 500 tech companies [3][4][5].

Security Concerns and Preventive Measures

The use of AI to produce convincing fake documents raises significant security concerns. According to Mun Chong-hyun, a director at Genians, attackers can leverage emerging AI throughout the hacking process, from attack scenario planning and malware development to tool building and impersonating job recruiters [4][5].

In response, AI companies have moved to block misuse: OpenAI said in February 2025 that it had banned suspected North Korean accounts used to create fraudulent résumés, cover letters and social media posts [3][5]. However, the Kimsuky incident shows that determined attackers can still find ways to exploit these powerful AI tools.
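One preventive measure suggested by this campaign is lookalike-domain screening: Bloomberg reported the sender's domain as .mli.kr, which differs from the military's .mil.kr only by two swapped letters. The Python sketch below is a hypothetical mail-filter check, not a tool described by Genians. It assumes a one-entry allowlist (`mil.kr`) and a threshold of one edit, and it uses optimal string alignment distance, a Levenshtein variant that counts a swap of adjacent characters as a single edit. The example domains are made up.

```python
# Hypothetical allowlist: the genuine South Korean military suffix named in the reports.
TRUSTED_SUFFIXES = ("mil.kr",)


def osa_distance(a: str, b: str) -> int:
    """Optimal string alignment distance: insertions, deletions,
    substitutions, and swaps of adjacent characters each cost 1."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,  # deletion
                d[i][j - 1] + 1,  # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            # Adjacent transposition, e.g. "li" <-> "il".
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]


def is_lookalike(sender_domain: str, trusted=TRUSTED_SUFFIXES, max_edits: int = 1) -> bool:
    """Flag a domain whose trailing labels are close to, but not equal to, a trusted suffix."""
    domain = sender_domain.lower().rstrip(".")
    for suffix in trusted:
        n_labels = suffix.count(".") + 1
        tail = ".".join(domain.split(".")[-n_labels:])
        if tail == suffix:
            # Genuine suffix: a forged header needs SPF/DKIM/DMARC checks instead.
            return False
        if osa_distance(tail, suffix) <= max_edits:
            return True
    return False


if __name__ == "__main__":
    print(osa_distance("mli.kr", "mil.kr"))  # 1
    print(is_lookalike("example.mli.kr"))  # True
    print(is_lookalike("army.mil.kr"))  # False
```

A one-edit threshold catches swaps like mli/mil but will also flag some legitimate domains, so hits are best treated as warnings. A message that forges a genuine .mil.kr address in its headers passes this check untouched; catching that requires sender authentication such as SPF, DKIM and DMARC.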

Conclusion

As AI technologies continue to advance, the cybersecurity landscape faces new challenges. The use of ChatGPT by North Korean hackers to create deepfake military IDs serves as a stark reminder of the need for enhanced security measures and ongoing vigilance in the face of evolving cyber threats. It also underscores the importance of responsible AI development and the need for robust safeguards to prevent the misuse of these powerful tools in cyber warfare and espionage.

Summarized by Navi