NVIDIA and DataStax Revolutionize Multilingual AI with NeMo Retriever Microservices

3 Sources

[1]

AI in Your Own Words: NVIDIA Debuts NeMo Retriever Microservices for Multilingual Generative AI Fueled by Data

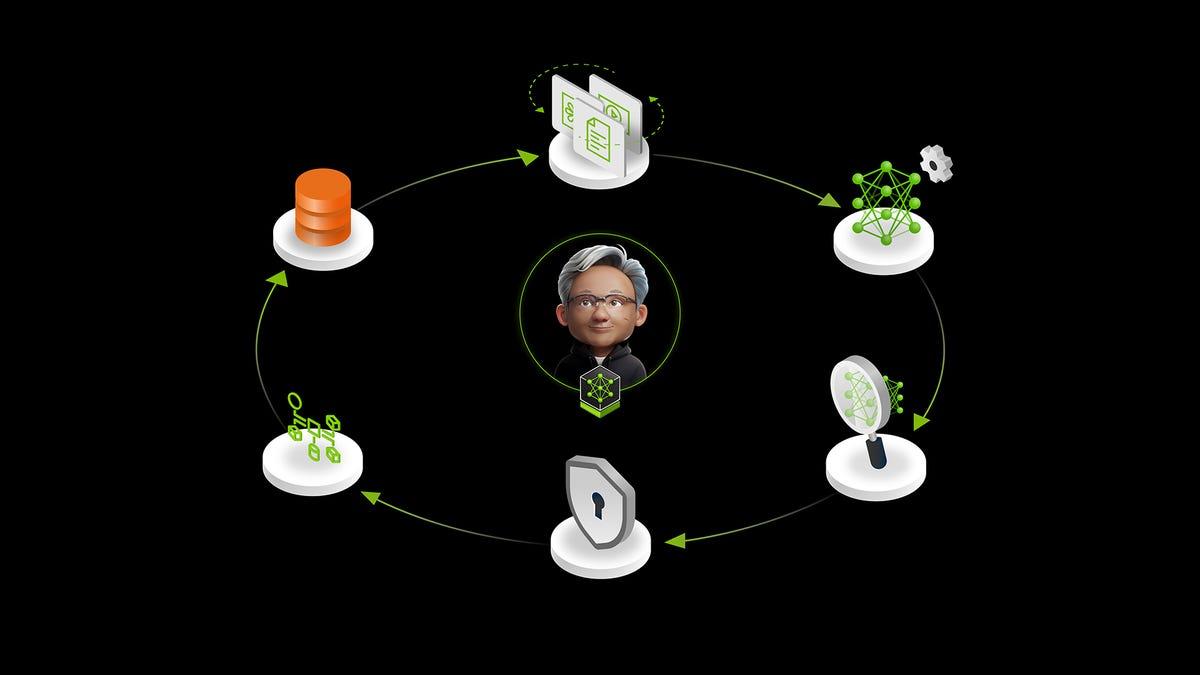

DataStax helps Wikimedia achieve 10x data processing speedup to serve their global content database to billions of users.

In enterprise AI, understanding and working across multiple languages is no longer optional -- it's essential for meeting the needs of employees, customers and users worldwide. Multilingual information retrieval -- the ability to search, process and retrieve knowledge across languages -- plays a key role in enabling AI to deliver more accurate and globally relevant outputs.

Enterprises can expand their generative AI efforts into accurate, multilingual systems using NVIDIA NeMo Retriever embedding and reranking NVIDIA NIM microservices, which are now available on the NVIDIA API catalog. These models can understand information across a wide range of languages and formats, such as documents, to deliver accurate, context-aware results at massive scale.

With NeMo Retriever, businesses can now:

Leading NVIDIA partners like DataStax, Cohesity, Cloudera, Nutanix, SAP, VAST Data and WEKA are already adopting these microservices to help organizations across industries securely connect custom models to diverse and large data sources. By using retrieval-augmented generation (RAG) techniques, NeMo Retriever enables AI systems to access richer, more relevant information and effectively bridge linguistic and contextual divides.

Wikidata Speeds Data Processing From 30 Days to Under Three Days

In partnership with DataStax, Wikimedia has implemented NeMo Retriever to vector-embed the content of Wikipedia, serving billions of users. Vector embedding -- or "vectorizing" -- is a process that transforms data into a format that AI can process and understand to extract insights and drive intelligent decision-making.

Wikimedia used the NeMo Retriever embedding and reranking NIM microservices to vectorize over 10 million Wikidata entries into AI-ready formats in under three days, a process that used to take 30 days. That 10x speedup enables scalable, multilingual access to one of the world's largest open-source knowledge graphs.

This project ensures real-time updates for hundreds of thousands of entries that are being edited daily by thousands of contributors, enhancing global accessibility for developers and users alike. With Astra DB's serverless model and NVIDIA AI technologies, the DataStax offering delivers near-zero latency and exceptional scalability to support the dynamic demands of the Wikimedia community.

DataStax is using NVIDIA AI Blueprints and integrating the NVIDIA NeMo Customizer, Curator, Evaluator and Guardrails microservices into the LangFlow AI code builder to enable the developer ecosystem to optimize AI models and pipelines for their unique use cases and help enterprises scale their AI applications.

Language-Inclusive AI Drives Global Business Impact

NeMo Retriever helps global enterprises overcome linguistic and contextual barriers and unlock the potential of their data. By deploying robust AI solutions, businesses can achieve accurate, scalable and high-impact results.

NVIDIA's platform and consulting partners play a critical role in ensuring enterprises can efficiently adopt and integrate generative AI capabilities, such as the new multilingual NeMo Retriever microservices. These partners help align AI solutions to an organization's unique needs and resources, making generative AI more accessible and effective. They include:

Breaking Language Barriers With Multilingual Information Retrieval

Multilingual information retrieval is vital for enterprise AI to meet real-world demands. NeMo Retriever supports efficient and accurate text retrieval across multiple languages and cross-lingual datasets. It's designed for enterprise use cases such as search, question-answering, summarization and recommendation systems.

Additionally, it addresses a significant challenge in enterprise AI -- handling large volumes of large documents. With long-context support, the new microservices can process lengthy contracts or detailed medical records while maintaining accuracy and consistency over extended interactions.

These capabilities help enterprises use their data more effectively, providing precise, reliable results for employees, customers and users while optimizing resources for scalability. Advanced multilingual retrieval tools like NeMo Retriever can make AI systems more adaptable, accessible and impactful in a globalized world.

Availability

Developers can access the multilingual NeMo Retriever microservices, and other NIM microservices for information retrieval, through the NVIDIA API catalog, or a no-cost, 90-day NVIDIA AI Enterprise developer license. Learn more about the new NeMo Retriever microservices and how to use them to build efficient information retrieval systems.

[2]

Nvidia debuts NeMo Retriever microservices for multilingual generative AI - SiliconANGLE

Nvidia Corp. today announced the launch of microservices that will allow artificial intelligence engineers to build generative AI applications that can store and retrieve data across multiple languages, allowing them to cross national barriers more readily.

To make data retrieval for generative AI across languages more accurate, Nvidia introduced multilingual capabilities using Nvidia NeMo Retriever via the company's application programming interface catalog for developers. This software can understand data across many languages and formats and turn it into text to assist with context-aware results.

NeMo Retriever allows developers to build information ingestion and retrieval pipelines for AI models that extract structured and unstructured data by converting information from text, documents, tables and similar sources while avoiding duplicate chunks. It does so by converting the data into a form that an AI can understand and inserting it into a vector database using embeddings.

Embeddings are complex mathematical representations of information that represent the properties and relationships between words, phrases and other types of data. They can be used to capture the "closeness" in meaning between two words or sentences when searching or reasoning about them, similar to how "cat" and "dog" are close because they're both animals and both domestic pets. "Toaster" and "dog" are more dissimilar, although both are often found in houses.

Using Retriever to embed and retrieve data in native languages also increases accuracy, Kari Briski, vice president of generative AI software at Nvidia, told SiliconANGLE in an interview. Part of this is because English dominates most AI data training sets. Anyone who has translated something from German into English and then back again, or vice versa, has discovered the "lost in translation" effect where context or accuracy is lost each time.

"Accuracy is necessary and most of the data, open data in the world happens to be English, which is why there's this push for sovereign AI," said Briski. "To bolster other languages to have data and retrievers in their natural language will help the accuracy."

Briski said when the Retriever was first released customers clamored for multilingual support due to the lost accuracy using translation software. Enterprise businesses do not operate in just one language -- they may embed English documents, German tests, something in Japanese or pull in research written in Russian. The result is that this information will need to be searched by the same model, but the more tools it passes through, the more accuracy falls.

In addition to ingestion, NeMo Retriever can "evaluate and rerank" results to ensure that answers are accurate. When a query is sent through the Retriever, it examines the vector database response and ranks the retrieved information by relevance to the query, providing an extra layer of accuracy.

Nvidia partnered with DataStax Inc. to implement NeMo Retriever to vector embed content from Wikipedia, the free online volunteer crowdsourced encyclopedia. Using the technology and specialized software from Nvidia, the company was able to vectorize the content of 10 million data entries into AI-ready formats in under three days, a process that would normally take 30 days.

Other Nvidia partners including Cohesity Inc., Cloudera Inc., SAP SE and VAST Data Inc. are already integrating support for these new microservices to support large multilingual data sources. These include services such as retrieval-augmented generation techniques, which allow pre-trained generative AI to use real-time data sources for richer, more relevant information. With the addition of multilingual sources, enterprises can pull in even more data.

Currently, the NeMo Retriever for Multilingual only works for text retrieval and answers, Briski said. "We are working on things like multimodal data and images, PDFs and video for the future," she explained. "We're just talking about text right now. Because if you can nail text, then you can go on to do a great job with other modalities."

[3]

Nvidia and DataStax just made generative AI smarter and leaner -- here's how

Nvidia and DataStax launched new technology today that dramatically reduces storage requirements for companies deploying generative AI systems, while enabling faster and more accurate information retrieval across multiple languages.

The new Nvidia NeMo Retriever microservices, integrated with DataStax's AI platform, cut data storage volume by 35 times compared to traditional approaches -- a crucial capability as enterprise data is projected to reach more than 20 zettabytes by 2027.

"Today's enterprise unstructured data is at 11 zettabytes, roughly equal to 800,000 copies of the Library of Congress, and 83% of that is unstructured with 50% being audio and video," said Kari Briski, VP of product management for AI at Nvidia, in an interview with VentureBeat. "Significantly reducing these storage costs while enabling companies to effectively embed and retrieve information becomes a game changer."

Wikimedia slashes processing time from 30 days to three

The technology is already proving transformative for Wikimedia Foundation, which used the integrated solution to reduce processing time for 10 million Wikipedia entries from 30 days to under three days. The system handles real-time updates across hundreds of thousands of entries being edited daily by 24,000 global volunteers.

"You can't just rely on large language models for content - you need context from your existing enterprise data," explained Chet Kapoor, CEO of DataStax. "This is where our hybrid search capability comes in, combining both semantic search and traditional text search, then using Nvidia's re-ranker technology to deliver the most relevant results in real-time at global scale."

Enterprise data security meets AI accessibility

The partnership addresses a critical challenge facing enterprises: how to make their vast stores of private data accessible to AI systems without exposing sensitive information to external language models.

"Take FedEx -- 60% of their data sits in our products, including all package delivery information for the past 20 years with personal details. That's not going to Gemini or OpenAI anytime soon, or ever," Kapoor explained.

The technology is finding early adoption across industries, with financial services firms leading the charge despite regulatory constraints. "I've been blown away by how far ahead financial services firms are now," said Kapoor, citing Commonwealth Bank of Australia and Capital One as examples.

The next frontier for AI: Multimodal document processing

Looking ahead, Nvidia plans to expand the technology's capabilities to handle more complex document formats. "We're seeing great results with multimodal PDF processing -- understanding tables, graphs, charts and images and how they relate across pages," Briski revealed. "It's a really hard problem that we're excited to tackle."

For enterprises drowning in unstructured data while trying to deploy AI responsibly, the new offering provides a path to make their information assets AI-ready without compromising security or breaking the bank on storage costs. The solution is available immediately through the Nvidia API catalog with a 90-day free trial license.

The announcement underscores the growing focus on enterprise AI infrastructure as companies move beyond experimentation to large-scale deployment, with data management and cost efficiency becoming critical success factors.

NVIDIA launches NeMo Retriever microservices for multilingual generative AI, partnering with DataStax to dramatically improve data processing efficiency and language understanding across industries.

NVIDIA Introduces NeMo Retriever for Multilingual AI

NVIDIA has unveiled its latest innovation in the field of artificial intelligence: NeMo Retriever microservices. This technology is designed to enhance multilingual generative AI capabilities, addressing the growing need for language-inclusive AI solutions in global enterprises [1].

Key Features and Capabilities

NeMo Retriever offers several critical functionalities:

- Multilingual Information Retrieval: The system can search, process, and retrieve knowledge across multiple languages, enabling AI to deliver more accurate and globally relevant outputs [1].
- Cross-Format Understanding: NeMo Retriever can comprehend information from various formats, including documents, making it versatile for diverse enterprise needs [1].
- Vector Embedding: The technology transforms data into AI-ready formats, significantly speeding up the processing of large datasets [2].
- Evaluation and Reranking: NeMo Retriever examines and ranks retrieved information based on relevance to queries, enhancing accuracy [2].
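The embed, retrieve and rerank steps listed above can be sketched as a two-stage pipeline. This is a minimal illustration only, not NVIDIA's implementation or API: `embed` is a hypothetical bag-of-words stand-in for an embedding model, `rerank` is a hypothetical term-coverage stand-in for a reranking model, and the documents and query are made up. A word-count toy like this cannot match text across languages, which is the job of the real multilingual models.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: word-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Stage 1: return the k documents closest to the query in vector space."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def rerank(query: str, candidates: list[str]) -> list[str]:
    """Stage 2 (hypothetical scorer): order candidates by the share of
    distinct query terms each one contains."""
    q_terms = set(query.lower().split())

    def coverage(doc: str) -> float:
        return len(q_terms & set(doc.lower().split())) / len(q_terms)

    return sorted(candidates, key=coverage, reverse=True)


# Made-up example data.
DOCS = [
    "edited daily",
    "wikidata entries are edited by thousands of volunteers "
    "around the world every single day",
    "vector databases store embeddings",
]
QUERY = "wikidata entries edited daily"

candidates = retrieve(QUERY, DOCS, k=2)
reranked = rerank(QUERY, candidates)
print(candidates)
print(reranked)
```

With this toy data, the first stage ranks the short document highest because cosine similarity favors its brevity, and the second stage promotes the longer document because it covers more of the query terms. That reordering is the extra relevance check a reranker is meant to provide.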

Partnership with DataStax and Impact on Wikimedia

NVIDIA has partnered with DataStax to implement NeMo Retriever in vectorizing Wikipedia content. This collaboration has yielded impressive results:

- Processing time for 10 million Wikidata entries reduced from 30 days to under three days, roughly a 10x speedup [1].
- Real-time updates for hundreds of thousands of entries edited daily by thousands of contributors [1].
- A 35-fold reduction in data storage volume compared to traditional approaches, reported for the NeMo Retriever microservices integrated with DataStax's AI platform [3].

Industry Adoption and Applications

Several leading companies are already adopting NeMo Retriever microservices:

- DataStax, Cohesity, Cloudera, Nutanix, SAP, VAST Data, and WEKA are integrating the technology to connect custom models to diverse data sources [1].
- Financial services firms are leading early adoption despite regulatory constraints, with DataStax CEO Chet Kapoor citing Commonwealth Bank of Australia and Capital One as examples [3].

Future Developments

NVIDIA is working on expanding NeMo Retriever's capabilities:

- Multimodal data processing, including images, PDFs, and video [2].
- Advanced PDF processing to understand and relate tables, graphs, charts, and images across pages [3].

Availability and Access

Developers can access NeMo Retriever microservices through the NVIDIA API catalog or with a no-cost, 90-day NVIDIA AI Enterprise developer license [1]. This offering provides enterprises with a path to make their information assets AI-ready without compromising security or incurring excessive storage costs [3].

Summarized by

Navi
