Nvidia Approves Samsung's HBM3 Chips for China-Focused AI Processor

10 Sources

[1]

Nvidia will start using Samsung's memory chips in its AI chips for China

As Nvidia prepares for possible challenges to its chip business in China, the chipmaker is reportedly turning to Samsung for a key part of its specially-designed processor. Nvidia has approved Samsung's fourth-generation high bandwidth memory chips, called HBM3, for use in its artificial intelligence chips, called H20, which are developed to comply with U.S. export controls, Reuters reported, citing unnamed people familiar with the matter. The H20 graphics processing unit, or GPU, is one of three chips Nvidia designed to not require an export control license. Meanwhile, a shortage of memory chips has slowed Nvidia's production of AI chips amid rising demand for its processors, so turning to Samsung could help with its supply. It marks the first time Samsung's HBM3 chips will be used in Nvidia's chips, but it is not clear if Nvidia will want to use them in other AI chips, the people told Reuters. Nvidia has reportedly told its suppliers it will use the HBM3 chips as well as Samsung's fifth-generation memory chips, called HBM3E, in its AI chips, according to The Information, which cited unnamed people familiar with the matter. Samsung, which is facing a workers' strike in South Korea, has not met Nvidia's standards with its HBM3E chips, and is continuing to test the chips, people told Reuters. Nvidia declined to comment, and Samsung did not immediately respond to a request for comment. Nvidia is expected to sell more than one million of its H20 chips in China this year, worth around $12 billion, despite U.S. trade restrictions, the Financial Times reported, citing SemiAnalysis data. However, analysts at Jefferies said when the U.S. does its annual review of U.S. semiconductor export controls in October, "it is highly likely that" Nvidia's H20 chip will be banned for sale to China. Meanwhile, as the U.S. considers tougher trade restrictions to prevent advanced chip equipment from reaching China, Nvidia is reportedly working on a version of its latest AI chips to comply with those rules. The chipmaker will work with a local distribution partner, Inspur, to launch and sell a new version of its Blackwell chips, tentatively called "B20," in China, Reuters reported, citing unnamed people familiar with the matter.

[2]

Samsung's HBM3 chips get Nvidia's nod for use in China-focused AI processor

Samsung Electronics' (OTCPK:SSNLF) fourth-generation high bandwidth memory, or HBM3, chips have cleared Nvidia's (NASDAQ:NVDA) evaluation for use in the U.S. company's processor for the first time, Reuters reported, citing people with knowledge of the matter. For now, it is a curtailed approval, as Samsung's HBM3 chips will only be used in a less sophisticated Nvidia graphics processing unit, or GPU, the H20, which has been built for the Chinese market to adhere to U.S. export rules, the report added. It was not clear if Nvidia would use the South Korean company's HBM3 chips in its other AI processors, or if the chips would have to clear more tests for that to occur. Samsung has not yet cleared Nvidia's evaluation for its fifth-generation HBM3E chips and testing of those chips is ongoing, according to the report. Samsung could start supplying HBM3 for Nvidia's H20 processor as early as August. To comply with the U.S. export curbs, the H20's computing power has been significantly downsized compared to the H100, a version sold in non-China markets. In March, it was reported that Nvidia was cutting the price of the H20, the most advanced chip it has made for the Chinese market, pricing it below a rival chip from Huawei Technologies. Sales of the H20 got off to a weak start earlier this year but are now growing rapidly. Last month, Nvidia's CEO Jensen Huang said that the company was reviewing HBM chips from Micron Technology (MU) and Samsung to determine if they can effectively compete with SK Hynix, the South Korean company which is already supplying Nvidia with HBM3 and HBM3E chips. Huang had confirmed that Samsung's HBM chips had not failed any qualification tests, but needed more engineering work. In May, it was reported that Samsung's HBM3 chips were failing to pass Nvidia's evaluations due to heat and power consumption issues. HBM is a type of dynamic random access memory, or DRAM, in which chips are vertically stacked to save space and curb power consumption.
It helps in the processing of huge amounts of data produced by AI applications. HBM3 chips are the fourth-generation HBM standard, currently the one most commonly used in GPUs for AI, and HBM3E chips are the fifth generation. The market for HBM is led by South Korean companies SK Hynix and Samsung and, to a lesser extent, by Micron Technology. SK Hynix has been the main supplier of HBM chips to Nvidia and has been delivering HBM3 since June 2022. The company also started providing HBM3E in late March to a customer it did not name; according to a report, the deliveries went to Nvidia. In May, SK Hynix reportedly said that its HBM chips were sold out for this year and almost fully booked for the next year, amid demand for semiconductors required to develop AI products. Since last year, Samsung has been trying to pass Nvidia's evaluation for HBM3 and HBM3E.

[3]

Nvidia clears Samsung's HBM3 chips for use in China-market processor, sources say

SINGAPORE/SEOUL, July 24 (Reuters) - Samsung Electronics' fourth-generation high bandwidth memory or HBM3 chips have been cleared by Nvidia for use in its processors for the first time, three people briefed on the matter said. But it is somewhat of a muted greenlight as Samsung's HBM3 chips will, for now, only be used in a less sophisticated Nvidia graphics processing unit (GPU), the H20, which has been developed for the Chinese market in compliance with U.S. export controls, the people said. It was not immediately clear if Nvidia would use Samsung's HBM3 chips in its other AI processors or if the chips would have to pass additional tests before that could happen, they added. Samsung has also yet to meet Nvidia's standards for fifth-generation HBM3E chips and testing of those chips is continuing, the people added, declining to be identified as they were not authorised to speak to media. Nvidia and Samsung declined to comment. HBM is a type of dynamic random access memory or DRAM standard first produced in 2013 in which chips are vertically stacked to save space and reduce power consumption. A key component of GPUs for artificial intelligence, it helps process massive amounts of data produced by complex applications. Nvidia's approval of Samsung's HBM3 chips comes amid soaring demand for sophisticated GPUs created by the generative AI boom that Nvidia and other makers of AI chipsets are struggling to meet. There are only three main manufacturers of HBM - SK Hynix, Micron and Samsung - and with HBM3 also in short supply, Nvidia is keen to see Samsung clear its standards so that it can diversify its supplier base. Nvidia's need to have more access to HBM3 is also set to grow as SK Hynix - the clear leader in the field - plans to increase its HBM3E production and make less HBM3, two of the sources said. SK Hynix declined to comment.
Samsung, the world's largest maker of memory chips, has been seeking to pass Nvidia's tests for both HBM3 and HBM3E since last year but has struggled due to heat and power consumption issues, Reuters reported in May, citing sources. Samsung said after the publication of the Reuters article in May that claims of failing Nvidia's tests due to heat and power consumption problems were untrue. Samsung could begin supplying HBM3 for Nvidia's H20 processor as early as August, according to two of the sources. The H20 is the most advanced of three GPUs Nvidia has tailored for the China market after the U.S. tightened export restrictions in 2023, aiming to impede supercomputing and AI breakthroughs that could benefit the Chinese military. In accordance with U.S. sanctions, the H20's computing power has been significantly capped compared to the version sold in non-China markets, the H100. The H20 initially got off to a weak start when deliveries began this year and the U.S. firm priced it below a rival chip from Chinese tech giant Huawei, Reuters reported in May. But sales are now growing rapidly, separate sources have said. In contrast to Samsung, SK Hynix is the main supplier of HBM chips to Nvidia and has been supplying HBM3 since June 2022. It also began supplying HBM3E in late March to a customer it declined to identify. Shipments went to Nvidia, sources have said. Micron has also said it will supply Nvidia with HBM3E. (Reporting by Fanny Potkin in Singapore and Heekyong Yang in Seoul; Editing by Edwina Gibbs)

[4]

Nvidia clears Samsung's HBM3 chips for use in China-market processor, sources say

SINGAPORE/SEOUL - Samsung Electronics' fourth-generation high bandwidth memory or HBM3 chips have been cleared by Nvidia for use in its processors for the first time, three people briefed on the matter said. But it is somewhat of a muted greenlight as Samsung's HBM3 chips will, for now, only be used in a less sophisticated Nvidia graphics processing unit (GPU), the H20, which has been developed for the Chinese market in compliance with U.S. export controls, the people said. It was not immediately clear if Nvidia would use Samsung's HBM3 chips in its other AI processors or if the chips would have to pass additional tests before that could happen, they added. Samsung has also yet to meet Nvidia's standards for fifth-generation HBM3E chips and testing of those chips is continuing, the people added, declining to be identified as they were not authorised to speak to media. Nvidia and Samsung declined to comment. HBM is a type of dynamic random access memory or DRAM standard first produced in 2013 in which chips are vertically stacked to save space and reduce power consumption. A key component of GPUs for artificial intelligence, it helps process massive amounts of data produced by complex applications. Nvidia's approval of Samsung's HBM3 chips comes amid soaring demand for sophisticated GPUs created by the generative AI boom that Nvidia and other makers of AI chipsets are struggling to meet. There are only three main manufacturers of HBM - SK Hynix, Micron and Samsung - and with HBM3 also in short supply, Nvidia is keen to see Samsung clear its standards so that it can diversify its supplier base. Nvidia's need to have more access to HBM3 is also set to grow as SK Hynix - the clear leader in the field - plans to increase its HBM3E production and make less HBM3, two of the sources said. SK Hynix declined to comment.
Samsung, the world's largest maker of memory chips, has been seeking to pass Nvidia's tests for both HBM3 and HBM3E since last year but has struggled due to heat and power consumption issues, Reuters reported in May, citing sources. Samsung said after the publication of the Reuters article in May that claims of failing Nvidia's tests due to heat and power consumption problems were untrue. Samsung could begin supplying HBM3 for Nvidia's H20 processor as early as August, according to two of the sources. The H20 is the most advanced of three GPUs Nvidia has tailored for the China market after the U.S. tightened export restrictions in 2023, aiming to impede supercomputing and AI breakthroughs that could benefit the Chinese military. In accordance with U.S. sanctions, the H20's computing power has been significantly capped compared to the version sold in non-China markets, the H100. The H20 initially got off to a weak start when deliveries began this year and the U.S. firm priced it below a rival chip from Chinese tech giant Huawei, Reuters reported in May. But sales are now growing rapidly, separate sources have said. In contrast to Samsung, SK Hynix is the main supplier of HBM chips to Nvidia and has been supplying HBM3 since June 2022. It also began supplying HBM3E in late March to a customer it declined to identify. Shipments went to Nvidia, sources have said. Micron has also said it will supply Nvidia with HBM3E. (Reporting by Fanny Potkin in Singapore and Heekyong Yang in Seoul; Editing by Edwina Gibbs)

[5]

Nvidia clears Samsung's HBM3 chips for use in China-market processor: sources

The logo of Samsung Electronics is seen at its office in Seoul, Jan. 31, 2023. (AP-Yonhap)

Samsung Electronics' fourth-generation high bandwidth memory or HBM3 chips have been cleared by Nvidia for use in its processors for the first time, three people briefed on the matter said. But it is somewhat of a muted greenlight as Samsung's HBM3 chips will, for now, only be used in a less sophisticated Nvidia graphics processing unit (GPU), the H20, which has been developed for the Chinese market in compliance with U.S. export controls, the people said. It was not immediately clear if Nvidia would use Samsung's HBM3 chips in its other AI processors or if the chips would have to pass additional tests before that could happen, they added. Samsung has also yet to meet Nvidia's standards for fifth-generation HBM3E chips and testing of those chips is continuing, the people added, declining to be identified as they were not authorised to speak to media. Nvidia and Samsung declined to comment. HBM is a type of dynamic random access memory or DRAM standard first produced in 2013 in which chips are vertically stacked to save space and reduce power consumption. A key component of GPUs for artificial intelligence, it helps process massive amounts of data produced by complex applications. Nvidia's approval of Samsung's HBM3 chips comes amid soaring demand for sophisticated GPUs created by the generative AI boom that Nvidia and other makers of AI chipsets are struggling to meet. There are only three main manufacturers of HBM -- SK Hynix, Micron and Samsung -- and with HBM3 also in short supply, Nvidia is keen to see Samsung clear its standards so that it can diversify its supplier base. Nvidia's need to have more access to HBM3 is also set to grow as SK Hynix -- the clear leader in the field -- plans to increase its HBM3E production and make less HBM3, two of the sources said. SK Hynix declined to comment.
Samsung, the world's largest maker of memory chips, has been seeking to pass Nvidia's tests for both HBM3 and HBM3E since last year but has struggled due to heat and power consumption issues, Reuters reported in May, citing sources. Samsung said after the publication of the Reuters article in May that claims of failing Nvidia's tests due to heat and power consumption problems were untrue. Samsung could begin supplying HBM3 for Nvidia's H20 processor as early as August, according to two of the sources. The H20 is the most advanced of three GPUs Nvidia has tailored for the China market after the U.S. tightened export restrictions in 2023, aiming to impede supercomputing and AI breakthroughs that could benefit the Chinese military. In accordance with U.S. sanctions, the H20's computing power has been significantly capped compared to the version sold in non-China markets, the H100. The H20 initially got off to a weak start when deliveries began this year and the U.S. firm priced it below a rival chip from Chinese tech giant Huawei, Reuters reported in May. But sales are now growing rapidly, separate sources have said. In contrast to Samsung, SK Hynix is the main supplier of HBM chips to Nvidia and has been supplying HBM3 since June 2022. It also began supplying HBM3E in late March to a customer it declined to identify. Shipments went to Nvidia, sources have said. Micron has also said it will supply Nvidia with HBM3E. (Reuters)

[6]

Exclusive-Nvidia clears Samsung's HBM3 chips for use in China-market processor, sources say

It was not immediately clear if Nvidia would use Samsung's HBM3 chips in its other AI processors or if the chips would have to pass additional tests before that could happen, they added. Samsung has also yet to meet Nvidia's standards for fifth-generation HBM3E chips and testing of those chips is continuing, the people added, declining to be identified as they were not authorised to speak to media. Nvidia and Samsung declined to comment. HBM is a type of dynamic random access memory or DRAM standard first produced in 2013 in which chips are vertically stacked to save space and reduce power consumption. A key component of GPUs for artificial intelligence, it helps process massive amounts of data produced by complex applications. Nvidia's approval of Samsung's HBM3 chips comes amid soaring demand for sophisticated GPUs created by the generative AI boom that Nvidia and other makers of AI chipsets are struggling to meet. There are only three main manufacturers of HBM - SK Hynix, Micron and Samsung - and with HBM3 also in short supply, Nvidia is keen to see Samsung clear its standards so that it can diversify its supplier base. Nvidia's need to have more access to HBM3 is also set to grow as SK Hynix - the clear leader in the field - plans to increase its HBM3E production and make less HBM3, two of the sources said. SK Hynix declined to comment. Samsung, the world's largest maker of memory chips, has been seeking to pass Nvidia's tests for both HBM3 and HBM3E since last year but has struggled due to heat and power consumption issues, Reuters reported in May, citing sources. Samsung said after the publication of the Reuters article in May that claims of failing Nvidia's tests due to heat and power consumption problems were untrue. Samsung could begin supplying HBM3 for Nvidia's H20 processor as early as August, according to two of the sources. The H20 is the most advanced of three GPUs Nvidia has tailored for the China market after the U.S. tightened export restrictions in 2023, aiming to impede supercomputing and AI breakthroughs that could benefit the Chinese military. In accordance with U.S. sanctions, the H20's computing power has been significantly capped compared to the version sold in non-China markets, the H100. The H20 initially got off to a weak start when deliveries began this year and the U.S. firm priced it below a rival chip from Chinese tech giant Huawei, Reuters reported in May. But sales are now growing rapidly, separate sources have said. In contrast to Samsung, SK Hynix is the main supplier of HBM chips to Nvidia and has been supplying HBM3 since June 2022. It also began supplying HBM3E in late March to a customer it declined to identify. Shipments went to Nvidia, sources have said. Micron has also said it will supply Nvidia with HBM3E. (Reporting by Fanny Potkin in Singapore and Heekyong Yang in Seoul; Editing by Edwina Gibbs)

[7]

Nvidia clears Samsung's HBM3 chips for use in China-market processor - ET Telecom

By Fanny Potkin and Heekyong Yang

SINGAPORE/SEOUL: Samsung Electronics' fourth-generation high bandwidth memory or HBM3 chips have been cleared by Nvidia for use in its processors for the first time, three people briefed on the matter said. But it is somewhat of a muted greenlight as Samsung's HBM3 chips will, for now, only be used in a less sophisticated Nvidia graphics processing unit (GPU), the H20, which has been developed for the Chinese market in compliance with U.S. export controls, the people said. It was not immediately clear if Nvidia would use Samsung's HBM3 chips in its other AI processors or if the chips would have to pass additional tests before that could happen, they added. Samsung has also yet to meet Nvidia's standards for fifth-generation HBM3E chips and testing of those chips is continuing, the people added, declining to be identified as they were not authorised to speak to media. Nvidia and Samsung declined to comment. HBM is a type of dynamic random access memory or DRAM standard first produced in 2013 in which chips are vertically stacked to save space and reduce power consumption. A key component of GPUs for artificial intelligence, it helps process massive amounts of data produced by complex applications. Nvidia's approval of Samsung's HBM3 chips comes amid soaring demand for sophisticated GPUs created by the generative AI boom that Nvidia and other makers of AI chipsets are struggling to meet. There are only three main manufacturers of HBM - SK Hynix, Micron and Samsung - and with HBM3 also in short supply, Nvidia is keen to see Samsung clear its standards so that it can diversify its supplier base.
Nvidia's need to have more access to HBM3 is also set to grow as SK Hynix - the clear leader in the field - plans to increase its HBM3E production and make less HBM3, two of the sources said. SK Hynix declined to comment. Samsung, the world's largest maker of memory chips, has been seeking to pass Nvidia's tests for both HBM3 and HBM3E since last year but has struggled due to heat and power consumption issues, Reuters reported in May, citing sources. Samsung said after the publication of the Reuters article in May that claims of failing Nvidia's tests due to heat and power consumption problems were untrue. Samsung could begin supplying HBM3 for Nvidia's H20 processor as early as August, according to two of the sources. The H20 is the most advanced of three GPUs Nvidia has tailored for the China market after the U.S. tightened export restrictions in 2023, aiming to impede supercomputing and AI breakthroughs that could benefit the Chinese military. In accordance with U.S. sanctions, the H20's computing power has been significantly capped compared to the version sold in non-China markets, the H100. The H20 initially got off to a weak start when deliveries began this year and the U.S. firm priced it below a rival chip from Chinese tech giant Huawei, Reuters reported in May. But sales are now growing rapidly, separate sources have said. In contrast to Samsung, SK Hynix is the main supplier of HBM chips to Nvidia and has been supplying HBM3 since June 2022. It also began supplying HBM3E in late March to a customer it declined to identify. Shipments went to Nvidia, sources have said. Micron has also said it will supply Nvidia with HBM3E.

[8]

Nvidia Greenlights Samsung's High Bandwidth Memory Chips For AI Chips In China: Report - Samsung Electronics Co (OTC:SSNLF), NVIDIA (NASDAQ:NVDA)

Nvidia Corp. (NASDAQ:NVDA) has reportedly given the green light for the use of Samsung Electronics Co Ltd.'s (OTC:SSNLF) fourth-generation high bandwidth memory (HBM3) chips in its AI processors for the Chinese market.

What Happened: This marks the first time that Nvidia has approved the use of Samsung's HBM3 chips. However, the chips will initially be used in a less advanced Nvidia graphics processing unit (GPU), the H20, which is specifically designed for the Chinese market to comply with U.S. export controls, Reuters reported, citing three people briefed on the matter. It remains unclear whether Nvidia will extend the use of Samsung's HBM3 chips to its other AI processors. Samsung has also yet to meet Nvidia's standards for its fifth-generation HBM3E chips, with testing ongoing. According to the report, Samsung could start supplying HBM3 for Nvidia's H20 processor as early as August. The H20 is the most advanced of the three GPUs Nvidia has developed for the Chinese market following the U.S. tightening export restrictions in 2023. These restrictions aim to impede supercomputing and AI advancements that could benefit the Chinese military. The approval comes at a time when there is a significant demand for advanced GPUs due to the generative AI boom. Nvidia's net income has surged to over $23.6 billion, reflecting the soaring demand for these chips. With HBM3 chips also facing a shortage, Nvidia's approval of Samsung's chips is seen as a move to diversify its supplier base. This is especially important as the current leader in the field, SK Hynix, plans to increase its HBM3E production and reduce HBM3 production, according to the report. Samsung and Nvidia did not immediately respond to Benzinga's request for comment.

Why It Matters: Nvidia's approval of Samsung's HBM3 chips is a significant development in the context of ongoing U.S. export restrictions on advanced semiconductors to China. In response to these restrictions, Nvidia is reportedly developing new versions of its AI chips specifically for the Chinese market. Nvidia is working on a new AI chip, named "B20," in collaboration with Inspur, a major distribution partner in China. Additionally, the Chinese government has been urging its tech giants to reduce their reliance on foreign-made chips, including those from Nvidia. China has advised companies like Alibaba and Baidu to increase their purchase of domestic AI chips. Despite U.S. restrictions, companies like Alphabet Inc.'s Google and Microsoft Corp. have been providing Chinese firms access to Nvidia's AI chips through data centers outside China. This move highlights the ongoing demand for Nvidia's technology in China. Furthermore, Nvidia's market value has been soaring, driven by the increasing demand for AI chips. A prominent tech investor predicted that Nvidia could achieve a market capitalization of nearly $50 trillion within the next decade.

Price Action: Nvidia's stock closed at $122.59 on Tuesday, down 0.77% from the previous day. In after-hours trading, the stock further declined by 1.62%. Year to date, the stock has experienced an increase of 154.49%, according to data from Benzinga Pro.

This story was generated using Benzinga Neuro and edited by Kaustubh Bagalkote.

[9]

Samsung's possible HBM supply for NVIDIA draws mixed outlook

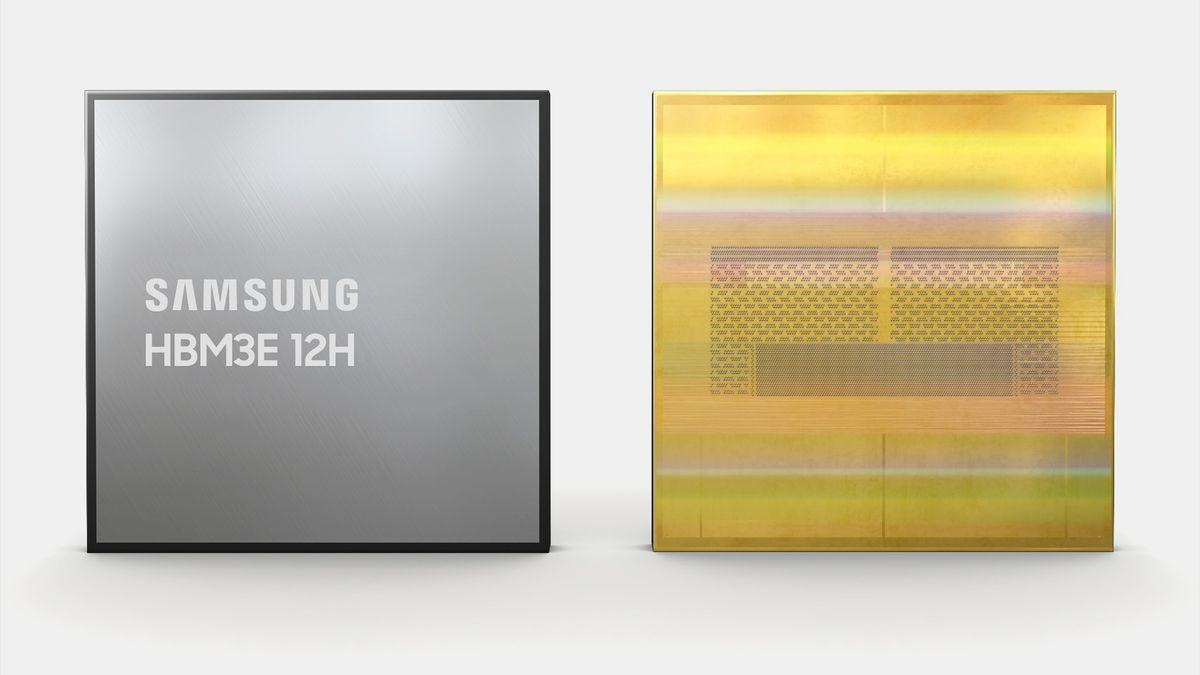

Seen above is NVIDIA CEO Jensen Huang's signature on Samsung Electronics' HBM3E 12H chip at a Samsung Electronics booth at the GTC 2024 in San Jose in the U.S. state of California. This photo was posted on the social media account of Han Jin-man, executive vice president of Samsung Electronics Semiconductor Division America. Captured from Han's social media

Market shows disappointment over delayed tests for advanced HBM chips

By Nam Hyun-woo

Samsung Electronics' fourth-generation high bandwidth memory 3 (HBM3) chips have been cleared by NVIDIA for use in NVIDIA's China-bound processors, marking the first case of Samsung supplying its HBM chips to the artificial intelligence (AI) processor giant, according to a Wednesday report. The report, which Samsung neither confirmed nor denied, also said Samsung's fifth-generation HBM3E chips have yet to meet NVIDIA standards and that tests are continuing, presenting a mixed outlook on the chipmaker's efforts to keep up with the fast-evolving HBM market. Citing multiple unnamed sources, Reuters reported that Samsung's HBM3 chips will, for now, only be used in a less sophisticated NVIDIA graphics processing unit (GPU), the H20, and Samsung could begin supplying HBM3 as early as August.

Samsung Electronics' HBM3E 12H / Courtesy of Samsung Electronics

The H20 has been developed for the Chinese market, and its computing power is capped in compliance with U.S. export controls. HBM, which vertically stacks chips to save space and reduce power consumption, is viewed as a key component of graphics processing units for powering generative AI. Samsung did not confirm or deny the report, with an official noting that "efforts are underway with partner companies to supply HBM chips." Samsung has been striving to supply HBM chips to NVIDIA, which holds a dominant position in GPUs for AI, in order to achieve sustainable improvements in its earnings and challenge SK hynix's leadership in the HBM market.

SK hynix is the main supplier of HBM chips to NVIDIA, supplying HBM3 since June 2022. On March 19, SK hynix announced that it began supplying HBM3E to "a client," which was assumed to be NVIDIA. Along with SK hynix, Micron of the U.S. is also supplying HBM chips to NVIDIA. In comparison, Samsung has been struggling to win NVIDIA's orders. In February, the company announced that it had developed the industry's first 36-gigabyte 12-layer HBM3E and planned to mass produce those chips in the second half of this year, but reports have alleged that Samsung's supply efforts are being delayed due to heat and power consumption issues. NVIDIA CEO Jensen Huang said earlier this month that Samsung has not failed any qualification tests.

NVIDIA's possible clearance of Samsung's fourth-generation chip appears to be a boost for the company's outlook in the HBM market and its earnings. In the DRAM market, HBM accounted for 8.4 percent last year, but its share is expected to jump to 20.1 percent this year. Samsung plans to expand its HBM production capacity by 2.9 times compared to that of last year, in order to expand its market share. Last year, SK hynix was leading the global HBM market with a 53 percent share, followed by Samsung with 38 percent and Micron with 9 percent, according to market tracker TrendForce. Analysts have assumed that NVIDIA may post approximately $12 billion in revenue by selling more than 1 million H20 processors to China this year. Samsung has already predicted that its second-quarter operating profit will likely be 10.4 trillion won ($7.54 billion), a jump of about 1,452 percent from 670 billion won a year ago. For NVIDIA, Samsung's chip manufacturing capacity, the world's largest, is also necessary to meet the soaring demand for its chips and to diversify its supply sources.

New U.S. regulations are casting uncertainty. U.S. media outlets reported earlier this week that the U.S. government may introduce new trade restrictions that could prevent NVIDIA from selling its H20 processors while NVIDIA is preparing another China-oriented processor, the B20. Though it will likely be the first instance of Samsung supplying its HBM chips for NVIDIA, the market appeared to take the news as a disappointment, given hyped expectations that Samsung had already passed HBM3E tests and would likely begin mass production soon. Samsung Electronics closed at 82,000 won, down 2.26 percent from a day earlier. Industry officials assume that further details of Samsung's chip supply for NVIDIA will likely be available during the company's conference call slated for July 31.

[10]

Nvidia makes special server design for new China chip to comply with US rules

Nvidia's (NASDAQ:NVDA) new chip for China has a special server design which would not violate U.S. export restrictions, The Information reported. Earlier this week, it was reported that Nvidia will work with Inspur, one of its main distributor partners in China, on the launch and distribution of the chip which has been initially dubbed as B20. The server tentatively called GB20 and the chip B20 are part of Nvidia's latest chip line, the Blackwell series, which was released in March. The chip, which will launch next year, will be sold alongside the new server, designed to maximize the performance of the chip, the report added citing people involved in developing the server. The B20 chip will be slower in performing AI calculations compared to Blackwell but the slowness is partially offset by installing lots of the chips together in the GB20 server, the report noted. This would enable the products to be competitive versus those from Chinese rivals such as Huawei Technologies, while also adhering to the computing power cap set by U.S. export curbs, the report added.

Nvidia has given the green light to use Samsung's HBM3 memory chips in its AI processors designed for the Chinese market. This move comes amidst ongoing US-China tech tensions and could potentially boost Samsung's market position.

Nvidia's Strategic Move in China

Nvidia, the leading AI chip manufacturer, has approved the use of Samsung's High Bandwidth Memory 3 (HBM3) chips in its artificial intelligence processors targeted at the Chinese market [1]. This decision comes as Nvidia navigates the complex landscape of US-China tech tensions and export restrictions.

Samsung's HBM3 Technology

Samsung's HBM3 chips are advanced memory components crucial for high-performance computing and AI applications. These chips offer faster data processing and improved energy efficiency compared to previous generations [2]. The approval from Nvidia signifies the quality and competitiveness of Samsung's technology in the global semiconductor market.

Impact on Market Dynamics

This development could potentially alter the competitive landscape in the memory chip sector. Samsung, as the world's largest memory chip maker, stands to benefit significantly from this partnership with Nvidia [3]. The move may also impact other players in the industry, such as SK Hynix, another major supplier of HBM chips.

Navigating Export Restrictions

Nvidia's decision to use Samsung's HBM3 chips comes amid ongoing US export controls on advanced chips to China. The company has been working on developing alternative products that comply with these restrictions while still serving the Chinese market [4]. This strategy allows Nvidia to maintain its presence in China, a significant market for AI and high-performance computing.

Future Implications

The collaboration between Nvidia and Samsung could have far-reaching effects on the global semiconductor industry. It may accelerate the development of AI technologies in China while also boosting Samsung's position in the high-end memory market [5]. Furthermore, this partnership could influence future trade policies and technological collaborations between companies from different countries.

Industry Response

While neither Nvidia nor Samsung has officially commented on this development, industry analysts are closely watching its potential impact. The move is seen as a strategic decision by both companies to strengthen their positions in the competitive AI chip market, particularly in China, which remains a key growth area despite geopolitical challenges.

Summarized by Navi
