Nvidia-Backed Enfabrica Unveils EMFASYS: A Cost-Effective Memory Solution for AI Data Centers

2 Sources


[1]

Nvidia-backed Enfabrica releases system aimed at easing memory costs

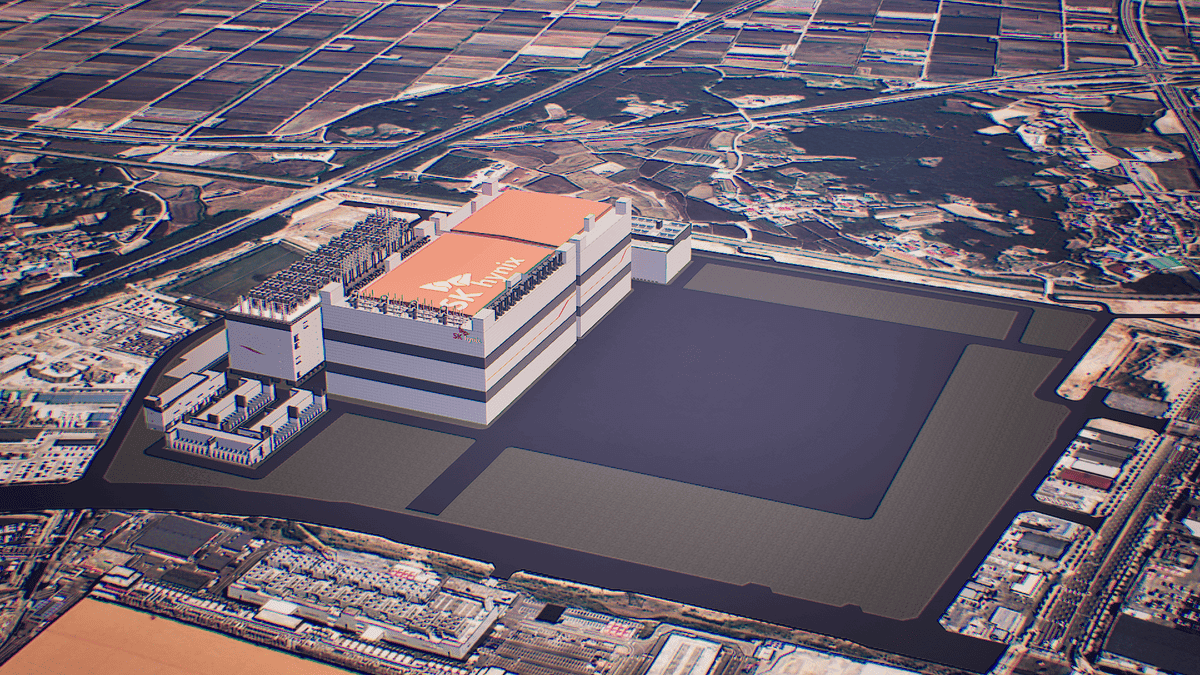

SAN FRANCISCO, July 29 (Reuters) - Enfabrica, a Silicon Valley-based chip startup working on solving bottlenecks in artificial intelligence data centers, on Tuesday released a chip-and-software system aimed at reining in the cost of memory chips in those centers.

Enfabrica, which has raised $260 million in venture capital to date and is backed by Nvidia (NVDA.O), released a system it calls EMFASYS, pronounced like "emphasis."

The system aims to address the fact that a portion of the high cost of flagship AI chips from Nvidia or rivals such as Advanced Micro Devices (AMD.O) is not the computing chips themselves, but the expensive high-bandwidth memory (HBM) attached to them that is required to keep those speedy computing chips supplied with data. Those HBM chips are supplied by makers such as SK Hynix (000660.KS) and Micron Technology (MU.O).

The Enfabrica system uses a special networking chip that it has designed to hook the AI computing chips up directly to boxes filled with another kind of memory chip called DDR5 that is slower than its HBM counterpart but much cheaper.

By using special software, also made by Enfabrica, to route data back and forth between AI chips and large amounts of lower-cost memory, Enfabrica is hoping its chip will keep data center speeds up but costs down as tech companies ramp up chatbots and AI agents, said Enfabrica Co-Founder and CEO Rochan Sankar.

Sankar said Enfabrica has three "large AI cloud" customers using the chip but declined to disclose their names.

"It's not replacing" HBM, Sankar told Reuters. "It is capping (costs) where those things would otherwise have to blow through the roof in order to scale to what people are expecting."

Reporting by Stephen Nellis in San Francisco; Editing by Jamie Freed

[2]

Nvidia-backed Enfabrica releases system aimed at easing memory costs

SAN FRANCISCO (Reuters) - Enfabrica, a Silicon Valley-based chip startup working on solving bottlenecks in artificial intelligence data centers, on Tuesday released a chip-and-software system aimed at reining in the cost of memory chips in those centers.

Enfabrica, which has raised $260 million in venture capital to date and is backed by Nvidia, released a system it calls EMFASYS, pronounced like "emphasis."

The system aims to address the fact that a portion of the high cost of flagship AI chips from Nvidia or rivals such as Advanced Micro Devices is not the computing chips themselves, but the expensive high-bandwidth memory (HBM) attached to them that is required to keep those speedy computing chips supplied with data. Those HBM chips are supplied by makers such as SK Hynix and Micron Technology.

The Enfabrica system uses a special networking chip that it has designed to hook the AI computing chips up directly to boxes filled with another kind of memory chip called DDR5 that is slower than its HBM counterpart but much cheaper.

By using special software, also made by Enfabrica, to route data back and forth between AI chips and large amounts of lower-cost memory, Enfabrica is hoping its chip will keep data center speeds up but costs down as tech companies ramp up chatbots and AI agents, said Enfabrica Co-Founder and CEO Rochan Sankar.

Sankar said Enfabrica has three "large AI cloud" customers using the chip but declined to disclose their names.

"It's not replacing" HBM, Sankar told Reuters. "It is capping (costs) where those things would otherwise have to blow through the roof in order to scale to what people are expecting."

(Reporting by Stephen Nellis in San Francisco; Editing by Jamie Freed)


Enfabrica, a Silicon Valley startup, has introduced EMFASYS, a chip-and-software system designed to reduce memory costs in AI data centers by utilizing cheaper DDR5 memory alongside expensive high-bandwidth memory.

Enfabrica Introduces EMFASYS: A Game-Changing Memory Solution

Enfabrica, a Silicon Valley-based chip startup, has unveiled a chip-and-software system called EMFASYS, aimed at curbing the escalating cost of memory in artificial intelligence (AI) data centers. The company, which has raised $260 million in venture capital and counts Nvidia among its backers, is targeting one of the main cost pressures in AI data centers: the memory that keeps AI chips supplied with data [1].

The High Cost of AI Memory

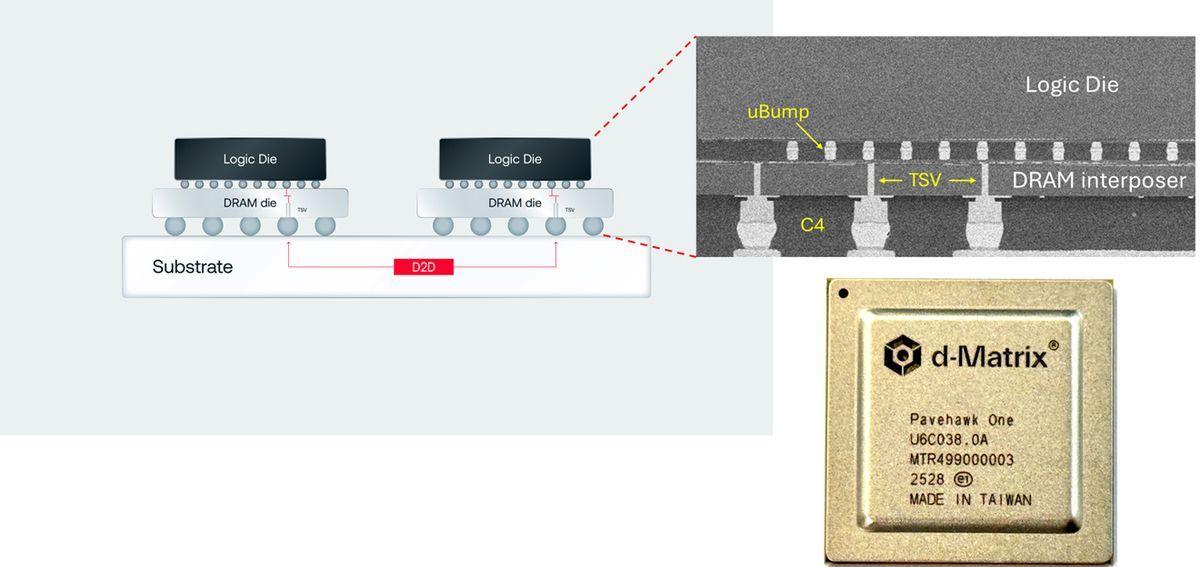

EMFASYS is driven by the recognition that a portion of the expense of flagship AI chips from industry leaders such as Nvidia and Advanced Micro Devices (AMD) comes not from the computing chips themselves but from the costly high-bandwidth memory (HBM) attached to them. HBM is essential for keeping the high-speed computing chips supplied with data and is made by manufacturers such as SK Hynix and Micron Technology [2].

Source: Reuters

EMFASYS: A Cost-Effective Alternative

EMFASYS takes a different approach to memory in AI data centers. It uses a networking chip designed by Enfabrica to connect AI computing chips directly to boxes filled with DDR5 memory chips. DDR5 is slower than HBM but much cheaper, which could translate into substantial cost savings for data center operators [1].

Innovative Software Solution

A key component of EMFASYS is software, also developed by Enfabrica, that routes data back and forth between AI chips and large pools of lower-cost memory. The goal is to keep data center speeds up while holding costs down as tech companies scale up chatbots and AI agents [2].

Industry Impact and Adoption

Enfabrica Co-Founder and CEO Rochan Sankar emphasized that EMFASYS is not intended to replace HBM. Instead, it aims to cap costs that would otherwise "blow through the roof" as AI applications scale to meet expectations. Sankar said three "large AI cloud" customers are already using the chip, though he declined to name them [1].

Future Implications for AI Infrastructure

The introduction of EMFASYS could have far-reaching implications for the AI industry. By potentially reducing one of the most significant cost factors in AI data centers, Enfabrica's solution may enable more companies to scale their AI operations cost-effectively. This development could accelerate the adoption and deployment of AI technologies across various sectors, fostering innovation and competition in the rapidly evolving field of artificial intelligence.


Summarized by Navi

