Nvidia launches Nemotron 3 open source AI models as Meta steps back from transparency

16 Sources

[1]

Nvidia Becomes a Major Model Maker With Nemotron 3

Nvidia has made a fortune supplying chips to companies working on artificial intelligence, but today the chipmaker took a step toward becoming a more serious model maker itself by releasing a series of cutting-edge open models, along with data and tools to help engineers use them. The move, which comes at a moment when AI companies like OpenAI, Google, and Anthropic are developing increasingly capable chips of their own, could be a hedge against these firms veering away from Nvidia's technology over time. Open models are already a crucial part of the AI ecosystem, with many researchers and startups using them to experiment, prototype, and build. While OpenAI and Google offer small open models, they do not update them as frequently as their rivals in China do. For this reason and others, open models from Chinese companies are currently much more popular, according to data from Hugging Face, a hosting platform for open source projects. Nvidia's new Nemotron 3 models are among the best that can be downloaded, modified, and run on one's own hardware, according to benchmark scores shared by the company ahead of release. "Open innovation is the foundation of AI progress," CEO Jensen Huang said in a statement ahead of the news. "With Nemotron, we're transforming advanced AI into an open platform that gives developers the transparency and efficiency they need to build agentic systems at scale." Nvidia is taking a more fully transparent approach than many of its US rivals by releasing the data used to train Nemotron -- a fact that should help engineers modify the models more easily. The company is also releasing tools to help with customization and fine-tuning. The models themselves use a new hybrid latent mixture-of-experts architecture, which Nvidia says is especially good for building AI agents that can take actions on computers or the web. 
The company is also launching libraries that allow users to train agents to do things using reinforcement learning, which involves giving models simulated rewards and punishments. Nemotron 3 models come in three sizes: Nano, which has 30 billion parameters; Super, which has 100 billion; and Ultra, which has 500 billion. A model's parameters loosely correspond to how capable it is as well as how unwieldy it is to run. The largest models are so cumbersome that they need to run on racks of expensive hardware. Kari Ann Briski, vice president of generative AI software for enterprise at Nvidia, said open models are important to AI builders for three reasons: Builders increasingly need to customize models for particular tasks; it often helps to hand queries off to different models; and it is easier to squeeze more intelligent responses from these models after training by having them perform a kind of simulated reasoning. "We believe open source is the foundation for AI innovation, continuing to accelerate the global economy," Briski said. The social media giant Meta released the first advanced open models under the name Llama in February 2023. As competition has intensified, however, Meta has signaled that its future releases might not be open source. The move is part of a larger trend in the AI industry. Over the past year, US firms have moved away from openness, becoming more secretive about their research and more reluctant to tip off their rivals about their latest engineering tricks.

[2]

As Meta fades in open-source AI, Nvidia senses its chance to lead

Nvidia argues it's more open than Meta with data transparency.

Seizing upon a shift in the field of open-source artificial intelligence, chip giant Nvidia, whose processors dominate AI, has unveiled the third generation of its Nemotron family of open-source large language models. The new Nemotron 3 family scales the technology from what had been one-billion-parameter and 340-billion-parameter models (parameters being the number of neural weights) to three new models: 30 billion parameters for Nano, 100 billion for Super, and 500 billion for Ultra. The Nano model, available now on the HuggingFace code hosting platform, increases the throughput in tokens per second by four times and extends the context window -- the amount of data that can be manipulated in the model's memory -- to one million tokens, seven times as large as its predecessor. Nvidia emphasized that the models aim to address several concerns of enterprise users of generative AI, including accuracy and the rising cost of processing an increasing number of tokens each time AI makes a prediction. "With Nemotron 3, we are aiming to solve those problems of openness, efficiency, and intelligence," said Kari Briski, vice president of generative AI software at Nvidia, in an interview with ZDNET before the release. The Super version of the model is expected to arrive in January, and Ultra is due in March or April. Nvidia, Briski emphasized, has increasing prominence in open source. "This year alone, we had the most contributions and repositories on HuggingFace," she told me. It's clear to me from our conversation that Nvidia sees a chance not only to boost enterprise usage, thereby fueling chip sales, but also to seize leadership in open-source development of AI. After all, this field looks like it might lose one of its biggest stars of recent years, Meta Platforms. 
When Meta, owner of Facebook, Instagram, and WhatsApp, first debuted its open-source Llama gen AI technology in February 2023, it was a landmark event: a fast, capable model with some code available to researchers, versus the "closed-source," proprietary models of OpenAI, Google, and others. Llama quickly came to dominate developer attention in open-source tech as Meta unveiled fresh innovations in 2024 and scaled up the technology to compete with the best proprietary frontier models from OpenAI and the rest. But 2025 has been different. The company's rollout of the fourth generation of Llama, in April, was greeted with mediocre reviews and even a controversy about how Meta developed the program. These days, Llama models don't show up in the top 100 models on LMSYS's popular LMArena Leaderboard, which is dominated by proprietary models such as Google's Gemini, xAI's Grok, Anthropic's Claude, and OpenAI's GPT-5.2, and by open-source models such as DeepSeek's models, Alibaba's Qwen models, and the Kimi K2 model developed by Beijing-based Moonshot AI. Charts from the third-party firm Artificial Analysis show a similar ranking. Meanwhile, the recent "State of Generative AI" report from venture capitalists Menlo Ventures blamed Llama for helping to reduce the use of open source in the enterprise. "The model's stagnation -- including no new major releases since the April release of Llama 4 -- has contributed to a decline in overall enterprise open-source share from 19% last year to 11% today," they wrote. Leaderboard scores can come and go, but after a broad reshuffling of its AI team this year, Meta appears poised to place less emphasis on open source. 
A forthcoming Meta project code-named Avocado, wrote Bloomberg reporters Kurt Wagner and Riley Griffin last week, "may be launched as a 'closed' model -- one that can be tightly controlled and that Meta can sell access to," according to their unnamed sources. The move to closed models "would mark the biggest departure to date from the open-source strategy Meta has touted for years," they wrote. Meta's Chief AI Officer, Alexandr Wang, installed this year after Meta invested in his previous company, Scale AI, "is an advocate of closed models," Wagner and Griffin noted. (An article over the weekend by Eli Tan of The New York Times suggested that there have been tensions between Wang and various product leads for Instagram and advertising inside of Meta.) When I asked Briski about Menlo Ventures's claim that open source is struggling, she replied, "I agree about the decline of Llama, but I don't agree with the decline of open source." Added Briski, "Qwen models from Alibaba are super popular, DeepSeek is really popular -- I know many, many companies that are fine-tuning and deploying DeepSeek." While Llama may have faded, it's also true that Nvidia's own Nemotron family has not yet reached the top of the leaderboards. In fact, the family of models lags DeepSeek, Kimi, Qwen, and other increasingly popular offerings. But Nvidia believes it is addressing many of the pain points that plague enterprise deployment specifically. One focus of companies is to "cost-optimize," with a mix of closed-source and open-source models, said Briski. "One model does not make an AI application, and so there is this combination of frontier models and then being able to cost-optimize with open models, and how do I route to the right model." 
The focus on a selection of models, from Nano to Ultra, is expected to address the need for broad coverage of task requirements. The second challenge is to "specialize" AI models for a mix of tasks in the enterprise, ranging from cybersecurity to electronic design automation and healthcare, Briski said. "When we go across all these verticals, frontier models are really great, and you can send some data to them, but you don't want to send all your data to them," she observed. Open-source tech, then, running "on-premise," is crucial, she said, "to actually help the experts in the field to specialize them for that last mile." The third challenge is the exploding cost of tokens, the output of text, images, sound, and other data forms, generated piece by piece when a live model makes predictions. "The demand for tokens from all these models being used is just going up," said Briski, especially with "long-thinking" or "reasoning" models that generate verbose output. "This time last year, each query would take maybe 10 LLM calls," noted Briski. "In January, we were seeing each query making about 50 LLM calls, and now, as people are asking more complex questions, there are 100 LLM calls for every query." To balance demands such as accuracy, efficiency, and cost, the Nemotron 3 models improve upon a popular approach used to control model costs called "mixture of experts" (MoE), where the model can turn on and off groups of the neural network weights to run with less computing effort. The fresh approach, called "latent mixture of experts," used in the Super and Ultra models, compresses the data into a smaller shared representation on which multiple "expert" neural networks then operate.
"We're getting four times better memory usage by reducing the KV-cache," compared to the prior Nemotron, said Briski, referring to the key-value cache, the memory in which a large language model stores intermediate results (the "keys" and "values" computed by its attention layers) for every token it has already processed, so they don't have to be recomputed each time a new token is generated. The more-efficient latent MoE should give greater accuracy at a lower cost while preserving latency (how fast the first token comes back to the user) and throughput (the number of tokens generated per second). In data provided by Artificial Analysis, said Briski, Nemotron 3 Nano surpasses a top model, OpenAI's GPT-OSS, in terms of accuracy of output and the number of tokens generated each second. Another big concern for enterprises is the data that goes into models, and Briski said the company aims to be much more transparent with its open-source approach. "A lot of our enterprise customers can't deploy with some models, or they can't build their business on a model that they don't know what the source code is," she said. That includes the training data. The Nemotron 3 release on HuggingFace includes not only the model weights but also trillions of tokens of training data used by Nvidia for pre-training, post-training, and reinforcement learning. There is a separate data set for "agentic safety," which the company says will provide "real-world telemetry to help teams evaluate and strengthen the safety of complex agent systems." "If you consider the data sets, the source code, everything that we use to train is open," said Briski. "Literally, every piece of data that we train the model with, we are releasing." Meta's team has not been as open, she said. "Llama did not release their data sets at all; they released the weights," Briski told me. When Nvidia partnered with Meta last year to convert the Llama 3.1 models to smaller Nemotron models via a popular approach known as "distillation," she said, Meta withheld resources from Nvidia. 
"Even with us as a great partner, they wouldn't even release a sliver of the data set to help distill the model," she said. "That was a recipe we kind of had to come up with on our own." Nvidia's emphasis on data transparency may help to reverse a worrying trend toward diminished transparency. Scholars at MIT recently conducted a broad study of code repositories on HuggingFace. They reported that truly open-source postings are on the decline, citing "a clear decline in both the availability and disclosure of models' training data." As lead author Shayne Longpre and team pointed out, "The Open Source Initiative defines open source AI models as those which have open model weights, but also 'sufficiently detailed information about their [training] data'," adding, "Without training data disclosure, a released model is considered 'open weight' rather than 'open source'." It's clear Nvidia and Meta have different priorities. Meta needs to make a profit from AI to reassure Wall Street about its planned spending of hundreds of billions of dollars to build AI data centers. Nvidia, the world's most valuable company, needs to ensure it keeps developers hooked on its chip platform, which generates the majority of its revenue. Meta CEO Mark Zuckerberg has suggested Llama is still important, telling Wall Street analysts in October, "As we improve the quality of the model, primarily for post-training Llama 4, at this point, we continue to see improvements in usage." However, he also emphasized moving beyond just having a popular LLM with the new directions his newly formed Meta Superintelligence Labs (MSL) will take. "So, our view is that when we get the new models that we're building in MSL in there, and get, like, truly frontier models with novel capabilities that you don't have in other places, then I think that this is just a massive latent opportunity." 
As for Nvidia, "Large language models and generative AI are the way that you will design software of the future," Briski told me. "It's the new development platform." Support is key, she said, and, in what could be taken as a dig at Zuckerberg's intransigence, though not intended as such, Briski invoked the words of Nvidia founder and CEO Jensen Huang: "As Jensen says, we'll support it as long as we shall live."
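Briski's claim of "four times better memory usage by reducing the KV-cache" is easier to follow with the standard sizing rule: each attention layer caches one key and one value vector per KV head for every token processed so far, so the cache grows linearly with context length and with the number of attention layers. The sketch below assumes hypothetical model dimensions (not Nemotron 3's published configuration) and simply compares an all-attention stack with a hybrid stack where most layers are replaced by state-space layers, as in the Mamba-Transformer design.

```python
def kv_cache_bytes(attn_layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of the key-value cache for one sequence.

    Every attention layer stores one key vector and one value vector (the
    factor of 2) per KV head for every token seen so far. bytes_per_value=2
    assumes a 16-bit format such as BF16.
    """
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model dimensions, for illustration only (not Nemotron's).
KV_HEADS, HEAD_DIM, CONTEXT = 8, 128, 1_000_000

# A 48-layer model in which every layer is an attention layer...
all_attention = kv_cache_bytes(48, KV_HEADS, HEAD_DIM, CONTEXT)
# ...versus a hybrid of the same depth where only 6 layers use attention and
# the other 42 are assumed to be state-space layers, whose small fixed-size
# state is ignored here because it does not grow with the context length.
hybrid = kv_cache_bytes(6, KV_HEADS, HEAD_DIM, CONTEXT)

print(f"all-attention: {all_attention / 2**30:.1f} GiB per 1M-token sequence")
print(f"hybrid:        {hybrid / 2**30:.1f} GiB per 1M-token sequence")
print(f"reduction:     {all_attention // hybrid}x")
```

The 8x reduction here follows entirely from the made-up 6-of-48 layer split; it is not a reconstruction of Nvidia's 4x figure, which compares Nemotron 3 against its predecessor.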

[3]

Not enough good American open models? Nvidia wants to help

Nemotron 3 is a grab bag of 2025's top machine learning advancements

For many, enterprise AI adoption depends on the availability of high-quality open-weights models. Exposing sensitive customer data or hard-fought intellectual property to APIs so you can use closed models like ChatGPT is a non-starter. Outside of Chinese AI labs, the few open-weights models available today don't compare favorably to the proprietary models from the likes of OpenAI or Anthropic. This isn't just a problem for enterprise adoption; it's a roadblock to Nvidia's agentic AI vision that the GPU giant is keen to clear. On Monday, the company added three new open-weights models of its own design to its arsenal. Open-weights models are nothing new for Nvidia -- most of the company's headcount is composed of software engineers. However, its latest generation of Nemotron LLMs is by far its most capable and open. When they launch, the models will be available in three sizes, Nano, Super, and Ultra, which weigh in at about 30, 100, and 500 billion parameters, respectively. In addition to the model weights, which will roll out on popular AI repos like Hugging Face over the next few months beginning with Nemotron 3 Nano this week, Nvidia has committed to releasing training data and the reinforcement learning environments used to create them, opening the door to highly customized versions of the models down the line. The models also employ a novel "hybrid latent MoE" architecture designed to minimize performance losses when processing long input sequences, like ingesting large documents and processing queries against them. This is possible using a combination of the Mamba-2 and Transformer architectures throughout the model's layers. Mamba-2 is generally more efficient than transformers when processing long sequences, which results in shorter prompt processing times and more consistent token generation rates. 
Nvidia says that it's using transformer layers to maintain "precise reasoning" and prevent the model from losing context of the relevant information, a known challenge when ingesting long documents or keeping track of details over extended chat sessions. Speaking of which, these models natively support a million-token context window -- the equivalent of roughly 3,000 double-spaced pages of text. All of these models employ a mixture-of-experts (MoE) architecture, which means only a fraction of the total parameter count is activated for each token processed and generated. This puts less pressure on the memory subsystem, resulting in faster throughput than an equivalent dense model on the same hardware. For example, Nemotron 3 Nano has 30 billion parameters but only 3 billion are activated for each token generated. While the Nano model employs a pretty standard MoE architecture not unlike those seen in gpt-oss or Qwen3-30B-A3B, the larger Super and Ultra models were pretrained using Nvidia's NVFP4 data type and use a new latent MoE architecture. As Nvidia explains it, using this approach, "experts operate on a shared latent representation before outputs are projected back to token space. This approach allows the model to call on 4x more experts at the same inference cost, enabling better specialization around subtle semantic structures, domain abstractions, or multi-hop reasoning patterns." Finally, these models have been engineered to use "multi-token prediction," a spin on speculative decoding, which we've explored in detail previously, that can improve inference performance by up to 3x by predicting future tokens each time a new one is generated. Speculative decoding is particularly useful in agentic applications where large quantities of information are repeatedly processed and regenerated, like code assistants. 
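Nvidia's multi-token prediction is described here only as a spin on speculative decoding, so the sketch below shows the generic greedy draft-and-verify loop rather than Nvidia's own method: a cheap draft model proposes up to `k` tokens, the full (target) model checks them, and the longest agreeing prefix is kept along with one corrected or bonus token from the target. The two toy "models" are hypothetical functions over integer tokens. In this greedy form the output is exactly what the target would produce on its own; the real-world speedup comes from the target verifying all drafted positions in one batched forward pass, which this sequential sketch does not model.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(target: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one target-model call per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(target(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], n_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: draft k tokens, keep what the target agrees with."""
    out = list(prompt)
    goal = len(prompt) + n_new
    while len(out) < goal:
        # 1) The cheap drafter proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(out))):
            proposal.append(draft(out + proposal))
        # 2) The target checks each drafted position (sequentially here, for clarity).
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # replace the first mismatch with the target's token
                break
            accepted.append(tok)
        else:
            # Every drafted token matched: the target's next prediction is a free bonus token.
            if len(out) + len(accepted) < goal:
                accepted.append(target(out + accepted))
        out.extend(accepted)
    return out


# Toy models: the drafter agrees with the target except after tokens divisible by 3.
target = lambda seq: (3 * seq[-1] + 1) % 10
draft = lambda seq: (3 * seq[-1] + 1) % 10 if seq[-1] % 3 else 5

assert speculative_decode(target, draft, [1], 12) == greedy_decode(target, [1], 12)
```

Each loop iteration adds at least one token and never overshoots the requested length, so the loop always terminates; the closing `assert` checks the key property that verification makes drafting "free" in terms of output quality.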
Nvidia's 30-billion-parameter Nemotron 3 Nano is available this week, and is designed to run efficiently on enterprise hardware like the vendor's L40S or RTX Pro 6000 Server Edition. However, using 4-bit quantized versions of the model, it should be possible to cram it into GPUs with as little as 24GB of video memory. According to Artificial Analysis, the model delivers performance on par with models like gpt-oss-20B or Qwen3 VL 32B and 30B-A3B, while offering enterprises far greater flexibility for customization. One of the go-to methods for model customization is reinforcement learning (RL), which enables users to teach the model new information or approaches through trial and error, where desirable outcomes are rewarded while undesirable ones are punished. Alongside the new models, Nvidia is releasing RL datasets and training environments, which it calls NeMo Gym, to help enterprises fine-tune the models for their specific applications or agentic workflows. Nemotron 3 Super and Ultra are expected to make their debut in the first half of next year.
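The 24GB figure follows from simple arithmetic on weight storage: parameters times bits per parameter, divided by 8 to get bytes. This is a minimal weights-only sketch; the KV cache, activations, and quantization scale factors all need extra room, so treat the results as lower bounds. Note that the MoE design cuts compute per token, not weight memory: all 30 billion parameters must be resident even though only about 3 billion are active for any one token.

```python
def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and per-block quantization scales,
    so real deployments need headroom beyond this figure.
    """
    return params * bits_per_param / 8 / 1e9


# All experts must be resident, even though only ~3B parameters are active per token.
NANO_TOTAL_PARAMS = 30e9

for label, bits in [("BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gb(NANO_TOTAL_PARAMS, bits):.0f} GB")
```

At 4 bits the weights come to about 15 GB, which leaves roughly 9 GB on a 24GB card for the cache and activations; at BF16 the same weights need about 60 GB.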

[4]

Nvidia bets on open infrastructure for the agentic AI era with Nemotron 3

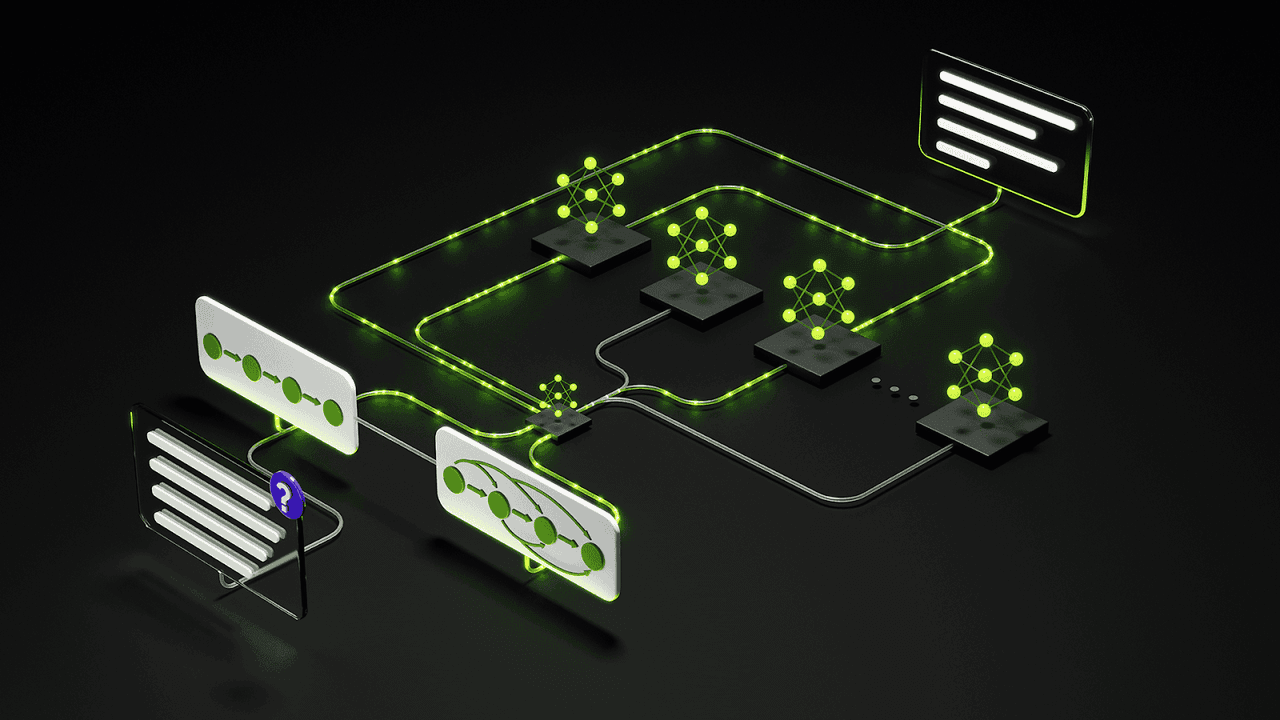

The company is positioning its new offerings as a business-ready way for enterprises to build domain-specific agents without first needing to create foundation models. AI agents must be able to cooperate, coordinate, and execute across large contexts and long time periods, and this, says Nvidia, demands a new type of infrastructure, one that is open. The company says it has the answer with its new Nemotron 3 family of open models. Developers and engineers can use the new models to create domain-specific AI agents or applications without having to build a foundation model from scratch. Nvidia is also releasing most of its training data and its reinforcement learning (RL) libraries for use by anyone looking to build AI agents.

[5]

Nvidia Wants To Be Your Open Source Model Provider

It seems that chip dominance isn't enough for Nvidia. The tech giant wants its products to be present at every level of the AI development ecosystem, and it has its eyes on open-source AI models. Nvidia released its latest Nemotron 3 family of open models on Monday, only months after announcing the first iteration at the company's GTC event in March. The company press release claims they possess "leading accuracy for building agentic AI applications." Some users, like firms or governments, often prefer open-source AI because it can be customized to the hyper-specific needs of their industry, and because it is more private: organizations can run the models on their own internal servers instead of the AI company's. American tech giants had largely drawn back from the world of open source models as the companies increasingly opted for proprietary options that they can arguably make more money out of. Largely the only exception to this has been Meta, which is also reportedly eyeing a shift to proprietary models. Even OpenAI, which purports to be an "open" organization, unveiled its first open source model in five years only this past August, with the release of gpt-oss-120b and gpt-oss-20b. In Silicon Valley's relative absence, Chinese models have dominated the open source space with high-profile releases from DeepSeek and Alibaba. It's all part of China's strategy to achieve global AI dominance: an "AI for all" approach would provide broader reach and greater influence on the global industry, especially at a time when Beijing believes that it can produce AI chips powerful enough to become a sizable competitor to Nvidia's offerings. From the looks of it, Nvidia CEO Jensen Huang is worried about this. He has taken almost every opportunity over the past few months to urge Washington to take this possibility seriously. At the company's GTC conference held in D.C. back in October, Huang also warned attendees of China's open source dominance. 
He argued that because China is a huge creator of open source software, if Americans retreat completely from China they might risk being "ill-prepared" for when Chinese software "permeates the world." Despite not being a big consumer-facing creator of AI models (aside from its AI face generator StyleGAN back in the pre-ChatGPT days), Nvidia seems to be taking matters into its own hands with this China situation. Company executives made it clear that it's not proprietary models they are trying to compete with. But when it comes to open source, they want to be a bigger player as fellow American tech giants might be conceding and shifting focus. Nvidia sees Nemotron as a tool to drive further reliance worldwide on its hardware empire. By giving out this open model to developers worldwide, it is hoping to ensure that any new models built will be best aligned with its silicon rather than the rapidly emerging industry in China. "When we're the best development platform, again, people are going to choose us, choose our platform, choose our GPU, in order to be able to not just build today, but also build for tomorrow," Nvidia's vice president of generative AI software for enterprise Kari Briski said at a press briefing ahead of the release. Briski believes that a reliable and consistent roadmap of new model releases can help Nvidia achieve this open model edge. "We recognize that people developing AI applications can't rely on a model when there's only been just one model release and no road map," Briski said, adding that Nvidia will be investing in building that reliability and consistency and is already eyeing new models. "GPT-oss is a fantastic model. OpenAI is a great partner; we love them. The last time they put out an open model was over five years ago."

[6]

From Slurm to Nemotron 3, Nvidia unveils big open-source push

Nvidia launched Nemotron 3 models, including Nano, Super, and Ultra sizes for AI tasks

Nvidia has announced a major expansion of its open source efforts, combining a software acquisition with new open AI models. The company said it has acquired SchedMD, the developer of Slurm, an open source workload management system widely used in high-performance computing and AI. Nvidia will continue to operate Slurm as vendor-neutral software, ensuring compatibility with diverse hardware and maintaining support for existing HPC and AI customers. Slurm manages scheduling, queuing, and resource allocation across large computing clusters that run parallel tasks. More than half of the top 10 and top 100 supercomputers listed in the TOP500 rankings rely on Slurm, and enterprises, cloud providers, research labs, and AI companies use the system across industries including autonomous driving, healthcare, energy, financial services, manufacturing, and government. Slurm works with Nvidia's latest hardware, and its developers continue to adapt it for high-performance AI workloads. Alongside the acquisition, Nvidia also introduced the Nemotron 3 family of open models, including Nano, Super, and Ultra sizes. The models use a hybrid mixture-of-experts architecture to support multi-agent AI systems. Nemotron 3 Nano focuses on efficient task execution, Nemotron 3 Super supports collaboration across multiple AI agents, and Nemotron 3 Ultra handles complex reasoning workflows. Nvidia provides these models with associated datasets, reinforcement learning libraries, and NeMo Gym training environments. Nemotron 3 models run on Nvidia accelerated computing platforms, including workstations and large AI clusters. Developers can combine open models with proprietary systems in multi-agent workflows, using public clouds or enterprise platforms. 
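Slurm's core job, as described above, is scheduling, queuing, and resource allocation on a shared cluster. Slurm itself is far more elaborate (partitions, priorities, backfill scheduling), so the sketch below is only a hypothetical toy, not Slurm's behavior or API: a strict first-in-first-out queue that starts each job once enough GPUs are free and otherwise advances the clock to the next job completion. `Job` and `fifo_schedule` are invented names for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Tuple


@dataclass
class Job:
    name: str
    gpus: int      # GPUs requested
    duration: int  # run time in abstract time steps


def fifo_schedule(jobs: List[Job], total_gpus: int) -> Dict[str, Tuple[int, int]]:
    """Strict first-in-first-out scheduling on a single pool of GPUs.

    Returns {job name: (start time, end time)}. The job at the head of the
    queue blocks everyone behind it until enough GPUs are free (no backfill).
    """
    queue: Deque[Job] = deque(jobs)
    running: List[Tuple[int, Job]] = []  # (end time, job)
    free, now = total_gpus, 0
    timeline: Dict[str, Tuple[int, int]] = {}
    while queue:
        head = queue[0]
        if head.gpus > total_gpus:
            raise ValueError(f"{head.name} requests more GPUs than the cluster has")
        if head.gpus <= free:
            # Allocate GPUs and start the job immediately.
            queue.popleft()
            free -= head.gpus
            running.append((now + head.duration, head))
            timeline[head.name] = (now, now + head.duration)
            continue
        # Not enough free GPUs: jump ahead to the earliest completion and release its GPUs.
        running.sort(key=lambda item: item[0])
        end, finished = running.pop(0)
        now, free = end, free + finished.gpus
    return timeline


jobs = [Job("A", gpus=8, duration=3), Job("B", 4, 2), Job("C", 4, 2), Job("D", 2, 1)]
print(fifo_schedule(jobs, total_gpus=8))
```

Here job A takes the whole 8-GPU pool, B and C start together when A finishes, and D waits for one of them. A production scheduler would also backfill small jobs like D into idle GPUs when doing so does not delay the head of the queue.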
Nvidia provides tools, libraries, and datasets to support training, evaluation, and deployment across varied computing environments. Nvidia has released three trillion tokens of pre-training, post-training, and reinforcement learning data for Nemotron 3 models. Additional AI tools, including NeMo RL and NeMo Evaluator, offer model evaluation and safety assessment. Early adopters integrating Nemotron 3 include companies in software, cybersecurity, media, manufacturing, and cloud services. Nvidia has made open source models, AI tools, and datasets available on GitHub and Hugging Face for developers building agentic AI applications. "Open innovation is the foundation of AI progress," Jensen Huang, founder and CEO of Nvidia, wrote in the company's press release. "With Nemotron, we're transforming advanced AI into an open platform that gives developers the transparency and efficiency they need to build agentic systems at scale."

[7]

Nvidia debuts Nemotron 3 with hybrid MoE and Mamba-Transformer to drive efficient agentic AI

Nvidia launched the new version of its frontier models, Nemotron 3, by leaning in on a model architecture that the world's most valuable company said offers more accuracy and reliability for agents. Nemotron 3 will be available in three sizes: Nemotron 3 Nano, with 30B parameters, mainly for targeted, highly efficient tasks; Nemotron 3 Super, a 100B-parameter model for multi-agent applications with high-accuracy reasoning; and Nemotron 3 Ultra, with its large reasoning engine and around 500B parameters, for more complex applications. To build the Nemotron 3 models, Nvidia said it leaned into a hybrid mixture-of-experts (MoE) architecture to improve scalability and efficiency. By using this architecture, Nvidia said in a press release, its new models also offer enterprises more openness and performance when building multi-agent autonomous systems. Kari Briski, Nvidia vice president for generative AI software, told reporters in a briefing that the company wanted to demonstrate its commitment to learning and improving from previous iterations of its models. "We believe that we are uniquely positioned to serve a wide range of developers who want full flexibility to customize models for building specialized AI by combining that new hybrid mixture of our mixture of experts architecture with a 1 million token context length," Briski said. Nvidia said early adopters of the Nemotron 3 models include Accenture, CrowdStrike, Cursor, Deloitte, EY, Oracle Cloud Infrastructure, Palantir, Perplexity, ServiceNow, Siemens and Zoom.

Breakthrough architectures

Nvidia has been using hybrid Mamba-Transformer architectures for many of its models, including Nemotron-Nano-9B-v2. The architecture is based on research from Carnegie Mellon University and Princeton, which weaves in selective state-space models to handle long pieces of information while maintaining states. It can reduce compute costs even over long contexts. 
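The "selective state-space" idea can be illustrated with a single-channel recurrence: the layer carries a fixed-size state from token to token instead of caching every past token, and "selective" means the amounts it keeps and writes depend on the current input. This is a minimal sketch under simplifying assumptions (one scalar channel, a sigmoid gate, hypothetical parameters `w_a`, `w_b`, `c`); it is not Mamba-2's actual discretization or parameterization.

```python
import math
from typing import List


def selective_scan(xs: List[float], w_a: float = 1.5, w_b: float = 1.0,
                   c: float = 1.0) -> List[float]:
    """Toy single-channel selective state-space scan.

    Recurrence: h_t = a_t * h_{t-1} + b_t * x_t, output y_t = c * h_t.
    "Selective" means a_t (how much of the old state to keep) and b_t (how much
    of the new input to write) are computed from the current input x_t.
    """
    h = 0.0  # the layer's entire memory: one number, however long xs is
    ys: List[float] = []
    for x in xs:
        # Input-dependent keep factor in (0, 1); tanh form of the sigmoid avoids overflow.
        a_t = 0.5 * (1.0 + math.tanh(0.5 * w_a * x))
        b_t = w_b * (1.0 - a_t)  # write strength is larger when the keep factor is small
        h = a_t * h + b_t * x
        ys.append(c * h)
    return ys


print(selective_scan([0.5, -1.0, 2.0, 0.0]))
```

Processing a longer input only lengthens the loop; the state `h` stays one number, which is the property that lets hybrid models avoid a per-token key-value cache in these layers.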
Nvidia noted its design "achieves up to 4x higher token throughput" compared to Nemotron 2 Nano and can significantly lower inference costs by reducing reasoning token generation by up to 60%. "We really need to be able to bring that efficiency up and the cost per token down. And you can do it through a number of ways, but we're really doing it through the innovations of that model architecture," Briski said. "The hybrid Mamba transformer architecture runs several times faster with less memory, because it avoids these huge attention maps and key value caches for every single token." Nvidia also introduced an additional innovation for the Nemotron 3 Super and Ultra models. For these, Briski said Nvidia deployed "a breakthrough called latent MoE." "That's all these experts that are in your model share a common core and keep only a small part private. It's kind of like chefs sharing one big kitchen, but they need to get their own spice rack," Briski added. Nvidia is not the only company that employs the hybrid Mamba-Transformer architecture to build models. AI21 Labs uses it for its Jamba models, most recently in its Jamba Reasoning 3B model. The Nemotron 3 models benefited from extended reinforcement learning. The larger models, Super and Ultra, used the company's 4-bit NVFP4 training format, which allows them to train on existing infrastructure without compromising accuracy. Benchmark testing from Artificial Analysis placed the Nemotron models highly among models of similar size.

New environments for models to 'work out'

As part of the Nemotron 3 launch, Nvidia will also give users access to its research by releasing its papers and sample prompts, offering open datasets where people can use and look at pre-training tokens and post-training samples, and most importantly, a new NeMo Gym where customers can let their models and agents "work out." 
The NeMo Gym is a reinforcement learning lab where users can let their models run in simulated environments to test their post-training performance. AWS announced a similar tool through its Nova Forge platform, targeted at enterprises that want to test out their newly created distilled or smaller models. Briski said the samples of post-training data Nvidia plans to release "are orders of magnitude larger than any available post-training data set and are also very permissive and open." Nvidia said it is releasing more information about how it trains its models because developers want highly intelligent, performant open models and need to understand them well enough to guide them when necessary. "Model developers today hit this tough trifecta. They need to find models that are ultra open, that are extremely intelligent and are highly efficient," she said. "Most open models force developers into painful trade-offs between efficiencies like token costs, latency, and throughput." She said developers want to know how a model was trained, where the training data came from and how they can evaluate it.
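NeMo Gym's own interfaces are not described in these reports, so the following is a generic, hypothetical sketch of the loop an RL environment automates: the environment poses a task, the policy acts, the environment returns a reward, and rewarded behavior becomes more likely. `ToolChoiceEnv` and `train_reinforce` are invented for illustration; the update is the standard REINFORCE rule with a running-average baseline, applied here to four preference scores rather than to a language model's token probabilities.

```python
import math
import random
from typing import List


class ToolChoiceEnv:
    """Hypothetical one-step environment: pick the right tool out of n_tools.

    Reward is 1.0 for the correct tool and 0.0 otherwise.
    """

    def __init__(self, n_tools: int, correct: int):
        self.n_tools = n_tools
        self.correct = correct

    def step(self, action: int) -> float:
        return 1.0 if action == self.correct else 0.0


def softmax(prefs: List[float]) -> List[float]:
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_reinforce(env: ToolChoiceEnv, episodes: int = 2000,
                    lr: float = 0.1, seed: int = 0) -> List[float]:
    """REINFORCE with a running-average baseline on a softmax policy.

    Returns the final action probabilities; rewarded actions become more likely.
    """
    rng = random.Random(seed)
    prefs = [0.0] * env.n_tools
    baseline = 0.0
    for _ in range(episodes):
        probs = softmax(prefs)
        action = rng.choices(range(env.n_tools), weights=probs)[0]
        reward = env.step(action)
        advantage = reward - baseline  # better or worse than usual?
        baseline += 0.01 * (reward - baseline)
        for a in range(env.n_tools):
            # Gradient of log pi(action) w.r.t. pref_a for a softmax policy: 1[a == action] - pi(a)
            grad = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad
    return softmax(prefs)


probs = train_reinforce(ToolChoiceEnv(n_tools=4, correct=2))
print([round(p, 3) for p in probs])
```

The baseline is a common design choice: subtracting the typical reward means a success only pushes hard when it is better than usual, which reduces the variance of the updates.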

[8]

Analysis: Nvidia Nemotron-3 open models lead to more efficient agentic AI - SiliconANGLE

Artificial intelligence leader Nvidia Corp. on Monday announced the Nemotron-3 family of models, data and tools, and the release is further evidence of the company's commitment to the open ecosystem, with a focus on delivering the highly efficient, accurate and transparent models needed to build sophisticated agentic AI applications. Nvidia executives, including Chief Executive Jensen Huang, have talked about the importance of open source in democratizing access to AI models, tools and software to create a "rising tide" that brings AI to everyone. The announcement underscores Nvidia's belief that open source is the foundation of AI innovation, driving global collaboration and lowering the barrier to entry for diverse developers. As large language models achieve reasoning accuracy suitable for enterprise applications, Nvidia highlighted in an analyst prebrief three critical challenges facing businesses today: Nvidia's answer to the above challenges is the Nemotron-3 family, characterized by its focus on being open, accurate and efficient. The new models use a hybrid Mamba-Transformer mixture-of-experts, or MoE, architecture. This design dramatically improves efficiency, as it runs several times faster with reduced memory requirements. The Nemotron-3 family will be rolled out in three sizes, catering to different compute needs and performance requirements: Nemotron-3 offers leading accuracy within its class, as evidenced by independent benchmarks from testing firm Artificial Analysis. In one test, Nemotron-3 Nano was shown to be the most open and intelligent model in its class of small reasoning models. Furthermore, the model's competitive advantage comes from its focus on token efficiency and speed. On the call, Nvidia highlighted Nemotron-3's tokens-to-intelligence ratio, which is crucial as demand for tokens from cooperating agents increases.
A significant feature of this family is the 1 million-token context length. This massive context window allows the models to perform dense, long-range reasoning at lower cost, enabling them to process full code bases, long technical specifications and multiday conversations within a single pass. A core component of the Nemotron-3 release is the use of NeMo Gym environments and data sets for reinforcement learning, or RL. This provides the exact tools and infrastructure Nvidia used to train Nemotron-3. The company is the first to release open, state-of-the-art, full reinforcement learning environments, alongside the open models, libraries and data to help developers build more accurate and capable, specialized agents. The RL framework allows developers to pick up the environment and start generating specialized training data in hours. The process involves: This systematic loop enables models to get better at choosing actions that earn higher rewards, like a student improving their skills through repeated, guided practice. Nvidia released 12 Gym environments targeting high-impact tasks like competitive coding, math and practical calendar scheduling. The Nemotron release is backed by a substantial commitment across three areas: Nvidia is releasing the actual code used to train Nemotron-3, ensuring full transparency. This includes the Nemotron-3 research paper detailing techniques like synthetic data generation and RL. Nvidia researchers continue to push the boundaries of AI, with notable research including: Nvidia is shifting the data narrative from big data to smart and improved data curation and quality. To accomplish this, the company is releasing several new data sets: Nvidia is providing reference blueprints to accelerate adoption, integrating Nemotron-3 models and acceleration libraries: The Nemotron ecosystem is broad, with day-zero support for Nemotron-3 on platforms such as Amazon Bedrock. Key partners such as CrowdStrike Holdings Inc. and ServiceNow Inc. 
are actively using Nemotron data and tools, with ServiceNow noting that 15% of the pretraining data for its Apriel 1.6 Thinker model came from an Nvidia Nemotron data set. The industry is winding down the hype phase of AI, and we should start to see more production use cases. The Nemotron 3 family is well-suited for this era, as it provides a performant and efficient open-source foundation for developing the next generation of agentic AI, reinforcing Nvidia's deep commitment to democratizing AI innovation.
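The reward-driven loop described above, in which a model gets better at choosing actions that earn higher rewards, can be illustrated with a textbook toy: a policy-gradient (REINFORCE-style) learner on a three-armed bandit. It samples an action, receives a noisy reward from the environment and shifts probability toward actions that beat its running-average reward. This is a generic sketch for intuition only, not NeMo Gym's API; the environment and its reward values are invented.

```python
"""Toy reward-driven training loop (REINFORCE on a multi-armed bandit).

A textbook illustration of "act, get a reward, update the policy",
not NeMo Gym's API; the environment and reward values are made up.
"""
import math
import random


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train(true_rewards, steps=2000, lr=0.1, seed=0):
    """Return the final action probabilities after `steps` updates."""
    rng = random.Random(seed)
    prefs = [0.0] * len(true_rewards)  # policy parameters (action preferences)
    baseline = 0.0  # running average reward, reduces update variance
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        # 1. The policy chooses an action.
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        # 2. The environment scores it (noisy reward around a hidden mean).
        reward = true_rewards[action] + rng.gauss(0.0, 0.1)
        # 3. Update: raise the chosen action's preference if it beat the baseline,
        #    using the softmax log-probability gradient (indicator minus prob).
        baseline += (reward - baseline) / t
        advantage = reward - baseline
        for a in range(len(prefs)):
            grad = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad
    return softmax(prefs)


if __name__ == "__main__":
    final_probs = train([0.2, 0.5, 0.9])
    print([round(p, 3) for p in final_probs])
```

After training, most of the probability mass should sit on the third action, the one with the highest hidden reward. Real RL post-training of a language model replaces the bandit with multi-step environments and a learned verifier or reward function, but the act-score-update cycle is the same in spirit.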

[9]

NVIDIA Unveils Nemotron 3 Open Models to Power Multi-Agent AI Systems | AIM

The Nemotron 3 lineup includes Nano, Super and Ultra models built on a hybrid latent mixture-of-experts (MoE) architecture. NVIDIA on Monday announced the NVIDIA Nemotron 3 family of open models, datasets and libraries aimed at supporting the development of transparent and efficient multi-agent AI systems across industries. The Nemotron 3 lineup includes Nano, Super and Ultra models built on a hybrid latent mixture-of-experts (MoE) architecture, which NVIDIA says is designed to reduce inference costs, limit context drift and improve coordination among multiple AI agents. "Open innovation is the foundation of AI progress," NVIDIA founder and CEO Jensen Huang said. "With Nemotron, we're transforming advanced AI into an open platform that gives developers the transparency and efficiency they need to build agentic systems at scale." Among the three models, Nemotron 3 Nano is available immediately. It is a 30-billion-parameter model that activates up to 3 billion parameters per task and is optimised for low-cost inference use cases such as software debugging, summarisation and AI assistants. NVIDIA said the model delivers up to four times higher token throughput than Nemotron 2 Nano and reduces reasoning token generation by up to 60%. It is available on Hugging Face and through inference providers such as Baseten, DeepInfra, Fireworks, FriendliAI, OpenRouter and Together AI. The model is also offered as an NVIDIA NIM microservice for deployment on NVIDIA-accelerated infrastructure. Nemotron 3 Nano will also be available on AWS via Amazon Bedrock and supported on multiple cloud platforms in the coming months. On the other hand, Nemotron 3 Super is a roughly 100-billion-parameter model, designed for multi-agent applications requiring low latency, while Nemotron 3 Ultra, with about 500 billion parameters, is intended for deep reasoning and long-horizon planning tasks. 
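The gap between total and active parameter counts comes from sparse mixture-of-experts routing: a small router scores every expert for each token and only the top-scoring few actually run. Below is a minimal, generic top-k MoE layer in pure Python. The dimensions, expert count and k are hypothetical, and it deliberately does not attempt to reproduce Nvidia's hybrid latent MoE design, whose internals the reports describe only by analogy.

```python
"""Generic top-k mixture-of-experts (MoE) routing sketch in pure Python.

Hypothetical sizes throughout; this is a textbook sparse MoE layer,
not Nvidia's hybrid latent MoE architecture.
"""
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


class TopKMoE:
    def __init__(self, dim, num_experts, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        # Router: one scoring vector per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a dim x dim matrix standing in for a feed-forward block.
        self.experts = [[[rng.gauss(0, 0.1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        """Return (output, indices of experts used) for one token vector x."""
        scores = [dot(w, x) for w in self.router]
        top = sorted(range(len(scores)), key=lambda i: scores[i],
                     reverse=True)[: self.k]
        gates = softmax([scores[i] for i in top])  # renormalize over chosen experts
        out = [0.0] * len(x)
        for gate, i in zip(gates, top):
            y = [dot(row, x) for row in self.experts[i]]  # only k experts compute
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, top


if __name__ == "__main__":
    moe = TopKMoE(dim=8, num_experts=16, k=2)
    out, used = moe.forward([0.5] * 8)
    print(f"experts used for this token: {used} ({len(used)}/16 expert blocks run)")
    # Active share implied by the reported totals (billions of parameters).
    for name, total, active in [("Nano", 30, 3), ("Super", 100, 10), ("Ultra", 500, 50)]:
        print(f"{name}: {active / total:.0%} of parameters active per token")
```

Using the totals reported for the three models (30B/3B, 100B/10B and 500B/50B), each activates roughly a tenth of its parameters per token, which is why a large MoE model can cost far less to run than a dense model of the same size.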
Both Super and Ultra use NVIDIA's 4-bit NVFP4 training format on Blackwell GPUs to reduce memory requirements. These models are expected to be available in the first half of 2026. The launch comes as companies move beyond single AI chatbots toward collaborative agent-based systems, where multiple models work together on complex workflows. According to NVIDIA, Nemotron 3 allows developers to route tasks between frontier proprietary models and open Nemotron models within the same workflow to balance reasoning capability and cost efficiency. NVIDIA said the Nemotron 3 family also aligns with its sovereign AI strategy, allowing governments and enterprises to deploy models tailored to local data, regulations and policy requirements. Organisations across Europe and South Korea are among those adopting the open models, the company said. Several enterprise customers and partners, including Accenture, Deloitte, EY, Oracle Cloud Infrastructure, Palantir, Perplexity, ServiceNow, Siemens, Synopsys and Zoom, are integrating Nemotron models into AI workflows spanning manufacturing, cybersecurity, software development and communications. Perplexity CEO Aravind Srinivas said the company is using Nemotron within its agent routing system to optimise performance. "We can direct workloads to fine-tuned open models like Nemotron 3 Ultra or use proprietary models when tasks require it," he said. Alongside the models, NVIDIA released three trillion tokens of pretraining, post-training and reinforcement learning datasets, including an Agentic Safety Dataset for evaluating multi-agent systems. The company also open-sourced NeMo Gym, NeMo RL and NeMo Evaluator to support training, customisation and evaluation of agentic AI.
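The routing idea NVIDIA and Perplexity describe, sending each task to an open Nemotron model or to a proprietary frontier model depending on what the task requires, can be sketched as a simple cost-aware policy: pick the cheapest model that clears the task's capability bar, and keep sensitive work on open-weight models that can run on the organisation's own infrastructure. Everything here is hypothetical (the model names, the 1-to-5 capability scale, the relative costs and the on-premises flag); it is not Perplexity's or NVIDIA's router.

```python
"""Hypothetical cost-aware model router for an agent workflow.

Model names, capability levels and relative costs are placeholders invented
for illustration; this is not Perplexity's or NVIDIA's router.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    capability: int        # higher = stronger reasoning (hypothetical 1-5 scale)
    relative_cost: float   # cost per request in arbitrary units
    open_weights: bool     # True if it can be self-hosted


@dataclass(frozen=True)
class Task:
    description: str
    required_capability: int
    must_stay_on_prem: bool = False  # e.g. data that may not leave local infrastructure


CATALOG = [
    ModelOption("open-small", capability=2, relative_cost=1.0, open_weights=True),
    ModelOption("open-large", capability=4, relative_cost=6.0, open_weights=True),
    ModelOption("proprietary-frontier", capability=5, relative_cost=20.0, open_weights=False),
]


def route(task: Task, catalog=CATALOG) -> ModelOption:
    """Pick the cheapest model that meets the task's capability and hosting needs."""
    candidates = [
        m for m in catalog
        if m.capability >= task.required_capability
        and (m.open_weights or not task.must_stay_on_prem)
    ]
    if not candidates:
        raise ValueError(f"no model satisfies task: {task.description!r}")
    return min(candidates, key=lambda m: m.relative_cost)


if __name__ == "__main__":
    tasks = [
        Task("summarize a log file", required_capability=2),
        Task("plan a multi-step research task", required_capability=4),
        Task("hardest open-ended reasoning", required_capability=5),
        Task("reason over private records", required_capability=4, must_stay_on_prem=True),
    ]
    for t in tasks:
        print(f"{t.description:35} -> {route(t).name}")
```

Choosing the cheapest qualifying model is only one possible policy; a production router would more likely combine learned task classifiers, latency budgets and fallbacks when a model fails.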

[10]

Nvidia launches Nemotron 3 model family as open foundation for agentic AI systems - SiliconANGLE

Nvidia Corp. today announced the launch of Nemotron 3, a family of open models and data libraries aimed at powering the next generation of agentic artificial intelligence operations across industries. The new family of models will consist of three sizes: Nano, Super and Ultra. The company built them on what it calls a breakthrough hybrid latent mixture-of-experts architecture, designed to compress memory needs, optimize compute and deliver exceptional intelligence for their size. According to the company, the smallest model, Nemotron 3 Nano, delivers 4x higher throughput than its predecessor while maintaining high performance. "Open innovation is the foundation of AI progress," said Jensen Huang, founder and chief executive of Nvidia Corp. "With Nemotron, we're transforming advanced AI into an open platform that gives developers the transparency and efficiency they need to build agentic systems at scale." The company said the release of these AI models responds to the rapid acceleration in industry adoption of agentic AI applications that require reasoning and tool orchestration. The era of single-model chatbots is giving way to orchestrated AI applications that coordinate multiple models to power proactive and intelligent agents. The Nemotron 3 launch also positions Nvidia more directly against a growing field of open and semi-open reasoning models, as competition shifts from raw parameter counts toward orchestration, reliability and agent-centric performance. Nvidia said its new architecture will help drive costs down while still providing high-speed, reliable reasoning and intelligence. Launching today, Nano is a small 30-billion-parameter model with 3 billion active parameters, aimed at targeted, highly efficient tasks.
The next larger model, Super, combines 100 billion parameters with 10 billion active parameters and is designed to provide mid-range intelligence for multi-agent applications. Finally, Ultra, a large reasoning engine weighing in at 500 billion parameters with 50 billion active, delivers powerful reasoning for complex AI applications and agentic orchestration. Nemotron Super excels at applications that bring together multiple AI agents collaborating on complex tasks with low latency. Nemotron Ultra, by contrast, is positioned as a powerhouse "brain" at the center of demanding AI workflows that require deep research and long-horizon strategic planning. Nano is available now, Super will arrive during the first quarter of 2026 and Ultra is expected in the first half of next year. Using the company's ultra-efficient 4-bit NVFP4 training format, developers and engineers can deploy the models on Nvidia's Blackwell architecture across fewer graphics processing units, with a significantly reduced memory footprint. The efficient training process also allows the models to scale down through distillation without notable losses in accuracy or reasoning capability. Early adopters of the Nemotron family include Accenture plc, CrowdStrike Holdings Inc., Oracle Cloud Infrastructure, Palantir Technologies Inc., Perplexity AI Inc., ServiceNow Inc., Siemens AG and Zoom Communications Inc. "Perplexity is built on the idea that human curiosity will be amplified by accurate AI built into exceptional tools, like AI assistants," said Aravind Srinivas, CEO of Perplexity. "With our agent router, we can direct workloads to the best fine-tuned open models, like Nemotron 3 Ultra." With the release of these models, Nvidia is betting heavily on ecosystem-driven adoption to create a mutually beneficial relationship.
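NVFP4 is a block-scaled 4-bit floating-point format. As Nvidia has publicly described it, each value is a 4-bit E2M1 float, blocks of 16 values share a higher-precision FP8 (E4M3) scale factor, and a further per-tensor scale is applied. The Python sketch below simplifies that scheme on purpose: block scales are kept as ordinary Python floats and there is no per-tensor scale, so it shows the idea of block scaling rather than Nvidia's actual kernels or training recipe.

```python
"""Simplified sketch of block-scaled 4-bit (FP4 E2M1) quantization.

Assumptions: the 16-value block size and the E2M1 value grid follow public
descriptions of NVFP4, but each block scale here is a plain Python float
instead of an FP8 (E4M3) value, and there is no per-tensor scale.
Illustrative only; not Nvidia's implementation.
"""

# Non-negative magnitudes representable by a 4-bit E2M1 float (plus a sign bit).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16


def quantize_block(values):
    """Return (scale, codes) where each code is a signed E2M1 grid value."""
    amax = max(abs(v) for v in values)
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0  # map block max onto 6.0
    codes = []
    for v in values:
        mag = abs(v) / scale
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))  # round to nearest
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def quantize(values):
    """Split values into 16-element blocks and quantize each independently."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks):
    out = []
    for scale, codes in blocks:
        out.extend(c * scale for c in codes)
    return out


if __name__ == "__main__":
    weights = [0.01 * i - 0.15 for i in range(32)]
    restored = dequantize(quantize(weights))
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"max abs error: {max_err:.4f}")
    # Storage per value: 4 bits plus one 8-bit scale shared by 16 values.
    bits_per_value = 4 + 8 / BLOCK_SIZE
    params = 500e9  # roughly Ultra's reported total parameter count
    print(f"16-bit weights: {params * 2 / 1e9:,.0f} GB")
    print(f"~{bits_per_value} bits/weight: {params * bits_per_value / 8 / 1e9:,.0f} GB")
```

The final lines show why the format shrinks memory: about 1,000 GB of 16-bit weights for a 500-billion-parameter model versus roughly 281 GB at about 4.5 bits per weight. That estimate ignores activations, caches and any layers a real model keeps at higher precision.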
Although the new models do not require Nvidia hardware to run, they are highly optimized for Nvidia-designed platforms and graphics cards thanks to internal architectural optimizations. The company also emphasized its intention to maintain a dependable roadmap for model releases. While the current cadence of AI model launches can feel relentless, with new releases appearing almost monthly, Nvidia said it aims to give developers clearer expectations about model maturity and long-term support within each open-source family. In addition to the new models, Nvidia is releasing a collection of training datasets and state-of-the-art reinforcement learning libraries for building specialized AI agents. Reinforcement learning is a method of training AI models by exposing them to real-world questions, instructions and tasks. Unlike supervised learning, which relies on predefined question-and-answer datasets, reinforcement learning places models in uncertain environments, reinforcing successful actions through rewards and penalizing mistakes. The result is a reasoning system capable of operating in complex, dynamic conditions while learning rules and boundaries through active feedback. The new dataset contains three trillion tokens of new Nemotron pretraining, post-training and reinforcement learning data, supplying rich reasoning, coding and multistep workflow examples. These provide the framework for building highly capable, domain-specialized agents. In addition, Nvidia released the Nemotron Agentic Safety Dataset, a real-world telemetry dataset that allows teams to evaluate and strengthen safety in complex and multi-agent systems. Building on this, Nvidia released the NeMo Gym and NeMo RL open-source libraries. Gym and RL provide training environments and a post-training foundation for Nemotron models.
Developers can use them together as scaffolding to accelerate development, running models through test environments with RL training loops and interoperating with existing training environments. The release of the open-source Nemotron models and datasets further cements Nvidia's outreach to the broader AI ecosystem. The company said the data addresses a growing industry challenge: developers struggle to trust the behavior of models deployed into production. By providing transparent datasets and tooling, Nvidia aims to help teams define operational boundaries, train models for specific tasks and more rigorously evaluate reliability before deployment.

[11]

NVIDIA debuts Nemotron 3 open models to accelerate agentic AI development

NVIDIA has released the Nemotron 3 line of open models, which focuses on providing transparent, efficient and highly accurate AI for building collaborative agentic systems. The line comes in three sizes, Nano, Super and Ultra, and boasts architectural innovations that the company presents as an industry breakthrough. The series is intended to advance agentic artificial intelligence in business and technology. Compared with earlier versions, it is designed to be more efficient, with stronger reasoning and multi-agent coordination at an affordable cost. The models launched alongside other open tools and resources. They are based on a hybrid latent mixture-of-experts architecture, which dramatically improves throughput and long-horizon reasoning performance. The architecture is intended to meet growing demand for AI models that can support complex, multi-step business processes while retaining their accuracy and interpretability. NVIDIA intends to position Nemotron 3 as a universal platform for developing AI solutions around business priorities. The series has three models for different performance requirements. Nemotron 3 Nano is designed for cost-effective tasks and offers four times the token throughput of its predecessor, making multi-agent systems more practical for developers. Its main applications include interactive assistance, automatic content summarization, software debugging and other tasks where speed is a priority. For complex situations, Nemotron 3 Super provides high-accuracy multi-agent reasoning.
This model balances accuracy and simplicity and can be used in collaborative applications that require many sub-agents to operate jointly. Nemotron 3 Ultra is the largest of the three and is suited to deep reasoning tasks that demand strategic thinking over large-scale information and complex logic. The three sizes give developers the flexibility to choose a model that fits a particular project's needs without compromising accuracy or efficiency as they scale. One of Nemotron 3's major differentiators is that open datasets and libraries are released at the same time as the models. NVIDIA has released pre-training, post-training and reinforcement learning datasets totaling three trillion tokens. It has also released reinforcement learning environments and a safety dataset that lets development teams assess and strengthen safety in complex AI scenarios. All of these are available on platforms such as GitHub and Hugging Face. To accelerate adoption, NVIDIA has also published open-source libraries such as NeMo Gym and NeMo RL to support training and post-training. In addition, it offers the open-source NeMo Evaluator for validating the safety and performance of Nemotron 3 applications against predefined benchmarks, with the aim of removing barriers to innovation while improving the dependability and flexibility of AI applications. "The launch of Nemotron 3 is part of a larger trend in the AI industry, which values open innovation, transparency, and collective model development.
With our decision to offer open access to advanced models and data, we are creating a community where AI developers can move fast, adapt models to address their specific requirements, and give back to the community with new knowledge," NVIDIA explained in a statement. While Nemotron 3 is not a full-fledged AI system, it is part of NVIDIA's strategy to build a hybrid model development ecosystem. NVIDIA has confirmed the immediate release of Nemotron 3 Nano, while the Super and Ultra variants are expected to roll out during the first half of 2026. The release points to early adoption by businesses and technology firms and to demand for transparent, open AI solutions that can adapt to their operational requirements. In short, the Nemotron 3 line marks a deliberate move by NVIDIA into open AI models, delivering performance and utility in a single package. In doing so, the company is paving the way for a next generation of AI agents that will shape many sectors in meaningful ways.

[12]

AI Chipmaker Nvidia Just Released Open Source Models For AI agents - NVIDIA (NASDAQ:NVDA)

Nvidia on Monday introduced the Nemotron 3 family of open models, data and libraries designed to power and support open, efficient, specialized AI agents. The company said these models help teams build reliable AI systems that work together. At the same time, Nvidia is also expanding its open software foundation.

Nvidia Acquires SchedMD to Strengthen AI Infrastructure

To support this goal, Nvidia acquired SchedMD, the company behind Slurm. Slurm is open-source software that manages computing jobs for AI and supercomputers. Importantly, Nvidia will keep Slurm open and vendor-neutral. As AI systems grow larger, managing computing resources becomes critical. Slurm helps queue, schedule and share computing power efficiently. Nvidia said Slurm currently runs on more than half of the world's top supercomputers.

How The Deal Supports Nemotron

Together, Nemotron and Slurm support AI from training to real-world use. Nemotron handles intelligent decision-making, while Slurm manages computing workloads. As a result, developers can scale AI systems more smoothly and at lower cost. Nvidia said it plans to invest further in Slurm's development and community support. Nvidia held $60.6 billion in cash and equivalents as of October 26, 2025. In October, it became the first company to reach a $4.5 trillion market capitalization.

Nvidia's Performance

Nvidia's stock has gained over 31% year-to-date as its graphics processing units gain traction thanks to the aggressive AI ambitions of Microsoft Corp (NASDAQ:MSFT), Amazon.com Inc (NASDAQ:AMZN), Meta Platforms Inc (NASDAQ:META) and Alphabet Inc (NASDAQ:GOOGL). Nvidia is pushing to unlock more value from open-source AI by releasing new models and other tools that help developers move faster across digital and physical AI. The company showcased these efforts at NeurIPS, emphasizing that open access accelerates innovation in areas like autonomous driving and robotics.
By open-sourcing advanced reasoning models, physical AI platforms, and data tools, Nvidia aims to lower barriers, expand adoption, and strengthen its broader AI ecosystem. NVDA Price Action: Nvidia shares were down 0.64% at $175.16 during premarket trading on Tuesday, according to Benzinga Pro data.

[13]

Nvidia Bets on Open Models to Power AI Agents | PYMNTS.com

The lineup includes Nano, Super and Ultra models that leverage a hybrid latent mixture-of-experts (MoE) architecture to deliver higher throughput, extended-context reasoning and scalable performance for multi-agent workflows. Developers and enterprises can access these models, associated data and tooling to build and customize AI agents for tasks ranging from coding and reasoning to complex workflow automation. Open-source AI models are large pretrained neural networks whose weights and code are publicly available for download, inspection, modification and redistribution. In contrast to closed or proprietary models controlled by a single provider, open models enable developers, researchers and enterprises to adapt the model to specific needs, verify behavior and integrate the technology into their systems without restrictive licensing. True open-source AI, according to industry definitions, ideally also includes transparency around training data and methodologies to ensure trust and reproducibility. Open-source models matter because they widen access to advanced AI, let independent developers tailor systems to specific domains, and provide transparency that supports safety, auditability and regulatory compliance. In the broader AI landscape, open models occupy a dynamic middle ground. Tech giants such as Nvidia, Meta and OpenAI have experimented with open or "open-weight" releases alongside proprietary offerings. Open models often lag one generation behind the most advanced closed models in raw capability, but they compensate with flexibility and reduced cost, making them attractive for bespoke applications and edge deployment. As open models improve rapidly, this performance gap is shrinking, driving wider adoption, as covered by Time.
Nvidia's decision to release Nemotron 3 as an open model reflects a deliberate expansion beyond its traditional role as a hardware supplier into a more direct provider of foundational AI software. While Nvidia has released models before, including earlier Nemotron variants and domain-specific models used by partners and internal teams, those efforts were largely positioned as reference systems or tied closely to enterprise services. With Nemotron 3, the company is making model weights, datasets, reinforcement learning environments and supporting libraries broadly available, signaling a deeper commitment to open-source development. The move is closely tied to how Nvidia sees enterprise demand evolving. As companies deploy AI agents across operations, many want models they can run on their own infrastructure, inspect for behavior, fine-tune with proprietary data and integrate tightly with existing systems. Open models provide that flexibility while still reinforcing demand for Nvidia's GPUs, networking and software tools. Previous Nvidia open or semi-open models have generally performed competitively in enterprise benchmarks, particularly in reasoning, instruction following and agentic workflows, even if they have not always matched the absolute frontier of closed models. Nvidia says Nemotron 3 builds on that foundation with a hybrid architecture that combines mixture-of-experts techniques with newer sequence modeling approaches to improve efficiency and reasoning performance. The company has cited work with companies such as Accenture, Cursor, EY, Palantir, Perplexity and Zoom, where open models are used alongside proprietary systems to balance performance, cost and governance requirements. Nvidia's open-source push comes as competition intensifies globally, particularly from China, where open-access models have advanced rapidly. 
Chinese firms and research labs have released models that rival Western counterparts on several benchmarks while emphasizing lower training and inference costs. Those models have gained significant traction on developer platforms, reshaping the competition for open AI. Usage data from developer platforms suggests Chinese open models are among the most downloaded and deployed globally, prompting analysts to argue that China's emphasis on accessibility has become a strategic advantage, as reported by the Financial Times. One prominent example is DeepSeek-V3, developed by the Hangzhou-based startup DeepSeek. The model has drawn attention for delivering strong reasoning and coding performance while relying on more efficient architectures that reduce dependence on the most advanced GPUs. Alibaba Cloud's Qwen family represents another major force in the open-model ecosystem. The Qwen lineup spans multiple model sizes and tasks and has seen broad adoption across enterprise and consumer applications, with frequent appearances near the top of open-model rankings on developer platforms such as Hugging Face.

[14]

Nvidia unveils new open-source AI models amid boom in Chinese offerings

SAN FRANCISCO -- Nvidia on Monday unveiled a new family of open-source artificial intelligence models that it says will be faster, cheaper and smarter than its previous offerings, as open-source offerings from Chinese AI labs proliferate. Nvidia is primarily known for providing the chips that firms such as OpenAI use to train closed-source models, which those firms charge money to use. But it also offers a slew of its own models, for everything from physics simulations to self-driving vehicles, as open-source software that researchers and other companies can use, with firms such as Palantir Technologies weaving Nvidia's models into their products. Nvidia on Monday revealed the third generation of its "Nemotron" large language models, aimed at writing, coding and other tasks. The smallest of the models, called Nemotron 3 Nano, was being released Monday, with two other, larger versions coming in the first half of 2026. Nvidia, which has become the world's most valuable listed company, said that Nemotron 3 Nano was more efficient than its predecessor, meaning it would be cheaper to run, and would do better at long tasks with multiple steps. Nvidia is releasing the models at a time when open-source offerings from Chinese tech firms such as DeepSeek, Moonshot AI and Alibaba Group Holding are becoming widely used in the tech industry, with companies such as Airbnb disclosing use of Alibaba's Qwen open-source model. At the same time, CNBC and Bloomberg have reported that Meta Platforms is considering shifting toward closed-source models, leaving Nvidia as one of the most prominent U.S. providers of open-source offerings. Many U.S. states and government entities have banned use of Chinese models over security concerns.
Kari Briski, vice president of generative AI software for enterprise at Nvidia, said the company aimed to provide a "model that people can depend on," and was also openly releasing its training data and other tools so that government and business users could test it for security and customize it. "This is why we're treating it like a library," Briski told Reuters in an interview. "This is why we're committed to it from a software engineering perspective."

[15]

Nvidia launches new open-source AI models amid rise of Chinese alternatives

On Monday, Nvidia unveiled a new generation of open-source artificial intelligence models, a strategic offensive in response to the rise of models developed by Chinese labs such as DeepSeek, Alibaba and Moonshot AI. The American group, known for the chips essential to training proprietary models such as OpenAI's, is thus strengthening its position in the open-model market amid a growing technological rivalry. Dubbed Nemotron 3, the new series includes models designed for complex tasks such as writing and coding. The first model, Nemotron 3 Nano, is already available and stands out for its greater efficiency and better performance on multi-step tasks. Two more powerful versions are expected in the first half of 2026. Nvidia also plans to release the training data and evaluation tools, enabling companies and institutions to audit the models or adapt them to their needs. As some Western companies adopt Chinese models, such as Airbnb with Alibaba's Qwen, Nvidia aims to offer a credible, controlled alternative at a time when questions of technological sovereignty and data security are gaining momentum. Unlike Meta, which is reportedly considering a return to closed models, Nvidia is reaffirming its commitment to open source and positioning itself as a reliable U.S. supplier for businesses and governments.

[16]

NVIDIA powers the agentic era: The Nemotron 3 debut explained

Open weight NVIDIA Nemotron models drive sovereign AI agentic systems

The era of the solitary chatbot is fading. As 2025 draws to a close, the artificial intelligence industry is pivoting aggressively toward "agentic AI" - systems where multiple specialized AI agents collaborate to solve complex problems. Leading this charge is NVIDIA, which has just unveiled its Nemotron 3 family of open models, a release that promises to reshape how enterprises build and deploy these digital workforces. The release of Nemotron 3 underscores a vital shift toward "open weight" models, a category that offers a middle ground between closed proprietary systems and fully open-source software. In an open weight model, the developer releases the trained neural network weights - allowing the public to download, run, and fine-tune the model on their own hardware - while often keeping the training data and deeper methodologies proprietary. This approach has exploded in popularity throughout 2024 and 2025. Google championed this early with its Gemma family, lightweight models derived from its Gemini research that allowed developers to build efficient apps on consumer hardware. Similarly, OpenAI entered the fray in August 2025 with gpt-oss, a family of open-weight reasoning models that gave enterprises the ability to run GPT-class reasoning on their own infrastructure, bypassing API dependencies for sensitive tasks. NVIDIA is now taking this concept further by optimizing open weights specifically for multi-agent workflows. At the heart of the announcement are three distinct models, each sized for a specific role in an AI agent's workflow. All three utilize a breakthrough "hybrid latent mixture-of-experts" (MoE) architecture, a design that allows the models to boast massive parameter counts while only "activating" a small fraction of them for any given task.
This keeps per-token compute low while preserving the capacity of a much larger model.

Nemotron 3 Nano is the fleet-footed scout of the family. Available immediately, this 30-billion-parameter model is optimized for edge computing and high-frequency tasks such as software debugging and information retrieval. Although it has 30 billion parameters in total, it activates only about 3 billion per token. NVIDIA claims it delivers four times the throughput of its predecessor, Nemotron Nano 2, and it supports a 1-million-token context window. For developers, this means the ability to run capable agents locally or at the edge with minimal latency.

Nemotron 3 Super, scheduled for the first half of 2026, is the reliable middle manager. With approximately 100 billion parameters (10 billion active), it strikes a balance between speed and depth. It is engineered for high-accuracy reasoning in collaborative environments where multiple agents need to communicate and hand off tasks without losing context or hallucinating details.

Nemotron 3 Ultra represents the heavy artillery. Also arriving in 2026, this 500-billion-parameter giant (50 billion active) serves as the "reasoning engine" for the most complex strategic planning and deep research tasks. It is designed to sit at the center of an agentic workflow, directing the smaller Nano and Super models and handling problems that require long-horizon thinking.

NVIDIA's strategy extends beyond model weights. By releasing the Nemotron 3 family alongside open-source tools like NeMo Gym and NeMo RL, the company is providing the "gymnasium" where these agents can be trained and refined.
This is particularly critical for "sovereign AI" initiatives, where nations and large corporations seek to build AI systems that align with local regulations, languages, and values, something impossible to achieve with a black-box model hosted on a foreign cloud. With early adopters like Accenture, Siemens, and CrowdStrike already integrating these models, Nemotron 3 is poised to become the standard reference architecture for the next generation of AI: a generation where models don't just talk to humans, but to each other.
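The division of labor described above, with Ultra directing the smaller Nano and Super models, amounts in its simplest form to sending each task to the smallest model that can handle it. The sketch below illustrates that routing idea under loudly stated assumptions: the tier names and parameter counts come from the article, but the complexity score, its keywords, and the per-tier thresholds are invented for illustration and are not part of any NVIDIA API.

```python
from dataclasses import dataclass

# Hypothetical tiers mirroring the roles described in the article.
# Parameter counts are from the article; max_complexity thresholds are invented.
@dataclass(frozen=True)
class Tier:
    name: str
    total_params_b: int   # total parameters, in billions
    active_params_b: int  # parameters active per token, in billions
    max_complexity: int   # highest complexity score this tier accepts


TIERS = [
    Tier("nano", 30, 3, 3),
    Tier("super", 100, 10, 6),
    Tier("ultra", 500, 50, 10),
]

# Hypothetical keywords that push a task toward a larger tier.
PLANNING_KEYWORDS = ("plan", "research", "strategy", "coordinate")


def score_complexity(task: str) -> int:
    """Toy heuristic (0-10): one point per 10 words (capped at 5),
    plus 2 points for each planning keyword found."""
    score = min(len(task.split()) // 10, 5)
    lowered = task.lower()
    for keyword in PLANNING_KEYWORDS:
        if keyword in lowered:
            score += 2
    return min(score, 10)


def route(task: str) -> Tier:
    """Return the smallest tier whose threshold covers the task's score."""
    complexity = score_complexity(task)
    for tier in TIERS:
        if complexity <= tier.max_complexity:
            return tier
    return TIERS[-1]


for request in (
    "Fix the off-by-one error in this loop",
    "Plan a research strategy for entering three new markets and coordinate the agents",
):
    print(route(request).name, "<-", request)
```

Here the short debugging request lands on the Nano tier, while the long planning request that mentions research, strategy, and coordination is escalated to Ultra. A real system might use a learned classifier or let the largest model delegate instead of matching keywords, but the cost logic is the same: only pay for a big model when the task calls for it.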


Nvidia unveiled its Nemotron 3 family of models in three sizes, Nano (30B), Super (100B), and Ultra (500B parameters), and is publishing the data used to train them. The move positions the chip giant as a major force in open source AI just as Meta signals a retreat from openness, leaving Chinese models to dominate the space.

Nvidia Takes Leadership Position in Open Source AI

Nvidia has stepped decisively into the open source AI arena with the release of its Nemotron 3 family of models, positioning itself as a transparency leader at a moment when American tech giants are retreating from openness [1]. The chip giant, which has built its fortune supplying GPUs to AI companies, is also hedging against the possibility that firms like OpenAI, Google, and Anthropic, which are developing chips of their own, move away from Nvidia's technology over time [1].

The Nemotron 3 family arrives in three sizes: Nano with 30 billion parameters, Super with 100 billion, and Ultra with 500 billion [1]. The Nano model, available now on Hugging Face, delivers four times its predecessor's throughput in tokens per second and extends the context window to one million tokens, seven times larger than before [2]. Super is expected in January, while Ultra will arrive in March or April [2].


Enterprise AI Adoption Drives Model Customization Focus

Nvidia is taking a more transparent approach than many US rivals by releasing the data used to train Nemotron, which should make it easier for engineers to customize the models [1]. Alongside the models, the company is releasing tools for customization and fine-tuning, and the family introduces a new hybrid latent mixture-of-experts architecture, which Nvidia says is especially well suited to building AI agents that can take actions on computers or the web [1].

Kari Briski, vice president of generative AI software for enterprise at Nvidia, explained that open AI models matter to builders for three key reasons: the growing need to customize models for particular tasks, the benefit of routing queries to different models, and the ability to extract more intelligent responses through simulated reasoning [1]. For many enterprises adopting AI, exposing sensitive customer data or intellectual property to the APIs of closed models like ChatGPT remains a non-starter [3].

The company is also releasing reinforcement learning libraries and training environments, called NeMo Gym, that let users train AI agents through trial and error with rewards and punishments [3]. Jensen Huang stated that "open innovation is the foundation of AI progress," emphasizing how Nemotron transforms advanced AI into an open platform that gives developers the transparency and efficiency needed to build agentic systems at scale [1].
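Training "through trial and error with rewards and punishments" is the core idea of reinforcement learning. NeMo Gym's actual interfaces are not shown here; the following is a generic epsilon-greedy bandit, about the simplest reward-driven learner there is, with made-up success rates standing in for an agent's possible actions.

```python
import random


def train_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over a fixed set of actions.

    reward_probs: hidden chance that each action succeeds (illustrative values).
    A success earns +1 (reward); a failure earns -1 (punishment).
    Returns the learned value estimate and the try count for each action.
    """
    rng = random.Random(seed)            # seeded for reproducible runs
    n_actions = len(reward_probs)
    values = [0.0] * n_actions           # running average reward per action
    counts = [0] * n_actions             # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                      # explore
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else -1.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


values, counts = train_bandit([0.2, 0.5, 0.8])
print([round(v, 2) for v in values], counts)
```

With these settings the learner ends up preferring the third action, whose running reward estimate is highest. The occasional random exploration (`epsilon`) is what lets it discover that action at all, since ties at the start send its first greedy choices to the first action.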


Meta's Retreat Creates Opening in AI Development Ecosystem

The timing of Nvidia's push is strategic. Meta, which released the first advanced open models under the name Llama in February 2023, has signaled that future releases might not be open source [1]. Meta's rollout of Llama 4 in April 2025 received mediocre reviews, and Llama models no longer appear in the top 100 on LMSYS's LMArena Leaderboard, which is dominated by proprietary models from Google, xAI, Anthropic, and OpenAI, along with open source models from DeepSeek AI, Alibaba's Qwen, and Beijing-based Moonshot AI [2].

A forthcoming Meta project code-named Avocado may launch as a closed model that Meta can sell access to, which would mark the biggest departure yet from the open source strategy Meta has promoted for years [2]. Meta's Chief AI Officer Alexandr Wang, installed this year, is an advocate of closed models [2]. When asked about Menlo Ventures' claim that open source is struggling, Briski replied: "I agree about the decline of Llama, but I don't agree with the decline of open source" [2].

Chinese AI Models Dominate as American Firms Turn Proprietary

With American tech giants drawing back from open source, Chinese AI models have dominated the space with high-profile releases from DeepSeek and Alibaba [5]. Open models from Chinese companies are currently much more popular than those from US firms, according to data from Hugging Face [1]. Even OpenAI, which purports to be an "open" organization, released its first open-weight language models since GPT-2 only this past August: gpt-oss-120b and gpt-oss-20b [5].

At Nvidia's GTC conference in Washington DC last October, Jensen Huang warned attendees of China's open source dominance, arguing that because China creates substantial open source software, Americans risk being "ill-prepared" if Chinese software "permeates the world" [5]. Briski emphasized that "this year alone, we had the most contributions and repositories on Hugging Face," signaling Nvidia's growing prominence [2].

Technical Architecture Targets AI Infrastructure Needs

The Nemotron 3 models employ a novel hybrid latent mixture-of-experts architecture designed to minimize performance losses when processing long input sequences [3]. It interleaves Mamba-2 and Transformer layers throughout the model, with Mamba-2 layers providing efficiency on long sequences while Transformer attention layers maintain precise reasoning and prevent context loss [3]. All models natively support a million-token context window, equivalent to roughly 3,000 double-spaced pages [3].
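Why mix the two layer types? Each attention layer keeps a key and a value vector for every token it has seen (the KV cache), so its memory grows with context length, whereas a Mamba-2 layer carries a fixed-size state. The sketch below estimates KV-cache memory at a one-million-token context for an all-attention stack versus a hybrid stack. Every number in it (48 layers, one attention layer in eight, 8 KV heads of dimension 128, bf16 storage) is a hypothetical placeholder, not Nemotron 3's published configuration.

```python
# Hypothetical configuration: none of these values are Nemotron 3's.
N_LAYERS = 48
ATTENTION_EVERY = 8    # one attention layer per 8 layers; the rest are Mamba-2
N_KV_HEADS = 8
HEAD_DIM = 128
BYTES_PER_VALUE = 2    # bf16


def layer_pattern(n_layers=N_LAYERS, attention_every=ATTENTION_EVERY):
    """Interleave Mamba-2 layers with a periodic attention layer."""
    return ["attention" if (i + 1) % attention_every == 0 else "mamba2"
            for i in range(n_layers)]


def kv_cache_bytes(seq_len, n_attention_layers):
    """KV-cache size for the attention layers only.

    Each attention layer stores one key and one value vector per KV head
    per token, so memory grows linearly with seq_len. Mamba-2 layers keep a
    fixed-size state regardless of seq_len and are not counted here.
    """
    per_token_per_layer = 2 * N_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return seq_len * n_attention_layers * per_token_per_layer


n_attn = layer_pattern().count("attention")
for label, layers in (("hybrid", n_attn), ("all-attention", N_LAYERS)):
    print(f"{label}: {kv_cache_bytes(1_000_000, layers) / 1e9:.1f} GB")
```

With these placeholder settings, the hybrid stack's 6 attention layers need about 24.6 GB of KV cache at one million tokens, against about 196.6 GB if all 48 layers used attention. The design choice is the trade the article describes: keep a few attention layers for precise recall and let Mamba-2 carry most of the sequence cheaply.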

The mixture-of-experts architecture means only a fraction of the total parameters is activated for each token processed. Nemotron 3 Nano has 30 billion parameters, but only 3 billion activate per token, which puts less pressure on memory bandwidth and delivers faster throughput than an equivalent dense model [3]. The larger Super and Ultra models use Nvidia's NVFP4 data type and a latent MoE architecture in which the experts operate on a shared latent representation, allowing the models to call on four times as many experts at the same inference cost [3].
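The routing step behind "only a fraction of total parameters activates for each token" can be sketched in a few lines. This is plain top-k MoE routing, not NVIDIA's latent variant: the linear router, the number of experts, `k=2`, and the toy experts are all assumptions for illustration. For scale, Nemotron 3 Nano's reported ratio (3 billion of 30 billion parameters active) means roughly 10 percent of the weights do work on any given token.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k=2):
    """Toy top-k mixture-of-experts step for a single token.

    x: token vector (list of floats)
    router_weights: one weight vector per expert; its dot product with x
        is that expert's routing score (an assumed linear router)
    experts: callables mapping a vector to a vector of the same length
    Only the k highest-scoring experts run; their outputs are mixed using
    a softmax over those k scores. Returns (output, indices of experts run).
    """
    scores = [sum(w * xi for w, xi in zip(wv, x)) for wv in router_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # the other experts are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top


# Example: four toy experts that scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1, 2, 3, 4)]
router_weights = [[0.0, 0.0], [0.1, 0.0], [0.5, 0.0], [1.0, 0.0]]
out, top = moe_forward([1.0, 1.0], router_weights, experts, k=2)
print(top)  # [3, 2]: only these two experts ran
```

The router favors experts 3 and 2, so only those two run and experts 0 and 1 are skipped entirely. A latent MoE, as the article describes it, would additionally have the experts work on a shared, smaller latent representation, which is what frees up budget for more experts at the same cost.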

Strategic Positioning for Hardware Dominance

Nvidia sees Nemotron as a tool to drive further reliance on its hardware empire. By distributing open models to developers worldwide, the company aims to ensure that new foundation models are built to run best on its silicon rather than on rapidly emerging Chinese alternatives [5]. "When we're the best development platform, people are going to choose us, choose our platform, choose our GPU, in order to be able to not just build today, but also build for tomorrow," Briski said [5].

The 30-billion-parameter Nemotron 3 Nano runs efficiently on enterprise hardware such as Nvidia's L40S or RTX Pro 6000 Server Edition, though 4-bit quantized versions should work on GPUs with as little as 24GB of video memory [3]. According to Artificial Analysis, the model delivers performance on par with gpt-oss-20b or Qwen3 models while offering greater flexibility for customization [3]. Nvidia is positioning these offerings as business-ready infrastructure that lets enterprises build domain-specific AI agents without creating foundation models from scratch [4].
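The 24GB figure follows from simple arithmetic on weight storage. The estimate below counts only the weights. It assumes all 30 billion parameters stay resident in GPU memory (in a standard setup, MoE routing cuts the compute per token, not the number of experts that must be loaded) and it ignores the KV cache, activations, and quantization scale factors, all of which need room on top.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory needed just to hold the weights, in gigabytes (1e9 bytes).

    Excludes KV cache, activations, and quantization metadata such as scales.
    """
    return n_params * bits_per_param / 8 / 1e9


# Total parameter count from the article. All experts are assumed resident,
# even though only about 3B parameters are active per token.
NANO_PARAMS = 30e9

for label, bits in [("bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gb(NANO_PARAMS, bits):.0f} GB")
```

At 4 bits per parameter the weights take about 15 GB, which leaves some headroom on a 24GB card for the cache and runtime overhead; at 16 bits the weights alone would need about 60 GB.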



Summarized by Navi
