Nvidia Overcomes Technical Hurdles, Accelerates Production of Blackwell AI Servers

4 Sources

[1]

Nvidia GB200 production ramps up after suppliers tackle AI server overheating and liquid cooling leaks

There are also reports of software bugs and inter-chip connectivity problems

Nvidia suppliers building its Blackwell AI server racks have reportedly solved a series of technical hurdles, allowing them to accelerate production of the GB200 AI rack. According to the Financial Times, suppliers including Foxconn, Inventec, Dell, and Wistron have made "a series of breakthroughs" to allow shipments to kick off. According to the report, shipments of the GB200 were delayed due to technical issues that emerged at the end of last year, disrupting production. According to the report, Nvidia's Taiwanese partners announced at Computex 2025 that shipments of the GB200 racks commenced at the end of Q1 2025, stating that "Production capacity was now being rapidly scaled up." One engineer at an unnamed partner manufacturer of Nvidia reportedly told FT that internal testing revealed connectivity problems, requiring supply chain collaboration with Nvidia two or three months ago. FT reports that supply chain partners have spent "several months" tackling other challenges with the GB200 racks, including overheating and leaks in the liquid cooling systems. Other issues cited by engineers reportedly include "software bugs and inter-chip connectivity problems stemming from the complexity of synchronising such a large number of processors." One analyst told FT that "Nvidia had not allowed the supply chain sufficient time to be fully ready," and that inventory risk for the GB200 would ease in the second half of the year. According to the report, as Nvidia prepares for the rollout of the GB300 (expected in Q3), Nvidia has compromised some facets of the GB300 design. FT claims it has ditched the Cordelia chip board layout in favor of the older Bianca design it uses in the GB200. The report states that two suppliers reported installation issues; however, the move will preclude the replacement of individual GPUs in the system.
This matches a report from earlier in May, claiming Nvidia was delaying the introduction of SOCAMM memory tech originally planned for the Blackwell Ultra GB300, with reports at the time suggesting that the Cordelia-to-Bianca switch was behind the postponement. According to that earlier report and FT's latest story, Nvidia is still planning to implement Cordelia in its next-generation Rubin chips.

[2]

Nvidia's suppliers resolve AI 'rack' issues in boost to sales

Nvidia's suppliers are accelerating production of its flagship AI data centre "racks" following a resolution of technical issues that had delayed shipments, as the US chipmaker intensifies its global sales push. The semiconductor giant's partners -- including Foxconn, Inventec, Dell and Wistron -- have made a series of breakthroughs that have allowed them to start shipments of Nvidia's highly anticipated "Blackwell" AI servers, according to several people familiar with developments at the groups. The recent fixes are a boost to chief executive Jensen Huang, who unveiled Blackwell last year promising it would massively increase the computing power needed to train and use large language models. Technical problems that emerged at the end of last year had disrupted their production, threatening the US company's ambitious annual sales targets. The GB200 AI rack includes 36 "Grace" central processing units and 72 Blackwell graphics processing units, connected through Nvidia's NVLink communication system. Speaking at the Computex conference in Taipei last week, Nvidia's Taiwanese partners Foxconn, Inventec and Wistron said shipments of the GB200 racks began at the end of the first quarter. Production capacity is now being rapidly scaled up, they added. "Our internal tests showed connectivity problems . . . the supply chain collaborated with Nvidia to solve the issues, which happened two to three months ago," said an engineer at one of Nvidia's partner manufacturers. The development comes ahead of Nvidia's quarterly earnings on Wednesday, where investors will be watching for signs that Blackwell shipments are proceeding at pace following the initial technical problems. Saudi Arabia and the United Arab Emirates recently announced plans to acquire thousands of Blackwell chips during President Donald Trump's tour of the Gulf, as Nvidia looks beyond the Big Tech "hyperscaler" companies to nation states to diversify its customer base. 
Nvidia's supply chain partners have spent months tackling several challenges with the GB200 racks, including overheating caused by its 72 high-performance GPUs, and leaks in the liquid cooling systems. Engineers also cited software bugs and inter-chip connectivity problems stemming from the complexity of synchronising such a large number of processors. "This technology is really complicated. No company has tried to make this many AI processors work simultaneously in a server before, and in such a short timeframe," said Chu Wei-Chia, a Taipei-based analyst at consultancy SemiAnalysis. "Nvidia had not allowed the supply chain sufficient time to be fully ready, hence the delays. The inventory risk around GB200 will ease off as manufacturers increase rack output in the second half of the year," Chu added. To ensure a smoother deployment for major customers such as Microsoft and Meta, suppliers have beefed up testing protocols before shipping, running more checks to ensure the racks function for AI workloads. Nvidia is also preparing for the rollout of its next-generation GB300 AI rack, which features enhanced memory capabilities and is designed to handle more complex reasoning models such as OpenAI's o1 and DeepSeek R1. Huang said last week that GB300 will launch in the third quarter. In a bid to accelerate deployment, Nvidia has compromised on aspects of the GB300's design. It had initially planned to introduce a new chip board layout, known as "Cordelia," allowing for the replacement of individual GPUs. But in April, the company told partners it would revert to the earlier "Bianca" design -- used in the current GB200 rack -- due to installation issues, according to two suppliers. The decision could help Nvidia to achieve its sales targets. In February the company said it was aiming for around $43bn in sales for the quarter to the end of April, a record figure which would be up around 65 per cent year on year.
Analysts have said the Cordelia board would have offered the potential for better margins and made it easier for customers to do maintenance. Nvidia has not abandoned Cordelia and has informed suppliers it intends to implement the redesign within its next-generation AI chips, according to three people familiar with the matter. Separately, Nvidia is working to offset revenue losses in China, following a US government ban on exports of its H20 chip -- a watered-down version of its AI processors. The company said it expects to incur $5.5bn in charges related to the ban, due to inventory write-offs and purchase commitments. Last week Bank of America analyst Vivek Arya wrote that the China sales hit would drag down Nvidia's gross margins for the quarter from the 71 per cent previously indicated by the company to around 58 per cent. But he wrote that a faster than expected rollout of Blackwell due to the company reverting back to Bianca boards could help offset the China revenue hit in the second half of the year.

[3]

NVIDIA suppliers solve AI rack issues, says next-gen GB300 AI racks will launch in Q3

NVIDIA supply chain partners are accelerating the production of its flagship AI data center racks after technical issues had delayed shipments; these issues have now been resolved and supply of GB300 AI server racks will now flow. In a new report from The Financial Times, we're hearing that NVIDIA supply chain partners including Foxconn, Inventec, Dell, and Wistron have "made a series of breakthroughs" that have allowed them to start shipments of NVIDIA's highly anticipated Blackwell AI servers. NVIDIA experienced technical problems starting at the end of 2024 that disrupted production and threatened its annual sales targets. NVIDIA is also laying the groundwork for its next-gen GB300 NVL72 AI server racks, featuring 288GB of HBM3E memory per GPU and offering even more compute power than GB200 NVL72 AI servers, which will handle even more complex reasoning models including OpenAI o1 and DeepSeek R1. NVIDIA CEO Jensen Huang said last week that GB300 will launch in Q3 2025. In order to speed up the deployment of its AI servers, NVIDIA has reportedly compromised on aspects of the GB300's design: the company had planned a new chip-board layout known as "Cordelia", which allowed for the replacement of individual GPUs. However, in April 2025, NVIDIA told its partners it would be reverting to its earlier "Bianca" board design -- the one in the current GB200 AI servers -- due to installation issues, according to two suppliers that talked to The Financial Times. NVIDIA isn't moving away from the new Cordelia board completely, as it has told suppliers that it will be implementing the new Cordelia board redesign with its next-gen AI GPUs -- the new Rubin GPU architecture and its new R100 and GR100 AI servers that will be unleashed later this year, and pushed into the market in 2026 and beyond.

[4]

Nvidia suppliers ramp up AI server production after technical snag - FT By Investing.com

Investing.com -- NVIDIA (NASDAQ:NVDA) suppliers, including industry leaders like Foxconn (SS:601138), Inventec, Dell (NYSE:DELL), and Wistron, have overcome technical challenges that previously delayed shipments of Nvidia's flagship AI data center racks, the Financial Times reported on Tuesday. This development marks a significant stride in the company's efforts to enhance its global sales initiatives. The technical issues, which arose toward the end of last year, had impeded the production of the highly anticipated "Blackwell" AI servers. These servers are expected to substantially boost computing power for training and utilizing large language models, a promise made by Nvidia's CEO, Jensen Huang, upon the product's announcement last year. The resolution of these technical difficulties is a welcome relief for Nvidia, as it aims to meet its ambitious annual sales targets. The suppliers' breakthroughs have enabled the commencement of shipments, which is likely to contribute positively to the company's performance. Nvidia's Blackwell AI servers are part of a broader strategy to solidify the chipmaker's position in the rapidly growing field of artificial intelligence. The successful deployment of these servers is crucial for the company to maintain its competitive edge and fulfill the expectations set by its leadership. This latest advancement underscores Nvidia's resilience in addressing production challenges and reaffirms its commitment to delivering cutting-edge technology solutions to its customers. As shipments begin, the market is watching closely to see how this will translate into financial performance for the semiconductor giant in the coming quarters. All eyes are on NVIDIA ahead of earnings on Wednesday after the close. Shares of the AI leader closed up 3.2% on Tuesday and are up 25% over the last month, as tariff and DeepSeek fears have subsided.

Nvidia's suppliers have resolved technical issues with the GB200 AI server racks, ramping up production. The company is also preparing for the Q3 launch of the next-generation GB300, with some design compromises to ensure smoother deployment.

Nvidia's Suppliers Overcome Technical Challenges

Nvidia's suppliers, including Foxconn, Inventec, Dell, and Wistron, have successfully resolved a series of technical issues that were delaying the production of the company's flagship AI data center racks [1]. These breakthroughs have allowed the suppliers to accelerate the production of the highly anticipated "Blackwell" AI servers, specifically the GB200 AI rack [2].

Source: FT

Technical Hurdles Overcome

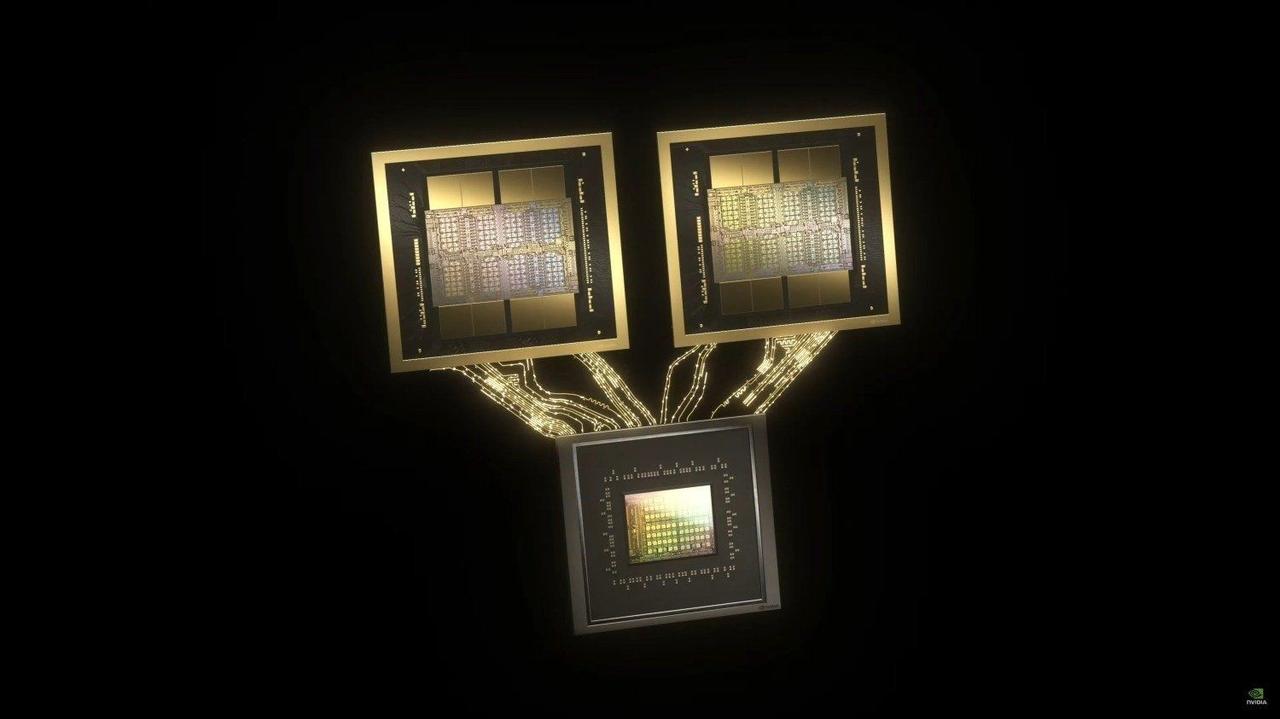

The GB200 AI rack, which includes 36 "Grace" central processing units and 72 Blackwell graphics processing units, faced several challenges during production. These issues included:

- Overheating caused by the 72 high-performance GPUs

- Leaks in the liquid cooling systems

- Software bugs

- Inter-chip connectivity problems

Engineers at Nvidia's partner manufacturers worked collaboratively with the company to resolve these issues, with the process taking several months [1].

Production Ramp-Up and Shipments

Following the resolution of these technical challenges, Nvidia's Taiwanese partners announced at Computex 2025 that shipments of the GB200 racks commenced at the end of Q1 2025. Production capacity is now being rapidly scaled up to meet demand [2].

Next-Generation GB300 and Design Compromises

As Nvidia prepares for the rollout of its next-generation GB300 AI rack, expected to launch in Q3 2025, the company has made some design compromises to ensure smoother deployment [3]. Initially, Nvidia had planned to introduce a new chip board layout called "Cordelia," which would have allowed for the replacement of individual GPUs. However, due to installation issues, the company has decided to revert to the older "Bianca" design used in the current GB200 rack [1].

Impact on Nvidia's Business

The resolution of these technical issues and the acceleration of production are expected to have a positive impact on Nvidia's business. The company had set ambitious sales targets, aiming for around $43 billion in sales for the quarter ending in April 2025, which would represent an increase of around 65% year on year [1].

Future Developments

Source: TweakTown

As Nvidia continues to push the boundaries of AI computing power, the successful resolution of these technical challenges demonstrates the company's ability to adapt and overcome obstacles in its pursuit of innovation in the rapidly growing field of artificial intelligence.

Summarized by Navi
