NVIDIA's Blackwell GPUs and RTX 50 Series: Revolutionizing AI for Consumers and Creators

3 Sources

[1]

The Future of AI is Being Built Today, Accelerated by GeForce RTX 50 Series GPUs on RTX AI PCs

Whether you're experienced in AI or haven't used it much at all, it's already here and growing every day. Luckily, NVIDIA is helping to make it more accessible for beginners and seasoned developers alike. The latest GeForce RTX 50 Series GPUs bring powerful AI for digital humans, content creation, productivity and development to consumer PCs, allowing you to integrate AI into all your activities.

Before we get to what NVIDIA is doing, we need some understanding of AI tools in general. That's where foundation models come in. Absolute mountains of work and data have gone into training foundation models such as GPT-4, LLaMA, and Stable Diffusion. There are many different models, and they can specialize in different things. You've likely heard of LLMs, or large language models. These are foundation models trained on text and language. By using one of these models, you can end up with an AI that's great at generating text, analyzing written documents, or conversing with you. Other foundation models are trained on images and, in turn, can be used in AI tools that generate imagery or help you find information about new images. Foundation models are diverse, and text and images are just some of the types of data they can be trained on.

But to get a powerful AI, the model can become quite massive. As a result, not just any device can load and run an AI built around a specific model. That's where the newly announced NVIDIA NIM microservices come in. A key aspect of NIM microservices is the ease with which developers can implement them. NVIDIA offers low-code and no-code methods for deploying NIM microservices. For skilled developers, adding NIM microservices takes just a few lines of code. And with no-code tools like AnythingLLM, ComfyUI, Langflow, and LM Studio, you can dabble in building apps and tools that incorporate AI using a simple graphical user interface.

Even if you don't try to deploy NIM microservices yourself, you'll likely see their benefits in no time. The ease of deployment means you can expect more and more programs to integrate AI tools that are easy for you to tap into.

When it comes time to interact with the many AI tools available to you, AI Blueprints are the way you're likely to do so. These are essentially reference projects for complex AI workflows, combining libraries, software development kits and AI models into a convenient, single application. By combining different functions, you can end up with all sorts of unique applications that interface with and produce different media, essentially making them multi-modal.

One such NVIDIA AI Blueprint combines PDF extraction, text generation, and text-to-speech capabilities. This lets it sift through all the data in a PDF, synthesize the information into a script and then create an auto-generated audio podcast about the information inside the PDF. Going a step beyond that, you can ask the app questions and effectively have a real-time discussion with the podcast to get insights into the data in the PDF. That's what's in store with the PDF to Podcast Blueprint.

Another AI Blueprint, 3D-Guided Generative AI, gives you more control over the final product in AI image generation. Many image generators take a text prompt and then produce a number of results. With some luck, you might land on one that looks the way you wanted. But with this AI Blueprint built into a 3D rendering application like Blender, you can set up your scenes with simple 3D objects (which you might also generate with AI), choose your exact visual perspective, provide style instructions, and then call on the AI to generate an image with all the pieces in place.

With GeForce RTX 50 Series GPUs, all these AI advancements find their way home to your personal computer. These new GPUs offer immense computational power, with the ability to handle up to 3,400 TOPS (trillion operations per second) of AI inference, an essential metric for how quickly the system can produce a finished product using AI. Memory is also crucial for these new GPUs to handle the large AI models that make so much possible. Thankfully, the RTX 50 Series GPUs feature up to 32GB of GDDR7 VRAM, providing ample space and immense bandwidth for loading models. These are also the first consumer GPUs to support FP4 computation, which allows for faster AI inference and a smaller memory requirement than earlier hardware.

And that's just scratching the surface of what GeForce RTX 50 Series GPUs will enable through AI. You'll be able to see all of this in action for yourself soon. NVIDIA NIM microservices and AI Blueprints will land in February, and RTX AI PCs running the new RTX 50 Series GPUs will be here very soon.
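
The smaller memory requirement attributed to FP4 comes down to simple arithmetic: fewer bits per weight means fewer bytes to hold in VRAM. The sketch below is only a rough illustration. The 12-billion-parameter model size is an assumption chosen for the example, not a figure from the article, and the estimate covers weights only, ignoring activations, auxiliary models and quantization metadata.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption for illustration: a hypothetical 12-billion-parameter model.
PARAMS = 12e9
VRAM_GB = 32  # the "up to 32GB" figure quoted above

footprints = {
    name: weight_footprint_gb(PARAMS, bits)
    for name, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]
}

for name, gigabytes in footprints.items():
    share = gigabytes / VRAM_GB
    print(f"{name}: {gigabytes:.1f} GB of weights ({share:.0%} of {VRAM_GB} GB)")
```

Real-world usage will be higher than these numbers, since a running model needs working memory beyond its weights.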

[2]

Nvidia RTX AI PCs and generative AI for games -- how the Blackwell GPUs and RTX 50-series aim to change the way we work and play

Nvidia has a lot to say about artificial intelligence and machine learning. It's not all data center hardware and software either, though Nvidia's various supercomputers are doing most of the heavy lifting for training new AI models. At its Editors' Day earlier this month, Nvidia briefed us on a host of upcoming technologies and hardware, including the Blackwell RTX architecture, neural rendering, and the GeForce RTX 50-series Founders Edition cards. There were also two sessions devoted to generative AI and Nvidia's RTX AI PC ecosystem, which we'll discuss here.

Generative AI came to the forefront with the rise of tools like Stable Diffusion and ChatGPT over the past couple of years. Nvidia has been working for a while on AI tools designed to change the way games and NPCs behave and the way we interact with them. We've been hearing about ACE (Avatar Cloud Engine) for some time, and it continues to improve. With Blackwell and upcoming games, Nvidia has partnered with various game developers and publishers to leverage ACE and related technologies. The results range from interesting to pretty bad, so we'll just let these videos speak for themselves and provide more analysis below.

One of the key issues, as with so many things related to generative AI, is getting the desired results. Live demos of PUBG Ally had Krafton representatives talking to the AI player, who would respond verbally as expected. "Go find me a rifle and bring it to me." "Okay, I'll go do that..." At this point, the AI NPC would seemingly do nothing of the sort. It seemed caught in a loop and still looks far from ready for public consumption. But these things change fast, so perhaps it's really only a few months away from being great. Who knows? (We also question how having AI NPCs in a multiplayer game will work out, but that's a different subject.)

Other use cases demonstrated include a raid boss in MIR5 that will supposedly learn from past encounters and adapt over time, requiring different tactics to defeat it repeatedly. The high-level concept sounds a bit like the Omnidroid from The Incredibles, learning and becoming more powerful over time, though the raid boss won't gain new abilities, so it shouldn't become invincible. Where would the fun be in that? Zoopunk allows the player to repaint and decorate a spaceship by interacting with an AI. Again, the live demo was lacking: there were lengthy pauses before each response, and a simple prompt like "Please paint my ship purple" resulted in a 20 to 30-second sequence that felt entirely unnecessary.

Fundamentally, the problem isn't just about using ACE and AI to create NPCs for games; it's about making those NPCs actually useful, interesting, and fun. These are games, after all, and if we're only adding voice interactions that aren't actually meaningful, what's the point? We're still waiting to see a demo of a game where the AI NPCs make for a better end result than traditional game development, but we're sure there are bean counters looking for ways to cut costs.

The other session, RTX AI PCs, was related to the ACE material but focused on the various tools Nvidia has created rather than on actual game demos. There are lots of new RTX NIMs (Nvidia Inference Microservices) coming, with blueprints (code samples, basically) showing how they can be used. One of the more interesting examples was a blueprint for converting a PDF into an AI-voiced podcast. The tool had several components for extracting text, analyzing images, and analyzing tables (all stored in a PDF), and then creating a script for a podcast. The result still sounds very much like an AI reading a script, but you can fully edit the resulting text, and you could even do the voiceover yourself, which would bring some humanity into the equation.

You can see the full slide deck below, and developers interested in these tools will want to get in touch with Nvidia to register for and use the various APIs.
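
The PDF-to-podcast blueprint described above is, in structure, a chain of stages: extract content, write a script, then voice it. The following is a minimal sketch of that shape only, not Nvidia's implementation. Every function and class name here is hypothetical, and each stage is a trivial stand-in for what would really be a PDF parser, a language model, or a text-to-speech model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PodcastDraft:
    extracted_text: str = ""
    script_lines: List[str] = field(default_factory=list)
    audio_clips: List[bytes] = field(default_factory=list)


Stage = Callable[[PodcastDraft], PodcastDraft]


def extract_text(pages: List[str]) -> PodcastDraft:
    """Stand-in for PDF extraction: joins already-extracted page strings."""
    return PodcastDraft(extracted_text=" ".join(p.strip() for p in pages))


def write_script(draft: PodcastDraft) -> PodcastDraft:
    """Stand-in for the language model: one spoken line per sentence."""
    sentences = [s.strip() for s in draft.extracted_text.split(".") if s.strip()]
    draft.script_lines = [f"HOST: {s}." for s in sentences]
    return draft


def human_edit(draft: PodcastDraft) -> PodcastDraft:
    """Stand-in for manual editing of the script before it is voiced."""
    draft.script_lines = [l.replace("HOST:", "NARRATOR:") for l in draft.script_lines]
    return draft


def synthesize_audio(draft: PodcastDraft) -> PodcastDraft:
    """Stand-in for text-to-speech: encodes each script line as bytes."""
    draft.audio_clips = [line.encode("utf-8") for line in draft.script_lines]
    return draft


def run_pipeline(pages: List[str], stages: List[Stage]) -> PodcastDraft:
    """Extract once, then pass the draft through each stage in order."""
    draft = extract_text(pages)
    for stage in stages:
        draft = stage(draft)
    return draft


draft = run_pipeline(
    ["Blackwell adds FP4 support. ", "The RTX 5090 has 32GB of VRAM."],
    [write_script, human_edit, synthesize_audio],
)
print("\n".join(draft.script_lines))
```

Keeping each stage as a separate function mirrors the point made above about editing the script or recording the voiceover yourself: any stage can be swapped out or followed by a manual step without touching the others.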

[3]

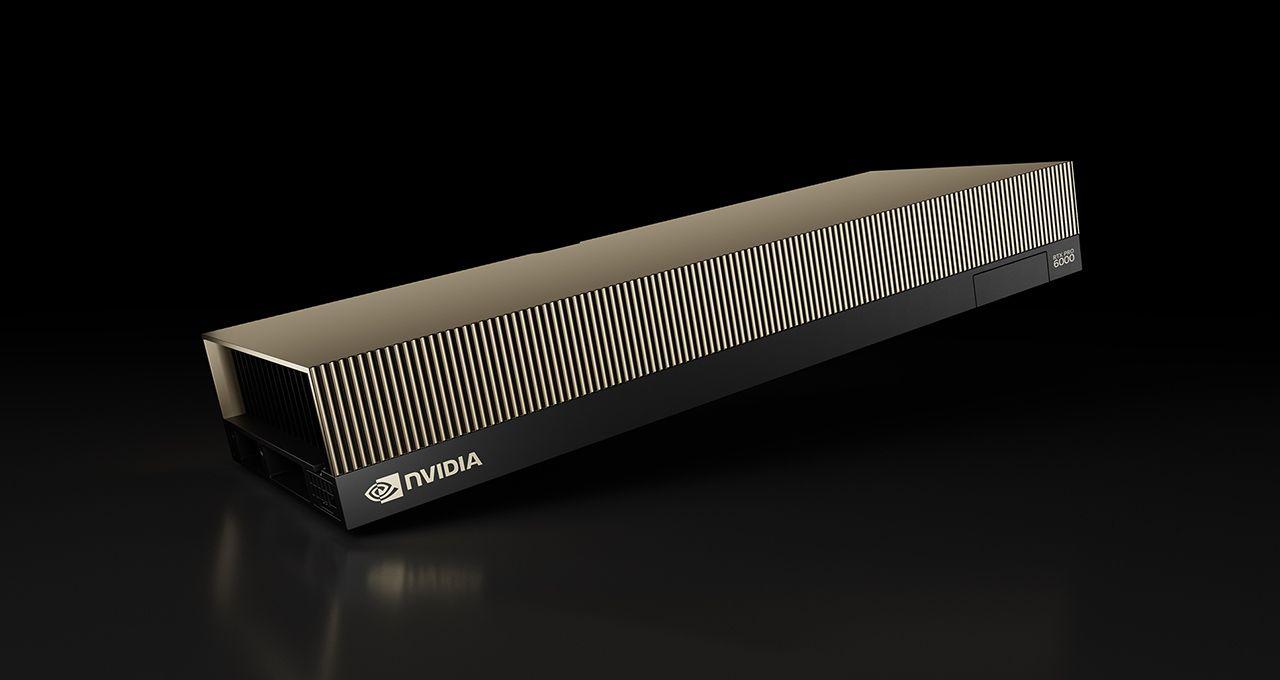

Nvidia Blackwell for creators and professionals -- upgrades for editing video, images, audio, and more

Nvidia Broadcast will also get some new AI-powered features and enhancements.

Nvidia RTX Blackwell cards aren't just for gaming. Hopefully, that's no surprise, and that sort of statement has been true about every Nvidia GPU architecture dating back to the original Quadro cards in 2000. The professional and content creator features present in Nvidia GPUs have increased significantly, targeting video and photo editing, 3D rendering, audio, and more. As with so many other features of the Blackwell architecture, many of the latest developments will be powered by AI.

We have a whole suite of Nvidia presentations to get through today, including neural rendering and DLSS 4, the Founders Edition 50-series cards, generative AI for games and RTX AI PCs, and a final session on how to benchmark properly. We've got the full slide deck from each session from Nvidia's Editors' Day on January 8, so check those out for additional details.

AI and machine learning have caused a massive paradigm shift in the way we use a lot of applications, particularly those that deal with content creation. I remember the earlier days of Photoshop, when Content-Aware Fill often resulted in a blob of garbage. Now, it's become an indispensable part of my photo editing workflow, doing in seconds what used to require minutes or more. Similar improvements are coming to video editing, 3D rendering, and game development.

For AI image generation, one of the new models is Flux. It basically requires a 24GB graphics card to run properly by default, though there are quantized models that aim to fit all of that into a smaller amount of VRAM. But with native FP4 support in Blackwell, not only do the quantized models run in 10GB of VRAM, but they also run substantially faster. It's not all apples to apples, but Flux.dev in FP16 mode required 15 seconds to create an image, compared to just five seconds when using FP4 mode. (Wait, what happened to FP8 mode on the RTX 4090? Yeah, there's marketing fluff happening...)

Another cool demo Nvidia showed was image generation guided by a 3D scene. Using Blender, you could mock up a rough 3D environment and then provide a text prompt, and generative AI would then use the scene's depth map combined with Flux to produce an image. It still had some of the usual issues, but the ability to place 3D models to provide hints to the AI model often resulted in images that were far closer to the desired result. There was a live blueprint demo shown at CES that should be publicly available next month.

Nvidia has also added native 4:2:2 video codec support to Blackwell's video engine. Many videos use 4:2:0, which has known quality issues, but many modestly priced cameras now support raw 4:2:2. The problem is that editing 4:2:2 can be very CPU-intensive. Blackwell will fix that, and a sample workflow using a Core i9-14900K went from taking 110 minutes to encode to just 10 minutes with a Blackwell GPU. Blackwell's video engine has also improved AV1 and HEVC quality, with a new AV1 UHQ mode. And the RTX 5090 will come with triple NVENC blocks, compared to two NVENC blocks on the 4090 and just one on the 30-series and earlier GPUs. Support in popular video editing applications, including Adobe Premiere and DaVinci Resolve, will arrive starting in February.

Something I'm more interested in seeing is the updated Nvidia Broadcast software, which adds new Studio Voice and Virtual Key Light filters. The former should help lower-tier microphones sound better, while the latter aims to improve contrast and brightness. But these aren't the only changes for streamers. Nvidia demonstrated a new ACE-powered AI sidekick. It went about as well as the ACE game demos, though, and feels like something more for beginners than for quality streamers. But you can give it a shot when it becomes available, and I'll freely admit that it's not really targeted at the sort of work I do.

Other new features include faster ray tracing hardware for 3D rendering, with Blender running 1.4X faster on a 5090 than a 4090. (Much of that comes courtesy of having 27% more processing cores, of course.) Other applications like D5 Render saw even larger gains, but that's thanks to DLSS 4. Still, boosting the speed of viewport updates can really help with 3D rendering applications, and that seems to be a great use of AI denoising and upscaling.

One final item Nvidia showed was the ability to create 3D models using SPAR3D, which can take images and make a 3D model in seconds. It's already available from Hugging Face, and there will be a new NIM (Nvidia Inference Microservice) soon.
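
To put the quoted figures side by side, the short calculation below turns them into speedup ratios. All inputs are taken from the text above. The last line assumes Blender performance scales linearly with core count, which is a simplification made only for this illustration, and as the article notes, the FP16 versus FP4 comparison is not apples to apples.

```python
def speedup(before: float, after: float) -> float:
    """Ratio of the old time to the new time; above 1.0 means faster."""
    return before / after


flux_speedup = speedup(15, 5)       # seconds per image, FP16 vs FP4
encode_speedup = speedup(110, 10)   # minutes to encode the 4:2:2 sample workflow

# Blender: 1.4X overall with 27% more cores. Dividing out the core count
# gives the gain left over per core, assuming linear scaling with cores.
blender_overall = 1.4
core_ratio = 1.27
per_core_gain = blender_overall / core_ratio

print(f"Flux image generation: {flux_speedup:.1f}x")
print(f"4:2:2 encode:          {encode_speedup:.1f}x")
print(f"Blender per-core gain: {per_core_gain:.2f}x")
```

Under that linear-scaling assumption, roughly a tenth of the Blender improvement would be attributable to something other than the additional cores.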

NVIDIA unveils its new Blackwell architecture and RTX 50 Series GPUs, promising significant advancements in AI capabilities for consumer PCs, content creation, and gaming.

NVIDIA Introduces Blackwell Architecture and RTX 50 Series GPUs

NVIDIA has unveiled its latest GPU architecture, Blackwell, and the upcoming GeForce RTX 50 Series, promising significant advancements in AI capabilities for consumer PCs, content creation, and gaming. These new technologies aim to make AI more accessible and powerful for both beginners and experienced developers [1][2].

Enhanced AI Performance for Consumer PCs

The GeForce RTX 50 Series GPUs are set to revolutionize AI integration in consumer PCs:

- Up to 3,400 TOPS (trillion operations per second) of AI inference

- Up to 32GB of GDDR7 VRAM for handling large AI models

- First consumer GPUs to support FP4 computation, enabling faster AI inference and reduced memory requirements [1]

NVIDIA NIM Microservices and AI Blueprints

NVIDIA is introducing new tools to simplify AI integration:

- NIM (NVIDIA Inference Microservices) for easy deployment of AI services

- Low-code and no-code methods for implementing NIM microservices

- AI Blueprints: reference projects for complex AI workflows combining libraries, SDKs, and AI models [1][2]
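
As a rough idea of what "a few lines of code" could look like, the sketch below builds a chat request for a locally running inference microservice. It assumes the service exposes an OpenAI-style chat completions endpoint over HTTP. The base URL, port and model name are placeholders for illustration, not values given in the sources, and `ask` only works if such a server is actually running.

```python
import json
import urllib.request

# Assumed values: replace with whatever the locally running service reports.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "example/model-name"  # hypothetical placeholder


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL_NAME
) -> urllib.request.Request:
    """Prepare (but do not send) a JSON chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request; needs a server listening at BASE_URL."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Building the request needs no network access, so it is safe to inspect.
request = build_chat_request("Summarize what FP4 means in one sentence.")
print(request.get_method(), request.full_url)
```

Separating request construction from sending keeps the example inspectable offline; an application would call `ask` once a microservice is up.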

AI-Powered Content Creation

Blackwell architecture brings significant improvements to content creation:

- Native 4:2:2 video codec support for faster video editing

- Improved AV1 and HEVC quality with new AV1 UHQ mode

- Triple NVENC blocks on the RTX 5090 for enhanced encoding capabilities

- Faster ray tracing for 3D rendering, with Blender running 1.4X faster on a 5090 compared to a 4090 [3]
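
For readers unfamiliar with the 4:2:2 notation in the list above, it describes chroma subsampling: how much color information is stored relative to brightness. The sketch below compares uncompressed frame sizes for the common schemes. The 3840x2160 resolution and 10-bit depth are assumptions picked for illustration, and the figures assume tightly packed samples rather than any particular file or memory layout.

```python
# Samples stored per pixel: one luma sample plus two chroma planes,
# each chroma plane scaled by the subsampling ratio.
SAMPLES_PER_PIXEL = {
    "4:4:4": 3.0,  # full-resolution chroma
    "4:2:2": 2.0,  # chroma halved horizontally
    "4:2:0": 1.5,  # chroma halved horizontally and vertically
}


def frame_bytes(width: int, height: int, scheme: str, bit_depth: int = 10) -> float:
    """Uncompressed size of one frame in bytes, assuming packed samples."""
    total_bits = width * height * SAMPLES_PER_PIXEL[scheme] * bit_depth
    return total_bits / 8


# Assumed example frame: 3840x2160 at 10 bits per sample.
sizes = {scheme: frame_bytes(3840, 2160, scheme) for scheme in SAMPLES_PER_PIXEL}

for scheme, size in sizes.items():
    print(f"{scheme}: {size / 1e6:.2f} MB per frame")

ratio = sizes["4:2:2"] / sizes["4:2:0"]
print(f"4:2:2 carries {ratio:.2f}x the raw data of 4:2:0")
```

The extra data is all color information, with 4:2:2 keeping twice as many chroma samples as 4:2:0.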

Generative AI for Gaming

NVIDIA is partnering with game developers to integrate AI into gaming experiences:

- Avatar Cloud Engine (ACE) for creating responsive AI NPCs

- AI-powered raid bosses that adapt to player strategies

- Voice-controlled game interactions and customization [2]

Nvidia Broadcast Enhancements

The updated Nvidia Broadcast software introduces new features for content creators:

- Studio Voice filter for improving audio quality

- Virtual Key Light filter for enhancing contrast and brightness

- AI-powered sidekick for streamers (still in development) [3]

Challenges and Future Developments

While the potential for AI integration is significant, some challenges remain:

- Live demos of AI-powered NPCs showed inconsistent results

- Questions about the practicality and fun factor of AI NPCs in multiplayer games

- The need for meaningful AI interactions that enhance rather than complicate gameplay [2]

As NVIDIA continues to refine these technologies, the full impact of AI-powered GPUs on consumer computing, content creation, and gaming remains to be seen. The NVIDIA NIM microservices and AI Blueprints are expected to launch in February, with RTX AI PCs featuring the new RTX 50 Series GPUs arriving soon after [1].

Summarized by Navi
