Nvidia's Dynamo Software Promises 30x Speed Boost for DeepSeek R1 AI Model

2 Sources

[1]

Nvidia can boost DeepSeek R1's speed 30x, says Jensen Huang

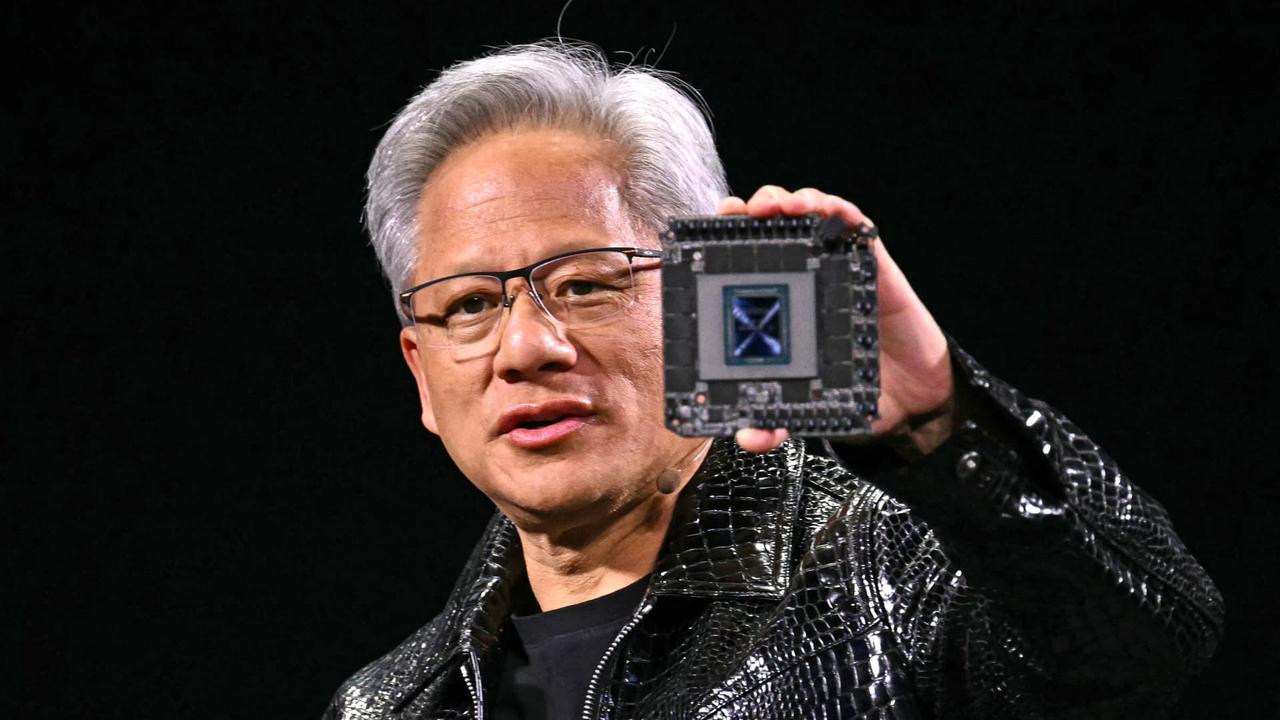

Nvidia aims to make DeepSeek's R1 artificial intelligence program run up to 30 times faster. Co-founder and CEO Jensen Huang made the announcement during an event at the SAP Center in San Jose, California.

To address investor concerns stemming from the emergence of DeepSeek's R1 in January, which prompted a stock market selloff, Nvidia has introduced new software called Nvidia Dynamo. The software distributes AI inference tasks across up to 1,000 Nvidia GPUs, significantly increasing query throughput. According to Ian Buck, Nvidia's head of hyperscale and high-performance computing, "Dynamo can capture that benefit and deliver 30 times more performance in the same number of GPUs in the same architecture for reasoning models like DeepSeek." Dynamo, now available on GitHub, breaks up inference work to run in parallel, which raises performance and, with it, revenue.

For inference priced at $1 per million tokens, higher throughput means more tokens processed each second and therefore more revenue for GPU service providers. Buck explained that AI factories -- large-scale operations utilizing Nvidia's technology -- can now offer premium services at higher rates while also increasing their overall token volume. Using Dynamo with Nvidia's Blackwell GPUs allows data centers to potentially generate 50 times more revenue than with earlier GPU models such as Hopper.

Nvidia has also published its own version of DeepSeek R1 on HuggingFace, optimized by reducing the number of bits used to represent the model's variables to "FP4," or four-bit floating point, which needs less computation than standard floating-point formats. "It increases the performance from Hopper to Blackwell substantially," Buck stated. This modification maintains the accuracy of the AI model while improving processing efficiency.

In addition to unveiling Dynamo, Huang showcased the latest iteration of Blackwell, called Blackwell Ultra, which upgrades the original Blackwell 200. Notable improvements include an increase in DRAM from 192GB to 288GB of HBM3e high-bandwidth memory. When paired with Nvidia's Grace CPU chip, up to 72 Blackwell Ultras can be integrated into the NVL72 rack-based computer, improving FP4 inference performance by 50% over the existing NVL72 system.

[2]

Nvidia Says Dynamo Can Make DeepSeek R1 30X Faster With Blackwell, Months After The AI Model Sparked Selloff Leading To A $600 Billion Drop In Its Market Cap - NVIDIA (NASDAQ:NVDA)

At the GTC 2025 conference in San Jose, Nvidia Corp. (NASDAQ: NVDA) announced that its newly released open-source software, Dynamo, can make China's DeepSeek R1 AI model 30 times faster when run on its latest Blackwell GPU chips.

What Happened: During a media briefing ahead of CEO Jensen Huang's keynote, Ian Buck, Nvidia's head of hyperscale and high-performance computing, said, "Dynamo can capture that benefit and deliver 30 times more performance in the same number of GPUs in the same architecture for reasoning models like DeepSeek." Dynamo distributes AI workloads across up to 1,000 GPUs to boost throughput and performance. It is available now on GitHub.

The announcement comes just two months after DeepSeek R1 rattled investors by demonstrating how advanced AI models could reduce computing needs, leading to a $600 billion drop in Nvidia's market cap. Nvidia says Dynamo, combined with the memory-rich Blackwell Ultra chips and Grace CPUs, will allow AI data centers -- what it calls "AI factories" -- to run significantly more queries per second or offer premium services at higher margins.

Why It's Important: Nvidia is looking to reframe the narrative around increasingly efficient AI models like DeepSeek, which initially raised fears of slowing demand for its chips. Since the launch of DeepSeek in January 2025, Nvidia has experienced a significant decline in market capitalization, losing $420 billion. This decline has now been exacerbated by a lackluster response to announcements made at the GTC AI Conference. In addition to the Dynamo software, Huang also revealed a partnership with General Motors Co. to advance autonomous vehicle technology at GTC. He also said that Nvidia is expanding its focus on embodied AI, introducing the Groot N1 model for humanoid robots, which could potentially revolutionize the industry.

Price Action: On Tuesday, Nvidia's stock dropped 3.43%, closing at $115.43. In the pre-market session on Wednesday, it gained 1.36%, reaching $117 at the time of writing. Year-to-date, the company's shares have fallen 16.54%, according to Benzinga Pro.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

Nvidia introduces Dynamo, an open-source software that can significantly enhance the performance of AI models like DeepSeek R1, potentially revolutionizing AI processing capabilities and addressing investor concerns.

Nvidia Unveils Dynamo: A Game-Changer for AI Processing

Nvidia, the leading GPU manufacturer, has announced a significant breakthrough in AI processing capabilities with its new open-source software, Dynamo. According to Nvidia's CEO Jensen Huang, Dynamo has the potential to boost the speed of DeepSeek's R1 AI model by up to 30 times [1].

The Power of Dynamo

Dynamo distributes AI inference tasks across up to 1,000 Nvidia GPUs, significantly increasing query throughput. Ian Buck, Nvidia's head of hyperscale and high-performance computing, explained, "Dynamo can capture that benefit and deliver 30 times more performance in the same number of GPUs in the same architecture for reasoning models like DeepSeek" [1].

The software, now available on GitHub, improves performance by breaking up tasks to run in parallel, which could raise both AI processing efficiency and revenue for GPU service providers [2].

Addressing Investor Concerns

The introduction of Dynamo follows a significant market event. In January 2025, the emergence of DeepSeek's R1 model sparked investor concerns about reduced computing needs for advanced AI models, leading to a $600 billion drop in Nvidia's market capitalization [2].

Dynamo appears to be a strategic move to reframe the narrative around increasingly efficient AI models and to reassure investors about continued demand for Nvidia's chips.

Blackwell Ultra and AI Factories

Alongside Dynamo, Nvidia unveiled Blackwell Ultra, an upgraded version of its Blackwell 200 GPU. The new iteration increases DRAM from 192GB to 288GB of HBM3e high-bandwidth memory [1].

When combined with Nvidia's Grace CPU chip, up to 72 Blackwell Ultras can be integrated into the NVL72 rack-based computer. This configuration improves FP4 inference performance by 50% over the existing NVL72 system [1].

Implications for AI Industry

The introduction of Dynamo and Blackwell Ultra has significant implications for what Nvidia calls "AI factories," large-scale operations utilizing Nvidia's technology. These advancements allow AI data centers to run significantly more queries per second or offer premium services at higher margins [2].

For inference tasks priced at $1 per million tokens, the increased throughput means more tokens can be processed each second, potentially boosting revenue for GPU service providers [1].
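The arithmetic behind the $1-per-million-tokens pricing can be sketched in a few lines of Python. This is only an illustration: the baseline throughput of 50,000 tokens per second is a made-up figure, the 30x multiplier is Nvidia's own claimed speedup rather than a measured result, and `revenue_per_second` is a hypothetical helper, not part of Dynamo.

```python
def revenue_per_second(tokens_per_second: float,
                       price_per_million_tokens: float = 1.0) -> float:
    """Revenue earned each second at a flat per-token price."""
    return tokens_per_second / 1_000_000 * price_per_million_tokens


# Hypothetical baseline throughput for a GPU cluster (assumption, not from the sources).
baseline_tps = 50_000
# Nvidia's claimed speedup for reasoning models with Dynamo (best-case figure).
claimed_speedup = 30

baseline = revenue_per_second(baseline_tps)
boosted = revenue_per_second(baseline_tps * claimed_speedup)

print(f"baseline: ${baseline:.2f}/s, with claimed speedup: ${boosted:.2f}/s")
```

Because the price per token is held fixed, revenue scales linearly with throughput, so any real-world speedup below 30x would shrink the gain proportionally.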

Summarized by

Navi
