Nvidia's GTC 2025: Ambitious AI Advancements Amid Growing Challenges

27 Sources

[1]

GTC felt more bullish than ever, but Nvidia's challenges are piling up | TechCrunch

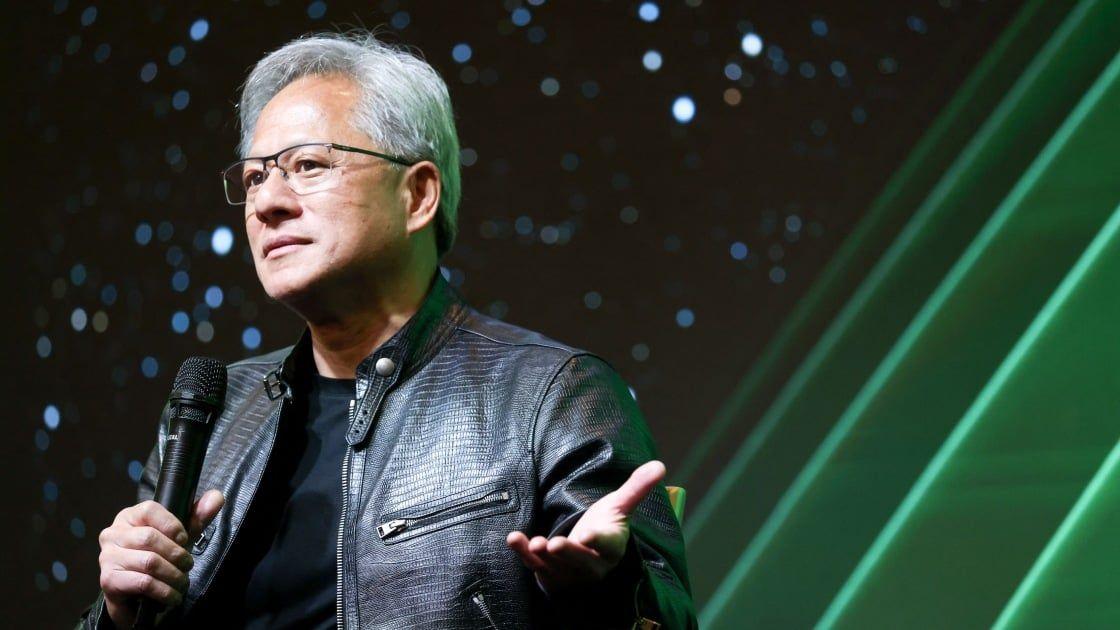

Nvidia took San Jose by storm this year, with a record-breaking 25,000 attendees flocking to the San Jose Convention Center and surrounding downtown buildings. Many workshops, talks, and panels were so packed that people had to lean against walls or sit on the floor, and suffer the wrath of organizers shouting commands to get them to line up properly.
Nvidia currently sits at the top of the AI world, with record-breaking financials, sky-high profit margins, and no serious competitors yet. But the coming months also hold unprecedented risk for the company as it faces U.S. tariffs, DeepSeek, and shifting priorities from top AI customers.
At GTC 2025, Nvidia CEO Jensen Huang attempted to project confidence, unveiling powerful new chips, personal "supercomputers," and, of course, really cute robots. It was an exhaustive sales pitch, one aimed at investors reeling from Nvidia's nosediving stock.
"The more you buy, the more you save," Huang said at one point during a keynote on Tuesday. "It's even better than that. Now, the more you buy, the more you make."
More than anything, Nvidia at this year's GTC sought to assure attendees, and the rest of the world watching, that demand for its chips won't slow down anytime soon. During his keynote, Huang claimed that nearly the "entire world got it wrong" in assuming that traditional AI scaling was falling out of vogue.
Chinese AI lab DeepSeek, which earlier this year released a highly efficient "reasoning" model called R1, prompted fears among investors that Nvidia's monster chips may no longer be necessary for training competitive AI. But Huang has repeatedly insisted that power-hungry reasoning models will, in fact, drive more demand for the company's chips, not less. That's why at GTC, Huang showed off Nvidia's next line of Vera Rubin GPUs, claiming they'll perform inference (that is, run AI models) at roughly double the rate of Nvidia's current best chip, Blackwell.
The threat to Nvidia's business that Huang spent less time addressing was upstarts like Cerebras, Groq, and other low-cost inference hardware and cloud providers. Nearly every hyperscaler is also developing a custom chip for inference, if not training. AWS has Inferentia (which it's reportedly aggressively discounting) alongside its Graviton CPUs. Google has TPUs. Microsoft has its Maia 100 accelerator alongside its Cobalt 100 CPU. In the same vein, tech giants that are currently extremely reliant on Nvidia chips, including OpenAI and Meta, are looking to reduce those ties through in-house hardware efforts. If they and the other rivals mentioned above succeed, it will almost certainly weaken Nvidia's stranglehold on the AI chip market.
That's perhaps why Nvidia's share price dipped around 4% following Huang's keynote. Investors might've been holding out hope for "one last thing," or perhaps an accelerated launch window. In the end, they got neither.
Nvidia also sought to allay worries about tariffs at GTC 2025. The U.S. hasn't imposed any tariffs on Taiwan (where Nvidia gets most of its chips), and Huang claimed tariffs wouldn't do "significant damage" in the short run. He stopped short of promising that Nvidia would be shielded from the long-term economic impacts, however, whatever form they ultimately take.
Nvidia has clearly received the Trump Administration's "America First" message, with Huang pledging at GTC to spend hundreds of billions of dollars on manufacturing in the U.S. That would help the company diversify its supply chains. It is also a massive cost for Nvidia, whose multitrillion-dollar valuation depends on healthy profit margins.
As it looks to seed and grow businesses beyond its core chip line, Nvidia at GTC drew attention to its new investments in quantum computing, an industry the company has historically neglected. At GTC's first Quantum Day, Huang apologized to the CEOs of major quantum companies for causing a minor stock crash in January 2025. The drop came after he suggested that the technology wouldn't be very useful for the next 15 to 30 years.
On Tuesday, Nvidia announced that it would open a new center in Boston, NVAQC, to advance quantum computing in collaboration with "leading" hardware and software makers. The center will, of course, be equipped with Nvidia chips, which the company says will enable researchers to simulate quantum systems and the models necessary for quantum error correction.
In the more immediate future, Nvidia sees what it's calling "personal AI supercomputers" as a potential new source of revenue. At GTC, the company launched DGX Spark (previously called Project Digits) and DGX Station. Both are designed to let users prototype, fine-tune, and run AI models of a range of sizes at the edge. Neither is exactly inexpensive (they retail for thousands of dollars), but Huang boldly proclaimed that they represent the future of personal computing.
"This is the computer of the age of AI," Huang said during his keynote. "This is what computers should look like, and this is what computers will run in the future."

[2]

Nvidia Looks Past DeepSeek and Tariffs for AI's Next Chapter

No one has benefited more from the AI boom than Nvidia CEO Jensen Huang. With troubling signs ahead, he's trying to extend the good times.
On a Monday in mid-January, Jensen Huang held a party for a crowd of health-care and tech executives in San Francisco. As about 400 guests filled up the Fairmont hotel's opulent Gold room, the Nvidia Corp. chief executive officer, wearing his default black leather jacket, worked through a routine of tech-themed dad jokes. "What do you call a robot that's better at finding your pills than you are?" he asked. "Computer-aided drug discovery!"
As the night progressed, Huang downed at least two glasses of red wine and a bit of the harder stuff. Then, to the amusement of many in attendance, he let fly. Huang taunted Stripe Inc. CEO Patrick Collison, a Massachusetts Institute of Technology dropout, for not being as smart as his wife. He also told Ari Bousbib, the CEO of health-care software provider Iqvia Inc., that his company's name looked like "you fell asleep at the keyboard and then sent it in."
Those who know Huang would recognize the style: self-assured, a bit guileless and goofy enough to come off as either charming or cringe. One key thing has changed, though: the size of the audience. The artificial intelligence boom has made Nvidia a multitrillion-dollar company, even if it's not quite a household name. (There isn't consensus on how to say the name. Nvidia's official brand guidelines suggest pronouncing the first syllable "en," but some people still use "in," or even "nuh," which is clearly wrong.) Still, it's an undeniable force in global tech. Huang, now the world's 15th-wealthiest person according to the Bloomberg Billionaires Index, is constantly on the road evangelizing for Nvidia and AI.
Sizable press gaggles have documented him slurping noodles in a Taipei night market; he's held babies and signed countless autographs, thrown out first pitches at Major League Baseball games, led tech conference crowds in chants, appeared onstage with the CEOs of Goldman Sachs, Meta Platforms and Salesforce, and chatted privately at the White House with President Donald Trump.

[3]

Nvidia CEO Jensen Huang discusses AI's future at GTC 2025

Nvidia founder Jensen Huang kicked off the company's artificial intelligence developer conference on Tuesday by telling a crowd of thousands that AI is going through "an inflection point." GTC 2025, heralded as "AI Woodstock," is being hosted at SAP Center in San Jose, Calif. Huang's keynote focused on the company's advancements in AI and his predictions for how the industry will move over the next few years.
Huang said demand for GPUs from the top four cloud service providers is surging. He added that he expects spending on data center infrastructure to hit $1 trillion by 2028. He also announced that U.S. carmaker General Motors would integrate Nvidia technology into its new fleet of self-driving cars. The Nvidia head also unveiled the company's Halos system, a safety system for automotive AI, especially autonomous driving. "We're the first company in the world, I believe, to have every line of code safety assessed," Huang said.

[4]

SAN FRANCISCO

In 2009, when Nvidia held its first developer conference, the event was something of a science fair. Dozens of academics filled a San Jose, Calif., hotel decorated with white poster boards of computer research. Jensen Huang, the chipmaker's chief executive, roamed the floor like a judge.
This year, Nvidia's developer conference is far different. More than 25,000 people are expected to congregate on Tuesday at the event, known as Nvidia GTC. The crowds will fill a National Hockey League arena to hear a speech about the future of artificial intelligence from Mr. Huang, who has been nicknamed "A.I. Jesus."
Nvidia, the world's leading developer of A.I. chips, has also wrapped San Jose in the company's neon green and black colors, shutting down city streets and sending hotel prices soaring as high as $1,800 a night. A who's who of industry leaders is expected to attend, including Michael Dell, the chief executive of Dell Technologies; Jeffrey Katzenberg, the co-founder of DreamWorks and WndrCo, a venture capital firm; and Bill McDermott, the chief executive of ServiceNow.
"Nvidia makes the chips that are oxygen for A.I., so people are on their toes to learn about their latest and greatest," said Ali Farhadi, the chief executive of the Allen Institute for Artificial Intelligence, who is also attending. "The breadth of technology on display there is going to be phenomenal."
The transformation of Nvidia's conference from an academic event to the Super Bowl of A.I. (a weeklong showcase of robots, large language models and autonomous cars) is symbolic of the company's metamorphosis. As A.I. has gone mainstream, customers have clamored for Nvidia's graphics processing units, the powerful chips that help create the technology. That has propelled the chipmaker to a nearly $3 trillion valuation, up from $8 billion in 2009.
Yet Nvidia's ascent has raised questions.
Generative A.I., which can answer questions, create images and write code, has been celebrated for its potential to improve businesses and create trillions of dollars in economic value. Microsoft, Amazon, Google, Meta and others are spending hundreds of billions of dollars to make that idea a reality. But the spending has prompted concerns across Wall Street and Silicon Valley about whether A.I. will make enough money to justify its staggering costs. And the technology's trajectory can be upended by new entrants like DeepSeek, a small Chinese company that made a cutting-edge A.I. system with a small fraction of the Nvidia chips that other companies used. (In January, when investors realized what DeepSeek had done, Nvidia lost $600 billion in value on a single day.)
At Nvidia GTC, Mr. Huang will seek to reassure people that A.I. will deliver on its potential, said Patrick Moorhead, founder of Moor Insights & Strategy, a tech research firm. Mr. Huang is expected to elaborate on how A.I. systems are providing services that people will want to pay for, like A.I. agents, which can autonomously perform tasks such as shopping for groceries. He is also set to describe more futuristic uses for A.I., like the development of human-size robots that can walk and pick up things.
In addition, Mr. Huang is expected to talk about Nvidia's next generation of A.I. chips, called Rubin, which may deliver as much as 30 times faster performance. Nvidia declined to comment on Mr. Huang's speech.
The Rubin chip is critical to Nvidia's staying at the forefront of A.I. The company faces challenges as its customers, including Amazon, Google and Meta, make their own A.I. chips. And Nvidia's chips also have to change as A.I. companies try to get better performance out of their A.I. models.
"The gravy train comes to a screeching halt if cloud companies stop spending," Mr. Moorhead said. Mr. Huang "has to reinforce that he knows what's going on out there."
Mr. Huang's ability to command a crowd is reminiscent of Apple's Steve Jobs. Ahead of major company events, the Apple co-founder spent days rehearsing his speeches about a new iPod, iPhone or iPad, before taking the stage to thunderous applause and seeming to deliver his remarks as though they were unscripted.
Mr. Huang, 62, similarly prepares in great detail for Nvidia GTC. Two months ahead of the event, he works with the company's product divisions to identify what to announce, said Greg Estes, Nvidia's vice president of corporate marketing. Mr. Huang also works with the marketing team to develop slides and demonstrations to show onstage, creating bullet points and checking facts that he may cite.
But Mr. Huang never writes a speech, Mr. Estes said. When he takes the stage in his trademark black leather jacket, he speaks extemporaneously. A speech scheduled for 90 minutes can run more than two hours. "Sometimes a mistake will happen and he'll say, 'You know, we don't rehearse,'" Mr. Estes said. "He's not kidding. It is 'grip it and rip it.'"
Nvidia GTC was formerly the GPU Technology Conference, named after the graphics processing units, or GPUs. The event, which was designed to encourage developers to use the company's chips, included a research summit where academics put up poster boards detailing how they had used the components for computing research. Mr. Huang spoke to attendees about what they did with the chips and, over the years, often heard that they were using them to develop A.I.
David Cox, who presented research at an early conference as a Harvard professor, said most attendees treated the academics as "this weird little footnote." But he said Mr. Huang and other Nvidia executives took them seriously. "They seemed to understand that we had something here," said Mr. Cox, who is now the vice president of A.I. models at IBM Research.
In 2014, Mr. Huang began devoting the majority of his speech at the conference to the way Nvidia chips could be used for machine learning and A.I. Gaming developers, who used GPUs to render video game graphics and had long been the heart of the company's business, were angered by the shift. "They were like, 'What the hell is this shiny new thing?'" said Naveen Rao, the chief A.I. officer at Databricks, which provides software tools for storing and analyzing large amounts of data. "We were like: 'No. No. This is the sea change.'"
Mr. Huang bet that A.I. would drive tech's next big boom and that GPUs would be essential. In 2016, Nvidia developed a supercomputer packed with its chips and delivered it to OpenAI, an A.I. lab. A little over six years later, OpenAI released the ChatGPT chatbot, unleashing an A.I. frenzy. (The New York Times has sued OpenAI and its partner, Microsoft, for copyright infringement of news content related to A.I. systems. OpenAI and Microsoft have denied the claims.)
Since then, Nvidia's finances have soared. The company, which was founded in 1993, increased its annual profit more than 1,500 percent in a two-year period to $72.88 billion last year from $4.37 billion in fiscal 2023. "Jensen has become the celebrity C.E.O. he always wanted to be," Mr. Rao said. "It's an overnight success years in the making because he captured A.I."
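The profit figures quoted above can be checked with a line of arithmetic. This minimal sketch uses only the two numbers in the paragraph: $4.37 billion for fiscal 2023 and $72.88 billion for last year. It confirms that "more than 1,500 percent" is an accurate description.

```python
# Nvidia annual profit, in billions of US dollars, as quoted in the article.
profit_fy2023 = 4.37
profit_last_year = 72.88

# Percentage increase = (new - old) / old * 100.
growth_pct = (profit_last_year - profit_fy2023) / profit_fy2023 * 100
# Ratio of the new profit to the old one.
multiple = profit_last_year / profit_fy2023

print(f"Increase: {growth_pct:.0f}% (about {multiple:.1f}x the fiscal 2023 profit)")
```

The increase works out to about 1,568 percent. In other words, profit ended up roughly 16.7 times its fiscal 2023 level, not 15.7 times, because a percentage increase leaves out the original base.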

[5]

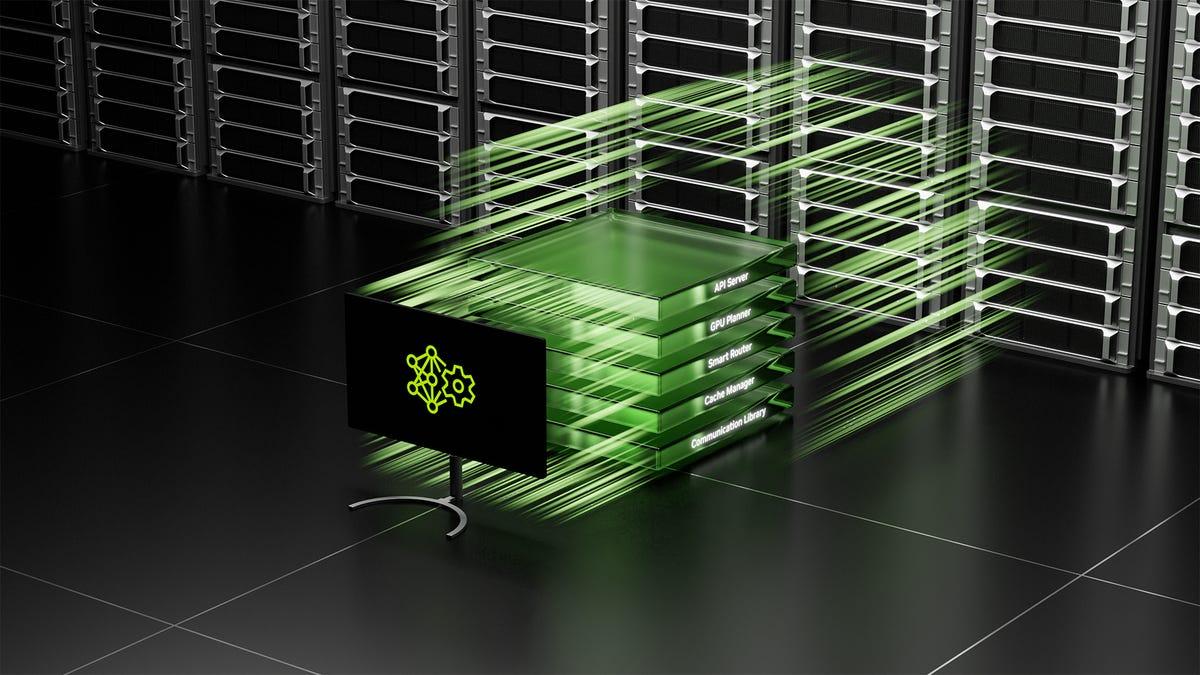

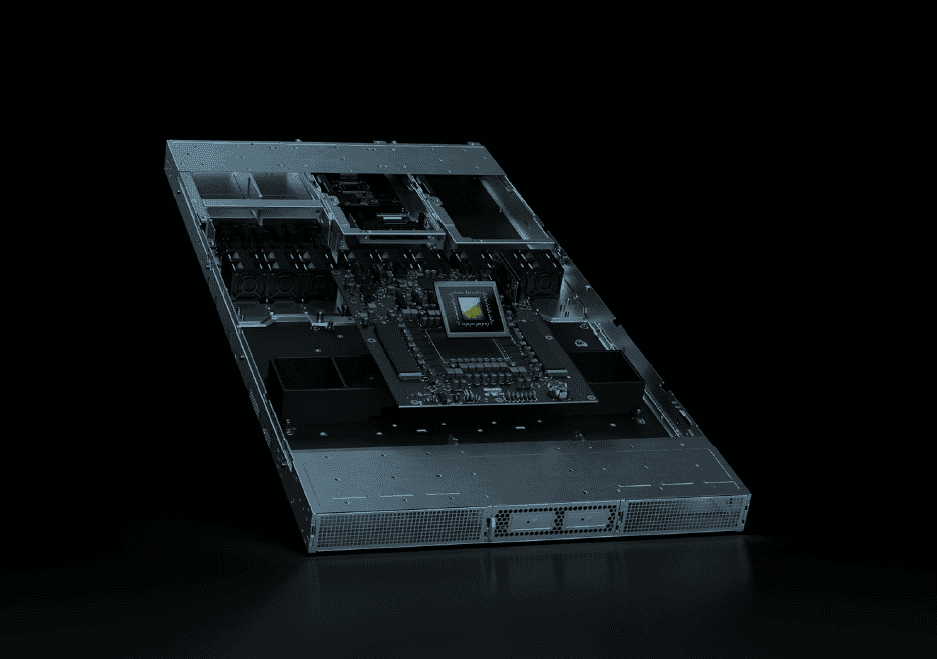

Nvidia's GTC 2025 keynote: 40x AI performance leap, open-source 'Dynamo', and a walking Star Wars-inspired 'Blue' robot

SAN JOSE, Calif.
Nvidia CEO Jensen Huang took to the stage at the SAP Center on Tuesday morning, leather jacket intact and without a teleprompter, to deliver what has become one of the most anticipated keynotes in the technology industry. The GPU Technology Conference (GTC) 2025, described by Huang as the "Super Bowl of AI," arrives at a critical juncture for Nvidia and the broader artificial intelligence sector.
"What an amazing year it was, and we have a lot of incredible things to talk about," Huang told the packed arena. His audience has grown exponentially as AI has transformed from a niche technology into a fundamental force reshaping entire industries.
The stakes were particularly high this year. Earlier in the year, Chinese startup DeepSeek released its highly efficient R1 reasoning model, and the resulting market turbulence sent Nvidia's stock tumbling amid concerns about potentially reduced demand for its expensive GPUs.
Against this backdrop, Huang delivered a comprehensive vision of Nvidia's future, emphasizing a clear roadmap for data center computing, advancements in AI reasoning capabilities, and bold moves into robotics and autonomous vehicles. The presentation painted a picture of a company working to maintain its dominant position in AI infrastructure while expanding into new territories where its technology can create value.
Nvidia's stock traded down throughout the presentation, closing more than 3% lower for the day, which suggests investors may have hoped for even more dramatic announcements. But Huang's message was clear: AI isn't slowing down, and neither is Nvidia.
From groundbreaking chips to a push into physical AI, here are the five most important takeaways from GTC 2025.
Blackwell platform ramps up production with 40x performance gain over Hopper
The centerpiece of Nvidia's AI computing strategy, the Blackwell platform, is now in "full production," according to Huang, who emphasized that "customer demand is incredible." This is a significant milestone after what Huang had previously described as a "hiccup" in early production.
Huang made a striking comparison between Blackwell and its predecessor, Hopper: "Blackwell NVLink 72 with Dynamo is 40 times the AI factory performance of Hopper." This performance leap is particularly crucial for inference workloads, which Huang positioned as "one of the most important workloads in the next decade as we scale out AI."
The performance gains come at a critical time for the industry, as reasoning AI models like DeepSeek's R1 require substantially more computation than traditional large language models. Huang illustrated this with a demonstration of a wedding seating arrangement. A traditional LLM tackled it in 439 tokens but got it wrong. A reasoning model used nearly 9,000 tokens and got it right.
"The amount of computation we have to do in AI is so much greater as a result of reasoning AI and the training of reasoning AI systems and agentic systems," Huang explained, directly addressing the challenge posed by more efficient models like DeepSeek's. Rather than positioning efficient models as a threat to Nvidia's business model, Huang framed them as driving increased demand for computation, effectively turning a potential weakness into a strength.
Next-generation Rubin architecture unveiled with clear multi-year roadmap
In a move clearly designed to give enterprise customers and cloud providers confidence in Nvidia's long-term trajectory, Huang laid out a detailed roadmap for AI computing infrastructure through 2027. This is an unusual level of transparency about future products for a hardware company, but it reflects the long planning cycles required for AI infrastructure.
"We have an annual rhythm of roadmaps that has been laid out for you so that you could plan your AI infrastructure," Huang stated, emphasizing the importance of predictability for customers making massive capital investments.
The roadmap includes Blackwell Ultra, coming in the second half of 2025 and offering 1.5 times more AI performance than the current Blackwell chips. Vera Rubin follows in the second half of 2026, named after the astronomer whose observations provided key evidence for dark matter. The platform pairs a new CPU, Vera, which is twice as fast as the current Grace CPU, with new networking architecture and memory systems. "Basically everything is brand new, except for the chassis," Huang explained about the Vera Rubin platform.
The roadmap extends even further to Rubin Ultra in the second half of 2027, which Huang described as an "extreme scale up" offering 14 times more computational power than current systems. "You can see that Rubin is going to drive the cost down tremendously," he noted, addressing concerns about the economics of AI infrastructure.
This detailed roadmap serves as Nvidia's answer to market concerns about competition and the sustainability of AI investments. It effectively tells customers and investors that the company has a clear path forward regardless of how AI model efficiency evolves.
Nvidia Dynamo emerges as the 'operating system' for AI factories
One of the most significant announcements was Nvidia Dynamo, an open-source software system designed to optimize AI inference. Huang described it as "essentially the operating system of an AI factory," drawing a parallel to how traditional data centers rely on software such as VMware to orchestrate enterprise applications.
Dynamo addresses the complex challenge of managing AI workloads across distributed GPU systems, handling tasks like pipeline parallelism, tensor parallelism, expert parallelism, in-flight batching, disaggregated inferencing, and workload management.
These technical challenges have become increasingly important as AI models grow more complex and reasoning-based approaches require more computation.
The system gets its name from the dynamo, which Huang noted was "the first instrument that started the last Industrial Revolution, the industrial revolution of energy." The comparison positions Dynamo as a foundational technology for the AI revolution.
By making Dynamo open source, Nvidia is attempting to strengthen its ecosystem and ensure its hardware remains the preferred platform for AI workloads, even as software optimization becomes increasingly important for performance and efficiency. Partners including Perplexity are already working with Nvidia on Dynamo implementation. "We're so happy that so many of our partners are working with us on it," Huang said, specifically highlighting Perplexity as "one of my favorite partners" due to "the revolutionary work that they do."
The open-source approach is a strategic move to maintain Nvidia's central position in the AI ecosystem while acknowledging the importance of software optimization in addition to raw hardware performance.
Physical AI and robotics take center stage with open-source Groot N1 model
In what may have been the most visually striking moment of the keynote, Huang unveiled a significant push into robotics and physical AI, culminating with the appearance of "Blue," a Star Wars-inspired robot that walked onto the stage and interacted with Huang.
"By the end of this decade, the world is going to be at least 50 million workers short," Huang explained, positioning robotics as a solution to global labor shortages and a massive market opportunity.
The company announced Nvidia Isaac Groot N1, described as "the world's first open, fully customizable foundation model for generalized humanoid reasoning and skills."
Making this model open source represents a significant move to accelerate development in the robotics field, similar to how open-source LLMs have accelerated general AI development.
Alongside Groot N1, Nvidia announced a partnership with Google DeepMind and Disney Research to develop Newton, an open-source physics engine for robotics simulation. Huang explained the need for "a physics engine that is designed for very fine-grain, rigid and soft bodies, designed for being able to train tactile feedback and fine motor skills and actuator controls."
The focus on simulation for robot training follows the same pattern that has proven successful in autonomous driving development, using synthetic data and reinforcement learning to train AI models without the limitations of physical data collection. "Using Omniverse to condition Cosmos, and Cosmos to generate an infinite number of environments, allows us to create data that is grounded, controlled by us and yet systematically infinite at the same time," Huang explained, describing how Nvidia's simulation technologies enable robot training at scale.
These robotics announcements represent Nvidia's expansion beyond traditional AI computing into the physical world, potentially opening up new markets and applications for its technology.
GM partnership signals major push into autonomous vehicles and industrial AI
Rounding out Nvidia's strategy of extending AI from data centers into the physical world, Huang announced a significant partnership with General Motors to "build their future self-driving car fleet."
"GM has selected Nvidia to partner with them to build their future self-driving car fleet," Huang announced. "The time for autonomous vehicles has arrived, and we're looking forward to building with GM AI in all three areas: AI for manufacturing, so they can revolutionize the way they manufacture; AI for enterprise, so they can revolutionize the way they work, design cars, and simulate cars; and then also AI for in the car."
This partnership is a significant vote of confidence in Nvidia's autonomous vehicle technology stack from America's largest automaker. Huang noted that Nvidia has been working on self-driving cars for over a decade, inspired by the breakthrough performance of AlexNet in computer vision competitions. "The moment I saw AlexNet was such an inspiring moment, such an exciting moment, it caused us to decide to go all in on building self-driving cars," Huang recalled.
Alongside the GM partnership, Nvidia announced Halos, described as "a comprehensive safety system" for autonomous vehicles. Huang emphasized that safety is a priority that "rarely gets any attention" but requires technology "from silicon to systems, the system software, the algorithms, the methodologies."
The automotive announcements extend Nvidia's reach from data centers to factories and vehicles, positioning the company to capture value throughout the AI stack and across multiple industries.
The architect of AI's second act: Nvidia's strategic evolution beyond chips
GTC 2025 revealed Nvidia's transformation from GPU manufacturer to end-to-end AI infrastructure company. Through the Blackwell-to-Rubin roadmap, Huang signaled Nvidia won't surrender its computational dominance, while its pivot toward open-source software (Dynamo) and models (Groot N1) acknowledges hardware alone can't secure its future. Nvidia has cleverly reframed the DeepSeek efficiency challenge, arguing that more efficient models will drive greater overall computation as AI reasoning expands. Investors remained skeptical, though, sending the stock lower despite the comprehensive roadmap.
What sets Nvidia apart is Huang's vision beyond silicon. The robotics initiative isn't just about selling chips; it's about creating new computing paradigms that require massive computational resources. Similarly, the GM partnership positions Nvidia at the center of automotive AI transformation across manufacturing, design, and vehicles themselves.
Huang's message was clear: Nvidia competes on vision, not just price. As computation extends from data centers into physical devices, Nvidia bets that controlling the full AI stack -- from silicon to simulation -- will define computing's next frontier. In Huang's world, the AI revolution is just beginning, and this time, it's stepping out of the server room.
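The wedding-seating demo from the keynote gives a rough sense of why reasoning models raise demand for compute. The back-of-the-envelope sketch below rests on a simplifying assumption of our own, not Nvidia's: each generated token costs about the same to produce.

In practice, attention over a growing context makes later tokens somewhat more expensive, so on that count the ratio below understates the gap. Rounding "nearly 9,000" up to 9,000 pushes slightly the other way.

```python
# Token counts from the keynote's wedding-seating demo, as reported in the article.
standard_llm_tokens = 439   # one-shot answer, which was wrong
reasoning_tokens = 9_000    # "nearly 9,000"; rounded up, so the ratio is approximate

# First-order assumption: decode cost is proportional to the number of tokens generated.
relative_cost = reasoning_tokens / standard_llm_tokens

print(f"Reasoning answer used ~{relative_cost:.1f}x the tokens of the one-shot answer")
```

On those numbers, the reasoning model generated roughly 20 times as many tokens for a single question. That multiplier is the arithmetic behind Huang's argument that more efficient reasoning models can still raise total demand for computation.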

[6]

Nvidia is dreaming of trillion-dollar datacentres with millions of GPUs and I can't wait to live in the Omniverse

From millions of GPUs to billions of robots, Nvidia has ambitions to fashion the world's factories of tomorrow.
I don't think it is an exaggeration to say that Nvidia wants to be the company that powers the artificial intelligence universe we may one day live in permanently. The opening keynote at Nvidia GTC 2025, colloquially referred to as the Woodstock of AI, saw CEO Jensen Huang extol the virtues of an AI ecosystem powered by hardware (and software) from the second most valuable company in the world.
We were re-introduced to the concept of AI factories. These are essentially gigantic data centres where, in Nvidia's world, the tokens generated are equivalent to revenue, and the presentation put a price tag on it: $1 trillion. The cost of building data centres, like mega-farms, increases exponentially every generation as they get more complex and require more power to house even more compute. The lofty Stargate project has a budget of $500 billion. Apple has already committed to spending $500 billion over the next few years, a large chunk of which will go to data centres. Depending on which analysts you talk to, global data centre capital expenditure is expected to reach $1 trillion either in 2027 (PwC) or 2029 (Dell'Oro). That gives you a measure of Nvidia's extremely ambitious targets for itself and for humanity.
A big piece of that puzzle that Nvidia introduced at the keynote is its silicon photonics switch systems, which will allow those AI factories to "scale to millions of GPUs", seemingly within the same physical perimeter.
The biggest number of them all in this presentation came when Rev Lebaredian, Nvidia's VP of Omniverse and Simulation Technology, presented a slide about how physical AI is transforming $50 trillion industries. These span two billion cameras, 10 million factories, two billion vehicles and, Lebaredian added, a "future billion humanoid robots". Yes, it's not just about robots, but humanoid robots, potentially a billion of them.
Robots, in general, have been around for decades in many shapes and forms. Nvidia, however, envisions a world where billions of robots are built on what it calls its "Three Computers": pre- and post-training (done via DGX), simulation and synthetic data generation (done via Omniverse), and runtime (done using AGX).
The world of the future may be home to "multiple types of humanoid robots" that will be able to post-train while on the job, so to speak, with real or synthetic data. That would let them learn new skills or enhance their knowledge base on the fly, at (or near) the speed of light. The first of them may well be based on Nvidia's new GR00T N1 humanoid robot foundation model, alongside Newton, a physics engine Nvidia is developing in partnership with Google DeepMind and ... Disney Research. (Ed: One cannot help but see the tie-in with Groot from Guardians of the Galaxy and Wall-E, a potentially prescient movie about the impact of robotics and AI on humanity.)
Where does this leave us? With a clear roadmap to a world where AI becomes an indispensable part of what it means to be human, perhaps the most important utility of them all. Never, in the history of humanity, will so many depend on so few. Oh, and the other acronym (AGI) was not mentioned during the presentation, not once.
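The idea that an AI factory's tokens are its revenue reduces to simple arithmetic: throughput multiplied by price. In the minimal sketch below, the throughput and the per-million-token price are hypothetical placeholders, not figures from the keynote. The helper name is likewise illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_token_revenue(tokens_per_second: float, usd_per_million_tokens: float) -> float:
    """Hypothetical helper: revenue per day for a token-serving 'AI factory'
    running at a constant throughput and a constant price."""
    tokens_per_day = tokens_per_second * SECONDS_PER_DAY
    return tokens_per_day / 1_000_000 * usd_per_million_tokens


# Hypothetical numbers for illustration only.
baseline = daily_token_revenue(tokens_per_second=1_000_000, usd_per_million_tokens=2.0)
faster = daily_token_revenue(tokens_per_second=2_000_000, usd_per_million_tokens=2.0)
print(f"baseline: ${baseline:,.0f}/day, with 2x throughput: ${faster:,.0f}/day")
```

At a fixed price, doubling the tokens per second a site can serve doubles its revenue. That is the logic behind Huang's line that "the more you buy, the more you make." The model deliberately ignores power, depreciation, utilization, and falling token prices.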

[7]

GTC 2025: 5 key takeaways from Nvidia's developer conference

Missed Nvidia's GTC 2025? Euronews Next breaks down the main announcements from the company's annual conference. Nvidia's CEO and founder Jensen Huang took to the stage at the semiconductor company's annual developer conference on Tuesday to announce new products. During his keynote speech, Huang also expanded on his thoughts about the future of artificial intelligence (AI), which he said was "at an inflexion point". In case you missed it, here are five key takeaways from GTC 2025 in San Jose, California.

1. 'Computers of the future'

"AI agents will be everywhere," Huang told the audience at his keynote, referring to technology designed to act autonomously to assist humans without their input. "How they run, what enterprises run, and how we run it will be fundamentally different. And so we need a new line of computers," he continued. The answer to this large computing requirement, he said, is two new computers, called DGX Spark (previously called Project Digits) and DGX Station. DGX Spark is already available and allows developers to prototype, fine-tune, and run inference (using a trained model to draw conclusions from new data) on the latest generation of reasoning AI models, such as those from DeepSeek, Meta, Google, and others. Nvidia says users can also "seamlessly deploy" their work to data centres or the cloud. It comes in a desktop-friendly size. The much bigger DGX Station is in the works, and Huang said "this is what a PC should look like and this is what computers will run in the future. And we have a whole lineup for enterprise now, from little, tiny ones to workstation ones". It resembles a tower desktop and, like its smaller cousin, will allow users to prototype, fine-tune, and run AI models. DGX Station is marketed toward enterprise customers that need to run heavy AI workloads. It features Nvidia's so-called "super chip," the Grace Blackwell Ultra.

2. Humanoid robots

Nvidia announced several technologies to supercharge humanoid robot development. One involves a very Star Wars-esque robot and is being developed in partnership with Disney Research and Google DeepMind. Called Newton, it is a physics engine, software that helps simulate robot behaviour, for developing robots. One of the small Disney robots made its debut on stage next to Jensen. The entertainment company hopes to bring these robots to its theme parks next year. Nvidia said Newton is supposed to help robots be more "expressive" and "learn how to handle complex tasks with greater precision". The company also announced NVIDIA Isaac GR00T N1, a technology it says is the world's "first open, fully customisable foundation model for generalised humanoid reasoning and skills".

3. Data centres and AI factories

Huang said that the future will see a move from traditional data centres (buildings dedicated to general-purpose computing) to AI factories, which are built specifically for AI. The European Commission has made AI factories one of its priorities for the bloc's AI development. "AI workloads aren't static. The next wave of AI applications will push power, cooling, and networking demands even further," Nvidia states in a blog post on its website. Huang announced Omniverse Blueprint, which lets engineers design, test, and optimise a new generation of intelligence manufacturing data centres using digital twins. One use of Blueprint is to keep AI factories ready by predicting how changes in AI workloads will affect power and cooling at data centre scale.

4. Autonomous driving

Huang announced a partnership with General Motors (GM), the largest US carmaker, to integrate AI chips and software into its autonomous vehicle technology and manufacturing. The partnership will see Nvidia's platforms train GM's AI models to develop its next-generation vehicles, factories, and robots. AI is now going out "to the rest of the world, in robotics and self-driving cars, factories, and wireless networks," Huang said. "One of the earliest industries AI went into was autonomous vehicles... We build technology that almost every single self-driving car company uses," he added.

5. Quantum ambitions

Huang also announced Nvidia was building a quantum computing research centre in Boston. The NVIDIA Accelerated Quantum Research Center, or NVAQC, will provide "cutting-edge technologies to advance quantum computing," according to the company. "Quantum computing will augment AI supercomputers to tackle some of the world's most important problems, from drug discovery to materials development," Huang said in his keynote. "Working with the wider quantum research community to advance CUDA-quantum hybrid computing, the NVIDIA Accelerated Quantum Research Center is where breakthroughs will be made to create large-scale, useful, accelerated quantum supercomputers".

[8]

Nvidia's GTC keynote inevitably went all in on AI but I'm definitely here for the Isaac GR00T robots

AI for your job, your storage, your factories, and your little robot too. Yesterday, Nvidia's CEO Jensen Huang addressed the crowd at the company's global artificial intelligence conference. Naturally, the keynote had a strong focus on Nvidia's future delving into AI technologies, including the current Blackwell processing architecture and beyond. Huang even touched on things like the use of AI in robotics, but with Nvidia stock prices continuing to drop, the market doesn't seem too impressed. For the first portion of the presentation, RTX Blackwell was hot on the CEO's lips. We've just seen the launch of Nvidia's new RTX 50-series cards running the new technology, and DLSS 4 has been one of the few highlights. Between this and AMD's FSR 4, the use of AI upscaling to help improve games is going to be key in the coming generations. Huang made a point of noting that the server-side version of Blackwell delivers a 40x increase in AI 'factory' performance over Nvidia's own Hopper. So while the company didn't announce any gaming PCs, we did see two brand-new Nvidia desktops shown off. DGX Spark (formerly DIGITS) and DGX Station are desktop computers designed specifically to run AI. These can be used to run large models on hardware designed precisely for the job. They're not likely to be a choice pick for your next rig, much like the equally enterprise-focussed RTX Pro Blackwell cards that were also announced, but you can register interest for the golden AI bois. Huang also covered Nvidia's new roadmap detailing its near-future work on AI. As this is a developer-aimed conference, this is more about helping teams plan when working in coordination with Nvidia or being ready to use the company's technologies. That being said, Nvidia is touting the next big leap, with extreme scale-up capabilities, in Rubin and Rubin Ultra. Rubin is Nvidia's upcoming AI-ready architecture, and it's not a bastardised spelling of the sandwich. 
Instead, the architecture is named after Vera Rubin, the astronomer whose work provided key evidence for dark matter. It is set to introduce new designs for CPUs and GPUs as well as memory systems. "Rubin is 900x the performance of Hopper in scale-up FLOPS, setting the stage for the next era of AI," says Huang, to a crowd who hopefully understood that. It also comes with a turbo-boosted version, Rubin Ultra. This is for huge projects and will support racks of up to 600 kilowatts with over 2.5 million individual components per rack. With both on the market, Nvidia hopes to be ready to face the greater demands AI will put on factories and processing, while still being scalable and energy efficient. According to the roadmap, we should see Rubin in play in 2026, followed by the Feynman architecture, named after physicist Richard Feynman, in 2027-28. It will likely work in tandem with Dynamo, another Nvidia AI enablement announced during the keynote. Dynamo will be the successor to Nvidia's Triton Inference Server and, critically, is open source and available to all. The AI inference-serving software helps language models by coordinating work across GPUs, separating the processing of a prompt from the generation of the answer. It already doubles performance on Hopper, but it also points to a more interesting way to handle these tasks. Dynamo keeps track of what's already been computed and allocates new tasks to GPUs that already hold information that might help, so those GPUs handle them more efficiently. Honestly, this sounds a lot like how a real brain works. The more you think and associate topics, the stronger those links will be and the better you can process ideas. But it's not just limited to GPUs and CPUs; it's also going to drastically change storage. "Rather than a retrieval-based storage system, the storage system of the future is going to be a semantics-based retrieval system. 
A storage system that continuously embeds raw data into knowledge in the background, and later, when you access it, you won't retrieve it -- you'll just talk to it. You'll ask it questions and give it problems," he explains. If you're not still reeling at that, get ready for Nvidia's incredibly named Isaac GR00T N1. Touted as the first open and customizable foundation model for generalized humanoid reasoning and skills, this will teach your robot exactly what to do when an apple falls on its head. Invent gravity. "With NVIDIA Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers everywhere will open the next frontier in the age of AI," says Huang. It works by splitting tasks into two categories: one for immediate, fast reactions and another for more thoughtful reasoning. These can be combined to, for example, look around a room and analyse it, then perform specific actions the particular robot is capable of. This is just the first in a series of models that Nvidia plans to pretrain and release for download. These keynotes are always squarely aimed at developers and enterprise users rather than the average gamer, but they also point to future technologies that could wind up anywhere, including gaming. For Nvidia, it looks like we can expect the company to go all in on further AI development, and most of it looks like it's being put to good use: less AI for art purposes, more for improving graphics, efficient storage, complex programming and, of course, teaching robots how to grab stuff.
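The description of Dynamo "allocating tasks to GPUs that already have information that might help" can be illustrated with a toy router. The article gives no implementation details, so this sketch assumes the reusable "information" is cached attention state (a KV cache) for previously processed prompt prefixes, which is the usual meaning in LLM serving. `Worker`, `route`, `block_keys` and `BLOCK` are hypothetical names for illustration, not Dynamo's API.

```python
from dataclasses import dataclass, field

BLOCK = 4  # tokens per cache block (hypothetical; real servers use larger blocks)


def block_keys(tokens: list[int]) -> list[tuple[int, ...]]:
    """One key per full block: the whole prefix up to that block's end.

    Using the full prefix as the key means block i only matches if every
    earlier block matched too, mirroring how a KV cache is reusable only
    for an identical prompt prefix.
    """
    return [tuple(tokens[:end]) for end in range(BLOCK, len(tokens) + 1, BLOCK)]


@dataclass
class Worker:
    name: str
    cached: set = field(default_factory=set)  # prefix keys whose KV cache this GPU holds
    active: int = 0  # requests currently assigned to this GPU

    def overlap(self, keys: list[tuple[int, ...]]) -> int:
        """Count leading prompt blocks already cached on this worker."""
        n = 0
        for k in keys:
            if k not in self.cached:
                break
            n += 1
        return n


def route(workers: list[Worker], tokens: list[int]) -> str:
    """Send a request to the worker with the most reusable cache, then the lightest load."""
    keys = block_keys(tokens)
    best = max(workers, key=lambda w: (w.overlap(keys), -w.active))
    best.cached.update(keys)  # after processing the prompt, this worker holds its cache
    best.active += 1
    return best.name


if __name__ == "__main__":
    workers = [Worker("gpu0"), Worker("gpu1")]
    shared = list(range(8))  # e.g. a shared 8-token system prompt
    print(route(workers, shared + [100, 101, 102, 103]))  # gpu0 (cold start, first worker wins the tie)
    print(route(workers, shared + [200, 201, 202, 203]))  # gpu0 (reuses 2 cached blocks despite its load)
    print(route(workers, list(range(50, 62))))  # gpu1 (nothing cached anywhere, so lowest load wins)
```

The design choice worth noting is the ordering of the `max` key: cache overlap dominates and load only breaks ties. A production router would have to weigh the two, since always chasing cache hits can pile work onto one GPU.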

[9]

Nvidia GTC 2025: AI breakthroughs & expert insights on theCUBE - SiliconANGLE

A pivotal moment for artificial intelligence is taking shape at Nvidia Corp.'s GTC conference this year, and SiliconANGLE Media's roving livestream studio, theCUBE, is there to capture all the action. John Furrier, SiliconANGLE's founding editor, and theCUBE Research's Savannah Peterson are on the set, dissecting the AI breakthroughs happening at the epicenter of modern computing. From keynote breakdowns to insightful interviews with some of the best and brightest minds in the industry -- including leaders from Nvidia Corp., Dell Technologies, Inc., Hewlett Packard Enterprise Company, IBM Corp. and more -- dive into theCUBE's exclusive coverage of this landmark event. Don't miss Furrier's Day 1 recap of the top takeaways from Nvidia founder and Chief Executive Officer Jensen Huang's keynote presentation. The company's Blackwell architecture represents a big leap in AI computing, while its Dynamo AI operating system is making waves for large-scale inference. Nvidia lifted the veil on Nvidia Blackwell Ultra, the latest evolution of its newest graphics processing unit platform. The company said it is designed to handle the heavy lifting of next-generation AI reasoning workloads, and it launched the platform alongside its first-ever Blackwell-powered desktop computers for AI developers. Nvidia has expanded its hyper-realistic real-time 3D graphics collaboration and simulation platform, Omniverse, with several major improvements and partnerships to accelerate industrial digitization with robotics and AI. Be sure to also read up on the company's latest AI models for adaptable robots and a new collection of efficient data center switches. After joining Huang on the keynote stage to discuss AI breakthroughs, Dell's Founder, Chairman and Chief Executive Officer, Michael Dell, sat down with Furrier for a follow-up analysis. Dell also shared details on the company's new line of AI PCs, launched Tuesday at the event. "I marvel at the incredible pace," Dell said. 
"What I see is every company in the world wants to embrace AI. This is not an evolutionary change; it's a revolution." Nvidia GTC has a bubbling ecosystem of strategic partners, and theCUBE's analysts met on-site with Antonio Neri, HPE's president and chief executive officer, to talk about the company's tightened alliance with Nvidia. TheCUBE has also met with "Shark Tank" legend Robert Herjavec at the Equinix booth, IBM's data scientist rockstar Hillery Hunter and many others.

[10]

NVIDIA CEO Jensen Huang - the robots are coming as the era of "physical AI" gets underway

The ChatGPT moment for general robotics is just around the corner. A typically bold claim from NVIDIA CEO Jensen Huang on day one of the firm's GTC conference this week, which he modestly dubbed "the SuperBowl of AI". But then if anyone expected to find him perhaps a little more on the defensive following the negative impact on Wall Street's perception of the company's prospects after the appearance of DeepSeek and its 'fewer AI chips means lower cost' pitch earlier in the year, they would be sorely disappointed. Huang had lots to talk about, and he wasn't about to be put off his stride by short-termist investors worried that the knock-on effect of the DeepSeek play would be a general reduction in demand for chips from NVIDIA and others, particularly when he was of the opinion that they had basically just got their sums wrong anyway. The reality is that there will be more need, not less, he declared: Almost the entire world got it wrong. The amount of computation we need as a result of agentic AI, as a result of reasoning, is easily 100 times more than we thought we needed this time last year. To that end, Huang set out the firm's product roadmap for the next couple of years. A next-generation platform called Vera Rubin is scheduled for the second half of 2026, boasting 3.3 times the performance of the current Grace Blackwell system. This will be followed by Vera Rubin Ultra in the second half of 2027, complete with 14.4 times the performance of Grace Blackwell. As part of an enterprise focus, NVIDIA and Oracle expanded their existing relationship to make the former's AI Enterprise stack available across Oracle Cloud Infrastructure (OCI) services, including natively through the OCI Console and anywhere in the OCI distributed cloud. This enterprise push is important for the future. 
Huang noted that while hyperscalers like AWS, Microsoft and Google Cloud have bought around 3.6 million Blackwell GPUs to date, the need for data center expansion in the coming years will also come from the enterprise: If you look at the world's data center spend, half of it is [cloud service providers], half of it is enterprise. Still, that half of the enterprise, unless somebody does something, it's going to stay not AI. That's what Nvidia AI enterprise is all about. But it was the declarations around robotics that caught the mainstream headlines today as Huang predicted: The time has come for robots. Everybody pay attention to this space - this could very well likely be the largest industry of all. Of particular note in the keynote was an insight into work on a platform called Isaac GR00T N1 that will "supercharge humanoid robot development." Isaac GR00T N1 is pitched as "the world's first open Humanoid Robot foundation model". GR00T N1 can easily generalize across common tasks, such as moving objects or transferring items from one arm to another, and multi-part tasks that require long context and combinations of general skills. Huang said: The age of generalist robotics is here. With NVIDIA Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers everywhere will open the next frontier in the age of AI...It started with perception AI -- understanding images, words and sounds. Then generative AI -- creating text, images and sound. Now it's the era of "physical AI": AI that can perceive, reason, plan and act, he argued. Nvidia is partnering with Walt Disney Co. and Google's DeepMind on the project, with Disney Research set to use the tech to enhance its use of robotic characters. 
In a statement, Kyle Laughlin, Senior VP at Walt Disney Imagineering Research & Development, said: We're committed to bringing more characters to life in ways the world hasn't seen before, and this collaboration with Disney Research, NVIDIA and Google DeepMind is a key part of that vision. This collaboration will allow us to create a new generation of robotic characters that are more expressive and engaging than ever before - and connect with our guests in ways that only Disney can. Huang also flagged up the NVIDIA Cosmos world foundation model platform, which integrates generative models, tokenizers, and a video processing pipeline to power physical AI systems, such as robots or autonomous vehicles (AVs). It equips AI models with advanced simulation capabilities to process text, image and video prompts and use this capability to create detailed virtual environments tailored for robotics and AV simulations. I am the Chief Revenue Destroyer. A quip from Huang, but one that might hit home with a still clearly sceptical Wall Street. Despite announcement after announcement in a very full 90-minute keynote, NVIDIA's stock price actually took a tumble in its aftermath. It's one thing to face down DeepSeek's impact, but perceptions linger, and the ideas that have been planted in investors' minds are going to take time to settle down.
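Both roadmap multiples in this report are quoted against the same Grace Blackwell baseline, so dividing one by the other gives the implied step from Vera Rubin to Vera Rubin Ultra. This is back-of-the-envelope arithmetic that assumes both multiples were measured on the same workload and at the same numeric precision, which the report does not state.

```latex
\frac{P_{\text{Rubin Ultra}}}{P_{\text{Rubin}}}
  = \frac{14.4\,P_{\text{Grace Blackwell}}}{3.3\,P_{\text{Grace Blackwell}}}
  = \frac{14.4}{3.3}
  \approx 4.4
```

In other words, on these figures the 2027 Ultra system would deliver roughly four times the performance of the 2026 Vera Rubin system.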

[11]

Jensen Believes the Entire World is Wrong, NVIDIA isn't

While AI remains a priority, NVIDIA is now focusing on quantum computing. If last year's GTC was a pop concert, this year's event felt like the Super Bowl of AI, said NVIDIA chief Jensen Huang. Only this time, there are no losers, just winners. "I'm up here without a net -- there are no scripts, there's no teleprompter, and I've got a lot of things to cover," he quipped. The event was attended by over 25,000 in-person attendees, while 300,000 joined virtually. This prompted Huang to joke that the only way to accommodate more people at GTC was to physically expand the size of San Jose, where the event was held. This year, Huang, unfazed by DeepSeek's impact, means business. "The entire world got it wrong -- the computation requirement and the scaling law of AI are more resilient and, in fact, hyper-accelerated. The amount of computation we need at this point, as a result of agentic AI and reasoning, is easily a hundred times more than we thought we needed this time last year," Huang claimed. Huang emphasised the shift from "retrieval computing" to "generative computing", where AI generates answers based on context. He introduced "agentic AI", which involves AI perceiving, reasoning, and planning actions. Huang introduced the open Llama Nemotron family of models, which are equipped with reasoning capabilities. According to him, this evolution, coupled with "physical AI" for robotics, has significantly increased computation needs. Building on this, Huang announced that NVIDIA's Blackwell architecture is now in full production, delivering 40 times the performance of Hopper. The Blackwell architecture boosts AI model training and inference, improving efficiency and scalability. Notably, Blackwell Ultra is set to hit systems later this year, but the real powerhouse is coming soon. Named after an American astronomer known for her research on dark matter, Vera Rubin is NVIDIA's next-generation GPU and is expected to debut in 2026. 
Huang added that the Rubin chips will be followed by Feynman chips, which are slated to arrive in 2028. To accelerate large-scale AI inference, Huang introduced NVIDIA Dynamo, an open-source platform that powers and scales AI reasoning models within AI factories. "It is essentially the operating system of an AI factory," Huang said. Comparing Blackwell with older Hopper GPUs, Huang said, "The more you buy, the more you save. But now, the more you buy, the more you make." He even joked that when Blackwell hits the market, customers won't be able to give away Hopper GPUs, and encouraged them to buy Blackwell. He further quipped that he is the 'chief revenue destroyer', since remarks like these might alarm his sales team by discouraging customers from purchasing the remaining Hopper inventory. Ultimately, he stressed that technology is advancing so rapidly that buyers should invest in the latest and most powerful options available rather than settling for older models. Moreover, he expects data centre capital expenditure to reach one trillion dollars by the end of 2028. "I am fairly certain we're going to reach that very soon." NVIDIA is not just about big data centres. The company also unveiled two new supercomputers, DGX Spark and DGX Station, powered by the Grace Blackwell platform, allowing AI developers, researchers, and students to prototype, fine-tune, and run large models on desktops. Cute Disney Robots on the Way Huang is all in on robotics and is convinced that physical AI is the next big thing. On stage, he was joined by 'Blue', a small AI-powered robot resembling Disney's Wall-E. He also introduced Newton, an open-source physics engine for robotics simulation developed in collaboration with Google DeepMind and Disney Research. Not stopping there, NVIDIA unveiled Isaac GR00T N1, the world's first open Humanoid Robot foundation model. 
If that wasn't enough, Huang also announced NVIDIA Isaac GR00T Blueprint, a system that generates massive synthetic datasets to train robots -- making it way more affordable to develop advanced robotics. NVIDIA's physical AI experience extends to autonomous vehicles. General Motors announced it will work with NVIDIA to optimise factory planning and robotics. Moreover, GM will use NVIDIA DRIVE AGX for in-vehicle systems to support advanced driver-assistance systems and enhanced safety features. Huang also mentioned the importance of safety in Autonomous Vehicles (AVs) and launched NVIDIA Halos, a comprehensive safety system for AVs. It integrates NVIDIA's automotive hardware and software safety solutions with AI research to ensure safe AV development from cloud to car. While AI remains a priority, NVIDIA is now focusing on quantum computing. It will host its first Quantum Day on March 20 -- an interesting move considering Huang once claimed that "quantum computers are still 15 to 30 years away".

[12]

The key takeaways from Nvidia CEO Jensen Huang's GTC keynote

Nvidia Corp. Chief Executive Jensen Huang took the stage today at the company's annual GTC conference with his characteristic blend of technical mastery, visionary ambition and a touch of humor. This year's event underscored not just how fast the AI revolution is moving but how Nvidia continues to redefine computing itself. The keynote was a master class in scaling AI, pushing the limits of hardware and building the future of artificial intelligence-driven enterprises.

Key takeaways

Blackwell in full production: computation demand and reasoning

At the heart of the keynote was the Blackwell system, the latest in Nvidia's graphics processing unit evolution. This isn't just another generational upgrade; it represents the most extreme scale-up of AI computing ever attempted. With the Grace Blackwell NVL72 rack, Nvidia has built an architecture that brings inference at scale to new heights. The numbers alone are staggering: The shift from air-cooled to liquid-cooled computing is a necessary adaptation to manage power and efficiency demands. This is not incremental innovation; it's a wholesale reinvention of AI computing infrastructure.

Blackwell NVL with Dynamo: 40X better performance and scale-out

Huang emphasized that AI inference at scale is extreme computing, with unprecedented demand for FLOPS, memory and processing power. Nvidia introduced Dynamo, an AI-optimized operating system that enables Blackwell NVL systems to achieve 40 times better performance than Hopper. Dynamo represents a breakthrough in delivering operating-system software to run on the engineered hardware systems of the AI factory. This should unleash the agentic wave of applications and new levels of intelligence. Dynamo manages three key processes:

Upcoming AI infrastructure product roadmap: cloud, enterprise and robotics

Jensen made a point of emphasizing the importance of Nvidia laying out a predictable annual rhythm for AI infrastructure product and technology evolution, covering cloud, enterprise computing and robotics. Each milestone is an exponential leap forward, resetting industry KPIs for AI efficiency, power consumption and compute scale.

Scaling the AI network: Spectrum-X and silicon photonics

Networking is the next bottleneck, and Nvidia is tackling this head-on: As Huang pointed out, datacenters are like stadiums, requiring short-range, high-bandwidth interconnects for intra-factory communication, and long-range optical solutions for AI cloud scale.

Enterprise AI: Redefining the digital workforce

Huang predicted that AI will reshape the entire computing stack, from processors to applications. AI agents will become integral to every business process, and Nvidia is building the infrastructure to support them. This isn't just about replacing humans; it's about enabling enterprises to scale intelligence like never before.

The shift from data centers to AI factories

Nvidia's ultimate vision is to move from traditional datacenters to AI factories -- self-contained, ultra-high-performance computing environments designed to generate AI intelligence at scale. This transformation redefines cloud infrastructure and makes AI an industrial-scale production process. Huang's new punchline, "The more you buy, the more revenue you get," was a comedic yet poignant reminder that AI's value is directly tied to scale. Nvidia is positioning itself as the architect of this new era, where investing in AI computing power isn't an option -- it's an economic necessity. Storage must be completely reinvented to support AI-driven workloads, shifting towards semantic-based retrieval systems that enable smarter, more efficient data access. This transformation will define the future of enterprise storage, ensuring seamless integration with AI and next-generation computing architectures. Look for key ecosystem partners like Dell Technologies, Hewlett Packard Enterprise and others to step up with new products and solutions for the new AI infrastructure wave. Jensen highlighted Michael Dell, showcasing Dell as having a complete set of Nvidia-enabled AI products and systems.

Beyond AI: reinventing robotics

Finally, Nvidia is applying its AI leadership to robotics. Huang outlined a future where general-purpose robots will be trained in virtual environments using synthetic data, reinforcement learning and digital twins before being deployed in the real world. This marks the beginning of AI-driven automation at an industrial scale.

Final takeaways

Huang's GTC keynote wasn't just about the next wave of GPUs -- it was about redefining the entire computing industry. The shift from datacenters to AI factories, from programming to AI agents, and from traditional networking to AI-optimized interconnects positions Nvidia at the forefront of the AI industrial revolution. The Nvidia CEO has set the tone for the next decade: AI isn't just an application -- it's the future of computing itself. As we have been saying on theCUBE Pod, AI infrastructure has to deliver the speeds and feeds and scale to open the floodgates for innovation in the agentic and other new AI applications that sit on top.
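The idea of "semantic-based retrieval", where stored data is embedded ahead of time and then queried by meaning rather than by exact lookup, can be sketched in a few lines. This is a minimal toy under loud assumptions: `embed` uses plain word counts as a stand-in for a learned embedding model (so it only matches shared vocabulary, not true meaning), and `SemanticStore`, `add` and `ask` are hypothetical names, not any Nvidia or storage-vendor API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts.

    A real semantic store would call a learned embedding model here.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticStore:
    """Hypothetical store: embed on write, answer queries by similarity."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Embedding happens at ingest time ("in the background"), not at query time.
        self.docs.append((text, embed(text)))

    def ask(self, question: str, k: int = 1) -> list[str]:
        # Rank every stored document by similarity to the question.
        q = embed(question)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = SemanticStore()
    store.add("Blackwell Ultra racks are fully liquid cooled")
    store.add("Dynamo routes inference requests across GPUs")
    print(store.ask("which racks are liquid cooled"))
```

The point of the sketch is where the work happens: the expensive embedding step runs once per document at write time, so a query only needs one embedding plus a similarity ranking.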

[13]

Scaling up: Nvidia redefines the computing stack with new releases for the AI factory - SiliconANGLE

Minutes before his keynote speech at the GTC conference in San Jose Tuesday, Nvidia Corp. Chief Executive Jensen Huang surprised the more than 20,000 attendees in San Jose by suddenly appearing on-stage with a T-shirt launcher in his hands. He proceeded to fire rolled-up shirts into the crowd in the SAP Center, a fitting image for the speech that followed, which took dead aim at redefining the entire computing stack and enabling it for AI. Nvidia's vision, as outlined by Huang and his executives this week, is of a world in which AI must be scaled up with more powerful components first before it can be scaled out to many servers worldwide. That will require advanced processing capability that pushes the limits of hardware while building AI-driven enterprises. It demands money, time and planning, all a part of the long game that Nvidia is playing as it seeks to maintain its leadership role in defining the next computer revolution. "We're building AI factories and AI infrastructure," Huang said during his keynote. "It's going to take years of planning. It isn't like buying a laptop. There's no replacement for scaling up before you scale out." A key ingredient in Nvidia's "scale up" strategy is Blackwell Ultra NVL72, the latest iteration of Nvidia's graphics processing unit platform. Huang unveiled it as a significant upgrade to the original Blackwell architecture, designed to handle the next generation of AI reasoning and agent-driven workloads. Blackwell Ultra will include a staggering 600,000 components per data center rack and 120 kilowatts of fully liquid-cooled infrastructure. "We have a one exaflops computer in one rack," Huang noted. "This is the most extreme scale-up the world has ever done." Huang also offered attendees a glimpse into the future by providing a roadmap for additional scale-up architecture. 
Blackwell Ultra aggregates the power of 72 GPUs, but Nvidia's next-generation Rubin will offer 144 GPUs by this time next year, with an expansion to 576 GPUs and 600 kilowatts per rack in 2027. Despite the performance boost for Blackwell, the network could still be a bottleneck. The Spectrum-X Ethernet and Quantum-X800 InfiniBand networking systems released on Tuesday can provide up to 800 gigabits per second of data throughput for each of the 72 Blackwell GPUs. Nvidia also announced Dynamo, open-source inferencing software designed to increase throughput and decrease the cost of generating large language model tokens while those models are running. By orchestrating inference communication across what is expected to be thousands of GPUs, Nvidia intends to drive efficiency as AI agents and other use cases ramp up. "Dynamo is essentially the operating system of an AI factory," Huang said in his keynote. Huang's description of Dynamo as an operating system highlights a key element in his firm's enterprise strategy and a clear theme at GTC this week. The ultimate goal is for enterprises to move from traditional datacenters to AI factories, high-performance computing environments that will generate AI at scale. This vision of AI as an industrial-scale production process is driving Nvidia's evolving business model. Nvidia itself has become an AI factory, according to Huang. In a briefing for the media the day after his keynote, Nvidia's CEO outlined how his company has transitioned from processor maker to a critical revenue driver for its diverse customer base. "We're not building chips anymore, those were the good old days," Huang said. "We are an AI factory now. A factory helps customers make money." In many ways, Nvidia now finds itself in an unusual position within the tech industry. 
It has no reluctance to communicate its product plan years in advance, and it appears wholly unconcerned about the potential for competition with the very customers it supplies. The company's release of its roadmap for Blackwell and Rubin, along with planned enhancements in several other key product areas, reflected a level of transparency that Huang pointedly noted in his appearance before the assembled press on Wednesday. "We're the first tech company in history that announced four generations of technology at one time," Huang said. "That's like a company announcing the next four smartphones. Now everybody else can plan." Indeed, making sure the entire industry is on board with Nvidia's tick-tock advances in AI enabling technologies is critical to its continued dominance. Nvidia's bold moves in divulging its roadmap reflect a market philosophy that its "big tent" approach will avoid potential conflicts of interest with those who purchase its systems. Huang noted that Nvidia deliberately avoids being a "solutions company," and by leaving the last half of value creation to its customers, it can work side-by-side in partnership with any client to build AI platforms that deliver results. "We have no trouble taking the core tech that we create and allowing them to integrate it in their core solution and take it to market," Huang said. "We became the only AI company in the world that works with every AI company in the world. We have no trouble working with anyone in their way. We want to enable the ecosystem. That's why every company is here." Indeed, a stroll through the GTC exhibit halls in San Jose this week provided an opportunity to interact with representatives from Amazon Web Services Inc., Microsoft Corp., Google Cloud, Oracle Corp. and Hewlett Packard Enterprise Co., among others. Attendees had an opportunity to rub shoulders with longtime tech luminaries Michael Dell of Dell Technologies Inc. and Bill McDermott of ServiceNow Inc. 
as they strolled the convention center halls. When the Nvidia ecosystem convened in San Jose last year, SiliconANGLE industry analyst Dave Vellante described the event as "...the most significant in terms of its reach, vision, ecosystem impact and broad-based recognition that the AI era will permanently change the world." One year later, it would be hard to argue that AI's impact has lessened or that GTC has become any less significant. "Last year GTC was described as the Woodstock of AI," said Huang during his opening day keynote. "This year it's being described as the Super Bowl of AI. We have now reached the tipping point of accelerated computing."

[14]

Nvidia CEO hypes AI with new chips and 'supercharged humanoid robots'

Nvidia, looking to cement its place at the heart of the artificial intelligence boom, laid out plans for more powerful chips, a model for robotics, and "personal AI supercomputers" that will let developers work on desktop machines. Speaking at the company's annual GTC event in San Jose, California, chief executive Jensen Huang unveiled a platform called Isaac GR00T N1 that will "supercharge humanoid robot development". Nvidia is working with Walt Disney and Google's DeepMind on the project, which will be open to outside developers.

[15]

GTC 2025: The most important AI announcements

If you thought AI computing was already moving at breakneck speed, GTC 2025 just hit turbo mode. Nvidia unveiled game-changing advancements in AI hardware, software, and robotics -- pushing boundaries in AI reasoning, inference acceleration, and 6G connectivity. With Blackwell Ultra, Dynamo, and GR00T N1, Nvidia isn't just leading the AI revolution -- it's rewriting the rules. Let's break down the biggest announcements. Nvidia has officially launched the RTX Pro Blackwell series at GTC 2025, introducing an unprecedented level of power for AI workloads and 3D processing. The flagship RTX Pro 6000 Blackwell for workstations features 96GB of GDDR7 memory, requiring 600 watts of power -- an increase from the 575 watts of the RTX 5090. It boasts 24,064 CUDA cores, 512-bit memory bus, and 1792 GB/s memory bandwidth, supporting PCIe Gen 5 and DisplayPort 2.1. This professional-grade GPU is tailored for game developers, AI professionals, and data-heavy workflows, coming in Max-Q and server variants. Nvidia has rebranded its professional GPU lineup under "RTX Pro," replacing the previous Quadro series. The series includes RTX Pro 5000, 4000, and 4500 models for desktops and laptops, with up to 24GB of VRAM for laptop variants. According to Ian Buck, Nvidia's head of hyperscale and high-performance computing, Dynamo delivers 30 times more performance using the same GPU count. This efficiency boost translates to 50 times more revenue potential for data centers utilizing Blackwell GPUs over previous Hopper-based architectures. Additionally, Nvidia optimized DeepSeek R1 by reducing its floating-point precision to FP4, a move that increases efficiency without sacrificing accuracy. The Blackwell Ultra variant, an enhancement of the Blackwell 200, now includes 288GB of HBM3e memory and integrates with Grace CPUs in the NVL72 rack system for 50% better inference performance. 
A notable feature of Llama Nemotron, Nvidia's new family of reasoning models, is its adaptive reasoning toggle, allowing AI systems to switch between full reasoning and direct response modes, optimizing performance and cost efficiency. Additionally, Nvidia introduced Agent AI-Q, an open-source framework for integrating AI reasoning models into enterprise workflows.

[16]

Nvidia CEO Jensen Huang discusses AI's future at GTC 2025

Nvidia founder Jensen Huang kicked off the company's artificial intelligence developer conference on Tuesday by telling a crowd of thousands that AI is going through "an inflection point." GTC 2025, heralded as "AI Woodstock," is being hosted at SAP Center in San Jose, Calif. Huang's keynote has been focused on the company's advancements in AI and his predictions for how the industry will move over the next few years. Huang said demand for GPUs from the top four cloud service providers is surging, adding that he expects Nvidia's data center infrastructure revenue to hit $1 trillion by 2028. He also announced that U.S. car maker General Motors would integrate Nvidia technology in its new fleet of self-driving cars. The Nvidia head also unveiled the company's Halos system, an AI solution built around automotive -- especially autonomous driving -- safety. "We're the first company in the world, I believe, to have every line of code safety assessed," Huang said.

[17]

Nvidia CEO Jensen Huang Discusses AI's Future at GTC 2025

Nvidia founder Jensen Huang kicked off the company's artificial intelligence developer conference on Tuesday by telling a crowd of thousands that AI is going through "an inflection point." GTC 2025, heralded as "AI Woodstock," is being hosted at SAP Center in San Jose, Calif. Huang's keynote has been focused on the company's advancements in AI and his predictions for how the industry will move over the next few years. Huang said demand for GPUs from the top four cloud service providers is surging, adding that he expects Nvidia's data center infrastructure revenue to hit $1 trillion by 2028. He also announced that U.S. car maker General Motors would integrate Nvidia technology in its new fleet of self-driving cars. The Nvidia head also unveiled the company's Halos system, an AI solution built around automotive -- especially autonomous driving -- safety. "We're the first company in the world, I believe, to have every line of code safety assessed," Huang said. Copyright 2025 The Associated Press. All rights reserved.

[18]

Nvidia CEO Jensen Huang Says AI Is in the Middle of 'an Inflection Point'

Nvidia founder Jensen Huang kicked off the company's artificial intelligence developer conference on Tuesday by telling a crowd of thousands that AI is going through "an inflection point." At GTC 2025, heralded as "AI Woodstock," Huang focused his keynote on the company's advancements in AI and his predictions for how the industry will move over the next few years. In a highly anticipated announcement, Huang revealed more details around Nvidia's next-generation graphics architectures: Blackwell Ultra and Vera Rubin -- named for the famous astronomer. Blackwell Ultra is slated for the second half of 2025, while its successor, the Rubin AI chip, is expected to launch in late 2026. Rubin Ultra will take the stage in 2027. In a talk that lasted over two hours, Huang outlined the "extraordinary progress" that AI has made. In 10 years, he said, AI graduated from perception and "computer vision" to generative AI, and now to agentic AI -- or AI that has the ability to reason.

[19]

Nvidia Looks to Expand AI Reign With New Chips, Personal Supercomputers

Jensen Huang, Nvidia's chief executive officer, used his annual developer conference to address concerns that the cost of artificial intelligence computing is spiraling out of control. At the event, Huang unveiled more powerful chips and related technology that he said would provide a clearer payoff to customers. The lineup includes a successor to Nvidia's flagship AI processor called the Blackwell Ultra, as well as additional generations stretching into 2027. Huang also unveiled Dynamo-branded software that will fine-tune existing and future equipment, making it more efficient and profitable. "It is essentially the operating system of an AI factory," Huang said during a roughly two-hour presentation at the company's annual GTC event in San Jose, California, which touched on everything from robot technology to personal supercomputers. The conference, once a little-known gathering of developers, has become a closely watched event since Nvidia assumed a central role in AI -- with the tech world and Wall Street taking its cues from the presentation. Huang introduced a variety of hardware, software and services during his speech, though there were no bombshell revelations for investors. The stock closed down more than three percent on Tuesday. Nvidia, once focused on computer gaming chips, has become a tech powerhouse involved in myriad fields. At the event, Huang said the new Blackwell Ultra processor lineup would arrive in the second half of 2025. It will be followed by a more dramatic upgrade called "Vera Rubin" in the latter half of 2026. The announcements also included: It's a pivotal moment for Nvidia. After two years of stratospheric growth for both its revenue and market value, investors in 2025 have begun to question whether the frenzy is sustainable. 
These concerns were brought into focus earlier this year when Chinese startup DeepSeek said it had developed a competitive AI model using a fraction of the resources. DeepSeek's claim spurred doubts over whether the pace of investment in AI computing infrastructure was warranted. But it was followed by commitments by Nvidia's biggest customers, a group that includes Microsoft and Amazon.com's AWS, to keep spending this year. The biggest data center operators -- a group known as hyperscalers -- are projected to spend $371 billion (roughly Rs. 32,10,561 crore) on AI facilities and computing resources in 2025, a 44 percent increase from the year prior, according to a Bloomberg Intelligence report published Monday. That amount is set to climb to $525 billion (roughly Rs. 45,43,247 crore) by 2032, growing at a faster clip than analysts expected before the viral success of DeepSeek. But broader concerns about trade wars and a possible recession have weighed on Nvidia's stock, which is down 14 percent this year. The shares fell 3.3 percent to $115.53 (roughly Rs. 9,997) by the close of New York trading Tuesday. The GM news hurt shares of Mobileye Global Inc., which develops self-driving technology. The stock fell 3.5 percent to $14.44 (roughly Rs. 1,250). The company, majority-owned by Intel Corp., had its initial public offering in 2022. The weeklong GTC event is a chance to convince the tech industry that Nvidia's chips are still must-haves for AI -- a field that Huang expects to spread to more of the economy in what he's called a new industrial revolution. Huang noted that the event has been described as the "Super Bowl of AI." The most important issue facing Nvidia is whether AI capital spending will continue to climb in 2026, Wolfe Research analyst Chris Caso said in a note previewing the event. 
"AI stocks have been down sharply on recession fears, and while we think AI spending is the last place cloud customers would wish to trim budgets, if the areas that fund those budgets suffer, that could put some pressure on capex." On that front, Huang didn't seem to soothe investors' concerns. But he offered a road map for future chips and unveiled a breakthrough system that relies on a combination of silicon and photonics -- light waves. Nvidia also announced plans for a quantum computing research lab in Boston, aiming to capitalize on another emerging technology. Nvidia, based in Santa Clara, California, has suffered some production snags as it works to rapidly upgrade its chips. Some early versions of Blackwell required fixes, delaying the release. Nvidia has said that those challenges are behind it, but the company still doesn't have enough supply to meet demand. It has increased spending to get more of the chips out the door, something that will weigh on margins this year. Huang said the top four public cloud vendors -- Amazon, Microsoft, Alphabet Inc.'s Google, and Oracle Corp. -- bought 1.3 million of Nvidia's older-generation Hopper AI chips last year. So far in 2025, the same group has bought 3.6 million Blackwell AI chips, he said. After Vera Rubin debuts in the second half of next year, Nvidia plans to release a version a year later called Rubin Ultra. Vera Rubin's namesake was an American astronomer credited with helping discover the existence of dark matter. The generation of chips after that will be named Feynman, Huang said. The name is a likely reference to Richard Feynman, an American theoretical physicist who made contributions to quantum mechanics.

[20]

Nvidia Launches New Google And Microsoft Azure Products At GTC 2025