NVIDIA Unveils Blackwell AI GPUs: A Leap Forward in AI and Data Center Technology

4 Sources

[1]

NVIDIA Blackwell Is Up & Running In Data Centers: NVLINK Upgraded To 1.4 TB/s, More GPU Details, First-Ever FP4 GenAI Image

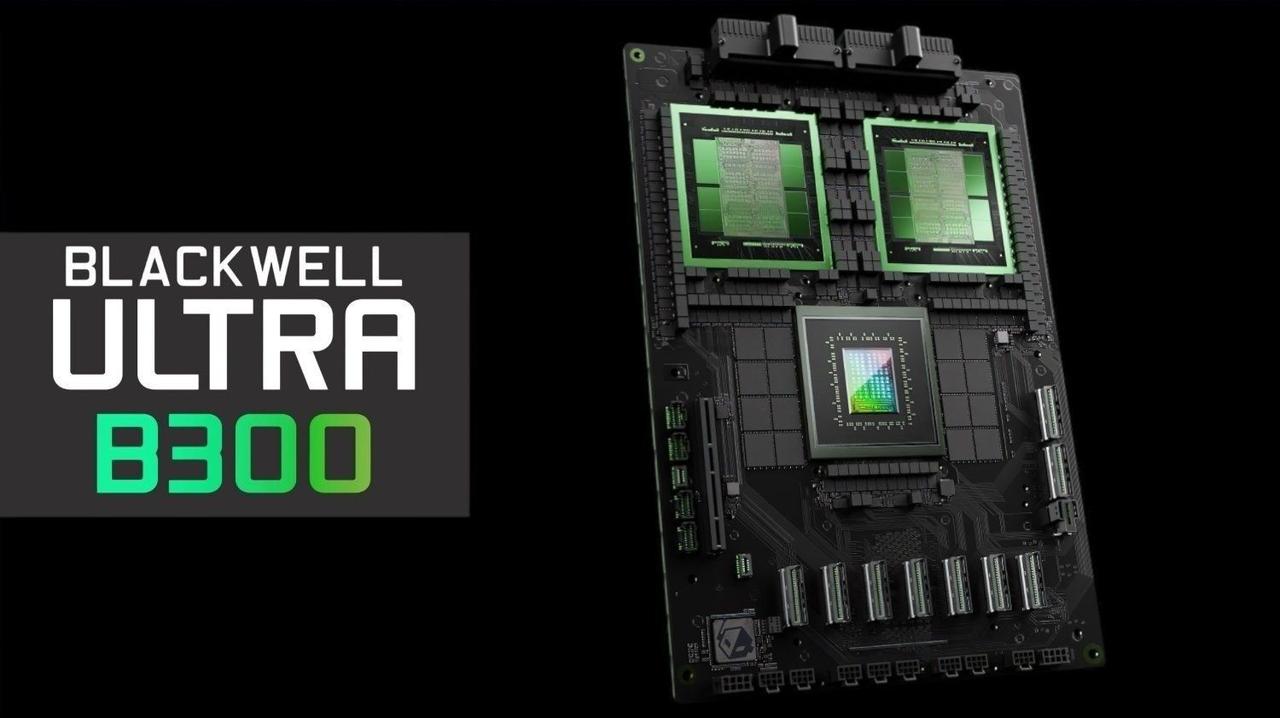

NVIDIA is slamming down Blackwell delay rumors as it moves toward sharing more information on its data center Goliath, which is now operational in data centers.

NVIDIA's Blackwell Is Now Up & Operational, Coming To Global Data Centers This Year & More Details To Be Shared at Hot Chips Next Week

With Hot Chips commencing next week, NVIDIA is giving us a heads-up on what to expect during the sessions it has planned for the event. Given the recent surge in rumors about a delay in Blackwell's roll-out, the company kicked off a press session by showing Blackwell up and running in one of its data centers. As the company has already stated, Blackwell is on track for ramp and will ship to customers later this year, so there is not much weight to claims that Blackwell has a defect or issue that will keep it from reaching the market this year.

But Blackwell isn't just one chip, it's a platform. Just like Hopper, Blackwell encompasses a vast array of designs for data center, cloud, and AI customers, and each Blackwell product is composed of various chips. These include:

NVIDIA is also sharing brand-new pictures of various trays featured in the Blackwell lineup. These are the first pictures of Blackwell trays to be shared, and they show the amount of engineering expertise that goes into designing next-generation data center platforms.

The Blackwell generation is designed to tackle modern AI needs and to offer strong performance in large language models such as Meta's 405B Llama 3.1. As LLMs grow to larger parameter counts, data centers will require more compute and lower latency. You could build one large GPU with enough memory to hold the entire model, but achieving low latency in token generation requires multiple GPUs.
The multi-GPU inference approach splits the calculations across multiple GPUs for low latency and high throughput, but going the multi-GPU route has its complications. At each layer, every GPU has to send the results of its calculations to every other GPU, which makes high-bandwidth GPU-to-GPU communication essential. NVIDIA's existing solution for multi-GPU instances is the NVSwitch. Hopper NVLink switches offer up to 1.5x higher inference throughput than a traditional point-to-point GPU-to-GPU approach, thanks to their 900 GB/s interconnect (fabric) bandwidth. Instead of making several hops to get from one GPU to another, data makes one hop from the source GPU to the NVSwitch and a second hop from the switch directly to the destination GPU.

Talking about the GPU itself, NVIDIA shared a few speeds and feeds on the Blackwell GPU, which are as follows:

And some of the advantages of building a reticle-limit chip include:

With Blackwell, NVIDIA is introducing an even faster NVLink Switch, which doubles the fabric bandwidth to 1.8 TB/s. The NVLink Switch itself is an 800 mm² die based on TSMC's 4NP node and extends NVLink to 72 GPUs in the GB200 NVL72 racks. The chip provides 7.2 TB/s of full all-to-all bidirectional bandwidth over 72 ports and has an in-network compute capability of 3.6 TFLOPS. The NVLink Switch Tray comes with two of these switches, offering up to 14.4 TB/s of total bandwidth.

One of the tutorials NVIDIA has planned for Hot Chips is titled "Liquid Cooling Boosts Performance and Efficiency". These new liquid-cooling solutions will be adopted by Grace Blackwell GB200 and B200 systems. One of the approaches to be discussed is warm-water direct-to-chip cooling, which offers improved cooling efficiency, lower operating costs, longer IT server life, and the possibility of heat reuse.
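The NVLink figures in this section fit together with a few lines of arithmetic. The sketch below is a back-of-the-envelope check, not NVIDIA's methodology: it assumes every GPU's full 1.8 TB/s of NVLink bandwidth has to be carried by the switch trays in a GB200 NVL72 rack, and the helper names `tray_bandwidth` and `trays_needed` are hypothetical.

```python
# Back-of-the-envelope NVLink arithmetic using figures quoted in the article.
# Assumption (not an NVIDIA statement): all per-GPU NVLink bandwidth terminates on switch trays.

HOPPER_NVLINK_TBPS = 0.9      # 900 GB/s per GPU (Hopper)
BLACKWELL_NVLINK_TBPS = 1.8   # 1.8 TB/s per GPU (Blackwell)
SWITCH_CHIP_TBPS = 7.2        # all-to-all bidirectional bandwidth per NVLink Switch chip
CHIPS_PER_TRAY = 2            # two switch chips per NVLink Switch Tray
GPUS_PER_RACK = 72            # GB200 NVL72


def tray_bandwidth(chip_tbps: float, chips: int) -> float:
    """Aggregate bandwidth of one switch tray (hypothetical helper)."""
    return chip_tbps * chips


def trays_needed(gpus: int, per_gpu_tbps: float, tray_tbps: float) -> float:
    """Trays required to carry every GPU's NVLink bandwidth (hypothetical helper)."""
    return gpus * per_gpu_tbps / tray_tbps


if __name__ == "__main__":
    gain = BLACKWELL_NVLINK_TBPS / HOPPER_NVLINK_TBPS
    tray = tray_bandwidth(SWITCH_CHIP_TBPS, CHIPS_PER_TRAY)
    rack_total = GPUS_PER_RACK * BLACKWELL_NVLINK_TBPS
    print(f"Per-GPU NVLink gain over Hopper: {gain:.1f}x")
    print(f"Switch tray bandwidth: {tray:.1f} TB/s")
    print(f"NVL72 aggregate GPU NVLink bandwidth: {rack_total:.1f} TB/s")
    print(f"Trays implied: {trays_needed(GPUS_PER_RACK, BLACKWELL_NVLINK_TBPS, tray):.0f}")
```

With the quoted numbers, the rack's 72 GPUs present about 129.6 TB/s of NVLink bandwidth, which works out to nine trays' worth of switch capacity at 14.4 TB/s each. Treat that as an implication of the arithmetic under the stated assumption, not a confirmed rack configuration.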
Because this approach does not rely on traditional chillers that need power to cool the liquid, warm-water cooling can deliver up to a 28% reduction in data center facility power costs.

NVIDIA is also sharing the world's first generative AI image made using FP4 compute. The FP4-quantized model produces a bunny image that is very similar to the FP16 model's output, at much higher speed. The image was produced with the Stable Diffusion workload from MLPerf, running on Blackwell. The challenge with reducing precision (going from FP16 to FP4) is that some accuracy is lost. There are some variances in the orientation of the bunny, but for the most part accuracy is preserved and the image quality remains high. This use of FP4 precision is part of NVIDIA's Quasar Quantization System and research, which is pushing reduced-precision AI compute to the next level.

As mentioned previously, NVIDIA is leveraging AI to build chips for AI. Generative AI is used to produce optimized Verilog code; Verilog is a hardware description language that describes circuits in the form of code and is used for the design and verification of processors such as Blackwell. This AI-assisted workflow is also helping speed up next-gen chip architectures, pushing NVIDIA to deliver on its yearly cadence. NVIDIA is expected to follow up with Blackwell Ultra next year, featuring 288 GB of HBM3e memory, increased compute density, and more AI FLOPS, followed by the Rubin and Rubin Ultra GPUs in 2026 and 2027, respectively.

[2]

NVIDIA shows off Blackwell AI GPUs running in its data center: makes first-ever FP4 GenAI image

NVIDIA has powered up its new Blackwell AI GPUs and is running them in real time inside its data centers, while teasing that it will provide more details about the Blackwell GPU architecture at Hot Chips next week. The company has been embroiled in rumors that its Blackwell AI GPUs have issues big enough to require a redesign, and that Blackwell AI servers have been leaking through their water-cooling setups. NVIDIA has now shown its new Blackwell AI GPUs running in real time, with Blackwell on track to ramp into production and ship (in small quantities) to customers in Q4 2024. NVIDIA also released new pictures of various trays in the Blackwell family; these first images of Blackwell trays show just how much engineering and design work goes into these things. It's truly incredible, almost like a work of art... and that's on the outside. Inside, the Blackwell B200 AI GPU features 208 billion transistors (104 billion on each of two reticle-limited dies merged into one GPU) on the TSMC 4NP process node. It has 192GB of HBM3E memory with 8TB/sec of memory bandwidth, and 1.8TB/sec of bidirectional NVLink bandwidth with a high-speed NVLink-C2C link to the Grace CPU.

[3]

Nvidia shows off Blackwell server installations in progress -- AI and data center roadmap has Blackwell Ultra coming next year with Vera CPUs and Rubin GPUs in 2026

Nvidia also emphasized that it sees Blackwell and Rubin as platforms and not just GPUs. Prior to the start of the Hot Chips 2024 trade show, Nvidia showed off more elements of its Blackwell platform, including servers being installed and configured. It's a less-than-subtle way of saying that Blackwell is still coming -- never mind the delays. It also talked about its existing Hopper H200 solutions, showed FP4 LLM optimizations using its new Quasar Quantization System, discussed warm water liquid cooling for data centers, and talked about using AI to help build even better chips for AI. It reiterated that Blackwell is more than just a GPU; it's an entire platform and ecosystem. Much of what Nvidia will present at Hot Chips 2024 is already known, like the data center and AI roadmap showing Blackwell Ultra coming next year, with Vera CPUs and Rubin GPUs in 2026, followed by Rubin Ultra in 2027. Nvidia first confirmed those details at Computex back in June. But AI remains a big topic, and Nvidia is more than happy to keep beating the AI drum. While Blackwell was reportedly delayed by three months, Nvidia neither confirmed nor denied that report, instead opting to show images of Blackwell systems being installed, along with photos and renders showing more of the internal hardware in the Blackwell GB200 racks and NVLink switches. There's not much to say, other than the hardware looks like it can suck down a lot of juice and has some pretty robust cooling. It also looks very expensive. Nvidia also showed some performance results from its existing H200, running with and without NVSwitch. It says performance can be up to 1.5X higher on inference workloads compared to point-to-point designs, measured with the 70B-parameter Llama 3.1 model. Blackwell doubles the NVLink bandwidth to offer further improvements, with an NVLink Switch Tray offering an aggregate 14.4 TB/s of bandwidth.
Because data center power requirements keep increasing, Nvidia is also working with partners to boost performance and efficiency. One of the more promising results is warm water cooling, where the heated water can potentially be recirculated for heating to further reduce costs. Nvidia claims it has seen up to a 28% reduction in data center power use with the tech, with a large portion of that coming from the removal of below-ambient cooling hardware. Above you can see the full slide deck from Nvidia's presentation. There are a few other interesting items of note. To prepare for Blackwell, which adds native FP4 support that can further boost performance, Nvidia has worked to ensure its latest software benefits from the new hardware features without sacrificing accuracy. After using its Quasar Quantization System to tune the workloads, Nvidia is able to deliver basically the same quality as FP16 while using one quarter as much bandwidth. The two generated bunny images may vary in minor ways, but that's pretty typical of text-to-image tools like Stable Diffusion. Nvidia also talked about using AI tools to design better chips -- AI building AI, with turtles all the way down. Nvidia created an LLM for internal use that helps speed up design, debug, analysis, and optimization. It works with Verilog, the language used to describe circuits, and was a key factor in the creation of the 208 billion transistor Blackwell B200 GPU. This will then be used to create even better models that enable Nvidia to work on the next-generation Rubin GPUs and beyond. [Feel free to insert your own Skynet joke at this point.] Wrapping things up, we have a better quality image of Nvidia's AI roadmap for the next several years, which again defines the "Rubin platform", with switches and interlinks, as an entire package.
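To make the "one quarter as much bandwidth" point concrete, the sketch below fake-quantizes a block of values to FP4 and compares weight storage for FP4 and FP16. It is a minimal illustration, not NVIDIA's Quasar Quantization System: it assumes the E2M1 FP4 value grid used in the OCP Microscaling formats and a single absmax scale per block, and `quantize_block` and `weight_bytes` are hypothetical helpers.

```python
# Minimal FP4 (E2M1) fake-quantization sketch with a per-block absmax scale.
# Assumptions: the E2M1 value grid below (as defined for FP4 in the OCP
# Microscaling formats spec) and one shared scale per block. This is NOT
# NVIDIA's Quasar Quantization System, whose internals the article does not describe.
from typing import List, Tuple

# Non-negative magnitudes a 4-bit E2M1 float can represent (the sign bit is separate).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = E2M1_GRID[-1]


def quantize_block(values: List[float]) -> Tuple[List[float], float]:
    """Round a block of floats onto the FP4 grid using one shared scale.

    Returns the dequantized values (what the model computes with) and the scale.
    """
    if not values:
        return [], 1.0
    absmax = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest FP4 value.
    scale = absmax / FP4_MAX if absmax > 0 else 1.0
    dequantized = []
    for v in values:
        mag = abs(v) / scale
        # Nearest representable magnitude; on a tie, min() keeps the smaller grid value.
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
        dequantized.append(-nearest * scale if v < 0 else nearest * scale)
    return dequantized, scale


def weight_bytes(num_params: int, bits_per_param: int) -> float:
    """Bytes needed to store (or stream once) the weights, ignoring scale overhead."""
    return num_params * bits_per_param / 8


if __name__ == "__main__":
    block = [0.12, -0.80, 0.33, 1.20, -0.05, 0.61]
    dequantized, scale = quantize_block(block)
    for original, approx in zip(block, dequantized):
        print(f"{original:+.2f} -> {approx:+.2f}")

    params = 70_000_000_000  # a 70B-parameter model, e.g. Llama 3.1 70B
    ratio = weight_bytes(params, 4) / weight_bytes(params, 16)
    print(f"FP4 / FP16 weight bytes: {ratio:.2f}")  # 0.25
```

Real block-scaled FP4 formats also store a scale for each small block of values, so the practical ratio sits slightly above 0.25. The rounding error visible in the printed pairs is the kind of accuracy loss that tuning tools such as Quasar aim to keep small.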
Nvidia will be presenting more details on the Blackwell architecture, using generative AI for computer-aided engineering, and liquid cooling at the Hot Chips conference next week.

[4]

NVIDIA to deep dive into the Blackwell GPU architecture at Hot Chips 2024 next week

NVIDIA will host a Hot Chips talk next week, deep diving into its new Blackwell GPU architecture while reminding the world that Blackwell has the highest AI compute, memory bandwidth, and interconnect bandwidth ever in a single GPU. At Hot Chips 2024, NVIDIA will also highlight that Blackwell features not one, but two reticle-limited dies merged into a single GPU. One of the limitations of lithographic chipmaking tools is that they are designed to make ICs (integrated circuits) no bigger than around 800 square millimeters, a ceiling referred to as the "reticle limit". NVIDIA joins two reticle-limited dies together (104 billion transistors per die, 208 billion transistors in total). During its talk, NVIDIA will discuss building to the reticle limit and how the design delivers the highest communication density, lowest latency, and optimal energy efficiency. Alongside the 208 billion transistors, Blackwell B200 offers 20 PetaFLOPS of FP4 AI performance and up to 192GB of HBM3E memory with 8TB/sec of memory bandwidth from its 8-site HBM3E. There's 1.8TB/sec of bidirectional bandwidth through NVLink, plus a high-speed NVLink-C2C link to the Grace CPU. There's a LOT going on inside of Blackwell, a LOT.
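The B200 figures above can be cross-checked with simple arithmetic: two 104-billion-transistor dies give the 208 billion total, and the eight HBM3E sites imply about 24 GB and 1 TB/s each if capacity and bandwidth are split evenly. That even split is an assumption for illustration, not an NVIDIA figure, and the last value is a rough peak-bandwidth estimate of how long one full read of memory takes, not a measured result.

```python
# Spec arithmetic for the B200 using only figures quoted in the article.
# Assumption (not an NVIDIA figure): HBM3E capacity and bandwidth are split
# evenly across the eight memory sites.

TRANSISTORS_PER_DIE = 104_000_000_000
DIES = 2
RETICLE_LIMIT_MM2 = 800      # approximate per-die limit cited in the article
HBM_CAPACITY_GB = 192
HBM_BANDWIDTH_TBPS = 8
HBM_SITES = 8

total_transistors = TRANSISTORS_PER_DIE * DIES
max_die_area_mm2 = RETICLE_LIMIT_MM2 * DIES   # rough ceiling on combined die area
per_site_gb = HBM_CAPACITY_GB / HBM_SITES
per_site_tbps = HBM_BANDWIDTH_TBPS / HBM_SITES
# GB divided by TB/s gives milliseconds (taking 1 TB = 1000 GB):
# the time to read all of HBM once at peak bandwidth.
full_read_ms = HBM_CAPACITY_GB / HBM_BANDWIDTH_TBPS

print(f"Total transistors: {total_transistors / 1e9:.0f} billion")
print(f"Combined die area: up to ~{max_die_area_mm2} mm²")
print(f"Per HBM3E site: {per_site_gb:.0f} GB at {per_site_tbps:.1f} TB/s")
print(f"One full pass over HBM: {full_read_ms:.0f} ms")
```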

NVIDIA showcases its next-generation Blackwell AI GPUs, featuring upgraded NVLink technology and introducing FP4 precision. The company also reveals its roadmap for future AI and data center innovations.

NVIDIA's Blackwell GPUs: A New Era in AI Computing

NVIDIA, the leading graphics processing unit (GPU) manufacturer, has unveiled its highly anticipated Blackwell AI GPUs, marking a significant advancement in artificial intelligence and data center technology. The company recently showcased these next-generation GPUs running in its data centers, demonstrating the rapid progress in AI hardware development [1].

Enhanced NVLink Technology

One of the key improvements in the Blackwell architecture is the upgraded NVLink technology. NVIDIA has doubled per-GPU NVLink bandwidth to 1.8 TB/s, up from 900 GB/s in the Hopper generation [1]. This enhancement allows faster data transfer between GPUs, which is crucial for complex AI workloads and large-scale data processing.

Introduction of FP4 Precision

In a groundbreaking move, NVIDIA has introduced native FP4 precision support with the Blackwell GPUs and has shown what it calls the first-ever generative AI image created with FP4 [2]. Because FP4 values use a quarter of the bits of FP16, FP4 precision is expected to significantly improve performance and efficiency in AI tasks such as image generation, with NVIDIA reporting output quality close to FP16 [3].

Blackwell Server Installations

NVIDIA has begun installing Blackwell servers in its data centers, showcasing the company's commitment to rapid deployment of its latest technology. These installations demonstrate the readiness of the Blackwell architecture for real-world applications and its potential impact on AI research and development [3].

Future Roadmap: Blackwell Ultra and Beyond

Looking ahead, NVIDIA has outlined an ambitious roadmap for its AI and data center technologies. The company plans to release Blackwell Ultra next year, followed by Vera CPUs and Rubin GPUs in 2026 [3]. This roadmap highlights NVIDIA's long-term strategy to maintain its leadership in the AI hardware market.

Upcoming Deep Dive at Hot Chips 2024

For those eager to learn more about the Blackwell GPU architecture, NVIDIA is set to provide a deep dive at the Hot Chips 2024 conference next week [4]. This presentation is expected to offer detailed insights into the technical specifications and capabilities of the new GPUs, further solidifying NVIDIA's position at the forefront of AI and high-performance computing technology.

Summarized by Navi
