Nvidia unveils Rubin platform and physical AI models, igniting Wall Street optimism for future

13 Sources

[1]

Jensen Huang CES keynote ignites analyst optimism on Nvidia's future

The 2026 Consumer Electronics Show, the world's largest annual tech trade show held every January in Las Vegas, this week hosted a keynote address by Nvidia CEO Jensen Huang, who took the stage Monday to announce new artificial intelligence products. Wall Street was keen to hear Huang's presentation to gauge future chip demand and assess sentiment, especially since Nvidia shares have recently been rangebound, rising less than 2% in three months. Huang at CES unveiled Nvidia's Rubin platform -- the successor to its Blackwell architecture and an integrated ecosystem of six distinct chips co-designed to work as one AI supercomputer -- and also introduced Alpamayo, an open reasoning model family for autonomous vehicle development. Huang has previously said that robotics, including self-driving cars, is Nvidia's second most important growth category after AI. Nvidia confirmed to CNBC on Monday that it is working with robotaxi operators in hopes of their using Nvidia's AI chips and Drive AV software stack to power their fleets of autonomous vehicles as soon as 2027. Several Wall Street banks -- among them, JPMorgan, Wells Fargo and Piper Sandler -- left Huang's speech with positive takeaways on Rubin's unique design and anticipated faster adoption than Nvidia's previous Blackwell and Hopper generations of chips. JPMorgan analyst Harlan Sur notably said in a Tuesday report to clients that Nvidia's development of physical AI products "could potentially drive the next leg of revenue growth" for the company. (Chart: Nvidia stock performance over the past year.) Analysts stayed bullish on Nvidia after Huang's keynote, with their consensus price target suggesting about 33% potential upside ahead over the next year, according to LSEG. Of the 65 analysts covering Nvidia, 23 rate it a strong buy and 36 a buy. Only five analysts have a hold rating on shares, with just one underperform rating. 
Take a look at what the biggest names on the Street had to say: Wells Fargo: Overweight rating, $265 price target Analyst Aaron Rakers highlighted the design of Rubin, with six new co-designed chips, as a key differentiator for Nvidia, especially as rival chipmakers continue to gain market share. "Aside from emphasizing that demand is strong, NVDA's Keynote + Financial Q & A session will likely be viewed as a strong reaffirmation of the company's competitive positioning / extreme co-design differentiation vs driving financial model / estimate changes," he told clients in a Sunday note. "From a competitive standpoint, Mr. Huang believes it will be difficult for ASICs to keep up with NVIDIA systems building one chip at a time." JPMorgan: Overweight, $250 "Rubin GPU confirmed to be on track for C2H26 ramp; leaning even further into physical AI opportunity; performance differentiation achieved through co-design; stepping into storage market with new context memory controller," Analyst Harlan Sur said in a Monday note. "NVDA has deftly positioned itself to benefit from multiple aspects of physical AI development - from data center compute (model training), to simulation (Omniverse) to edge devices (Jetson Thor) - which in aggregate could potentially drive the next leg of revenue growth for NVDA." Morgan Stanley: Overweight, $250 Analyst Joseph Moore said that Nvidia's Rubin, which management said is now in full production, will "again raise the bar for performance." "No major surprises, but confidence on Rubin should be positively received given competitive noise exiting 2025 around broader TPU traction," Moore wrote in a Monday note to clients. "While the obvious pushback is that Rubin specs and timelines haven't changed, the stock is still 10% below highs immediately following Jensen's $500bn comments at GTC DC, numbers which have since moved higher post earnings and were reinforced in spirit today during the Q & A and fireside. 
With no hedging on supply or demand, we think enthusiasm can return as that plays out in numbers this year." UBS: Buy, $235 "NVDA emphasized very strong demand and highlighted early stages of agentic AI and physical AI starting to contribute this year. It more explicitly discussed Vera Rubin (now in full production and expected to ramp on track in 2H26) which is more typically launched at GTC in March. The system-level innovation at each of the six key chips in the rack results in ~3.5x performance improvements on peak workloads. The platform is also designed to be more modular, which should help yield/cycle time and bolster gross margin," analyst Timothy Arcuri said in a Monday note. "Net, we maintain our ests but still very much see an upward bias to C2026 and C2027 numbers with faster cycle times and Rubin ramp and potential resumption of China shipments." Piper Sandler: Overweight, $225 Analyst Harsh Kumar said Nvidia is trading at an attractive valuation given its "clear lead in rack-scale systems and expanding strategic partnerships," according to a Monday note to clients. Kumar was surprised by Nvidia's nearly 100 large language models released in 2025, noting that Nvidia led the group of companies releasing LLMs, with Google and Alibaba trailing, respectively. "We left extremely positive on NVDA's technological positioning to deliver ROI and value to its customers throughout the year. Vera Rubin is now in full production, with revenues expected to begin in 2H26 and an anticipated faster ramp than Blackwell and Hopper generations," "The scope of involvement in the AI space that NVDA demonstrates continues to impress. NVDA remains our favorite pick also considering a favorable valuation at a 24.5x P/E NTM."

[2]

NVIDIA Rubin Platform, Open Models, Autonomous Driving: NVIDIA Presents Blueprint for the Future at CES

NVIDIA founder and CEO Jensen Huang opened CES in Las Vegas with Rubin -- NVIDIA's first extreme-codesigned AI platform -- plus open models for healthcare, robotics and autonomy, and a Mercedes-Benz CLA showcasing AI-defined driving. NVIDIA founder and CEO Jensen Huang took the stage at the Fontainebleau Las Vegas today to open CES 2026, declaring that AI is scaling into every domain and every device. "Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence," Huang said. "What that means is some $10 trillion or so of the last decade of computing is now being modernized to this new way of doing computing." Huang unveiled Rubin, NVIDIA's first extreme-codesigned, six-chip AI platform now in full production, and introduced Alpamayo, an open reasoning model family for autonomous vehicle development -- part of a sweeping push to bring AI into every domain. Noting that 80% of startups are building on open models, Huang also emphasized the role of NVIDIA open models across every domain, trained on NVIDIA supercomputers, forming a global ecosystem of intelligence that developers and enterprises can build on. "Every single six months, a new model is emerging, and these models are getting smarter and smarter," Huang said. "Because of that, you could see the number of downloads has exploded." Find all NVIDIA news from CES in this online press kit. A New Engine for Intelligence: The Rubin Platform Introducing the audience to pioneering American astronomer Vera Rubin, after whom NVIDIA named its next-generation computing platform, Huang announced that the NVIDIA Rubin platform, the successor to the record‑breaking NVIDIA Blackwell architecture and the company's first extreme-codesigned, six‑chip AI platform, is now in full production. 
Built from the data center outward, Rubin platform components span:
* Rubin GPUs with 50 petaflops of NVFP4 inference
* Vera CPUs engineered for data movement and agentic processing
* NVLink 6 scale‑up networking
* Spectrum‑X Ethernet Photonics scale‑out networking
* ConnectX‑9 SuperNICs
* BlueField‑4 DPUs

Extreme codesign -- designing all these components together -- is essential because scaling AI to gigascale requires tightly integrated innovation across chips, trays, racks, networking, storage and software to eliminate bottlenecks and dramatically reduce the costs of training and inference, Huang explained. He also introduced AI-native storage with NVIDIA Inference Context Memory Storage Platform -- an AI‑native KV‑cache tier that boosts long‑context inference with 5x higher tokens per second, 5x better performance per TCO dollar and 5x better power efficiency. Put it all together and the Rubin platform promises to dramatically accelerate AI innovation, delivering AI tokens at one-tenth the cost. "The faster you train AI models, the faster you can get the next frontier out to the world," Huang said. "This is your time to market. This is technology leadership."

Open Models for All

NVIDIA's open models -- trained on NVIDIA's own supercomputers -- are powering breakthroughs across healthcare, climate science, robotics, embodied intelligence and autonomous driving. "Now on top of this platform, NVIDIA is a frontier AI model builder, and we build it in a very special way. We build it completely in the open so that we can enable every company, every industry, every country, to be part of this AI revolution," Huang said. The portfolio spans six domains -- Clara for healthcare, Earth-2 for climate science, Nemotron for reasoning and multimodal AI, Cosmos for robotics and simulation, GR00T for embodied intelligence and Alpamayo for autonomous driving -- creating a foundation for innovation across industries. 
"These models are open to the world," Huang said, underscoring NVIDIA's role as a frontier AI builder with world-class models topping leaderboards. "You can create the model, evaluate it, guardrail it and deploy it." AI on Every Desk: RTX, DGX Spark and Personal Agents Huang emphasized that AI's future is not only about supercomputers -- it's personal. Huang showed a demo featuring a personalized AI agent running locally on the NVIDIA DGX Spark desktop supercomputer and embodied through a Reachy Mini robot using Hugging Face models -- showing how open models, model routing and local execution turn agents into responsive, physical collaborators. "The amazing thing is that is utterly trivial now, but yet, just a couple of years ago, that would have been impossible, absolutely unimaginable," Huang said. The world's leading enterprises are integrating NVIDIA AI to power their products, Huang said, citing companies including Palantir, ServiceNow, Snowflake, CodeRabbit, CrowdStrike, NetApp and Semantec. "Whether it's Palantir or ServiceNow or Snowflake -- and many other companies that we're working with -- the agentic system is the interface." At CES, NVIDIA also announced that DGX Spark delivers up to 2.6x performance for large models, with new support for Lightricks LTX‑2 and FLUX image models, and upcoming NVIDIA AI Enterprise availability. Physical AI AI is now grounded in the physical world, through NVIDIA's technologies for training, inference and edge computing. These systems can be trained on synthetic data in virtual worlds long before interacting with the real world. Huang showcased NVIDIA Cosmos open world foundation models trained on videos, robotics data and simulation. 
Cosmos:
* Generates realistic videos from a single image
* Synthesizes multi‑camera driving scenarios
* Models edge‑case environments from scenario prompts
* Performs physical reasoning and trajectory prediction
* Drives interactive, closed‑loop simulation

Advancing this story, Huang announced Alpamayo, an open portfolio of reasoning vision language action models, simulation blueprints and datasets enabling level 4‑capable autonomy. This includes:
* Alpamayo R1 -- the first open, reasoning VLA model for autonomous driving
* AlpaSim -- a fully open simulation blueprint for high‑fidelity AV testing

"Not only does it take sensor input and activates steering wheel, brakes and acceleration, it also reasons about what action it is about to take," Huang said, teeing up a video showing a vehicle smoothly navigating busy San Francisco traffic. Huang announced that the first passenger car featuring Alpamayo, built on the NVIDIA DRIVE full-stack autonomous vehicle platform, will be on the roads soon in the all‑new Mercedes‑Benz CLA -- with AI‑defined driving coming to the U.S. this year. The announcement follows the CLA's recent EuroNCAP five‑star safety rating. Huang also highlighted growing momentum behind DRIVE Hyperion, the open, modular, level‑4‑ready platform adopted by leading automakers, suppliers and robotaxi providers worldwide. "Our vision is that, someday, every single car, every single truck will be autonomous, and we're working toward that future," Huang said. Huang was then joined on stage by a pair of tiny beeping, booping, hopping robots as he explained how NVIDIA's full‑stack approach is fueling a global physical AI ecosystem. Huang rolled a video showing how robots are trained in NVIDIA Isaac Sim and Isaac Lab in photorealistic, simulated worlds -- before highlighting the work of partners in physical AI across the industry, including Synopsys and Cadence, Boston Dynamics and Franka, and more. 
He also announced an expanded partnership with Siemens, supported by a montage showing how NVIDIA's full stack integrates with Siemens' industrial software, enabling physical AI from design and simulation through production. "These manufacturing plants are going to be essentially giant robots," Huang said. Building the Future, Together Huang explained that NVIDIA builds entire systems now because it takes a full, optimized stack to deliver AI breakthroughs. "Our job is to create the entire stack so that all of you can create incredible applications for the rest of the world," he said. Watch the full keynote replay:

[3]

CES 2026: 3 major takeaways from Nvidia Live

It's hard to say whether Nvidia has ever truly been subtle with its announcements. At last year's CES, CEO and founder Jensen Huang stunned the industry with the debut of the GeForce RTX 50 series alongside Nvidia Cosmos, its ambitious world-model initiative. This year's show was more restrained on the consumer GPU front, but the message to CES 2026 attendees was still unmistakable: Nvidia wants it all. "All" isn't hyperbole. Nvidia is now the first company ever to surpass a $5 trillion valuation -- an almost inconceivable figure -- and Huang and company show no signs of slowing down. The company's ambitions now span factories, autonomous vehicles, robotics, and nearly any domain that can be trained, tested, or perfected in simulation before ever touching the real world. If something can be modeled, Nvidia wants to power it. The biggest buzzword of the night was "physical AI," Nvidia's term for AI systems that don't just generate content but actually act. These models are trained in virtual environments using synthetic data, then deployed into physical machines once they've learned how the world works. Huang showcased Cosmos, a world foundation model capable of simulating environments and predicting movement, alongside Alpamayo, a reasoning model specifically designed for autonomous driving. This is the tech Nvidia says will power robots, industrial automation, and self-driving vehicles, as demonstrated by the Mercedes-Benz CLA, which was shown running AI-defined driving on stage. The company also revealed plans to test its own robotaxi service with a partner as soon as 2027, using Level 4 autonomous vehicles capable of driving without human intervention in limited regions. Nvidia hasn't announced where the service will launch or with whom it's partnering, but the move signals a shift from being a behind-the-scenes supplier to actively participating in the self-driving race. 
Huang has already described robotics -- including autonomous vehicles -- as Nvidia's second-most important growth category after AI itself. If you were waiting for new consumer GPUs, you probably noticed very quickly that there weren't any. Nvidia didn't announce a single new GeForce card, and that felt entirely intentional. Instead, Huang spent most of the keynote talking about Rubin, Nvidia's next-generation AI platform that's already in full production. Rubin is described as more than just a chip, but an entire system. GPUs, CPUs, networking, and storage, all designed together to handle the immense (and environment-altering) compute demands of modern AI models at data center scale. Nvidia framed this as essential to keeping up with skyrocketing AI demand, where training costs, energy use, and bottlenecks are becoming existential problems. The absence of gaming hardware shouldn't be considered a snub, but it is clear that Nvidia is no longer driven by gamers. It's kind of been clear that's been the case for a while, but today's conference really drove the nail in the coffin. Instead, the company's ambitions are driven by hyperscalers, governments, and anyone trying to automate everything that moves. The third major takeaway was Nvidia's ongoing push to make itself unavoidable through openness -- or at least Nvidia's version of it. Huang repeatedly emphasized that the company isn't just selling hardware, but open AI models that developers can actually use, fine-tune, and deploy (not to be confused with ChatGPT developer OpenAI). Nvidia now has open models spanning healthcare, climate science, robotics, embodied intelligence, reasoning AI, and autonomous driving, all trained on Nvidia supercomputers and released as foundational building blocks. They've practically become the corn of tech. Even personal AI agents got some stage time, with demos of local agents running on Nvidia's DGX Spark hardware. 
Nvidia aims to be the platform beneath every AI system, from massive data centers to individual desktops. It's an elegant strategy -- sell openness, but still own the pipes. Taken together, the keynote felt like a declaration. Nvidia isn't chasing CES hype cycles any more. It's positioning itself as the backbone of an AI-powered world, where the most important announcements don't happen on stage, and the most impactful products aren't meant to fit under your desk.

[4]

"The entire stack is being changed" - Nvidia CEO Jensen Huang looks ahead to the next generation of AI

"Physical AI" and Vera Rubin is coming, so be prepared, Jensen Huang says Nvidia CEO Jensen Huang has declared a new age of AI in 2026 as the demands from across the world continue to grow. Speaking on stage at CES 2026 in his opening keynote, Huang hailed the work done by his company in recent years, but hinted things are only set to grow even more going forward. "Every 10 to 15 years, the computer industry resets - a new shift happens," Huang declared, "and each time, the world of applications target a new platform." "Except this time, there are two simultaneous platform shifts happening at the same time," he added - namely AIs and applications built on AI tools, but also how software is being run and developed now on GPUs rather than CPUs. "The entire stack is being changed," he added, "computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence....every single layer of that five-layer cake (of AI) is being reinvented." Sporting an all-new leather jacket and struggling with some tech gremlins, Huang noted how billions of dollars are being invested in AI and research, and how the "modernization of AI to AI" will be vital to making the next big breakthroughs. He hailed the "incredible year" of 2025, "where it seemed like everything was happening - and to be honest, it probably was" and the AI industry took huge steps forward. He particularly praised Nvidia's work in open models, via its DGX platform, which has helped crack problems in healthcare and cellular research, as well as its Earth-2 model to improve weather predictions, and in its new Alpamayo model for autonomous vehicles. Huang also revealed that Nvidia's next-generation Vera Rubin chips are now in full development, helping pave the way for the next step in AI. 
Containing over 17,000 components, the chips bring together a Vera CPU and two Rubin GPUs to provide a massive step forward in power and performance, and the building blocks for the hyper-scaled racks required for Nvidia's AI Factories. Huang also spent some time discussing "physical AI" - a system which is able to really understand the actual world around us. The "complete unknowns...of the common sense of the physical world" pose a unique challenge, but one Nvidia is looking to address with models such as Cosmos, GR00T and Alpamayo, which take in synthetic data to learn more about the world around them. Concluding on Nvidia's aim to build "one central platform for AI," Huang outlined how, "our job is to create the entire stack, so all of you can create incredible applications for the rest of the world."

[5]

"ChatGPT moment for physical AI": Nvidia CEO presents new AI models and chips

Why it matters: The strategy underscores where the next wave of AI and computing is headed, given Nvidia's dominance in the chip market. Driving the news: "The ChatGPT moment for physical AI is here -- when machines begin to understand, reason and act in the real world," Huang said in a statement. "Robotaxis are among the first to benefit." * Speaking onstage in Las Vegas, Huang said Alpamayo is "the world's first thinking, reasoning autonomous vehicle AI. Alpamayo is trained end-to-end, literally from camera-in to actuation-out." * Huang also said the new Mercedes Benz CLA will feature Nvidia's driver assistance software in what he said is the company's "first entire stack endeavor." He then showed a demo of the car driving in San Francisco, avoiding pedestrians and taking turns. * Huang also announced the launch of the Rubin platform, comprised of six chips. The products will be available for Nvidia partners in the second half of 2026, the company said. * "There's no question in my mind now that this is going to be one of the largest robotics industries, and I'm so happy that we worked on it," Huang said. "Our vision is that someday every single car, every single truck will be autonomous." Reality check: It will be a while until every car is autonomous. Nvidia's own plans to test a robotaxi service with a partner are slated for 2027. * Self-driving cars could threaten millions of jobs and already face pushback from unions. Catch up quick: The presentation follows Nvidia entering into a non-exclusive tech licensing agreement with Groq, a startup producing chips to support real-time chatbot queries. * That deal helps strengthen Nvidia in inference, the stage at which AI models use what they've learned in the training process to produce real-world results, Axios' Megan Morrone writes. * This phase is essential for AI to scale. The big picture: Nvidia has long been investing in physical AI, meaning AI interfacing with the world and not just software. 
* Last year, Nvidia stole the show at CES with a series of announcements, including its work in robotics and autonomous vehicles alongside new gaming chips and a smaller AI processing unit called DIGITS. * Of course, robotics extends beyond cars. Huang was joined onstage by two BD-1 units, droids from the Star Wars universe, as he displayed and discussed an image of other robots that rely on Nvidia's tech such as Caterpillar's construction equipment and Agibot's humanoid robots. Zoom out: Nvidia's competitors are also attending CES to stake their claims in the AI market.

[6]

Everything Nvidia's Jensen Huang announced at CES 2026

Nvidia is in Las Vegas sounding a little bit like an infrastructure ministry: Here are the chips, here are the racks, here's the networking, here's the software -- and by the way, those robots and cars you keep hearing about are supposed to run on all of it. The through-line in this year's Consumer Electronics Show (CES) batch is control of the full stack, with a particular fixation on storage and what Nvidia keeps framing as the next bottleneck: agentic AI that needs more context, more memory, more networking, and fewer excuses for why it can't run in the real world. The pitch is that "AI factories" are now a product category, and Nvidia intends to sell the blueprints, the machines, the operating system, and everything else. A lot of what Nvidia and CEO Jensen Huang announced Monday afternoon has been floating around for months -- Rubin as the post-Blackwell architecture, BlueField-4 as the DPU jump, Nemotron as Nvidia's "open" model family, Halos as the safety umbrella. What's new is the bundling. Nvidia is turning that roadmap into a single argument: six chips, one platform, plus the networking and "context memory" plumbing to keep long-horizon agents from stalling out. On the system side, Nvidia is positioning Vera Rubin NVL72 as the rack-scale workhorse (72 GPUs and 36 CPUs, with exaflops-class FP4 claims), and Rubin Ultra NVL288 as the bigger follow-on (288 GPUs and 144 CPUs). The company is also plugging Rubin into DGX-branded "AI factories," pairing DGX Rubin NVL72 for training with DGX Rubin NVL8 for inference as a more turnkey, standardized unit of capacity. Nvidia says Rubin-based products will be available from partners in the second half of 2026. Two infrastructure add-ons are doing a lot of quiet work here. First, Nvidia is leaning hard into networking as a first-class performance feature, touting Spectrum-X Ethernet photonics switch systems and attaching "five times" claims around inference performance and power efficiency. 
Second, the company is trying to make "long context" feel like an infrastructure purchasing decision, unveiling an "inference context memory" storage platform to extend agentic AI context windows. If the subtext of Rubin is "the roadmap is real," the subtext of the surrounding plumbing is "the next margin pool is everything around the GPU." "The ChatGPT moment for robotics is here," Huang said in a press release, arguing that "models that understand the real world, reason, and plan actions" are opening "entirely new applications." Automotive is, then, perhaps where Nvidia's "full stack" argument turns into a credibility test, because it's the one category where "demo" and "deployment" are separated by regulation, liability, and a decade of bruised optimism. Nvidia says its Drive AV platform for assisted driving tech is "in production" for the 2026 Mercedes-Benz CLA, which received the highest Euro NCAP safety score in all of 2025. The company says the car has "advanced Level 2 automated driving capabilities" with "point-to-point urban navigation," including "address-to-address" trips -- and frames Hyperion as the compute-and-sensor architecture that adds redundancy for safety. Nvidia says the car will be capable of hands-free driving on U.S. roads by the end of the year. Then, there's the broader bet. "We believe physical AI and robotics will eventually be the largest consumer electronics segment in the world," said Ali Kani, Nvidia's automotive VP. "Everything that moves will ultimately be fully autonomous, powered by physical AI." Kani said that Alpamayo, Nvidia's "family of open source AI models, simulation tools, physical AI datasets" for autonomous driving, is built to accelerate "safe, reasoning-based physical AI development." The company released 1,700 hours of driving data alongside an open-source simulation framework -- and positioned the tools as the starter kit for Level 4 autonomy. 
Nvidia is pointing to Isaac GR00T N1.6 as an open reasoning vision-language-action model for robot skills, along with Isaac Lab Arena as an evaluation framework for testing policies at scale. The company also calls out Cosmos Reason 2 as a model aimed at improving physical reasoning, and the broader Cosmos lineup as a way to generate synthetic data for training physical AI. Nvidia is also positioning Jetson T4000 as the edge compute target for robots, paired with the same training-to-deployment pipeline that feeds back into DGX-class infrastructure. Nvidia is trying to make the robot stack feel like the software stack: train in a world it can generate, test in a world it can vary, deploy on hardware it can sell. "In 2025, Nvidia was the top contributor ... on Hugging Face with 650 open models and 250 open datasets," Briski said. Essentially: Nvidia wants to be the place you start -- even if you don't stay "open" for long. The company says it's releasing Nemotron-CC, a multilingual pretraining corpus of 1.4 trillion tokens across more than 140 languages, positioned as an "open" foundation layer for building and adapting models. It also highlights a "Granary" instruction dataset meant to make models more useful out of the box for enterprise-style tasks. Nvidia is framing Nemotron as a toolkit for the agentic era: models and datasets for safety, RAG, speech, and reasoning. Zoomed out, Nvidia's CES message is consistent across all three buckets. The future is the pipeline, and Nvidia wants every bit of it -- compute, networking, storage, safety, simulation -- to run on something it already sells.
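The rack figures quoted in this roundup can be cross-checked with simple arithmetic. The sketch below is a rough sanity check, not an Nvidia specification: it assumes the 50-petaflop NVFP4 inference figure quoted for a Rubin GPU applies to every GPU in a Vera Rubin NVL72 rack, and that each superchip pairs one Vera CPU with two Rubin GPUs as described in the coverage above. The function and constant names are hypothetical.

```python
# Rough sanity check of the rack-scale figures quoted in the coverage.
# Assumptions (illustrative, not official specifications):
#   - every Rubin GPU delivers the quoted 50 petaflops of NVFP4 inference
#   - one superchip = 1 Vera CPU + 2 Rubin GPUs
PFLOPS_NVFP4_PER_RUBIN_GPU = 50
GPUS_PER_SUPERCHIP = 2
CPUS_PER_SUPERCHIP = 1


def rack_summary(gpus: int, cpus: int) -> dict:
    """Derive superchip count and peak NVFP4 inference throughput for a rack."""
    superchips, leftover = divmod(gpus, GPUS_PER_SUPERCHIP)
    if leftover or superchips * CPUS_PER_SUPERCHIP != cpus:
        raise ValueError("GPU/CPU counts do not match a 2-GPU, 1-CPU superchip")
    peak_pflops = gpus * PFLOPS_NVFP4_PER_RUBIN_GPU
    return {
        "superchips": superchips,
        "peak_nvfp4_pflops": peak_pflops,
        "peak_nvfp4_exaflops": peak_pflops / 1000,
    }


# Vera Rubin NVL72 as described above: 72 GPUs and 36 CPUs.
nvl72 = rack_summary(gpus=72, cpus=36)
print(nvl72)
```

Under those assumptions the NVL72 works out to 36 superchips and 3,600 petaflops, or 3.6 exaflops, which is consistent with the "exaflops-class FP4" description. The coverage gives no per-GPU figure for Rubin Ultra, so the NVL288 is left out of the calculation.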

[7]

CES 2026 - AMD's Lisa Su does the yottaflop math, while NVIDIA Jensen Huang puts robotaxis on a collision course with Elon Musk

It was a case of 'chips with everything' as the new AI kingmakers took to center stage at the CES 2026 jamboree over the past 48 hours as NVIDIA's Jensen Huang found himself having to share the spotlight with his AMD counterpart, Lisa Su. First up was Su who declared that the world is set to enter the 'yottascale' era as demand for AI and the power to fuel it continues to accelerate. Su argued that we're going to need up to 10 yottaflops a year by the end of the decade. OK, hands up out there - what's a yottaflop in real money? No, me neither. Actually it's a one followed by 24 zeros, which Su pointed out is around 10,000 times the amount of global AI compute registered in 2022, which then stood at about one zettaflop (a one followed by 21 zeros). She told CES attendees: Since the launch of ChatGPT a few years ago, we've gone from a million people using AI to now more than a billion active users. This is just an incredible ramp. It took the internet decades to reach that same milestone. Now, what we are projecting is even more amazing. We see the adoption of AI growing to over 5 billion active users as AI truly becomes indispensable to every part of our lives, just like the cell phone and the internet of today. The foundation of AI is compute power, she reminded them: With all of that user growth, we have seen a huge surge in demand in the global compute infrastructure, growing from about one Z-flop in 2022 to more than 100 Z-flops in 2025. Now that sounds big, that's actually 100 times in just a few years. But what you're going to hear tonight from everyone is we won't have, we don't have nearly enough compute for everything that we can possibly do. We have incredible innovation happening. Models are becoming much more capable. They're thinking and reasoning, they're making better decisions, and that goes even further when we extend that to agents overall. 
So to enable AI everywhere, we need to increase the world's compute capacity another hundred times over the next few years to more than 10 yottaflops over the next five years. That means that the entire tech ecosystem needs to come together, she urged: What we like to say is, the real challenge is how do we put AI infrastructure at yottascale? That requires more than just raw performance. It starts with leadership compute, CPUs, GPUs, networking coming together. It takes an open modular rack design that can evolve over product generations. It requires high-speed networking to connect thousands of accelerators into a single unified system. And it has to be really easy to deploy. AMD had a good AI year in 2025, stealing some of the gilt from the NVIDIA gingerbread in the process and boosting its own share price by 76%, way ahead of NVIDIA's growth rate of 30%. Little wonder then that Su said: AI is the most important technology of the last 50 years, and I can say it's absolutely our number one priority at AMD. Over at NVIDIA, on the first of what will inevitably be thousands of public appearances on the world stage while the AI bubble holds, CEO Huang pitched: Every 10 to 15 years, the computer industry resets - a new shift happens, and each time, the world of applications target a new platform. Except this time, there are two simultaneous platform shifts happening at the same time...The entire stack is being changed. Computing has been fundamentally re-shaped as a result of accelerated computing. And in his soundbite moment, he declared: The ChatGPT moment for physical AI is almost here -- when machines begin to understand, reason and act in the real world. Now, that might have a certain air of deja vu all over again about it as it's only 12 months ago on the same stage when Huang introduced NVIDIA's Cosmos world platform and described robotics' "ChatGPT moment" as "around the corner." 
We've still not turned that corner it seems, despite all the commentary about the speed at which the AI hype cycle is moving. But things take time, suggested Huang: The challenge is clear...The physical world is diverse and unpredictable. The use case he pitched to back up his physical AI vision centered on robotaxis, with the launch of new AI tech that NVIDIA claims will help self-driving cars think like humans to navigate more complex situations. The new offering, Alpamayo, is designed to help self-driving cars handle situations, such as unexpected roadworks or unpredictable driver behavior on the road, in real time, rather than just being trained to react based on previous patterns. Huang explained: Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments and explain their driving decisions. It's the foundation for safe, scalable autonomy....Our vision is that someday, every single car, every single truck, will be autonomous. To demonstrate this in action, he rolled a single-shot video of a Mercedes vehicle kitted out with Alpamayo driving in busy downtown San Francisco traffic. A human driver sat behind the wheel throughout the drive but did not stage any interventions. (Alpamayo is a Level 2 autonomous driving system -- similar to Tesla's Full Self-Driving -- which requires a human driver to remain attentive behind the wheel at all times.) The first fleet of Alpamayo-powered robotaxis, built in the 2025 Mercedes-Benz CLA vehicles, is due to launch in the US in the first quarter, followed by Europe in the second quarter and Asia later in 2026. Of course NVIDIA's taxi ambitions surely put it on a collision course with Elon Musk's Tesla empire? Maybe so, but the view from the cheap seats from the Presidential on/off BFF elicited a blunt comment on X:

[8]

AI gets physical: Nvidia's self-driving platform captures consumer world's attention at CES - SiliconANGLE

AI gets physical: Nvidia's self-driving platform captures consumer world's attention at CES Last January, Nvidia Corp. Chief Executive Jensen Huang walked onto the keynote stage at the CES trade show in Las Vegas and declared that robotics had reached an inflection point and artificial intelligence was poised to deliver on its promise for navigating the physical world. This week at the annual event, Huang (pictured) made another keynote appearance and demonstrated how far physical AI had progressed. The company announced Alpamayo, a new open family of AI models designed to improve safety and reliability for autonomous driving systems by leveraging real data feeding simulation, and simulation feeding learning loops. At least for robotic cars, AI is growing up fast. "We have to create a system that allows AIs to learn the common sense of the physical world," Huang told the audience. "Our vision is that someday every single car and every single truck will be autonomous. This is already a giant business for us." Rapid advances in autonomous driving over the last two years have made cars a natural test bed for physical AI. Waymo, the self-driving arm of Alphabet Inc., currently offers fully driverless robotaxi services in Phoenix, Los Angeles, Atlanta, Austin and multiple cities in the San Francisco Bay Area. Tesla Inc. owners are beginning to test out the company's FSD v.14 self-driving update. Nvidia will deploy Alpamayo initially in Mercedes Benz models later this year. "This is likely one of the largest and fastest growing technology sectors in the next decade," Huang said at a CES press briefing on Tuesday. "Everything that moves should be autonomous." There was plenty of evidence at CES this week that a number of companies are taking Huang's advice to heart. Emblematic of the tech world in general, CES has become an AI showcase, with major companies demonstrating new autonomous products for the consumer world. LG Electronics Inc. 
brought a robot onstage during its press conference on Monday to showcase autonomous capabilities for household tasks. The bot rolled across the stage and placed a towel in a laundry machine on command. Hyundai Motor Group-owned Boston Dynamics Inc. announced its newest Atlas humanoid robot line during one CES presentation. Atlas, checking in at six feet, three inches tall and weighing about 200 pounds, will assist Hyundai with car assembly at a plant in Georgia. "We're seeing a ton of industrial applications," Bill Briggs, chief technology officer at Deloitte Touche Ltd., said during a panel session on Tuesday. "The promise of the consumer is the promise of the humanoid." The evolution of physical AI has been accompanied by a strategic decision on the part of consumer tech providers to position artificial intelligence on personal terms. This is no longer software run by sophisticated chips. AI has become the consumer's friend, what Samsung Electronics Co. Ltd. describes as a "true AI companion experience." To build "companionship" into its products, Samsung has deployed AI into its home appliance lineup. The company has upgraded its AI Vision capability inside of refrigerators with an expanded list of recognizable food items. The solution includes the ability to make recipe recommendations based on what AI sees, and offers a weekly report on the owner's food intake patterns. "It's an evolution of home appliances to true home companions," Cheolgi Kim, executive vice president and head of Samsung Electronics Co. Ltd.'s digital appliances business, said during the company's "First Look" unveiling at CES on Sunday. Another company in prime position to observe this personal connection with artificial intelligence is the one that kicked the technology into high gear three years ago: OpenAI Group PBC. The pioneering AI model firm was a notable presence in many of the presentations at CES this week in Las Vegas. An executive from Walmart Inc. 
spoke about the retail giant's recent partnership with OpenAI to complete purchases within ChatGPT and develop an agentic shopping experience. Zeta Global Holdings Corp. announced during the show that it will collaborate with OpenAI on powering a superintelligent agent for enterprise marketing. And Greg Brockman, OpenAI's co-founder, president and chairman, joined Advanced Micro Devices Inc. Chief Executive Lisa Su on the CES keynote stage Monday night to discuss the technology's continued evolution. "People are using it for very personal, very important things in their lives," Brockman said. "We are moving to a world where human intent becomes the most precious resource." Inevitably, the physical AI roadmap comes back to Nvidia. The company's unveiling of Alpamayo on Monday was accompanied by the rollout of Vera Rubin, the firm's six-chips-in-one machine to drive AI supercomputing. The Rubin platform represents Nvidia's answer to questions about what enterprises will need for the AI factory. As SiliconANGLE analysts have noted, Rubin reflects Nvidia's vision for the shift taking place from computing as infrastructure to computing as production. The demands of physical AI will require an ability to pull intelligence up the stack, beyond data centers and GPU clusters and into networking, storage, security, scheduling, and serviceability in a fully compatible architecture. "The demand for computing is really off the charts, this is why demand for Nvidia is so great," Huang told the assembled media on Tuesday. "Every year we are going to deliver more computing capability. We are going to try to keep this rhythm going as long as we can." Huang was asked how long he plans to keep going as Nvidia's leader. His tenure at the helm began in 1993, making him one of the longest-serving CEOs in the tech industry today. Huang deflected the question about his plans, but did offer advice to fellow top executives. 
"The secret to being a CEO this long is one, don't get fired and two, don't get bored," Huang said. "If you do something for 34 years, you are going to figure it out."

[9]

Jensen Huang Shakes Vegas With Nvidia's Physical A.I. Vision at CES

At a packed CES keynote, Nvidia's CEO outlined a future where A.I. understands and operates in the physical world. Nvidia CEO Jensen Huang is the biggest celebrity in Las Vegas this week. His CES keynote at the Fontainebleau Resort proved harder to get into than any sold-out Vegas shows. Journalists who cleared their schedules for the event waited for hours outside the 3,600-seat BleauLive Theatre. Many who arrived on time -- after navigating the sprawling maze of conference venues and, in some cases, flying in from overseas to see the tech king of the moment -- were turned away due to overcapacity and redirected to a watch party outside, where some 2,000 attendees gathered in a mix of frustration and reverence. Shortly after 1 p.m., Huang jogged onto the stage, wearing a glistening, embossed black leather jacket, and wished the crowd a happy New Year. He opened with a brisk history of A.I., tracing the last few years of exponential progress -- from the rise of large language models to OpenAI's advances in reasoning systems and the explosion of so-called agentic A.I. All of it built toward the theme that dominated the bulk of his 90-minute presentation: physical A.I. Physical A.I. is a concept that has gained momentum among leading researchers over the past year. The goal is to train A.I. systems to understand the intuitive rules humans take for granted -- such as gravity, causality, motion and object permanence -- so machines can reason about and safely interact with real environments. Nvidia enters the self-driving race Huang unveiled Alpamayo, a world foundational model designed to power autonomous driving. 
He called it "the world's first reasoning autonomous driving A.I." To demonstrate, Nvidia played a one-shot video of a Mercedes vehicle equipped with Alpamayo navigating busy downtown San Francisco traffic. The car executed turns, stopped for lights and vehicles, yielded to pedestrians and changed lanes. A human driver sat behind the wheel throughout the drive but did not intervene. One particularly interesting thing Huang discussed was how Nvidia trains physical A.I. systems -- a fundamentally different challenge from training language models. Large language models learn from text, of which humanity has produced enormous quantities. But how do you teach an A.I. Newton's second law of motion? "Where does that data come from?" Huang asked. "Instead of languages -- because we created a bunch of text that we consider ground truths that A.I. can learn from -- how do we teach an A.I. the ground truths of physics? There are lots and lots of videos, but it's hardly enough to capture the diversity of interactions we need." Nvidia's answer is synthetic data: information generated by A.I. systems based on samples of real-world data. In the case of Alpamayo, another Nvidia world model -- called Cosmos -- uses limited real-world inputs to generate far more complex, physically plausible videos. A basic traffic scenario becomes a series of realistic camera views of cars interacting on crowded streets. A still image of a robot and vegetables turns into a dynamic kitchen scene. Even a text prompt can be transformed into a video with physically accurate motion. Nvidia said the first fleet of Alpamayo-powered robotaxis, built in the 2025 Mercedes-Benz CLA vehicles, is slated to launch in the U.S. in the first quarter, followed by Europe in the second quarter and Asia later in 2026. For now, Alpamayo remains a Level 2 autonomous driving system -- similar to Tesla's Full Self-Driving -- which requires a human driver to remain attentive behind the wheel at all times. 
Nvidia's longer-term goal is Level 4 autonomy, where vehicles can operate without human supervision in specific, constrained environments. That's one step below full autonomy, or Level 5. "The ChatGPT moment for physical A.I. is nearly here," Huang said in a voiceover accompanying one of the videos shown during the keynote.

[10]

CES 2026: All you need to know about Nvidia's major announcements - The Economic Times

Nvidia unveiled Alpamayo at CES 2026, an open-source AI model that adds reasoning to autonomous vehicles and robotics. The technology will debut with Mercedes-Benz from early 2026. Nvidia also launched the new Vera Rubin chip. Nvidia is also open-sourcing models, simulation tools and large driving datasets for developers globally. At the Consumer Electronics Show (CES) 2026, a major annual trade event for consumer tech, Nvidia introduced Alpamayo, an open-source family of AI models, built to help autonomous vehicles reason and not just detect what's on the road, as well as train physical robots. Nvidia, Mercedes-Benz partnership Nvidia says Alpamayo-powered autonomous and driver-assistance features will debut with Mercedes-Benz, which launches in the US in Q1 2026, Europe in Q2 2026 and later the same year in Asia. Unlike traditional self-driving systems that focus on object detection and route planning, Alpamayo moves beyond just seeing the road to actually "reasoning" about it. "We started working on self-driving cars 8 years ago. We understood that we needed to build the entire stack. The model here is completely open sourced," said Nvidia CEO Jensen Huang at the event. "ChatGPT moment for physical AI is here," Huang said about Alpamayo, adding that it brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments, and explain their driving decisions. The flagship model: Alpamayo 1 At the centre of the release is Alpamayo 1, a 10-billion-parameter Vision-Language-Action (VLA) model. "The Alpamayo family introduces chain-of-thought, reasoning-based vision language action (VLA) models that bring human-like thinking to AV decision-making," the company said in a blog. Alpamayo analyses video input, generates a driving trajectory, and clearly explains the logic behind each decision. 
New chip release: Vera Rubin Alongside Alpamayo, Nvidia announced a new AI chip designed to deliver more performance, with lower power consumption. The chip, called Vera Rubin, has been in development for three years and is named after astronomer Vera Florence Cooper Rubin. Key highlights: This fulfills the promise Huang made last March at Nvidia's annual conference in San Jose. Sweet deal for developers Nvidia is open-sourcing the Alpamayo ecosystem, including: Alpamayo 1 AlpaSim Physical AI Open Datasets are also being released, containing more than 1,700 hours of driving data collected across diverse geographies and conditions and covering rare and complex real-world scenarios. Open-source robot foundation models Beyond vehicles, Nvidia also introduced a full robotics AI stack at CES 2026, signalling its ambition to become the default platform for general-purpose robotics, similar to Android's role in smartphones, according to TechCrunch. The company released new open foundation models, simulation tools, and edge hardware, all available on Hugging Face, enabling robots to reason, plan, and adapt across tasks and environments. New models include:

[11]

Nvidia stock slips after CES 2026 as company introduces humanoid robots and self-driving AI

At CES 2026, Nvidia revealed new humanoid robots and self-driving AI technology. The company showed AI models to teach robots and help autonomous cars handle unusual situations. Investors watched closely, and Nvidia stock fell slightly after-hours. Competitors like Intel, AMD, and Samsung are also focusing on AI chips and memory, making CES 2026 a key tech and stock event. Nvidia is showing its latest robotics technology at CES 2026 as tech companies try to make humanoid robots more common. CEO Jensen Huang said companies like Boston Dynamics, Caterpillar, LG Electronics, and NEURA Robotics are using Nvidia's tech to develop their robots. Nvidia believes physical AI could change the $50 trillion manufacturing and logistics industries and wants to lead in this area, as reported by Yahoo Finance. At CES, Nvidia introduced new AI models to teach robots how to understand and interact with the world, plus hardware to power them. Nvidia also revealed Alpamayo, a new AI model for self-driving cars, which uses chain-of-thought reasoning and vision-language-action technology. Alpamayo can recognize unusual driving situations, like a traffic light being out, and figure out how to act safely. The model is meant to act as a "large-scale teacher" for developers, helping them improve self-driving car systems over time. Companies like Lucid, Uber, and Berkeley DeepDrive are interested in using Alpamayo. Self-driving cars are growing worldwide, led by Google's Waymo, but they are still not perfect and sometimes cause confusion or traffic jams, as stated by Yahoo Finance. Nvidia believes virtual training can help AI models learn safely without putting cars on the road all the time. Nvidia shares fell 0.4% in after-hours trading after Huang's CES keynote, as noted by Reuters. Huang emphasized the importance of open-sourcing AI models and training data, saying: "Only in that way can you truly trust how the models came to be". 
Competitors like Intel and AMD also presented at CES, with Intel's share mostly stable and AMD falling 1.1% after-hours. CES is seen as a "gut-check" for AI hardware in 2026, with investors looking for real roadmaps and proof chipmakers can deliver. Memory supply is tightening because manufacturers focus on AI servers, pushing high-bandwidth memory (HBM) prices higher and creating a potential "supercycle" in the industry. Intel plans to show its Panther Lake laptop chip at CES, which tests its 18A manufacturing process, though the company has faced issues with production yield, as stated by Reuters. AMD's CEO Lisa Su is scheduled for a keynote at 9:30 p.m. EST, also under investor scrutiny. Nvidia faces competition from chipmakers expanding into AI PCs and data centers, while big customers want more control over the silicon running AI workloads. Samsung said the memory shortage is "unprecedented", as AI demand takes chips normally used for phones and electronics. Samsung plans to double devices with "Galaxy AI" features to 800 million units in 2026, many powered by Google's Gemini. Higher memory prices are affecting smartphone margins, and Samsung may increase product prices; the company's shares rose 7.5% in Seoul trading. Memory prices can fall if supply increases or demand slows, and CES launches can disappoint if specs or shipment timelines are delayed. Investors are watching Intel's 6 p.m. presentation and AMD's 9:30 p.m. keynote for details on specs, shipments, and Nvidia's future pricing power. Q1. What new robots did Nvidia show at CES 2026? Nvidia introduced new humanoid robots and AI models for self-driving cars at CES 2026. Q2. How did Nvidia stock react after CES 2026? Nvidia stock slipped slightly in after-hours trading following the CES 2026 keynote.

[12]

Is this truly Physical AI's ChatGPT moment?

If Nvidia CEO Jensen Huang has his way, all cars and trucks on planet Earth will not only be autonomous but also be able to explain their actions. The company's Alpamayo family of open AI models, described by Huang at CES 2026 as a potential "ChatGPT moment for physical AI" is meant to signal a quantum leap in capability like ChatGPT, producing machines that don't just react to sensor inputs but can think through complex, real-world scenarios, similar to how large language models reason over language. For Nvidia, this shift is occurring first in autonomous vehicles, where reasoning, especially in rare, unpredictable situations, is critical for safety and adoption. At the heart of this initiative is Alpamayo, which is built for Level 4 autonomy, and lets vehicles perceive, reason, and act with human-like judgment, while providing the interpretability and openness required for safety validation and regulatory collaboration. Alpamayo delivers fully open models, simulation frameworks, and datasets. Alpamayo 1 is an open, 10-billion-parameter vision-language-action (VLA) model, designed to combine sensor data, multi-modal understanding, planning, and chain-of-thought reasoning into a single AI stack. Similar to its Compute Unified Device Architecture, or CUDA, platform that lets developers use a GPU to run programs much faster than a regular CPU by doing many calculations at the same time, Nvidia isn't just releasing this model: it is packaging a software ecosystem (simulation tools like AlpaSim), open datasets (1,700+ hours of diverse driving edge-case data), and tools for developers to fine-tune and iterate on autonomous driving systems. This open-source approach aims to accelerate innovation across the industry, enabling both legacy automakers and startups to build on a shared foundation rather than each reinventing the wheel. 
Autonomous vehicles (AVs) are a natural proving ground for physical AI because they must perceive complex environments, model hidden causal factors (e.g., predicting a child's movement), and make safe control decisions in real time. Traditional AV systems have largely relied on reactive pattern recognition and hand-tuned rules. Alpamayo's reasoning architecture attempts to bridge this gap, enabling vehicles to handle "long-tail" scenarios -- rare, unusual events that are the thorn in the side of most AV stacks. Early commercialization is already underway. Mercedes-Benz plans to integrate Alpamayo-powered autonomy into its CLA model in the U.S. in early 2026, albeit initially at advanced assisted driving levels (e.g., Level 2+). Partnerships and interest have also been reported from companies such as Lucid, Jaguar Land Rover, Uber and Berkeley DeepDrive, all exploring reasoning-based autonomy stacks built on the platform. Market analysts see the autonomous driving software segment growing from a few billion dollars in the mid-2020s to potentially over $8 billion by 2035, while the broader autonomous vehicles market could be worth trillions by the mid-2030s if robotaxis and self-driving fleets scale. Nvidia's dominant position in AI GPUs (pegged at above 80% market share) gives it a strong foundation to capture value from this expansion. Robots, cobots and drones too Alpamayo's relevance isn't confined to vehicles. It sits within a much larger industry surge in Physical AI, or systems that merge cognition and action in the physical world. Research and market reports estimate the global physical AI market to be growing at a very fast clip. The global physical AI market size touched $5.13 billion in 2025 and is forecast to touch $68.54 billion by 2034 across robotics, autonomous systems, cobots, drones, healthcare automation and industrial AI as per Cervicorn Consulting. 
This broader category includes autonomous warehouse robots that navigate unstructured spaces, collaborative robots (cobots) working alongside humans on factory floors, drones for logistics or inspection, and increasingly capable humanoid platforms being developed by companies like Hyundai/Boston Dynamics, which plan to put robots into manufacturing environments by 2028. Such systems similarly require perception, real-world reasoning, and safe action -- the same "physical AI" core that Alpamayo exemplifies in the automotive domain. In 2024, North America led the physical AI market with a 41.3% revenue share, underpinned by early technology adoption, strong R&D investment, and a mature ecosystem of AI hardware manufacturers. The Asia-Pacific region, however, was the fastest-growing, accounting for a 30.5% share, driven by rapid industrialization, government incentives, and the expanding use of robotics across China, Japan, and South Korea. Hardware dominated the market by component, contributing 58.2% of total revenue, reflecting rising demand for sensors, processors, and embedded systems in intelligent machines. Industrial robots formed the largest robot-type segment, capturing 38.3% of market revenue as manufacturers embraced AI-powered automation for precision and cost efficiency, as per Cervicorn Consulting. By application, manufacturing and automotive led adoption through large-scale use of intelligent robotics in assembly, quality control, and supply chain optimization, while healthcare emerged as the fastest-growing segment, fueled by investments in surgical robotics, AI-assisted diagnostics, and elder-care automation. Early progress but challenges abound While Huang's "ChatGPT moment" line sounds alluring, it's important to ground expectations. Some companies and commentators treat physical AI as a branding term, similar to terms like "Industry 4.0", "autonomous everything", and "digital twins". 
Just as ChatGPT exaggerated its understanding in early versions, current physical AI systems, including Alpamayo, still require human oversight. Generalised physical AI, entailing truly flexible, robust intelligence across domains, remains a longer-term vision. Today's systems excel in specific environments with defined tasks (e.g., highway driving, warehouse picking). Scaling these capabilities to broad everyday use, whether in homes, clinics, or city streets, may take years of iteration. That said, Nvidia's Alpamayo demonstrates that AI models are moving from perception-only tasks toward reasoned action in the physical world. Whether this becomes the definitive "ChatGPT moment for physical AI" will depend on how widely and safely these systems are adopted beyond narrow testbeds. In autonomous driving, Alpamayo's open suite, simulation tools, and reasoning outputs could accelerate industry progress and help unlock higher levels of autonomy. In other sectors such as robotics, logistics, and healthcare, the same principles of embodied AI are emerging, backed by strong market growth projections. Reasoning models, hardware constraints, safety certification, cost pressures, and regulatory frameworks all temper immediate expectations. Meanwhile, models like Alpamayo lay the foundation for the next generation of intelligent machines.
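
As a rough cross-check on the market projection quoted in this piece ($5.13 billion in 2025 rising to $68.54 billion by 2034, per Cervicorn Consulting), the implied compound annual growth rate can be worked out from the two endpoints. The sketch below is illustrative only: the function name `implied_cagr` is hypothetical, and treating 2025 to 2034 as nine compounding years is an assumption, not something the report states.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures as quoted in the article, in billions of US dollars.
MARKET_2025 = 5.13
MARKET_2034 = 68.54

# Assumption: 2025 -> 2034 is treated as nine annual compounding periods.
YEARS = 2034 - 2025

cagr = implied_cagr(MARKET_2025, MARKET_2034, YEARS)
print(f"Implied CAGR, 2025-2034: {cagr:.1%}")
```

Under those assumptions the projection works out to growth of roughly a third per year, which gives a sense of how aggressive the forecast is.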

[13]

NVIDIA at CES 2026: When AI learned to finally touch physical reality

Physical AI moves from demos to real-world deployment says NVIDIA For a few years, and definitely since the ChatGPT moment at the end of 2022, the AI world has been largely obsessed with tuning up intelligence. Bigger models with more parameters trying to perform better on industry benchmarks every few months. But at CES 2026, NVIDIA raised the stakes with a whole new argument - that intelligence alone is useless if it can't reason, remember, and act safely in the real world. And hence, what NVIDIA unveiled in Las Vegas wasn't just a collection of disconnected launches. To me, it felt like a carefully thought out, deliberately interlocked blueprint of the AI roadmap that shows us what comes after chatbots - a question many have started to wonder about. According to NVIDIA, the next big thing in tech is a world of agentic AI, physical machines, and infrastructure built not just for speed, but better reasoning, with safety built in and deployed at scale. And at the centre of this shift sits Rubin, NVIDIA's next big AI chip platform. NVIDIA's Rubin platform is being pitched as "six chips, one AI supercomputer," but frankly that undersells what's happening under the hood. Rubin is NVIDIA openly declaring that the future of AI performance no longer lives inside a single processor. By co-designing the Vera CPU, Rubin GPU, NVLink 6, ConnectX-9, BlueField-4 and Spectrum-6 Ethernet as one tightly coupled system, NVIDIA claims it can deliver inference tokens at one-seventh the cost of Blackwell, while training massive mixture-of-experts models with a quarter of the GPUs. So for the industry worried about the escalating cost of AI, NVIDIA is presenting more performance at lower costs - at least on paper for now. This matters because the industry has quietly hit a wall. 
Reasoning models, long-context systems and agentic workflows don't just want more FLOPS - they want cheaper FLOPS, predictable scaling and infrastructure that doesn't collapse under its own complexity. Rubin is NVIDIA's answer to all that and more. And the industry has noticed. Every major AI lab, hyperscaler and cloud platform worth mentioning - from OpenAI and Anthropic to Microsoft, Google, AWS and CoreWeave - has already lined up behind Rubin for 2026 deployments, according to NVIDIA. If Rubin is about compute economics, BlueField-4 is about something even more fundamental: memory. Because here's the thing, the AI world has operated on the assumption that AI inference (the stage immediately after training an AI on anything) treats every interaction like a fresh start. But agentic AI throws that notion out the window. Because to be actually useful, modern agents need to carry 'baggage' - they need to retain context across multiple turns, distinct tools, users, and even massive compute clusters. As things stand right now, all that context lives in Key-Value (KV) caches, forcing GPUs to hold onto it. And frankly, that is a massive misuse of silicon. GPUs are high-performance engines built for speed, not storage lockers. Using them to park long-term memory isn't just inefficient; it's like buying a Ferrari just to use the boot as a filing cabinet. NVIDIA's Inference Context Memory Storage Platform, powered by BlueField-4, moves that memory out of GPUs and into a shared, AI-native storage layer. The pitch is simple but game changing - persistent memory for AI agents, shared at rack scale, delivered with up to 5x higher throughput and 5x better power efficiency than traditional storage. This is NVIDIA quietly redefining storage as part of cognition. Memory is no longer a byproduct of inference - it's a first-class design constraint. And BlueField-4 is NVIDIA's solution to this problem before it becomes too big to manage. 
Beyond all the smarter datacenter tech that will power AI factories of tomorrow, NVIDIA is also dragging AI out of the screen and into the physical world. The company's physical AI announcements - Cosmos world models, GR00T humanoid models, Isaac Lab-Arena and the OSMO orchestration framework - are less about flashy robots and more about reducing friction. Training robots has traditionally been slow and brutally expensive. NVIDIA wants it to look more like modern software development. The proof of this begins with partners, as NVIDIA demonstrated at CES 2026. Boston Dynamics, Caterpillar, LG Electronics and NEURA Robotics aren't experimenting anymore - they're shipping machines built on NVIDIA's stack. And by integrating its models directly into Hugging Face's LeRobot ecosystem, NVIDIA is betting that scale, not secrecy, will ultimately prove decisive in taming future robotics applications. Autonomous driving remains the most unforgiving test of physical AI, and NVIDIA knows it. And its Alpamayo models are trying to attack the industry's longest-running problem: the "long tail" of rare, ambiguous driving scenarios. Instead of relying purely on perception, Alpamayo introduces chain-of-thought vision-language-action (VLA) models that explicitly reason about cause and effect - and, crucially, can explain their decisions to users. Paired with open datasets and simulation tools, Alpamayo acts as a teacher model that AV developers can distill into production systems. Because as NVIDIA put it, Uber, JLR and Lucid aren't chasing novelty here - they're ultimately chasing safety and trust. Strip away the product names and CES theatrics, and NVIDIA's message becomes clear. Rubin makes reasoning affordable. BlueField-4 makes memory scalable. Cosmos and GR00T make machines adaptable. 
Alpamayo makes autonomy explainable. Spectrum-X and confidential computing make it all deployable in the real world. "The ChatGPT moment for physical AI is here," said Jensen Huang. The era of stateless, one-shot AI is over. The next phase of AI doesn't just answer questions. It remembers what it did yesterday, reasons about what might happen tomorrow, and acts - sometimes in traffic, sometimes in factories, sometimes in hospitals.


Jensen Huang opened CES 2026 with major announcements including the Rubin platform—a six-chip AI supercomputer now in full production—and Alpamayo, an open reasoning model for autonomous vehicles. Wall Street analysts responded with bullish forecasts, citing potential for significant revenue growth as Nvidia expands beyond data centers into physical AI applications like robotaxis and robotics.

Jensen Huang CES Keynote Reveals Next-Generation AI Platform

Nvidia CEO Jensen Huang took the stage at CES 2026 in Las Vegas to unveil the company's most ambitious AI infrastructure yet, declaring that computing has been "fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence" [2]. The keynote centered on the Rubin platform, Nvidia's first extreme-codesigned, six-chip AI platform now in full production, alongside Alpamayo, an open reasoning model family designed specifically for autonomous vehicle development [1].

Source: Digit

The announcement comes as Nvidia shares have been rangebound, rising less than 2% over three months, making Wall Street particularly keen to assess future chip demand and market sentiment

1

. Huang emphasized that some $10 trillion of computing infrastructure from the last decade is now being modernized to accommodate this new paradigm2

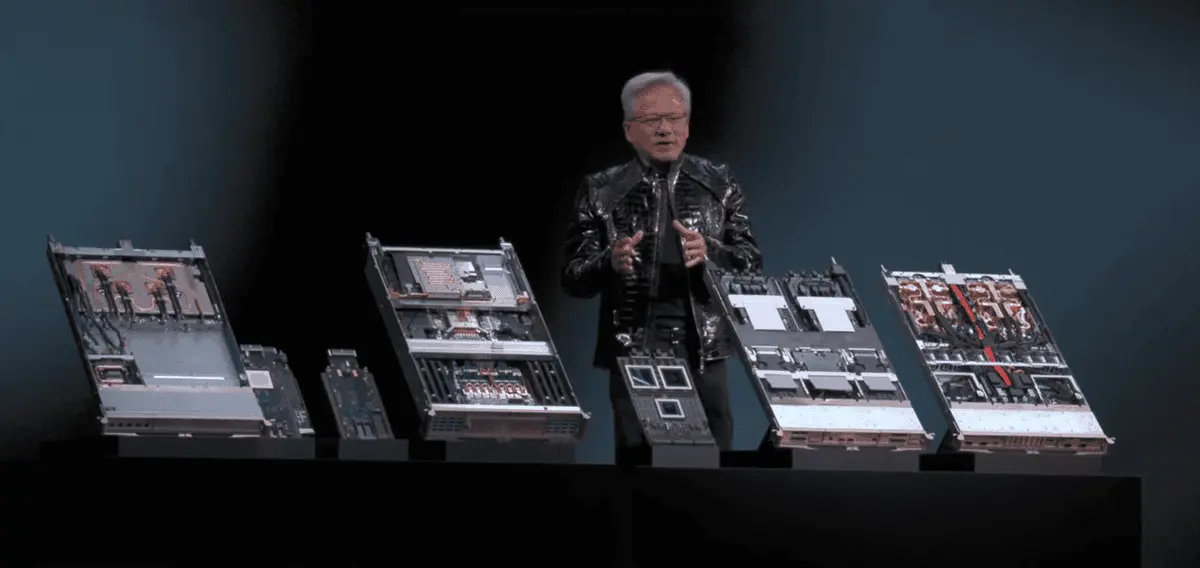

.Rubin Platform Delivers Six Co-Designed Chips for AI Supercomputer Performance

The Rubin platform represents a significant architectural shift, moving beyond single-chip design to an integrated ecosystem of six distinct components working as one AI supercomputer [1]. Built from the data center outward, the platform includes Rubin GPUs with 50 petaflops of NVFP4 inference, Vera CPUs engineered for data movement and agentic processing, NVLink 6 scale-up networking, Spectrum-X Ethernet Photonics scale-out networking, ConnectX-9 SuperNICs, and BlueField-4 DPUs [2].

Source: TechRadar

Huang explained that extreme codesign (designing all components together) is essential because scaling AI to gigascale requires tightly integrated innovation across chips, trays, racks, networking, storage and software to eliminate bottlenecks and dramatically reduce costs [2]. The platform promises to deliver AI tokens at one-tenth the cost while providing 3.5x performance improvements on peak workloads [1]. Nvidia also introduced AI-native storage with its Inference Context Memory Storage Platform, which boosts long-context inference with 5x higher tokens per second, 5x better performance per TCO dollar, and 5x better power efficiency [2].

Physical AI and Autonomous Vehicles Take Center Stage

The biggest theme of the keynote was "physical AI," Nvidia's term for AI systems that don't just generate content but actually act in the real world [3]. Huang described this as "the ChatGPT moment for physical AI," the point at which machines begin to understand, reason and act in the real world [5]. These models are trained in virtual environments using synthetic data through platforms like Cosmos, then deployed into physical machines once they've learned how the world works [3].

Source: Quartz

Alpamayo emerged as the centerpiece of Nvidia's autonomous driving strategy. Huang called it "the world's first thinking, reasoning autonomous vehicle AI," trained end-to-end from camera input to actuation output [5]. The company demonstrated the technology through a Mercedes-Benz CLA showcasing AI-defined driving, with footage of the vehicle navigating San Francisco streets, avoiding pedestrians and taking turns [5]. Nvidia confirmed it is working with robotaxi operators to deploy its AI chips and Drive AV software stack as soon as 2027, with plans to test its own robotaxi service with a partner [1][3].

Huang has previously stated that robotics, including self-driving cars, is Nvidia's second most important growth category after AI [1]. "There's no question in my mind now that this is going to be one of the largest robotics industries," Huang said, expressing his vision that "someday every single car, every single truck will be autonomous" [5].

Open AI Models Span Six Critical Domains

Nvidia emphasized its role as a frontier AI builder, with open AI models trained on its own supercomputers now powering breakthroughs across multiple industries [2]. Noting that 80% of startups are building on open models, Huang highlighted that "every single six months, a new model is emerging, and these models are getting smarter and smarter" [2].

The portfolio spans six domains: Clara for healthcare, Earth-2 for climate science, Nemotron for reasoning and multimodal AI, Cosmos for robotics and simulation, GR00T for embodied intelligence, and Alpamayo for autonomous driving [2][4]. "These models are open to the world," Huang said, underscoring Nvidia's strategy to enable every company, industry, and country to participate in the AI revolution [2]. The company also demonstrated personal AI agents running locally on the DGX Spark desktop supercomputer, embodied through a Reachy Mini robot using Hugging Face models [2].

Wall Street Analysts Project 33% Upside Potential

Several Wall Street banks, including JPMorgan, Wells Fargo, and Piper Sandler, left the keynote with positive takeaways on Rubin's unique design and anticipated faster adoption than Nvidia's previous Blackwell and Hopper generations of chips [1]. Analysts stayed bullish on Nvidia after the presentation, with their consensus price target suggesting about 33% potential upside over the next year, according to LSEG [1].

JPMorgan analyst Harlan Sur notably said in a Tuesday report that Nvidia's development of physical AI products "could potentially drive the next leg of revenue growth" for the company [1]. Wells Fargo analyst Aaron Rakers highlighted the design of Rubin as a key differentiator, especially as rival chipmakers continue to gain market share, noting that "Mr. Huang believes it will be difficult for ASICs to keep up with NVIDIA systems building one chip at a time" [1].

Of the 65 analysts covering Nvidia, 23 rate it a strong buy and 36 a buy, with only five hold ratings and just one underperform rating [1]. Morgan Stanley analyst Joseph Moore noted that Rubin "should be positively received given competitive noise exiting 2025 around broader TPU traction," while UBS analyst Timothy Arcuri maintained estimates but saw "an upward bias to C2026 and C2027 numbers with faster cycle times and Rubin ramp" [1].

Strategic Shift From Gaming to Enterprise AI Dominance

The absence of any new GeForce consumer GPU announcements at CES 2026 underscored Nvidia's strategic pivot [3]. Instead, Huang spent the keynote focused on data center infrastructure, enterprise partnerships, and physical AI applications. The company cited integrations with leading enterprises including Palantir, ServiceNow, Snowflake, CodeRabbit, CrowdStrike, NetApp, and Semantec [2].

Nvidia has become the first company ever to surpass a $5 trillion valuation, and its ambitions now span factories, autonomous vehicles, robotics, and nearly any domain that can be trained, tested, or perfected in simulation [3]. Huang declared that "every 10 to 15 years, the computer industry resets: a new shift happens," noting that this time there are two simultaneous platform shifts: AI applications and the move from CPU to GPU-based software development [4]. "The entire stack is being changed," he added, emphasizing that "every single layer of that five-layer cake of AI is being reinvented" [4].
