OpenAI Explores Google's TPUs Amid Hardware Diversification Strategy

10 Sources


[1]

There are no allegiances with OpenAI - just compute

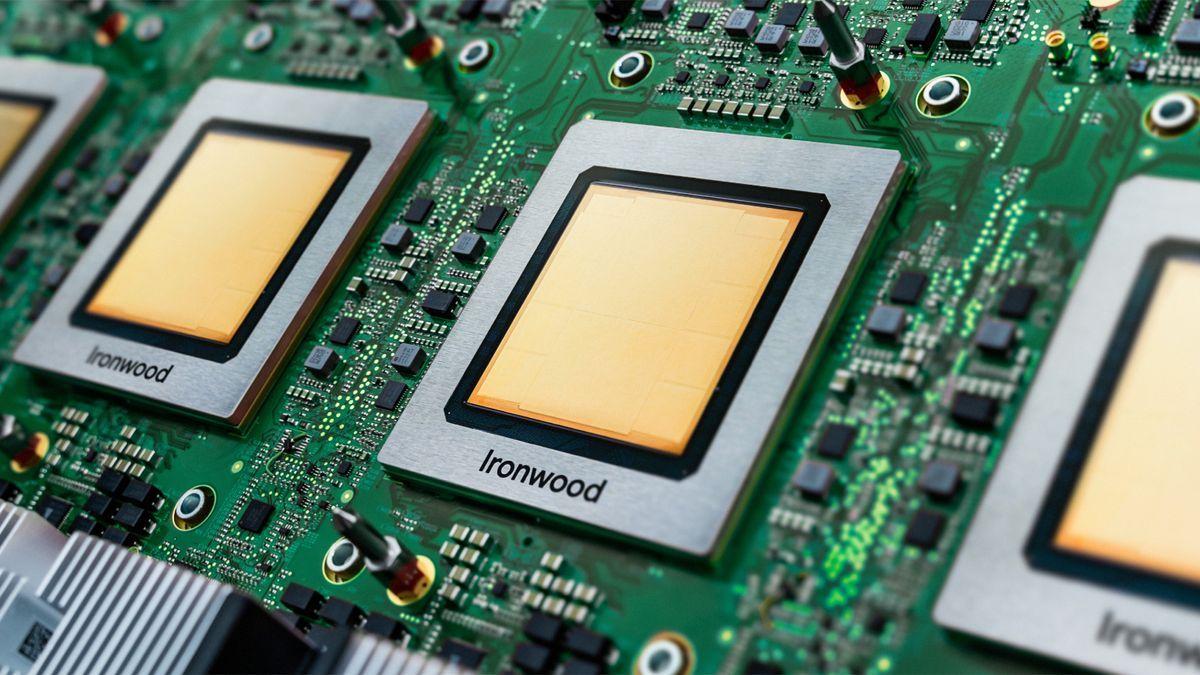

Google's TPUs might not be on Altman's menu just yet, but he's never been all that picky about hardware

Analysis: No longer bound to Microsoft's infrastructure, OpenAI is looking to expand its network of compute providers to the likes of Oracle, CoreWeave, and apparently even rival model builder Google.

But while OpenAI may have set up shop at the Chocolate Factory, it won't be using Google's home-grown tensor processing units (TPUs) to run or train its models anytime soon, the AI darling tells Reuters. In a statement made to the publication over the weekend, OpenAI admitted it was playing with Google's TPUs, but said it didn't have any plans to deploy them at scale right now.

The denial comes days after The Information reported that Google had managed to convince the model builder to transition its workloads over to the home-grown accelerators. OpenAI's alleged embrace of Google's TPU tech was seen by many as a sign that the Sam Altman-led model builder was looking not only to end its reliance on Microsoft, but also to curb its dependence on Nvidia hardware.

However, if you've been paying attention, you'd know that OpenAI has been diversifying its hardware stack for a while now. The company may have gotten its start using Nvidia's DGX systems, but it's never been an exclusive relationship. Over the years, the model builder's fleet of GPTs has run on a wide variety of hardware.

You may recall that Microsoft had GPT-3.5 running on its home-grown Maia accelerators. Microsoft, OpenAI's chief infrastructure provider until just recently, was also among the first to adopt AMD's Instinct MI300-series accelerators, with serving models like GPT-4 being one of their key use cases. AMD's accelerators have historically offered higher memory capacity and bandwidth, likely making them more economical than Nvidia's GPUs for model serving. And even as OpenAI's ties to Microsoft soften, AMD remains a key hardware partner for the budding AI behemoth.
Last month, Altman took the stage at AMD's Advancing AI event in San Jose to highlight the two companies' ongoing collaboration. If that weren't enough, OpenAI is reportedly developing an AI chip of its own to further optimize the ratio of compute, memory, bandwidth, and networking for its training and inference pipelines.

Considering all this, the idea that OpenAI is playing with Google's home-grown silicon isn't that surprising. The search giant's Gemini models have already shown the architecture is more than capable of large-scale training. Google also offers a number of different configurations of these accelerators, each with different ratios of compute, memory, and scalability, which would give OpenAI a degree of flexibility depending on whether it needed capacity for compute-intensive training jobs or for memory-bandwidth-bound inference workloads.

The Chocolate Factory's seventh-generation Ironwood TPUs boast up to 4.6 petaFLOPS of dense FP8 performance, 192 GB of high-bandwidth memory (HBM) good for 7.4 TB/s of bandwidth, and 1.2 TB/s of inter-chip bandwidth, putting them in the same ballpark as Nvidia's Blackwell accelerators. TPUv7 is available in pods of either 256 or 9,216 chips, and we're told multiple pods can be tied together to extend compute capacity to more than 400,000 accelerators. And if there's anything that gets Sam Altman excited, it's massive quantities of compute.

So why did OpenAI decide against using Google's TPUs? There could be several factors at play here. It's possible that performance just wasn't as good as expected, that Google didn't have enough TPUs lying around to meet OpenAI's needs, or simply that the cost per token was too high. However, the most obvious answer is that OpenAI's software stack has, for the most part, been optimized to run on GPUs.

Adapting this software to take full advantage of Google's TPU architecture would take time and additional resources, and ultimately may not offer much, if any, tangible benefit over sticking with GPUs. As they say, the grass is always greener on the other side, but you'll never know for sure unless you check. ®

[2]

OpenAI turns to Google's AI chips to power its products, The Information reports

June 27 (Reuters) - OpenAI has recently begun renting Google's (GOOGL.O) artificial intelligence chips to power ChatGPT and other products, The Information reported on Friday, citing a person involved in the arrangement. The move, which marks the first time OpenAI has used non-Nvidia chips in a meaningful way, shows the Sam Altman-led company's shift away from relying on backer Microsoft's (MSFT.O) data centers, potentially boosting Google's tensor processing units (TPUs) as a cheaper alternative to Nvidia's (NVDA.O) graphics processing units (GPUs), the report said. As one of the largest purchasers of Nvidia's GPUs, OpenAI uses AI chips to train models and also for inference computing, a process in which an AI model uses its trained knowledge to make predictions or decisions based on new information. OpenAI hopes the TPUs, which it rents through Google Cloud, will help lower the cost of inference, according to the report. However, Google, an OpenAI competitor in the AI race, is not renting its most powerful TPUs to its rival, The Information said, citing a Google Cloud employee. Neither OpenAI nor Google immediately responded to Reuters requests for comment. OpenAI planned to add Google Cloud service to meet its growing needs for computing capacity, Reuters had exclusively reported earlier this month, marking a surprising collaboration between two prominent competitors in the AI sector. For Google, the deal comes as it is expanding external availability of its in-house TPUs, which were historically reserved for internal use. That helped Google win customers including Big Tech player Apple (AAPL.O) as well as startups like Anthropic and Safe Superintelligence, two OpenAI competitors launched by former OpenAI leaders. Reporting by Juby Babu in Mexico City; Editing by Alan Barona

[3]

OpenAI says it has no plan to use Google's in-house chip

June 30 - OpenAI said it has no active plans to use Google's (GOOGL.O) in-house chip to power its products, two days after Reuters and other news outlets reported on the AI lab's move to turn to its competitor's artificial intelligence chips to meet growing demand. A spokesperson for OpenAI said on Sunday that while the AI lab is in early testing with some of Google's tensor processing units (TPUs), it has no plans to deploy them at scale right now. Google declined to comment. While it is common for AI labs to test out different chips, using new hardware at scale could take much longer and would require different architecture and software support. OpenAI is actively using Nvidia's graphics processing units (GPUs) and AMD's (AMD.O) AI chips to power its growing demand. OpenAI is also developing its own chip, an effort that is on track to meet the "tape-out" milestone this year, where the chip's design is finalized and sent for manufacturing. OpenAI has signed up for Google Cloud service to meet its growing needs for computing capacity, Reuters had exclusively reported earlier this month, marking a surprising collaboration between two prominent competitors in the AI sector. Most of the computing power used by OpenAI would be from GPU servers powered by the so-called neocloud company CoreWeave (CRWV.O). Google has been expanding the external availability of its in-house AI chips, or TPUs, which were historically reserved for internal use. That helped Google win customers, including Big Tech player Apple (AAPL.O), as well as startups like Anthropic and Safe Superintelligence, two ChatGPT-maker competitors launched by former OpenAI leaders. Reporting by Krystal Hu in New York and Kenrick Cai in San Francisco; Editing by Kenneth Li and Lisa Shumaker

[4]

OpenAI Testing Google's TPUs, But No Plans to Deploy at Scale: Report | AIM

OpenAI has revealed that it has 'no active plans' to use Google's in-house chips (Tensor Processing Units, or TPUs) for its AI products, Reuters reported on June 30. This comes after The Information reported that OpenAI was planning to use Google's TPUs to power ChatGPT and other AI products. However, Reuters quoted OpenAI's spokesperson as saying the company is in 'early testing with some of Google's TPUs' but has no plans to deploy them at scale right now. OpenAI currently ranks as one of NVIDIA's largest GPU customers for training its AI models. Over the past couple of years, the massive surge in demand for NVIDIA's GPUs to build AI models has led the chip company to top the US stock market by market value on several occasions. However, several companies intend to reduce their reliance on NVIDIA's GPUs to improve cost efficiency. Google utilises its in-house hardware systems, known as TPUs, to train and deploy its AI models. In the technical report for the Gemini 2.5 models, Google revealed that they were trained on a massive cluster of its fifth-generation TPUs. Furthermore, Gadi Hutt, senior director for customer and product engineering at Amazon Web Services (AWS), told CNBC that Project Rainier, the company's initiative to build an AI supercomputer, will now contain half a million of the company's in-house Trainium2 chips, an order that would traditionally have gone to NVIDIA. Hutt also said that while NVIDIA's Blackwell (the current flagship platform) offers better performance than Trainium2, the latter provides better cost performance. The company also claims that Trainium2 offers 30-40% better price-performance than the current generation of GPUs. OpenAI is also developing its own in-house chips. Commercial Times, a Taiwanese media outlet, recently reported that OpenAI is expected to launch its chip in the fourth quarter of this year, with Broadcom and Taiwan Semiconductor Manufacturing Company (TSMC) assisting in building it.

[5]

OpenAI expands beyond Nvidia with Google chips

OpenAI is integrating Google's custom TPU chips for specific workloads to manage operational costs, following the launch of its new image generation platform earlier in 2025. This strategic shift involves moving portions of OpenAI's computing tasks to Google's Tensor Processing Units (TPUs). The decision, reported by The Information, which cited an anonymous source, reflects the increasing infrastructure demands placed on OpenAI, particularly since the introduction of its image generation platform. Previously, OpenAI relied extensively on Nvidia's Graphics Processing Units (GPUs), primarily through established collaborations with Microsoft and Oracle. Oracle, in particular, accumulated a substantial inventory of Nvidia GPUs, positioning itself as a critical provider within OpenAI's machine learning infrastructure. The escalating cost of Nvidia hardware and persistent supply constraints have prompted companies with significant GPU requirements to investigate alternatives. Google has actively promoted its TPU chips to third-party cloud infrastructure providers, an initiative aimed at establishing a competitive market that could challenge Nvidia's current leadership, particularly in high-performance AI training and inference. Should Google succeed in attracting more providers to its TPU ecosystem, it could gradually capture a portion of Nvidia's market share. This development is notable given Nvidia's established position as the industry standard for training large language models and other generative AI systems. Google possesses a unique capability, developing both AI models and the hardware needed to run them. The company uses TPUs to train its proprietary Gemini AI system, which is subsequently integrated across various Google services, including Gmail and Search. This dual capacity enables Google to offer an end-to-end AI stack.
An increased reliance by OpenAI on Google's chips would represent a notable disruption to Nvidia's market dominance. This shift also indicates a move toward a more diversified hardware environment within the AI sector, suggesting that no single chip manufacturer can maintain an unchallenged position. OpenAI's apparent change in hardware strategy comes just months after it partnered with Oracle on the Stargate program, an alliance that had been viewed as potentially signaling a departure from its long-standing relationship with Microsoft. By considering Google's silicon, OpenAI demonstrates a willingness to prioritize competitive advantage and cost management over historical alliances. This move could influence other AI companies seeking alternatives to Nvidia's solutions. If Google's TPUs prove viable at scale, Nvidia's established leadership in the AI sector may encounter significant competition, driven by economic considerations and supply chain realities.

[6]

OpenAI Said to Turn to Google's AI Chips to Power Its Products

These AI chips will be used to power the company's various services

OpenAI has recently begun renting Google's artificial intelligence chips to power ChatGPT and its other products, a source close to the matter told Reuters on Friday. The ChatGPT maker is one of the largest purchasers of Nvidia's graphics processing units (GPUs), using the AI chips to train models and also for inference computing, a process in which an AI model uses its trained knowledge to make predictions or decisions based on new information. OpenAI planned to add Google Cloud service to meet its growing needs for computing capacity, Reuters had exclusively reported earlier this month, marking a surprising collaboration between two prominent competitors in the AI sector. For Google, the deal comes as it is expanding external availability of its in-house tensor processing units (TPUs), which were historically reserved for internal use. That helped Google win customers including Big Tech player Apple as well as startups like Anthropic and Safe Superintelligence, two ChatGPT-maker competitors launched by former OpenAI leaders. The move to rent Google's TPUs signals the first time OpenAI has used non-Nvidia chips meaningfully and shows the Sam Altman-led company's shift away from relying on backer Microsoft's data centers. It could boost TPUs as a cheaper alternative to Nvidia's GPUs, according to The Information, which reported the development earlier. OpenAI hopes the TPUs, which it rents through Google Cloud, will help lower the cost of inference, according to the report. However, Google, an OpenAI competitor in the AI race, is not renting its most powerful TPUs to its rival, The Information said, citing a Google Cloud employee. Google declined to comment, while OpenAI did not immediately respond when contacted by Reuters.
Google's addition of OpenAI to its customer list shows how the tech giant has capitalized on its in-house AI technology from hardware to software to accelerate the growth of its cloud business.

[7]

OpenAI Says It Has No Plan to Use Google's In-House Chip

The company said it was in early testing with some of Google's TPUs

OpenAI said it has no active plans to use Google's in-house chip to power its products, two days after Reuters and other news outlets reported on the AI lab's move to turn to its competitor's artificial intelligence chips to meet growing demand. A spokesperson for OpenAI said on Sunday that while the AI lab is in early testing with some of Google's tensor processing units (TPUs), it has no plans to deploy them at scale right now. Google declined to comment. While it is common for AI labs to test out different chips, using new hardware at scale could take much longer and would require different architecture and software support. OpenAI is actively using Nvidia's graphics processing units (GPUs) and AMD's AI chips to power its growing demand. OpenAI is also developing its own chip, an effort that is on track to meet the "tape-out" milestone this year, where the chip's design is finalized and sent for manufacturing. OpenAI has signed up for Google Cloud service to meet its growing needs for computing capacity, Reuters had exclusively reported earlier this month, marking a surprising collaboration between two prominent competitors in the AI sector. Most of the computing power used by OpenAI would be from GPU servers powered by the so-called neocloud company CoreWeave. Google has been expanding the external availability of its in-house AI chips, or TPUs, which were historically reserved for internal use. That helped Google win customers, including Big Tech player Apple, as well as startups like Anthropic and Safe Superintelligence, two ChatGPT-maker competitors launched by former OpenAI leaders.

[8]

OpenAI turns to Google's AI chips to power its products: The Information - The Economic Times

OpenAI has recently begun renting Google's artificial intelligence chips to power ChatGPT and other products, The Information reported on Friday, citing a person involved in the arrangement. The move, which marks the first time OpenAI has used non-Nvidia chips in a meaningful way, shows the Sam Altman-led company's shift away from relying on backer Microsoft's data centres, potentially boosting Google's tensor processing units (TPUs) as a cheaper alternative to Nvidia's graphics processing units (GPUs), the report said. As one of the largest purchasers of Nvidia's GPUs, OpenAI uses AI chips to train models and also for inference computing, a process in which an AI model uses its trained knowledge to make predictions or decisions based on new information. OpenAI hopes the TPUs, which it rents through Google Cloud, will help lower the cost of inference, according to the report. However, Google, an OpenAI competitor in the AI race, is not renting its most powerful TPUs to its rival, The Information said, citing a Google Cloud employee. Neither OpenAI nor Google immediately responded to Reuters requests for comment. OpenAI planned to add Google Cloud service to meet its growing needs for computing capacity, Reuters had exclusively reported earlier this month, marking a surprising collaboration between two prominent competitors in the AI sector. For Google, the deal comes as it is expanding external availability of its in-house TPUs, which were historically reserved for internal use. That helped Google win customers including Big Tech player Apple as well as startups like Anthropic and Safe Superintelligence, two OpenAI competitors launched by former OpenAI leaders.

[9]

OpenAI says it has no plan to use Google's in-house chip - The Economic Times

OpenAI said it has no active plans to use Google's in-house chip to power its products, two days after Reuters and other news outlets reported on the AI lab's move to turn to its competitor's artificial intelligence chips to meet growing demand. A spokesperson for OpenAI said on Sunday that while the AI lab is in early testing with some of Google's tensor processing units (TPUs), it has no plans to deploy them at scale right now. Google declined to comment. While it is common for AI labs to test out different chips, using new hardware at scale could take much longer and would require different architecture and software support. OpenAI is actively using Nvidia's graphics processing units (GPUs) and AMD's AI chips to power its growing demand. OpenAI is also developing its own chip, an effort that is on track to meet the "tape-out" milestone this year, where the chip's design is finalized and sent for manufacturing. OpenAI has signed up for Google Cloud service to meet its growing needs for computing capacity, Reuters had exclusively reported earlier this month, marking a surprising collaboration between two prominent competitors in the AI sector. Most of the computing power used by OpenAI would be from GPU servers powered by the so-called neocloud company CoreWeave. Google has been expanding the external availability of its in-house AI chips, or TPUs, which were historically reserved for internal use. That helped Google win customers, including Big Tech player Apple, as well as startups like Anthropic and Safe Superintelligence, two ChatGPT-maker competitors launched by former OpenAI leaders.

[10]

NVIDIA Might Be Losing AI Dominance As OpenAI Shifts To Google's Chips - Report

This is not investment advice. The author has no position in any of the stocks mentioned. Wccftech.com has a disclosure and ethics policy.

Google has managed to win some of OpenAI's AI computing workloads away from NVIDIA, suggests a new report from The Information. The report, which cites a single source, says that OpenAI has turned to Google's tensor processing units (TPUs) to power its AI products. OpenAI has primarily been believed to rely on Microsoft, Oracle and others for the NVIDIA GPUs it uses to train and run its AI software. According to the report, OpenAI is interested in lowering its operating costs by using Google's TPUs, and Google has not rented out its latest TPUs to its AI rival. Google launched its seventh-generation TPUs in April, describing them as its first TPU built for the age of inference. TPUs made quite a splash in 2024 when Apple revealed that it had used them to train its Apple Intelligence models. However, training is just one part of the AI equation, as AI models rely on inference to generate outputs in response to user queries.

According to The Information, OpenAI is using Google's TPUs to power ChatGPT and other AI products. The shift is recent, and the publication cites a single anonymous source. OpenAI is reportedly turning to Google's chips instead of NVIDIA's to reduce costs, as the firm has faced heavy usage since introducing a new image generation platform earlier this year. The Information also adds that Google is reportedly seeking to offer its TPU chips to cloud computing infrastructure providers. NVIDIA's high prices and the short supply of its GPUs have opened up a market for alternative chips that can offer users lower costs. If Google can convince infrastructure providers to adopt its chips as well, it could chip away at NVIDIA's market share while GPU supply remains constrained.
Google also relies on its own chips to train its AI models. The firm is one of the most diversified AI companies in the industry, as it operates across both the hardware and software stacks. Along with its TPUs, Google also offers its Gemini AI model and has been busy integrating Gemini across its platforms, such as Gmail and Google Search. OpenAI's reliance on Microsoft and Oracle means that it has relied almost exclusively on NVIDIA's GPUs. Oracle has positioned itself to hold one of the largest inventories of NVIDIA GPUs in the industry. If OpenAI comes to rely on TPUs from Google, Google will have managed to break NVIDIA's near-monopoly status in the high-end AI industry. Earlier this year, OpenAI made headlines after it partnered with Oracle as part of President Trump's Stargate project, in a decision that appeared to snub Microsoft.

OpenAI is testing Google's Tensor Processing Units (TPUs) as part of its strategy to diversify its hardware infrastructure, though it currently has no plans for large-scale deployment.

OpenAI's Hardware Diversification Strategy

OpenAI, the artificial intelligence powerhouse behind ChatGPT, has been exploring various hardware options to power its AI models. Recent reports suggest that the company is testing Google's Tensor Processing Units (TPUs), marking a potential shift in its computing infrastructure [1][2]. However, OpenAI has clarified that while it is in early testing with Google's TPUs, it currently has no plans to deploy them at scale [3].

The Current Hardware Landscape

OpenAI has traditionally relied heavily on Nvidia's Graphics Processing Units (GPUs) for training and running its AI models, and the company has been one of Nvidia's largest customers in the AI sector [4]. However, the AI industry's rapid growth has driven up demand for these chips, resulting in supply constraints and rising costs.

Exploring Alternatives

In response to these challenges, OpenAI has been diversifying its hardware stack:

- OpenAI's GPT-3.5 has run on Microsoft's home-grown Maia accelerators [1].

- Serving models like GPT-4 was a key use case for Microsoft's early adoption of AMD's Instinct MI300-series accelerators [1].

- OpenAI is reportedly developing its own AI chip, with the design expected to be finalized this year [3].

The Google TPU Test

The recent testing of Google's TPUs is part of this broader strategy. Google's 7th-generation Ironwood TPUs offer impressive specifications [1]:

- Up to 4.6 petaFLOPS of dense FP8 performance

- 192 GB of high-bandwidth memory (HBM)

- 7.4 TB/s of HBM bandwidth

- 1.2 TB/s of inter-chip bandwidth

These specifications put Ironwood in the same ballpark as Nvidia's Blackwell accelerators [1].
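The per-chip figures above can be turned into a quick back-of-the-envelope calculation. The sketch below is a minimal illustration that assumes the peak numbers quoted by The Register [1] and the 256- and 9,216-chip pod sizes it reports. It multiplies them out to aggregate pod figures and computes the simple roofline "ridge point" (peak FLOP/s divided by memory bandwidth): workloads that perform fewer floating-point operations per byte of HBM traffic than that ratio, such as small-batch inference, are limited by memory bandwidth rather than compute. All function names are hypothetical, and the results are theoretical peaks rather than measured performance.

```python
"""Back-of-the-envelope figures for Google's Ironwood (TPUv7) pods.

Per-chip values are the quoted peak figures; real-world throughput will be
lower. Function names are illustrative, not part of any Google API.
"""

PEAK_FP8_FLOPS = 4.6e15   # dense FP8 FLOP/s per chip (quoted peak)
HBM_BYTES = 192e9         # 192 GB of HBM per chip (decimal gigabytes)
HBM_BANDWIDTH = 7.4e12    # 7.4 TB/s of HBM bandwidth per chip, in bytes/s

POD_SIZES = (256, 9_216)  # the two pod configurations reported for TPUv7


def pod_peak_exaflops(chips: int) -> float:
    """Aggregate peak dense FP8 throughput of a pod, in exaFLOP/s."""
    return chips * PEAK_FP8_FLOPS / 1e18


def pod_hbm_terabytes(chips: int) -> float:
    """Aggregate HBM capacity of a pod, in terabytes."""
    return chips * HBM_BYTES / 1e12


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOPs per byte) at which a simple roofline
    model switches from bandwidth-bound to compute-bound."""
    return peak_flops / bandwidth


def attainable_flops(intensity: float,
                     peak_flops: float = PEAK_FP8_FLOPS,
                     bandwidth: float = HBM_BANDWIDTH) -> float:
    """Roofline estimate of attainable FLOP/s for a kernel that performs
    `intensity` FLOPs per byte moved from HBM."""
    return min(peak_flops, intensity * bandwidth)


if __name__ == "__main__":
    for chips in POD_SIZES:
        print(f"{chips:>5} chips: {pod_peak_exaflops(chips):6.2f} EFLOP/s FP8, "
              f"{pod_hbm_terabytes(chips):8.1f} TB HBM")
    ridge = ridge_point(PEAK_FP8_FLOPS, HBM_BANDWIDTH)
    print(f"Ridge point: {ridge:.0f} FLOPs/byte")
    # A hypothetical low-intensity kernel versus one above the ridge point.
    for intensity in (2.0, 1_000.0):
        share = attainable_flops(intensity) / PEAK_FP8_FLOPS
        print(f"{intensity:>7.0f} FLOPs/byte -> {share:.1%} of peak")
```

On these peak figures, a 9,216-chip pod works out to roughly 42 exaFLOP/s of dense FP8 compute and about 1.77 PB of HBM, and the ridge point sits near 620 FLOPs per byte. A kernel doing only a couple of FLOPs per byte would therefore use well under 1% of peak compute, which is why the balance between compute and memory bandwidth matters when matching hardware to training versus serving.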

Industry Implications

OpenAI's exploration of various hardware options reflects a broader trend in the AI industry. Companies are seeking to optimize their computing infrastructure for both performance and cost-efficiency. This shift could challenge Nvidia's dominance in the AI chip market [5].

Other tech giants are also developing their own AI chips:

- Google uses its TPUs to train its Gemini AI models [4].

- Amazon Web Services is building an AI supercomputer, Project Rainier, using its in-house Trainium2 chips [4].

Challenges and Considerations

Despite the potential benefits, transitioning to new hardware architectures presents challenges:

- Software optimization: OpenAI's existing software stack is primarily optimized for GPUs, and adapting it for TPUs would require significant time and resources [1].

- Scale and availability: Google may not have had enough TPUs available to meet OpenAI's large-scale computing needs [1].

- Cost considerations: OpenAI reportedly hoped TPUs would lower its inference costs [2], but the cost per token may have proved too high [1].

Future Outlook

While OpenAI continues to test various hardware options, it remains committed to its existing partnerships. The company is actively using Nvidia's GPUs and AMD's AI chips to meet its growing demand [3]. OpenAI has also signed up for Google Cloud services, though most of its computing power is expected to come from GPU servers run by CoreWeave [3].

As the AI industry continues to evolve, the hardware landscape is likely to become increasingly diverse. Companies will need to balance performance, cost, and availability as they seek the most efficient solutions for their AI computing needs.

Summarized by Navi
