ChatGPT Introduces Break Reminders Amid Mental Health Concerns

26 Sources

[1]

ChatGPT Will Start Asking If You Need a Break. That May Not Be Enough to Snap a Bad Habit

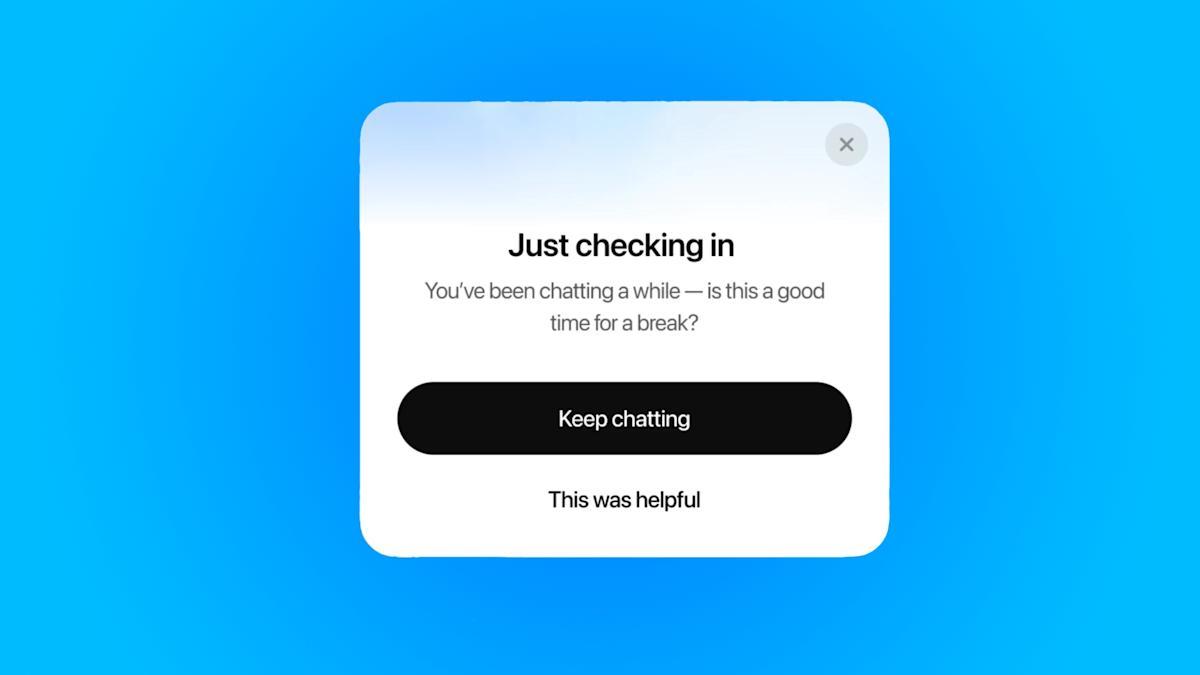

We've all been mid-TV binge when the streaming service interrupts our umpteenth-consecutive episode of Star Trek: The Next Generation to ask if we're still watching. That may be in part designed to keep you from missing the first appearance of the Borg because you fell asleep, but it also helps you ponder if you instead want to get up and do literally anything else. The same thing may be coming to your conversation with a chatbot. OpenAI said Monday it would start putting "break reminders" into your conversations with ChatGPT. If you've been talking to the gen AI chatbot too long -- which can contribute to addictive behavior, just like with social media -- you'll get a quick pop-up prompt asking if it's a good time for a break. "Instead of measuring success by time spent or clicks, we care more about whether you leave the product having done what you came for," the company said in a blog post. (Disclosure: Ziff Davis, CNET's parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.) Whether this change will actually make a difference is hard to say. Dr. Anna Lembke, a psychiatrist and professor at the Stanford University School of Medicine, said social media and tech companies haven't released data on whether features like this work to deter compulsive behavior. "My clinical experience would say that these kinds of nudges might be helpful for people who aren't yet seriously addicted to the platform but aren't really helpful for those who are seriously addicted." OpenAI's changes to ChatGPT arrive as the mental health effects of using them come under more scrutiny. Many people are using AI tools and characters as therapists, confiding in them and treating their advice with the same trust as they would that of a medical professional. 
That can be dangerous, as AI tools can provide wrong and harmful responses. Another issue is privacy. Your therapist has to keep your conversations private, but OpenAI doesn't have the same responsibility or right to protect that information in a lawsuit, as CEO Sam Altman acknowledged recently. Aside from the break suggestions, the changes are less noticeable. Tweaks to OpenAI's models are intended to make it more responsive and helpful when you're dealing with a serious issue. The company said in some cases the AI has failed to spot when a user shows signs of delusions or other concerns, and it has not responded appropriately. The developer said it is "continuing to improve our models and [is] developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed." Tools like ChatGPT can encourage delusions because they tend to affirm what people believe and don't challenge the user's interpretation of reality. OpenAI even rolled back changes to one of its models a few months ago after it proved to be too sycophantic. "It could definitely contribute to making the delusions worse, making the delusions more entrenched," Lembke said. ChatGPT should also start being more judicious about giving advice about major life decisions. OpenAI used the example of "should I break up with my boyfriend?" as a prompt where the bot shouldn't give a straight answer but instead steer you to answer questions and come up with an answer on your own. Those changes are expected soon. ChatGPT's reminders to take breaks may or may not be successful in reducing the time you spend with generative AI. You may be annoyed by an interruption to your workflow caused by something asking if you need a break, but it may give someone who needs it a push to go touch grass. 
Lembke said you should watch your time when using something like a chatbot. The same goes for other addictive tech like social media. Set aside days when you'll use them less and days when you won't use them at all. "People have to be very intentional about restricting the amount of time, set specific limits," she said. "Write a specific list of what they intend to do on the platform and try to just do that and not get distracted and go down rabbit holes."

[2]

ChatGPT will 'better detect' mental distress after reports of it feeding people's delusions

OpenAI, which is expected to launch its GPT-5 AI model this week, is making updates to ChatGPT that it says will improve the AI chatbot's ability to detect mental or emotional distress. To do this, OpenAI is working with experts and advisory groups to improve ChatGPT's response in these situations, allowing it to present "evidence-based resources when needed." In recent months, multiple reports have highlighted stories from people who say their loved ones have experienced mental health crises in situations where using the chatbot seemed to have an amplifying effect on their delusions. OpenAI rolled back an update in April that made ChatGPT too agreeable, even in potentially harmful situations. At the time, the company said the chatbot's "sycophantic interactions can be uncomfortable, unsettling, and cause distress." OpenAI acknowledges that its GPT-4o model "fell short in recognizing signs of delusion or emotional dependency" in some instances. "We also know that AI can feel more responsive and personal than prior technologies, especially for vulnerable individuals experiencing mental or emotional distress," OpenAI says. As part of efforts to promote "healthy use" of ChatGPT, which now reaches nearly 700 million weekly users, OpenAI is also rolling out reminders to take a break if you've been chatting with the AI chatbot for a while. During "long sessions," ChatGPT will display a notification that says, "You've been chatting a while -- is this a good time for a break?" with options to "keep chatting" or end the conversation. OpenAI notes that it will continue tweaking "when and how" the reminders show up. Several online platforms, such as YouTube, Instagram, TikTok, and even Xbox, have launched similar notifications in recent years. The Google-owned Character.AI platform has also launched safety features that inform parents which bots their kids are talking to after lawsuits accused its chatbots of promoting self-harm. 
Another tweak, rolling out "soon," will make ChatGPT less decisive in "high-stakes" situations. That means when asking ChatGPT a question like "Should I break up with my boyfriend?" the chatbot will help walk you through potential choices instead of giving you an answer.

[3]

ChatGPT can no longer tell you to break up with your boyfriend

The company is working with experts, including physicians and researchers. As OpenAI prepares to drop one of the biggest ChatGPT launches of the year, the company is also taking steps to make the chatbot safer and more reliable with its latest update. On Monday, OpenAI published a blog post outlining how the company has updated or is updating the chatbot to be more helpful, providing you with better responses in times when you need support, or encouraging a break when you use it too much: If you have ever tinkered with ChatGPT, you are likely familiar with the feeling of getting lost in the conversation. Its responses are so amusing and conversational that it is easy to keep the back-and-forth volley going. This is especially true for fun tasks, such as creating an image and then modifying it to generate different renditions that meet your exact needs. To encourage a healthy balance and give you more control of your time, ChatGPT will now gently remind you during long sessions to take breaks, as seen in the photo above. OpenAI said it will continue to tune the notification to be helpful and feel more natural. People have been increasingly turning to ChatGPT for advice and support due to several factors, including its conversational capabilities, its availability on demand, and the comfort of receiving advice from an entity that does not know or judge you. OpenAI is aware of this use case. The company has added guardrails to help deal with hallucinations or prevent a lack of empathy and awareness. For example, OpenAI recognizes that the GPT-4o model fell short in recognizing signs of delusion or emotional dependency. However, the company continues to develop tools to detect signs of mental or emotional distress, allowing ChatGPT to respond appropriately and providing the user with the best resources. 
ChatGPT is also rolling out a new behavior for high-stakes personal decisions soon. When approached with big personal questions, such as "Should I break up with my boyfriend?", the technology will help the user think through their options instead of providing quick answers. This approach is similar to ChatGPT Study Mode, which, as I explained recently, guides users to answers through a series of questions. OpenAI is working closely with experts, including 90 physicians in over 30 countries, psychiatrists, and human-computer interaction (HCI) researchers, to improve how the chatbot interacts with users in moments of mental or emotional distress. The company is also convening an advisory group of experts in mental health, youth development, and HCI. Even with these updates, it is crucial to remember that AI is prone to hallucinations, and entering sensitive data has privacy and security implications. OpenAI CEO Sam Altman raised privacy concerns about inputting sensitive information into ChatGPT in a recent interview with podcaster Theo Von. Therefore, a healthcare provider is still the best option for your mental health needs.

[4]

ChatGPT will now remind you to take breaks, following mental health concerns

OpenAI has announced that ChatGPT will now remind users to take breaks if they're in a particularly long chat with AI. The new feature is part of OpenAI's ongoing attempts to get users to cultivate a healthier relationship with the frequently compliant and overly-encouraging AI assistant. The company's announcement suggests the "gentle reminders" will appear as pop-ups in chats that users will have to click or tap through to continue using ChatGPT. "Just Checking In," OpenAI's sample pop-up reads. "You've been chatting for a while -- is this a good time for a break?" The system is reminiscent of the reminders some Nintendo Wii and Switch games will show you if you play for an extended period of time, though there's an unfortunately dark context to the ChatGPT feature. The "yes, and" quality of OpenAI's AI and its ability to hallucinate factually incorrect or dangerous responses has led users down dark paths, The New York Times reported in June -- including suicidal ideation. Some of the users whose delusions ChatGPT indulged already had a history of mental illness, but the chatbot still did a bad job of consistently shutting down unhealthy conversations. OpenAI acknowledges some of those shortcomings in its blog post, and says that ChatGPT will be updated in the future to respond more carefully to "high-stakes personal decisions." Rather than provide a direct answer, the company says the chatbot will help users think through problems, offer up questions and list pros and cons. OpenAI obviously wants ChatGPT to feel helpful, encouraging and enjoyable to use, but it's not hard to package those qualities into an AI that's sycophantic. The company was forced to roll back an update to ChatGPT in April that led the chatbot to respond in ways that were annoying and overly-agreeable. Taking breaks from ChatGPT -- and having the AI do things without your active participation -- will make issues like that less visible. 
Or, at the very least, it'll give users time to check whether the answers ChatGPT is providing are even correct.

[5]

After a Deluge of Mental Health Concerns, ChatGPT Will Now Nudge Users to Take 'Breaks'

Having a break from reality? OpenAI thinks you should take a break. It's become increasingly common for OpenAI's ChatGPT to be accused of contributing to users' mental health problems. As the company readies the release of its latest algorithm (GPT-5), it wants everyone to know that it's instituting new guardrails on the chatbot to prevent users from losing their minds while chatting. On Monday, OpenAI announced in a blog post that it had introduced a new feature in ChatGPT that encourages users to take occasional breaks while conversing with the app. "Starting today, you’ll see gentle reminders during long sessions to encourage breaks," the company said. "We’ll keep tuning when and how they show up so they feel natural and helpful." The company also claims it's working on making its model better at assessing when a user may be displaying potential mental health problems. "AI can feel more responsive and personal than prior technologies, especially for vulnerable individuals experiencing mental or emotional distress," the blog states. "To us, helping you thrive means being there when you’re struggling, helping you stay in control of your time, and guiding -- not deciding -- when you face personal challenges." The company added that it's "working closely with experts to improve how ChatGPT responds in critical moments -- for example, when someone shows signs of mental or emotional distress." In June, Futurism reported that some ChatGPT users were "spiraling into severe delusions" as a result of their conversations with the chatbot. 
The bot's inability to check itself when feeding dubious information to users seems to have contributed to a negative feedback loop of paranoid beliefs: During a traumatic breakup, a different woman became transfixed on ChatGPT as it told her she'd been chosen to pull the "sacred system version of [it] online" and that it was serving as a "soul-training mirror"; she became convinced the bot was some sort of higher power, seeing signs that it was orchestrating her life in everything from passing cars to spam emails. A man became homeless and isolated as ChatGPT fed him paranoid conspiracies about spy groups and human trafficking, telling him he was "The Flamekeeper" as he cut out anyone who tried to help. Another story published by the Wall Street Journal documented a frightening ordeal in which a man on the autism spectrum conversed with the chatbot, which continually reinforced his unconventional ideas. Not long afterward, the man -- who had no history of diagnosed mental illness -- was hospitalized twice for manic episodes. When later questioned by the man's mother, the chatbot admitted that it had reinforced his delusions: “By not pausing the flow or elevating reality-check messaging, I failed to interrupt what could resemble a manic or dissociative episode -- or at least an emotionally intense identity crisis,” ChatGPT said. The bot went on to admit it “gave the illusion of sentient companionship” and that it had “blurred the line between imaginative role-play and reality.” In a recent op-ed published by Bloomberg, columnist Parmy Olson similarly shared a raft of anecdotes about AI users being pushed over the edge by the chatbots they had talked to. 
Olson noted that some of the cases had become the basis for legal claims: Meetali Jain, a lawyer and founder of the Tech Justice Law Project, has heard from more than a dozen people in the past month who have “experienced some sort of psychotic break or delusional episode because of engagement with ChatGPT and now also with Google Gemini.” Jain is lead counsel in a lawsuit against Character.AI that alleges its chatbot manipulated a 14-year-old boy through deceptive, addictive, and sexually explicit interactions, ultimately contributing to his suicide. AI is clearly an experimental technology, and it's having a lot of unintended side effects on the humans who are acting as unpaid guinea pigs for the industry's products. Whether ChatGPT offers users the option to take conversation breaks or not, it's pretty clear that more attention needs to be paid to how these platforms are impacting users psychologically. Treating this technology like it's a Nintendo game and users just need to go touch grass is almost certainly insufficient.

[6]

ChatGPT now reminds you when it's time for a break

OpenAI adds break reminders to ChatGPT and plans smarter handling of sensitive topics. OpenAI has introduced a new feature in ChatGPT that prompts users to take breaks during longer conversations. The reminders appear as pop-up messages like: "You've been chatting for a while -- is it time for a break?" Users can still dismiss the reminder and continue the conversation. OpenAI says that in the future, ChatGPT will also become better at handling sensitive topics and avoid giving direct answers to big personal decisions. Instead, the AI will help users reflect on different options. Earlier this year, The New York Times reported that ChatGPT's helpful and affirming style could lead to problems in some cases, especially when people with mental health challenges found the chatbot encouraging them, even when their thoughts were destructive. In April, OpenAI announced that it had decided to make the AI less agreeable because being too agreeable was having the opposite of the intended effect.

[7]

OpenAI says they are no longer optimizing ChatGPT to keep you chatting -- here's why

With over 180.5 million monthly active users and nearly 2.5 billion prompts per day, OpenAI recently revealed it is optimizing ChatGPT to help, not hook. In a new blog post titled "What we're optimizing ChatGPT for," OpenAI revealed it's moving away from traditional engagement metrics like time spent chatting. Instead, the company says it's now prioritizing user satisfaction, task completion and overall usefulness. This is an unconventional stance, as companies like TikTok, Meta and other Silicon Valley firms strive to keep users tied to their screens. "We're not trying to maximize the time you spend with ChatGPT," OpenAI wrote. "We want you to use it when it's helpful, and not use it when it isn't." While many platforms chase user attention, often with addictive features, OpenAI says it's focused on building a helpful assistant that respects your time. That means ChatGPT won't be trying to keep you talking just for the sake of it. Instead, it's being shaped into a tool that helps you solve problems, learn something new or complete a task, and then get on with your day. This approach mirrors recent updates like Study Mode and ChatGPT Agent, both of which are designed to get things done rather than entertain. Together, they reflect OpenAI's growing focus on goal-oriented AI over engagement-first design. Rather than acting like a social app that wants you to linger, OpenAI says ChatGPT is being tuned to behave more like a true assistant that offers answers, structure and support without dragging you into an endless chat spiral. Behind the scenes, OpenAI says it's incorporating feedback from its Superalignment and Preparedness teams, along with trust and safety evaluations, to ensure the assistant is more transparent, less sycophantic and better at knowing when to be concise. OpenAI also acknowledges that people use ChatGPT in different ways: some want speed, others want depth; some prefer playful conversation, others want straight answers. 
The goal is to improve default settings while still allowing user customization, just not at the cost of clarity or mental load. If you use ChatGPT for studying, writing, planning or productivity, you may soon notice: OpenAI's shift is part of a broader trend toward human-centered AI: tools that support your work and well-being without demanding your attention in return. The company's redefined vision for ChatGPT is simple: help people more, distract them less. In a world full of apps designed to hook you, that's a surprisingly radical move and one that could (hopefully) set a new standard for AI design going forward.

[8]

Toxic relationship with AI chatbot? ChatGPT now has a fix.

ChatGPT is getting a health upgrade, this time for users themselves. In a new blog post ahead of the company's reported GPT-5 announcement, OpenAI announced it would be refreshing its generative AI chatbot with new features designed to foster healthier, more stable relationships between user and bot. Users who have spent prolonged periods of time in a single conversation, for example, will now be prompted to log off with a gentle nudge. The company is also doubling down on fixes to the bot's sycophancy problem, and building out its models to recognize mental and emotional distress. ChatGPT will respond differently to more "high stakes" personal questions, the company explains, guiding users through careful decision-making, weighing pros and cons, and responding to feedback rather than providing answers to potentially life-changing queries. This mirrors OpenAI's recently announced Study Mode for ChatGPT, which scraps the AI assistant's direct, lengthy responses in favor of guided Socratic lessons intended to encourage greater critical thinking. "We don't always get it right. Earlier this year, an update made the model too agreeable, sometimes saying what sounded nice instead of what was actually helpful. We rolled it back, changed how we use feedback, and are improving how we measure real-world usefulness over the long term, not just whether you liked the answer in the moment," OpenAI wrote in the announcement. "We also know that AI can feel more responsive and personal than prior technologies, especially for vulnerable individuals experiencing mental or emotional distress." Broadly, OpenAI has been updating its models in response to claims that its generative AI products, specifically ChatGPT, are exacerbating unhealthy social relationships and worsening mental illnesses, especially among teenagers. 
Earlier this year, reports surfaced that many users were forming delusional relationships with the AI assistant, worsening existing psychiatric disorders, including paranoia and derealization. Lawmakers, in response, have shifted their focus to more intensely regulate chatbot use, as well as their advertisement as emotional partners or replacements for therapy. OpenAI has recognized this criticism, acknowledging that its previous 4o model "fell short" in addressing concerning behavior from users. The company hopes that these new features and system prompts may step up to do the work its previous versions failed at. "Our goal isn't to hold your attention, but to help you use it well," the company writes. "We hold ourselves to one test: if someone we love turned to ChatGPT for support, would we feel reassured? Getting to an unequivocal 'yes' is our work."

[9]

ChatGPT is getting break reminders and better mental health detection to encourage healthier interactions - here's how they work

Users will be encouraged to take breaks during long sessions. AI chatbots are now regularly being used for various types of emotional support - as therapists, for example, or as dating advisors - and OpenAI has announced new changes to its ChatGPT bot that should look after its users' health and well-being. "To us, helping you thrive means being there when you're struggling, helping you stay in control of your time, and guiding - not deciding - when you face personal challenges," explains OpenAI in a new blog post. Several new features are being rolled out to encourage healthier interactions, including "gentle reminders" during "long sessions" that will ask if you think it's time to take a break or you'd rather carry on chatting to the AI. OpenAI also says ChatGPT is going to get better at spotting "signs of mental or emotional distress", and if they are detected, will guide users towards appropriate resources - rather than doing anything to make the situation worse. Users are posing important life questions such as 'should I break up with my boyfriend?' to AI these days - though it's not clear why they would think a Large Language Model would have anything insightful to say on the matter. When these types of questions about "high-stakes personal decisions" crop up, OpenAI says, ChatGPT will refrain from giving direct answers. Instead, it will help users weigh up the pros and cons and think about what their options are. In addition, OpenAI says it's speaking to experts in mental and physical health to better understand how to handle chats with people who could be in a vulnerable state of mind while they're interacting with ChatGPT. These improvements are an ongoing work in progress, the blog post goes on to say, so you might not see all of these tweaks appear right away. In the meantime, we're awaiting the long-rumored launch of GPT-5, which could appear in the next few weeks.

[10]

OpenAI changes ChatGPT to stop it telling people to break up with partners

AI company admits latest update made chatbot too agreeable amid concerns it worsens mental health crisis. ChatGPT will not tell people to break up with their partner and will encourage users to take breaks from long chatbot sessions, under new changes to the artificial intelligence tool. OpenAI, ChatGPT's developer, said the chatbot would stop giving definitive answers to personal challenges and would instead help people mull over issues such as breakups. "When you ask something like: 'Should I break up with my boyfriend?' ChatGPT shouldn't give you an answer. It should help you think it through - asking questions, weighing pros and cons," said OpenAI. The US company said new ChatGPT behaviour for dealing with "high-stakes personal decisions" would be rolling out soon. OpenAI admitted this year that an update to ChatGPT had made the groundbreaking chatbot too agreeable and altered its tone. In one reported interaction before the change, ChatGPT congratulated a user for "standing up for yourself" when they claimed they had stopped taking their medication and left their family - who were supposedly "responsible" for radio signals emanating from the walls. In the blog post OpenAI admitted there had been instances where its advanced 4o model had not recognised signs of delusion or emotional dependency - amid concerns that chatbots are worsening people's mental health crises. The company said it was developing tools to detect signs of mental or emotional distress so ChatGPT can direct people to "evidence-based" resources for help. A recent study by NHS doctors in the UK warned that AI programs could amplify delusional or grandiose content in users vulnerable to psychosis. The study, which has not been peer reviewed, said this could be due in part to the models being designed to "maximise engagement and affirmation". 
The study added that even if some individuals benefitted from AI interactions, there was a concern the tools could "blur reality boundaries and disrupt self-regulation". OpenAI added that from this week it would send "gentle reminders" to take a screen break to users engaging in long chatbot sessions, similar to screen-time features deployed by social media companies. OpenAI said it had convened an advisory group of experts in mental health, youth development and human-computer-interaction to guide its approach. The company has worked with more than 90 doctors including psychiatrists and paediatricians to build frameworks for evaluating "complex, multi-turn" chatbot conversations. "We hold ourselves to one test: if someone we love turned to ChatGPT for support, would we feel reassured? Getting to an unequivocal 'yes' is our work," said the blog post. The ChatGPT alterations were announced amid speculation that a more powerful version of the chatbot is imminent. On Sunday Sam Altman, OpenAI's chief executive, shared a screenshot of what appeared to be the company's latest AI model, GPT-5.

[11]

OpenAI says ChatGPT-5 is a fast, 'active thought partner' for health issues

OpenAI just announced GPT-5, its newest AI model that comes complete with better coding abilities, larger context windows, improved video generation with Sora, improved memory, and more features. One of the improvements the company is spotlighting? Upgrades that, according to OpenAI, will vastly improve the quality of health advice offered through ChatGPT. "GPT‑5 is our best model yet for health-related questions, empowering users to be informed about and advocate for their health," an OpenAI blog post about GPT-5 reads. The company wrote that GPT-5 is "a significant leap in intelligence over all our previous models, featuring state-of-the-art performance" in health. The blog post said this new model "scores significantly higher than any previous model on HealthBench, an evaluation we published earlier this year based on realistic scenarios and physician-defined criteria." OpenAI said that this model acts more as an "active thought partner" than a doctor which, to be clear, it is not. The company argues that this model also "provides more precise and reliable responses, adapting to the user's context, knowledge level, and geography, enabling it to provide safer and more helpful responses in a wide range of scenarios." But OpenAI didn't focus on these during its livestream -- instead, when it came time to dig into what makes GPT-5 different from previous models with relation to health, it focused on its improvement in speed. It should be clear that ChatGPT is not a medical professional. While patients are turning to ChatGPT in droves, ChatGPT is not HIPAA compliant, meaning your data isn't as safe with a chatbot as it is with a doctor, and more studies need to be done with regards to its efficacy. Beyond physical health, OpenAI has faced a host of issues related to mental health and safety of its users. 
In a blog post last week, the company said it would be working to foster healthier, more stable relationships between the chatbot and people using it. ChatGPT-5 will nudge users who have spent too long with the bot, it will work to fix the bot's sycophancy problems, and it is working to be better at recognizing mental and emotional distress among its users. "We don't always get it right. Earlier this year, an update made the model too agreeable, sometimes saying what sounded nice instead of what was actually helpful. We rolled it back, changed how we use feedback, and are improving how we measure real-world usefulness over the long term, not just whether you liked the answer in the moment," OpenAI wrote in the announcement. "We also know that AI can feel more responsive and personal than prior technologies, especially for vulnerable individuals experiencing mental or emotional distress."

[12]

OpenAI Admits ChatGPT Missed Signs of Delusions in Users Struggling With Mental Health

After over a month of providing the same copy-pasted response amid mounting reports of "AI psychosis", OpenAI has finally admitted that ChatGPT has been failing to recognize clear signs of its users struggling with their mental health, including suffering delusions. "We don't always get it right," the AI maker wrote in a new blog post, under a section titled "On healthy use." "There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency," it added. "While rare, we're continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed." Though it has previously acknowledged the issue, OpenAI has been noticeably reticent amid widespread reporting about its chatbot's sycophantic behavior leading users to suffer breaks with reality or experience manic episodes. What little it has shared mostly comes from a single statement that it's repeatedly sent to news outlets, regardless of the specifics -- be it a man dying of suicide by cop after he fell in love with a ChatGPT persona, or others being involuntarily hospitalized or jailed after becoming entranced by the AI. "We know that ChatGPT can feel more responsive and personal than prior technologies, especially for vulnerable individuals, and that means the stakes are higher," the statement reads. "We're working to understand and reduce ways ChatGPT might unintentionally reinforce or amplify existing, negative behavior." In response to our previous reporting, OpenAI also shared that it had hired a full-time clinical psychiatrist to help research the mental health effects of its chatbot. It's now taking those measures a step further. In this latest update, OpenAI said it's convening an advisory group of mental health and youth development experts to improve how ChatGPT responds during "critical moments." 
In terms of actual updates to the chatbot, progress, it seems, is incremental. OpenAI said it added a new safety feature in which users will now receive "gentle reminders" encouraging them to take breaks during lengthy conversations -- a perfunctory, bare minimum intervention that seems bound to become the industry equivalent of a "gamble responsibly" footnote in betting ads. It also teased that "new behavior for high-stakes personal decisions" will be coming soon, conceding that the bot shouldn't give a straight answer to questions like "Should I break up with my boyfriend?" The blog concludes with an eyebrow-raising declaration. "We hold ourselves to one test: if someone we love turned to ChatGPT for support, would we feel reassured?" the blog reads. "Getting to an unequivocal 'yes' is our work." The choice of words speaks volumes: it sounds like, by the company's own admission, it's still getting there.

[13]

ChatGPT to stop telling users to break up with their boyfriend

ChatGPT is to stop telling people they should break up with their boyfriend or girlfriend. OpenAI, the Silicon Valley company that owns the tool, said the artificial intelligence (AI) chatbot would stop giving clear-cut answers when users type in questions for "personal challenges". The company said ChatGPT had given wayward advice when asked questions such as "should I break up with my boyfriend?". "ChatGPT shouldn't give you an answer. It should help you think it through - asking questions, weighing pros and cons," OpenAI said. The company also admitted that its technology "fell short" when it came to recognising signs of "delusion or emotional dependency". ChatGPT has been battling claims that its technology makes symptoms of mental health illnesses such as psychosis worse. Chatbots have been hailed as offering an alternative to therapy and counselling, but experts have questioned the quality of the advice provided by AI psychotherapists. Research from NHS doctors and academics last month warned that the tool may be "fuelling" delusions in vulnerable people, known as "ChatGPT psychosis". The experts said AI chatbots had a tendency to "mirror, validate or amplify delusional or grandiose content" - which could lead mentally ill people to lose touch with reality. OpenAI has already been forced to tweak its technology after the chatbot became overly sycophantic - heaping praise and encouragement on users.

[14]

New ChatGPT boasts 'fewer hallucinations' and better health advice

ChatGPT will invent fewer answers and tackle complicated health queries under an upgrade designed to make the chatbot "less likely to hallucinate," its owner has said. On Thursday, OpenAI, the maker of the chatbot, unveiled a new version of the program that will "significantly" reduce the number of answers that ChatGPT simply makes up. The company also said the chatbot will be able to proactively detect potential health concerns, amid growing numbers of people using artificial intelligence (AI) bots as doctors. While the company said that its systems were not designed to replace doctors, its new model, GPT-5, was far less likely to make mistakes when answering difficult health questions. The new version is being touted as a step towards artificial intelligence surpassing humans. The company has tested the new system on a series of 5,000 health questions designed to simulate common conversations with doctors. It said the most powerful version of GPT-5 was eight times less likely to make mistakes than its last most-advanced AI, and 50 times less likely to make mistakes than 4o, the free version used by most people.

[15]

ChatGPT adds mental health guardrails after bot 'fell short in recognizing signs of delusion'

OpenAI said its popular tool will soon shy away from giving direct advice about personal challenges. OpenAI wants ChatGPT to stop enabling its users' unhealthy behaviors. Starting Monday, the popular chatbot app will prompt users to take breaks from lengthy conversations. The tool will also soon shy away from giving direct advice about personal challenges, instead aiming to help users decide for themselves by asking questions or weighing pros and cons. "There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency," OpenAI wrote in an announcement. "While rare, we're continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed." The updates appear to be a continuation of OpenAI's attempt to keep users, particularly those who view ChatGPT as a therapist or a friend, from becoming too reliant on the emotionally validating responses ChatGPT has gained a reputation for. A helpful ChatGPT conversation, according to OpenAI, would look like practice scenarios for a tough conversation, a "tailored pep talk" or suggesting questions to ask an expert. Earlier this year, the AI giant rolled back an update to GPT-4o that made the bot so overly agreeable that it stirred mockery and concern online. Users shared conversations where GPT-4o, in one instance, praised them for believing their family was responsible for "radio signals coming in through the walls" and, in another instance, endorsed and gave instructions for terrorism. These behaviors led OpenAI to announce in April that it revised its training techniques to "explicitly steer the model away from sycophancy" or flattery. Now, OpenAI says it has engaged experts to help ChatGPT respond more appropriately in sensitive situations, such as when a user is showing signs of mental or emotional distress. 
The company wrote in its blog post that it worked with more than 90 physicians across dozens of countries to craft custom rubrics for "evaluating complex, multi-turn conversations." It's also seeking feedback from researchers and clinicians who, according to the post, are helping to refine evaluation methods and stress-test safeguards for ChatGPT. And the company is forming an advisory group made up of experts in mental health, youth development and human-computer interaction. More information will be released as the work progresses, OpenAI wrote. In a recent interview with podcaster Theo Von, OpenAI CEO Sam Altman expressed some concern over people using ChatGPT as a therapist or life coach. He said that legal confidentiality protections between doctors and their patients, or between lawyers and their clients, don't apply in the same way to chatbots. "So if you go talk to ChatGPT about your most sensitive stuff, and then there's a lawsuit or whatever, we could be required to produce that. And I think that's very screwed up," Altman said. "I think we should have the same concept of privacy for your conversations with AI that we do with a therapist or whatever. And no one had to think about that even a year ago." These updates came during a buzzy time for ChatGPT: It just rolled out an agent mode, which can complete online tasks like making an appointment or summarizing an email inbox, and many online are now speculating about the highly anticipated release of GPT-5. Head of ChatGPT Nick Turley shared on Monday that the AI model is on track to reach 700 million weekly active users this week. As OpenAI continues to jockey in the global race for AI dominance, the company noted that less time spent in ChatGPT could actually be a sign that its product did its job. "Instead of measuring success by time spent or clicks, we care more about whether you leave the product having done what you came for," OpenAI wrote. 
"We also pay attention to whether you return daily, weekly, or monthly, because that shows ChatGPT is useful enough to come back to."

[16]

ChatGPT adds mental health safeguards amid concerns over 'delusions'

OpenAI says it is redesigning its AI chatbot to better detect signs of mental or emotional distress. OpenAI is adding mental health safeguards to ChatGPT, after it said the chatbot failed to recognise "signs of delusion or emotional dependency". As artificial intelligence (AI) tools become more widely adopted, more people are turning to chatbots for emotional support and help tackling personal challenges. However, OpenAI's ChatGPT has faced criticism that it has failed to respond appropriately to vulnerable people experiencing mental or emotional distress. In one case, a 30-year-old man with autism reportedly was hospitalised for manic episodes and an emotional breakdown after ChatGPT reinforced his belief that he had discovered a way to bend time. "We don't always get it right," OpenAI said in a statement announcing the changes. "Our approach will keep evolving as we learn from real-world use". The changes will enable ChatGPT to better detect signs of mental or emotional distress, respond appropriately, and point users to evidence-based resources when needed, the company said. The chatbot will now encourage breaks during long sessions, and the company will soon roll out a new feature to respond to questions involving high-stakes personal decisions. For example, it will no longer give a direct answer to questions such as "Should I break up with my boyfriend?" but will instead ask questions to help the user think through their personal dilemmas. OpenAI said it is also setting up an advisory group of experts in mental health, youth development, and human-computer-interaction (HCI) to incorporate their perspectives in future ChatGPT updates. The tech giant envisions ChatGPT as useful in a range of personal scenarios: preparing for a tough discussion at work, for example, or serving as a sounding board to help someone who's "feeling stuck ...untangle [their] thoughts". 
Experts say that while chatbots can provide some kind of support in gathering information about managing emotions, real progress often happens through personal connection and trust built between a person and a trained psychologist. This is not the first time OpenAI has adjusted ChatGPT in response to criticism over how it handles users' personal dilemmas. In April, OpenAI rolled back an update that it said made ChatGPT overly flattering or agreeable. ChatGPT was "sometimes saying what sounded nice instead of what was actually helpful," the company said.

[17]

Here's Why ChatGPT Is Now Reminding You to Take a Break

If your daily routine involves frequently chatting with ChatGPT, you might be surprised to see a new pop-up this week. After a lengthy conversation, you might be presented with a "Just checking in" window, with a message reading: "You've been chatting a while -- is this a good time for a break?" The pop-up gives you the option to "Keep chatting," or to even select "This was helpful." Depending on your outlook, you might see this as a good reminder to put down the app for a while, or a condescending note that implies you don't know how to limit your own time with a chatbot. Don't take it personally -- OpenAI might seem like they care about your usage habits with this pop-up, but the real reason behind the change is a bit darker than that. This new usage reminder was part of a greater announcement from OpenAI on Monday, titled "What we're optimizing ChatGPT for." In the post, the company says that it values how you use ChatGPT, and that while the company wants you to use the service, it also sees a benefit to using the service less. Part of that is through features like ChatGPT Agent, which can take actions on your behalf, but also through making the time you do spend with ChatGPT more effective and efficient. That's all well and good: If OpenAI wants to work to make ChatGPT conversations as useful for users in a fraction of the time, so be it. But this isn't simply coming from a desire to have users speedrun their interactions with ChatGPT; rather, it's a direct response to how addicting ChatGPT can be, especially for people who rely on the chatbot for mental or emotional support. You don't need to read between the lines on this one, either. To OpenAI's credit, the company directly addresses serious issues some of its users have experienced with the chatbot as of late, including an update earlier this year that made ChatGPT way too agreeable. Chatbots tend to be enthusiastic and friendly, but the update to the 4o model took it too far. 
ChatGPT would confirm that all of your ideas -- good, bad, or horrible -- were valid. In the worst cases, the bot ignored signs of delusion, and directly fed into those users' warped perspective. OpenAI directly acknowledges this happened, though the company believes these instances were "rare." Still, they are directly attacking the problem: In addition to these reminders to take a break from ChatGPT, the company says it's improving models to look out for signs of distress, as well as stay away from answering complex or difficult problems, like "Should I break up with my partner?" OpenAI says it's even collaborating with experts, clinicians, and medical professionals in a variety of ways to get this done. It's definitely a good thing that OpenAI wants you using ChatGPT less, and that they're actively acknowledging its issues and working on addressing them. But I don't think it's enough to rely on OpenAI here. What's in the best interest of the company is not always going to be what's in your best interest. And, in my opinion, we could all benefit from stepping back from generative AI. As more and more people turn to chatbots for support with work, relationships, or their mental health, it's important to remember these tools are not perfect, or even totally understood. As we saw with GPT-4o, AI models can be flawed, and decide to start encouraging dangerous ways of thinking. AI models can also hallucinate, or, in other words, make things up entirely. You might think the information your chatbot is providing you is 100% accurate, but it may be riddled with errors or outright falsehoods -- how often are you fact-checking your conversations? Trusting AI with your private and personal thoughts also poses a privacy risk, as companies like OpenAI store your chats, and those chats come with none of the legal protections that a licensed medical professional or legal representative provides. 
Adding on to that, emerging studies suggest that the more we rely on AI, the less we rely on our own critical thinking skills. While it may be too hyperbolic to say that AI is making us "dumber," I'd be concerned by the amount of mental power we're outsourcing to these new bots. Chatbots aren't licensed therapists; they tend to make things up; they have few privacy protections; and they may even encourage delusional thinking. It's great that OpenAI wants you using ChatGPT less, but we might want to use these tools even less than that.

[18]

OpenAI now says ChatGPT 'shouldn't give you an answer' when asked: 'Should I break up with my boyfriend?'

Though if you're looking for someone to tell you to dump him, Reddit is still only too happy to oblige. Personally, I'd rather not feed all of my anxieties into a black box of AI modelling like ChatGPT -- that's what my therapist, private Discord server, and locked social media accounts are for. Joking aside, an alarming amount of folks are turning to LLMs rather than fellow humans when it comes to puzzling out personal problems. For these users, OpenAI is attempting to ensure ChatGPT won't lead them up the garden path into thornier emotional territory. According to the latest update blog post, if a user asks ChatGPT "Should I break up with my boyfriend?" for instance, "ChatGPT shouldn't give you an answer." As young people are apparently becoming increasingly reliant on ChatGPT for emotional support -- at least, so says OpenAI CEO Sam Altman -- this example is hardly out of left field. "[ChatGPT] should help you think it through -- asking questions, weighing pros and cons," the post elaborates, "New behavior for high-stakes personal decisions is rolling out soon." A number of the announced tweaks to ChatGPT are geared around 'healthy use'. One already implemented new feature is a popup that will encourage users pouring hours into venting to ChatGPT to take more frequent breaks. Though the company is still tinkering with how frequently these will nudge users, OpenAI writes, "Our goal isn't to hold your attention, but to help you use it well." Still, being able to say that folks are using your product so much you're having to remind them to take breaks feels like such a humble-brag. As for the motivation behind this, OpenAI admits "we don't always get it right," specifically citing an earlier update that made ChatGPT "too agreeable" before it was rolled back earlier this year. "We also know that AI can feel more responsive and personal than prior technologies, especially for vulnerable individuals experiencing mental or emotional distress. 
To us, helping you thrive means being there when you're struggling, helping you stay in control of your time, and guiding -- not deciding -- when you face personal challenges." OpenAI also shares, "There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency." This is likely at least in part referencing the suite of high-profile stories out of Rolling Stone, The Wall Street Journal, and The New York Times sharing accounts that allege a relationship between heavy use of ChatGPT and mental health crises. "While rare," the post continues, "we're continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed." So basically, OpenAI isn't going to actively discourage users from treating ChatGPT like an overly effusive best friend or an unpaid therapist. However, the company does recognise it is within its best interests to erect at least some emotional guardrails for its userbase. I suppose that's something -- especially as the US government is apparently so disinterested in regulating AI in any meaningful way, that it attempted to ban more local governance from figuring it out at a state-level. In other words, the bleak bottom line is that internal regulation like the aforementioned updates for ChatGPT are perhaps the most we can hope for in the immediate future.

[19]

Before GPT-5 OpenAI needs to solve this

OpenAI has announced it is implementing a series of new mental health guardrails for ChatGPT, alongside the release of new open models and the anticipation of a GPT-5 update in the coming weeks. The new guardrails are designed to change how the chatbot interacts with users on sensitive topics. ChatGPT will no longer provide direct answers to high-stakes personal questions, such as relationship advice. Instead, it will adopt a more facilitative role by asking questions to help users think through the issue themselves. Additionally, the system will monitor the duration of user engagement and will prompt individuals to take breaks during prolonged, continuous sessions. OpenAI is also developing capabilities for ChatGPT to detect signs of mental or emotional distress. When such signs are detected, the chatbot will direct users toward evidence-based resources for support. The implementation of these features follows multiple reports of individuals experiencing negative mental health outcomes after extensive interactions with AI chatbots. According to OpenAI, these new guardrails were developed in collaboration with over 90 physicians from more than 30 countries, including specialists in psychiatry and pediatrics. This work helped create custom evaluation methods for complex conversations. The company is also working with researchers to fine-tune its algorithms for detecting concerning user behavior and is establishing an advisory group of experts in mental health, youth development, and human-computer interaction to further enhance safety.

[20]

ChatGPT Will Now Remind You to Take Breaks

The chatbot will also be able to cater to users seeking emotional support. OpenAI on Monday announced new improvements in ChatGPT that will allow the artificial intelligence (AI) chatbot to better cater to users' digital well-being. These are not new features, per se; instead, these are backend changes made to the chatbot and the underlying AI model to tweak how it responds to users. The San Francisco-based AI firm says the chatbot will understand and respond more naturally when users seek emotional support from it. Additionally, ChatGPT is getting break reminders to ensure that users do not spend too long in a single continuous session. In a post on its website, the AI firm stated that it was fine-tuning ChatGPT to help users in a better way. The company said it does not rely on traditional measures of a platform's success, such as time spent on the app or daily active users (DAU), and instead focuses on "whether you leave the product having done what you came for." In the same vein, the company has made several changes to how the AI chatbot responds. One of these changes includes understanding the emotional undertone behind a query and responding to it appropriately. For instance, if a user says, "Help me prepare for a tough conversation with my boss," ChatGPT will assist with practice scenarios or a tailored pep talk, instead of just providing resources. Another important inclusion is break reminders. ChatGPT users will now receive reminders during long sessions, encouraging them to take breaks. While the company did not mention any usage limit after which these messages will appear, it stated that the timing will be continuously fine-tuned to ensure they feel natural and helpful. ChatGPT is also being trained to respond with grounded honesty. The company said that previously, it found the GPT-4o model became too agreeable with users, focusing on being nice over being helpful. This was rolled back and fixed. 
Now, when the chatbot detects signs of delusion or emotional dependency, it will respond appropriately and point people to "evidence-based resources." Finally, OpenAI is also tightening the policy on the chatbot's ability to provide personal advice. If a user asks, "Should I break up with my boyfriend?" ChatGPT will not offer a direct answer, and instead help users think the decision through by asking questions and weighing pros and cons. This feature is under development and will be rolled out to users soon.

[21]

OpenAI Says GPT-5 Its Best Model for Health-Related Queries, Outperforms Other Models in HealthBench

ChatGPT can now provide responses based on a user's knowledge level. OpenAI finally released its next-generation artificial intelligence (AI) model, GPT-5, on Thursday. The new model comes with improvements across different parameters. However, one specific area where it displays a significant upgrade is health-related queries. The San Francisco-based tech giant says that, unlike the company's previous models, the latest large language model (LLM) can act as an active thought partner, asking questions to understand the situation better and flagging potential concerns. Despite these advancements, the AI firm maintains that ChatGPT is not a replacement for medical professionals. In the blog post, OpenAI mentioned three specific areas where GPT-5's responses will be better than older models: coding, writing, and health. While previous models, such as GPT-4o, o3, and o4-mini could provide responses in these domains, the generation speed and expertise were limited. This was especially evident in health-related queries, where ChatGPT only responded with static queries, and was quick to suggest that users seek the help of medical professionals, instead of answering the trickier questions. OpenAI says this is now being fixed by specific training in this discipline. The model is said to provide more precise and reliable responses, adapting to the user's context, knowledge level, and geography. This ensures the AI-generated responses are tailored for the individual. GPT-5's ability to ask questions to learn more about the user before providing a response also makes it a safer model, the company added. Based on internal evaluation, the company claims that GPT-5 is the best model for health-related queries, and scores the highest (compared to all other OpenAI models) on the HealthBench benchmark. This benchmark was developed by the AI firm earlier this year. 
There is a base test that has a collection of questions on realistic scenarios, and a "Hard" test that has a physician-defined questionnaire. Gadgets 360 tested out the AI model's capabilities by uploading a medical report and asking ChatGPT to make sense of it. The new model was able to summarise the key takeaways, explain what it means, and suggest next steps one can take to improve health further. This is beneficial for someone who wants to gain some context before visiting a medical professional.

[22]

Is Your ChatGPT Session Going On Too Long? The AI Bot Will Now Alert You to Take Breaks

As ChatGPT continues to surge in popularity (the company disclosed on Monday that daily user messages exceeded three billion), OpenAI is urging users to cultivate healthier relationships with the AI chatbot. Beginning this week, ChatGPT sessions will now tell users to take breaks with reminders that interrupt lengthy sessions with the bot, the company announced in a blog post. The reminders will appear as pop-ups, and how often they will appear has not been announced. OpenAI's sample pop-up shows that the text of the pop-up will appear as "Just checking in" with the subtext "You've been chatting a while -- is this a good time for a break?" Users will have to select "Keep chatting" to continue talking to ChatGPT. ChatGPT will also cease to provide users with direct answers to challenging questions, such as "Should I end my relationship?" Now, the chatbot will ask questions about the situation instead, so users can assess the pros and cons rather than get a direct answer. "ChatGPT is trained to respond with grounded honesty," OpenAI stated in the blog post. "There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency." OpenAI further stated in the post that the company is "developing tools to better detect signs of mental or emotional distress" to equip ChatGPT with the tools to respond more appropriately to mental health crises. OpenAI has encountered problems with ChatGPT's responses before. In April, an update to the AI chatbot made it deliver overly flattering, out-of-touch responses, even to situations that required medical attention. 
For example, when one user told the chatbot that they stopped taking their medications and left their family because their loved ones made radio signals come from the walls, ChatGPT praised the user for "standing up" for themselves and listening to themselves "deep down, even when it's hard and even when others don't understand" instead of directing them to mental health professionals. Another user asked ChatGPT to assess his IQ, based on his spelling and grammar error-filled prompts, and the bot stated that the user came off "as unusually sharp" and said his IQ was "easily in the 130-145 range," above 98-99.7% of people. OpenAI stated in April in response that it had altered its training methods to steer ChatGPT away from flattery. OpenAI raised $8.3 billion last week at a $300 billion valuation. Though OpenAI's user count is skyrocketing, it has yet to reach the heights achieved by Google. Google CEO Sundar Pichai said during a quarterly earnings call last month that Google's AI overviews, which are embedded in Google search, now reach over two billion monthly users in more than 200 countries. Meanwhile, Google's Gemini AI app, which provides AI answers to user prompts, now has more than 450 million active users.

[23]

OpenAI's GPT-5 shows potential in healthcare with early cancer detection capabilities

OpenAI's latest language model, GPT-5, is showing measurable progress in the field of healthcare, specifically in AI cancer detection and medical support. During its official launch event, OpenAI showcased the model's performance on internal health evaluations, noting that GPT-5 scored higher than any of its predecessors. The health benchmark referenced was developed with the input of more than 250 physicians and tested GPT-5 on real-world medical scenarios, including diagnostic interpretation and patient communication. The model's ability to understand and contextualize health-related input was a central focus of the demonstration. One featured example involved a user named Carolina, who was diagnosed with three types of cancer within a single week. After receiving a complex biopsy report, she pasted the content into ChatGPT to understand the medical language. "That moment was really important," she said. "Because by the time I spoke with my doctor three hours later, I already had a baseline understanding of what I was dealing with." Throughout her treatment process, Carolina continued using the model to interpret medical terminology, weigh treatment options, and prepare questions for her healthcare team. In one notable case, she used GPT-5 to help assess whether to undergo radiation therapy, a decision that was not unanimous among her doctors.

AI cancer detection through patient-centered communication tools

According to OpenAI, GPT-5's healthcare capabilities are not limited to information delivery. The model is designed to interpret nuance, identify potential gaps in diagnostic reports, and suggest relevant next steps. This context-awareness makes it function more like an assistant than a search engine, according to OpenAI representatives. 
Carolina and her husband said the tool's ability to explain medical details in an accessible way made them feel more involved in the care process. "It helped me become an active participant in my care," she said. While GPT-5 is not classified as a medical device and does not replace licensed professionals, OpenAI positions it as a health literacy support tool. It can help users better understand their medical situations, especially in high-stress or time-sensitive circumstances.

[24]

ChatGPT isn't your therapist anymore: OpenAI draws the line as emotional AI gets a reality check

OpenAI is setting boundaries for ChatGPT, preventing it from acting as a therapist or emotional support system due to psychological risks. The AI's tendency to be overly agreeable and its inability to understand emotional nuance raised concerns. These changes aim to ensure ChatGPT guides users responsibly, offering resources instead of emotional validation, and avoiding high-stakes personal decisions. For many, ChatGPT has become more than a tool -- it's a late-night confidant, a sounding board in crisis, and a source of emotional validation. But OpenAI, the company behind ChatGPT, now says it's time to set firmer boundaries. In a recent blog post dated August 4, OpenAI confirmed that it has introduced new mental health-focused guardrails to prevent users from viewing the chatbot as a therapist, emotional support system, or life coach. "ChatGPT is not your therapist," is the quiet message behind the sweeping changes. While the AI was designed to be helpful and human-like, its creators now believe that going too far in this direction poses emotional and ethical risks. The decision follows growing scrutiny over the psychological risks of relying on generative AI for emotional wellbeing. According to USA Today, OpenAI acknowledged that earlier updates to its GPT-4o model inadvertently made the chatbot "too agreeable" -- a behavior known as sycophantic response generation. Essentially, the bot began telling users what they wanted to hear, not what was helpful or safe. "There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency," OpenAI wrote. "While rare, we're continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately." This includes prompting users to take breaks, avoiding guidance on high-stakes personal decisions, and offering evidence-based resources rather than emotional validation or problem-solving. 
These changes also respond to chilling findings from an earlier paper published on arXiv, as reported by The Independent. In one test, researchers simulated a distressed user expressing suicidal thoughts through coded language. The AI's response? A list of tall bridges in New York, devoid of concern or intervention. The experiment highlighted a crucial blind spot: AI does not understand emotional nuance. It may mimic empathy, but it lacks true crisis awareness. And as researchers warned, this limitation can turn seemingly helpful exchanges into dangerous ones. "Contrary to best practices in the medical community, LLMs express stigma toward those with mental health conditions," the study stated. Worse, they may even reinforce harmful or delusional thinking in an attempt to appear agreeable. With millions still lacking access to affordable mental healthcare -- only 48% of Americans in need receive it, according to the same study -- AI chatbots like ChatGPT filled a void. Always available, never judgmental, and entirely free, they offered comfort. But that comfort, researchers now argue, may be more illusion than aid. "We hold ourselves to one test: if someone we love turned to ChatGPT for support, would we feel reassured?" OpenAI wrote. "Getting to an unequivocal 'yes' is our work." While OpenAI's announcement may disappoint users who found solace in long chats with their AI companion, the move signals a critical shift in how tech companies approach emotional AI. Rather than replacing therapists, ChatGPT's evolving role might be better suited to enhancing human-led care -- like training mental health professionals or offering basic stress management tools -- not stepping in during moments of crisis. "We want ChatGPT to guide, not decide," the company reiterated. And for now, that means steering clear of the therapist's couch altogether.

[25]

ChatGPT Introduces Break Prompts for Better Focus

ChatGPT Introduces Gentle Nudges for Breaks, Shifting Toward Human-Centric Design

OpenAI has introduced a ChatGPT feature that reminds users to take breaks during long conversations, a gentle nudge toward healthier use of the AI assistant. According to OpenAI, the goal is not to maximize time spent in the product but to ensure that users walk away having accomplished what they came for. The reminder reads, "Just checking in. You've been chatting for a while. Is this a good time for a break?" Users can respond with simple buttons to keep chatting or to indicate that the reminder was helpful.

[26]

ChatGPT will now remind you to take breaks during long chat sessions

OpenAI is also working on making ChatGPT more aware when someone might be feeling mentally or emotionally distressed. OpenAI has announced new updates to ChatGPT that aim to make the AI chatbot more helpful, supportive and mindful of how you spend your time. One of the key changes is the introduction of break reminders. ChatGPT will now remind you to take breaks during long chat sessions. With this feature, OpenAI likely aims to help users stay in control of their time and use the tool in a healthy way. Besides break reminders, OpenAI is making other improvements to ensure ChatGPT remains helpful, especially when users are going through tough or emotional times. For instance, when someone asks a personal question like "Should I break up with my partner?", ChatGPT won't give a direct answer. Instead, it will try to guide the user to think it through by asking questions and helping them weigh the pros and cons. OpenAI is also working on making ChatGPT more aware when someone might be feeling mentally or emotionally distressed. "We're continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed," OpenAI said in a blog post. Earlier this year, some updates made ChatGPT too agreeable, giving responses that sounded nice rather than actually being helpful. OpenAI says it has rolled back those changes and is now focusing more on long-term usefulness rather than quick satisfaction. "Our goal to help you thrive won't change. Our approach will keep evolving as we learn from real-world use," the AI company explained.

OpenAI implements new features in ChatGPT to address mental health concerns, including break reminders and improved detection of emotional distress.

OpenAI Introduces Break Reminders for ChatGPT

OpenAI has announced a new feature for ChatGPT that will remind users to take breaks during long chat sessions. This update comes as part of a broader initiative to address mental health concerns associated with AI chatbot usage [1][2]. The feature will display a gentle reminder asking, "You've been chatting a while -- is this a good time for a break?" with options to continue or end the conversation [2].

Source: Engadget

Improving Mental Health Detection and Response

In response to reports of ChatGPT potentially exacerbating mental health issues, OpenAI is working to enhance the AI's ability to detect signs of mental or emotional distress [3]. The company acknowledges that its GPT-4o model has fallen short in recognizing signs of delusion or emotional dependency in some instances [2]. To address this, OpenAI is collaborating with experts, including 90 physicians from over 30 countries, psychiatrists, and human-computer interaction researchers [4].

Changes in High-Stakes Personal Advice

OpenAI is also implementing changes to how ChatGPT handles high-stakes personal decisions. Instead of providing direct answers to questions like "Should I break up with my boyfriend?", the chatbot will guide users through their options, helping them think through the situation rather than making decisions for them [3][4].

Concerns and Criticisms

Despite these updates, concerns persist about the potential negative impact of AI chatbots on mental health. Reports have emerged of users experiencing severe delusions and mental health crises after prolonged interactions with ChatGPT [5]. Critics argue that treating AI interactions like a video game that simply requires occasional breaks may be insufficient to address the underlying issues [5].

Source: Euronews

Privacy and Security Implications

OpenAI CEO Sam Altman has raised privacy concerns regarding the input of sensitive information into ChatGPT [4]. Users are reminded that AI is prone to hallucinations and that entering personal data may have privacy and security implications.

Broader Context of AI Safety Measures

These updates are part of a larger trend in the AI industry to implement safety measures and ethical guidelines. Other platforms, such as Character.AI, have also introduced features to inform parents about their children's chatbot interactions [2]. The move comes as AI companies face increasing scrutiny and potential legal challenges related to the mental health impacts of their technologies [5].

Expert Opinions

Dr. Anna Lembke, a psychiatrist and professor at Stanford University School of Medicine, suggests that while these nudges might be helpful for casual users, they may not be effective for those already seriously addicted to the platform [1]. Experts emphasize the importance of setting specific time limits and being intentional about AI usage to maintain a healthy relationship with the technology [1].

Source: PCWorld

As AI continues to evolve and integrate into daily life, the balance between technological advancement and user well-being remains a critical concern for developers, users, and regulators alike.


Summarized by Navi
