OpenAI launches Codex-Spark on Cerebras chips, marking first move away from Nvidia hardware

14 Sources

[1]

OpenAI sidesteps Nvidia with unusually fast coding model on plate-sized chips

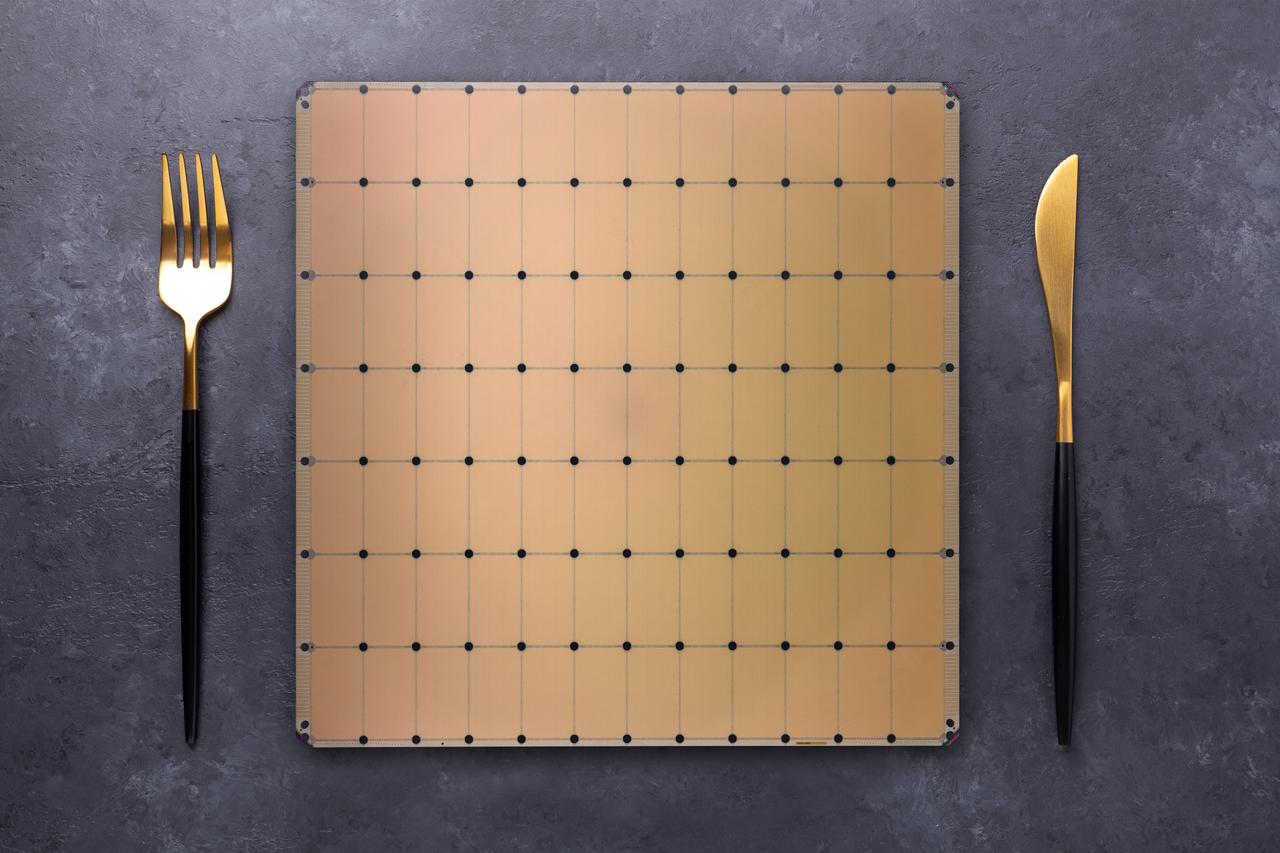

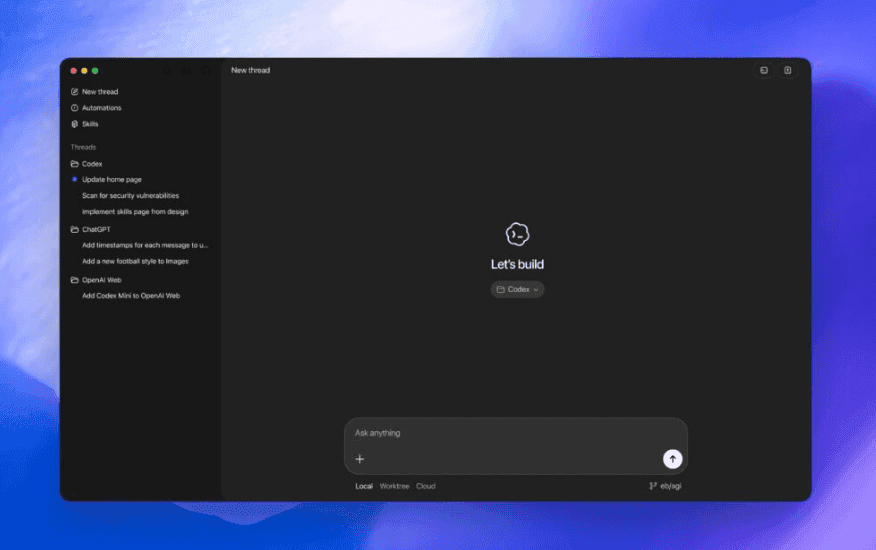

On Thursday, OpenAI released its first production AI model to run on non-Nvidia hardware, deploying the new GPT-5.3-Codex-Spark coding model on chips from Cerebras. The model delivers code at more than 1,000 tokens (chunks of data) per second, which is reported to be roughly 15 times faster than its predecessor. To compare, Anthropic's Claude Opus 4.6 in its new premium-priced fast mode reaches about 2.5 times its standard speed of 68.2 tokens per second, although it is a larger and more capable model than Spark. "Cerebras has been a great engineering partner, and we're excited about adding fast inference as a new platform capability," Sachin Katti, head of compute at OpenAI, said in a statement. Codex-Spark is a research preview available to ChatGPT Pro subscribers ($200/month) through the Codex app, command-line interface, and VS Code extension. OpenAI is rolling out API access to select design partners. The model ships with a 128,000-token context window and handles text only at launch. The release builds on the full GPT-5.3-Codex model that OpenAI launched earlier this month. Where the full model handles heavyweight agentic coding tasks, OpenAI tuned Spark for speed over depth of knowledge. OpenAI built it as a text-only model and tuned it specifically for coding, not for the general-purpose tasks that the larger version of GPT-5.3 handles. On SWE-Bench Pro and Terminal-Bench 2.0, two benchmarks for evaluating software engineering ability, Spark reportedly outperforms the older GPT-5.1-Codex-mini while completing tasks in a fraction of the time, according to OpenAI. The company did not share independent validation of those numbers. Anecdotally, Codex's speed has been a sore spot; when Ars tested four AI coding agents building Minesweeper clones in December, Codex took roughly twice as long as Anthropic's Claude Code to produce a working game. 
For context, GPT-5.3-Codex-Spark's 1,000 tokens per second represents a fairly dramatic leap over anything OpenAI has previously served through its own infrastructure. According to independent benchmarks from Artificial Analysis, OpenAI's fastest models on Nvidia hardware top out well below that mark: GPT-4o delivers roughly 147 tokens per second, o3-mini hits about 167, and GPT-4o mini clocks around 52.
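As a rough check on these comparisons, the short Python sketch below computes how Spark's reported rate stacks up against the other figures quoted above. Every number comes from the article; the Claude Opus 4.6 fast-mode value is derived from the "2.5 times 68.2" statement, Spark's 1,000 tokens per second is a reported lower bound, and the dictionary and function names are illustrative only.

```python
# Throughput figures quoted in the article, in tokens per second.
# The Opus fast-mode value is derived as 2.5 x 68.2, per the text above.
REPORTED_TOKENS_PER_SECOND = {
    "GPT-5.3-Codex-Spark": 1000.0,  # reported as "more than 1,000"
    "Claude Opus 4.6 (fast mode)": 2.5 * 68.2,
    "o3-mini": 167.0,
    "GPT-4o": 147.0,
    "GPT-4o mini": 52.0,
}


def speedup_over(baseline: str, candidate: str = "GPT-5.3-Codex-Spark") -> float:
    """Return how many times faster `candidate` is than `baseline`."""
    rates = REPORTED_TOKENS_PER_SECOND
    return rates[candidate] / rates[baseline]


if __name__ == "__main__":
    for name in REPORTED_TOKENS_PER_SECOND:
        if name != "GPT-5.3-Codex-Spark":
            print(f"Spark vs {name}: {speedup_over(name):.1f}x")
```

On these figures, Spark works out to roughly six to seven times the rate of GPT-4o and o3-mini. The comparison covers raw generation speed only and says nothing about model capability.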

[2]

A new version of OpenAI's Codex is powered by a new dedicated chip | TechCrunch

On Thursday, OpenAI announced the release of a light-weight version of its agentic coding tool Codex, the latest model of which OpenAI launched earlier this month. GPT-5.3-Codex-Spark is described by the company as a "smaller version" of that model, one that is designed for faster inference. To power that inference, OpenAI has brought in a dedicated chip from its hardware partner Cerebras, marking a new level of integration in the company's physical infrastructure. The partnership between Cerebras and OpenAI was announced last month, when OpenAI said that it had reached a multi-year agreement with the firm worth over $10 billion. "Integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster," the company said at the time. Now, OpenAI calls Spark the "first milestone" in that relationship. Spark, which OpenAI says is designed for swift, real-time collaboration and "rapid iteration," will be powered by Cerebras' Wafer Scale Engine 3. The WSE-3 is Cerebras' third-generation waferscale megachip, decked out with 4 trillion transistors. OpenAI describes the new lightweight tool as a "daily productivity driver, helping users with rapid prototyping" rather than the longer, heavier tasks that the original 5.3 is designed for. Spark is currently enjoying a research preview for ChatGPT Pro users in the Codex app. In a tweet in advance of the announcement, CEO Sam Altman seemed to hint at the new model. "We have a special thing launching to Codex users on the Pro plan later today," Altman tweeted. "It sparks joy for me." In its official statement, OpenAI emphasized Spark as designed for the lowest possible latency on Codex. "Codex-Spark is the first step toward a Codex that works in two complementary modes: real-time collaboration when you want rapid iteration, and long-running tasks when you need deeper reasoning and execution," OpenAI shared. 
The company added that Cerebras' chips excel at assisting "workflows that demand extremely low latency." Cerebras has been around for over a decade but, in the AI era, it has enjoyed an increasingly prominent role in the tech industry. Just last week, the company announced that it had raised $1 billion in fresh capital at a valuation of $23 billion. The company has previously announced its intentions to pursue an IPO. "What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible -- new interaction patterns, new use cases, and a fundamentally different model experience," Sean Lie, CTO and Co-Founder of Cerebras, said in a statement. "This preview is just the beginning."

[3]

OpenAI's new Spark model codes 15x faster than GPT-5.3-Codex - but there's a catch

Runs on Cerebras WSE-3 chips for a latency-first Codex serving tier. The Codex team at OpenAI is on fire. Less than two weeks after releasing a dedicated agent-based Codex app for Macs, and only a week after releasing the faster and more steerable GPT-5.3-Codex language model, OpenAI is counting on lightning striking for a third time. Today, the company has announced a research preview of GPT-5.3-Codex-Spark, a smaller version of GPT-5.3-Codex built for real-time coding in Codex. The company reports that it generates code 15 times faster while "remaining highly capable for real-world coding tasks." There is a catch, and I'll talk about that in a minute. Codex-Spark will initially be available only to $200/mo Pro tier users, with separate rate limits during the preview period. If it follows OpenAI's usual release strategy for Codex releases, Plus users will be next, with other tiers gaining access fairly quickly. (Disclosure: Ziff Davis, ZDNET's parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.) OpenAI says Codex-Spark is its "first model designed specifically for working with Codex in real-time -- making targeted edits, reshaping logic, or refining interfaces and seeing results immediately." Let's deconstruct this briefly. Most agentic AI programming tools take a while to respond to instructions. In my programming work, I can give an instruction (and this applies to both Codex and Claude Code) and go off and work on something else for a while. Sometimes it's just a few minutes. Other times, it can be long enough to get lunch.
Codex-Spark is apparently able to respond much faster, allowing for quick and continuous work. This could speed up development considerably, especially for simpler prompts and queries. I know that I've been occasionally frustrated when I've asked an AI a super simple question that should have generated an immediate response, but instead I still had to wait five minutes for an answer. By making responsiveness a core feature, the model supports more fluid, conversational coding. Sometimes, using coding agents feels more like old-school batch style coding. This is designed to overcome that feeling. GPT-5.3-Codex-Spark isn't intended to replace the base GPT-5.3-Codex. Instead, Spark was designed to complement high-performance AI models built for long-running, autonomous tasks lasting hours, days, or weeks. The Codex-Spark model is intended for work where responsiveness matters as much as intelligence. It supports interruption and redirection mid-task, enabling tight iteration loops. This is something that appeals to me, because I always think of something more to tell the AI ten seconds after I've given it an assignment. The Spark model defaults to lightweight, targeted edits, making quick tweaks rather than taking big swings. It also doesn't automatically run tests unless requested. OpenAI has been able to reduce latency (faster turnaround) across the full request-response pipeline. It says that overhead per client/server roundtrip has been reduced by 80%. Per-token overhead has been reduced by 30%. The time-to-first-token has been reduced by 50% through session initialization and streaming optimizations. Another mechanism that improves responsiveness during iteration is the introduction of a persistent WebSocket connection, so the connection doesn't have to continually be renegotiated.
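To get a feel for how those three reductions might combine, here is a back-of-the-envelope model in Python. OpenAI has not published baseline latencies, so the baseline values below are hypothetical placeholders. The additive model itself (time to first token, plus overhead per round trip, plus overhead per token) is a simplifying assumption, not a description of OpenAI's pipeline. Only the 80%, 30%, and 50% reduction figures come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyProfile:
    """Per-request overheads in milliseconds (hypothetical values)."""

    time_to_first_token_ms: float
    roundtrip_overhead_ms: float
    per_token_overhead_ms: float

    def total_overhead_ms(self, roundtrips: int, tokens: int) -> float:
        # Simplifying assumption: the three overheads add up independently.
        return (
            self.time_to_first_token_ms
            + roundtrips * self.roundtrip_overhead_ms
            + tokens * self.per_token_overhead_ms
        )


def apply_reported_reductions(before: LatencyProfile) -> LatencyProfile:
    """Apply the quoted reductions: 50% TTFT, 80% per round trip, 30% per token."""
    return LatencyProfile(
        time_to_first_token_ms=before.time_to_first_token_ms * (1 - 0.50),
        roundtrip_overhead_ms=before.roundtrip_overhead_ms * (1 - 0.80),
        per_token_overhead_ms=before.per_token_overhead_ms * (1 - 0.30),
    )


if __name__ == "__main__":
    # Hypothetical baseline, NOT published by OpenAI.
    baseline = LatencyProfile(400.0, 100.0, 1.0)
    improved = apply_reported_reductions(baseline)
    before = baseline.total_overhead_ms(roundtrips=5, tokens=200)
    after = improved.total_overhead_ms(roundtrips=5, tokens=200)
    print(f"before: {before:.0f} ms, after: {after:.0f} ms")
```

With these made-up inputs the overhead falls from about 1,100 ms to about 440 ms. The takeaway is only that the savings depend on the mix of round trips and tokens in a session, with chatty, many-round-trip sessions benefiting most from the 80% cut.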
In January, OpenAI announced a partnership with AI chipmaker Cerebras. We've been covering Cerebras for a while. We've covered its inference service, its work with DeepSeek, its work boosting the performance of Meta's Llama model, and Cerebras' announcement of a really big AI chip, meant to double LLM performance. GPT-5.3-Codex-Spark is the first milestone for the OpenAI/Cerebras partnership announced last month. The Spark model runs on Cerebras' Wafer Scale Engine 3, which is a high-performance AI chip architecture that boosts speed by putting all the compute resources on a single wafer-scale processor the size of a pancake. Usually, a semiconductor wafer contains a whole bunch of processors, which later in the production process get cut apart and put into their own packaging. The Cerebras wafer contains just one chip, making it a very, very big processor with very, very closely coupled connections. According to Sean Lie, CTO and co-founder of Cerebras, "What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible -- new interaction patterns, new use cases, and a fundamentally different model experience. This preview is just the beginning." Now, here are the gotchas. First, OpenAI says that "when demand is high, you may see slower access or temporary queuing as we balance reliability across users." So, fast, unless too many people want to go fast. Here's the kicker. The company says, "On SWE-Bench Pro and Terminal-Bench 2.0, two benchmarks evaluating agentic software engineering capability, GPT-5.3-Codex-Spark underperforms GPT-5.3-Codex, but can accomplish the tasks in a fraction of the time."
Last week, in the GPT-5.3-Codex announcement, OpenAI said that GPT-5.3-Codex was the first model it classifies as "high capability" for cybersecurity, according to its published Preparedness Framework. On the other hand, the company admitted that GPT-5.3-Codex-Spark "does not have a plausible chance of reaching our Preparedness Framework threshold for high capability in cybersecurity." Think on these statements, dear reader. This AI isn't as smart, but it does do those not-as-smart things a lot faster. 15x speed is certainly nothing to sneeze at. But do you really want an AI to make coding mistakes 15 times faster and produce code that is less secure? Let me tell you this. "Eh, it's good enough" isn't really good enough when you have thousands of pissed off users coming at you with torches and pitchforks because you suddenly broke their software with a new release. Ask me how I know. Last week, we learned that OpenAI uses Codex to write Codex. We also know that it uses it to be able to build code much faster. So the company clearly has a use case for something that's way faster, but not as smart. As I get a better handle on what that is and where Spark fits, I'll let you know. OpenAI shared that it is working toward dual modes of reasoning and real-time work for its Codex models. The company says, "Codex-Spark is the first step toward a Codex with two complementary modes: longer-horizon reasoning and execution, and real-time collaboration for rapid iteration. Over time, the modes will blend." The workflow model it envisions is interesting. According to OpenAI, the intent is that eventually "Codex can keep you in a tight interactive loop while delegating longer-running work to sub-agents in the background, or fanning out tasks to many models in parallel when you want breadth and speed, so you don't have to choose a single mode up front."
Essentially, it's working toward the best of both worlds. But for now, you can choose fast or accurate. That's a tough choice. But the accurate is getting more accurate, and now, at least, you can opt for fast when you want it (as long as you keep the trade-offs in mind and you're paying for the Pro tier). What about you? Would you trade some intelligence and security capability for 15x faster coding responses? Does the idea of a real-time, interruptible AI collaborator appeal to you, or do you prefer a more deliberate, higher-accuracy model for serious development work? How concerned are you about the cybersecurity distinction between Codex-Spark and the full GPT-5.3-Codex model? And if you're a Pro user, do you see yourself switching between "fast" and "smart" modes depending on the task? Let us know in the comments below.

[4]

OpenAI Debuts First Model Using Chips From Nvidia Rival Cerebras

The new Codex model marks OpenAI's latest attempt to best AI rivals such as Alphabet Inc.'s Google and Anthropic PBC in the market for AI coding assistants. OpenAI is releasing its first artificial intelligence model that runs on chips from semiconductor startup Cerebras Systems Inc., part of a push by the ChatGPT maker to broaden the pool of chipmakers it works with beyond Nvidia Corp. The model, GPT-5.3-Codex-Spark, is intended to be a less powerful but speedier version of its most recent Codex software for automating coding. The Spark option, slated to be released Thursday, lets software engineers quickly complete tasks like editing specific chunks of code and running tests. Users can also easily interrupt it, or order the model to complete something else coding-related without having to wait for it to finish a lengthy computing process. Last month, OpenAI signed a $10 billion-plus deal to use hardware from Cerebras to get quicker responses from its AI models. For Cerebras, the partnership offers a significant boost in its bid to compete in a market long dominated by Nvidia Corp. For OpenAI, the tie-up was the latest move to work with more suppliers to meet its growing computing needs. OpenAI also struck a blockbuster agreement in October with Nvidia rival Advanced Micro Devices Inc. to deploy 6 gigawatts' worth of AMD graphics processing units over multiple years. Later that month, OpenAI agreed to buy custom chips and networking components from Broadcom Inc. More recently, OpenAI's relationship with Nvidia has come under scrutiny amid reports of tensions between the two firms. The chief executives of both companies have since publicly said they remain committed to working together. In a statement Thursday, an OpenAI spokesperson said the company's partnership with Nvidia is "foundational" and that OpenAI's most powerful AI models are the result of "multi-year hardware and software engineering done side by side" between the two companies. 
"That's why we are anchoring on Nvidia as the core of our training and inference stack, while deliberately expanding the ecosystem around it through partnerships with Cerebras, AMD and Broadcom," the spokesperson said. The new Codex model also marks OpenAI's latest attempt to best AI rivals such as Alphabet Inc.'s Google and Anthropic PBC, which are competing for dominance in the rapidly growing market for AI coding assistants. Codex has more than 1 million weekly active users, OpenAI said. Initially, the updated AI model will be available to ChatGPT Pro subscribers as a research preview, the company said, with plans to roll out to more users in the coming weeks.

[5]

OpenAI unveils first model running on Cerebras silicon

GPT-5.3-Codex-Spark may be a mouthful, but it's certainly fast at 1,000 tok/s running on Nvidia rival's CS3 accelerators. Nvidia and AMD can take a seat. On Thursday, OpenAI unveiled GPT-5.3-Codex-Spark, its first model that will run on Cerebras Systems' dinner-plate-sized AI accelerators, which feature some of the world's fastest on-chip memory. The lightweight model is designed to provide a more interactive experience to users of OpenAI's Codex code assistant by leveraging Cerebras' SRAM-packed CS3 accelerators to generate responses at more than 1,000 tokens per second. Last month, OpenAI signed a $10 billion contract with Cerebras to deploy up to 750 megawatts of its custom AI silicon to serve up Altman and crew's latest generation of GPT models. Cerebras' waferscale architecture is notable for using a kind of ultra-fast, on-chip memory called SRAM, which is roughly 1,000x faster than the HBM4 found on Nvidia's upcoming Rubin GPUs announced at CES earlier this year. This, along with optimizations to the inference and application pipelines, allows OpenAI's latest model to churn out answers in the blink of an eye. As Spark is a proprietary model, we don't have all the details on things like parameter count, as we would if OpenAI had released it on HuggingFace like it did with gpt-oss back in August. What we do know is, just like that model, it's a text-only model with a 128,000-token context window. If you're not familiar, a model's context window refers to how many tokens (words, punctuation, numbers, etc.) it can keep track of at any one time. Because of this, it's often referred to as the model's short-term memory. While 128K tokens might sound like a lot, because the model has to keep track of both existing and newly generated code, code assistants like Codex can blow through that pretty quickly. Even starting from a blank slate, at 1,000 tokens a second it would take roughly two minutes to overflow the context limit.
This might be why OpenAI says Spark defaults to a "lightweight" style that only makes minimal targeted edits and won't run debug tests unless specifically asked. A fast model isn't much good if it can't write working code. If OpenAI is to be believed, the Spark model delivers greater accuracy than GPT-5.1-Codex-Mini in Terminal-Bench 2.0 while also being much, much faster than its smarter GPT-5.3-Codex model. OpenAI may be looking beyond GPUs, but it's certainly not abandoning them anytime soon. "GPUs remain foundational across our training and inference pipelines and deliver the most cost effective tokens for broad usage. Cerebras complements that foundation by excelling at workflows that demand extremely low latency," OpenAI wrote. This isn't just lip service. As fast as Cerebras' CS3 accelerators are, they can't match modern GPUs on memory capacity. SRAM may be fast, but it's not space efficient. The entire dinner-plate-sized chip contains just 44 GB of memory. By comparison, Nvidia's Rubin will ship with 288 GB of HBM4 while AMD's MI455X will pack on 432 GB. This makes GPUs more economical for running very large models, especially if speed isn't a priority. Having said that, OpenAI suggests that as Cerebras brings more compute online, it'll be bringing its larger models to the compute platform, presumably for those willing to pay a premium for high-speed inference. GPT-5.3-Codex-Spark is currently available in preview to Codex Pro users and via API to select OpenAI partners. ®
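The "roughly two minutes" estimate for exhausting the context window is simple division, and the Python sketch below reproduces it. The function name and the optional `tokens_already_used` parameter are illustrative additions. The calculation assumes a constant generation rate and counts only newly generated tokens, which is a simplification of how a real coding session consumes context.

```python
def seconds_to_fill_context(
    context_window_tokens: int,
    tokens_per_second: float,
    tokens_already_used: int = 0,
) -> float:
    """Time for generated tokens alone to exhaust the remaining context window."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    remaining = max(context_window_tokens - tokens_already_used, 0)
    return remaining / tokens_per_second


if __name__ == "__main__":
    # Figures from the article: 128,000-token window at 1,000 tokens per second.
    secs = seconds_to_fill_context(128_000, 1_000)
    print(f"{secs:.0f} s (~{secs / 60:.1f} min)")
```

Starting from an empty window this gives 128 seconds, a little over two minutes. A session that begins with existing code already loaded into context would hit the limit sooner.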

[6]

OpenAI deploys Cerebras chips for 15x faster code generation in first major move beyond Nvidia

OpenAI on Thursday launched GPT-5.3-Codex-Spark, a stripped-down coding model engineered for near-instantaneous response times, marking the company's first significant inference partnership outside its traditional Nvidia-dominated infrastructure. The model runs on hardware from Cerebras Systems, a Sunnyvale-based chipmaker whose wafer-scale processors specialize in low-latency AI workloads. The partnership arrives at a pivotal moment for OpenAI. The company finds itself navigating a frayed relationship with longtime chip supplier Nvidia, mounting criticism over its decision to introduce advertisements into ChatGPT, a newly announced Pentagon contract, and internal organizational upheaval that has seen a safety-focused team disbanded and at least one researcher resign in protest. "GPUs remain foundational across our training and inference pipelines and deliver the most cost effective tokens for broad usage," an OpenAI spokesperson told VentureBeat. "Cerebras complements that foundation by excelling at workflows that demand extremely low latency, tightening the end-to-end loop so use cases such as real-time coding in Codex feel more responsive as you iterate." The careful framing -- emphasizing that GPUs "remain foundational" while positioning Cerebras as a "complement" -- underscores the delicate balance OpenAI must strike as it diversifies its chip suppliers without alienating Nvidia, the dominant force in AI accelerators. Codex-Spark represents OpenAI's first model purpose-built for real-time coding collaboration. The company claims the model delivers generation speeds 15 times faster than its predecessor, though it declined to provide specific latency metrics such as time-to-first-token or tokens-per-second figures. "We aren't able to share specific latency numbers, however Codex-Spark is optimized to feel near-instant -- delivering 15x faster generation speeds while remaining highly capable for real-world coding tasks," the OpenAI spokesperson said. 
The speed gains come with acknowledged capability tradeoffs. On SWE-Bench Pro and Terminal-Bench 2.0 -- two industry benchmarks that evaluate AI systems' ability to perform complex software engineering tasks autonomously -- Codex-Spark underperforms the full GPT-5.3-Codex model. OpenAI positions this as an acceptable exchange: developers get responses fast enough to maintain creative flow, even if the underlying model cannot tackle the most sophisticated multi-step programming challenges. The model launches with a 128,000-token context window and supports text only -- no image or multimodal inputs. OpenAI has made it available as a research preview to ChatGPT Pro subscribers through the Codex app, command-line interface, and Visual Studio Code extension. A small group of enterprise partners will receive API access to evaluate integration possibilities. "We are making Codex-Spark available in the API for a small set of design partners to understand how developers want to integrate Codex-Spark into their products," the spokesperson explained. "We'll expand access over the coming weeks as we continue tuning our integration under real workloads." The technical architecture behind Codex-Spark tells a story about inference economics that increasingly matters as AI companies scale consumer-facing products. Cerebras's Wafer Scale Engine 3 -- a single chip roughly the size of a dinner plate containing 4 trillion transistors -- eliminates much of the communication overhead that occurs when AI workloads spread across clusters of smaller processors. For training massive models, that distributed approach remains necessary and Nvidia's GPUs excel at it. But for inference -- the process of generating responses to user queries -- Cerebras argues its architecture can deliver results with dramatically lower latency. Sean Lie, Cerebras's CTO and co-founder, framed the partnership as an opportunity to reshape how developers interact with AI systems. 
"What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible -- new interaction patterns, new use cases, and a fundamentally different model experience," Lie said in a statement. "This preview is just the beginning." OpenAI's infrastructure team did not limit its optimization work to the Cerebras hardware. The company announced latency improvements across its entire inference stack that benefit all Codex models regardless of underlying hardware, including persistent WebSocket connections and optimizations within the Responses API. The results: 80 percent reduction in overhead per client-server round trip, 30 percent reduction in per-token overhead, and 50 percent reduction in time-to-first-token. The Cerebras partnership takes on additional significance given the increasingly complicated relationship between OpenAI and Nvidia. Last fall, when OpenAI announced its Stargate infrastructure initiative, Nvidia publicly committed to investing $100 billion to support OpenAI as it built out AI infrastructure. The announcement appeared to cement a strategic alliance between the world's most valuable AI company and its dominant chip supplier. Five months later, that megadeal has effectively stalled, according to multiple reports. Nvidia CEO Jensen Huang has publicly denied tensions, telling reporters in late January that there is "no drama" and that Nvidia remains committed to participating in OpenAI's current funding round. But the relationship has cooled considerably, with friction stemming from multiple sources. OpenAI has aggressively pursued partnerships with alternative chip suppliers, including the Cerebras deal and separate agreements with AMD and Broadcom. From Nvidia's perspective, OpenAI may be using its influence to commoditize the very hardware that made its AI breakthroughs possible. 
From OpenAI's perspective, reducing dependence on a single supplier represents prudent business strategy. "We will continue working with the ecosystem on evaluating the most price-performant chips across all use cases on an ongoing basis," OpenAI's spokesperson told VentureBeat. "GPUs remain our priority for cost-sensitive and throughput-first use cases across research and inference." The statement reads as a careful effort to avoid antagonizing Nvidia while preserving flexibility -- and reflects a broader reality that training frontier AI models still requires exactly the kind of massive parallel processing that Nvidia GPUs provide. The Codex-Spark launch comes as OpenAI navigates a series of internal challenges that have intensified scrutiny of the company's direction and values. Earlier this week, reports emerged that OpenAI disbanded its mission alignment team, a group established in September 2024 to promote the company's stated goal of ensuring artificial general intelligence benefits humanity. The team's seven members have been reassigned to other roles, with leader Joshua Achiam given a new title as OpenAI's "chief futurist." OpenAI previously disbanded another safety-focused group, the superalignment team, in 2024. That team had concentrated on long-term existential risks from AI. The pattern of dissolving safety-oriented teams has drawn criticism from researchers who argue that OpenAI's commercial pressures are overwhelming its original non-profit mission. The company also faces fallout from its decision to introduce advertisements into ChatGPT. Researcher Zoë Hitzig resigned this week over what she described as the "slippery slope" of ad-supported AI, warning in a New York Times essay that ChatGPT's archive of intimate user conversations creates unprecedented opportunities for manipulation. Anthropic seized on the controversy with a Super Bowl advertising campaign featuring the tagline: "Ads are coming to AI. But not to Claude." 
Separately, the company agreed to provide ChatGPT to the Pentagon through Genai.mil, a new Department of Defense program that requires OpenAI to permit "all lawful uses" without company-imposed restrictions -- terms that Anthropic reportedly rejected. And reports emerged that Ryan Beiermeister, OpenAI's vice president of product policy who had expressed concerns about a planned explicit content feature, was terminated in January following a discrimination allegation she denies. Despite the surrounding turbulence, OpenAI's technical roadmap for Codex suggests ambitious plans. The company envisions a coding assistant that seamlessly blends rapid-fire interactive editing with longer-running autonomous tasks -- an AI that handles quick fixes while simultaneously orchestrating multiple agents working on more complex problems in the background. "Over time, the modes will blend -- Codex can keep you in a tight interactive loop while delegating longer-running work to sub-agents in the background, or fanning out tasks to many models in parallel when you want breadth and speed, so you don't have to choose a single mode up front," the OpenAI spokesperson told VentureBeat. This vision would require not just faster inference but sophisticated task decomposition and coordination across models of varying sizes and capabilities. Codex-Spark establishes the low-latency foundation for the interactive portion of that experience; future releases will need to deliver the autonomous reasoning and multi-agent coordination that would make the full vision possible. For now, Codex-Spark operates under separate rate limits from other OpenAI models, reflecting constrained Cerebras infrastructure capacity during the research preview. "Because it runs on specialized low-latency hardware, usage is governed by a separate rate limit that may adjust based on demand during the research preview," the spokesperson noted. 
The limits are designed to be "generous," with OpenAI monitoring usage patterns as it determines how to scale. The Codex-Spark announcement arrives amid intense competition for AI-powered developer tools. Anthropic's Claude Cowork product triggered a selloff in traditional software stocks last week as investors considered whether AI assistants might displace conventional enterprise applications. Microsoft, Google, and Amazon continue investing heavily in AI coding capabilities integrated with their respective cloud platforms. OpenAI's Codex app has demonstrated rapid adoption since launching ten days ago, with more than one million downloads and weekly active users growing 60 percent week-over-week. More than 325,000 developers now actively use Codex across free and paid tiers. But the fundamental question facing OpenAI -- and the broader AI industry -- is whether speed improvements like those promised by Codex-Spark translate into meaningful productivity gains or merely create more pleasant experiences without changing outcomes. Early evidence from AI coding tools suggests that faster responses encourage more iterative experimentation. Whether that experimentation produces better software remains contested among researchers and practitioners alike. What seems clear is that OpenAI views inference latency as a competitive frontier worth substantial investment, even as that investment takes it beyond its traditional Nvidia partnership into untested territory with alternative chip suppliers. The Cerebras deal is a calculated bet that specialized hardware can unlock use cases that general-purpose GPUs cannot cost-effectively serve. For a company simultaneously battling competitors, managing strained supplier relationships, and weathering internal dissent over its commercial direction, it is also a reminder that in the AI race, standing still is not an option. OpenAI built its reputation by moving fast and breaking conventions. 
Now it must prove it can move even faster -- without breaking itself.

[7]

OpenAI's rapid GPT-5.3-Codex model moves beyond simple coding tasks - SiliconANGLE

OpenAI Group PBC today released a lightweight version of its popular agentic coding tool GPT-5.3-Codex, which is designed for more rapid inference, the process by which artificial intelligence models take actions in response to prompts. It's called GPT-5.3-Codex-Spark, and it's a smaller version of the original GPT-5.3-Codex that launched earlier this month. By launching a more streamlined version, OpenAI is taking a cue from Google LLC, which offers the Gemini Flash series of large language models as a faster, lower-cost alternative for applications that don't need so much processing power. OpenAI said in a blog post that GPT-5.3-Codex-Spark is the first of its models to run on a dedicated chip from the artificial intelligence chipmaker Cerebras Systems Inc., which partnered with the ChatGPT maker last month. The two companies announced a multiyear agreement that's worth over $10 billion, and now we know why. At the time, OpenAI said "integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster," and Spark has become the "first milestone" in that partnership. The company explained that Spark is powered by Cerebras' Wafer Scale Engine 3 and designed for swift, real-time collaboration and rapid iteration. The WSE-3 chip is Cerebras' third-generation AI processor. As with the company's earlier chips, it's a massive, dinner plate-sized piece of silicon that boasts more than four trillion transistors. Besides the chip, another of the notable things about Spark is that it was apparently "instrumental in creating itself." By that, OpenAI means that early versions of the model were used to help debug its own training processes, manage its deployment, diagnose test results and conduct evaluations. "Our team was blown away by how much Codex Spark was able to accelerate its own development," the company said. 
GPT-5.3-Codex-Spark is meant to be a "daily productivity driver" and designed primarily for rapid prototyping, the company explained. That means it can handle more than just basic coding tasks. It's an AI agent that can perform almost all of the things that developers might do on a computer, the company explained. That includes "debugging, deploying, monitoring, writing PRDs, editing copy, user research, tests, metrics and more," OpenAI said. In addition, there's also an emphasis on being able to steer the model mid-task and frequent status updates. As far as performance goes, OpenAI said Spark surpasses the reasoning capabilities of the full-fat GPT-5.2-Codex model and the standard GPT-5.2 model that currently powers ChatGPT. Its outputs are generated at a 25% faster rate, on average. In addition, GPT-5.3-Codex-Spark only consumes around half of the "tokens" used by earlier models when producing certain kinds of outputs. Tokens are the fundamental units of information that AI models use to process and generate data. On the Terminal-Bench 2.0 benchmark, GPT-5.3-Codex-Spark scored 77.3%, improving on the 64% accuracy level of GPT-5.2-Codex. It also shows similar improvements in game and web development, OpenAI said. GPT‑5.3‑Codex‑Spark is currently only available to ChatGPT's paid subscribers, and will also be made available through the company's application programming interface "soon." There are no changes to pricing or limits on users compared to the standard GPT-5.3-Codex model. "Codex-Spark is the first step toward a Codex that works in two complementary modes: real-time collaboration when you want rapid iteration, and long-running tasks when you need deeper reasoning and execution," OpenAI said. The company added that Cerebras' chips excel at assisting "workflows that demand extremely low latency." Cerebras has emerged as one of the most prominent rivals to Nvidia Corp. in the AI compute business. 
Last week, the chipmaker announced it had raised an additional $1 billion in funding in a round that lifted its valuation to $23 billion. That's likely to be its last major funding round, as the company has submitted paperwork for an initial public offering that's expected to proceed in the first half of this year. Going forward, the chipmaker expects to collaborate more closely with OpenAI. "What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible," said Cerebras co-founder and Chief Technology Officer Sean Lie. "New interaction patterns, new use cases and a fundamentally different model experience, and this preview is just the beginning."

[8]

OpenAI launches GPT-5.3-Codex-Spark for ultra-fast real-time coding

On Thursday, OpenAI announced GPT-5.3-Codex-Spark, a lightweight version of its agentic coding tool Codex, which the company launched earlier this month. Powered by Cerebras' Wafer Scale Engine 3 chip, Spark enables faster inference as the first milestone in OpenAI's multi-year partnership with Cerebras. The original GPT-5.3-Codex model serves longer, heavier tasks requiring deeper reasoning and execution. In contrast, GPT-5.3-Codex-Spark focuses on swift operations. OpenAI describes it as a smaller version designed specifically for reduced latency during inference processes. This new tool integrates hardware from Cerebras directly into OpenAI's physical infrastructure, representing deeper collaboration between the two companies. OpenAI and Cerebras revealed their partnership last month through a multi-year agreement valued at over $10 billion. At that time, OpenAI stated, "Integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster." The company now positions Spark as the initial achievement in this alliance, emphasizing its role in accelerating AI responses. Cerebras' Wafer Scale Engine 3 powers Spark's inference capabilities. This third-generation waferscale megachip contains 4 trillion transistors, enabling high-performance computing tailored for AI workloads. OpenAI highlights Spark's suitability for real-time collaboration and rapid iteration. The tool functions as a daily productivity driver, assisting users with rapid prototyping rather than extended computations handled by the base GPT-5.3-Codex model. Spark operates with the lowest possible latency on Codex. OpenAI explains its purpose in an official statement: "Codex-Spark is the first step toward a Codex that works in two complementary modes: real-time collaboration when you want rapid iteration, and long-running tasks when you need deeper reasoning and execution." Cerebras' chips support workflows that demand extremely low latency. 
Currently, Spark appears as a research preview exclusively for ChatGPT Pro users within the Codex app. This limited rollout allows initial testing among subscribers on the Pro plan. Prior to the announcement, OpenAI CEO Sam Altman hinted at the release on Twitter. He posted, "We have a special thing launching to Codex users on the Pro plan later today." Altman added, "It sparks joy for me." Cerebras, established over a decade ago, has gained prominence in the AI sector. Last week, the company secured $1 billion in fresh capital, achieving a valuation of $23 billion. Cerebras has indicated plans to pursue an initial public offering. Sean Lie, CTO and co-founder of Cerebras, commented on the development: "What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible -- new interaction patterns, new use cases, and a fundamentally different model experience." Lie described the preview as "just the beginning."

[9]

OpenAI's Fast New Model Aims to Push Vibe Coding Toward Warp Speed

On February 12, in a blog post on its website, OpenAI said that the new model is called GPT‑5.3‑Codex‑Spark, and it's been optimized for one thing in particular: speed. On X, OpenAI cofounder and CEO Sam Altman said that the model is incredibly fast, and "sparks joy for me." Unlike OpenAI's other AI models, which mostly run on chips created by Nvidia, Codex-Spark runs on special hardware developed with Cerebras, an AI startup that develops ultra-low latency accelerator chips for data centers. These chips enable AI models to ingest and output text at a rapid pace; Codex-Spark is capable of generating over 1,000 tokens (roughly equivalent to 750 words) per second. The model runs on Cerebras' Wafer Scale Engine 3, a chip built specifically for ultra-fast inference, a term that basically refers to the act of running the model.
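The throughput figure above can be turned into rough wall-clock estimates. What follows is a minimal sketch, assuming the article's approximate conversion of 1,000 tokens to about 750 words and a steady generation rate; the function names and the 4,000-token sample workload are illustrative assumptions, not anything published by OpenAI or Cerebras.

```python
# Back-of-the-envelope throughput arithmetic (illustrative only).
# Figures taken from the article: ~1,000 tokens/s, ~750 words per 1,000 tokens.
# The helper names and the sample workload below are hypothetical.

SPARK_TOKENS_PER_SECOND = 1_000.0  # "over 1,000 tokens per second"
WORDS_PER_TOKEN = 750 / 1_000      # rough rule of thumb cited in the article


def words_per_second(tokens_per_second: float) -> float:
    """Convert a token throughput into an approximate word throughput."""
    return tokens_per_second * WORDS_PER_TOKEN


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady rate, ignoring startup latency."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Approximate words per second at the quoted rate.
    print(f"{words_per_second(SPARK_TOKENS_PER_SECOND):.0f} words/s")
    # Hypothetical 4,000-token file streamed at the quoted rate.
    print(f"{seconds_to_generate(4_000, SPARK_TOKENS_PER_SECOND):.1f} s")
```

These estimates ignore time-to-first-token and network overhead, so real sessions would take somewhat longer than the steady-state numbers suggest.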

[10]

OpenAI Says This Is Its First AI Model That Can Code in Real-Time

* The AI model is available in Codex, CLI, and IDE extension
* OpenAI says Codex-Spark can process 1,000 tokens per second
* Codex-Spark has a context window of 128K tokens

OpenAI's focus on Codex has intensified in 2026. The San Francisco-based artificial intelligence (AI) giant released the GPT-5.3-Codex model earlier this month, marking the first time the company prioritised a coding-focused model over its general-purpose version. Last week, OpenAI CEO Sam Altman reportedly called the growth of Codex "insane" after it grew by 50 percent in a week. Now, continuing the momentum, the AI giant has released its first AI model that can write code in real-time, dubbed GPT-5.3-Codex-Spark.

OpenAI Introduces GPT-5.3-Codex-Spark

In a post, OpenAI announced and detailed its latest coding model. The GPT-5.3-Codex-Spark is currently available in research preview to ChatGPT Pro subscribers across the Codex app, command line interface (CLI), and integrated development environment (IDE) extension. Since the full version has not been rolled out, those using the model will experience certain limitations for the time being. Apart from its unusually long name, Codex-Spark's standout capability is real-time coding. The company said the model is designed for low-latency workloads and can write and edit code almost instantly. The model is said to process 1,000 tokens per second. It is a text-only model that writes code, makes targeted edits, reshapes logic, and refines interfaces in real-time. It comes with a context window of 128,000 tokens, making it fairly capable of handling regular to mildly challenging tasks. However, for more complex tasks, users should stick to the GPT-5.3-Codex model. Apart from code-level optimisations, what makes Codex-Spark fast is that the AI model runs on low-latency hardware. OpenAI announced a partnership with Cerebras last month, and as part of it, the new model now runs on its Wafer Scale Engine 3 AI accelerator that offers high-speed inference. 
Coming to performance, the AI giant shared internal benchmark evaluations to claim that on SWE-Bench Pro and Terminal-Bench 2.0, which measure agentic software engineering capability, Codex-Spark outperforms GPT-5.1-Codex-mini and falls slightly short of GPT-5.3-Codex. Given the faster output generation, however, this is a big leap. OpenAI said the AI model will also be available to a small set of design partners via the application programming interface (API) to help the company understand how developers intend to integrate it in their products. Notably, Codex-Spark will have its own rate limits, and usage will not count towards standard rate limits. The company said access will be expanded over the coming weeks.

[11]

OpenAI GPT-5.3 Codex Spark Reaches 1,000 Tokens per Second for Coding

GPT-5.3 Codex Spark, as overviewed by Prompt Engineering, is a specialized AI model designed for speed and efficiency in real-time coding and agentic tasks. Capable of processing up to 1,000 tokens per second, it achieves this remarkable performance through custom hardware developed in collaboration with Cerebras. While it operates with a smaller 128,000-token context window compared to other models, this trade-off allows it to prioritize rapid execution and cost-effectiveness, making it particularly well-suited for businesses and developers focused on high-demand, time-sensitive applications. This overview will cover key aspects of Codex Spark, including its custom hardware integration and how its speed-focused design compares to other models like Google's Gemini 3 Deep Think or MiniMax M2.5. Readers will also gain insights into its practical applications, such as powering agentic systems and accelerating real-time coding workflows, as well as the economic advantages it offers for businesses prioritizing efficiency over advanced reasoning. These details highlight Codex Spark's role in the growing trend toward specialized AI systems tailored to specific needs. Codex Spark is purpose-built to deliver exceptional speed and efficiency, making it a standout choice for real-time applications. Its defining features include: These features make Codex Spark particularly well-suited for tasks requiring rapid decision-making, such as agentic coding and sub-agent architectures. Its design reflects a deliberate trade-off, prioritizing speed and cost-effectiveness over advanced reasoning. Codex Spark's focus on speed comes with certain trade-offs. Its reasoning and intelligence capabilities are reduced compared to larger, general-purpose models. This makes it less suitable for complex, logic-driven tasks but highly effective for scenarios where speed and efficiency are critical. 
For instance, in agentic systems where sub-agents perform discrete, verifiable functions, Codex Spark's rapid token processing ensures efficient execution without unnecessary computational overhead. This trade-off reflects a broader trend in AI development: moving away from all-encompassing models toward specialized systems optimized for specific use cases. Rather than replacing larger models, Codex Spark is designed to complement them. It fills a niche where speed and cost-effectiveness take precedence over advanced reasoning capabilities, offering businesses a tailored solution for their unique needs. The release of Codex Spark underscores a growing trend in AI: the development of specialized models tailored to specific tasks. As businesses increasingly rely on AI for coding and agentic operations, specialized models provide a balance between performance and economic viability. These models are particularly valuable for tasks with clear, measurable outcomes, allowing businesses to achieve results while minimizing costs. This trend is evident across the AI landscape. For example: The diversification of AI capabilities reflects the industry's response to varied market demands. Each model targets a specific niche, allowing businesses to choose solutions that align with their operational priorities. Codex Spark's reliance on custom hardware highlights the growing importance of purpose-built systems in AI. The Wafer Scale Engine 3, developed by Cerebras, exemplifies how hardware innovation is driving advancements in speed and efficiency. By designing hardware tailored to specific tasks, companies can achieve significant gains in performance while reducing costs. This shift has also intensified competition in the hardware market. Established players like Nvidia now face challenges from specialized hardware providers, spurring rapid innovation. 
As AI models become more specialized, the demand for custom hardware solutions is expected to grow, further shaping the industry landscape. The integration of specialized hardware with AI models like Codex Spark demonstrates how collaborative innovation between software and hardware developers can unlock new levels of performance. This synergy is likely to play a critical role in the future of AI development. Codex Spark is positioned as a practical solution for businesses seeking fast, reliable, and cost-effective AI tools. Its speed and efficiency make it ideal for applications where rapid execution is more important than advanced reasoning. Key use cases include: From an economic perspective, Codex Spark offers significant advantages. By focusing on speed and efficiency, it reduces computational costs, making it an attractive option for businesses prioritizing cost-effectiveness over innovative capabilities. This aligns with the broader industry trend of balancing performance with affordability, making sure AI remains accessible for a wide range of applications. Codex Spark's emphasis on speed distinguishes it from other AI models. For example: While larger models offer superior reasoning and orchestration capabilities, they often come with higher costs and slower execution times. Codex Spark fills a critical gap by providing a fast, efficient alternative for specialized tasks, making it a practical choice for businesses with specific needs. The release of GPT-5.3 Codex Spark signals a pivotal moment in AI development, emphasizing the importance of specialized models and custom hardware. As the industry evolves, further advancements in both AI capabilities and hardware design are expected. The focus on speed, efficiency, and economic viability will likely drive innovation, allowing businesses to use AI for an increasingly diverse range of applications. 
Looking ahead, the integration of specialized models like Codex Spark with advanced hardware solutions will redefine the boundaries of AI. Whether it's powering real-time coding tasks, enabling agentic systems, or optimizing cost-effectiveness, these developments will shape the future of AI and its role in the global economy. By addressing specific needs with precision, specialized AI models are poised to become indispensable tools in the modern technological landscape.

[12]

OpenAI Launches New Version of Codex with a Dedicated Processor Underneath

OpenAI has released a light-weight version of Codex, its agentic coding tool, which it has described as a smaller version of what it had launched earlier this month. The latest version, the company claims, provides faster inference, and it does this through a dedicated processor that it acquired from US-based AI company Cerebras. This integration marks a new addition to the OpenAI story in the physical infrastructure space. Incidentally, the partnership came into existence last month. The new light-weight version is called the GPT-5.3 Codex Spark. In a note on January 14, OpenAI said integrating Cerebras into "our mix of compute solutions is all about making our AI respond much faster. When you ask a hard question, generate code, create an image, or run an AI agent, there is a loop happening behind the scenes: you send a request, the model thinks, and it sends something back. When AI responds in real time, users do more with it, stay longer, and run higher-value workloads." OpenAI signed a $10 billion multi-year partnership with Cerebras, and the latest launch is described as the "first milestone" in this association. The company says Spark is designed for fast and real-time collaboration and rapid iteration. "OpenAI's compute strategy is to build a resilient portfolio that matches the right systems to the right workloads. Cerebras adds a dedicated low-latency inference solution to our platform. That means faster responses, more natural interactions, and a stronger foundation to scale real-time AI to many more people," said Sachin Katti of OpenAI. In a post on X, OpenAI CEO Sam Altman gave an advance preview suggesting that the new model is special. "We have a special thing launching to Codex users on the Pro plan later today," Altman tweeted. "It sparks joy for me," he noted. The company said in a statement that Spark is designed for the lowest possible latency on Codex. 
"Codex-Spark is the first step toward a Codex that works in two complementary modes: real-time collaboration when you want rapid iteration, and long-running tasks when you need deeper reasoning and execution," the release said. The release also matters to Cerebras, which has been around for over a decade. In recent times, the company has seen renewed interest from the AI industry which led to a billion dollar investment last week at a $23 billion valuation. "What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible -- new interaction patterns, new use cases, and a fundamentally different model experience," Sean Lie, CTO and co-founder of Cerebras, said in a statement.

[13]

GPT-5.3 Codex Spark: Is 15x speed worth the reasoning trade-off?

In a move that caught the developer community off-guard on February 12, 2026, OpenAI launched GPT-5.3 Codex Spark. This isn't just another incremental update; it's a radical departure from the "bigger is better" philosophy. Powered by a high-stakes partnership with Cerebras, Spark is a lightweight, ultra-low-latency variant of the flagship GPT-5.3 Codex. The headline figure? It generates over 1,000 tokens per second, roughly 15 times faster than its parent model. But as with any specialized tool, that speed comes at a cost. For the first time in its production history, OpenAI has moved away from NVIDIA GPUs for a specific tier of its service. Spark runs on the Cerebras Wafer Scale Engine 3 (WSE-3). By keeping the entire model on a single, massive silicon wafer, Cerebras eliminates the communication lag between traditional chips. Combined with new persistent WebSocket connections, OpenAI has slashed client-server roundtrip overhead by 80%. The result is a model that feels less like a "request-response" tool and more like an extension of your own keyboard. The "reasoning trade-off" isn't just marketing speak; it's visible in the data. While Spark holds its own in standard tasks, it falters when the logic gets dense. Deciding which model to deploy depends entirely on the specific "flow" of your current development task. You should lean on the flagship GPT-5.3 Codex for high-stakes architectural design, such as mapping out complex interactions between multiple files or microservices. Its superior reasoning capabilities make it the essential choice for identifying vulnerabilities in security-critical code or managing autonomous agents that need to run in the background for extended periods to solve deep-seated logic bugs. 
In these scenarios, the model's ability to "think" through a problem outweighs the need for raw generation speed. Conversely, you should switch to Spark when your workflow demands immediacy and rapid iteration. It is the ideal companion for rapid prototyping or "thinking out loud," where you want to see UI tweaks and boilerplate code manifest instantly. Spark's ultra-low latency also makes it perfect for the "grunt work" of coding, such as refactoring, renaming variables, or generating docstrings for a single function. Because you can interrupt its output mid-generation without the typical lag of larger models, it excels in interactive debugging, allowing you to "steer" the AI in real-time as it works through a solution. For developers in India looking to optimize their workflow, Spark represents a new "dual-mode" future. OpenAI's strategy is clear: use the heavy-hitter for the deep thinking, and use the Spark for the "grunt work" of daily coding. Currently, Spark is available as a research preview for ChatGPT Pro subscribers ($200/month) within the Codex app and VS Code extension. While the price of entry is high, for those whose productivity is capped by "AI wait times," the 15x speed boost might just be the best ROI of the year.

[14]

OpenAI unveils ultra-fast GPT 5.3 Codex Spark model for real-time coding

OpenAI has introduced GPT-5.3-Codex-Spark, a new AI model built specifically for real-time coding. The new model is currently rolling out as a research preview and is a smaller version of GPT-5.3-Codex. It can generate more than 1,000 tokens per second when running on low-latency hardware, allowing developers to see changes to their code almost immediately. The goal is to make AI-assisted programming feel like a live collaboration rather than a delayed response. This release is also the first milestone in OpenAI's partnership with Cerebras, which was announced in January. The Codex-Spark model runs on Cerebras' Wafer Scale Engine 3, a purpose-built AI accelerator designed to handle extremely fast inference workloads. Developers can collaborate with the model in real time, interrupting or redirecting it as it works, and iterate with near-instant responses. By default, the model makes small, targeted code edits and does not run tests unless instructed. OpenAI says it performs strongly on software engineering benchmarks while completing tasks significantly faster than its larger counterpart. Codex-Spark is currently text-only with a 128k context window and is said to be the first in a family of ultra-fast models. 'During the research preview, Codex-Spark will have its own rate limits and usage will not count towards standard rate limits. However, when demand is high, you may see limited access or temporary queuing as we balance reliability across users,' OpenAI explains. The model is rolling out to ChatGPT Pro users as a research preview through the Codex app, CLI, and VS Code extension. 
OpenAI says Codex-Spark is the first step toward a future where AI coding tools combine fast, interactive assistance with longer-running autonomous problem-solving, allowing developers to switch seamlessly between quick edits and deeper tasks. 'As we learn more with the developer community about where fast models shine for coding, we'll introduce even more capabilities, including larger models, longer context lengths, and multimodal input,' the AI firm added.


OpenAI released GPT-5.3-Codex-Spark, its first production AI model running on non-Nvidia hardware from Cerebras Systems. The lightweight coding model delivers over 1,000 tokens per second—roughly 15 times faster than its predecessor—using Cerebras' wafer-scale chips packed with 4 trillion transistors. The release marks the first milestone in OpenAI's $10 billion partnership with the AI chipmaker.

OpenAI Deploys First AI Coding Model on Non-Nvidia Hardware

OpenAI released GPT-5.3-Codex-Spark on Thursday, marking a significant shift in the company's infrastructure strategy. The new AI coding model represents OpenAI's first production deployment on non-Nvidia hardware, running instead on chips from Cerebras Systems [1]. The lightweight model delivers code at more than 1,000 tokens per second, which OpenAI reports is roughly 15 times faster than its predecessor [3]. To put this speed in context, OpenAI's fastest models on Nvidia hardware deliver significantly lower throughput: GPT-4o reaches roughly 147 tokens per second, o3-mini hits about 167, and GPT-4o mini clocks around 52 [1].


Codex-Spark is available as a research preview to ChatGPT Pro subscribers at $200 per month through the Codex app, command-line interface, and VS Code extension [1]. OpenAI is also rolling out API access to select design partners. The model ships with a 128,000-token context window and handles text only at launch.

Cerebras Hardware Partnership Powers Faster Inference

The release represents the first milestone in OpenAI's hardware partnership with Cerebras, announced last month as a multi-year agreement worth over $10 billion [2]. Codex-Spark runs on Cerebras' Wafer Scale Engine 3, the company's third-generation waferscale megachip decked out with 4 trillion transistors [2]. The dinner-plate-sized AI accelerators feature some of the world's fastest on-chip memory, using SRAM that is roughly 1,000 times faster than the HBM4 found on Nvidia's upcoming Rubin GPUs [5].


"Cerebras has been a great engineering partner, and we're excited about adding fast inference as a new platform capability," said Sachin Katti, head of compute at OpenAI [1]. Sean Lie, CTO and Co-Founder of Cerebras, emphasized the potential for discovering "new interaction patterns, new use cases, and a fundamentally different model experience" through fast inference [2].

Real-Time Coding Designed for Low-Latency Workflows

OpenAI built Codex-Spark specifically for real-time coding rather than the heavyweight agentic tasks handled by the full GPT-5.3-Codex model launched earlier this month [1]. The company tuned the model for speed over depth of knowledge, creating what it describes as a "daily productivity driver" for rapid prototyping [2]. OpenAI reduced overhead per client-server roundtrip by 80 percent, per-token overhead by 30 percent, and time-to-first-token by 50 percent through session initialization and streaming optimizations [3].
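To see how the three reported reductions might combine, here is a minimal sketch of a toy latency model. Only the percentage cuts (80, 30, and 50 percent) come from the reporting; the baseline millisecond values, the `Overheads` type, and the assumption that each roundtrip pays one time-to-first-token are hypothetical simplifications, not OpenAI's measurements or methodology.

```python
from dataclasses import dataclass

# Reductions reported for Codex-Spark's serving path.
ROUNDTRIP_CUT = 0.80    # overhead per client-server roundtrip
PER_TOKEN_CUT = 0.30    # per-token overhead
FIRST_TOKEN_CUT = 0.50  # time-to-first-token


@dataclass(frozen=True)
class Overheads:
    """Hypothetical latency components, all in milliseconds."""
    roundtrip_ms: float
    per_token_ms: float
    first_token_ms: float


def apply_reported_cuts(base: Overheads) -> Overheads:
    """Scale each component by the fraction remaining after its reported cut."""
    return Overheads(
        roundtrip_ms=base.roundtrip_ms * (1 - ROUNDTRIP_CUT),
        per_token_ms=base.per_token_ms * (1 - PER_TOKEN_CUT),
        first_token_ms=base.first_token_ms * (1 - FIRST_TOKEN_CUT),
    )


def total_overhead_ms(o: Overheads, roundtrips: int, tokens: int) -> float:
    """Toy model: every roundtrip pays roundtrip + first-token cost once,
    and every generated token pays the per-token overhead."""
    return roundtrips * (o.roundtrip_ms + o.first_token_ms) + tokens * o.per_token_ms


if __name__ == "__main__":
    # Made-up baseline, chosen only to make the arithmetic easy to follow.
    before = Overheads(roundtrip_ms=100.0, per_token_ms=1.0, first_token_ms=400.0)
    after = apply_reported_cuts(before)
    for label, o in (("before", before), ("after", after)):
        print(label, round(total_overhead_ms(o, roundtrips=3, tokens=1000), 1), "ms")
```

Because the cuts apply to different components, the overall saving depends on the mix: sessions with many short roundtrips would benefit most from the roundtrip and first-token reductions, while long generations are dominated by the per-token term.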


The model supports interruption and redirection mid-task, enabling tight iteration loops for developers who need to adjust instructions quickly [3]. It defaults to lightweight, targeted edits and doesn't automatically run tests unless requested. OpenAI says Cerebras' chips excel at assisting "workflows that demand extremely low latency" [2].

OpenAI Strategy to Diversify Hardware Suppliers Beyond Nvidia

The Cerebras deployment is part of OpenAI's broader push to diversify hardware suppliers and meet growing computing needs. OpenAI struck a blockbuster agreement in October with AMD to deploy 6 gigawatts' worth of GPUs over multiple years, and agreed to buy custom chips and networking components from Broadcom. An OpenAI spokesperson emphasized that the company's partnership with Nvidia remains "foundational" and that OpenAI is "anchoring on Nvidia as the core of our training and inference stack, while deliberately expanding the ecosystem around it".

OpenAI noted that "GPUs remain foundational across our training and inference pipelines and deliver the most cost effective tokens for broad usage" while Cerebras complements that foundation for low-latency workflows [5]. The company suggests that as Cerebras brings more compute online, it will bring larger models to the platform for users willing to pay a premium for high-speed inference [5].

Performance Benchmarks and Market Competition

On SWE-Bench Pro and Terminal-Bench 2.0, two benchmarks for evaluating software engineering ability, Codex-Spark reportedly outperforms the older GPT-5.1-Codex-mini while completing tasks in a fraction of the time [1]. The model delivers greater accuracy than GPT-5.1-Codex-mini in Terminal-Bench 2.0 while being much faster than the smarter GPT-5.3-Codex model [5]. The new model marks OpenAI's latest attempt to compete with AI rivals such as Alphabet's Google and Anthropic, which are vying for dominance in the rapidly growing market for AI coding assistants. Codex has more than 1 million weekly active users, OpenAI said.

Cerebras raised $1 billion in fresh capital at a valuation of $23 billion last week and has announced intentions to pursue an IPO [2]. Sam Altman hinted at the launch in a tweet, saying "We have a special thing launching to Codex users on the Pro plan later today. It sparks joy for me" [2].

Summarized by

Navi
