OpenAI strikes $10 billion AI chip deal with Cerebras to power faster ChatGPT responses

10 Sources

[1]

OpenAI signs deal, worth $10 billion, for compute from Cerebras | TechCrunch

OpenAI announced Wednesday that it had reached a multi-year agreement with AI chipmaker Cerebras. The chipmaker will deliver 750 megawatts of compute to the AI giant starting this year and continuing through the year 2028, Cerebras said. The deal is worth over $10 billion, a source familiar with the details told TechCrunch. Reuters also reported the deal size. Both companies said that the deal is about delivering faster outputs for OpenAI's customers. In a blog post, OpenAI said these systems would speed responses that currently require more time to process. Andrew Feldman, co-founder and CEO of Cerebras, said just as "broadband transformed the internet, real-time inference will transform AI." Cerebras has been around for over a decade but its star has risen significantly since the launch of ChatGPT in 2022 and the AI boom that followed. The company claims its systems, built with its chips designed for AI use, are faster than GPU-based systems (such as Nvidia's offerings). Cerebras filed for an IPO in 2024 but, since then, has pushed it back a number of times. In the meantime, the company has continued to raise large amounts of money. On Tuesday, it was reported that the company was in talks to raise another billion dollars at a $22 billion valuation. It's also worth noting that OpenAI's CEO, Sam Altman, is already an investor in the company, and that OpenAI once considered acquiring it. "OpenAI's compute strategy is to build a resilient portfolio that matches the right systems to the right workloads," said Sachin Katti of OpenAI. "Cerebras adds a dedicated low-latency inference solution to our platform. That means faster responses, more natural interactions, and a stronger foundation to scale real-time AI to many more people."

[2]

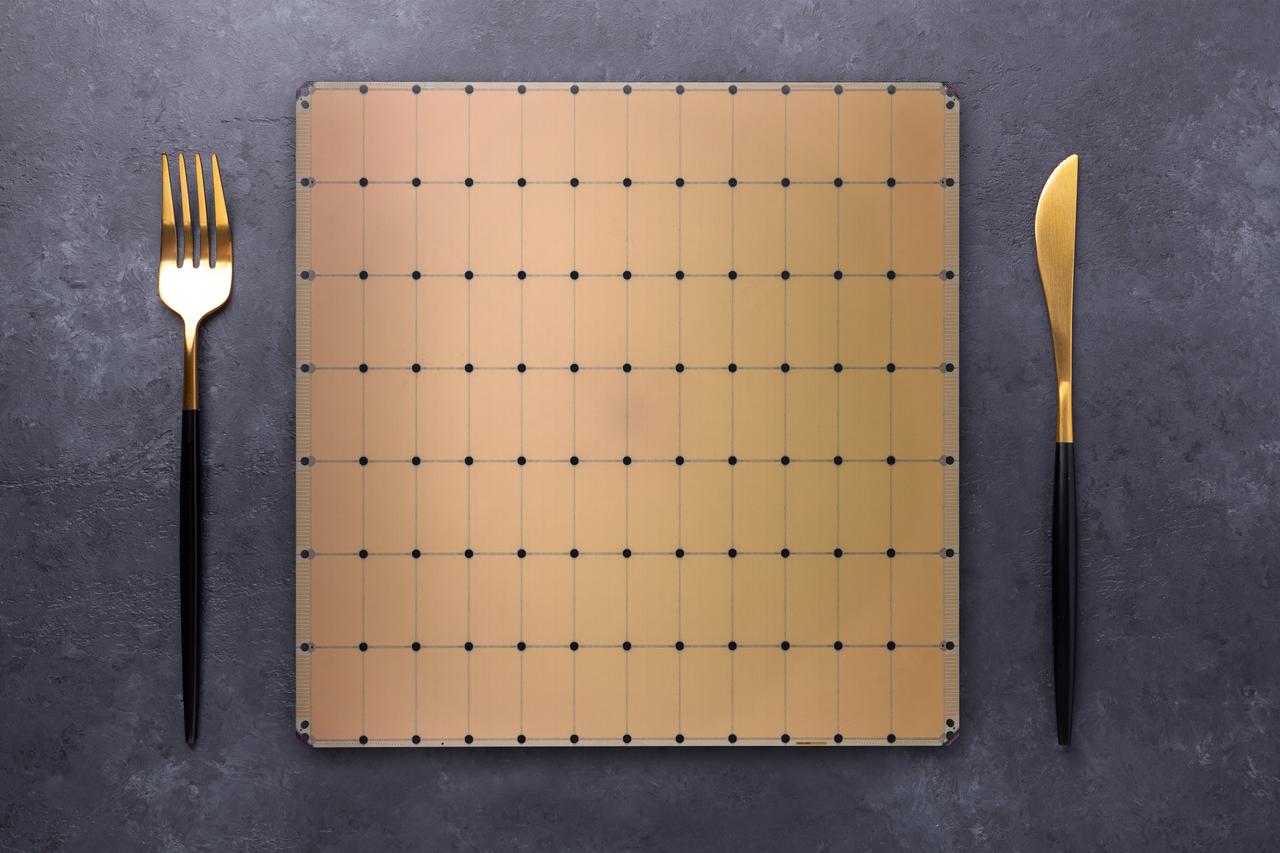

OpenAI to serve ChatGPT on Cerebras' AI dinner plates

SRAM-heavy compute architecture promises real-time agents, extended reasoning capabilities to bolster Altman's valuation

OpenAI says it will deploy 750 megawatts' worth of Nvidia competitor Cerebras' dinner-plate-sized accelerators through 2028 to bolster its inference services. The deal, which will see Cerebras take on the risk of building and leasing datacenters to serve OpenAI, is valued at more than $10 billion, sources familiar with the matter tell El Reg. By integrating Cerebras' wafer-scale compute architecture into its inference pipeline, OpenAI can take advantage of the chip's massive SRAM capacity to speed up inference. Each of the chip startup's WSE-3 accelerators measures in at 46,225 mm² and is equipped with 44 GB of SRAM. Compared to the HBM found on modern GPUs, SRAM is several orders of magnitude faster. While a single Nvidia Rubin GPU can deliver around 22 TB/s of memory bandwidth, Cerebras' chips achieve nearly 1,000x that at 21 petabytes a second. All that bandwidth translates into extremely fast inference performance. Running models like OpenAI's gpt-oss 120B, Cerebras' chips can purportedly achieve single-user performance of 3,098 tokens a second, compared with 885 tok/s for competitor Together AI, which uses Nvidia GPUs. In the age of reasoning models and AI agents, faster inference means models can "think" for longer without compromising on interactivity. "Integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster. When you ask a hard question, generate code, create an image, or run an AI agent, there is a loop happening behind the scenes: you send a request, the model thinks, and it sends something back," OpenAI explained in a recent blog post. "When AI responds in real time, users do more with it, stay longer, and run higher-value workloads." However, Cerebras' architecture has some limitations. 
SRAM isn't particularly space-efficient, which is why, despite the chip's impressive size, it only packs about as much memory as a six-year-old Nvidia A100 PCIe card. Because of this, larger models need to be parallelized across multiple chips, each of which is rated for a prodigious 23 kW of power. Depending on the precision used, the number of chips required can be considerable. At 16-bit precision, which Cerebras has historically preferred for its higher-quality outputs, every billion parameters ate up 2 GB of SRAM capacity. As a result, even modest models like Llama 3 70B required at least four of its CS-3 accelerators to run. It's been nearly two years since Cerebras unveiled a new wafer-scale accelerator, and since then the company's priorities have shifted from training to inference. We suspect the chip biz's next chip may dedicate a larger area to SRAM and add support for modern block floating point data types like MXFP4, which should dramatically increase the size of the models that can be served on a single chip. Having said that, the introduction of a model router with the launch of OpenAI's GPT-5 last summer should help mitigate Cerebras' memory constraints. The approach ensures that the vast majority of requests fielded by ChatGPT are fulfilled by smaller cost-optimized models. Only the most complex queries run on OpenAI's largest and most resource-intensive models. It's also possible that OpenAI will run only a portion of its inference pipeline on Cerebras' kit. Over the past year, the concept of disaggregated inference has taken off. In theory, OpenAI could run compute-heavy prompt processing on AMD or Nvidia GPUs and offload the bandwidth-constrained token generation phase to Cerebras' SRAM-packed accelerators. Whether this is actually an option will depend on Cerebras. "This is a Cloud service agreement. 
We build out datacenters with our equipment for OpenAI to power their models with the fastest inference," a company spokesperson told El Reg when asked about the possibility of using its CS-3s in a disaggregated compute architecture. This doesn't mean it won't happen, but it would be on Cerebras to deploy the GPU systems required to support such a configuration in its datacenters alongside its waferscale accelerators. ®
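The chip-count claim above is simple arithmetic on the quoted figures: at 16-bit precision, 70 billion parameters need 70 × 2 GB = 140 GB, and 140 / 44 ≈ 3.2 rounds up to four chips. The minimal sketch below reproduces that under stated assumptions: it counts weight storage only (no KV cache or activations), uses the 44 GB of SRAM per WSE-3 quoted above, and treats 4.25 bits per parameter for an MXFP4-style format (4-bit elements plus one shared 8-bit scale per 32-element block) as an illustrative assumption, not anything Cerebras has announced. The function names are hypothetical.

```python
import math

# Figure quoted by The Register: 44 GB of on-chip SRAM per WSE-3.
SRAM_PER_CHIP_GB = 44.0


def weight_footprint_gb(params_billions: float, bits_per_param: float) -> float:
    """Weight storage only: 1e9 params * (bits / 8) bytes = bits / 8 GB per billion."""
    return params_billions * bits_per_param / 8


def min_chips(params_billions: float, bits_per_param: float,
              sram_per_chip_gb: float = SRAM_PER_CHIP_GB) -> int:
    """Lower bound on chips needed just to hold the weights in SRAM.

    Ignores KV cache, activations and any SRAM reserved for other uses,
    so a real deployment needs at least this many chips, possibly more.
    """
    return math.ceil(weight_footprint_gb(params_billions, bits_per_param) / sram_per_chip_gb)


if __name__ == "__main__":
    # Llama 3 70B at 16-bit: 140 GB of weights -> 140 / 44 ≈ 3.2 -> 4 chips.
    print(weight_footprint_gb(70, 16), min_chips(70, 16))
    # Same model in an assumed MXFP4-style format (~4.25 bits per parameter).
    print(weight_footprint_gb(70, 4.25), min_chips(70, 4.25))
```

Because the sketch ignores everything except weights, its result is a floor on chip count rather than a deployment estimate.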

[3]

OpenAI Forges $10 Billion Deal With Cerebras for AI Computing

The deal is valued at more than $10 billion, according to people familiar with the matter, and is part of OpenAI's efforts to expand its computing capacity and unlock "the next generation of use cases and onboard the next billion users to AI". OpenAI signed a multiyear deal to use hardware from Cerebras Systems Inc. for 750 megawatts' worth of computing power, an alliance that will support the company's rapid build-out of AI infrastructure. OpenAI will use Cerebras as a supplier of computing to get faster response times when running AI models, according to a joint statement Wednesday. The infrastructure will be built in multiple stages "through 2028" and hosted by Cerebras, the companies said. Though terms weren't disclosed, people familiar with the matter put the size of the deal at more than $10 billion. "This partnership will make ChatGPT not just the most capable but also the fastest AI platform in the world," said Greg Brockman, OpenAI co-founder and president. This speed will help unlock "the next generation of use cases and onboard the next billion users to AI," he said. Cerebras, a semiconductor startup, has pioneered a unique approach to processing information using huge chips. It's seeking widespread adoption of its technology in a bid to challenge market leader Nvidia Corp. and also operates data centers to showcase the capabilities of its components and bring in recurring revenue. High-profile wins like the OpenAI agreement take Cerebras closer to tapping into the tens of billions of dollars being poured into new infrastructure for artificial intelligence computing. For OpenAI, the pact is just the latest massive data center deal aimed at expanding its computing capacity. It's part of an unprecedented bet by the technology industry that runaway demand for power-hungry AI tools will continue unabated. 
In September, Nvidia announced it would invest as much as $100 billion in OpenAI to build AI infrastructure and new data centers with a capacity of at least 10 gigawatts of power. In October, Advanced Micro Devices Inc. said it would deploy 6 gigawatts' worth of graphics processing units over multiple years for OpenAI. A gigawatt is about the capacity of a conventional nuclear power plant. OpenAI, the maker of ChatGPT and other AI tools, is also developing its own chip with Broadcom Inc. Cerebras and OpenAI have been exploring the idea of collaborating since 2017, according to the statement. Recent work by Cerebras in support of OpenAI's GPT-OSS-120B model showed it running 15 times faster than "conventional hardware," the companies said. Cerebras founder and Chief Executive Officer Andrew Feldman said that AI's inference stage, the process of getting models to respond to queries, is crucial to the advancement of the technology and that's where his products shine. "They're choosing a new and different architecture because it's faster and drives value for them," Feldman said in an interview. "This transaction launches us into the big league and launches high-speed inference into the mainstream." His company is also in talks to raise money ahead of a potential initial public offering. Cerebras has discussed a new funding round of roughly $1 billion, a person familiar with the matter said earlier this week. The round would value the startup at $22 billion before the investment, said the person. The Wall Street Journal previously reported on the $10 billion OpenAI-Cerebras deal.

[4]

OpenAI has committed billions to recent chip deals. Some big names have been left out

In November, following Nvidia's latest earnings beat, CEO Jensen Huang boasted to investors about his company's position in artificial intelligence and said about the hottest startup in the space, "Everything that OpenAI does runs on Nvidia today." While it's true that Nvidia maintains a dominant position in AI chips and is now the most valuable company in the world, competition is emerging, and OpenAI is doing everything it can to diversify as it pursues a historically aggressive expansion plan. On Wednesday, OpenAI announced a $10 billion deal with chipmaker Cerebras, a relatively nascent player in the space but one that's angling for the public market. It was the latest in a string of deals between OpenAI and the companies making the processors needed to build large language models and run increasingly sophisticated workloads. Last year, OpenAI committed more than $1.4 trillion to infrastructure deals with companies including Nvidia, Advanced Micro Devices and Broadcom, en route to commanding a $500 billion private market valuation. As OpenAI races to meet anticipated demand for its AI technology, it has signaled to the market that it wants as much processing power as it can find. Here are the major chip deals that OpenAI has signed as of January, and potential partners to keep an eye on in the future.

[5]

Cerebras scores OpenAI deal worth over $10 billion ahead of AI chipmaker's IPO

AI chipmaker Cerebras has signed a deal with OpenAI to deliver 750 megawatts of computing power through 2028, according to a blog post Wednesday by the maker of ChatGPT. The arrangement is worth over $10 billion, according to people close to the company. The deal will help diversify Cerebras away from the United Arab Emirates' G42, which accounted for 87% of revenue in the first half of 2024. Cerebras has built a large processor that can train and run generative artificial intelligence models. That makes it a challenger to Nvidia, which sells large quantities of its chips to cloud providers such as Amazon and Microsoft -- those companies then rent the graphics cards to clients by the hour. Nvidia became the first company to reach a $5 trillion market capitalization in October, as investors sought to capitalize on further AI growth. In December, Cerebras rival Groq said Nvidia had signed a non-exclusive licensing agreement that would result in some employees moving to Nvidia. Groq's cloud service is not part of the deal, which CNBC reported is worth $20 billion in cash, making it Nvidia's largest transaction to date. "Cerebras adds a dedicated low-latency inference solution to our platform," Sachin Katti, who works on compute infrastructure at OpenAI, wrote in the blog. "That means faster responses, more natural interactions, and a stronger foundation to scale real-time AI to many more people." The deal comes months after OpenAI worked with Cerebras to ensure that its gpt-oss open-weight models would work smoothly on Cerebras silicon, alongside chips from Nvidia and Advanced Micro Devices. Cerebras filed for an initial public offering in September 2024, revealing that revenue in the second quarter of that year approached $70 million, up from about $6 million in the second quarter of 2023. The company's net loss swelled to almost $51 million, from $26 million a year earlier. 
Investment banks that typically participate in the top technology IPOs were missing from the prospectus, and the company used an auditor other than the so-called Big Four accounting firms. Cerebras withdrew the paperwork in October, days after announcing a $1.1 billion round of funding that valued it at $8.1 billion. The company said it pulled the prospectus because details were out of date. "Given that the business has improved in meaningful ways we decided to withdraw so that we can re-file with updated financials, strategy information including our approach to the rapidly changing AI landscape," Cerebras' co-founder and CEO Andrew Feldman wrote in a LinkedIn post. A revised filing will provide a better explanation of the business to potential investors, he wrote. Cerebras' customer list includes Cognition, Hugging Face, IBM and Nasdaq, and in March 2025, the company said the Committee on Foreign Investment in the United States had approved Cerebras' request to sell shares to G42. The Wall Street Journal reported on the deal earlier on Wednesday.

[6]

OpenAI commits to buying over $10B worth of AI compute capacity from Cerebras Systems - SiliconANGLE

OpenAI Group PBC has signed a multibillion-dollar deal with the artificial intelligence chipmaker Cerebras Systems Inc. to secure much-needed compute capacity for its large language models. The company said it will use Cerebras' chips to power ChatGPT. It has agreed to purchase up to 750 megawatts of computing power over the next three years in an agreement that's valued at more than $10 billion, according to anonymous sources that spoke to the Wall Street Journal. The deal is a big boost for Cerebras, and will enable the chipmaker to diversify its revenue base away from its major customer, the United Arab Emirates-based AI firm G42, which accounted for 87% of its sales in the first half of 2024. Cerebras is the creator of a large, dinner-plate-sized processor that's designed to train and run LLMs at scale. It's widely considered to be one of the most intriguing challengers to Nvidia Corp., which dominates the AI industry, selling massive amounts of graphics processing units to cloud providers such as Amazon Web Services Inc. and Microsoft Corp. The deal is notable because OpenAI Chief Executive Sam Altman personally invested in Cerebras during its early years, and explored a partnership with the chipmaker as early as 2017. OpenAI desperately needs as much computing capacity as it can get its hands on, as it prepares itself for a new phase of growth. The company said ChatGPT currently has more than 900 million weekly users, and it's facing a drastic shortage of compute capacity to keep up with the chatbot's growth. It's not just capacity that's an issue for OpenAI, though: the company also wants to find cheaper and more efficient alternatives to Nvidia's GPUs, which are some of the most expensive chips on the market today. Last year, the chatbot maker revealed it was working with Broadcom Inc. 
on the development of a customized AI processor, and has also signed a deal with Advanced Micro Devices Inc. to use that company's upcoming MI450 chips. The partnership with Cerebras has been in the pipeline for a while. Cerebras CEO Andrew Feldman told CNBC that his company has been working with OpenAI for the last couple of months to ensure that OpenAI's open-weight gpt-oss models run perfectly on Cerebras processors. During that optimization work, the companies' engineering teams began having "technical conversations" that led to a term sheet being signed last year, just before Thanksgiving, the CEO said. According to Feldman, Cerebras has more than enough capacity to cater to OpenAI's needs. He said the company has multiple data centers in the U.S. and globally, and is continuing to expand its footprint both at home and abroad. OpenAI reportedly first looked at using Cerebras' chips as early as 2017, shortly after Altman first invested in the chipmaker, but it's not clear why it chose not to do so at the time. Cerebras has raised $1.8 billion in funding to date, with its most prominent investors including G42, Benchmark, Fidelity Management & Research Co. and Atreides Management. However, it's looking to raise much more than this. Just yesterday, a report by The Information claimed that the company is currently holding discussions over a new $1 billion raise that would lift its valuation to more than $22 billion. Although it has raked in tons of cash from investors, Cerebras has struggled to make much of an impact on Nvidia's dominance of the AI industry. When it first filed to go public in September 2024, it disclosed that the vast majority of its revenue came from a single customer, G42. Cerebras ultimately decided to abandon its listing plans and instead raised $1.1 billion in a private funding round, but there are rumors that it's now looking to file for an initial public offering once again, perhaps in the next few months. 
During the last year, Cerebras has reportedly expanded its customer base quite a bit, signing deals with IBM Corp., Meta Platforms Inc. and Hugging Face Inc. The deal with OpenAI will help make a Cerebras IPO look even more attractive, although some analysts have raised concerns over the AI model maker's ability to pay for all of the computing resources it has committed to buying. Last year, it generated revenue of approximately $13 billion, but that number is dwarfed by the $600 billion in cloud contracts it has signed with Amazon, Microsoft, Oracle Corp. and others. Altman has pointed out that the deals are phased over time, and will be paid for with future revenue growth. However, it will probably be investors that end up footing the bill, at least for the foreseeable future. Last month, reports emerged that OpenAI is seeking to raise at least $10 billion, if not more, from Amazon.com Inc., in a round that could lift its value to a staggering $830 billion.

[7]

OpenAI signs $10 billion computing deal with Nvidia challenger Cerebras - The Economic Times

OpenAI will purchase up to 750 megawatts of computing power over three years from chipmaker Cerebras as the ChatGPT maker looks to pull ahead in the AI race and meet the growing demand, the two companies said on Wednesday. This deal is worth more than $10 billion over the life of the contract, according to a source familiar with the matter. The ChatGPT maker plans to use the systems built by Cerebras to power its popular chatbot in what is the latest in a string of multi-billion-dollar deals struck by OpenAI. Cerebras Chief Executive Andrew Feldman said the two companies began talks last August after Cerebras demonstrated that OpenAI's open-source models could run more efficiently on its chips than on traditional GPUs. After months of negotiations, the companies reached an agreement under which Cerebras will sell cloud services powered by its chips to OpenAI, focusing on inference and reasoning models, which typically take time to "think" before generating responses. As part of the deal, Cerebras will build or lease data centers filled with its chips, while OpenAI will pay to use Cerebras' cloud services to run inference for its AI products. The capacity will come online in multiple tranches through 2028. "Integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster," OpenAI said in a post on its website. The deal underscores the industry's strong appetite for computing power to run inference - the process by which models respond to queries - as companies race to build reasoning models and applications to drive adoption. The tie-up will be key to Cerebras' latest efforts in going public, as it will help Cerebras diversify its revenue away from UAE-based tech firm G42, which has been both an investor and one of its largest customers. 
Founded in 2015, Cerebras is known for its wafer-scale engines, chips designed to accelerate training and inference for large AI models, competing with offerings from Nvidia and other AI chipmakers. Sam Altman, CEO at OpenAI, is an early investor in Cerebras. Reuters reported last month that Cerebras was preparing to file for an initial public offering, targeting a listing in the second quarter of this year. This marks the company's second attempt at the public market. It first filed paperwork for an IPO in 2024, before postponing and ultimately withdrawing in October. OpenAI is laying the groundwork for its own IPO that could value it at up to $1 trillion, Reuters had also reported. CEO Sam Altman had said last year that OpenAI is committed to spending $1.4 trillion to develop 30 gigawatts of computing resources, enough to power roughly 25 million U.S. homes. While companies commit hefty amounts to the booming technology and valuations soar, investors and experts have raised concerns that the industry might be turning into a bubble reminiscent of the dotcom boom and bust.

[8]

OpenAI Reduces NVIDIA GPU Reliance with Faster Cerebras Chips

What if ChatGPT could be 100 times faster, responding to your queries almost instantaneously? That's not just a distant dream; it's the bold vision behind OpenAI's new $10 billion partnership with Cerebras Systems. In this breakdown, Matthew Berman walks through how this collaboration is set to transform AI performance by using Cerebras' specialized chip technology. Unlike traditional GPUs, these chips are purpose-built for AI inference, promising unprecedented speed and efficiency. The implications are staggering: faster AI responses, smoother user experiences, and a major shift in the hardware landscape that could challenge Nvidia's long-standing dominance. This explainer unpacks the key details of the OpenAI-Cerebras partnership and why it's a pivotal moment for the future of AI. You'll discover how Cerebras' innovative hardware, capable of processing over 3,000 tokens per second, is reshaping the way AI systems scale and perform. But it's not just about speed; this move signals a broader industry trend toward specialized solutions that prioritize energy efficiency and real-time processing. Whether you're curious about the tech behind the headlines or what this means for the AI services you use every day, this story offers a fascinating glimpse into the next chapter of AI evolution. The partnership between OpenAI and Cerebras is a strategic response to the escalating computational requirements of modern AI systems. Over the next three years, Cerebras will provide OpenAI with 750 megawatts of compute power, a substantial increase that will enable faster AI inference, improved scalability, and enhanced performance for tools like ChatGPT. This collaboration also allows OpenAI to diversify its hardware ecosystem, reducing its dependency on Nvidia. As Nvidia continues to dominate the AI hardware market, this move signals a broader industry trend toward exploring alternative solutions to address the limitations of traditional GPU-based systems. 
By adopting Cerebras' specialized chips, OpenAI positions itself to meet the growing demand for AI services while maintaining its competitive edge. Specialized chips, such as those developed by Cerebras, are rapidly emerging as the preferred choice for AI inference tasks. Unlike general-purpose GPUs, which are optimized for training large AI models, specialized chips are specifically designed to handle inference workloads, where speed and efficiency are paramount. This shift toward specialized hardware reflects the industry's focus on optimizing AI systems for real-time user interactions, allowing faster response times and improved scalability. Cerebras' chips stand out due to their innovative design and exceptional performance capabilities. These chips are capable of processing over 3,000 tokens per second, significantly outperforming traditional GPUs in inference tasks. Their unique features include: By adopting this technology, OpenAI can enhance the responsiveness and reliability of tools like ChatGPT, delivering a smoother and faster user experience. This improvement is particularly significant as AI systems become more integrated into everyday applications, from customer service to content generation. AI inference has become a critical revenue stream for companies like OpenAI. Unlike model training, which is a one-time process, inference generates continuous revenue as users interact with AI systems. Increasing inference capacity offers several strategic advantages: This dual focus on inference and training positions OpenAI to maintain its leadership in the competitive AI industry, where the ability to scale efficiently is becoming a key differentiator. The OpenAI-Cerebras partnership reflects a broader trend toward specialized hardware in the AI sector. As AI systems grow more complex and resource-intensive, scalability and efficiency are becoming critical priorities. 
This shift has several far-reaching implications: These developments underscore the importance of hardware innovation in shaping the future of AI, as companies strive to meet the increasing demands of users and applications. For users, the partnership between OpenAI and Cerebras promises tangible benefits, including faster response times and improved performance of AI models. As specialized chips become more widely adopted, the efficiency and scalability of AI systems will continue to improve, unlocking new applications and use cases across various industries. Looking ahead, this collaboration could deepen, potentially leading to an acquisition or other strategic developments. Regardless of the outcome, the partnership marks a significant milestone in the evolution of the AI industry, paving the way for a new era of innovation driven by specialized hardware. This shift not only enhances the capabilities of AI systems but also sets the stage for broader adoption and integration of AI into everyday life.

[9]

Nvidia, AMD shares dip as OpenAI partners with Cerebras By Investing.com

Investing.com -- Nvidia (NASDAQ:NVDA) and AMD (NASDAQ:AMD) shares edged lower late Wednesday after OpenAI announced a multibillion-dollar computing partnership with AI chip startup Cerebras. The partnership, valued at more than $10 billion according to the Wall Street Journal, will integrate Cerebras' low-latency inference capabilities into OpenAI's computing infrastructure through 2028. Cerebras specializes in purpose-built AI systems designed to accelerate outputs from AI models using a single giant chip architecture. "OpenAI's compute strategy is to build a resilient portfolio that matches the right systems to the right workloads. Cerebras adds a dedicated low-latency inference solution to our platform," said Sachin Katti of OpenAI in the announcement. The news comes amid broader tech weakness Wednesday, with the Nasdaq 100 down 1.5%. Nvidia shares were down 2% while AMD was trading slightly higher before the announcement. Both stocks initially dipped following the headline but quickly recovered most of those losses. Cerebras' technology focuses on reducing latency in AI responses by eliminating bottlenecks that slow inference on conventional hardware. The partnership will be implemented in phases across various workloads, with capacity coming online in multiple tranches through 2028. Andrew Feldman, co-founder and CEO of Cerebras, stated, "Just as broadband transformed the internet, real-time inference will transform AI, enabling entirely new ways to build and interact with AI models." This article was generated with the support of AI and reviewed by an editor.

[10]

OpenAI to buy compute capacity from Cerebras in latest AI deal

Jan 14 (Reuters) - OpenAI said on Wednesday it has agreed to purchase up to 750 megawatts of computing power over three years from Cerebras as the ChatGPT maker looks to pull ahead in the AI race and capitalize on growing demand. The deal is worth more than $10 billion, the Wall Street Journal reported, citing people familiar with the matter. The ChatGPT maker plans to use the systems built by Cerebras to power its popular chatbot in what is the latest in a string of multi-billion dollar deals struck by OpenAI. The capacity will come online in multiple tranches through 2028, OpenAI said in a post on its website. (Reporting by Zaheer Kachwala in Bengaluru; Editing by Alan Barona)

OpenAI has signed a multi-year agreement with AI chipmaker Cerebras worth over $10 billion to deliver 750 megawatts of computing power through 2028. The deal aims to accelerate ChatGPT's response times and support real-time AI applications, while reducing OpenAI's reliance on Nvidia's GPU-based systems. Cerebras will build and lease dedicated datacenters featuring its wafer-scale accelerators.

OpenAI and Cerebras Forge Massive Computing Partnership

OpenAI announced Wednesday that it reached a multi-year agreement with AI chipmaker Cerebras, in a deal worth more than $10 billion, to deliver 750 megawatts of computing power starting this year and continuing through 2028 [1]. The partnership marks a strategic move by OpenAI to diversify its supply chain beyond traditional GPU providers and accelerate inference capabilities for ChatGPT users.

According to people familiar with the matter, the deal is valued at more than $10 billion [3]. Cerebras will take on the risk of building and leasing datacenters equipped with its wafer-scale AI accelerators to serve OpenAI's inference workloads [2]. The AI infrastructure will be deployed in multiple stages, with Cerebras hosting the systems dedicated to delivering low-latency responses.

Why Speed Matters for Real-Time AI Applications

Both companies emphasized that the partnership centers on delivering faster outputs for OpenAI's customers. "Integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster," OpenAI explained in a blog post [2]. When users ask complex questions, generate code, create images, or run AI agents, a loop runs behind the scenes: the model processes each request and sends a response back, and for agents this can happen several times per task, so the speed of every pass adds up.

Andrew Feldman, co-founder and CEO of Cerebras, drew an analogy: "Just as broadband transformed the internet, real-time inference will transform AI" [1]. He emphasized that inference, the stage in which a trained model responds to queries, is crucial to AI's advancement, and that this is where Cerebras' products excel. Recent work showed Cerebras running OpenAI's gpt-oss-120b model 15 times faster than conventional hardware [3].

Sachin Katti of OpenAI stated that "Cerebras adds a dedicated low-latency inference solution to our platform. That means faster responses, more natural interactions, and a stronger foundation to scale real-time AI to many more people" [1]. In the age of reasoning models and AI agents, faster inference means models can "think" for longer without compromising on interactivity [2].
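To make the loop and the "think for longer" point concrete, here is a minimal Python sketch of a latency model. It assumes that decode time dominates each round trip and that every step adds a fixed overhead for network, tool execution, and prompt processing. The `Step` class, both functions, the five-step task, the 0.2-second overhead, and the 1,000 and 3,000 tokens-per-second rates are hypothetical illustration values, not figures from OpenAI or Cerebras.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One round trip in a hypothetical agent loop."""
    reasoning_tokens: int  # hidden "thinking" tokens the model generates
    output_tokens: int     # visible tokens sent back (tool call or answer)


def loop_latency(steps: list[Step], tokens_per_s: float, overhead_s: float) -> float:
    """Total seconds for the loop, assuming decode time dominates and each
    step adds a fixed overhead (network, tool execution, prefill)."""
    total = 0.0
    for step in steps:
        generated = step.reasoning_tokens + step.output_tokens
        total += overhead_s + generated / tokens_per_s
    return total


def thinking_budget(latency_budget_s: float, tokens_per_s: float, overhead_s: float) -> int:
    """Tokens one step can generate without exceeding a latency budget."""
    usable_s = max(0.0, latency_budget_s - overhead_s)
    return int(usable_s * tokens_per_s)


# Hypothetical five-step agent task: 400 reasoning + 100 output tokens per step.
task = [Step(400, 100)] * 5
for rate in (1_000.0, 3_000.0):  # illustrative decode rates in tokens/s
    print(f"{rate:>6.0f} tok/s: loop {loop_latency(task, rate, 0.2):.2f} s, "
          f"budget in 2 s: {thinking_budget(2.0, rate, 0.2)} tokens")
```

Under these assumptions, tripling the decode rate both shortens the whole loop and roughly triples the number of reasoning tokens a single step can afford inside the same latency budget. Real systems add prefill time, queuing, and batching effects that this model ignores.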

Technical Advantages of Cerebras' Wafer-Scale Architecture

By integrating Cerebras' wafer-scale architecture into its inference pipeline, OpenAI can take advantage of the chip's large on-chip SRAM to speed up inference. Each WSE-3 accelerator measures 46,225 mm² and carries 44 GB of SRAM [2]. SRAM is several orders of magnitude faster than the HBM found on modern GPUs: while a single Nvidia Rubin GPU delivers around 22 TB/s of memory bandwidth, a Cerebras chip achieves nearly 1,000 times that, at 21 petabytes per second [2].
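The "nearly 1,000 times" figure can be checked from the two reported bandwidths. The Python sketch below also computes a rough memory-bandwidth ceiling on single-stream decode speed, assuming each generated token must read a fixed number of weight bytes from memory and that nothing else limits throughput. The 10 GB-per-token value and the `decode_ceiling` helper are hypothetical, not the footprint or method of any particular model or vendor, and the ceiling ignores compute, KV-cache reads, and communication between chips.

```python
TB = 1e12  # bytes in a terabyte (decimal)
PB = 1e15  # bytes in a petabyte (decimal)

rubin_bw = 22 * TB  # reported Nvidia Rubin GPU memory bandwidth, bytes/s
wse3_bw = 21 * PB   # reported Cerebras WSE-3 SRAM bandwidth, bytes/s


def decode_ceiling(bandwidth_bytes_per_s: float, bytes_per_token: float) -> float:
    """Upper bound on single-stream tokens/s if every token must re-read
    bytes_per_token of weights and memory bandwidth is the only limit."""
    return bandwidth_bytes_per_s / bytes_per_token


ratio = wse3_bw / rubin_bw
print(f"bandwidth ratio: {ratio:.0f}x")

# Hypothetical: 10 GB of weights read per generated token.
weights_per_token = 10e9
for name, bw in (("Rubin GPU", rubin_bw), ("WSE-3", wse3_bw)):
    print(f"{name}: <= {decode_ceiling(bw, weights_per_token):,.0f} tok/s")
```

Under this simplification the wafer's ceiling is about 955 times higher simply because its bandwidth is. The 3,098 tok/s reported for gpt-oss-120b sits far below the wafer's ceiling, a reminder that memory bandwidth is only one of several limits on real decode speed.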


Running models such as OpenAI's gpt-oss-120b, Cerebras' chips can reportedly reach single-user performance of 3,098 tokens per second, compared with 885 tok/s for competitor Together AI, which uses Nvidia GPUs [2]. This performance advantage positions Cerebras as a compelling alternative in a market currently dominated by Nvidia.
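The gap between the two reported rates is easier to picture as waiting time. This minimal Python sketch computes the speedup implied by the 3,098 and 885 tokens-per-second figures and the decode-only time to stream a response. The 2,000-token answer length and the `seconds_to_generate` helper are hypothetical, and the calculation ignores time to first token and network latency.

```python
# Reported single-user decode rates for gpt-oss-120b, in tokens per second.
reported = {"Cerebras": 3_098, "Together AI (Nvidia GPUs)": 885}


def seconds_to_generate(tokens: int, tokens_per_s: float) -> float:
    """Decode-only time; ignores time to first token and network latency."""
    return tokens / tokens_per_s


speedup = reported["Cerebras"] / reported["Together AI (Nvidia GPUs)"]
print(f"speedup: {speedup:.1f}x")

for provider, rate in reported.items():
    # Hypothetical 2,000-token answer, e.g. a long code listing.
    print(f"{provider}: {seconds_to_generate(2_000, rate):.2f} s")
```

The reported figures imply roughly a 3.5x speedup. That is smaller than the 15x figure cited for gpt-oss-120b above, but the two numbers come from different sources and different baselines, so they should not be read as measuring the same thing.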

OpenAI's Strategy to Diversify Beyond Nvidia

The partnership represents OpenAI's continued effort to diversify its supply chain as it pursues an aggressive expansion plan. "OpenAI's compute strategy is to build a resilient portfolio that matches the right systems to the right workloads," said Katti [1]. Although Nvidia CEO Jensen Huang boasted in November that "everything that OpenAI does runs on Nvidia today," competition is clearly emerging [4].

Last year, OpenAI committed more than $1.4 trillion to infrastructure deals with companies including Nvidia, Advanced Micro Devices, and Broadcom [4]. In September, Nvidia announced it would invest as much as $100 billion in OpenAI to build AI infrastructure with at least 10 gigawatts of power capacity. In October, AMD said it would deploy 6 gigawatts' worth of graphics processing units for OpenAI over multiple years [3]. OpenAI is also developing its own chip with Broadcom [3].
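For scale, the Python sketch below sets Cerebras' 750 megawatts against the two other capacity figures quoted in this section. It covers only those three numbers: no capacity is given here for the Broadcom chip effort, and Nvidia's 10 gigawatts is a minimum, so the Cerebras share shown is an upper bound.

```python
# Power-capacity figures quoted in this article, in gigawatts.
# Nvidia's is "at least" 10 GW, so the other vendors' shares are upper bounds.
commitments_gw = {"Nvidia": 10.0, "AMD": 6.0, "Cerebras": 0.75}

total = sum(commitments_gw.values())
for vendor, gw in commitments_gw.items():
    print(f"{vendor:<9} {gw:>5.2f} GW  ({gw / total:.1%} of the listed total)")
```

On these figures Cerebras accounts for roughly 4.5% of the listed capacity, which fits OpenAI's description of the deal as adding a dedicated low-latency inference solution rather than replacing GPU capacity.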

Implications for Cerebras' IPO and Market Position

For Cerebras, this high-profile win moves it closer to tapping into the tens of billions of dollars being poured into new AI infrastructure. The company has been around for more than a decade, but its star has risen significantly since the launch of ChatGPT in 2022 and the AI boom that followed [1]. Feldman noted that "this transaction launches us into the big league and launches high-speed inference into the mainstream" [3].


Cerebras filed for an IPO in September 2024, revealing that second-quarter revenue approached $70 million, up from about $6 million in the second quarter of 2023 [5]. However, the company withdrew the paperwork in October 2025 after announcing a $1.1 billion funding round that valued it at $8.1 billion. It is now reportedly in talks to raise another billion dollars at a $22 billion valuation [1]. The OpenAI deal will also help diversify Cerebras away from G42, which accounted for 87% of its revenue in the first half of 2024 [5].

OpenAI CEO Sam Altman is already an investor in Cerebras, and OpenAI once considered acquiring the company [1]. The relationship between the two companies dates back to 2017, when they first began exploring collaboration [3]. Greg Brockman, OpenAI co-founder and president, stated that "this partnership will make ChatGPT not just the most capable but also the fastest AI platform in the world," helping unlock "the next generation of use cases and onboard the next billion users to AI" [3].

Summarized by Navi
