Oracle Unveils World's First Zettascale AI Supercomputer in Collaboration with NVIDIA

6 Sources

[1]

Oracle Expands Distributed Cloud Offerings for AI

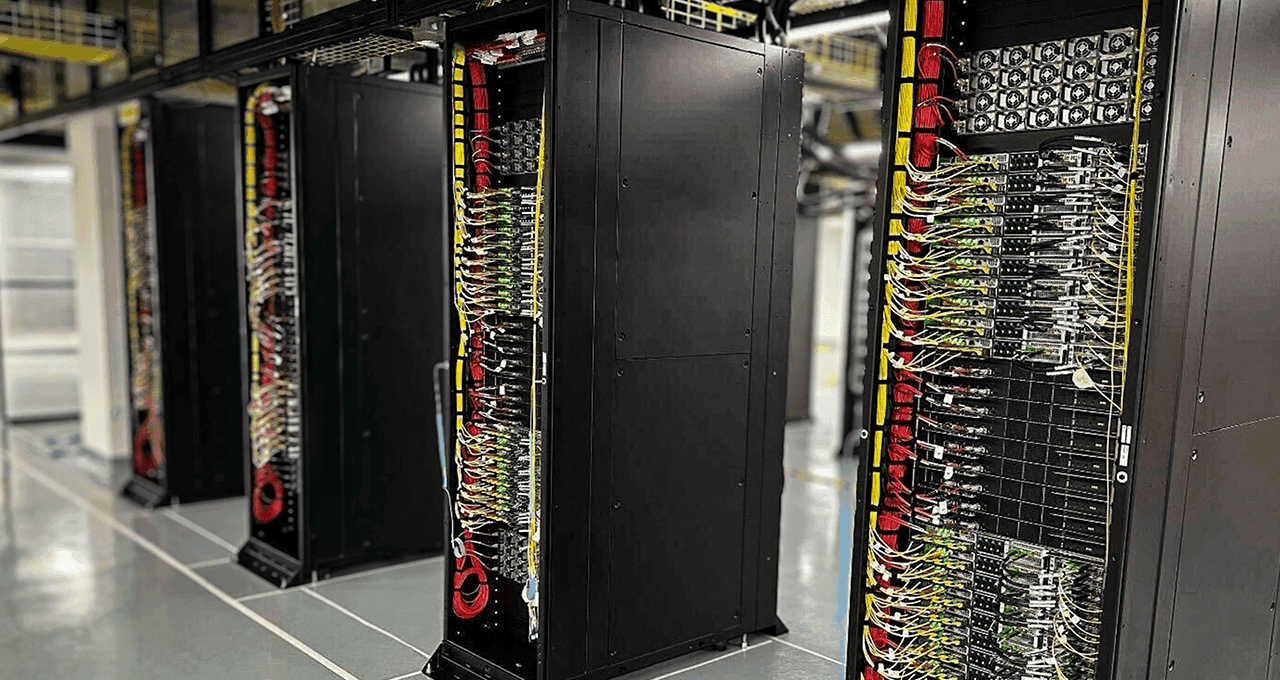

Oracle's Distributed Cloud Gets a Boost with AI Supercomputing and Sovereign AI

Oracle has announced new distributed cloud innovations in Oracle Cloud Infrastructure (OCI) to meet growing global demand for AI and cloud services. The latest developments span Oracle Database@AWS, Oracle Database@Azure, Oracle Database@Google Cloud, OCI Dedicated Region, and OCI Supercluster, allowing customers to deploy OCI's services at the edge, across clouds, or in the public cloud. Oracle Cloud now operates in 85 regions globally, with plans for an additional 77, which Oracle says makes it available in more locations than any other hyperscaler.

In a statement, Mahesh Thiagarajan, executive vice president of Oracle Cloud Infrastructure, said, "Our priority is giving customers the choice and flexibility to leverage cloud services in the model that makes the most sense for their business." OCI's distributed cloud offers flexibility for deploying AI infrastructure while addressing data privacy and low-latency requirements.

Among its latest offerings, Oracle is taking orders for what it claims is the largest AI supercomputer in the cloud, with up to 131,072 NVIDIA Blackwell GPUs delivering 2.4 zettaFLOPS of peak performance. According to Oracle, the supercomputer provides more than six times the GPU capacity of other hyperscalers, along with enhanced storage and low-latency networking. Oracle also introduced new infrastructure to support sovereign AI, using NVIDIA L40S and Hopper architecture GPUs, which lets organisations build AI models with strong data residency controls.

Further expanding its capabilities, Oracle announced a smaller, more scalable OCI Dedicated Region configuration, "Dedicated Region25," which starts at only three racks and is set to launch next year. The new offering aims to bring OCI's full range of AI and cloud services to more customers, particularly those looking for a localized cloud solution.

Additionally, Oracle has enhanced its multicloud capabilities through partnerships with AWS, Azure, and Google Cloud, allowing customers to combine services from multiple providers to optimize performance and costs. Finally, OCI introduced Roving Edge Infrastructure enhancements, including a new three-GPU option designed for remote AI inferencing. The ruggedized, portable device supports critical data processing even in disconnected environments.

[2]

Oracle Unveils World's First Zettascale AI Supercomputer with 131K NVIDIA Blackwell GPUs, Delivering 2.4 ZettaFLOPS for Training Advanced AI Workloads

Meanwhile, xAI is building its own AI data center with 100,000 NVIDIA chips.

Oracle today announced the launch of the world's first zettascale cloud computing cluster. Powered by NVIDIA Blackwell GPUs, it offers up to 131,072 GPUs and delivers 2.4 zettaFLOPS of peak performance. Oracle's AI infrastructure, built on NVIDIA GPUs, enables businesses to handle large-scale AI workloads with enhanced flexibility and sovereignty.

"We have one of the broadest AI infrastructure offerings and are supporting customers that are running some of the most demanding AI workloads in the cloud," said Mahesh Thiagarajan, EVP of Oracle Cloud Infrastructure.

By combining NVIDIA's advanced GPU architecture with Oracle's cloud infrastructure, the two companies are offering scalable AI computing for businesses and researchers globally. "NVIDIA's full-stack AI computing platform on Oracle's broadly distributed cloud will deliver AI compute capabilities at unprecedented scale," said Ian Buck, VP of Hyperscale and HPC, NVIDIA.

The new offering supports advanced AI research and development while ensuring regional data sovereignty, a crucial factor for industries like healthcare and for customers such as Zoom and WideLabs.

Oracle vs the world

Oracle Cloud Infrastructure (OCI) offers up to 131,072 NVIDIA B200 GPUs, which Oracle says is six times more than other cloud hyperscalers like AWS, Azure, and Google Cloud. For example, while AWS UltraClusters can host up to 20,000 GPUs, OCI offers more than six times that amount. Oracle also claims to be the first to offer a zettascale AI supercomputer, delivering 2.4 zettaFLOPS while competitors have only reached exascale levels.
Moreover, OCI supports a wide range of GPUs, including NVIDIA H200, B200, and GB200 GPUs, with general availability across multiple architectures (Hopper and Blackwell) and the ability to run AI workloads of varying scales. OCI will be the first to offer NVIDIA GB200 Grace Blackwell Superchips, which are designed to deliver 4x faster training and 30x faster inference than H100 GPUs. This matters for AI models that require real-time inference and for multimodal LLM training.

There is no stopping Oracle. Oracle's AI supercomputer is not only the largest but also offers flexible, scalable, and highly secure AI infrastructure that other cloud providers currently cannot match. This, in a way, positions Oracle as the top choice for businesses looking to train massive AI models or apply advanced computing to their AI innovations.

That explains why OpenAI recently partnered with OCI to expand its capacity for running ChatGPT, using Oracle's AI infrastructure to meet rising demand for generative AI services alongside its collaboration with Microsoft Azure. "Leaders like OpenAI are choosing OCI because it is the world's fastest and most cost-effective AI infrastructure," said Larry Ellison, Oracle Chairman and CTO.

Now, with its supercluster scaling up to 131,072 NVIDIA B200 GPUs, there is no stopping Oracle and OpenAI. "OCI will extend Azure's platform and enable OpenAI to continue to scale," said OpenAI's chief Sam Altman.

Elon Musk is not smiling

Oracle and Elon Musk's AI startup xAI recently ended talks on a potential $10 billion cloud computing deal, with xAI opting to build its own data center in Memphis, Tennessee. At the time, Musk emphasised xAI's need for speed and control over its own infrastructure. "Our fundamental competitiveness depends on being faster than any other AI company. This is the only way to catch up," he said.

xAI is constructing its own AI data center with 100,000 NVIDIA chips, which it claims will be the world's most powerful AI training cluster. The move marks a significant shift in strategy from cloud reliance to full infrastructure ownership.

AWS Deal Marks the Completion of Oracle's Multi-Cloud Strategy

After Microsoft Azure and Google Cloud, Oracle has finally partnered with AWS to launch Oracle Database@AWS, enabling customers to access Oracle Autonomous Database and Exadata services on AWS infrastructure. "To meet this demand and give customers the choice and flexibility they want, Amazon and Oracle are seamlessly connecting AWS services with the very latest Oracle Database technology, including the Oracle Autonomous Database," said Larry Ellison, Oracle Chairman and CTO.

The partnership offers zero-ETL integration and unified support, helping businesses modernise and improve outcomes. "This partnership provides customers with a unified experience for migrating and managing their Oracle databases," said AWS chief Matt Garman.
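To put the raw GPU counts quoted above side by side, the short Python sketch below divides OCI's 131,072-GPU maximum by the AWS UltraCluster (20,000) and xAI (100,000) figures given in this piece. It compares chip counts only and says nothing about per-GPU performance, interconnect, or how much of a cluster a single job can actually use.

```python
# Compare the GPU counts quoted in the article. These are chip counts only,
# not a measure of delivered performance.
OCI_B200_MAX = 131_072

OTHER_CLUSTERS = {
    "AWS UltraCluster": 20_000,
    "xAI Memphis cluster": 100_000,
}


def gpu_ratio(oci_gpus: int, other_gpus: int) -> float:
    """Return how many times larger the OCI GPU count is than `other_gpus`."""
    return oci_gpus / other_gpus


ratios = {name: gpu_ratio(OCI_B200_MAX, count) for name, count in OTHER_CLUSTERS.items()}

for name, ratio in ratios.items():
    print(f"OCI vs {name}: {ratio:.2f}x")
```

On these inputs the AWS ratio comes out at about 6.55x and the xAI ratio at about 1.31x.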

[3]

Oracle to offer 'world's first' zettascale AI cluster via its public cloud - SiliconANGLE

Oracle Corp. plans to equip its public cloud with what it describes as the world's first zettascale computing cluster. The cluster, which the company previewed today at its Oracle CloudWorld conference, will provide up to 2.4 zettaflops of performance for artificial intelligence workloads.

One zettaflop equals a trillion billion (10^21) floating-point operations per second. The speed of the world's fastest supercomputers is typically measured in exaflops, units of compute that are three orders of magnitude smaller than a zettaflop.

Under the hood, the AI cluster is based on Nvidia Corp.'s flagship Blackwell B200 graphics processing unit. The cluster can reach its 2.4-zettaflop top speed when customers provision it with 131,072 B200 chips, the maximum GPU count that Oracle plans to support. That's more than three times the number of graphics cards in the world's fastest supercomputer, a system called Frontier that the U.S. Energy Department uses for scientific research.

The B200 comprises two separate compute modules, or dies, made using a four-nanometer manufacturing process. They're linked by an interconnect that can transfer up to 10 terabytes of data between them every second. The two dies contain a combined 208 billion transistors, and the chip also features 192 gigabytes of HBM3e memory, a type of high-speed RAM.

One of the chip's flagship features is a so-called microscaling capability. AI models process information in the form of floating point numbers, units of data that contain 4 to 32 bits' worth of information. The smaller the unit of data, the less time it takes to process. The B200's microscaling capability can compress some floating point numbers into smaller ones and thereby speed up calculations.

Oracle's B200-powered AI cluster will support two networking protocols: InfiniBand and RoCEv2, which carries remote direct memory access (RDMA) traffic over Ethernet. Both technologies include so-called kernel bypass features, which allow network traffic to skip some of the components it must usually go through to reach its destination. This arrangement enables data to reach GPUs faster and thereby speeds up processing.

The B200 cluster will become available in the first quarter of 2025. Around the same time, Oracle plans to expand its public cloud with another new infrastructure option based on Nvidia's GB200 NVL72 system. It's a liquid-cooled compute appliance that ships with 36 GB200 accelerators, each of which includes two B200 graphics cards and one central processing unit.

The GB200 supports an Nvidia networking technology called SHARP. AI chips must regularly exchange data with one another over the network that links them to coordinate their work, and moving data in this manner consumes some of the chips' processing power. SHARP reduces the amount of information that has to be sent over the network, which reduces the associated processing requirements and leaves more GPU capacity for AI workloads.

"We include supercluster monitoring and management APIs to enable you to quickly query for the status of each node in the cluster, understand performance and health, and allocate nodes to different workloads, greatly enhancing availability," Mahesh Thiagarajan, the executive vice president of Oracle Cloud Infrastructure, detailed in a blog post.

Oracle is also enhancing its cloud platform's support for other chips in Nvidia's product portfolio. Later this year, the company plans to add a new cloud cluster based on the H200, the chip that headlined Nvidia's data center GPU lineup until the debut of the B200 in March. The cluster will allow users to provision up to 65,536 H200 chips for 260 exaflops, or just over a quarter zettaflop, of performance.

Thiagarajan detailed that Oracle is also upgrading its cloud's storage infrastructure to accommodate the new AI clusters, since large-scale neural networks must quickly move data to and from storage while running calculations. "We will also soon introduce a fully managed Lustre file service that can support dozens of terabits per second," Thiagarajan wrote. "To match the increased storage throughput, we're increasing the OCI GPU Compute frontend network capacity from 100 Gbps in the H100 GPU-accelerated instances to 200 Gbps with H200 GPU-accelerated instances, and 400 Gbps per instance for B200 GPU and GB200 instances."
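SiliconANGLE's description of microscaling is loose. Blackwell's Transformer Engine supports block-scaled low-precision formats such as the Open Compute Project (OCP) Microscaling (MX) family, in which a small block of values shares a single power-of-two scale and each value is stored in as few as 4 bits. The pure-Python sketch below is a simplified illustration of that idea for the FP4 (E2M1) element type with a 32-element block. It is not Nvidia's implementation: it emulates FP4 with ordinary Python floats, and its tie-breaking in rounding is simplified.

```python
import math

# Non-negative magnitudes an FP4 (E2M1) element can represent; sign is separate.
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_EMAX = 2      # exponent of the largest E2M1 value: 6.0 = 1.5 * 2**2
BLOCK_SIZE = 32   # elements sharing one scale in the OCP MX formats


def round_to_fp4(x: float) -> float:
    """Round x to the nearest E2M1 value, saturating at +/-6.0.

    Simplification: ties go to the smaller magnitude instead of
    round-half-to-even.
    """
    mag = min(abs(x), FP4_E2M1_MAGNITUDES[-1])
    nearest = min(FP4_E2M1_MAGNITUDES, key=lambda v: abs(v - mag))
    return math.copysign(nearest, x)


def quantize_block(block: list[float]) -> tuple[int, list[float]]:
    """Encode a block as one shared power-of-two exponent plus FP4 elements."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    # Shared exponent puts the block's largest value in FP4's top binade;
    # anything that lands above 6.0 saturates.
    shared_exp = math.floor(math.log2(amax)) - FP4_EMAX
    scale = 2.0 ** shared_exp
    return shared_exp, [round_to_fp4(v / scale) for v in block]


def dequantize_block(shared_exp: int, elements: list[float]) -> list[float]:
    """Reconstruct approximate values: element * 2**shared_exp."""
    return [e * 2.0 ** shared_exp for e in elements]


def quantize(values: list[float]) -> list[tuple[int, list[float]]]:
    """Split values into BLOCK_SIZE chunks and quantize each one independently."""
    return [quantize_block(values[i:i + BLOCK_SIZE]) for i in range(0, len(values), BLOCK_SIZE)]


if __name__ == "__main__":
    weights = [0.013, -0.021, 0.0049, 0.030]
    shared_exp, elems = quantize_block(weights)
    print(shared_exp, elems)
    print(dequantize_block(shared_exp, elems))
```

In this example the four weights share the exponent -8 and become the FP4 values 3.0, -6.0, 1.5 and 6.0. The largest weight saturates, so it comes back as about 0.0234 rather than 0.030, which makes the accuracy trade-off of 4-bit storage easy to see.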

[4]

NVIDIA and Oracle to Accelerate AI and Data Processing for Enterprises

Enterprises are looking for increasingly powerful compute to support their AI workloads and accelerate data processing. The efficiency gained can translate to better returns on their investments in AI training and fine-tuning, and improved user experiences for AI inference.

At the Oracle CloudWorld conference today, Oracle Cloud Infrastructure (OCI) announced the first zettascale OCI Supercluster, accelerated by the NVIDIA Blackwell platform, to help enterprises train and deploy next-generation AI models using more than 100,000 of NVIDIA's latest-generation GPUs.

OCI Superclusters allow customers to choose from a wide range of NVIDIA GPUs and deploy them anywhere: on premises, in the public cloud and in sovereign clouds. Set for availability in the first half of next year, the Blackwell-based systems can scale up to 131,072 Blackwell GPUs with NVIDIA ConnectX-7 NICs for RoCEv2 or NVIDIA Quantum-2 InfiniBand networking to deliver an astounding 2.4 zettaflops of peak AI compute to the cloud.

At the show, Oracle also previewed NVIDIA GB200 NVL72 liquid-cooled bare-metal instances to help power generative AI applications. The instances are capable of large-scale training with Quantum-2 InfiniBand and real-time inference of trillion-parameter models within the expanded 72-GPU NVIDIA NVLink domain, which can act as a single, massive GPU.

This year, OCI will offer NVIDIA HGX H200, connecting eight NVIDIA H200 Tensor Core GPUs in a single bare-metal instance via NVLink and NVLink Switch, and scaling to 65,536 H200 GPUs with NVIDIA ConnectX-7 NICs over RoCEv2 cluster networking. The instance is available to order for customers looking to deliver real-time inference at scale and accelerate their training workloads.
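As a sanity check on the H200 cluster size above, the sketch below multiplies the GPU count by a per-GPU peak rate. The 3,958 TFLOPS figure is an assumption: it is Nvidia's published H200 SXM FP8 Tensor Core peak with sparsity, and the announcement does not state which precision the cluster figure uses. Under that assumption the result lands close to the roughly 260 exaflops SiliconANGLE reported for the same 65,536-GPU configuration.

```python
# ASSUMPTION: per-GPU peak is Nvidia's published H200 SXM FP8 Tensor Core
# rate with sparsity; the article does not say which precision applies.
H200_FP8_SPARSE_TFLOPS = 3_958
H200_MAX_GPUS = 65_536  # maximum H200 count quoted for the OCI Supercluster

TFLOPS_PER_EXAFLOPS = 1_000_000  # 1 EFLOPS = 10**18 FLOPS = 10**6 TFLOPS


def cluster_peak_exaflops(gpus: int, tflops_per_gpu: float) -> float:
    """Theoretical peak of a cluster: GPU count times per-GPU peak, in exaFLOPS."""
    return gpus * tflops_per_gpu / TFLOPS_PER_EXAFLOPS


peak = cluster_peak_exaflops(H200_MAX_GPUS, H200_FP8_SPARSE_TFLOPS)
print(f"{H200_MAX_GPUS:,} x {H200_FP8_SPARSE_TFLOPS:,} TFLOPS ~= {peak:.1f} exaFLOPS")
```

This is a theoretical peak; sustained throughput on a real training job at this scale would be lower.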
OCI also announced general availability of NVIDIA L40S GPU-accelerated instances for midrange AI workloads, NVIDIA Omniverse and visualization.

For single-node to multi-rack solutions, Oracle's edge offerings provide scalable AI at the edge accelerated by NVIDIA GPUs, even in disconnected and remote locations. For example, smaller-scale deployments with Oracle's Roving Edge Device v2 will now support up to three NVIDIA L4 Tensor Core GPUs.

Companies are using NVIDIA-powered OCI Superclusters to drive AI innovation. Foundation model startup Reka, for example, is using the clusters to develop advanced multimodal AI models for building enterprise agents. "Reka's multimodal AI models, built with OCI and NVIDIA technology, empower next-generation enterprise agents that can read, see, hear and speak to make sense of our complex world," said Dani Yogatama, cofounder and CEO of Reka. "With NVIDIA GPU-accelerated infrastructure, we can handle very large models and extensive contexts with ease, all while enabling dense and sparse training to scale efficiently at cluster levels."

Oracle Autonomous Database is gaining NVIDIA GPU support for Oracle Machine Learning notebooks, allowing customers to accelerate their data processing workloads on Oracle Autonomous Database.

At Oracle CloudWorld, NVIDIA and Oracle are partnering to demonstrate three capabilities that show how the NVIDIA accelerated computing platform could be used today or in the future to accelerate key components of generative AI retrieval-augmented generation (RAG) pipelines. The first will showcase how NVIDIA GPUs can be used to accelerate bulk vector embeddings directly from within Oracle Autonomous Database Serverless to efficiently bring enterprise data closer to AI. These vectors can be searched using Oracle Database 23ai's AI Vector Search.
The second demonstration will showcase a proof-of-concept prototype that uses NVIDIA GPUs, NVIDIA RAPIDS cuVS and an Oracle-developed offload framework to accelerate vector graph index generation, which significantly reduces the time needed to build indexes for efficient vector searches. The third demonstration illustrates how NVIDIA NIM, a set of easy-to-use inference microservices, can boost generative AI performance for text generation and translation use cases across a range of model sizes and concurrency levels.

Together, these new Oracle Database capabilities and demonstrations highlight how NVIDIA GPUs can be used to help enterprises bring generative AI to their structured and unstructured data housed in or managed by an Oracle Database.

NVIDIA and Oracle are collaborating to deliver sovereign AI infrastructure worldwide, helping address the data residency needs of governments and enterprises. Brazil-based startup Wide Labs trained and deployed Amazônia IA, one of the first large language models for Brazilian Portuguese, using NVIDIA H100 Tensor Core GPUs and the NVIDIA NeMo framework in OCI's Brazilian data centers to help ensure data sovereignty. "Developing a sovereign LLM allows us to offer clients a service that processes their data within Brazilian borders, giving Amazônia a unique market position," said Nelson Leoni, CEO of Wide Labs. "Using the NVIDIA NeMo framework, we successfully trained Amazônia IA."

In Japan, Nomura Research Institute, a leading global provider of consulting services and system solutions, is using OCI's Alloy infrastructure with NVIDIA GPUs to enhance its financial AI platform with LLMs operating in accordance with financial regulations and data sovereignty requirements. Communication and collaboration company Zoom will be using NVIDIA GPUs in OCI's Saudi Arabian data centers to help support compliance with local data requirements.
And geospatial modeling company RSS-Hydro is demonstrating how its flood mapping platform -- built on the NVIDIA Omniverse platform and powered by L40S GPUs on OCI -- can use digital twins to simulate flood impacts in Japan's Kumamoto region, helping mitigate the impact of climate change. These customers are among numerous nations and organizations building and deploying domestic AI applications powered by NVIDIA and OCI, driving economic resilience through sovereign AI infrastructure.

Enterprises can accelerate task automation on OCI by deploying NVIDIA software such as NIM microservices and NVIDIA cuOpt with OCI's scalable cloud solutions. These solutions enable enterprises to quickly adopt generative AI and build agentic workflows for complex tasks like code generation and route optimization. NVIDIA cuOpt, NIM, RAPIDS and more are included in the NVIDIA AI Enterprise software platform, available on the Oracle Cloud Marketplace.

Join NVIDIA at Oracle CloudWorld 2024 to learn how the companies' collaboration is bringing AI and accelerated data processing to the world's organizations. Register for the event to watch sessions, see demos and join Oracle and NVIDIA for the solution keynote, "Unlock AI Performance with NVIDIA's Accelerated Computing Platform" (SOL3866), on Wednesday, Sept. 11, in Las Vegas.
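The retrieval-augmented generation demonstrations above revolve around turning enterprise data into embedding vectors and then finding the stored vectors closest to a query. The sketch below shows that retrieval step in its simplest form: an exact, brute-force cosine-similarity search in plain Python. It does not use Oracle AI Vector Search, cuVS, or any Oracle or NVIDIA API, and the document names and three-dimensional vectors are hypothetical; real embedding models produce vectors with hundreds or thousands of dimensions. Scanning every vector like this is what graph-based vector indexes, the kind the second demonstration builds faster, are designed to avoid at scale.

```python
import math

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: Vector, corpus: dict[str, Vector], k: int = 2) -> list[str]:
    """Exact nearest-neighbour search: score every stored vector, keep the best k."""
    scored = sorted(
        ((cosine_similarity(query, vec), doc_id) for doc_id, vec in corpus.items()),
        reverse=True,
    )
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical documents with toy 3-dimensional "embeddings".
corpus = {
    "invoice_policy": [0.9, 0.1, 0.0],
    "travel_policy": [0.1, 0.9, 0.1],
    "gpu_pricing": [0.0, 0.2, 0.95],
}

query = [0.85, 0.2, 0.05]  # stand-in for an embedded user question
print(top_k(query, corpus, k=2))
```

In a RAG pipeline, the text of the top-ranked documents would then be passed to the language model alongside the user's question.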

[5]

Nvidia and Oracle team up for Zettascale cluster: Available with up to 131,072 Blackwell GPUs

Oracle on Wednesday introduced new types of clusters set to be available for AI training through Oracle Cloud Infrastructure (OCI). The most powerful cluster will be based on Nvidia's upcoming Blackwell GPUs and will offer up to 2.4 ZettaFLOPS of AI performance, making it even more powerful than Elon Musk's recently announced AI clusters.

Oracle's new supercomputer clusters can be configured with Nvidia's Hopper or Blackwell GPUs for AI and HPC, with different networking gear, including ultra-low-latency RoCEv2 with ConnectX-7 NICs and ConnectX-8 SuperNICs or Nvidia's Quantum-2 InfiniBand-based networks, and a choice of HPC storage, depending on performance needs.

OCI's upcoming supercomputing clusters far exceed the capabilities of current leading systems. According to Oracle, the range-topping B200-based OCI Superclusters feature over three times more GPUs than the Frontier supercomputer (which uses 37,888 AMD Instinct MI250X GPUs) and six times more than other hyperscalers.

"We have one of the broadest AI infrastructure offerings and are supporting customers that are running some of the most demanding AI workloads in the cloud," said Mahesh Thiagarajan, executive vice president, Oracle Cloud Infrastructure. "With Oracle's distributed cloud, customers have the flexibility to deploy cloud and AI services wherever they choose while preserving the highest levels of data and AI sovereignty."

Several companies are already benefiting from this advanced infrastructure. WideLabs and Zoom are leveraging OCI's high-performance AI infrastructure to accelerate their AI development while maintaining sovereignty controls.

"As businesses, researchers and nations race to innovate using AI, access to powerful computing clusters and AI software is critical," said Ian Buck, vice president of Hyperscale and High Performance Computing at Nvidia. "Nvidia's full-stack AI computing platform on Oracle's broadly distributed cloud will deliver AI compute capabilities at unprecedented scale to advance AI efforts globally and help organizations everywhere accelerate research, development and deployment."

The upcoming OCI Superclusters will use Nvidia's GB200 NVL72 liquid-cooled cabinets with 72 GPUs that communicate with each other at an aggregate bandwidth of 129.6 TB/s in a single NVLink domain. Oracle said that Nvidia's Blackwell GPUs will be available in the first half of 2025 (as availability of Blackwell this year will be limited), though it is unclear when OCI will offer fully loaded Blackwell-powered clusters.

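The 129.6 TB/s NVL72 figure is the per-GPU NVLink bandwidth multiplied across the 72 GPUs in the domain. The sketch below reproduces it, assuming 1.8 TB/s per GPU for fifth-generation NVLink (Nvidia's published Blackwell figure, not stated in the Tom's Hardware text), and also computes the GPU-count ratio behind the "over three times more GPUs than Frontier" claim from the 37,888 and 131,072 counts quoted there.

```python
# ASSUMPTION: 1.8 TB/s of NVLink bandwidth per Blackwell GPU (fifth-generation
# NVLink, as published by Nvidia); the article quotes only the 72-GPU total.
NVLINK5_TBPS_PER_GPU = 1.8
GPUS_PER_NVL72 = 72

aggregate_tbps = GPUS_PER_NVL72 * NVLINK5_TBPS_PER_GPU

# GPU counts quoted in the article.
FRONTIER_GPUS = 37_888  # AMD Instinct MI250X GPUs in Frontier
OCI_B200_MAX = 131_072  # largest B200-based OCI Supercluster

frontier_ratio = OCI_B200_MAX / FRONTIER_GPUS

print(f"NVL72 aggregate NVLink bandwidth: {aggregate_tbps:.1f} TB/s")
print(f"OCI B200 GPUs vs Frontier GPUs: {frontier_ratio:.2f}x")
```

The ratio comes out at about 3.46x, consistent with the "over three times" wording; like the other comparisons here, it counts chips rather than delivered performance.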
[6]

Oracle's zettascale supercluster comes with a 4-bit asterisk

Comment: Oracle says it's already taking orders on a 2.4 zettaFLOPS cluster with "three times as many GPUs as the Frontier supercomputer." But let's get precise about precision: Oracle hasn't actually managed a 2,000x performance boost over the United States' top-ranked supercomputer. Those are "AI zettaFLOPS," the tiniest, sparsest ones Nvidia's chips can muster, versus the standard 64-bit way of measuring supercomputer performance.

This might be common knowledge for most of our readers, but it seems Oracle needs a reminder: FLOPS are pretty much meaningless unless they're accompanied by the precision they were measured at. In scientific computing, FLOPS are usually measured at double precision, or 64-bit. However, for the kind of fuzzy math that makes up modern AI algorithms, we can usually get away with far lower precision, down to 16-bit, 8-bit, or, in the case of Oracle's new Blackwell cluster, 4-bit floating point.

The 131,072 Blackwell accelerators that make up Oracle's "AI supercomputer" are in fact capable of churning out 2.4 zettaFLOPS of sparse FP4 compute, but the claim is more marketing than reality. That's because most models today are still trained at 16-bit floating point or brain float (BF16) precision, and at that precision Oracle's cluster only manages about 459 exaFLOPS of AI compute, which just doesn't have the same ring to it as "zettascale."

Note that there's nothing technically stopping you from training models at FP8 or even FP4, but doing so comes at the cost of accuracy. Instead, these lower precisions are more commonly used to speed up the inferencing of quantized models, a scenario where you'll pretty much never need all 131,072 chips, even when serving up a multi-trillion parameter model.

What's funny is that if Oracle can actually network all those GPUs together with RoCEv2 or InfiniBand, we're still talking about a pretty beefy HPC cluster.
At FP64, the peak performance of Oracle's Blackwell Supercluster is between 5.2 and 5.9 exaFLOPS, depending on whether we're talking about the B200 or GB200. That's more than 3x the peak performance of the AMD-based Frontier system. Interconnect overheads being what they are, we'll note that even getting close to peak performance at these scales is next to impossible.

Oracle already offers H100 and H200 superclusters capable of scaling to 16,384 and 65,536 GPUs, respectively. Blackwell-based superclusters, including Nvidia's flagship GB200 NVL72 rack-scale systems, will be available beginning in the first half of 2025.
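The Register's argument comes down to multiplying a per-GPU peak rate by 131,072 and keeping track of the precision. The sketch below derives the per-GPU rate implied by Oracle's 2.4-zettaFLOPS sparse FP4 headline, recomputes the FP64 range, and reproduces the "2,000x" comparison. Two inputs are assumptions rather than figures from the article: per-GPU FP64 peaks of 40 TFLOPS (B200) and 45 TFLOPS (GB200), chosen because they are consistent with the 5.2 to 5.9 exaFLOPS range quoted above, and a Frontier FP64 result of roughly 1.2 exaFLOPS on the HPL benchmark.

```python
GPUS = 131_072  # Blackwell GPUs in the largest OCI Supercluster

# Oracle's headline figure, which The Register says is sparse FP4.
HEADLINE_SPARSE_FP4_FLOPS = 2.4e21
per_gpu_fp4_pflops = HEADLINE_SPARSE_FP4_FLOPS / GPUS / 1e15

# ASSUMPTION: per-GPU FP64 peaks consistent with the 5.2-5.9 EFLOPS range above.
FP64_TFLOPS_PER_GPU = {"B200": 40.0, "GB200": 45.0}
fp64_cluster_eflops = {
    name: GPUS * tflops * 1e12 / 1e18 for name, tflops in FP64_TFLOPS_PER_GPU.items()
}

# ASSUMPTION: Frontier's measured FP64 (HPL) performance is roughly 1.2 EFLOPS.
FRONTIER_FP64_FLOPS = 1.2e18
apparent_speedup = HEADLINE_SPARSE_FP4_FLOPS / FRONTIER_FP64_FLOPS

print(f"Implied sparse FP4 peak per GPU: {per_gpu_fp4_pflops:.1f} PFLOPS")
for name, eflops in fp64_cluster_eflops.items():
    print(f"{name} cluster FP64 peak: {eflops:.2f} EFLOPS")
print(f"Sparse FP4 headline / Frontier FP64: {apparent_speedup:,.0f}x")
```

Dividing a sparse FP4 headline by an FP64 baseline is what produces the 2,000x figure; compared like for like at FP64, The Register puts the gap at a little over 3x.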

Oracle has announced what it calls the world's first zettascale AI supercomputer, featuring 131,072 NVIDIA Blackwell GPUs. The system, rated at up to 2.4 zettaflops of peak AI performance at sparse FP4 precision, will be available through Oracle Cloud Infrastructure.

Oracle's Zettascale AI Supercomputer: A Leap in Cloud Computing

In a groundbreaking announcement, Oracle has unveiled plans for the world's first zettascale AI supercomputer, marking a significant milestone in cloud computing and artificial intelligence. This massive system, developed in collaboration with NVIDIA, is set to revolutionize the landscape of AI training and inference [1].

Technical Specifications and Performance

The supercomputer will be powered by an impressive array of 131,072 NVIDIA Blackwell GPUs, delivering a staggering 2.4 zettaflops of AI performance [2]. This unprecedented computing power is designed to handle the most demanding AI workloads, including training large language models and conducting complex scientific simulations.

Availability and Access

What sets this supercomputer apart is its accessibility. Oracle plans to make this zettascale AI cluster available through its Oracle Cloud Infrastructure (OCI), democratizing access to cutting-edge AI computing resources [3]. This move is expected to accelerate AI innovation across various industries by providing researchers, businesses, and developers with unprecedented computational power.

Expanding Distributed Cloud Offerings

The zettascale AI supercomputer is part of Oracle's broader strategy to expand its distributed cloud offerings. The company is introducing new OCI Dedicated Region configurations, which bring Oracle's public cloud services directly to customer data centers and facilities [4]. This expansion aims to meet the growing demand for high-performance computing in AI and machine learning applications.

Implications for AI Research and Development

The introduction of this supercomputer is expected to have far-reaching implications for AI research and development. With its immense processing power, the system will enable researchers to train larger and more complex AI models, potentially leading to breakthroughs in natural language processing, computer vision, and other AI domains [5].

Industry Collaboration and Future Prospects

The collaboration between Oracle and NVIDIA in creating this zettascale AI supercomputer highlights the importance of industry partnerships in pushing the boundaries of technology. As AI continues to evolve and demand for computational resources grows, such collaborations are likely to become increasingly crucial in driving innovation and addressing the challenges of large-scale AI deployment.

Summarized by Navi
