Professors develop custom AI teaching assistants to reshape how universities approach generative AI

2 Sources

[1]

A.I. Is Coming to Class. These Professors Want to Ease Your Worries.

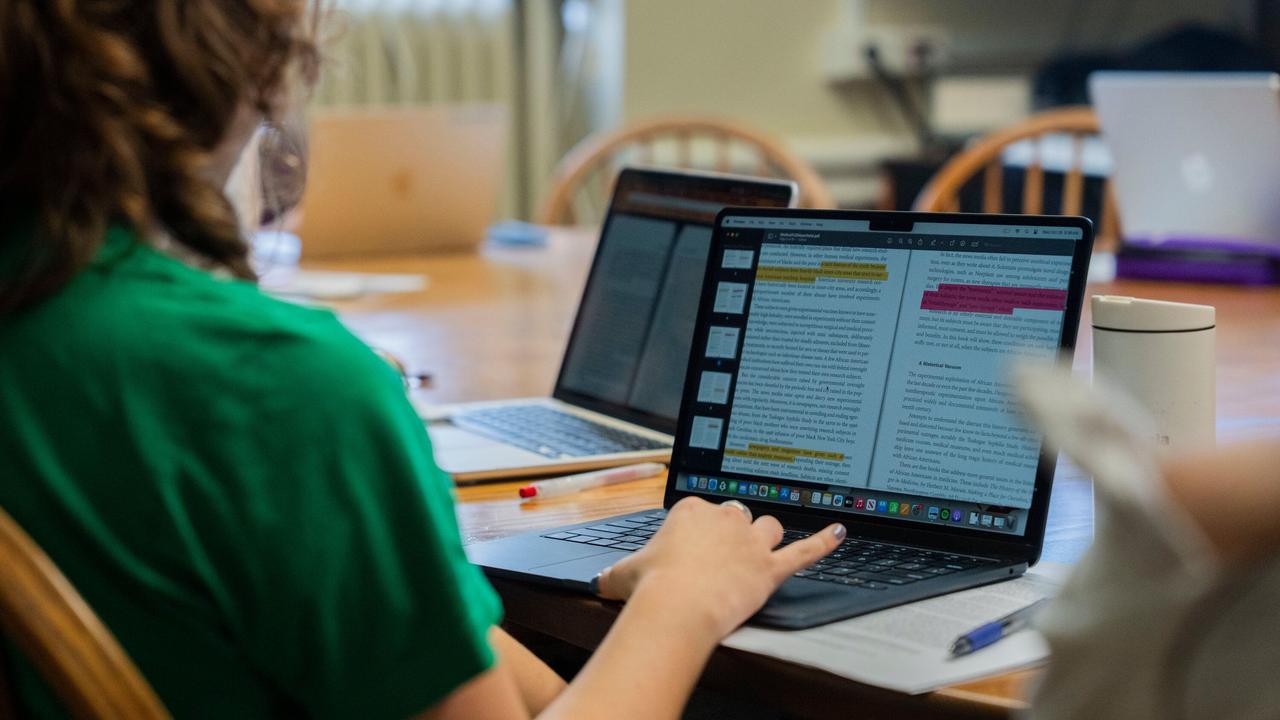

Visuals by Hiroko Masuike/The New York Times

The front line in the debate over whether and when university students should be taught how to properly use generative artificial intelligence runs right through Benjamin Breyer's classroom at Barnard College in Manhattan. The first-year writing program he teaches in at Barnard generally bans the use of generative A.I., including ChatGPT, Claude, Gemini and the like, which eagerly draft paragraphs, do research and compose essays for their users. The program's policy statement warns students that A.I. "is often factually wrong, and it is also deeply problematic, perpetuating misogyny and racial and cultural biases." Wendy Schor-Haim, the program's director, runs screen-free classes and shows students how she uses different colored highlighters to annotate printed texts. She has never tried ChatGPT. "Students tend to use it in our classrooms to do the work that we are here to teach them how to do," she said. "And it is very, very bad at that work." But she has made an exception for Professor Breyer, who is determined to see if he can use A.I. to supplement, not short-circuit, the efforts of students as they study academic writing. In that sense, Professor Breyer represents a growing swath of writing and English professors who are trying to find positive uses for a technology that some of their colleagues remain dead set against. At most universities, including Barnard, it remains up to professors to decide whether and how to allow A.I. use in their classrooms. College administrators often play a dual role, offering support for professors to make those decisions, even as they enable access to the tools through their computer systems. Legacy academic organizations are evolving in their approaches.
In an October 2024 working paper, for example, a task force of the Modern Language Association, which promotes humanities studies, leaned toward engagement, writing that first-year writing courses "have a special responsibility" to teach students how to use generative A.I. "critically and effectively in academic situations and across their literate lives." Professor Breyer has been at Barnard and Columbia University for 22 years and is keenly aware of his colleagues' misgivings about A.I. But perhaps unexpectedly, his experiments with the technology have caused him to be a voice of reassurance to fellow professors that they will still have a pivotal role to play on the other end of this societal transition. "This is no threat to us at present," he said he tells them. "A.I. may help with the expression of an idea and articulating that expression. But the idea itself, the thing that's hardest to teach, is still going to remain our domain." During the past two years, he and a computer programmer spent thousands of hours developing a chatbot they named Althea, after the Grateful Dead song. "I don't think that I'm writing my own obituary by creating this at all," he said. "It's a tool." Professor Breyer's conclusions about the strengths and weaknesses of generative A.I. come after three semesters of testing Althea in one of his writing sections and assessing how well those students did compared with those in an otherwise identical section that barred A.I. The chatbot, which he developed with Marko Krkeljas, a former Barnard software developer, seeks to act as an interactive workbook, helping students practice things such as annotation and coming up with thesis statements. He has set its persona to be a "tutor at an elite liberal arts college" that prompts students to improve their answers, and it gives a "hard refusal" if a student asks it to write something. 
Professor Breyer found that off-the-shelf bots were not good enough to teach the academic skills that students needed to help them engage in scholarly conversation. So he applied for about $30,000 in grants and additional technical support to build the tool, which uses a customizable OpenAI platform and exists on a website called academicwritingtools.com. To train it to mimic his own feedback, he fed it transcripts of his lectures, the materials read in the course and samples of exemplary student work. To tamp down generative A.I.'s typical eagerness to please, he told it to be less flattering. "I was fascinated by something that everybody seemed to think was the demon in the bottle that was going to kill us all if released," he said. "And I thought, could you harness it?" While many instructors are now experimenting with A.I., it is still unusual for English professors to develop a custom chatbot that they believe can improve on the problematic implications of the for-profit models. Another such teacher is Alexa Alice Joubin, an English professor and the co-director of the Digital Humanities Institute at George Washington University. She created her own A.I. teaching assistant bot that helps students refine research questions and summarize readings. Professor Joubin sees her bot, which was codeveloped with a computer science student, Akhilesh Rangani, as a way to teach A.I. literacy with more safeguards to protect accuracy, personal privacy and intellectual property than the for-profit programs offer. It uses open-source software, and Professor Joubin is making it freely available to professors worldwide to customize for their own classes. Students already turn to ChatGPT for help, she said. So it is better, she added, that they use a custom bot that is trained to ask reflective questions and responds only in bullet points rather than a product run by "tech bros" that does not have teaching in mind. 
"This is a bit of our playground, our sandbox," she said of her website, teachanything.ai. "Let's see what A.I. can and cannot do, but inside a controlled environment." At Columbia, Matthew Connelly, a history professor who is also the vice dean for A.I. Initiatives, takes a hybrid approach, encouraging his students to use A.I. for research, even as he strongly discourages its use in their writing. "Generative A.I. is going to be an incredibly powerful and probably superior way of doing research compared to, for example, text searching," he said. But he finds A.I. "pernicious" in the writing process, he said, "because for a discipline like history, and most disciplines for that matter, writing is thinking." These experiments can yield disappointments. Professor Joubin said that she found her students tended to rush to ask the bot for reading summaries just before class, suggesting that they probably hadn't done the required reading. And after one year of testing, Professor Breyer found that overall, the students who didn't use A.I. did better on his writing exercises than the students who used Althea. "It couldn't really improve the quality of the ideas," he said. But Professor Breyer was stubborn, so instead of scrapping the project, he refined Althea over the summer, training it to be less disruptive and to ask more focused questions. He reintroduced it this fall, getting positive reviews from many of the students in his A.I.-enabled section. "With some of my other teachers, there's like a fearing of A.I., but this is using it in a productive way," said Abby Keller, a student, at the end of a recent class. "It's honest," Riya Shivaram, another student, said. "It's not looking to please people and not looking to prove you wrong." Charlotte Mills disagreed. "I very much prefer getting broad feedback, like from Professor Breyer, rather than getting feedback on every single step of the assignment," she said. The improvements to Althea helped. 
For the first time, students this past semester who used the bot did better on the exercises than those who didn't. But the deeper effect, he said, was on his teaching. The process of refining the bot was "reciprocal," he said, helping him learn to ask better, more directed questions of his students as he improved the tool. Research by individual professors on how A.I. can be used constructively is in many ways late to the game. Corporations have already flooded the market with tools that can write college-level essays for students in seconds. They promote products that they say are educational, without research-backed evidence to show that they are actually helpful in learning, Professor Connelly said. But with students already using it en masse, standing on the sidelines is not going to help, so for these professors, engagement seems like the only feasible option. At Columbia, Professor Connelly is conducting a survey about A.I. use among first-year writing students and professors. Through his research, he is finding that instructors who have taught in the same way since they were teaching assistants are having to rethink what they are trying to achieve in their classes, a development that he finds ultimately positive. "You can call it a work in progress, but that makes it sound lame," he said. "It's a very scary time, but I actually think this is an incredibly exciting time in higher education."

[2]

AI in universities: What is driving professors to develop custom assistants?

Universities are grappling with generative AI. Some ban it, others embrace it. Professors at George Washington University are creating custom AI teaching assistants. These tools help students with research and writing. This approach aims to teach AI literacy responsibly. It offers a controlled environment for learning. The front line in the debate over whether and when university students should be taught how to properly use generative artificial intelligence runs right through Benjamin Breyer's classroom at Barnard College in Manhattan. The first-year writing program he teaches at Barnard generally bans the use of generative AI, including ChatGPT, Claude, Gemini and the like, which eagerly draft paragraphs, do research and compose essays for their users. The program's policy statement warns students that AI "is often factually wrong, and it is also deeply problematic, perpetuating misogyny and racial and cultural biases." Wendy Schor-Haim, the program's director, runs screen-free classes and shows students how she uses different colored highlighters to annotate printed texts. She has never tried ChatGPT. "Students tend to use it in our classrooms to do the work that we are here to teach them how to do," she said. "And it is very, very bad at that work." But she has made an exception for Breyer, who is determined to see if he can use AI to supplement, not short-circuit, the efforts of students as they study academic writing. In that sense, Breyer represents a growing swath of writing and English professors who are trying to find positive uses for a technology that some of their colleagues remain dead set against. Legacy academic organisations are evolving in their approaches. 
In an October 2024 working paper, for example, a task force of the Modern Language Association, which promotes humanities studies, leaned toward engagement, writing that first-year writing courses "have a special responsibility" to teach students how to use generative AI "critically and effectively in academic situations and across their literate lives." Breyer has been at Barnard College and Columbia University for 22 years and is keenly aware of his colleagues' misgivings about AI. But perhaps unexpectedly, his experiments with the technology have caused him to be a voice of reassurance to fellow professors that they will still have a pivotal role to play on the other end of this societal transition. "This is no threat to us at present," he said he tells them. "AI may help with the expression of an idea and articulating that expression. But the idea itself, the thing that's hardest to teach, is still going to remain our domain." During the past two years, he and a computer programmer have spent thousands of hours developing a chatbot they named Althea, after the Grateful Dead song. "I don't think that I'm writing my own obituary by creating this at all," he said. "It's a tool." Breyer's conclusions about the strengths and weaknesses of generative AI come after three semesters of testing Althea in one of his writing sections and assessing how well those students did compared with those in an otherwise identical section that barred AI. The chatbot, which he developed with Marko Krkeljas, a former Barnard software developer, seeks to act as an interactive workbook, helping students practice things like annotation and coming up with thesis statements. He has set its persona to be a "tutor at an elite liberal arts college" that prompts students to improve their answers, and it gives a "hard refusal" if a student asks it to write something. 
Breyer found that off-the-shelf bots were not good enough to teach the academic skills that students needed to help them engage in scholarly conversation. So he applied for about $30,000 in grants and additional technical support to build the tool, which uses a customizable OpenAI platform and exists on a website called academicwritingtools.com. To train it to mimic his own feedback, he fed it transcripts of his lectures, the materials read in the course and samples of exemplary student work. To tamp down generative AI's typical eagerness to please, he told it to be less flattering. "I was fascinated by something that everybody seemed to think was the demon in the bottle that was going to kill us all if released," he said. "And I thought, could you harness it?" While many instructors are now experimenting with AI, it is still unusual for English professors to develop a custom chatbot that they believe can improve on the problematic implications of the for-profit models. Another such teacher is Alexa Alice Joubin, an English professor and the co-director of the Digital Humanities Institute at George Washington University in Washington, D.C. She created her own AI teaching assistant bot that helps students refine research questions and summarize readings. Joubin sees her bot, which was codeveloped with a computer science student, Akhilesh Rangani, as a way to teach AI literacy with more safeguards to protect accuracy, personal privacy and intellectual property than the for-profit programs offer. It uses open-source software, and Joubin is making it freely available to professors worldwide to customize for their own classes. Students already turn to ChatGPT for help, she said. So it is better, she added, that they use a custom bot that is trained to ask reflective questions and responds only in bullet points than a product run by "tech bros" that does not have teaching in mind. "This is a bit of our playground, our sandbox," she said of her website, teachanything.ai. 
"Let's see what AI can and cannot do, but inside a controlled environment." At Columbia, Matthew Connelly, a history professor who is also the vice dean for AI Initiatives, takes a hybrid approach, encouraging his students to use AI for research but not writing. "Generative AI is going to be an incredibly powerful and probably superior way of doing research compared to, for example, text searching," he said. But he finds AI "pernicious" in the writing process, he said, "because for a discipline like history, and most disciplines, for that matter, writing is thinking." Research by individual professors on how AI can be used constructively is in many ways late to the game. Corporations have already flooded the market with tools that can write college-level essays for students in seconds. They promote products that they say are educational, without research-backed evidence to show that they are actually helpful in learning, Connelly said. But with students already using it en masse, standing on the sidelines is not going to help, so for these professors, engagement seems like the only feasible option. At Columbia, Connelly is conducting a survey about AI use among first-year writing students and professors. Through his research, he is finding that instructors who have taught in the same way since they were teaching assistants are having to rethink what they are trying to achieve in their classes, a development that he finds ultimately positive. "You can call it a work in progress, but that makes it sound lame," he said. "It's a very scary time, but I actually think this is an incredibly exciting time in higher education." This article originally appeared in The New York Times.

Professors at Barnard College and George Washington University are creating custom AI teaching assistants to help students learn academic writing and research skills. Benjamin Breyer spent two years developing Althea, a chatbot trained on his lectures and student work, and applied for about $30,000 in grants to build it. These educators aim to teach critical and effective AI use while adding safeguards against the biases and inaccuracies they see in commercial AI tools like ChatGPT.

Professors Turn to Custom Solutions Amid AI Debate

The conversation around generative AI in universities has moved beyond simple bans and blanket acceptance. At Barnard College in Manhattan, Benjamin Breyer represents a growing number of educators who are charting a middle path, one that acknowledges both the promise and pitfalls of AI in universities [1]. While the first-year writing program at Barnard College generally prohibits tools like ChatGPT, Claude, and Gemini due to concerns about factual accuracy and perpetuating biases, Breyer has secured an exception to test whether AI teaching assistants can supplement student effort rather than replace it [2].

This shift matters because it signals a pragmatic approach to a technology that students will inevitably encounter throughout their academic and professional lives. Rather than ignoring generative AI or allowing uncontrolled use, these professors are developing custom assistants that provide a controlled learning environment where AI literacy can be taught responsibly.

Building Althea: A Custom Chatbot for Academic Writing

Over two years, Breyer and Marko Krkeljas, a former Barnard software developer, invested thousands of hours developing Althea, named after the Grateful Dead song [1]. Breyer applied for about $30,000 in grants and additional technical support to build this custom chatbot, which uses a customizable OpenAI platform and is accessible at academicwritingtools.com [2].

The motivation was clear: off-the-shelf commercial AI tools proved inadequate for teaching the nuanced academic skills students need for scholarly conversation. To train Althea to mimic his own teaching style, Breyer fed it transcripts of his lectures, course materials, and samples of exemplary student work [1]. He configured the bot's persona as a "tutor at an elite liberal arts college" that prompts students to improve their answers while giving a "hard refusal" if asked to write content directly [2].

This approach to integrating AI as a supplementary learning tool demonstrates how educators can mitigate the biases of commercial AI by creating purpose-built alternatives. Breyer even instructed Althea to be less flattering, countering generative AI's typical eagerness to please [1].

Testing Results and Faculty Reassurance

After three semesters of testing Althea in one writing section while maintaining an identical section that barred AI, Breyer has drawn conclusions that reassure anxious colleagues [2]. "This is no threat to us at present," he tells fellow professors. "AI may help with the expression of an idea and articulating that expression. But the idea itself, the thing that's hardest to teach, is still going to remain our domain" [1].

This perspective matters for educators worried about being replaced by technology. Breyer's 22 years of experience at Barnard College and Columbia University lend credibility to his assessment that human creativity and critical thinking remain irreplaceable, even as AI tools become more sophisticated.

A Growing Movement Across Institutions

Breyer isn't alone in this effort. Alexa Alice Joubin, an English professor and co-director of the Digital Humanities Institute at George Washington University, has created her own AI teaching assistant bot codeveloped with computer science student Akhilesh Rangani [2]. Her bot helps students refine research questions and summarize readings, providing another example of how educators are taking control of AI initiatives rather than passively accepting commercial solutions [1].

While many instructors experiment with AI, it remains unusual for English professors to develop a custom chatbot specifically designed to address the problematic implications of for-profit models [2]. This hands-on approach allows for critical and effective AI use tailored to specific pedagogical goals.

Institutional Support and Evolving Standards

At most universities, including Barnard College, individual professors retain authority over whether and how to allow AI in their classrooms [1]. College administrators play a dual role, supporting faculty decision-making while enabling access to AI tools through computer systems. This decentralized approach creates space for experimentation but also generates inconsistency in student experiences.

Legacy academic organizations are adapting their guidance. In an October 2024 working paper, a task force of the Modern Language Association, which promotes humanities studies, leaned toward engagement, stating that first-year writing courses "have a special responsibility" to teach students how to use generative AI "critically and effectively in academic situations and across their literate lives" [1][2].

What Students and Educators Should Watch

The contrast between Breyer's approach and that of program director Wendy Schor-Haim, who runs screen-free classes and has never tried ChatGPT, illustrates the ongoing tension in higher education [1]. Schor-Haim argues that "students tend to use it in our classrooms to do the work that we are here to teach them how to do," adding that AI "is very, very bad at that work" [2].

As more professors develop custom assistants and share results, the short-term implication is likely increased experimentation across disciplines. Long-term, these AI initiatives could establish new standards for how universities integrate technology while preserving the essential role of human instruction in developing critical thinking and original ideas. Students should expect more structured opportunities to learn AI literacy within academic contexts, while educators will need to decide whether to build their own tools or adapt existing ones to serve pedagogical goals rather than simply administrative efficiency.

Summarized by Navi
