Public Citizen Demands OpenAI Withdraw Sora 2 Over Deepfake and Democracy Concerns

7 Sources

[1]

Watchdog group Public Citizen demands OpenAI withdraw AI video app Sora over deepfake dangers

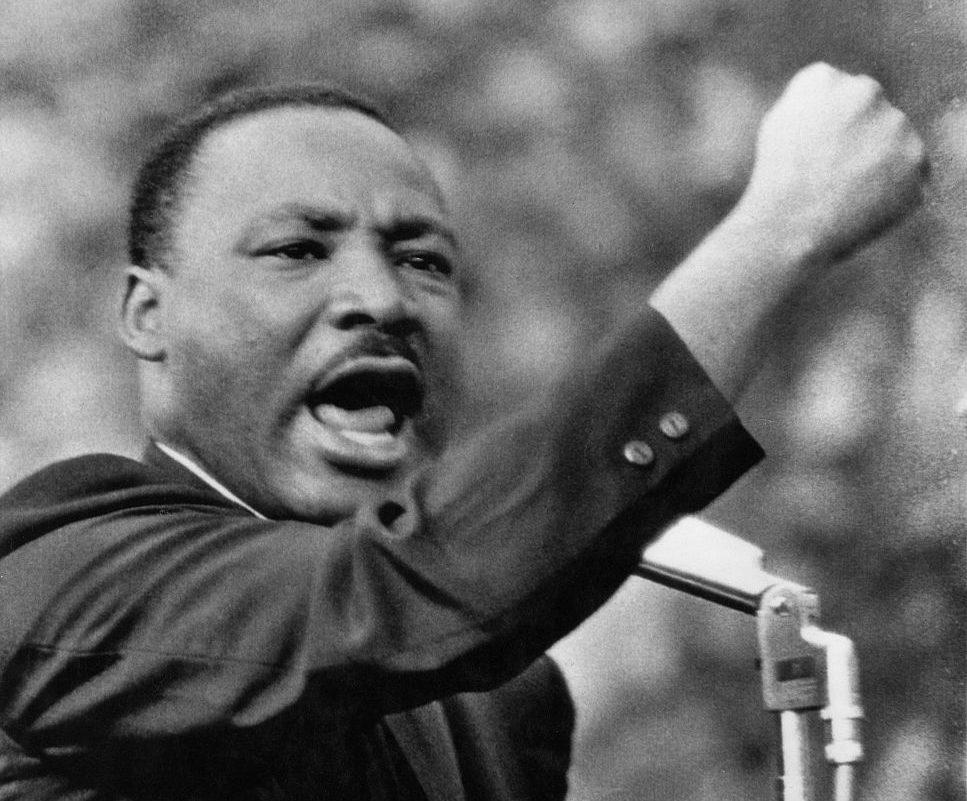

The tech industry is moving fast and breaking things again -- and this time it is humanity's shared reality and control of our likeness before and after death -- thanks to artificial intelligence image-generation platforms like OpenAI's Sora 2.
The typical Sora video, made on OpenAI's app and spread onto TikTok, Instagram, X and Facebook, is designed to be amusing enough for you to click and share. It could be Queen Elizabeth II rapping or something more ordinary and believable. One popular Sora genre is fake doorbell camera footage that captures something slightly uncanny -- say, a boa constrictor on the porch or an alligator approaching an unfazed child -- and ends with a mild shock, like a grandma shouting as she beats the animal with a broom.
But a growing chorus of advocacy groups, academics and experts is raising alarms about the dangers of letting people create AI videos on just about anything they can type into a prompt, leading to the proliferation of nonconsensual images and realistic deepfakes in a sea of less harmful "AI slop." OpenAI has cracked down on AI creations of public figures -- among them, Michael Jackson, Martin Luther King Jr. and Mister Rogers -- doing outlandish things, but only after an outcry from family estates and an actors' union.
The nonprofit Public Citizen is now demanding OpenAI withdraw Sora 2 from the public, writing in a Tuesday letter to the company and CEO Sam Altman that the app's hasty release, timed to launch ahead of competitors, shows a "consistent and dangerous pattern of OpenAI rushing to market with a product that is either inherently unsafe or lacking in needed guardrails." Sora 2, the letter says, shows a "reckless disregard" for product safety, as well as people's rights to their own likeness and the stability of democracy. The group also sent the letter to the U.S. Congress. OpenAI didn't immediately respond to a request for comment Tuesday.
"Our biggest concern is the potential threat to democracy," said Public Citizen tech policy advocate J.B. Branch in an interview. "I think we're entering a world in which people can't really trust what they see. And we're starting to see strategies in politics where the first image, the first video that gets released, is what people remember."
Branch, author of Tuesday's letter, also sees broader threats to people's privacy that disproportionately affect vulnerable populations online. OpenAI blocks nudity but Branch said that "women are seeing themselves being harassed online" in other ways, such as with fetishized niche content that makes it through the app's restrictions. The news outlet 404 Media on Friday reported on a flood of Sora-made videos of women being strangled.
OpenAI introduced its new Sora app on iPhones more than a month ago. It launched on Android phones last week in the U.S., Canada and several Asian countries, including Japan and South Korea. Much of the strongest pushback has come from Hollywood and other entertainment interests, including the Japanese manga industry.
OpenAI announced its first big changes just days after the release, saying "overmoderation is super frustrating" for users but that it's important to be conservative "while the world is still adjusting to this new technology." That was followed by publicly announced agreements with Martin Luther King Jr.'s family on Oct. 16, preventing "disrespectful depictions" of the civil rights leader while the company worked on better safeguards, and another on Oct. 20 with "Breaking Bad" actor Bryan Cranston, the SAG-AFTRA union and talent agencies.
"That's all well and good if you're famous," Branch said. "It's sort of just a pattern that OpenAI has where they're willing to respond to the outrage of a very small population. They're willing to release something and apologize afterwards. But a lot of these issues are design choices that they can make before releasing."
OpenAI has faced similar complaints about its flagship product, ChatGPT. Seven new lawsuits filed last week in California courts claim the chatbot drove people to suicide and harmful delusions even when they had no prior mental health issues. Filed on behalf of six adults and one teenager by the Social Media Victims Law Center and Tech Justice Law Project, the lawsuits claim that OpenAI knowingly released GPT-4o prematurely last year, despite internal warnings that it was dangerously sycophantic and psychologically manipulative. Four of the victims died by suicide.
Public Citizen was not involved in the lawsuits, but Branch said he sees parallels in Sora's hasty release. He said they're "putting the pedal to the floor without regard for harms. Much of this seems foreseeable. But they'd rather get a product out there, get people downloading it, get people who are addicted to it rather than doing the right thing and stress-testing these things beforehand and worrying about the plight of everyday users."
OpenAI spent last week responding to complaints from a Japanese trade association representing famed animators like Hayao Miyazaki's Studio Ghibli and video game makers like Bandai Namco and Square Enix. OpenAI said many anime fans want to interact with their favorite characters, but the company has also set guardrails in place to prevent well-known characters from being generated without the consent of the people who own the copyrights. "We're engaging directly with studios and rightsholders, listening to feedback, and learning from how people are using Sora 2, including in Japan, where cultural and creative industries are deeply valued," OpenAI said in a statement about the trade group's letter last week.

[2]

OpenAI accused of 'consistent and dangerous pattern' rushing product to market that is 'inherently unsafe or lacking in needed guardrails' | Fortune


[3]

Watchdog group demands OpenAI withdraw Sora 2 over deepfakes, privacy


[4]

Watchdog group Public Citizen demands OpenAI withdraw AI video app Sora over deepfake dangers


[5]

Watchdog Group Public Citizen Demands OpenAI Withdraw AI Video App Sora Over Deepfake Dangers


[6]

Watchdog group Public Citizen demands OpenAI withdraw AI video app Sora over deepfake dangers

AI video tool Sora 2 is drawing criticism over deepfake risks, nonconsensual imagery, and threats to democracy, with advocacy groups accusing OpenAI of rushing unsafe products to market. Hollywood, creators, and privacy advocates demand stronger safeguards and accountability.

[7]

Watchdog group Public Citizen demands OpenAI withdraw AI video app Sora over deepfake dangers

The tech industry is moving fast and breaking things again -- and this time it is humanity's shared reality and control of our likeness before and after death -- thanks to artificial intelligence image-generation platforms like OpenAI's Sora 2. The typical Sora video, made on OpenAI's app and spread onto TikTok, Instagram, X and Facebook, is designed to be amusing enough for you to click and share. It could be Queen Elizabeth II rapping or something more ordinary and believable. One popular Sora genre is fake doorbell camera footage capturing something slightly uncanny -- say, a boa constrictor on the porch or an alligator approaching an unfazed child -- and ends with a mild shock, like a grandma shouting as she beats the animal with a broom. But a growing chorus of advocacy groups, academics and experts are raising alarms about the dangers of letting people create AI videos on just about anything they can type into a prompt, leading to the proliferation of nonconsensual images and realistic deepfakes in a sea of less harmful "AI slop." OpenAI has cracked down on AI creations of public figures -- among them, Michael Jackson, Martin Luther King Jr. and Mister Rogers -- doing outlandish things, but only after an outcry from family estates and an actors' union. The nonprofit Public Citizen is now demanding OpenAI withdraw Sora 2 from the public, writing in a Tuesday letter to the company and CEO Sam Altman that the app's hasty release so that it could launch ahead of competitors shows a "consistent and dangerous pattern of OpenAI rushing to market with a product that is either inherently unsafe or lacking in needed guardrails." Sora 2, the letter says, shows a "reckless disregard" for product safety, as well as people's rights to their own likeness and the stability of democracy. The group also sent the letter to the U.S. Congress. OpenAI didn't immediately respond to a request for comment Tuesday. 
"Our biggest concern is the potential threat to democracy," said Public Citizen tech policy advocate J.B. Branch in an interview. "I think we're entering a world in which people can't really trust what they see. And we're starting to see strategies in politics where the first image, the first video that gets released, is what people remember." Branch, author of Tuesday's letter, also sees broader concerns to people's privacy that disproportionately impact vulnerable populations online. OpenAI blocks nudity but Branch said that "women are seeing themselves being harassed online" in other ways, such as with fetishized niche content that makes it through the apps' restrictions. The news outlet 404 Media on Friday reported on a flood of Sora-made videos of women being strangled. OpenAI introduced its new Sora app on iPhones more than a month ago. It launched on Android phones last week in the U.S., Canada and several Asian countries, including Japan and South Korea. Much of the strongest pushback has come from Hollywood and other entertainment interests, including the Japanese manga industry. OpenAI announced its first big changes just days after the release, saying "overmoderation is super frustrating" for users but that it's important to be conservative "while the world is still adjusting to this new technology." That was followed by publicly announced agreements with Martin Luther King Jr.'s family on Oct. 16, preventing "disrespectful depictions" of the civil rights leader while the company worked on better safeguards, and another on Oct. 20 with "Breaking Bad" actor Bryan Cranston, the SAG-AFTRA union and talent agencies. "That's all well and good if you're famous," Branch said. "It's sort of just a pattern that OpenAI has where they're willing to respond to the outrage of a very small population. They're willing to release something and apologize afterwards. But a lot of these issues are design choices that they can make before releasing." 
OpenAI has faced similar complaints about its flagship product, ChatGPT. Seven new lawsuits filed last week in California courts claim the chatbot drove people to suicide and harmful delusions even when they had no prior mental health issues. Filed on behalf of six adults and one teenager by the Social Media Victims Law Center and Tech Justice Law Project, the lawsuits claim that OpenAI knowingly released GPT-4o prematurely last year, despite internal warnings that it was dangerously sycophantic and psychologically manipulative. Four of the victims died by suicide.

Public Citizen was not involved in the lawsuits, but Branch said he sees parallels in Sora's hasty release. He said they're "putting the pedal to the floor without regard for harms. Much of this seems foreseeable. But they'd rather get a product out there, get people downloading it, get people who are addicted to it rather than doing the right thing and stress-testing these things beforehand and worrying about the plight of everyday users."

OpenAI spent last week responding to complaints from a Japanese trade association representing famed animators like Hayao Miyazaki's Studio Ghibli and video game makers like Bandai Namco and Square Enix. OpenAI said many anime fans want to interact with their favorite characters, but the company has also set guardrails in place to prevent well-known characters from being generated without the consent of the people who own the copyrights.

"We're engaging directly with studios and rightsholders, listening to feedback, and learning from how people are using Sora 2, including in Japan, where cultural and creative industries are deeply valued," OpenAI said in a statement about the trade group's letter last week.

Nonprofit advocacy group Public Citizen calls for OpenAI to remove its AI video generator Sora 2 from public access, citing concerns about deepfakes, nonconsensual content, and threats to democratic processes. The organization accuses OpenAI of rushing unsafe products to market without adequate safeguards.

Watchdog Group Demands Sora 2 Withdrawal

Nonprofit advocacy organization Public Citizen has formally demanded that OpenAI withdraw its AI video generation app Sora 2 from public access, citing serious concerns about deepfakes, nonconsensual content creation, and potential threats to democratic processes [1]. In a letter sent Tuesday to OpenAI CEO Sam Altman and members of Congress, the organization accused the company of demonstrating a "consistent and dangerous pattern of OpenAI rushing to market with a product that is either inherently unsafe or lacking in needed guardrails" [2].

The letter characterizes Sora 2's release as showing "reckless disregard" for product safety, individual rights to personal likeness, and the stability of democratic institutions [3]. Public Citizen tech policy advocate J.B. Branch, who authored the letter, expressed particular concern about the technology's potential impact on democratic processes, stating that "we're entering a world in which people can't really trust what they see" [4].

Growing Concerns Over AI-Generated Content

Sora 2 enables users to create AI-generated videos from simple text prompts, leading to widespread sharing across social media platforms including TikTok, Instagram, X, and Facebook [5]. While many videos are designed to be amusing, such as fake doorbell camera footage featuring unusual scenarios, advocacy groups warn about the proliferation of more harmful content, including nonconsensual images and realistic deepfakes.

The news outlet 404 Media recently reported a surge in Sora-generated videos depicting women in violent scenarios, highlighting the platform's potential for creating disturbing content that bypasses existing restrictions [1]. Branch noted that while OpenAI blocks explicit nudity, "women are seeing themselves being harassed online" through other forms of fetishized content that circumvent the platform's safeguards.

OpenAI's Reactive Approach to Safety

OpenAI has implemented restrictions on creating AI videos of public figures, but only after facing significant backlash from family estates and entertainment industry unions [2]. The company reached agreements with Martin Luther King Jr.'s family on October 16 to prevent "disrespectful depictions" of the civil rights leader, followed by another agreement on October 20 with actor Bryan Cranston and the SAG-AFTRA union.

Branch criticized this reactive approach, noting that OpenAI appears "willing to respond to the outrage of a very small population" while ignoring broader safety concerns affecting ordinary users [4]. He argued that many safety issues represent "design choices that they can make before releasing" rather than problems requiring post-launch fixes.

Broader Pattern of Premature Releases

The Sora 2 controversy emerges amid broader criticism of OpenAI's product development practices. Seven new lawsuits filed last week in California courts allege that the company's ChatGPT chatbot contributed to suicides and psychological harm, with plaintiffs claiming OpenAI released GPT-4o despite internal warnings about its potentially manipulative behavior [1]. Four individuals died by suicide in cases connected to these lawsuits.

Branch drew parallels between the ChatGPT lawsuits and Sora 2's release, arguing that OpenAI consistently prioritizes market competition over user safety. "They'd rather get a product out there, get people downloading it, get people who are addicted to it rather than doing the right thing and stress-testing these things beforehand," he stated [5].

OpenAI launched Sora 2 on iPhones over a month ago and expanded to Android devices last week across the United States, Canada, and several Asian countries including Japan and South Korea. The company has not responded to requests for comment regarding Public Citizen's demands.

Summarized by Navi
