Runway launches GWM-1 world model, expanding beyond video generation into robotics and AI agents

2 Sources

[1]

With GWM-1 family of "world models," Runway shows ambitions beyond Hollywood

AI company Runway has announced what it calls its first world model, GWM-1. It's a significant step in a new direction for a company that has made its name primarily on video generation, and it's part of a wider gold rush to build a new frontier of models as large language models and image and video generation move into a refinement phase and stop being an untapped frontier.

GWM-1 is a blanket term for a trio of autoregressive models, each built on top of Runway's Gen-4.5 text-to-video generation model and then post-trained with domain-specific data for different kinds of applications. Here's what each does.

GWM Worlds offers an interface for digital environment exploration with real-time user input that affects the generation of coming frames, which Runway suggests can remain consistent and coherent "across long sequences of movement." Users can define the nature of the world -- what it contains and how it appears -- as well as rules like physics. They can give it actions or changes that will be reflected in real-time, like camera movements or descriptions of changes to the environment or the objects in it. As the methodology here is basically an advanced form of frame prediction, it might be a stretch to say these are full-on world simulations, but the claim is that they're reliable enough to be usable as such.

Potential applications include pre-visualization and early iteration for game design and development, generation of virtual reality environments, or educational explorations of historical spaces. There's also a major use case that takes this outside Runway's usual area of focus: World models like this can be used to train AI agents of various types, including robots.

The second model, GWM Robotics, does just that. It can be used "to generate synthetic training data that augments your existing robotics datasets across multiple dimensions, including novel objects, task instructions, and environmental variations."

[2]

Runway releases its first world model, adds native audio to latest video model | TechCrunch

The race to release world models is on as AI image and video generation company Runway joins an increasing number of startups and big tech companies by launching its first one. Dubbed GWM-1, the model works through frame-by-frame prediction, creating a simulation with an understanding of physics and how the world actually behaves over time, the company said. A world model is an AI system that learns an internal simulation of how the world works so it can reason, plan, and act without needing to be trained on every scenario possible in real life.

Runway, which earlier this month launched its Gen 4.5 video model that surpassed both Google and OpenAI on the Video Arena leaderboard, said its GWM-1 world model is more "general" than Google's Genie-3 and other competitors. The firm is pitching it as a model that can create simulations to train agents in different domains like robotics and life sciences.

Runway released specific slants to the new world model called GWM-Worlds, GWM-Robotics, and GWM-Avatars. GWM-Worlds is an app for the model that lets you create an interactive project. Users can set a scene through a prompt, and as you explore the space, the model generates the world with an understanding of geometry, physics, and lighting. Runway said that while Worlds could be useful for gaming, it's also well positioned to teach agents how to navigate and behave in the physical world.

With GWM-Robotics, the company aims to use synthetic data enriched with new parameters like changing weather conditions or obstacles. Runway says this method could also reveal when and how robots might violate policies and instructions in different scenarios.

Runway is also building realistic avatars under GWM-Avatars to simulate human behavior. Companies like D-ID, Synthesia, Soul Machines, and even Google have worked on creating human avatars that look real and work in areas like communication and training.

Besides releasing a new world model, the company is also updating its foundational Gen 4.5 model released earlier in the month. The new update brings native audio and long-form, multi-shot generation capabilities to the model. The company said that with this model, users can generate one-minute videos with character consistency, native dialogue, background audio, and complex shots from various angles. The Gen 4.5 update nudges Runway closer to competitor Kling's all-in-one video suite, which also launched earlier this month, particularly around native audio and multi-shot storytelling. It also signals that video generation models are moving from prototype to production-ready tools.

Runway's updated Gen 4.5 model will be available to enterprise customers first and then to all paid plan users in the coming weeks. The company said that it will make GWM-Robotics available through an SDK. It added that it is in active conversation with several robotics firms and enterprises for the use of GWM-Robotics and GWM-Avatars.

Runway has unveiled GWM-1, its first world model that creates simulations with physics understanding. The company also updated its Gen 4.5 video model with native audio and long-form generation, signaling a shift from video generation into broader AI applications including robotics and agent training.

Runway Unveils GWM-1 World Model for AI Agent Training

Runway has announced GWM-1, its first world model, which marks a strategic pivot beyond the company's established reputation in AI-driven video generation [1]. The system works through frame-by-frame prediction to create a simulation with an understanding of physics and how the world behaves over time [2]. This development positions Runway alongside an increasing number of startups and tech companies racing to build world models as large language models and video generation enter a refinement phase.

GWM-1 functions as a blanket term for three autoregressive models, each built on top of Runway's Gen 4.5 text-to-video generation model and then post-trained with domain-specific data for different applications [1]. Runway is pitching it as more "general" than Google's Genie-3 and other competitors, emphasizing its capacity to create simulations for training AI agents across domains like robotics and life sciences [2].

GWM Worlds Enables Real-Time Digital Environment Exploration

GWM Worlds offers an interface for real-time digital environment exploration where user input affects the generation of coming frames [1]. Users can define the nature of the world, including what it contains and how it appears, as well as establish rules like physics. The system allows for actions or changes reflected in real-time, such as camera movements or descriptions of environmental modifications. Runway claims these simulations can remain consistent and coherent across long sequences of movement.
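The idea of user input steering "coming frames" can be illustrated with a toy autoregressive rollout. This is a minimal sketch, not Runway's implementation: `toy_predictor`, `rollout`, the `context` window, and the choice to represent a frame as a 2D camera position are all assumptions made for illustration. The only point it shows is the loop structure, in which each predicted frame is fed back as input for the next step together with the latest user action.

```python
from typing import Callable, List, Sequence, Tuple

Frame = Tuple[float, float]   # toy "frame": just a 2D camera position (assumption)
Action = Tuple[float, float]  # toy user input: a camera movement (assumption)


def toy_predictor(history: Sequence[Frame], action: Action) -> Frame:
    """Hypothetical stand-in for a learned next-frame model."""
    x, y = history[-1]
    dx, dy = action
    return (x + dx, y + dy)


def rollout(
    predict: Callable[[Sequence[Frame], Action], Frame],
    first_frame: Frame,
    actions: Sequence[Action],
    context: int = 4,
) -> List[Frame]:
    """Generate frames one at a time, feeding each output back as input."""
    frames = [first_frame]
    for action in actions:
        window = frames[-context:]  # the predictor only sees recent frames
        frames.append(predict(window, action))
    return frames


frames = rollout(toy_predictor, (0.0, 0.0), [(1.0, 0.0), (0.0, 2.0), (-1.0, 0.0)])
print(frames)
```

Because every step depends on the model's own earlier output, small errors can compound over a long rollout, which is why consistency "across long sequences of movement" is the claim that matters for this kind of system.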

Source: Ars Technica

Potential applications extend to game design and development for pre-visualization and early iteration, generation of virtual reality environments, and educational explorations of historical spaces

1

. While the methodology relies on advanced frame prediction rather than full-on world simulations, Runway suggests the technology is reliable enough for practical use. The company noted that while GWM Worlds could prove useful for gaming, it's also well positioned to teach agents how to navigate and behave in the physical world2

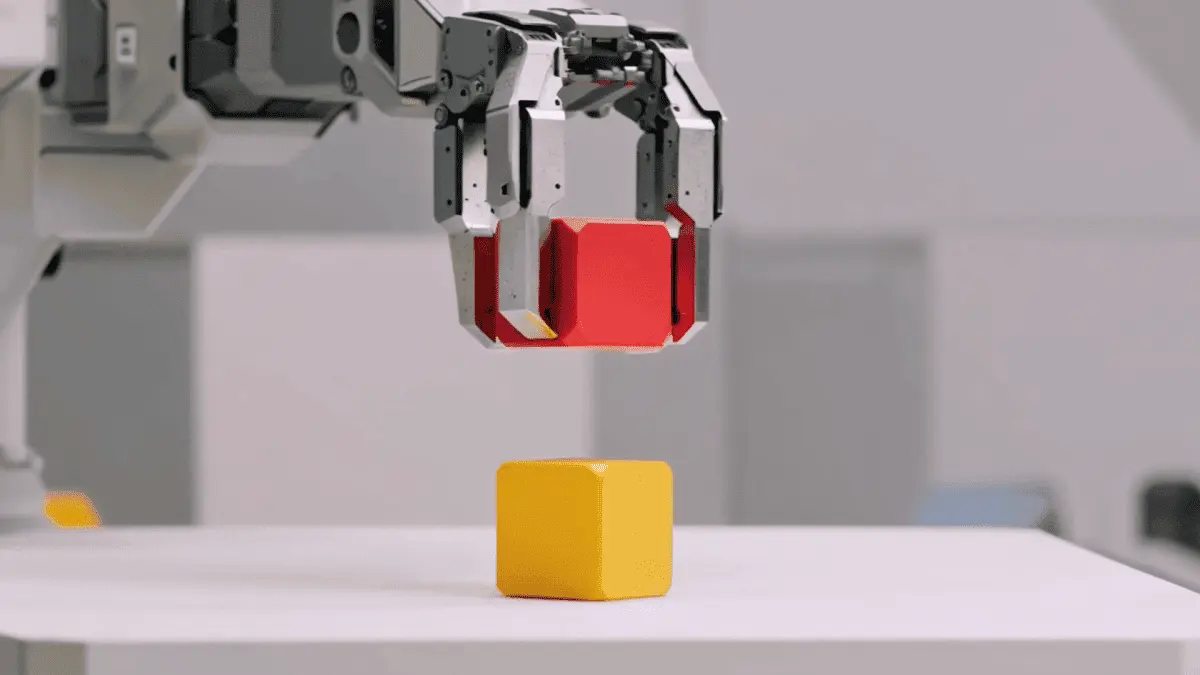

.GWM Robotics Generates Synthetic Training Data for Robot Development

The second model variant, GWM Robotics, addresses a major use case that extends beyond Runway's traditional focus area [1]. The system generates synthetic training data that augments existing robotics datasets across multiple dimensions, including novel objects, task instructions, and environmental variations. Runway aims to enrich this synthetic data with new parameters such as changing weather conditions or obstacles [2].
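Augmenting a dataset "across multiple dimensions" can be pictured as taking each recorded episode and enumerating combinations of alternative objects, instructions, and environments. The sketch below is an assumption-laden illustration only: the `Episode` fields and the `augment` function are hypothetical, it is not the GWM Robotics SDK (which the sources do not describe), and a real world model would generate video for each scenario where this code merely enumerates scenario labels.

```python
from dataclasses import dataclass, replace
from itertools import product
from typing import List, Sequence


@dataclass(frozen=True)
class Episode:
    obj: str          # object being manipulated (hypothetical field)
    instruction: str  # task instruction given to the robot (hypothetical field)
    environment: str  # environmental condition (hypothetical field)


def augment(
    base: Sequence[Episode],
    objects: Sequence[str],
    instructions: Sequence[str],
    environments: Sequence[str],
) -> List[Episode]:
    """Return new scenario variants that differ from each base episode."""
    variants: List[Episode] = []
    for ep in base:
        # Each dimension may keep its original value or take a new one.
        combos = product(
            [ep.obj, *objects],
            [ep.instruction, *instructions],
            [ep.environment, *environments],
        )
        for obj, instruction, environment in combos:
            candidate = replace(
                ep, obj=obj, instruction=instruction, environment=environment
            )
            # Skip the unchanged original and any duplicate variant.
            if candidate != ep and candidate not in variants:
                variants.append(candidate)
    return variants


base = [Episode("mug", "pick up", "clear")]
variants = augment(base, ["bottle"], ["push aside"], ["rain"])
print(len(variants))
```

With one alternative per dimension, a single base episode yields seven variants (every combination except the original), which shows how quickly coverage can grow compared with collecting each scenario on real hardware.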

Source: TechCrunch

The company says this method could also reveal when and how robots might violate policies and instructions in different scenarios, offering a testing ground for safety and compliance before real-world deployment [2]. Runway confirmed it will make GWM Robotics available through an SDK and is in active conversations with several robotics firms and enterprises for deployment.

GWM-Avatars Simulates Human Behavior for Communication Applications

Runway is also building realistic avatars under GWM-Avatars to simulate human behavior [2]. This positions the company in competition with established players like D-ID, Synthesia, Soul Machines, and Google, which have worked on creating human avatars that appear realistic and function in areas like communication and training. The company is currently in active discussions with enterprises regarding the deployment of GWM-Avatars alongside GWM Robotics.

Gen 4.5 Update Brings Native Audio and Long-Form Multi-Shot Generation

Beyond releasing its world model, Runway is updating its foundational Gen 4.5 model that launched earlier this month and surpassed both Google and OpenAI on the Video Arena leaderboard [2]. The new update introduces native audio and long-form multi-shot generation capabilities. Users can now generate one-minute videos with character consistency, native dialogue, background audio, and complex shots from various angles.

This update nudges Runway closer to competitor Kling's all-in-one video suite, which also launched earlier this month, particularly around native audio and multi-shot storytelling [2]. The enhanced Gen 4.5 model will be available to enterprise customers first before rolling out to all paid plan users in the coming weeks, signaling that video generation models are moving from prototype to production-ready tools.

Summarized by

Navi
