Signal founder Moxie Marlinspike launches Confer, an encrypted AI chatbot alternative to ChatGPT

7 Sources

[1]

Signal creator Moxie Marlinspike wants to do for AI what he did for messaging

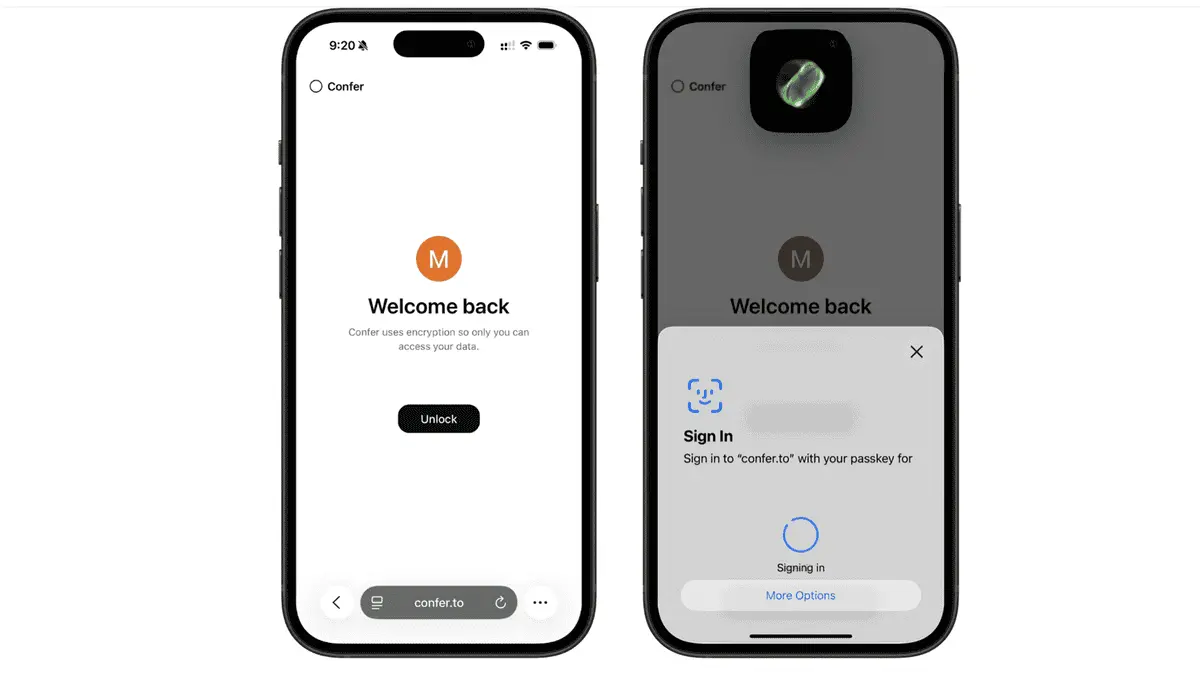

Moxie Marlinspike -- the pseudonym of an engineer who set a new standard for private messaging with the creation of the Signal Messenger -- is now aiming to revolutionize AI chatbots in a similar way.

His latest brainchild is Confer, an open source AI assistant that provides strong assurances that user data is unreadable to the platform operator, hackers, law enforcement, or any party other than account holders. The service -- including its large language models and back-end components -- runs entirely on open source software that users can cryptographically verify is in place.

Data and conversations originating from users and the resulting responses from the LLMs are encrypted in a trusted execution environment (TEE) that prevents even server administrators from peeking at or tampering with them. Conversations are stored by Confer in the same encrypted form, which uses a key that remains securely on users' devices.

Like Signal, the under-the-hood workings of Confer are elegant in their design and simplicity. Signal was the first end-user privacy tool that was a snap to use. Prior to that, using PGP email or other options to establish encrypted channels between two users was a cumbersome process that was easy to botch. Signal broke that mold. Key management was no longer a task users had to worry about. Signal was designed to prevent even the platform operators from peering into messages or identifying users' real-world identities.

"Inherent data collectors"

All major platforms are required to turn over user data to law enforcement or private parties in a lawsuit when either provides a valid subpoena. Even when users opt out of having their data stored long term, parties to a lawsuit can compel the platform to store it, as the world learned last May when a court ordered OpenAI to preserve all ChatGPT users' logs -- including deleted chats and sensitive chats logged through its API business offering. Sam Altman, CEO of OpenAI, has said such rulings mean even psychotherapy sessions on the platform may not stay private. Another carve-out to opting out: AI platforms like Google Gemini may have humans read chats.

Data privacy expert Em (she keeps her last name off the Internet) called AI assistants the "archnemesis" of data privacy because their utility relies on assembling massive amounts of data from myriad sources, including individuals.

"AI models are inherent data collectors," she told Ars. "They rely on large data collection for training, improvements, operations, and customizations. More often than not, this data is collected without clear and informed consent (from unknowing training subjects or from platform users), and is sent to and accessed by a private company with many incentives to share and monetize this data."

The lack of user control is especially problematic given the nature of LLM interactions, Marlinspike says. Users often treat dialogue as an intimate conversation. Users share their thoughts, fears, transgressions, business dealings, and deepest, darkest secrets as if AI assistants are trusted confidants or personal journals. The interactions are fundamentally different from traditional web search queries, which usually adhere to a transactional model of keywords in and links out.

He likens AI use to confessing into a "data lake."

Awaking from the nightmare that is today's AI landscape

In response, Marlinspike has developed and is now trialing Confer. In much the way Signal uses encryption to make messages readable only to parties participating in a conversation, Confer protects user prompts, AI responses, and all data included in them. And just like Signal, there's no way to tie individual users to their real-world identity through their email address, IP address, or other details.

"The character of the interaction is fundamentally different because it's a private interaction," Marlinspike told Ars. "It's been really interesting and encouraging and amazing to hear stories from people who have used Confer and had life-changing conversations. In part because they haven't felt free to include information in those conversations with sources like ChatGPT or they had insights using data that they weren't really free to share with ChatGPT before but can using an environment like Confer."

One of the main ingredients of Confer encryption is passkeys. The industry-wide standard generates a 32-byte encryption keypair that's unique to each service a user logs into. The public key is sent to the server. The private key is stored only on the user device, inside protected storage hardware that hackers (even those with physical access) can't access. Passkeys provide two-factor authentication and can be configured to log in to an account with a fingerprint, face scan (both of which also stay securely on a device), or a device unlock PIN or passcode.

The private key allows the device to log in to Confer and encrypt all input and output with encryption that's widely believed to be impossible to break. That allows users to store conversations on Confer servers with confidence that they can't be read by anyone other than themselves. The storage allows conversations to sync across other devices the user owns. The code making this all work is available for anyone to inspect. It looks like this:

    const assertion = await navigator.credentials.get({
      mediation: "optional",
      publicKey: {
        challenge: crypto.getRandomValues(new Uint8Array(32)),
        allowCredentials: [{ id: credId, type: "public-key" }],
        userVerification: "required",
        extensions: { prf: { eval: { first: new Uint8Array(salt) } } }
      }
    }) as PublicKeyCredential;

    const { prf } = assertion.getClientExtensionResults();
    const rawKey = new Uint8Array(prf.results.first);

This robust internal engine is fronted by a user interface (shown in the two images above) that's deceptively simple. In just two strokes, a user is logged in, and all previous chats are decrypted. These chats are then available to any device logged into the same account. This way, Confer can sync chats without compromising privacy. The ample 32 bytes of key material allow the private key to change regularly, a feature that allows for forward secrecy, meaning that in the event a key is compromised, an attacker cannot read previous or future chats.

The other main Confer ingredient is a TEE on the platform servers. TEEs encrypt all data and code flowing through the server CPU, protecting them from being read or modified by someone with administrative access to the machine. The Confer TEE also provides remote attestation. Remote attestation is a digital certificate sent by the server that cryptographically verifies that data and software are running inside the TEE and lists all software running on it.

On Confer, remote attestation allows anyone to reproduce the bit-by-bit outputs that confirm that the publicly available proxy and image software -- and only that software -- is running on the server. To further verify Confer is running as promised, each release is digitally signed and published in a transparency log.

Native support for Confer is available in the most recent versions of macOS, iOS, and Android. On Windows, users must install a third-party authenticator. Linux support also doesn't exist, although this extension bridges that gap.

There are other private LLMs, but none from the big players

Another publicly available LLM offering E2EE is Lumo, provided by Proton, a European company that's behind the popular encrypted email service. It adopts the same encryption engine used by Proton Mail, Drive, and Calendar. The internals of the engine are considerably more complicated than Confer's because they rely on a series of both symmetric and asymmetric keys. The end result for the user is largely the same, however.

Once a user authenticates to their account, Proton says, all conversations, data, and metadata are encrypted with a symmetric key that only the user has. Users can opt to store the encrypted data on Proton servers for device syncing or have it wiped immediately after the conversation is finished.

A third LLM provider promising privacy is Venice. It stores all data locally, meaning on the user device. No data is stored on the remote server.

Most of the big LLM platforms offer a means for users to exempt their conversations and data from marketing and training purposes. But as noted earlier, these promises often come with major carve-outs. Besides selected review by humans, personal data may still be used to enforce terms of service or for other internal purposes, even when users have opted out of default storage.

Given today's legal landscape -- which allows most data stored online to be obtained with a subpoena -- and the regular occurrence of blockbuster data breaches by hackers, there can be no reasonable expectation that personal data remains private. It would be great if big providers offered end-to-end encryption protections, but there's currently no indication they plan to do so. Until then, there are a handful of smaller alternatives that will keep user data out of the ever-growing data lake.
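To make the passkey-derived encryption described above more concrete, here is a minimal sketch of how 32 bytes of PRF output could be turned into a key that encrypts a chat message. It is not Confer's code: the function names (`deriveChatKey`, `sealMessage`, `openMessage`), the `INFO` label, and the choice of HKDF-SHA-256 with AES-256-GCM are all assumptions made for illustration, and it uses Node's `node:crypto` module rather than the browser APIs shown in the article's snippet.

```typescript
import { createCipheriv, createDecipheriv, hkdfSync, randomBytes } from "node:crypto";

// Hypothetical context label; binds derived keys to one purpose.
const INFO = "example-chat-encryption-v1";

// Stretch the raw PRF output (assumed to be 32 bytes, like `rawKey` in the
// article's snippet) into a 256-bit symmetric key with HKDF-SHA-256.
function deriveChatKey(prfOutput: Uint8Array, salt: Uint8Array): Buffer {
  return Buffer.from(hkdfSync("sha256", prfOutput, salt, INFO, 32));
}

// Hypothetical container for one encrypted message.
interface SealedMessage {
  iv: Buffer;         // 96-bit nonce, unique per message
  ciphertext: Buffer; // encrypted message bytes
  tag: Buffer;        // GCM authentication tag
}

// Encrypt a message on the device before it is stored or synced.
function sealMessage(key: Buffer, plaintext: string): SealedMessage {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  return { iv, ciphertext, tag: cipher.getAuthTag() };
}

// Decrypt a message; throws if the key is wrong or the data was modified.
function openMessage(key: Buffer, sealed: SealedMessage): string {
  const decipher = createDecipheriv("aes-256-gcm", key, sealed.iv);
  decipher.setAuthTag(sealed.tag);
  return Buffer.concat([decipher.update(sealed.ciphertext), decipher.final()]).toString("utf8");
}
```

Under these assumptions, a server that stores only the `SealedMessage` fields never sees the key, which matches the article's point that stored conversations are readable only by the account holder. A second device that obtains the same PRF output from the synced passkey could derive the same key and decrypt the same history.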

[2]

Moxie Marlinspike has a privacy-conscious alternative to ChatGPT | TechCrunch

If you're at all concerned about privacy, the rise of AI personal assistants can feel alarming. It's difficult to use one without sharing personal information, which is retained by the model's parent company. With OpenAI already testing advertising, it's easy to imagine the same data collection that fuels Facebook and Google creeping into your chatbot conversations. A new project, launched in December by Signal co-founder Moxie Marlinspike, is showing what a privacy-conscious AI service might look like. Confer is designed to look and feel like ChatGPT or Claude, but the backend is arranged to avoid data collection, with the open-source rigor that makes Signal so trusted. Your Confer conversations can't be used to train the model or target ads, for the simple reason that the host will never have access to them. For Marlinspike, those protections are a response to the intimate nature of the service. "It's a form of technology that actively invites confession," says Marlinspike. "Chat interfaces like ChatGPT know more about people than any other technology before. When you combine that with advertising, it's like someone paying your therapist to convince you to buy something." Ensuring that privacy requires several different systems working in concert. First, Confer encrypts messages to and from the system using the WebAuthn passkey system. (Unfortunately, that standard works best on mobile devices or Macs running Sequoia, although you can also make it work on Windows or Linux with a password manager.) On the server side, all Confer's inference processing is done in a Trusted Execution Environment (TEE), with remote attestation systems in place to verify the system hasn't been compromised. Inside that, there's an array of open-weight foundation models handling whatever query comes in. The result is a lot more complicated than a standard inference setup (which is fairly complicated already), but it delivers on Confer's basic promise to users. 
As long as those protections are in place, you can have sensitive conversations with the model without any information leaking out. Confer's free tier is limited to 20 messages a day and five active chats. Users willing to pay $35 a month will get unlimited access, along with more advanced models and personalization. That's quite a bit more than ChatGPT's Plus plan -- but privacy doesn't come cheap.

[3]

Worried about AI privacy? This new tool from Signal's founder adds end-to-end encryption to your chats

ZDNET's key takeaways

* Moxie Marlinspike's latest project is Confer, a privacy-conscious alternative to ChatGPT.
* The concept that underpins Confer is "that your conversations with an AI assistant should be as private as your conversations with a person."
* The developer says conversations remain private and can't be stored or used for training purposes by third parties.

Moxie Marlinspike, the mind behind the secure messaging app Signal, has launched an alternative to AI chatbot ChatGPT that focuses on user privacy and security.

It's probably no surprise that the popularity of ChatGPT is leading it down a well-worn path: the arrival of ads. However, this shift also highlights how our data is currency that tech organizations are falling over themselves to profit from, as well as growing data privacy concerns connected with AI chatbots.

Experts have warned of privacy and security challenges with AI technologies. Yet over 40% of workers have shared sensitive information with AI, according to research from the National Cybersecurity Alliance. So, could encryption be the solution to the privacy challenge?

Enter Confer

Moxie Marlinspike, cryptography expert and the founder of Signal, wants to create a step change. In December, he launched Confer, described as "end-to-end encryption for AI chats."

"With Confer, your conversations are encrypted so that nobody else can see them," Marlinspike said in a blog post. "Confer can't read them, train on them, or hand them over -- because only you have access to them."

According to TIME, Confer uses different AI models for different tasks, sourced from the open source community. More advanced modeling may be available in premium subscriptions.

Confer uses passkey encryption, server encryption, and the WebAuthn PRF extension. Typically, end-to-end encryption relies on private keys and local devices, and providing the same level of protection when accessing a web service poses challenges -- as even if passkeys are stored on your device, prompts and responses could be exposed.

To resolve this issue, Confer operates in an isolated Trusted Execution Environment (TEE) and uses remote attestation, which allows anyone to verify code running on its servers. Each release is signed and published to a transparency log.

"Confer combines confidential computing with passkey-derived encryption to ensure your data remains private," Marlinspike said. "This is different from traditional AI services, where your prompts are transmitted in plaintext to an operator who stores them in plaintext (where they are vulnerable to hackers, employees, subpoenas), mines them for behavioral data, and trains on them."

How to try Confer

Once you've signed up with an email address and received a sign-in link, Confer will generate a passkey for you -- if your system supports passkey encryption. While it works best on Mac and Android, Windows and Linux users will need a compatible password manager.

You'll then have access to the Confer dashboard, and if you've used ChatGPT in the past, this area will feel familiar. Start a conversation -- it's that simple. Free and paid options are available, although the free option has limitations at this stage of development.

"The core idea is that your conversations with an AI assistant should be as private as your conversations with a person," the developer says. "Not because you're doing something wrong, but because privacy is what lets you think freely."

Confer's future

Confer appears to be growing rapidly in popularity. Following its recent launch, Marlinspike said on X: "It has been cool to see so many people using Confer over the past few weeks, and I've been working to keep scaling up the backend! I remember the early Signal 24hr sustained traffic milestones (1 msg/sec, 10/s, 100/s, ...) and it's fun to see traffic climb through those again."

Confer is in active development, with new features added frequently. One of the latest upgrades is the option to import your ChatGPT or Claude conversations. An iOS app is also on the horizon.

It will be interesting to see how this project progresses and whether the idea of encrypted conversations with an AI model captures the general public's interest in the same way Signal did.

[4]

Signal's Founder Turns His Attention to AI's Privacy Problem

Confer, an open source chatbot, encrypts both prompts and responses so companies and advertisers can't access user data.

The founder of Signal has been quietly working on a fully end-to-end encrypted, open-source AI chatbot designed to keep users' conversations secret. In a series of blog posts, Moxie Marlinspike makes clear that while he is a fan of large language models, he's uneasy about how little privacy most AI platforms currently provide.

Marlinspike argues that, like Signal, a chatbot's interface should accurately reflect what's happening under the hood. Signal looks like a private one-on-one conversation because it is one. Meanwhile, chatbots like ChatGPT and Claude feel like a safe space for intimate exchanges or a private journal, even though users' conversations can be accessed by the company behind them and sometimes used for training. In other words, if a chatbot feels like you're having a private conversation, Marlinspike says it should actually work that way too.

He says this is especially important because LLMs represent the first major tech medium that "actively invites confession." As people chat with these systems, they end up sharing a lot about how their brain works, including thinking patterns and uncertainties. Marlinspike warns that this kind of info could easily be turned against users, with advertisers eventually exploiting insights about them to sell products or influence behavior.

His proposed solution is Confer, an AI chatbot that encrypts both prompts and responses so that only the user can access them.

"Confer is designed to be a service where you can explore ideas without your own thoughts potentially conspiring against you someday; a service that breaks the feedback loop of your thoughts becoming targeted ads becoming thoughts; a service where you can learn about the world -- without data brokers and future training runs learning about you instead," wrote Marlinspike.

Signal was founded in 2014 around similar principles, and its open-source encrypted messaging protocol was eventually adopted by Meta's WhatsApp just a few years later. So, it's possible Meta and other tech giants could eventually adopt Confer's technology as well.

According to Marlinspike, Confer is designed so that users' conversations are encrypted before they ever leave their devices, similar to how Signal works. Prompts are encrypted on a user's computer or phone and sent to Confer's servers in that form, then decrypted only in a secure data environment to generate a response.

Confer does this by using a mix of security tools. Instead of traditional passwords, it uses passkeys, such as Face ID, Touch ID, or a device unlock PIN on verified users' devices, to derive encryption keys.

When it comes time for the AI to respond, Confer uses what it calls confidential computing, where hardware-enforced isolation is used to run code in a Trusted Execution Environment (TEE).

"The host machine provides CPU, memory, and power, but cannot access the TEE's memory or execution state," Marlinspike explained.

With the LLM's "thinking," or inference, running in a confidential virtual machine, the response is then encrypted and sent back to the user. The hardware also produces cryptographic proof, known as attestation, that allows a user's device to verify that everything is running as it should.
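The attestation check described above can be pictured with a small sketch. This is a simplified illustration under stated assumptions, not Confer's verifier: the `AttestationReport` shape and the function names are hypothetical, and hashing the raw image with SHA-256 stands in for the hardware-computed launch measurement that a real TEE produces. A real client would also have to validate the report's hardware-rooted signature chain before trusting any field in it.

```typescript
import { createHash, timingSafeEqual } from "node:crypto";

// Hypothetical, heavily reduced attestation report. Real reports carry many
// more fields plus a signature chain rooted in the CPU vendor's keys.
interface AttestationReport {
  measurementHex: string; // digest of the software the TEE says it launched
}

// Simplification: measure a build by hashing its bytes with SHA-256.
function measureImage(image: Uint8Array): Buffer {
  return createHash("sha256").update(image).digest();
}

// Compare the measurement reported by the server with the digest of a build
// the user reproduced locally from the published source.
function matchesPublishedBuild(report: AttestationReport, reproducedImage: Uint8Array): boolean {
  const expected = measureImage(reproducedImage);
  const reported = Buffer.from(report.measurementHex, "hex");
  // timingSafeEqual throws on unequal lengths, so check the length first.
  return reported.length === expected.length && timingSafeEqual(reported, expected);
}
```

The design point the sketch tries to capture is that the client, not the operator, decides what counts as acceptable software: if the reported measurement does not match a build the client can reproduce, the client can refuse to send its prompt.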

[5]

Signal's founder is taking on ChatGPT -- here's why the 'truly private AI' can't leak your chats

Unlike ChatGPT or Gemini, Confer doesn't collect or store your data for training, logging, or legal access The man who made private messaging mainstream now wants to do the same for AI. Signal creator Moxie Marlinspike has launched a new AI assistant called Confer, built around similar privacy principles. Conversations with Confer can't be read even by server administrators. The platform encrypts every part of the user interaction by default and runs in what's called a trusted execution environment, never letting sensitive user data leave that encrypted bubble. There's no saved data checked on, used for training, or sold to other companies. Confer is an outlier in this way, as data is usually considered the value of making an AI chatbot free. But as consumer trust in AI privacy is already strained, the appeal is obvious. People are noticing that what they say to these systems doesn't always stay private. A court order last year forced OpenAI to retain all ChatGPT user logs, even deleted ones, for potential legal discovery, and ChatGPT chats even showed up in Google Search results for a while, thanks to accidentally public links. There was also an uproar over contractors reviewing anonymized chatbot transcripts that included personal health information. Confer's data is encrypted before it even reaches the server, using passkeys stored only on the user's device. Those keys are never uploaded or shared. Confer supports syncing chats between devices, yet thanks to cryptographic design choices not even Confer's creators can unlock them. It's ChatGPT with Signal security. Confer's design goes one step further than most privacy-first products by offering a feature called remote attestation. This allows any user to verify exactly what code is running on Confer's servers. The platform publishes the software stack in full, and digitally signs every release. This may not matter to every user. 
But for developers, organizations, and watchdogs trying to assess how their data is handled, it's a radical level of security that might allow some concerned AI chatbot fans to breathe easier. Not that there aren't privacy settings on other AI chatbots. There are actually quite a few that users can review, even if they don't think to do so until after they've already said something personal. ChatGPT, Gemini, and Meta AI all provide opt-out toggles for things like chat history, allowing data to be used for training, or outright removing data. But the default state is surveillance, and opting out is a user's responsibility. Confer inverts that setup by making the most private setup the default. It's baked in, though, which also highlights how most privacy tools are reactive. It might at least raise awareness, if not consumer demand for more AI chatbots that forget. Organizations like schools and hospitals interested in AI might be enticed by tools that guarantee confidentiality by design.

[6]

An Encrypted Chatbot by Signal's Founder

New paradigm -- That's a clean break from the current way of doing things. When you interact with existing chatbots -- unless you have a powerful computer running an open-source AI system -- your data is not held privately. That's especially true for the most useful models, which are closely guarded by AI companies, and far too big to run on a local machine anyway. Even though it may feel as intimate as a private chat, the reality is quite different. Marlinspike writes that users appear to be engaged in a conversation with an assistant, but an "honest representation" would be more akin to a group chat with "executives and employees, their business partners / service providers, the hackers who will compromise that plaintext data, the future advertisers who will almost certainly emerge, and the lawyers and governments who will subpoena access." Anti-surveillance -- For AI companies trying to generate profitable returns on the capital expenditure of building frontier AI systems, that data is a potential goldmine. Your AI chat logs reveal how you think, Marlinspike argues. As such, they could be the key for a profoundly more powerful -- and manipulative -- form of advertising, which is inevitably coming soon: "It will be as if a third party pays your therapist to convince you of something," Marlinspike writes.

[7]

Signal co-founder launches privacy-focused AI service Confer

Signal co-founder Moxie Marlinspike has unveiled Confer, a new privacy-focused AI service designed to offer the utility of chatbots like ChatGPT without the associated data surveillance. Launched in December, the project aims to counter the growing trend of AI models retaining personal information for training and advertising purposes. Marlinspike argues that current AI assistants invite intimate disclosure, creating a dangerous dynamic when paired with the profit motives of big tech. "Chat interfaces like ChatGPT know more about people than any other technology before," Marlinspike noted. "When you combine that with advertising, it's like someone paying your therapist to convince you to buy something." To prevent this, Confer is built on a rigorous architecture that ensures the host never has access to user conversations. The system employs WebAuthn passkeys to encrypt messages on the user's device and processes all inference within a Trusted Execution Environment (TEE) on the server side. Remote attestation systems verify that the server hasn't been compromised, while open-weight foundation models handle the actual queries inside this secure enclave. The service operates on a freemium model. A free tier restricts users to 20 messages a day and five active chats, while a $35 per month subscription offers unlimited access, advanced models, and personalization features. While significantly more expensive than competitors like ChatGPT Plus, Confer pitches the premium as the necessary cost of true privacy.

Moxie Marlinspike, creator of Signal, has launched Confer—an open source AI chatbot that encrypts user conversations so thoroughly that even server administrators can't access them. Unlike ChatGPT or other mainstream AI assistants, Confer uses passkeys, Trusted Execution Environments, and remote attestation to ensure prompts and responses remain private, addressing growing concerns about data collection in AI platforms.

Signal Founder Tackles AI Privacy with Confer Launch

Moxie Marlinspike, the engineer behind Signal Messenger, has unveiled Confer, a privacy-focused AI service designed to address mounting concerns about user data privacy in artificial intelligence platforms [1]. Launched in December, this alternative to ChatGPT represents an attempt to apply the same end-to-end encryption principles that made Signal trusted by millions to the world of AI chatbots [2]. The open source platform encrypts both user prompts and AI responses, ensuring that conversations remain inaccessible to platform operators, hackers, law enforcement, or advertisers [1].

The timing reflects growing unease about how mainstream AI platforms handle sensitive information. Last May, a court ordered OpenAI to preserve all ChatGPT user logs, including deleted chats and sensitive conversations from API business offerings, highlighting how user data remains vulnerable to legal subpoenas [1]. Sam Altman, CEO of OpenAI, acknowledged that even psychotherapy sessions on the platform may not stay private under such rulings. With OpenAI already testing advertising, concerns intensify about the same data collection practices that fuel Facebook and Google creeping into chatbot conversations [2].

How Confer Achieves Truly Private AI Conversations

Confer's architecture combines multiple security layers to deliver on its privacy promise. The AI chatbot uses passkeys, an industry-wide standard that generates a 32-byte encryption keypair unique to each service [1]. The public key goes to the server, while the private key remains securely stored on user devices in protected hardware that even physical attackers cannot access. Users authenticate through fingerprints, face scans, or device unlock PINs, all of which stay locally on their devices [1].

The platform leverages WebAuthn and passkey encryption to encrypt messages before they leave user devices [3]. On the server side, all processing happens inside Trusted Execution Environments (TEEs), hardware-enforced isolated spaces where the host machine provides CPU, memory, and power but cannot access the TEE's memory or execution state [4]. Data and conversations originating from users and resulting responses from large language models are encrypted within these TEEs, preventing even server administrators from viewing or tampering with them [1].

Confer employs remote attestation, allowing anyone to verify what code runs on its servers [3]. Each release is digitally signed and published to a transparency log, offering developers and organizations a way to assess how their data is handled [5]. This confidential computing approach means prompts are encrypted on a user's device, sent to Confer's servers in encrypted form, then decrypted only in the secure environment to generate a response [4].

Why AI Privacy Matters More Than Search Privacy

Marlinspike argues that interactions with AI assistants differ fundamentally from traditional web searches. Users treat dialogue as intimate conversation, sharing thoughts, fears, transgressions, business dealings, and secrets as if AI assistants are trusted confidants or personal journals [1]. This contrasts sharply with web search queries, which typically follow a transactional model of keywords in and links out. He describes AI use as confessing into a "data lake" [1].

Data privacy expert Em calls AI assistants the "archnemesis" of data privacy because their utility relies on assembling massive amounts of data from myriad sources. "AI models are inherent data collectors," she explained. "They rely on large data collection for training, improvements, operations, and customizations. More often than not, this data is collected without clear and informed consent" [1]. Research from the National Cybersecurity Alliance found that over 40% of workers have shared sensitive information with AI [3].

Marlinspike warns that large language models represent the first major tech medium that "actively invites confession." As people chat with these systems, they reveal thinking patterns and uncertainties that could be exploited by advertisers to sell products or influence behavior [4]. "It's a form of technology that actively invites confession," Marlinspike says. "Chat interfaces like ChatGPT know more about people than any other technology before. When you combine that with advertising, it's like someone paying your therapist to convince you to buy something" [2].

Pricing, Availability, and Growing Adoption

Confer offers a free tier limited to 20 messages per day and five active chats [2]. Users willing to pay $35 per month gain unlimited access, along with more advanced models and personalization features [2]. That pricing sits notably higher than ChatGPT's Plus plan, reflecting the infrastructure costs of maintaining encryption and Trusted Execution Environments.

The platform works best on Mac and Android devices, though Windows and Linux users can access it through compatible password managers [3]. Confer uses different AI models for different tasks, sourced from the open source community, with more advanced modeling available in premium subscriptions [3]. The platform is in active development, with recent upgrades including the option to import ChatGPT or Claude conversations, and an iOS app on the horizon [3].

Marlinspike reported rapid growth following the launch, noting on X that traffic has been climbing through early Signal milestones like 1 message per second, 10 per second, and 100 per second [3]. He shared that it's been "really interesting and encouraging and amazing to hear stories from people who have used Confer and had life-changing conversations," in part because they haven't felt free to include certain information in conversations with ChatGPT but can in Confer's encrypted environment [1].

What This Means for AI's Privacy Future

Confer inverts the privacy model that dominates AI platforms. While ChatGPT, Gemini, and Meta AI provide opt-out toggles for chat history and training data usage, the default state remains surveillance-oriented, with opting out being the user's responsibility [5]. Confer makes privacy the default, baked into the architecture rather than offered as an afterthought [5].

Organizations like schools and hospitals exploring AI tools might find appeal in platforms that guarantee confidentiality by design [5]. Signal was founded in 2014 around similar principles, and its open source encrypted messaging protocol was adopted by Meta's WhatsApp just a few years later [4]. Whether tech giants eventually adopt Confer's technology remains to be seen, but the platform raises awareness about what privacy-by-design could look like in AI. "The core idea is that your conversations with an AI assistant should be as private as your conversations with a person," Marlinspike states. "Not because you're doing something wrong, but because privacy is what lets you think freely" [3].

Summarized by Navi
