SK Hynix Accelerates HBM4 Development to Meet Nvidia's Demand, Unveils 16-Layer HBM3E

12 Sources

[1]

HBM and beyond: SK Hynix deepens strategic partnership with TSMC

SK Hynix has strengthened its collaboration with TSMC, designating the foundry to manufacture its next-generation HBM4 logic die. The company will also draw on TSMC's advanced process capabilities to develop high-capacity Compute Express Link (CXL) memory solutions, marking a strategic shift in high-performance memory manufacturing. According to ETNews, SK Hynix has signed a contract valued at KRW31.1 billion (US$23.2 million) with AsicLand to design a CXL memory controller, with the agreement running through June 30, 2026. AsicLand, South Korea's sole design partner in TSMC's Value Chain Aggregator (VCA) program, specializes in semiconductor IP optimized for TSMC's production processes, which ensures compatibility and streamlines mass production.

CXL, an interface technology built on PCIe, links CPUs, GPUs, accelerators, and memory devices. Because it allows memory capacity and bandwidth to be expanded in modular fashion, CXL is emerging as a key next-generation memory technology alongside HBM. Industry reports indicate that SK Hynix previously sourced memory controllers externally but has now opted to bring this function in-house through its alliance with TSMC. The new CXL memory, expected to support the CXL 3.0 or 3.1 standard, will be produced on TSMC's advanced 5nm process, in line with the project's development timeline.

According to the Korea Economic Daily and other sources, SK Group Chairman Chey Tae-won disclosed at the SK AI Summit 2024 that Nvidia CEO Jensen Huang had asked SK Hynix to bring forward its HBM4 supply by six months. Chey emphasized the critical role of SK Group's partnership with TSMC in fulfilling this request, explaining that even the best-designed chips need robust production capabilities to have an impact. "Through close collaboration with Nvidia and TSMC, SK Group is working to provide the high-performance chips essential for advancing AI technologies," the chairman said.
In a pre-recorded message, TSMC Chairman C.C. Wei recognized SK Hynix's HBM as a key element driving AI acceleration in today's data-intensive environment. Chey recalled that more than a decade ago he had sought TSMC founder Morris Chang's advice on acquiring Hynix Semiconductor. Chang strongly endorsed the acquisition and commended TSMC's deep commitment to its customers.

As SK Hynix deepens its partnerships with Nvidia and TSMC, competitive pressure on Samsung Electronics is mounting. Samsung's HBM3E has yet to receive Nvidia certification, so a six-month acceleration of SK Hynix's HBM4 mass production could significantly widen the gap between the two companies' HBM businesses and cement SK Hynix as the industry leader. When asked about Samsung, Chey noted that it has greater technological and financial resources than SK Hynix and is well positioned for strong results amid the AI boom. Saying he lacked full insight into Samsung's current position, Chey declined to comment further on the competition. He instead reaffirmed SK Hynix's focus on its own objectives and on producing the chips its customers need, on schedule.

[2]

Nvidia CEO asked SK hynix to make 12-layer HBM4 chips early

Nvidia CEO Jensen Huang asked Korean chipmaker SK hynix to pull forward delivery of 12-layer HBM4 chips by half a year, according to SK Group chairman Chey Tae-won. In a keynote speech at the SK AI Summit 2024 on Monday, Chey said he responded by deferring to SK hynix CEO Kwak Noh-jung, who in turn promised to try. The chips were originally set for delivery in the first half of 2026, but bringing the schedule forward by six months would see them released before the end of 2025. That's quite a tall order. SK hynix's 12-layer HBM3E products were scheduled to enter the supply chain only this quarter (Q4 2024), and mass production of that chip, the company's most advanced to date, began only in late September. Kwak announced during the summit that 16-layer HBM3E samples are expected in the first half of 2025. The chips are made using the Mass Reflow Molded Underfill (MR-MUF) process, a packaging technique that improves thermal management and was also used on the 12-layer chips. The CEO said the 16-layer HBM3E chips deliver an 18 percent improvement in training performance and a 32 percent improvement in inference performance over the 12-layer chips of the same generation. Kwak also confirmed the company is developing an LPCAMM2 module for PCs and datacenters, as well as LPDDR5 and LPDDR6 based on its 1c nm process. Huang made a video appearance at the summit, as did Microsoft CEO Satya Nadella and TSMC CEO CC Wei. As is customary at such events, all three praised their companies' partnerships with SK hynix, and Huang reportedly called SK hynix's development plan both "super aggressive" and "super necessary." Nvidia accounts for more than 80 percent of the world's AI chip consumption. SK hynix executives brushed off the notion of any AI chip oversupply during the company's recent Q3 2024 earnings call, where HBM chip sales were reported up 330 percent year-on-year.
SK Group chairman Chey Tae-won also predicted in a speech this week that the AI market will likely balloon further in or around 2027 due to the emergence of the next-generation ChatGPT.

[3]

Nvidia wants South Korea's SK hynix to work faster in face of global shortage of crucial advanced AI chips

The head of AI computing giant Nvidia asked South Korea's SK hynix to speed up delivery of newer, more advanced HBM4 chips by six months, the head of SK Group said Monday. Nvidia's chief executive, Jensen Huang, made the request amid a global shortage of crucial advanced chips, which SK has pledged to tackle together with fellow market leader TSMC of Taiwan. SK hynix, the world's second-largest memory chip maker, is racing to meet explosive demand, including from market leader Nvidia, for the HBM chips used to process the vast amounts of data needed to train AI. "The current pace of HBM memory technology development and product launches is impressive, but AI still requires higher-performance memory," Huang said by video link at an AI summit in Seoul. The firm said last month that it was on course to deliver the 12-layer HBM4 chips by the second half of 2025. SK hynix also said Monday that it would ship samples of the first-ever 16-layer HBM3E chips by early 2025, as it seeks to bolster its growing AI chip dominance. "Nvidia is demanding more HBM as it releases better chip versions every year," said Chey Tae-won, chairman of SK Group. He called it a "happy challenge" that kept his company busy. The company began mass-producing the world's first 12-layer HBM3E product in September and now aims to ship samples of the newer, more advanced products to clients very quickly. According to the company, the additional layers increase each chip's total capacity while improving bandwidth and power efficiency. "SK hynix has been preparing for various 'world first' products by being the first in the industry to develop and start volume shipping," SK hynix CEO Kwak Noh-jung said. "SK hynix has been developing 48GB 16-high HBM3E in a bid to secure technological stability and plans to provide samples to customers early next year," Kwak added.
In 2013, SK hynix launched the first high-bandwidth memory chips, cutting-edge semiconductors that enable faster data processing and the more complex tasks of generative AI. Rival Samsung has lagged behind SK hynix in HBM, and in October the market capitalisation gap between Samsung Electronics and SK hynix reached its narrowest level in 13 years.

[4]

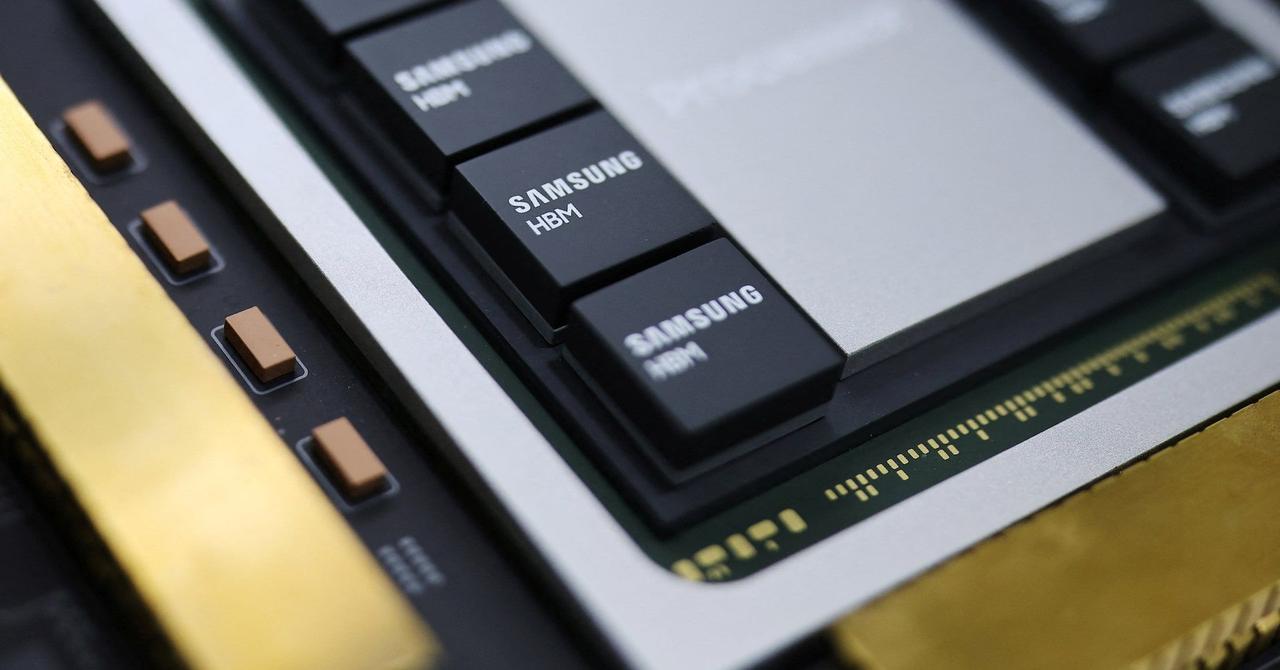

SK Hynix and Samsung Compete for Nvidia Memory Chip Contracts

Nvidia's HBM contracts are a major prize for South Korean rivals. Nvidia relies on the latest high-bandwidth memory (HBM) chips to build its most powerful graphics processing units (GPUs). As demand for Nvidia GPUs has increased in recent years, its suppliers have struggled to keep up. For South Korean memory chip rivals SK Hynix and Samsung, Nvidia contracts can provide significant revenues and promise future growth. As they compete for these lucrative deals, both companies are racing to develop better HBM solutions and scale their manufacturing capacity.

The Role of HBM Chips in AI

While Nvidia still uses an alternative memory technology known as graphics double data rate (GDDR) for its smaller GPUs, it favors HBM for the heavyweight processors used in AI training. A crucial difference between the two lies in placement: GDDR memory is typically positioned separately on the GPU circuit board, whereas HBM can be placed right next to the processor itself. This proximity gives HBM its primary advantage, speed. As Nvidia has accelerated GPU development, its memory needs have grown, and the firm has called on suppliers to up their game. For instance, CEO Jensen Huang reportedly asked SK Hynix to accelerate production of its more advanced HBM4 chips by six months.

The Race to HBM4

SK Hynix's HBM4 was originally slated to enter mass production in 2026, but the company reportedly plans to expedite its roadmap and ship the first chips in the second half of 2025, just in time for the anticipated launch of Nvidia's next-generation Rubin R100 GPU. Samsung has also brought forward its HBM4 timeline, with a similar target of mass production in late 2025.

Nvidia's Memory Needs

When it started incorporating HBM into its GPU designs, Nvidia originally favored SK Hynix chips. Media reports suggest Samsung's chips didn't meet Nvidia's standards for use in its AI processors due to problems with heat and power consumption.
However, in July this year, Nvidia reportedly approved Samsung's HBM3 for use in H20 processors, a slimmed-down version of its flagship H200 made for the Chinese market. Working with Samsung could help Nvidia ramp up GPU production by addressing supply chain bottlenecks. Meanwhile, Samsung investors see an expanded Nvidia partnership as a potential cash cow. During its recent quarterly earnings call, Samsung reported strong demand for HBM, driven by increased investments in AI infrastructure. "The Company plans to actively respond to the demand for high-value-added products for AI and will expand capacity to increase the portion of HBM3E sales," it said in a statement.

[5]

SK hynix announces the world's first 48GB 16-Hi HBM3E memory -- Next-gen PCIe 6.0 SSDs and UFS 5.0 storage are also in the works

SK hynix touts a 32% increase in inference performance over its 12-Hi offerings.

At the SK AI Summit 2024, SK hynix CEO Kwak Noh-jung took the stage and revealed the industry's first 16-Hi HBM3E memory, beating both Samsung and Micron to the punch. With development of HBM4 going strong, SK hynix prepared a 16-layer version of its HBM3E to ensure "technological stability" and aims to offer samples as early as next year. A few weeks earlier, SK hynix had unveiled a 12-Hi variant of its HBM3E memory, securing contracts from AMD (MI325X) and Nvidia (Blackwell Ultra).

Having raked in record profits last quarter, SK hynix is running at full steam: it has just announced a 16-layer upgrade to its HBM3E lineup, with capacities of 48GB per stack (3GB per individual die). This increase in density allows AI accelerators to feature up to 384GB of HBM3E memory in an 8-stack configuration. SK hynix claims an 18% improvement in training and a 32% boost in inference performance. Like its 12-Hi counterpart, the new 16-Hi HBM3E uses packaging technologies such as MR-MUF, which connects the stacked chips by melting the solder between them. SK hynix expects 16-Hi HBM3E samples to be ready by early 2025. However, this memory could be short-lived, as Nvidia's next-gen Rubin chips are slated for mass production later next year and will be based on HBM4.

That's not all: the company is also working on PCIe 6.0 SSDs, high-capacity QLC (Quad Level Cell) eSSDs aimed at AI servers, and UFS 5.0 for mobile devices. In addition, to power future laptops and even handhelds, SK hynix is developing an LPCAMM2 module and soldered LPDDR5/6 memory on its 1c nm node. There is no mention of CAMM2 modules for desktops, so PC users will need to wait, at least until CAMM2 adoption matures. To overcome what SK hynix calls the "memory wall", the memory maker is developing solutions such as Processing Near Memory (PNM), Processing in Memory (PIM), and Computational Storage.
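The capacity figures above are simple multiplication. The following Python sketch reproduces them. It assumes that capacity scales linearly with dies per stack and with stacks per accelerator. The function names are illustrative and do not come from SK hynix. The 12-Hi line also assumes the same 3GB die.

```python
def stack_capacity_gb(dies_per_stack: int, gb_per_die: int) -> int:
    """Capacity of one HBM stack, assuming every DRAM die contributes equally."""
    return dies_per_stack * gb_per_die


def accelerator_capacity_gb(stacks: int, dies_per_stack: int, gb_per_die: int) -> int:
    """Total HBM on an accelerator carrying `stacks` identical stacks."""
    return stacks * stack_capacity_gb(dies_per_stack, gb_per_die)


GB_PER_DIE = 3  # 16-Hi HBM3E figure quoted in the article: 48GB / 16 dies

print(f"12-Hi stack: {stack_capacity_gb(12, GB_PER_DIE)} GB")  # 36 GB (assumes the same 3GB die)
print(f"16-Hi stack: {stack_capacity_gb(16, GB_PER_DIE)} GB")  # 48 GB
print(f"8 x 16-Hi stacks: {accelerator_capacity_gb(8, 16, GB_PER_DIE)} GB")  # 384 GB
```

Real products can differ in die density and usable capacity. Treat this as back-of-the-envelope arithmetic, not a specification.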
Samsung has already demoed its version of PIM, in which data is processed within the memory so that it doesn't have to move to an external processor. HBM4 will double the interface width from 1024 bits to 2048 bits while supporting up to 16 vertically stacked DRAM dies (16-Hi), each packing up to 4GB of memory. Those are monumental generation-on-generation upgrades and should be ample to meet the high memory demands of upcoming AI GPUs. Samsung's HBM4 tape-out is set to take place later this year, while reports suggest SK hynix already reached the tape-out phase in October. Following a traditional silicon development lifecycle, expect Nvidia and AMD to receive qualification samples by Q1/Q2 next year.
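The HBM4 changes described above reduce to simple arithmetic. This sketch assumes that peak interface bandwidth equals bus width times per-pin data rate, divided by 8 bits per byte. Under that assumption, doubling the interface from 1024 to 2048 bits doubles bandwidth at any fixed pin rate. The pin rate in the code is a hypothetical placeholder because the article gives none, so only the ratio is meaningful.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Peak interface bandwidth in GB/s: pins x per-pin rate (Gbit/s), divided by 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8


# Hypothetical per-pin rate for illustration only; not an HBM3E or HBM4 spec value.
PIN_RATE_GBIT = 8.0

hbm3e_bw = peak_bandwidth_gb_per_s(1024, PIN_RATE_GBIT)
hbm4_bw = peak_bandwidth_gb_per_s(2048, PIN_RATE_GBIT)
hbm4_16hi_gb = 16 * 4  # up to 16 dies at up to 4GB each

print(f"HBM4 vs HBM3E at equal pin rate: {hbm4_bw / hbm3e_bw:.1f}x")  # 2.0x
print(f"Max 16-Hi HBM4 stack: {hbm4_16hi_gb} GB")  # 64 GB
```

Actual bandwidth also depends on the per-pin speed that each vendor achieves. The 2x figure isolates only the effect of the wider interface.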

[6]

Nvidia seeks accelerated supply of SK hynix's HBM4 chips: SK chairman

SK reaffirms alliance with Nvidia, TSMC amid AI boom

By Lee Min-hyung

Nvidia CEO Jensen Huang asked SK hynix to speed up the supply of next-generation high-bandwidth memory (HBM) chips during his meeting with SK Group Chairman Chey Tae-won, in light of unprecedented demand for semiconductors used in artificial intelligence (AI), the SK chief said Monday. "The Nvidia chief asked us to step up the timeline of the HBM4 chip supply by six months," Chey said during the SK AI Summit 2024 at COEX in Seoul. HBM4 is the sixth generation of high-bandwidth memory chips, designed for high-speed data transfer. These advanced memory chips are becoming a significant game changer in the global semiconductor industry, particularly with the rapid growth of AI. Chey said he asked Kwak Noh-jung, CEO of SK hynix, a leading global supplier of HBM, about the feasibility of meeting Nvidia's request. Kwak replied, "I will do my best." In response to Huang's request, SK hynix plans to produce the 12-layer HBM4 chips in the latter half of next year, earlier than its initial schedule of 2026. The chairman also touted SK's group-wide partnership with Nvidia and TSMC. "Nvidia, SK hynix and TSMC are fortifying a trilateral partnership to develop the world's top-notch chips," Chey said. The SK AI Summit is the group's annual event focused on the latest trends in the AI industry. Amid booming interest in AI, the group elevated this year's summit to a global event by inviting prominent business leaders from leading AI companies, including OpenAI co-founder and President Greg Brockman. SK Group is one of the most aggressive Korean conglomerates in making substantial investments in AI. In June, top executives at the group and its affiliates announced a plan to invest 80 trillion won ($58.42 billion) in AI and chip technology by 2026.

"One of the reasons why we are still worried about the winter of AI is because we have yet to find specific use cases and revenue models in the industry, even if massive investments are being made," Chey said.

SK hynix CEO Kwak Noh-jung also delivered a keynote speech at the event, where he outlined the company's plan to provide samples of the 16-layer HBM3E chips to clients early next year. "We prepare to introduce a series of 'world's first' products developed and mass-produced for the first time in the world," the SK hynix head said.

SK Telecom President and CEO Ryu Young-sang also presented the company's vision to build multiple hyperscale AI data centers across the nation. "The telecommunication industry has so far engaged in a war of speed and capacity, but now the network paradigm should be changed," Ryu said. "The upcoming sixth-generation (6G) network will evolve into a combination of telecommunication and AI." The company anticipates that its AI data centers will attract new investments totaling 50 trillion won and create jobs for 550,000 people. It also expects these initiatives to generate economic effects valued at 175 trillion won and stimulate the growth of AI-driven cutting-edge industries.

[7]

NVIDIA CEO Requests SK Hynix To Initiate HBM4 Delivery "Six Months" Earlier, Saying There Is Desperate Need Of Accelerated Performance

NVIDIA's CEO has asked SK Hynix to speed up delivery of next-gen HBM4 memory by up to six months, as Team Green can't wait for the next phase of AI. HBM4 is widely seen as the "gateway" to the next era of AI computational power, mainly because the memory type is expected to let manufacturers significantly scale up the capabilities of future AI products.

According to a new Reuters report, SK Group Chairman Chey Tae-won said at the SK AI Summit in Seoul that NVIDIA CEO Jensen Huang had asked SK Hynix to begin HBM4 deliveries six months ahead of the expected schedule, in an attempt to get an early lead. SK Hynix has said that HBM4 was scheduled for delivery by H2 2025, so Jensen is probably looking to get hands-on with the memory chips in the first half of 2025. The reason behind the request hasn't been specified, but it is likely a precautionary move by NVIDIA. Integrating HBM4 into next-gen architectures, notably Rubin, will bring manufacturing complexities, since HBM4 is a "much more" important component for next-gen AI GPUs, and NVIDIA is eager to avoid the kind of design flaws it encountered with Blackwell.

HBM4 is also called a "multi-functional" HBM, because with this standard the industry has decided to integrate memory and logic semiconductors into a single package. This could reduce the need for separate advanced packaging, and with the individual dies sitting much closer together, the design should prove considerably more performance-efficient. The process brings foundries such as TSMC into memory manufacturing, and by reducing reliance on advanced packaging it could ease pressure on the CoWoS supply chain. SK Hynix is already said to have taped out HBM4, which means the company is not far from mass production.
Competitors such as Samsung and Micron are also in the race to develop HBM4, but by the looks of it, the spotlight is on SK Hynix for now.

[8]

Nvidia asked SK Hynix to speed up HBM4 chip production amid soaring AI demand By Investing.com

Investing.com -- Nvidia (NASDAQ:NVDA) CEO Jensen Huang has urged SK Hynix (KS:000660) to expedite production of its next-generation high-bandwidth memory chips, known as HBM4, SK Group Chairman Chey Tae-won said on Monday, according to a Reuters report. SK Hynix had initially aimed to start supplying these advanced chips in the second half of 2025, as announced in October, the report said. However, the South Korean company confirmed this week that it is now working toward an accelerated timeline, though it did not provide further details. Huang's request underscores the strong demand for the high-capacity, energy-efficient memory chips that power Nvidia's GPUs, which are instrumental in advancing artificial intelligence. Nvidia currently controls over 80% of the global AI chip market, heightening the need for faster delivery of HBM chips, the report said. SK Hynix has been a key player in the global race to meet growing demand for HBM, which is essential for processing the extensive datasets required for AI training and crucial to Nvidia's AI chipsets. However, the company faces increasing competition from other major memory producers such as Samsung Electronics (KS:005930) and Micron (NASDAQ:MU). SK Hynix also plans to deliver its latest 12-layer HBM3E to an undisclosed customer this year and expects to release samples of a more advanced 16-layer HBM3E by early next year, CEO Kwak Noh-jung said at the SK AI Summit 2024 in Seoul. Meanwhile, Samsung recently indicated progress on a supply agreement with a major customer following previous delays. The company also said it is negotiating with other major clients to produce "improved" HBM3E products in the first half of the coming year and intends to start producing HBM4 in the second half of next year, the report added.

[9]

SK hynix boss says NVIDIA CEO asked him to bring forward supply of HBM4 chips by 6 months

TL;DR: NVIDIA CEO Jensen Huang has asked SK hynix to bring forward the supply of its next-generation HBM4 memory, originally planned for the second half of 2025, by six months. NVIDIA currently uses SK hynix's HBM3E memory for its AI chips and plans to use HBM4 in its upcoming Rubin R100 AI GPU.

NVIDIA CEO Jensen Huang asked SK Group chairman Chey Tae-won to bring forward SK hynix's supply of next-generation HBM4 memory by 6 months. SK hynix originally planned to have its next-gen HBM4 memory ready in the second half of 2025, but now NVIDIA is pushing the South Korean memory giant to make its HBM4 now, now, now. NVIDIA currently uses SK hynix's bleeding-edge HBM3E memory on its new Blackwell B200 and GB200 AI chips, with HBM4 memory planned for its next-gen Rubin R100 AI GPU. SK hynix reportedly had its next-gen HBM4 ready to tape out in October, something we reported on in August, and it looks like a request from Jensen will be enough to bring SK hynix's HBM4 to market earlier than planned. Six months ahead of schedule is a big ask from NVIDIA, but the company knows SK hynix is at the top of its game and ready for HBM4.

[10]

SK Hynix Speeds Up Release of New AI Chips At Nvidia's Urging

SK Hynix Inc. is accelerating the launch of its next-generation AI memory chips after Nvidia Corp. Chief Executive Officer Jensen Huang asked the South Korean supplier if it can provide samples six months earlier than originally planned, signaling persistent global demand for cutting-edge semiconductors. Huang raised the request regarding the upcoming high-bandwidth memory chips, or HBM4, during a recent meeting with SK Group Chairman Chey Tae-won, the South Korean tycoon told reporters on the sidelines of the SK AI Summit in Seoul on Monday. Chey said Huang has succeeded as a leader in part because of his emphasis on speed.

[11]

A Nvidia partner's stock surged because Nvidia wants chips faster

SK Hynix's South Korea-listed shares closed up almost 6.5% after SK Group chairman Chey Tae-won told reporters that Nvidia CEO Jensen Huang asked the company to move supply of its next-generation, high-bandwidth memory chips up by six months. Chey, speaking at the SK AI Summit in Seoul, added that Nvidia is still struggling to meet chip demand but that the two companies are working together on the shortage, Bloomberg reported. Huang requested that SK Hynix provide samples of its HBM4 chips earlier than expected, Chey said. In October, SK Hynix said it expected to supply customers with the new HBM chip, which Nvidia needs for its artificial intelligence chips, by the second half of 2025. Chey said both Nvidia and SK Hynix "are on the same page" about HBM4's schedule, according to Bloomberg. The South Korea-based chipmaker's shares are up 37.1% so far this year. The company also unveiled a 16-layer HBM3E at the summit that it said will be with customers early next year. SK Hynix CEO Kwak Noh-jung said the company will release the next-generation HBM5 and HBM5E sometime between 2028 and 2030. In October, SK Hynix reported record revenue, operating profit, and net profit, "achieving best-ever quarterly performance" in the third quarter on demand for its memory chips for AI technology. "SK Hynix has solidified its position as the world's No.1 AI memory company by achieving the highest business performance ever in the third quarter of this year," Kim Woohyun, chief financial officer at SK Hynix, said in a statement. "We will continue to maximize profitability while securing stable revenues by taking flexible product and supply strategies in line with market demand."

[12]

Nvidia's Need For Speed: Jensen Huang Pushes SK Hynix For Earlier Memory Chip Delivery - Micron Technology (NASDAQ:MU), SK Hynix (OTC:HXSCF)

Nvidia Corp (NASDAQ:NVDA) CEO Jensen Huang has asked SK Hynix Inc (OTC:HXSCF) to expedite the supply of its next-generation high-bandwidth memory chips by six months.

What Happened: The request was made during a meeting between Huang and SK Group Chairman Chey Tae-won, Reuters reported on Monday. SK Hynix had previously announced its plan to provide the chips to clients in the second half of 2025, a timeline that was already ahead of the initial target. Demand for high-capacity, energy-efficient chips for Nvidia's graphics processing units, used in AI development, has been rising. Nvidia currently holds over 80% of the global AI chip market. SK Hynix, a leading player in the global race to meet surging demand for HBM chips, faces intensified competition from companies such as Samsung Electronics (OTC:SSNLF) and Micron Technology (NASDAQ:MU). The company also plans to supply its latest 12-layer HBM3E to an undisclosed customer this year and intends to ship samples of the more advanced 16-layer HBM3E early next year.

Why It Matters: The demand for AI technology has been a significant driver of growth for SK Hynix. The company reported a record-breaking quarterly profit in October, with a 7% revenue surge largely attributed to rising demand for AI technology. Despite Samsung's soaring profits, its delays in obtaining Nvidia certification for AI memory chips have allowed competitors such as SK Hynix and Micron Technology to take the lead in high-bandwidth memory. SK Hynix's start of mass production of its latest high-bandwidth memory chips in September put the company ahead in the race to meet the growing demand driven by artificial intelligence advancements.
SK Hynix plans to invest $6.8 billion in a new production facility in South Korea, as part of a larger commitment to invest 120 trillion won in the construction of four fabs in the Yongin cluster.

This story was generated using Benzinga Neuro and edited by Kaustubh Bagalkote.

SK Hynix strengthens its position in the AI chip market by advancing HBM4 production and introducing new HBM3E technology, responding to Nvidia's request for faster delivery amid growing competition with Samsung.

SK Hynix Accelerates HBM4 Development

SK Hynix, the world's second-largest memory chip maker, is ramping up efforts to meet surging demand for the high-bandwidth memory (HBM) chips crucial to AI computing. Nvidia CEO Jensen Huang has asked SK Hynix to bring forward delivery of its next-generation HBM4 chips by six months [1][3]. The request underscores the global shortage of advanced AI chips and the intense competition in the semiconductor industry.

Strategic Partnerships and Technological Advancements

SK Hynix has strengthened its collaboration with Taiwan Semiconductor Manufacturing Company (TSMC), designating the foundry to manufacture its next-generation HBM4 logic die [1]. The company will also draw on TSMC's advanced process capabilities to develop high-capacity Compute Express Link (CXL) memory, and it has signed a contract with AsicLand to design a CXL memory controller [1].

Unveiling of 16-Layer HBM3E

At the SK AI Summit 2024, SK Hynix announced plans to ship samples of the world's first 16-layer HBM3E chips by early 2025 [3][5]. The new memory offers 48GB per stack, and SK Hynix claims an 18% improvement in training performance and a 32% boost in inference performance over its 12-layer counterpart [5]. The chips use advanced packaging techniques such as Mass Reflow Molded Underfill (MR-MUF) to improve thermal management [2].

Competition with Samsung

As SK Hynix advances its HBM technology, competition with Samsung is intensifying. Samsung has lagged in HBM development, with its HBM3E yet to receive Nvidia certification [1][4]. In October, the market capitalization gap between Samsung Electronics and SK Hynix reached its narrowest level in 13 years, reflecting shifting dynamics in the memory chip industry [3].

Future Developments and Industry Impact

SK Hynix is not stopping at HBM3E and HBM4. The company is actively working on next-generation technologies, including PCIe 6.0 SSDs, high-capacity QLC eSSDs for AI servers, and UFS 5.0 for mobile devices [5]. To address the "memory wall" challenge, SK Hynix is developing innovative solutions such as Processing Near Memory (PNM), Processing in Memory (PIM), and Computational Storage [5].

Nvidia's Influence and Market Dynamics

Nvidia, which accounts for more than 80% of the world's AI chip consumption, plays a crucial role in shaping the memory chip market [2]. Its demand for faster and more advanced HBM chips is driving innovation and competition among suppliers. SK Group Chairman Chey Tae-won predicts that the AI market will likely expand further around 2027, driven by the emergence of a next-generation ChatGPT [2].

As the race for AI chip supremacy continues, SK Hynix's accelerated HBM4 development and its 16-layer HBM3E place the company at the forefront of the AI memory chip market. The industry will be watching how these advances affect AI computing capabilities and the broader technology landscape.

Summarized by Navi
