SK Hynix Forecasts 30% Annual Growth in AI Memory Market Through 2030

5 Sources

[1]

SK hynix projects HBM market to be worth tens of billions of dollars by 2030 -- says AI memory industry will expand 30% annually over five years

Amidst all the theatrics of the ongoing China-U.S. semiconductor wars, SK Hynix -- a South Korean giant also affected by tariffs -- expects the global market for High Bandwidth Memory (HBM) chips used in artificial intelligence to grow by around 30% a year until 2030, driven by accelerating AI adoption and a shift toward more customized designs. The forecast, shared with Reuters, points to what the company sees as a long-term structural expansion in a sector traditionally treated like a commodity.

HBM is already one of the most sought-after components in AI datacenters, stacking memory dies vertically alongside a "base" logic die to improve performance and efficiency. SK Hynix, which commands the largest share of the HBM market, says demand is "firm and strong," with capital spending by hyperscalers such as Amazon, Microsoft, and Google likely to be revised upward over time. The company estimates the market for custom HBM alone could be worth tens of billions of dollars by 2030.

Customization is becoming a key differentiator. While large customers -- including GPU leaders -- already receive bespoke HBM tuned for power or performance needs, SK Hynix expects more clients to move away from one-size-fits-all products. That shift, along with advances in packaging and the upcoming HBM4 generation, is making it harder for buyers to swap between rival offerings, supporting margins in a space once dominated by price competition.

Rivals are not standing still. Samsung has cautioned that HBM3E supply may briefly outpace demand, which could pressure prices in the short term. Micron is also scaling up its HBM footprint, while SK Hynix is exploring alternatives such as High Bandwidth Flash (HBF), a NAND-based design promising higher capacity and non-volatile storage, though it remains in early stages and is unlikely to displace HBM in the near term.

Geopolitical developments add another layer. U.S. President Donald Trump has said chips imported from countries without U.S.-based manufacturing would face a tariff of about 100%, but South Korean officials say SK Hynix and Samsung would be exempt due to existing and planned U.S. facilities. SK Hynix is building an advanced packaging plant and AI R&D hub in Indiana, part of a strategy to lock in supply to North American customers while sidestepping trade risks.

The stakes are higher than ever. Market estimates put the total HBM opportunity near $98 billion by 2030, with SK Hynix holding around a 70% share today. The company's fortunes are tied closely to AI infrastructure spending, and while oversupply, customer concentration, or disruptive memory technologies could slow growth, its current lead in customization and packaging leaves it well positioned if AI demand continues its upward march.
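To put the 30% figure in perspective: compounding at 30% a year for five years multiplies a market by about 3.71x. The sketch below is illustrative arithmetic only. It assumes "through 2030" means five annual compounding steps, as the "over five years" framing suggests; the function names are hypothetical, and pairing SK Hynix's 30% rate with the separate ~$98 billion market estimate is an assumption for illustration, not a figure either source publishes. Under that assumption, a $98 billion market in 2030 would correspond to roughly $26.4 billion five years earlier.

```python
# Illustrative compound-growth arithmetic for a 30% annual growth rate.
# Assumption: "through 2030" is read as five annual compounding steps.


def growth_factor(annual_rate: float, years: int) -> float:
    """Return the multiple a quantity reaches after compounding annual_rate for `years` years."""
    return (1.0 + annual_rate) ** years


def implied_start_value(end_value: float, annual_rate: float, years: int) -> float:
    """Back out the starting value that would compound to end_value."""
    return end_value / growth_factor(annual_rate, years)


if __name__ == "__main__":
    factor = growth_factor(0.30, 5)
    print(f"30%/yr over 5 years multiplies the market by about {factor:.2f}x")
    # Hypothetical pairing: applies SK Hynix's 30% rate to the separate
    # ~$98B 2030 market estimate, even though the figures come from different sources.
    start = implied_start_value(98.0, 0.30, 5)
    print(f"A $98B market in 2030 would imply roughly ${start:.1f}B five years earlier")
```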

[2]

Exclusive: SK Hynix expects AI memory market to grow 30% a year to 2030

SEOUL/SAN FRANCISCO, Aug 11 (Reuters) - South Korea's SK Hynix (000660.KS) forecasts that the market for a specialized form of memory chip designed for artificial intelligence will grow 30% a year until 2030, a senior executive said in an interview with Reuters.

The upbeat projection for global growth in high-bandwidth memory (HBM) for use in AI brushes off concern over rising price pressures in a sector that for decades has been treated like commodities such as oil or coal.

"AI demand from the end user is pretty much, very firm and strong," said Choi Joon-yong, the head of HBM business planning at SK Hynix.

The billions of dollars in AI capital spending that cloud computing companies such as Amazon (AMZN.O), Microsoft (MSFT.O) and Alphabet's (GOOGL.O) Google are projecting will likely be revised upwards in the future, which would be "positive" for the HBM market, Choi said.

The relationship between AI build-outs and HBM purchases is "very straightforward" and there is a correlation between the two, Choi said. SK Hynix's projections are conservative and include constraints such as available energy, he said.

But the memory business is undergoing a significant strategic change during this period as well. HBM - a type of dynamic random access memory or DRAM standard first produced in 2013 - involves stacking chips vertically to save space and reduce power consumption, helping to process the large volumes of data generated by complex AI applications.

SK Hynix expects this market for custom HBM to grow to tens of billions of dollars by 2030, Choi said.

Due to technological changes in the way SK Hynix and rivals such as Micron Technology (MU.O) and Samsung Electronics (005930.KS) build next-generation HBM4, their products include a customer-specific logic die, or "base die", that helps manage the memory. That means it is no longer possible to easily replace a rival's memory product with a nearly identical chip or product.

Part of SK Hynix's optimism for future HBM market growth includes the likelihood that customers will want even further customisation than what SK Hynix already does, Choi said. At the moment it is mostly larger customers such as Nvidia (NVDA.O) that receive individual customisation, while smaller clients get a traditional one-size-fits-all approach.

"Each customer has different taste," Choi said, adding that some want specific performance or power characteristics.

SK Hynix is currently the main HBM supplier to Nvidia, although Samsung and Micron supply it with smaller volumes. Last week, Samsung cautioned during its earnings conference call that current generation HBM3E supply would likely outpace demand growth in the near term, a shift that could weigh on prices.

"We are confident to provide, to make the right competitive product to the customers," Choi said.

100% TARIFFS

U.S. President Donald Trump on Wednesday said the United States would impose a tariff of about 100% on semiconductor chips imported from countries not producing in America or planning to do so. Choi declined to comment on the tariffs.

Trump told reporters in the Oval Office the new tariff rate would apply to "all chips and semiconductors coming into the United States," but would not apply to companies that were already manufacturing in the United States or had made a commitment to do so. Trump's comments were not a formal tariff announcement, and the president offered no further specifics.

South Korea's top trade envoy Yeo Han-koo said on Thursday that Samsung Electronics and SK Hynix would not be subject to the 100% tariffs on chips if they were implemented. Samsung has invested in two chip fabrication plants in Austin and Taylor, Texas, and SK Hynix has announced plans to build an advanced chip packaging plant and an artificial intelligence research and development facility in Indiana.

South Korea's chip exports to the United States were valued at $10.7 billion last year, accounting for 7.5% of its total chip exports. Some HBM chips are exported to Taiwan for packaging, accounting for 18% of South Korea's chip exports in 2024, a 127% increase from the previous year.

Reporting by Heekyong Yang and Hyunjoo Jin in Seoul and Max A. Cherney in San Francisco; Editing by Tom Hogue

Max A. Cherney is a correspondent for Reuters based in San Francisco, where he reports on the semiconductor industry and artificial intelligence. He joined Reuters in 2023 and has previously worked for Barron's magazine and its sister publication, MarketWatch. Cherney graduated from Trent University with a degree in history.
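The Reuters export figures can be cross-checked with simple arithmetic: if $10.7 billion in chip exports to the United States is 7.5% of South Korea's total chip exports, the implied total is about $142.7 billion. The sketch below is a derived estimate, not a number Reuters reports; it assumes both figures refer to the same year's total, and the helper name is hypothetical.

```python
# Illustrative arithmetic on the Reuters export figures.
# Assumption: the 7.5% share is measured against the same total
# (South Korea's chip exports for the year) that the $10.7B belongs to.


def implied_total(part: float, share: float) -> float:
    """Return the total implied by a part and its fractional share of that total."""
    if not 0 < share <= 1:
        raise ValueError("share must be a fraction in (0, 1]")
    return part / share


if __name__ == "__main__":
    us_exports_bn = 10.7  # chip exports to the U.S., in billions of dollars
    us_share = 0.075      # 7.5% of total chip exports
    total_bn = implied_total(us_exports_bn, us_share)
    print(f"Implied total chip exports: about ${total_bn:.1f} billion")
```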

[3]

US tariff fears fail to shake SK Hynix as AI chip demand fuels record-breaking growth projections until the end of the decade

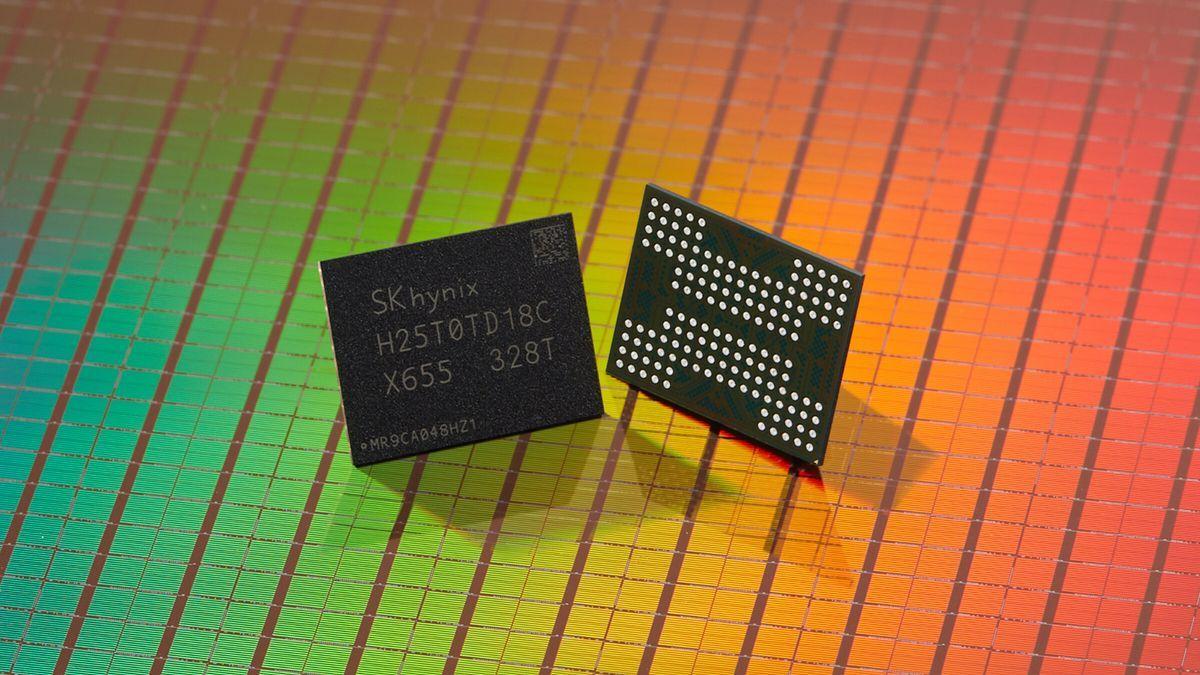

HBM technology stacks chips vertically for greater efficiency and reduced energy consumption SK Hynix is forecasting rapid expansion in the AI memory segment, estimating a 30% annual growth rate for high-bandwidth memory (HBM) until 2030. The company's projection comes amid uncertainty surrounding potential US tariffs of about 100% on semiconductor chips from nations without American manufacturing operations. While US President Donald Trump said the tariff plan would target "all chips and semiconductors coming into the United States," South Korean officials indicated both SK Hynix and Samsung Electronics would not be subject to the measures, due to their ongoing and planned US investments. Choi Joon-yong, head of HBM business planning at SK Hynix, said, "AI demand from the end user is pretty much, very firm and strong... Each customer has different taste." "We are confident to provide, to make the right competitive product to the customers," he added. He also suggested capital spending from major cloud service providers such as Amazon, Microsoft, and Google could be revised upward. Choi believes that the correlation between AI infrastructure expansion and HBM demand is direct, although factors such as energy availability were taken into account in the forecasts. Speaking to Reuters, the company anticipates that the custom HBM sector will reach tens of billions of dollars by 2030, driven by the performance requirements of advanced AI applications. This specialized DRAM technology, first introduced in 2013, stacks chips vertically to reduce power consumption and physical footprint while improving data-processing efficiency. SK Hynix and competitors, including Samsung and Micron Technology, are developing HBM4 products that integrate a "base die" for memory management, making it harder to substitute rival products. Currently, larger clients like Nvidia receive highly customized solutions, while smaller customers often rely on standardized designs. 
The company's position as Nvidia's primary HBM supplier underlines its influence in the AI hardware space. However, Samsung recently cautioned that near-term HBM3E production could exceed market demand growth, potentially pressuring prices. Despite the ongoing tariff discussions, SK Hynix's market confidence remains steady. The company is investing in US manufacturing capacity, including an advanced chip packaging plant and an AI research facility in Indiana, which could help safeguard against trade disruptions. South Korea's chip exports to the US were valued at $10.7 billion last year, with HBM shipments to Taiwan for packaging increasing sharply in 2024. While SK Hynix's optimism reflects the expected rise in AI infrastructure spending, market analysts point to the cyclical nature of the semiconductor industry, where oversupply and pricing pressures are recurring challenges. The company's ability to deliver competitive products in a market increasingly shaped by customization could determine its resilience.

[4]

SK hynix president says AI memory market to grow at average annual rate of 30% through 2030

TL;DR: SK hynix forecasts the HBM memory market to grow 30% annually through 2030, driven by strong AI demand from NVIDIA, AMD, and major cloud providers. The upcoming HBM4 standard offers customized performance, raising production complexity and prices and solidifying SK hynix's leadership in advanced AI memory solutions.

SK hynix says the HBM market will grow 30% per year through 2030, with leading AI GPUs from NVIDIA and AMD using the best HBM they can get, and the AI market isn't slowing down any time soon. The upbeat projection for global growth of HBM used in AI chips brushes off concerns over rising price pressures in a sector that, for decades, has been treated like commodities such as oil or coal, reports Reuters.

US cloud companies like Amazon, Microsoft, and Google are spending hundreds of billions of dollars on AI servers, which is very healthy for one of the key ingredients: HBM. SK hynix's Choi Joon-yong, the head of HBM business planning at SK hynix, said: "AI demand from the end user is pretty much, very firm and strong".

HBM4 is right around the corner, with the next-gen HBM standard debuting inside NVIDIA's next-generation Rubin R100 AI GPU. HBM4 is more complicated to make than the HBM3 and HBM3E inside today's AI GPUs, with HBM4 makers including SK hynix, Samsung, and Micron all having to change the way they build their respective HBM4 memory. This means that it's no longer possible to easily replace a rival's HBM with a nearly identical chip or product, as their new HBM4 includes a customer-specific logic die, or "base die", that helps manage the HBM.

Choi continued, saying that companies like NVIDIA and AMD will want specific performance or power characteristics with their HBM4 memory: "each customer has different taste". This is part of SK hynix's outlook for HBM market growth, as customers will want customizations that SK hynix isn't even doing right now.

SK hynix recently "drastically" raised pricing on HBM4 memory as it is more complicated to make, and it's in a "war of nerves" against NVIDIA. The company also beat Samsung -- its fellow market rival in South Korea -- as the world's biggest memory chip supplier for the first time ever, and that pace isn't slowing down with HBM4.

[5]

Exclusive-SK Hynix expects AI memory market to grow 30% a year to 2030

SEOUL/SAN FRANCISCO (Reuters) - South Korea's SK Hynix forecasts that the market for a specialized form of memory chip designed for artificial intelligence will grow 30% a year until 2030, a senior executive said in an interview with Reuters.

The upbeat projection for global growth in high-bandwidth memory (HBM) for use in AI brushes off concern over rising price pressures in a sector that for decades has been treated like commodities such as oil or coal.

"AI demand from the end user is pretty much, very firm and strong," said Choi Joon-yong, the head of HBM business planning at SK Hynix.

The billions of dollars in AI capital spending that cloud computing companies such as Amazon, Microsoft and Alphabet's Google are projecting will likely be revised upwards in the future, which would be "positive" for the HBM market, Choi said.

The relationship between AI build-outs and HBM purchases is "very straightforward" and there is a correlation between the two, Choi said. SK Hynix's projections are conservative and include constraints such as available energy, he said.

But the memory business is undergoing a significant strategic change during this period as well. HBM - a type of dynamic random access memory or DRAM standard first produced in 2013 - involves stacking chips vertically to save space and reduce power consumption, helping to process the large volumes of data generated by complex AI applications.

SK Hynix expects this market for custom HBM to grow to tens of billions of dollars by 2030, Choi said.

Due to technological changes in the way SK Hynix and rivals such as Micron Technology and Samsung Electronics build next-generation HBM4, their products include a customer-specific logic die, or "base die", that helps manage the memory. That means it is no longer possible to easily replace a rival's memory product with a nearly identical chip or product.

Part of SK Hynix's optimism for future HBM market growth includes the likelihood that customers will want even further customisation than what SK Hynix already does, Choi said. At the moment it is mostly larger customers such as Nvidia that receive individual customisation, while smaller clients get a traditional one-size-fits-all approach.

"Each customer has different taste," Choi said, adding that some want specific performance or power characteristics.

SK Hynix is currently the main HBM supplier to Nvidia, although Samsung and Micron supply it with smaller volumes. Last week, Samsung cautioned during its earnings conference call that current generation HBM3E supply would likely outpace demand growth in the near term, a shift that could weigh on prices.

"We are confident to provide, to make the right competitive product to the customers," Choi said.

100% TARIFFS

U.S. President Donald Trump on Wednesday said the United States would impose a tariff of about 100% on semiconductor chips imported from countries not producing in America or planning to do so. Choi declined to comment on the tariffs.

Trump told reporters in the Oval Office the new tariff rate would apply to "all chips and semiconductors coming into the United States," but would not apply to companies that were already manufacturing in the United States or had made a commitment to do so. Trump's comments were not a formal tariff announcement, and the president offered no further specifics.

South Korea's top trade envoy Yeo Han-koo said on Thursday that Samsung Electronics and SK Hynix would not be subject to the 100% tariffs on chips if they were implemented. Samsung has invested in two chip fabrication plants in Austin and Taylor, Texas, and SK Hynix has announced plans to build an advanced chip packaging plant and an artificial intelligence research and development facility in Indiana.

South Korea's chip exports to the United States were valued at $10.7 billion last year, accounting for 7.5% of its total chip exports. Some HBM chips are exported to Taiwan for packaging, accounting for 18% of South Korea's chip exports in 2024, a 127% increase from the previous year.

(Reporting by Heekyong Yang and Hyunjoo Jin in Seoul and Max A. Cherney in San Francisco; Editing by Tom Hogue)

SK Hynix projects significant growth in the High Bandwidth Memory (HBM) market for AI applications, expecting the custom HBM segment alone to reach tens of billions of dollars by 2030. The company remains optimistic despite potential US tariffs and increasing competition in the sector.

SK Hynix's Optimistic Forecast for AI Memory Market

SK Hynix, a leading South Korean semiconductor manufacturer, has projected a robust 30% annual growth rate for the High Bandwidth Memory (HBM) market through 2030. This forecast comes amid increasing demand for AI-specific memory solutions and technological advancements in the sector [1][2].

HBM Technology and Market Dynamics

High Bandwidth Memory, first introduced in 2013, involves stacking memory chips vertically to improve performance and efficiency. This technology is crucial for processing the large volumes of data generated by complex AI applications [3].

The company's head of HBM business planning, Choi Joon-yong, stated, "AI demand from the end user is pretty much, very firm and strong" [2]. This optimism is fueled by the significant AI capital spending projected by major cloud computing companies such as Amazon, Microsoft, and Google [1].

Technological Advancements and Customization

The upcoming HBM4 standard represents a significant shift in the memory business: next-generation HBM4 products from SK Hynix, Samsung, and Micron include a customer-specific logic die, or "base die," that helps manage the memory [2][4]. This makes it more challenging to substitute rival products, potentially solidifying market positions.

Choi emphasized the growing importance of customization, noting that "Each customer has different taste" [2]. While larger customers like Nvidia currently receive individualized solutions, SK Hynix anticipates increased demand for customization from smaller clients as well [4].

Market Competition and Challenges

SK Hynix currently holds the largest share of the HBM market and is the primary supplier to Nvidia. However, the company faces competition from Samsung and Micron, which are also scaling up their HBM production [1][5]. Samsung has recently cautioned about potential oversupply of HBM3E in the short term, which could pressure prices [2].

Geopolitical Considerations

The AI memory market's growth projections come against the backdrop of potential geopolitical challenges. U.S. President Donald Trump has said the United States would impose a tariff of about 100% on semiconductor chips imported from countries without U.S.-based manufacturing, though his comments were not a formal tariff announcement [1][2]. However, South Korean officials have indicated that both SK Hynix and Samsung would likely be exempt due to their existing and planned U.S. facilities [2].

SK Hynix's Strategy and Outlook

Despite these challenges, SK Hynix remains confident in its ability to maintain its market position. The company has announced plans to build an advanced chip packaging plant and an artificial intelligence research and development facility in Indiana [2][3]. This strategy aims to secure supply to North American customers while mitigating potential trade risks.

As AI infrastructure continues to expand, SK Hynix's fortunes are closely tied to the sector's growth. While factors such as oversupply, customer concentration, or disruptive memory technologies could impact growth, the company's current lead in customization and packaging positions it well for the projected AI-driven demand increase [1][5].

References

Summarized by

Navi

[1]

[4]

[5]