South Korea launches world's first comprehensive AI law amid startup concerns over compliance

5 Sources

[1]

South Korea's 'world-first' AI laws face pushback amid bid to become leading tech power

The laws have been criticised by tech startups, which say they go too far, and civil society groups, which say they don't go far enough.

South Korea has embarked on a foray into the regulation of AI, launching what has been billed as the most comprehensive set of laws anywhere in the world, that could prove a model for other countries, but the new legislation has already encountered pushback. The laws, which will force companies to label AI-generated content, have been criticised by local tech startups, which say they go too far, and civil society groups, which say they don't go far enough. The AI basic act, which took effect on Thursday last week, comes amid growing global unease over artificially created media and automated decision-making, as governments struggle to keep pace with rapidly advancing technologies.

The act will force companies providing AI services to:

* Add invisible digital watermarks for clearly artificial outputs such as cartoons or artwork. For realistic deepfakes, visible labels are required.
* "High-impact AI", including systems used for medical diagnosis, hiring and loan approvals, will require operators to conduct risk assessments and document how decisions are made. If a human makes the final decision the system may fall outside the category.
* Extremely powerful AI models will require safety reports, but the threshold is set so high that government officials acknowledge no models worldwide currently meet it.

Companies that violate the rules face fines of up to 30m won (£15,000), but the government has promised a grace period of at least a year before penalties are imposed. The legislation is being billed as the "world's first" to be fully enforced by a country, and central to South Korea's ambition to become one of the world's three leading AI powers alongside the US and China. Government officials maintain the law is 80-90% focused on promoting industry rather than restricting it.
Alice Oh, a computer science professor at the Korea Advanced Institute of Science and Technology (KAIST), said that while the law was not perfect, it was intended to evolve without stifling innovation. However a survey in December from the Startup Alliance found that 98% of AI startups were unprepared for compliance. Its co-head, Lim Jung-wook, said frustration was widespread. "There's a bit of resentment," he said. "Why do we have to be the first to do this?" Companies must self-determine whether their systems qualify as high-impact AI, a process critics say is lengthy and creates uncertainty. They also warn of competitive imbalance: all Korean companies face regulation regardless of size, while only foreign firms meeting certain thresholds - such as Google and OpenAI - must comply. The push for regulation has unfolded against a uniquely charged domestic backdrop that has left civil society groups worried the legislation does not go far enough. South Korea accounts for 53% of all global deepfake pornography victims, according to a 2023 report by Security Hero, a US-based identity protection firm. In August 2024, an investigation exposed massive networks of Telegram chatrooms creating and distributing AI-generated sexual imagery of women and girls, foreshadowing the scandal that would later erupt around Elon Musk's Grok chatbot. The law's origins, however, predate this crisis, with the first AI-related bill submitted to parliament in July 2020. It stalled repeatedly in part due to provisions that were accused of prioritising industry interests over citizen protection. Civil society groups maintain that the new legislation provides limited protection for people harmed by AI systems. Four organisations, including Minbyun, a collective of human rights lawyers, issued a joint statement the day after it was implemented arguing the law contained almost no provisions to protect citizens from AI risks. 
The groups noted that while the law stipulated protection for "users", those users were hospitals, financial companies and public institutions that use AI systems, not people affected by AI. The law established no prohibited AI systems, they argued, and exemptions for "human involvement" created significant loopholes. The country's human rights commission has criticised the enforcement decree for lacking clear definitions of high-impact AI, noting that those most likely to suffer rights violations remain in regulatory blind spots. In a statement, the ministry of science and ICT said it expected the law to "remove legal uncertainty" and build "a healthy and safe domestic AI ecosystem", adding that it would continue to clarify the rules through revised guidelines. Experts said South Korea had deliberately chosen a different path from other jurisdictions. Unlike the EU's strict risk-based regulatory model, the US and UK's largely sector-specific, market-driven approaches, or China's combination of state-led industrial policy and detailed service-specific regulation, South Korea has opted for a more flexible, principles-based framework, said Melissa Hyesun Yoon, a law professor at Hanyang University who specialises in AI governance. That approach is centred on what Yoon describes as "trust-based promotion and regulation". "Korea's framework will serve as a useful reference point in global AI governance discussions," she said.

[2]

South Korea launches landmark laws to regulate AI, startups warn of compliance burdens

South Korea introduced on Thursday what it says is the world's first comprehensive set of laws regulating artificial intelligence, aiming to strengthen trust and safety in the sector, but startups fretted that compliance could hold them back. Seoul is hoping that the new AI Basic Act will position the country as a leader in the field. It has taken effect in South Korea sooner than a comparable effort in Europe, where the EU AI Act is being applied in phases through 2027. Global divisions remain over how to regulate AI, with the U.S. favouring a more light-touch approach to avoid stifling innovation. China has introduced some rules and proposed creating a body to coordinate global regulation. One key feature of the laws is the requirement that companies must ensure there is human oversight in so-called "high-impact" AI which includes fields like nuclear safety, the production of drinking water, transport, healthcare and financial uses such as credit evaluation and loan screening. Other rules stipulate that companies must give users advance notice about products or services using high-impact or generative AI, and provide clear labelling when AI-generated output is difficult to distinguish from reality. The Ministry of Science and ICT has said the legal framework was designed to promote AI adoption while building a foundation of safety and trust. The bill was prepared after extensive consultation and companies will be given a grace period of at least a year before authorities begin imposing administrative fines for infractions. The penalties can be hefty. A failure to label generative AI, for example, could leave a company facing a fine of up to 30 million won ($20,400).
The law will provide a "critical institutional foundation" for South Korea's ambition to become a top-three global AI powerhouse, Science minister Bae Kyung-hoon, a former head of AI research at electronics giant LG, told a press conference. But Lim Jung-wook, co-head of South Korea's Startup Alliance, said many founders were frustrated that key details remain unsettled. "There's a bit of resentment - why do we have to be the first to do this?" he said. Jeong Joo-yeon, a senior researcher at the group, said the law's language was so vague that companies may default to the safest approach to avoid regulatory risk. The ministry has said it plans a guidance platform and dedicated support centre for companies during the grace period. "Additionally, we will continue to review measures to minimise the burden on industry," a spokesperson said, adding that authorities were looking at extending the grace period if domestic and overseas industry conditions warranted such a measure.

[3]

South Korea launches landmark laws to regulate artificial intelligence

South Korea introduced on Thursday what it says is the world's first comprehensive set of laws regulating artificial intelligence, aiming to strengthen trust and safety in the sector, but startups fretted that compliance could hold them back. Seoul is hoping that the new AI Basic Act will position the country as a leader in the field. It has taken effect in South Korea sooner than a comparable effort in Europe, where the EU AI Act is being applied in phases through 2027. Global divisions remain over how to regulate AI, with the U.S. favoring a more light-touch approach to avoid stifling innovation. China has introduced some rules and proposed creating a body to coordinate global regulation.

[4]

Unclear guidance, vague terms in AI Basic Act leave businesses in limbo - The Korea Times

Korea's revised Basic Act on the Development of Artificial Intelligence (AI) and the Establishment of Foundation for Trustworthiness, also known as the AI Basic Act, took effect on Jan. 22 as the world's first comprehensive legal framework for AI. With the broad new obligations on companies that develop or deploy AI technologies, industry players are scrambling to interpret the new regulations, amid growing concerns over their vague standards and rising uncertainty about the business risks they may trigger.

The regulations seek to balance AI innovation with safety and public trust in AI, creating a national governance framework centered on a national AI committee chaired by the president. The act mandates an AI master plan every three years, reinforced powers for the presidential committee and government support for research and development, training data infrastructure (including open test beds at public institutions) and special measures for small and medium‑sized enterprises and startups. On the industry side, the act obliges AI-generated content to be disclosed as such, mandates transparency measures such as watermarks and imposes risk controls on systems classified as "high-impact."

But as the first business week of the revised act unfolded, with a grace period of about a year for implementation of the new rules, companies are left navigating murky definitions and unclear standards, prompting fears that it could slow innovation and complicate compliance. Lee Seong-yeob, a professor at Korea University's Graduate School of Management of Technology, said the new framework risks dampening development incentives if engineers begin second‑guessing whether their work might inadvertently breach the law, especially in the fast-paced AI field.

"Because AI development and deployment cycles are so short, compliance must be repeated with each new version, making regulatory requirements a potential barrier to AI development and deployment," he said. "For Korea, which aims to move up in AI, what is supposed to be a minimum safety net could end up functioning as a significant hurdle."

Mandatory transparency, yet unclear guidance

Under the act, entities that develop or use AI for commercial purposes must notify users when content, such as images, video, audio or text, is generated by AI by using visible watermarks or other clear indicators. However, for many firms, the practical details remain incomplete, especially around when a watermark is needed and who exactly must apply it.

While the law clearly assigns transparency obligations to providers that directly offer AI products and services to users, it excludes general users and companies that only rely on AI as a creative tool, which could create loopholes and uncertainty in the regulatory perimeter. In practice, companies, including animation and webtoon studios, that use generative tools are not defined as AI service providers and therefore are exempt from labeling duty, while platforms hosting or distributing AI‑assisted works also face fewer obligations unless they themselves operate the underlying models.

Much deepfake or misleading content comes from overseas apps beyond Korea's legal reach and only a few global tech giants meet the high threshold for the act's requirement to appoint a local representative subject to Korean jurisdiction. AI‑generated content from smaller overseas services or reposted by individuals may continue to evade systematic labeling, undermining the act's transparency goals, observers note. Some also warn that it is unclear what would happen in cases where watermarks are removed or damaged by users, since obligations stop at service providers.

The content sector, including gaming and media, also expressed concerns that rules on watermark application could impair productivity and diminish the value of creative output, as AI‑assisted work could stigmatize content as being of lower value. "We hope the system will be implemented to support industry growth rather than a regulatory‑first standpoint," a gaming industry insider said. "There are concerns that these regulations could become a burden on industrial development. Now that the law is in force, we also hope there will be sufficient support so that those on the ground can adapt smoothly to the new framework."

High-impact AI still undefined

Equally contentious is the law's provision on high-impact AI systems, defined as those that could significantly affect human life, safety or fundamental rights. The act intends to classify and impose additional safeguards on high-impact applications, such as autonomous vehicles, health care diagnostics and infrastructure operations. However, the legislation and early guidance stop short of defining quantitative thresholds, such as specific error rates, coverage ratios or incident probabilities, that would automatically push a system into the high‑impact category.

Vague terms like "significant impact" and "risk of harm" also raise concerns that they would leave too much room for regulators' judgment, complicating companies' investment planning for large‑scale AI deployments. If businesses cannot reliably predict whether a model will be treated as high‑impact, they may delay launches or shift projects abroad rather than invest in additional documentation, audits and impact assessments at home.

Lee, the professor at Korea University, noted that the very concept of high impact is hard to determine in advance, pointing out that this is one of the key reasons why other countries, such as the United States and China, have so far avoided predefined classifications. "As AI keeps evolving, it is very hard to draw a clear line in advance for what counts as high impact or high risk. In the end, even if we update the standards as much as possible for now, there will still be the problem that they must be continuously revised every time new technologies are developed," he said, noting the current grace period should also be used to fine-tune the legislation. "During this one‑year period, the law technically applies, but there are no penalties, so I think we should move quickly to revise it while we are still in this preparation phase."

During the grace period, the government is temporarily easing inspections and fines while operating a support desk that has already seen inquiries about the AI Basic Act surge since the law took effect. Tech companies have begun reorganizing internal governance to get ahead of the new rules and formalize compliance playbooks. Major telecommunications companies said they are reviewing their companywide compliance frameworks and setting up internal risk management protocols involving law, security and ethics teams. Tech giants, such as Naver and Kakao, are also moving to align their products with transparency obligations, having previously introduced internal AI governance and risk frameworks on a voluntary basis.

[5]

Korea becomes 1st nation to enact comprehensive law on safe AI usage - The Korea Times

Korea on Thursday formally enacted a comprehensive law governing the safe use of artificial intelligence (AI) models, becoming the first country globally in doing so, establishing a regulatory framework against misinformation and other hazardous effects involving the emerging field. The Basic Act on the Development of Artificial Intelligence and the Establishment of a Foundation for Trustworthiness, or the AI Basic Act, officially took effect Thursday, according to the science ministry. It marked the first governmental adoption of comprehensive guidelines on the use of AI globally. The act centers on requiring companies and AI developers to take greater responsibility for addressing deepfake content and misinformation that can be generated by AI models, granting the government the authority to impose fines or launch probes into violations. In detail, the act introduces the concept of "high-risk AI," referring to AI models used to generate content that can significantly affect users' daily lives or their safety, including applications in the employment process, loan reviews and medical advice. Entities harnessing such high-risk AI models are required to inform users that their services are based on AI and are responsible for ensuring safety. Content generated by AI models is required to carry watermarks indicating its AI-generated nature. "Applying watermarks to AI-generated content is the minimum safeguard to prevent side effects from the abuse of AI technology, such as deepfake content," a ministry official said. Global companies offering AI services in Korea meeting any of the following criteria -- global annual revenue of 1 trillion won ($681 million) or more, domestic sales of 10 billion won or higher, or at least 1 million daily users in the country -- are required to designate a local representative. Currently, OpenAI and Google fall under the criteria. 
Violations of the act may be subject to fines of up to 30 million won, and the government plans to enforce a one-year grace period in imposing penalties to help the private sector adjust to the new rules. The act also includes measures for the government to promote the AI industry, with the science minister required to present a policy blueprint every three years.

South Korea enacted the AI Basic Act, becoming the first nation to implement comprehensive legislation to regulate artificial intelligence. The law mandates watermarks for AI-generated content and human oversight for high-impact AI systems. However, tech startups warn that vague legal language and compliance burdens could stifle innovation, while civil society groups argue the protections don't go far enough.

South Korea Enacts World's First Comprehensive AI Law

South Korea has officially enacted the AI Basic Act, which it bills as the world's first comprehensive AI law [1][5]. The legislation, which took effect on January 22, arrives amid growing global unease over artificially created media and automated decision-making, positioning South Korea ahead of comparable efforts like the EU AI Act, which is being applied in phases through 2027 [2]. The law represents a central pillar of South Korea's ambition to become one of the world's three leading AI powers alongside the US and China, with government officials maintaining the legislation is 80-90% focused on promoting industry rather than restricting it [1].

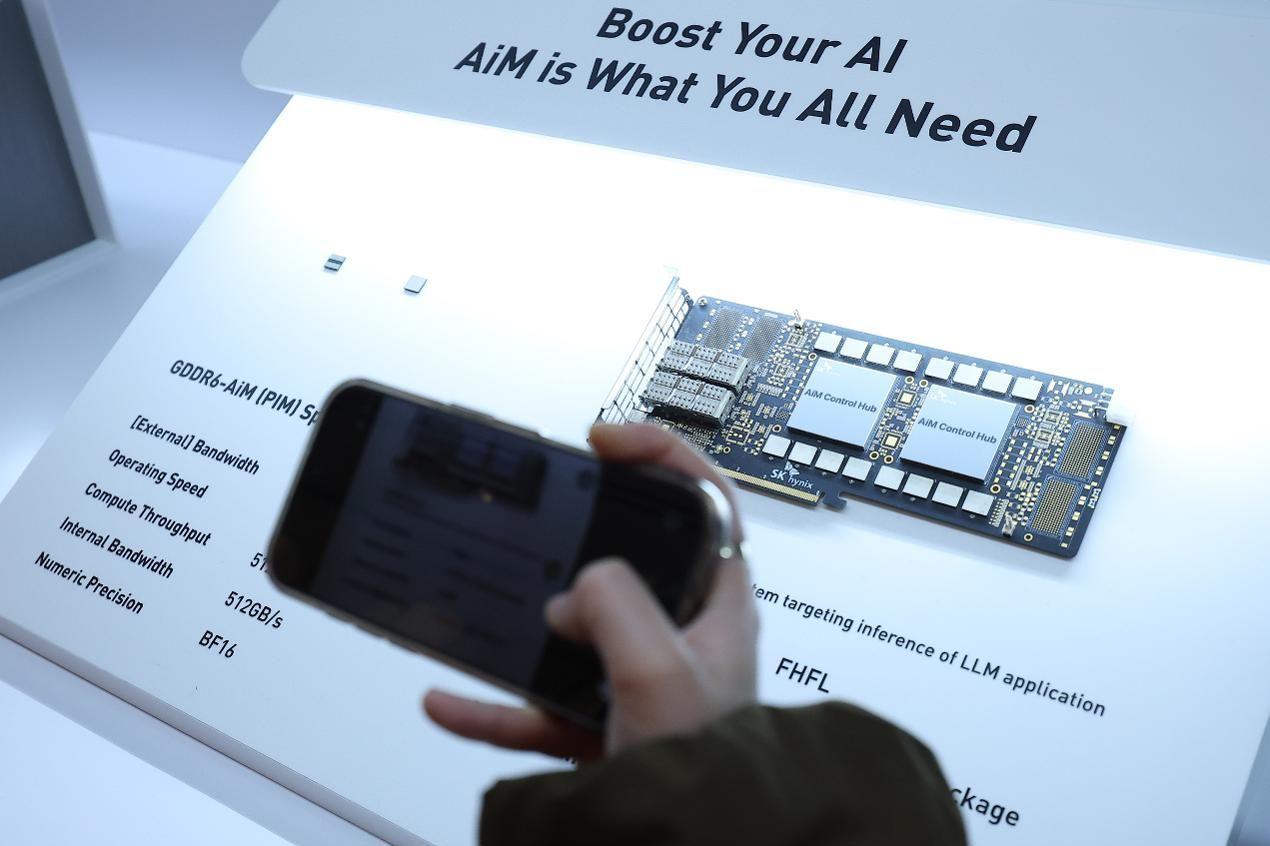

Source: Korea Times

Mandatory Watermarks for AI Content and Human Oversight Requirements

The AI Basic Act introduces strict requirements to regulate artificial intelligence services and ensure safe AI usage [5]. Companies providing AI services must add invisible digital watermarks for clearly artificial outputs such as cartoons or artwork, while realistic deepfakes require visible labels [1]. The law mandates transparency measures to prevent misinformation and deepfakes, with a Ministry of Science and ICT official describing watermarks on AI-generated content as "the minimum safeguard to prevent side effects from the abuse of AI technology" [5]. High-impact AI systems, including those used for medical diagnosis, hiring, loan approvals, nuclear safety, and transport, will require operators to conduct risk assessments and document how decisions are made, with mandatory human oversight [1][2]. Companies that violate the rules face penalties of up to 30 million won (approximately $20,400 or £15,000), though the government has promised a grace period of at least a year before imposing fines [1][2].

Startups Sound Alarm Over Compliance Burdens and Vague Legal Language

Despite the government's assurances, tech startups have raised significant concerns about compliance burdens and the practical challenges of implementation [2]. A December survey from the Startup Alliance found that 98% of AI startups were unprepared for compliance, and co-head Lim Jung-wook said frustration was widespread: "There's a bit of resentment. Why do we have to be the first to do this?" [1]. Companies must self-determine whether their systems qualify as high-impact AI, a process critics say is lengthy and creates uncertainty [1]. The vague legal language has left businesses in limbo, with unclear guidance on critical definitions [4]. Jeong Joo-yeon, a senior researcher at the Startup Alliance, noted that the law's language was so vague that companies may default to the safest approach to avoid regulatory risk [2]. Professor Lee Seong-yeob from Korea University warned that the regulatory framework risks dampening development incentives if engineers begin second-guessing whether their work might inadvertently breach the law [4].

Competitive Imbalance and Global Tech Giant Requirements

A particular point of contention involves competitive imbalance: all Korean companies face regulation regardless of size, while only foreign firms meeting certain thresholds must comply [1]. Global companies offering AI services in South Korea must designate a local representative if they meet any of the following criteria: global annual revenue of 1 trillion won ($681 million) or more, domestic sales of 10 billion won or higher, or at least 1 million daily users in the country [5]. Currently, OpenAI and Google fall under these criteria [1][5]. However, much deepfake or misleading content comes from overseas apps beyond South Korea's legal reach, and only a few global tech giants meet the high threshold for local representation requirements [4].

Civil Society Groups Argue Protections Fall Short

While startups worry the law goes too far, civil society groups maintain it doesn't go far enough to protect citizens [1]. Four organizations, including Minbyun, a collective of human rights lawyers, issued a joint statement arguing the law contained almost no provisions to protect citizens from AI risks [1]. The groups noted that while the law stipulated protection for "users," those users were hospitals, financial companies, and public institutions that use AI systems, not people affected by AI [1]. The country's human rights commission criticized the enforcement decree for lacking clear definitions of high-impact AI, noting that those most likely to suffer rights violations remain in regulatory blind spots [1]. This criticism carries particular weight given that South Korea accounts for 53% of all global deepfake pornography victims, according to a 2023 report by Security Hero [1].

Governance Structure and Future Implementation

The regulatory framework creates a national governance structure centered on a national AI committee chaired by the president, mandating an AI master plan every three years [4]. The Ministry of Science and ICT stated it expects the law to "remove legal uncertainty" and build "a healthy and safe domestic AI ecosystem," adding that it would continue to clarify the rules through revised guidelines [1]. Science minister Bae Kyung-hoon, a former head of AI research at electronics giant LG, told a press conference that the law will provide a "critical institutional foundation" for South Korea's ambition to become a top-three global AI powerhouse [2]. The ministry has said it plans a guidance platform and dedicated support centre for companies during the grace period, and authorities are considering extending the grace period if domestic and overseas industry conditions warrant such a measure [2]. Alice Oh, a computer science professor at the Korea Advanced Institute of Science and Technology (KAIST), noted that while the law was not perfect, it was intended to evolve without stifling innovation [1]. The legislation represents a distinct path from the EU's strict risk-based regulatory model, the US and UK's largely sector-specific approaches, and China's combination of state-led industrial policy and detailed service-specific regulation [1]. As global divisions persist over how to regulate artificial intelligence, with the US favoring a more light-touch approach to avoid stifling innovation and China proposing a body to coordinate global regulation, South Korea's experiment in balancing public trust with industry promotion will be closely watched by policymakers worldwide [3].
