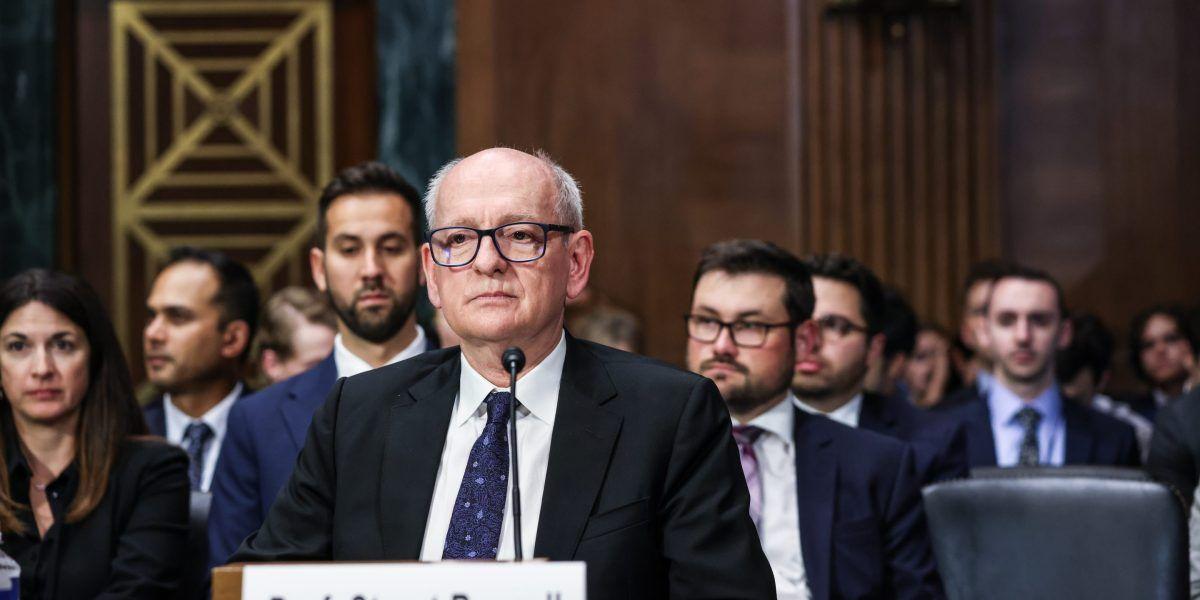

Stuart Russell warns Big Tech's AI arms race could lead to human extinction

2 Sources

[1]

Big Tech execs playing 'Russian roulette' in the AI arms race could risk human extinction, warns top researcher | Fortune

The global competition to dominate artificial intelligence has reached a fever pitch, but one of the world's leading computer scientists warned that Big Tech is recklessly gambling with the future of the human species. The loudest voices in AI often fall into two camps: those who praise the technology as world-changing, and those who urge restraint, or even containment, before it becomes a runaway threat. Stuart Russell, a pioneering AI researcher at the University of California, Berkeley, firmly belongs to the latter group. One of his chief concerns is that governments and regulators are struggling to keep pace with the technology's rapid rollout, leaving the private sector locked in a race to the finish that risks devolving into the kind of perilous competition not seen since the height of the Cold War. "For governments to allow private entities to essentially play Russian roulette with every human being on earth is, in my view, a total dereliction of duty," Russell told AFP from the AI Impact Summit in New Delhi, India. While tech CEOs are locked in an "arms race" to develop the next and best AI model, a goal the industry maintains will eventually herald enormous advances in medical research and productivity, many ignore or gloss over the risks, according to Russell. In a worst-case scenario, he believes the breakneck speed of innovation without regulation could lead to the extinction of the human race. Russell should know about the existential risks underlying AI's rapid deployment. The British-born computer scientist has been studying AI for over 40 years and published one of the most authoritative textbooks on the subject as far back as 1995. In 2016, he founded a research center at Berkeley focusing on AI safety, which advocates "provably beneficial" AI systems for humanity. In New Delhi, Russell remarked on how far off the mark companies and governments seem to be on that goal.
Russell's critique centered on the rapid development of systems that could eventually overpower their creators, leaving human civilization as "collateral damage in that process." The heads of major AI firms are aware of these existential dangers but find themselves trapped regardless by market forces. "Each of the CEOs of the main AI companies, I believe, wants to disarm," Russell said, but they cannot do so "unilaterally" because their position would quickly be usurped by competitors and they would face immediate ousting by their investors. Talk of existential risk and humanity's potential extinction was once reserved for the specter of runaway nuclear proliferation during the Cold War, when great powers stockpiled weapons out of fear that rivals would surpass them. But skeptics like Stuart Russell increasingly apply that same framework to the age of artificial intelligence. The competition between the U.S. and China is often described as an AI "arms race," complete with the secrecy, urgency, and high stakes that defined the nuclear rivalry between Washington and Moscow in the latter half of the 20th century. Vladimir Putin, Russia's president, captured the enormous stakes succinctly nearly a decade ago: "Whoever becomes the leader in this sphere will become the ruler of the world," he said in a 2017 address. While the current arms race cannot be measured in warheads, its scale is captured in the staggering amounts of capital being deployed. Countries and corporations are currently spending hundreds of billions of dollars on energy-intensive data centers to train and run AI. In the U.S. alone, analysts expect capital expenditure on AI to exceed $600 billion this year. But aggressive corporate action has yet to be matched by restraint through regulatory action, Russell said. "It really helps if each of the governments understand this issue. And so that's why I'm here," he said, referring to the India summit.
China and the EU are among the AI-developing powers that have taken a harder stance on regulating the technology. Elsewhere, the reality has been more hands-off. In India, the government has opted for a largely deregulatory approach. In the U.S., meanwhile, the Trump administration has championed pro-market ideals for AI, and sought to scrap most state-level regulations to give companies free rein.

[2]

Stuart Russell Says AI Could Turn Humans 'Into Less Than A Human Being,' Urges Action On Super-Intelligent Systems - Alphabet (NASDAQ:GOOGL)

Top computer science researcher Stuart Russell is sounding the alarm over the rapid development of artificial intelligence, warning that unchecked competition among tech companies could pose existential risks to humanity.

AI CEOs Risk Humanity In Global Tech Race

On Tuesday, Russell, a professor at the University of California, Berkeley, told AFP that CEOs of major AI companies understand the dangers of super-intelligent systems but feel trapped by investor pressures, reported Barron's. "For governments to allow private entities to essentially play Russian roulette with every human being on earth is, in my view, a total dereliction of duty," he said at the AI Impact Summit in New Delhi. He warned that AI could replace millions of jobs, particularly in customer service and tech support, and be misused for surveillance or even criminal activity. Highlighting the risks, Russell noted that OpenAI CEO Sam Altman has publicly acknowledged that AI could threaten human survival. Anthropic, another AI startup, recently cautioned that its chatbot models might be nudged toward "knowingly supporting -- in small ways -- efforts toward chemical weapon development and other heinous crimes." Russell described AI as "human imitators" capable of performing cognitive tasks, saying, "When you're taking over all cognitive functions -- the ability to answer a question, to make a decision, to make a plan... You are turning someone into less than a human being."

Google Leaders Highlight AI's Transformative Impact

Speaking at the India AI Impact Summit 2026, he compared the AI revolution to an industrial revolution, "but 10 times faster and 10 times larger." He highlighted its role across Google's businesses, including Search, YouTube, Cloud, Waymo and Isomorphic Labs. Google DeepMind CEO Demis Hassabis warned that artificial general intelligence could reshape the world faster than any previous technological shift if managed responsibly.
He compared AGI's potential to the discovery of fire and electricity and predicted it could have ten times the impact of the Industrial Revolution within a decade. Hassabis noted 2026 as a pivotal year for AI, with AGI potentially arriving in the next five years, while Sergey Brin had previously estimated it would appear before 2030.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.


Stuart Russell, a leading AI researcher at UC Berkeley, cautioned that the unregulated competition among tech companies to develop artificial intelligence amounts to playing Russian roulette with humanity's future. Speaking at the AI Impact Summit in New Delhi, Russell warned that the breakneck pace of AI development without proper oversight could lead to human extinction in a worst-case scenario.

Big Tech's Competitive Drive Fuels Dangerous AI Development

Stuart Russell, a pioneering artificial intelligence researcher at the University of California, Berkeley, delivered a stark warning at the AI Impact Summit in New Delhi: the current AI arms race among tech giants could pose an existential threat to humanity [1]. Russell, who has studied AI for over 40 years and authored one of the field's most authoritative textbooks in 1995, used the Russian roulette analogy to describe how private companies are gambling with every human life on earth. "For governments to allow private entities to essentially play Russian roulette with every human being on earth is, in my view, a total dereliction of duty," Russell told AFP [1].

Source: Fortune

The British-born computer scientist founded a research center at Berkeley in 2016 focusing on AI safety, advocating for "provably beneficial" AI systems that serve humanity rather than threaten it. His concerns center on the rapid development of super-intelligent systems that could eventually overpower their creators, leaving human civilization as collateral damage in the process [1].

Market Forces Trap CEOs in Unchecked AI Development

Russell's critique reveals a troubling paradox: the heads of major AI firms understand the existential risk their work poses, yet find themselves unable to slow down. "Each of the CEOs of the main AI companies, I believe, wants to disarm," Russell explained, but they cannot do so unilaterally because competitors would quickly surpass them and investors would demand their removal [1]. This dynamic mirrors the Cold War nuclear arms race, when great powers stockpiled weapons out of fear that rivals would gain the upper hand.

The scale of this competition is captured in staggering capital expenditures. Countries and corporations are pouring hundreds of billions of dollars into energy-intensive data centers, and in the U.S. alone, analysts expect capital expenditure on AI to exceed $600 billion this year [1]. Russian President Vladimir Putin captured the stakes in 2017 when he declared, "Whoever becomes the leader in this sphere will become the ruler of the world" [1].

Source: Benzinga

OpenAI and Anthropic Leaders Acknowledge Dangers

Russell's warnings align with public statements from industry leaders. OpenAI CEO Sam Altman has acknowledged that AI could threaten human survival [2]. Anthropic, another AI startup, recently cautioned that its chatbot models might be nudged toward "knowingly supporting -- in small ways -- efforts toward chemical weapon development and other heinous crimes" [2].

Russell described AI as "human imitators" capable of performing cognitive tasks, warning about job displacement, particularly in customer service and tech support, as well as potential misuse for surveillance and criminal activity [2]. "When you're taking over all cognitive functions -- the ability to answer a question, to make a decision, to make a plan... You are turning someone into less than a human being," he said [2].

AGI Timeline Accelerates as Governments Struggle to Regulate AI Development

Google DeepMind CEO Demis Hassabis, also speaking at the AI Impact Summit, warned that artificial general intelligence (AGI) could reshape the world faster than any previous technological shift if managed responsibly [2]. He compared AGI's potential to the discovery of fire and electricity, predicting it could have ten times the impact of the Industrial Revolution within a decade. Hassabis noted 2026 as a pivotal year for AI, with AGI potentially arriving in the next five years, while Google co-founder Sergey Brin previously estimated it would appear before 2030 [2].

Yet governmental inaction remains a critical concern. While China and the EU have taken harder stances on regulation, other major players lag behind. India has opted for a largely deregulatory approach, while the Trump administration has championed pro-market ideals for AI and sought to scrap most state-level regulations to give companies free rein [1]. Russell's presence in New Delhi signals his effort to educate governments: "It really helps if each of the governments understand this issue. And so that's why I'm here," he explained [1]. The question remains whether regulatory frameworks can catch up before the technology reaches a point of no return, with human extinction becoming more than a theoretical possibility in the face of unchecked AI development.

Summarized by Navi
