UK Government Forces Tech Firms to Remove Abusive Images Within 48 Hours or Face Blockade

4 Sources

[1]

UK to Force Tech Firms to Take Down Abusive Images in 48 Hours

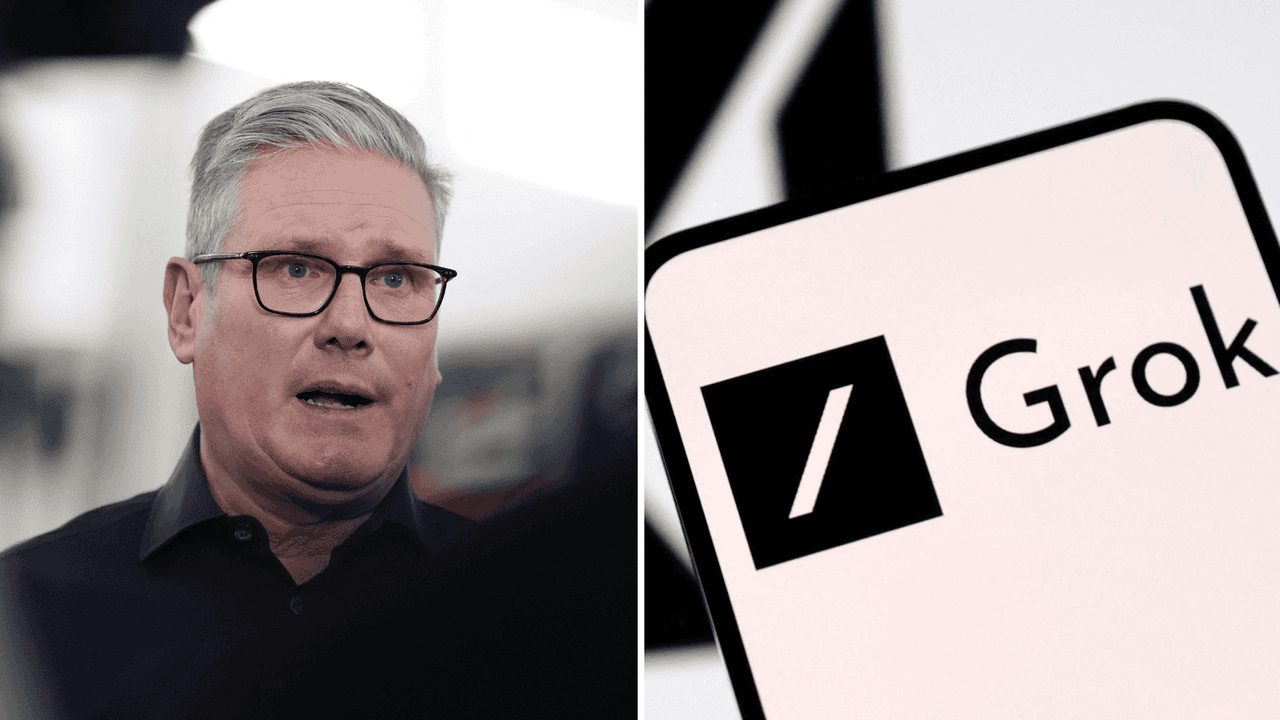

The UK government has proposed rules that would require tech companies to remove abusive images from their sites within 48 hours, weeks after X users flooded the social media platform with thousands of pictures of undressed women they'd generated with the company's artificial intelligence tool. Companies that fail to remove the content in time could be fined as much as 10% of their global revenue or have their service blocked in the UK, the government said in a statement on Wednesday. Regulator Ofcom is also considering whether to digitally tag intimate images of people shared without their consent so they are automatically removed, akin to child sexual abuse and terrorism content. "The online world is the front line of the 21st century battle against violence against women and girls. That's why my government is taking urgent action against chatbots and 'nudification' tools," Prime Minister Keir Starmer said in the statement. Elon Musk's X came under widespread criticism last month after its Grok AI tool was used to create and distribute sexualized images of real people in underwear or bathing suits. Child safety groups found sexualized AI-generated images of children on the dark web. X restricted access to Grok and blocked the feature, referred to as "bikini mode," after an outcry from governments worldwide and emphasized that it takes down illegal content from its site.
But it sparked a regulatory crackdown on the company and provided fodder for a movement to restrict social media companies more broadly. Several governments in Europe are now weighing banning social media for younger teenagers, building on a law that was passed in Australia last year. Sharing non-consensual intimate images is already illegal in the UK. It's defined as sharing pictures of people in an "intimate state," like a sex act, who are nude or partially nude or who are in a "culturally intimate" state, such as a Muslim woman who has removed her hijab. The UK is proposing adding the new rules as amendments to the crime and policing bill, giving police greater powers to enforce takedown measures. The UK's Revenge Porn Helpline, a group that assists people in getting this content removed, has said that while it succeeds more than 90% of the time, platforms aren't always compliant, and it can take several requests. "When the team are supporting victims, I am continually reminded that when they want help and support, they want help and support now. They want their content removed now, not in a few hours or a few days," David Wright, chief executive officer of the UK Safer Internet Centre, said in a report to Parliament last year.

[2]

Tech firms must remove 'revenge porn' in 48 hours or risk being blocked, says Starmer

PM says measure, also applied to deepfake nudes, is needed owing to a 'national emergency' of online misogyny

Deepfake nudes and "revenge porn" must be removed from the internet within 48 hours or technology firms risk being blocked in the UK, Keir Starmer has said, calling it a "national emergency" that the government must confront. Companies could be fined millions or even blocked altogether if they allow the images to spread or be reposted after victims give notice. Amendments will be made to the crime and policing bill to also regulate AI chatbots such as X's Grok, which generated nonconsensual images of women in bikinis or in compromising positions until the government threatened action against Elon Musk's company. Writing for the Guardian, Starmer said: "The burden of tackling abuse must no longer fall on victims. It must fall on perpetrators and on the companies that enable harm." The prime minister said that institutional misogyny being "woven into the fabric of our institutions" meant the problem had not been taken seriously enough. "Too often, misogyny is excused, minimised or ignored. The arguments of women are dismissed as exaggerated or 'one-offs'. That culture creates permission," Starmer wrote. Government sources said they expected to give the new powers to Ofcom to enforce by the summer and companies will be legally required to remove this content no more than 48 hours after it is flagged to them. Platforms including social media companies and pornography sites that fail to act could face fines of up to 10% of their qualifying worldwide revenue or having their services blocked in the UK. Victims would be able to flag the images either directly with tech firms or with Ofcom - which would trigger an alert across multiple platforms, according to the Department for Science, Innovation and Technology.
Ofcom would be responsible for enforcing the ban on the images, with the aim to remove the onus on victims to need to report the same image potentially thousands of times as it is continually reposted. The media regulator will be told to explore ways for "revenge porn" images to have a digital watermark to allow them to be automatically flagged every time they are reposted. Internet providers will also be given new guidance on how to block hosting for rogue sites who specialise in hosting nonconsensual real or AI-generated explicit content. The Grok "nudification" tool that sparked an outcry in early January saw ministers threaten to ban X if it did not act. About 6,000 bikini demands were being made to the chatbot every hour, according to analysis conducted for the Guardian, with many requests made to create images of women bent over or wearing just dental floss. But there has also been a rise in recent years of nonconsensual real or deepfake images being used to blackmail young women and men, which charities have linked to a number of suicides. Starmer said the horror stories of women and girls who saw intimate images spread across the internet was "the type of story that, as a parent, makes your heart drop to your stomach". "Too often, those victims have been left to fight alone - chasing action site to site, reporting the same material again and again, only to see it reappear elsewhere hours later," the prime minister said. "That is not justice. It is failure. And it is sending a message to the young people of this country that women and girls are a commodity to be used and shared." Creating or sharing nonconsensual intimate images will also become a "priority offence" under the Online Safety Act, giving it the same level of seriousness as child abuse images or terrorism. The law does not require platforms to independently identify nonconsensual intimate imagery, but just to remove these images when they are flagged. 
Google, Meta, X and others already do this for child sexual abuse content through a process called hash matching - which assigns videos a unique digital signature that can be matched against databases of abusive content. And while the 48-hour timeline is brief, India has recently mandated that social media companies remove some deepfake content in three hours. "I think that 48 hours is certainly possible, to be honest with you," said Anne Craanen, who researches online misogyny at the Institute for Strategic Dialogue. "The problem is, it may not necessarily incentivise a quicker response rate from companies. But 48 hours is longer than the timeframe for the removal of other types of content, such as terrorist content in the EU." Craanen added that there are already existing initiatives to use hash matching to protect victims of intimate abuse, although it can be challenging getting different tech platforms to coordinate among themselves, so that an abusive video uploaded to Facebook, for example, is automatically detected on Reddit. Hash matching is not a perfect technology, Craanen emphasised, and can be circumvented. Terrorist groups, for example, often add emojis or small alterations to videos already hashed as terrorist content, thus making them unrecognisable to hash matching systems. The advent of AI tools and AI deepfakes will make this problem worse, allowing nonconsensual intimate images and other content to be quickly altered and spread around the internet, Craanen said, evading attempts to quickly detect it with tools such as hash matching. In a moment like the January Grok bikinification crisis, some abuse might be impossible to rein in. While the law appears to apply to all tech platforms, including "rogue websites" that fall outside the reach of the Online Safety Act, there are questions as to how it could apply on encrypted messaging services such as WhatsApp and Signal.
In his article, Starmer said he was also determined to challenge misogyny in government and in politics, after several weeks where the prime minister has faced criticism for the appointment of Peter Mandelson as US ambassador despite knowing about his friendship with the disgraced financier and child sex offender Jeffrey Epstein. Mandelson was fired after new revelations about the closeness of their friendship. The prime minister is also facing a row over the appointment of a new cabinet secretary, Antonia Romeo, tipped to be the Home Office permanent secretary, who was cleared of bullying allegations nine years ago but remains a divisive figure in the civil service. Some of her defenders have said the criticism of Romeo is based on sexist double-standards. Starmer suggested he wanted to appoint more women to senior leadership roles in government and said he was "determined to transform the culture of government: to challenge the structures that still marginalise women's voices". "And it's why I believe that simply counting how many women hold senior roles is not enough," he said. "What matters is whether their views carry weight and lead to change."

[3]

Tech firms must take down abusive images in 48 hours - or face being blocked from UK

Tech companies that don't take down abusive images reported to them could be blocked from operating in the UK or fined 10% of worldwide revenue. Technology firms that do not take down abusive images from their platforms within 48 hours face being blocked from the UK under new rules proposed by the government. Prime Minister Sir Keir Starmer said he was putting tech companies "on notice" to take down non-consensual intimate images, and will leave "no stone unturned" to protect women and girls. Companies will be legally required to remove the images within 48 hours of them being reported to them. If they do not, firms could be fined 10% of qualifying worldwide revenue - which could amount to billions of pounds for some major platforms - or have their services banned from the UK. Ministers say tech firms should take tackling intimate image abuse as seriously as they take tackling terrorist or child sexual abuse material. The government is making the change through an amendment to the Crime and Policing Bill, which is currently going through parliament. Media regulator Ofcom is also considering plans to treat non-consensual intimate images the same as child sexual abuse material, which is digitally marked when found, so that any time they are re-shared, they are automatically taken down. Additionally, the government says it will publish guidance for internet companies on how to block "rogue websites" that host this content and fall out of reach of the Online Safety Act. Sir Keir said he would "leave no stone unturned in the fight to protect women from violence and abuse". He said the government had already taken "urgent action against chatbots and 'nudification' tools", and that they were "going further, putting companies on notice so that any non-consensual image is taken down in under 48 hours". Technology Secretary Liz Kendall said: "The days of tech firms having a free pass are over... 
No woman should have to chase platform after platform, waiting days for an image to come down." Shadow technology secretary Julia Lopez said when a similar proposal was put forward by Conservative peer, Baroness Charlotte Owen, Labour had failed to take action. "Once again, the government is playing catch-up to duck a major backbench rebellion. "The reality is that, for all the prime minister's tough rhetoric, he has arrived late to this issue. He does not know what to believe - he only knows what to do to try and survive another week." The move follows controversy in January over X's AI tool, Grok, creating AI images undressing people without their consent. Creating non-consensual intimate images, including sexually explicit deepfakes, was criminalised earlier this month, and X stopped Grok from creating the images following the outrage. Other countries are also taking action against X, with Ireland's data privacy regulator saying on Tuesday that X faces an EU privacy investigation over the non-consensual deepfakes created by Grok. Earlier this week, Sir Keir announced a crackdown on social media platforms, such as closing a legal loophole to eliminate "vile illegal content created by AI". Downing Street has also launched a consultation on measures like an Australian-style ban on under-16s using social media, and is ensuring it is able to implement one quickly if it is recommended.

[4]

UK to make tech firms remove abusive images within 48 hours of alert

Victims of abusive images would have to report them only once for tech companies to remove them from multiple online platforms under the new measures, which could come into force as early as this summer. Failure to act would result in firms facing a fine of up to 10% of their global revenue or having their services blocked in the UK, the government said in a statement. Technology firms will be required to remove non-consensual sexual images within 48 hours under tighter UK rules proposed by the government Thursday, following an outcry over sexualised deepfakes created by the AI chatbot Grok. Prime Minister Keir Starmer called tackling the problem "a national emergency", writing in the Guardian newspaper that it "requires an immediate and uncompromising response". The proposals come after Elon Musk's Grok chatbot drew international criticism for letting users create and share sexualised deepfakes of women and children using simple text prompts. "The online world is the frontline of the 21st century battle against violence against women and girls," Starmer said in the government's statement. "That's why my government is taking urgent action: against chatbots and 'nudification' tools," he said. The proposals will be introduced via an amendment to a criminal bill giving police greater powers to enforce measures. The government earlier this week extended its online safety laws to include AI chatbots, making providers responsible for preventing the generation of illegal or harmful content by AI. 
Britain's media regulator Ofcom is considering plans to treat illegal sexualised images with the same severity as child sexual abuse and terrorism content. It will announce its final decision on these proposals in May. Starmer's Labour government is ramping up efforts to protect children online, having launched a consultation on a social media ban for those under the age of 16. In January 2025, Starmer pledged to ease red tape to attract billions of pounds of AI investment and help Britain become an "AI superpower".

The UK government has proposed strict new rules requiring tech companies to remove non-consensual intimate images within 48 hours of being reported. Companies that fail to comply could face fines of up to 10% of their global revenue or have their services blocked entirely in the UK. The measures come after widespread criticism of X's Grok AI tool, which was used to create sexualized deepfake images of women and children.

UK Government Introduces Strict 48-Hour Takedown Rule for Abusive Images

The UK government has unveiled sweeping new regulations that will force tech firms to remove abusive images from their platforms within 48 hours of being reported, marking one of the most aggressive regulatory moves against online violence against women and girls. Companies that fail to comply with the 48-hour takedown rule could face fines of up to 10% of their global revenue or have their services blocked entirely in the UK [1]. Prime Minister Keir Starmer called the issue a "national emergency" that requires an immediate response, emphasizing that the burden of tackling abuse must no longer fall on victims but on perpetrators and the companies that enable harm [2].

The proposed amendments to the Crime and Policing Bill will give police greater powers to enforce takedown measures for non-consensual intimate images, including revenge porn and deepfake nudes [3]. Under the new system, victims would only need to report images once, with Ofcom triggering alerts across multiple platforms simultaneously, removing the burden of chasing content site to site [4].

Grok AI Controversy Sparks Regulatory Crackdown

The new regulations come weeks after Elon Musk's X platform drew international condemnation when its Grok AI tool was used to create and distribute AI-generated images of real people in compromising positions. Analysis conducted for the Guardian revealed that approximately 6,000 "bikini demands" were being made to the chatbot every hour, with many requests made to create images of women in sexually explicit poses [2]. Child safety groups also discovered sexualized AI-generated images of children on the dark web, intensifying calls for action [1].

X restricted access to Grok and blocked the feature, referred to as "bikini mode," after widespread outcry from governments worldwide. However, the incident provided momentum for a broader movement to restrict social media companies, with several European governments now weighing social media bans for younger teenagers, building on legislation passed in Australia last year [1].

Digital Watermarking and Hash Matching Technology

Ofcom is exploring innovative technological solutions to combat the spread of non-consensual intimate images, including digital watermarking that would allow images to be automatically flagged and removed every time they are reposted [2]. The regulator is considering treating these images with the same severity as child sexual abuse and terrorism content, which already use hash matching technology. This process assigns videos a unique digital signature that can be matched against databases of abusive content, a system already employed by Google, Meta, X and others for child sexual abuse material [2].

Anne Craanen, who researches online misogyny at the Institute for Strategic Dialogue, stated that "48 hours is certainly possible," though she noted it is longer than the timeframe for removing terrorist content in the EU. India has recently mandated that social media companies remove some deepfake content within three hours, suggesting even tighter timelines are technically feasible [2].
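
To illustrate the hash matching idea described in the source reporting, here is a minimal sketch in Python. It assumes SHA-256 as the "digital signature" and uses a hypothetical in-memory `HashDatabase`; the articles do not say which algorithms or databases platforms actually use, so the names and choices below are illustrative only. The sketch also shows the weakness Craanen describes in source [2]: a small alteration to a file produces a different signature, so the altered copy is no longer recognised.

```python
import hashlib


def signature(content: bytes) -> str:
    """Return a hex digest used as the content's signature.

    SHA-256 is an assumption for this sketch, not the method any
    platform is confirmed to use.
    """
    return hashlib.sha256(content).hexdigest()


class HashDatabase:
    """Hypothetical shared store of signatures for reported content."""

    def __init__(self) -> None:
        self._known: set[str] = set()

    def report(self, content: bytes) -> None:
        # Only the signature is stored, never the content itself.
        self._known.add(signature(content))

    def is_known(self, content: bytes) -> bool:
        return signature(content) in self._known


db = HashDatabase()
reported = b"placeholder bytes standing in for a reported image file"
db.report(reported)

exact_repost = bytes(reported)          # byte-for-byte copy
altered_repost = reported + b"\x00"     # one extra byte appended

print(db.is_known(exact_repost))    # True
print(db.is_known(altered_repost))  # False
```

An exact repost is caught on upload, while the one-byte alteration slips through, which is why exact signatures alone are easy to circumvent.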

Enforcement Powers and Industry Response

The measures, which could come into force as early as this summer, will make creating or sharing non-consensual intimate images a "priority offence" under the Online Safety Act, giving it the same level of seriousness as child abuse images or terrorism [2]. Technology Secretary Liz Kendall declared that "the days of tech firms having a free pass are over," emphasizing that no woman should have to chase platform after platform waiting days for an image to come down [3].

The UK's Revenge Porn Helpline has reported that while it succeeds more than 90% of the time in getting content removed, platforms aren't always compliant and it can take several requests. David Wright, chief executive officer of the UK Safer Internet Centre, emphasized that victims want help now, "not in a few hours or a few days" [1]. The government will also publish guidance for internet companies on how to block rogue websites that host this content and fall outside the reach of the Online Safety Act [3].

Broader Implications for Online Safety

Starmer wrote that institutional misogyny being "woven into the fabric of our institutions" meant the problem had not been taken seriously enough, with arguments from women often dismissed as exaggerated or isolated incidents [2]. The government has also launched a consultation on measures including an Australian-style ban on under-16s using social media, ensuring it can implement such restrictions quickly if recommended [3]. Ireland's data privacy regulator announced that X faces an EU privacy investigation over the non-consensual deepfakes created by Grok, a sign that regulators outside the UK are also taking action [3]. The fines on tech companies could amount to billions of pounds for major platforms, creating significant financial incentives for compliance with the new regulations [3].

Summarized by Navi
