The Double-Edged Sword of AI in Education: Detecting Cheating and False Accusations

2 Sources

[1]

What Happens When AI Falsely Flags Students for Cheating

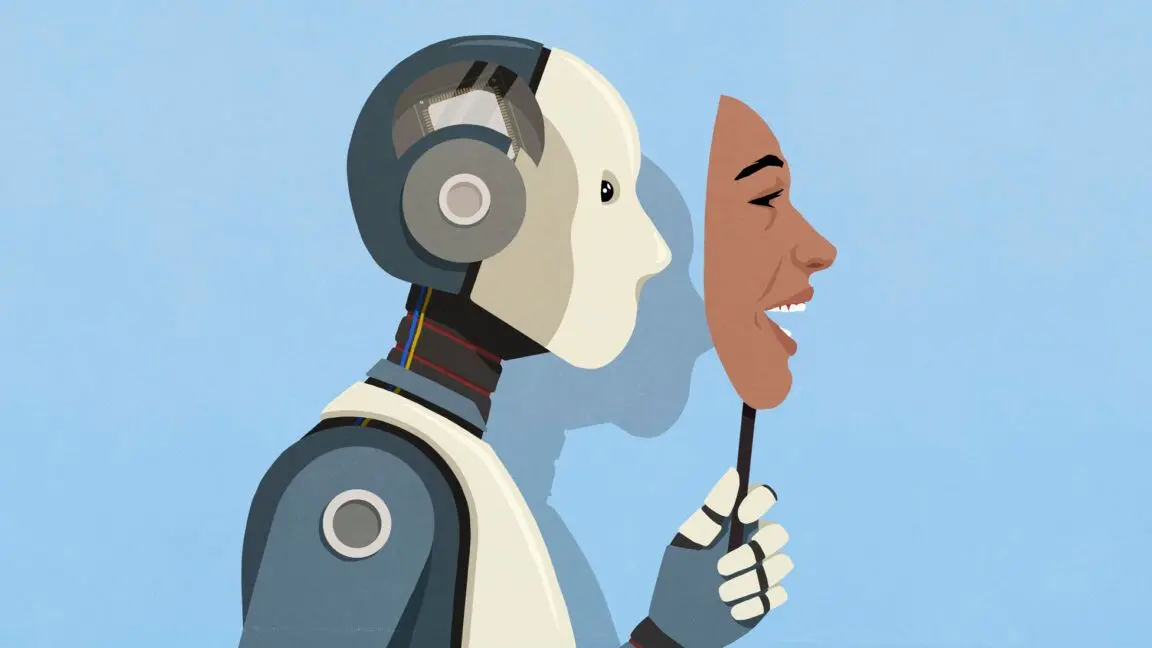

The education system has an AI problem. As students have started using tools like ChatGPT to do their homework, educators have deployed their own AI tools to determine if students are using AI to cheat. But the detection tools, which are largely effective, do flag false positives roughly 2% of the time. For students who are falsely accused, the consequences can be devastating. On today's Big Take podcast, host Sarah Holder speaks to Bloomberg's tech reporter Jackie Davalos about how students and educators are responding to the emergence of generative AI and what happens when efforts to crack down on its use backfire. Read more: AI Detectors Falsely Accuse Students of Cheating -- With Big Consequences

[2]

Big Take: When AI Falsely Flags Students For Cheating

The education system has an AI problem. As students have started using tools like ChatGPT to do their homework, educators have deployed their own AI tools to determine if students are using AI to cheat. But the detection tools, which are largely effective, do flag false positives roughly 2% of the time. For students who are falsely accused, the consequences can be devastating. On today's Big Take podcast, host Sarah Holder speaks to Bloomberg's tech reporter Jackie Davalos about how students and educators are responding to the emergence of generative AI and what happens when efforts to crack down on its use backfire.

As AI tools like ChatGPT become popular for homework, educators are using AI detection tools to catch cheaters. However, these tools sometimes falsely accuse students, leading to serious consequences.

The Rise of AI in Education

The education system is grappling with a new challenge as artificial intelligence (AI) tools like ChatGPT become increasingly popular among students for completing homework assignments. In response, educators have begun deploying their own AI-powered detection tools to identify potential cheating [1][2]. This technological arms race has sparked a debate about the ethics and effectiveness of using AI to combat AI-assisted academic dishonesty.

The Effectiveness and Pitfalls of AI Detection Tools

While these AI detection tools are largely effective in identifying AI-generated content, they are not infallible. Reports suggest that these tools have a false positive rate of approximately 2% [1]. This means that for every 100 human-written assignments checked, about two may be incorrectly flagged as AI-generated when they are, in fact, original student work.

Consequences of False Accusations
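To put the roughly 2% false positive rate in concrete terms, the sketch below estimates how many honest students a detector might wrongly flag. Only the 2% figure comes from the reporting; the function names, the class sizes, and the assumption that each check is an independent event are illustrative and not taken from the sources.

```python
def expected_false_flags(human_written: int, false_positive_rate: float = 0.02) -> float:
    """Expected number of human-written submissions wrongly flagged as AI-generated."""
    if human_written < 0:
        raise ValueError("human_written must be non-negative")
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return human_written * false_positive_rate


def chance_of_at_least_one_false_flag(submissions: int, false_positive_rate: float = 0.02) -> float:
    """Probability that one honest student is flagged at least once.

    Assumption (not from the sources): each submission is checked
    independently with the same false positive rate.
    """
    if submissions < 0:
        raise ValueError("submissions must be non-negative")
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return 1.0 - (1.0 - false_positive_rate) ** submissions


if __name__ == "__main__":
    # Hypothetical volumes of honest, human-written work.
    for count in (100, 1_000, 10_000):
        print(f"{count} honest submissions: about {expected_false_flags(count):.0f} false flags")

    # Hypothetical student who submits 20 checked assignments in a year.
    risk = chance_of_at_least_one_false_flag(20)
    print(f"Chance of at least one false flag over 20 submissions: {risk:.1%}")
```

Under these assumptions, a rate that looks small per assignment adds up quickly across a school, and an individual student's risk grows with every assignment that is checked.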

For students who fall victim to these false positives, the consequences can be severe. Being accused of academic dishonesty can have far-reaching implications, potentially affecting a student's academic record, future educational opportunities, and even career prospects. The emotional toll of such accusations on students who have genuinely completed their own work cannot be overstated [1].

Educators' Dilemma

Educators find themselves in a challenging position. On one hand, they need to maintain academic integrity and ensure that students are doing their own work. On the other hand, they must be cautious about relying too heavily on imperfect technology that could unfairly penalize innocent students. This situation raises important questions about the balance between leveraging technology to maintain academic standards and protecting students' rights and reputations.

Student and Educator Responses

The emergence of generative AI in education has elicited varied responses from both students and educators. Some students argue that AI tools are simply the latest in a long line of technological aids for learning, while others express concern about the fairness of AI detection methods. Educators are divided on how to adapt their teaching and assessment methods in light of these new technologies [2].

The Broader Implications

This situation highlights broader issues surrounding the integration of AI in education. It raises questions about how to teach critical thinking and writing skills in an age where AI can generate human-like text. Additionally, it underscores the need for clear policies and guidelines on the use of AI in academic settings, both by students and institutions.

Looking Ahead

As AI technology continues to evolve, both in its generative capabilities and detection methods, the education system will need to adapt. This may involve rethinking traditional assessment methods, developing more sophisticated and accurate detection tools, and fostering open discussions about the ethical use of AI in academic contexts. The challenge lies in harnessing the potential of AI to enhance learning while maintaining the integrity and fairness of educational assessments.

Summarized by Navi
