The Dual-Edged Sword of AI: Technological Advancement and Societal Concerns

2 Sources

[1]

The Coming Tech Autocracy | Sue Halpern

To understand why a number of Silicon Valley tech moguls are supporting Donald Trump's third presidential campaign after shunning him in 2016 and 2020, look no further than chapter three, bullet point five, of this year's Republican platform. It starts with the party's position on cryptocurrency, that ephemeral digital creation that facilitates money laundering, cybercrime, and illicit gun sales while greatly taxing energy and water resources: "Republicans will end Democrats' unlawful and unAmerican Crypto crackdown and oppose the creation of a Central Bank Digital Currency. We will defend the right to mine Bitcoin, and ensure every American has the right to self-custody of their Digital Assets, and transact free from Government Surveillance and Control." The platform then pivots to artificial intelligence, the technology that brings us deepfake videos, voice cloning, and a special kind of misinformation that goes by the euphemistic term "hallucination," as if the AI happened to accidentally swallow a tab of LSD: "We will repeal Joe Biden's dangerous Executive Order that hinders AI Innovation, and imposes Radical Leftwing ideas on the development of this technology. In its place, Republicans support AI development rooted in Free Speech and Human Flourishing." According to the venture capitalist Ben Horowitz, who, along with his business partner Marc Andreessen, is all in for the former president, Trump wrote those words himself. (This may explain the random capitalizations.) As we were reminded a few months ago when Trump requested a billion dollars from the oil and gas industry in exchange for favorable energy policies once he is in office again, much of American politics is transactional. Horowitz and Andreessen are, by their own account, the biggest crypto investors in the world, and their firm, Andreessen Horowitz, holds two funds worth billions. 
It makes sense, then, that they and others who are investing in or building these nascent industries would support a craven, felonious autocrat; the return on investment promises to be substantial. Or, as Andreessen wrote last year in a five-thousand-plus-word ramble through his neoreactionary animating philosophy called "The Techno-Optimist Manifesto," "Willing buyer meets willing seller, a price is struck, both sides benefit from the exchange or it doesn't happen." The fact is, more than 70 percent of Americans, both Democrat and Republican, favor the establishment of standards to test and ensure the safety of artificial intelligence, according to a survey conducted by the analytics consultancy Ipsos last November. An earlier Ipsos poll found that 83 percent "do not trust the companies developing AI systems to do so responsibly," a view that was also held across the political spectrum. Even so, as shown by both the Republican platform and a reported draft executive order on AI prepared by Trump advisers that would require an immediate review of "unnecessary and burdensome regulations," public concern is no match for corporate dollars. Perhaps to justify this disregard, Trump and his advisers are keen to blame China. "Look, AI is very scary, but we absolutely have to win, because if we don't win, then China wins, and that is a very bad world," Trump told Horowitz and Andreessen. (They agreed.) Pitching AI as "a new geopolitical battlefield that must somehow be 'won,'" to quote Verity Harding, the former head of public policy at Google DeepMind, has become a convenient pretext for its unfettered development. Harding's new book, AI Needs You, an eminently readable examination of the debates over earlier transformational technologies and their resolutions, suggests -- perhaps a bit too hopefully -- that it doesn't have to be this way. Artificial intelligence, a broad category of computer programs that automate tasks that might otherwise require human cognition, is not new. 
It has been used for years to recommend films on Netflix, filter spam e-mails, scan medical images for cancer, play chess, and translate languages, among many other things, with relatively little public or political interest. That changed in November 2022, when OpenAI, a company that started as a nonprofit committed to developing AI for the common good, released ChatGPT, an application powered by the company's large language model. It and subsequent generative AI platforms, with their seemingly magical abilities to compose poetry, pass the bar exam, create pictures from words, and write code, captured the public imagination and ushered in a technological revolution. It quickly became clear, though, that the magic of generative AI could also be used to practice the dark arts: with the right prompt, it could explain how to make a bomb, launch a phishing attack, or impersonate a president. And it can be wildly yet confidently inaccurate, pumping out invented facts that seem plausibly like the real thing, as well as perpetuating stereotypes and reinforcing social and political biases. Generative AI is trained on enormous amounts of data -- the early models were essentially fed the entire Internet -- including copyrighted material that was appropriated without consent. That is bad enough and has led to a number of lawsuits, but, worse, once material is incorporated into a foundational model, the model can be prompted to write or draw "in the style of" someone, diluting the original creator's value in the marketplace. When, in May 2023, the Writers Guild of America went on strike, in part to restrict the use of AI-generated scripts, and was joined by the Screen Actors Guild two months later, it was a blatant warning to the rest of us that generative AI was going to change all manner of work, including creative work that might have seemed immune from automation because it is so fundamentally human and idiosyncratic. 
It also became apparent that generative AI is going to be extremely lucrative, not only for the billionaires of Silicon Valley, whose wealth has already more than doubled since Trump's 2017 tax cuts, but for the overall economy, potentially surpassing the economic impact of the Internet itself. By one account, AI will add close to $16 trillion to the global economy by 2030. OpenAI, having shed its early idealism, is, by the latest accounting, valued at $157 billion. Anthropic, a rival company founded by OpenAI alumni, is in talks to increase its valuation to $40 billion. (Amazon is an investor.) Meta, Google, and Microsoft, too, have their own chatbots, and Apple recently integrated AI into its newest phones. As the cognitive scientist Gary Marcus proclaims in his short but mighty broadside Taming Silicon Valley: How We Can Ensure That AI Works for Us, after ChatGPT was released, "almost overnight AI went from a research project to potential cash cow." Arguably, artificial intelligence's most immediate economic effect, and the most obvious reason it is projected to add trillions to the global economy, is that it will reduce or replace human labor. While it will take time for AI agents to be cheaper than human workers (because the cost of training is currently so high), a recent survey of chief financial officers conducted by researchers at Duke University and the Federal Reserve found that more than 60 percent of US companies plan to use AI to automate tasks currently done by people. In a study of 750 business leaders, 37 percent said AI technology had replaced some of their workers in 2023, and 44 percent reported that they expected to lay off employees this year due to AI. But in her new book, The Mind's Mirror: Risk and Reward in the Age of AI, written with Gregory Mone, the computer scientist Daniela Rus offers a largely sunny take on the digital future: "The long-term impact of automation on job loss is extremely difficult to predict, but we do know that AI does not automate jobs. AI and machine learning automate tasks -- and not every task, either." This is a semantic feint: tasks are what jobs are made of.
Goldman Sachs estimates that 300 million jobs globally will be lost or degraded by artificial intelligence. What does degraded mean? Contrary to Rus, who believes that technologies such as ChatGPT "will not eliminate writing as an occupation, yet they will undoubtedly alter many writing jobs," consider the case of Olivia Lipkin, a twenty-five-year-old copywriter at a San Francisco tech start-up. As she told The Washington Post, her assignments dropped off after the release of ChatGPT, and managers began referring to her as "Olivia/ChatGPT." Eventually her job was eliminated because, as noted in her company's internal Slack messages, using the bot was cheaper than paying a writer. "I was actually out of a job because of AI," she said. Lipkin is one person, but she represents a trend that has only just begun to gather steam. The outplacement firm Challenger, Gray and Christmas found that nearly four thousand US jobs were lost to AI in May 2023 alone. In many cases, workers are now training the technology that will replace them, either inadvertently, by modeling a given task -- i.e., writing ad copy that the machine eventually mimics -- or explicitly, by teaching the AI to see patterns, recognize objects, or flag the words, concepts, and images that the tech companies have determined to be off-limits. In Code Dependent: Living in the Shadow of AI, the journalist Madhumita Murgia documents numerous cases of people, primarily in the Global South, whose "work couches a badly kept secret about so-called artificial intelligence systems -- that the technology does not 'learn' independently, and it needs humans, millions of them, to power it." 
They include displaced Syrian doctors who are training AI to recognize prostate cancer, college graduates in Venezuela labeling fashion items for e-commerce sites, and young people in Kenya who spend hours each day poring over photographs, identifying the many objects that an autonomous car might encounter. Eventually the AI itself will be able to find the patterns in the prostate cancer scans and spot the difference between a stop sign and a yield sign, and the humans will be left behind. And then there is the other kind of degradation, the kind that subjects workers to horrific content in order to train artificial intelligence to recognize and reject it. At a facility in Kenya, Murgia found workers subcontracted by Meta who spend their days watching "bodies dismembered from drone attacks, child pornography, bestiality, necrophilia and suicides, filtering them out so that we don't have to." "I later discovered that many of them had nightmares for months and years," she writes: "Some were on antidepressants, others had drifted away from their families, unable to bear being near their own children any longer." The same kind of work was being done elsewhere for OpenAI. In some of these cases, workers are required to sign agreements that absolve the tech companies of responsibility for any mental health issues that arise in the course of their employment and forbid them from talking to anyone, including family members, about the work they do. It may be some consolation that tech companies are trying to keep the most toxic material out of their systems. But they have not prevented bad actors from using generative AI to inject venomous content into the public square. Deepfake technology, which can replace a person in an existing image with someone else's likeness or clone a person's voice, is already being used to create political propaganda. 
Recently the Trump patron Elon Musk posted on X, the social media site he owns, a manipulated video of Kamala Harris saying things she never said, without any indication that it was fake. Similarly, in the aftermath of Hurricane Helene, a doctored photo of Trump knee-deep in the floodwaters went viral. (The picture first appeared on Threads and was flagged by Meta as fake.) While deepfake technology can also be used for legitimate reasons, such as to create a cute Pepsi ad that Rus writes about, it has been used primarily to make nonconsensual pornography: of all the deepfakes found online in 2023, 98 percent were porn, and 99 percent of those depicted were women. For the most part, those who do not give permission for their likenesses to be used in AI-generated porn have no legal recourse in US courts. Though thirteen states currently have laws penalizing the creation or dissemination of sexually explicit deepfakes, there are no federal laws prohibiting the creation or consumption of nonconsensual pornography (unless it involves children). Section 230 of the Communications Decency Act, which has shielded social media companies from liability for what is published on their platforms, may also provide cover for companies whose technology is used to create this material. The European Union's AI Act, which was passed in the spring, has the most nuanced rules to curb malicious AI-generated content. But, as Murgia points out, trying to get nonconsensual images and videos removed from the Internet is nearly impossible. The EU AI Act is the most comprehensive legislation to address some of the more egregious harms of artificial intelligence. The European Commission first began exploring the possibility of regulating AI in the spring of 2021, and it took three years, scores of amendments, public comments, and vetting by numerous committees to get it passed. 
The act was almost derailed by lobbyists working on behalf of OpenAI, Microsoft, Google, and other tech companies, who spent more than 100 million euros in a single year trying to persuade the EU to make the regulations voluntary rather than mandatory. When that didn't work, Sam Altman, the CEO of OpenAI, who has claimed numerous times that he would like governments to regulate AI, threatened to pull the company's operations from Europe because he found the draft law too onerous. He did not follow through, but Altman's threat was a billboard-size announcement of the power that the tech companies now wield. As the political scientist Ian Bremmer warned in a 2023 TED Talk, the next global superpower may well be those who run the big tech companies: "These technology titans are not just men worth 50 or 100 billion dollars or more. They are increasingly the most powerful people on the planet, with influence over our futures. And we need to know: Are they going to act accountably as they release new and powerful artificial intelligence?" It's a crucial question. So far, tech companies have been resisting government-imposed guidelines and regulations, arguing instead for extrajudicial, voluntary rules. To support this position, they have trotted out the age-old canard that regulation stifles innovation and relied on conservative pundits like James Pethokoukis, a senior fellow at the American Enterprise Institute, for backup. The real "danger around AI is that overeager Washington policymakers will rush to regulate a fast-evolving technology," Pethokoukis wrote in an editorial in the New York Post. "We shouldn't risk slowing a technology with vast potential to make America richer, healthier, more militarily secure, and more capable of dealing with problems such as climate change and future pandemics." The tech companies are hedging their bets by engaging in a multipronged effort of regulatory capture. 
According to Politico, an organization backed by Silicon Valley billionaires and tied to leading artificial intelligence firms is funding the salaries of more than a dozen fellows in key congressional offices, across federal agencies and at influential think tanks. If they succeed, the fox will not only be guarding the henhouse -- the fox will have convinced legislators that this will increase the hens' productivity. Another common antiregulation stance masquerading as its opposite is the assertion -- like the one made by Michael Schwarz, Microsoft's chief economist, at last year's World Economic Forum Growth Summit -- that "we shouldn't regulate AI until we see some meaningful harm that is actually happening." (A more bizarre variant of this was articulated by Marc Andreessen on an episode of the podcast The Ben and Marc Show, when he said that he and Horowitz are not against regulation but believe it "should happen at the application level, not at the technology level...because to regulate at the technology level, then you're regulating math.") Those harms are already evident, of course, from AI-generated deepfakes to algorithmic bias to the proliferation of misinformation and cybercrime. Murgia writes about an algorithm used by police in the Netherlands that identifies children who may, in the future, commit a crime; another whose seemingly neutral dataset led to more health care for whites than Blacks because it used how much a person paid for health care as a proxy for their health care needs; and an AI-guided drone system deployed by the United States in Yemen that determined which people to kill based on certain predetermined patterns of behavior, not on their confirmed identities. Predictive systems, whose parameters are concealed by proprietary algorithms, are being used in an increasing number of industries, as well as by law enforcement and government agencies and throughout the criminal justice system. 
Typically, when machines decide to deny parole, reject an application for government benefits, or toss out the résumé of a job seeker, the rebuffed party has few, if any, remedies: How can they appeal to a machine that will always give them the same answer? There are also very real, immediate environmental harms from AI. Large language models have colossal carbon footprints. By one estimate, the carbon emissions resulting from the training of GPT-3 were the equivalent of those from a car driving the 435,000 or so miles to the moon and back, while for GPT-4 the footprint was three hundred times that. Rus cites a 2023 projection that if Google were to swap out its current search engine for a large language model, the company's "total electricity consumption would skyrocket, rivaling the energy appetite of a country like Ireland." Rus also points out that the amount of water needed to cool the computers used to train these models, as well as their data centers, is enormous. According to one study, it takes between 700,000 and two million liters of fresh water just to train a large language model, let alone deploy it. Another study estimates that a large data center requires between one million and five million gallons of water a day, or what's used by a city of 10,000 to 50,000 people. Microsoft, which has already integrated its chatbot, Copilot, into many of its business and productivity products, is looking to small modular nuclear reactors to power its ambitions. It's a long shot. No Western nation has begun building any of these small reactors, and in the US only one company has had its design approved, at a cost of $500 million. To come full circle, Microsoft is training an AI on documents relating to the licensing of nuclear power plants, in an effort to expedite the regulatory process. Not surprisingly, there is already opposition in communities where these new nuclear plants may be located. 
In the meantime, Microsoft has signed a deal with the operators of the Three Mile Island nuclear plant to bring the part of the facility that did not melt down in 1979 back online by 2028. Microsoft will purchase all of the energy created there for twenty years. No doubt Gary Marcus would applaud the EU AI Act and other attempts to hold the big tech companies to account, since he wrote his book as a call to action. "We can't realistically expect that those who hope to get rich from AI are going to have the interests of the rest of us close at heart," he writes. "We can't count on governments driven by campaign finance contributions to push back. The only chance at all is for the rest of us to speak up, really loudly." Marcus details the demands that citizens should make of their governments and the tech companies. They include transparency on how AI systems work; compensation for individuals if their data is used to train AIs and the right to consent to this use; and the ability to hold tech companies liable for the harms they cause by eliminating Section 230, imposing cash penalties, and passing stricter product liability laws, among other things. Marcus also suggests -- as does Rus -- that a new, AI-specific federal agency, akin to the FDA, the FCC, or the FTC, might provide the most robust oversight. As he told the Senate when he testified in May 2023: "The number of risks is large. The amount of information to keep up on is so much.... AI is going to be such a large part of our future and is so complicated and moving so fast...[we should consider having] an agency whose full-time job is to do this." It's a fine idea, and one that a Republican president who is committed to decimating the so-called administrative state would surely never implement. And after the Supreme Court's recent decision overturning the Chevron doctrine, Democratic presidents who try to create a new federal agency -- at least one with teeth -- will likely find the effort hamstrung by conservative jurists. 
That doctrine, established by the Court's 1984 decision in Chevron v. Natural Resources Defense Council, granted federal agencies the power to use their expertise to interpret congressional legislation. As a consequence, it gave the agencies and their nonpartisan civil servants considerable leeway in applying laws and making policy decisions. The June decision reverses this. In the words of David Doniger, one of the lawyers who argued the original Chevron case, "The net effect will be to weaken our government's ability to meet the real problems the world is throwing at us." A functional government, committed to safeguarding its citizens, might be keen to create a regulatory agency or pass comprehensive legislation, but we in the United States do not have such a government. In light of congressional dithering, regulatory capture, and a politicized judiciary, pundits and scholars have proposed other ways to ensure safe AI. Harding suggests that the Internet Corporation for Assigned Names and Numbers (ICANN), the international, nongovernmental group responsible for maintaining the Internet's core functions, might be a possible model for international governance of AI. While it's not a perfect fit, especially because AI assets are owned by private companies, and it would not have the enforcement mechanism of a government, a community-run body might be able, at least, to determine "the kinds of rules of the road that AI will need to adhere to in order to protect the future." In a similar vein, Marcus proposes the creation of something like the International Atomic Energy Agency or the International Civil Aviation Organization but notes that "we can't really expect international governance to work until we get national governance to work first." 
By far the most intriguing proposal has come from the Fordham law professor Chinmayi Sharma, who suggests that the way to ensure both the safety of AI and the accountability of its creators is to establish a professional licensing regime for AI engineers that would function in a similar way to medical licenses, malpractice suits, and the Hippocratic oath in medicine. "What if, like doctors," she asks in the Washington University Law Review, "AI engineers also vowed to do no harm?" Sharma's concept, were it to be adopted, would overcome the obvious obstacles currently stymieing effective governance: it bypasses the tech companies, it does not require a new government bureaucracy, and it is nimble. It would accomplish this, she writes, by establishing academic requirements at accredited universities; creating mandatory licenses to "practice" commercial AI engineering; erecting independent organizations that establish and update codes of conduct and technical practice guidelines; imposing penalties, suspensions or license revocations for failure to comply with codes of conduct and practice guidelines; and applying a customary standard of care, also known as a malpractice standard, to individual engineering decisions in a court of law. Professionalization, she adds, quoting the network intelligence analyst Angela Horneman, "would force engineers to treat ethics 'as both a software design consideration and a policy concern.'" Sharma's proposal, though unconventional, is no more or less aspirational than Marcus's call for grassroots action to curb the excesses of Big Tech or Harding's hope for an international, inclusive, community-run, nonbinding regulatory group. Were any of these to come to fruition, they would be likely targets of a Republican administration and its tech industry funders, whose ultimate goal, it seems, is a post-democracy world where they decide what's best for the rest of us. 
The danger of allowing them to set the terms of AI development now is that they will amass so much money and so much power that this will happen by default.

[2]

How to prevent millions of invisible law-free AI agents casually wreaking economic havoc

Shortly after the world tilted on its axis with the release of ChatGPT, folks in the tech community started asking each other: What is your p(doom)? That's nerd-speak for, what do you think is the probability that AI will destroy humanity? There was Eliezer Yudkowsky warning women not to date men who didn't think the odds were greater than 80%, as he believes. AI pioneers Yoshua Bengio and Geoffrey Hinton estimating 10-20 percent -- enough to get both to shift their careers from helping to build AI to helping to protect against it. And then another AI pioneer, Meta's chief AI scientist Yann LeCun, pooh-poohing concerns and putting the risk at less than .01% -- "below the chances of an asteroid hitting the earth." According to LeCun, current AI is dumber than a cat. The numbers are, of course, meaningless. They just stand in for wildly different imagined futures in which humans either manage or fail to maintain control over how AI behaves as it grows in capabilities. The true doomers see us inevitably losing an evolutionary race with a new species: AI "wakes up" one day with the goal of destroying humanity because it can and we're slow, stupid, and irrelevant. Big digital brains take over from us puny biological brains just like we took over the entire planet, destroying or dominating all other species. The pooh-poohers say, Look, we are building this stuff, and we won't build and certainly won't deploy something that could harm us. Personally, although I've been thinking about what happens if we develop really powerful AI systems for several years now, I don't lose sleep over the risk of rogue AI that just wants to squash us. I have found that people who do worry about this often have a red-in-tooth-and-claw vision of evolution as a ruthlessly Darwinian process according to which the more intelligent gobble up the less. But that's not the way I think about evolution, certainly not among humans. 
Among humans, evolution has mostly been about cultural group selection -- humans succeed when the groups they belong to succeed. We have evolved to engage in exchange -- you collect the berries, I'll collect the water. The extraordinary extent to which humans have outstripped all other species on the planet is a result of the fact that we specialize and trade, engage in shared defense and care. It begins with small bands sharing food around the fire and ultimately delivers us to the phenomenally complex global economy and geopolitical order we live in today. And how have we accomplished this? Not by relentlessly increasing IQ points but by continually building out the stability and adaptability of our societies. By enlarging our capacity for specialization and exchange. Our intelligence is collective intelligence, cooperative intelligence. And to sustain that collectivity and cooperation we rely on complex systems of norms and rules and institutions that give us each the confidence it takes to live in a world where we depend constantly on other people doing what they should. Bringing home the berries. Ensuring the water is drinkable. So, when I worry about where AI might take us, this is what I worry about: AI that messes up these intricate evolved systems of cooperation in the human collective. Because what our AI companies are furiously building is no longer well thought of as new tools for us to use in what we do. What they are bringing into being is a set of entirely new actors who will themselves start to "do." It is the implication of the autonomy of advanced AI that we need to come to grips with. Autonomy has been there from the Big Bang of modern AI in 2012 -- when Geoffrey Hinton and his students Ilya Sutskever and Alex Krizhevsky demonstrated the power of a technique for building software that writes itself. That's what machine learning is: Instead of a human programmer writing all the lines of code, the machine does it. 
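To make "software that writes itself" concrete, here is a minimal Python sketch. It is an illustration only, not drawn from the author and not how any production system is built. A human supplies a few labeled examples ("cat" or "dog") and an objective (agree with the human labels), and the program repeatedly nudges its own numeric parameters until it does. The two-number "pictures," the names `EXAMPLES`, `predict`, and `train`, and the choice of logistic regression trained by gradient descent are all assumptions made for the example. Real image classifiers are deep neural networks with vastly more parameters.

```python
import math
import random

# Hypothetical toy data: each "picture" is reduced to two made-up numeric
# features, and humans have supplied the labels (1 = "cat", 0 = "dog").
EXAMPLES = [
    ((0.9, 0.2), 1), ((0.8, 0.3), 1), ((0.7, 0.1), 1),
    ((0.2, 0.9), 0), ((0.3, 0.7), 0), ((0.1, 0.8), 0),
]


def predict(weights, bias, features):
    """Probability that the features belong to a 'cat' (logistic function)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, steps=2000, learning_rate=0.5, seed=0):
    """Turn the 'dials' (weights and bias) to reduce disagreement with human labels."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(2)]
    bias = 0.0
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for features, label in examples:
            # For log loss, the gradient with respect to z is (prediction - label).
            error = predict(weights, bias, features) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        n = len(examples)
        # Step each parameter a little way downhill on the average loss.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


weights, bias = train(EXAMPLES)
for features, label in EXAMPLES:
    guess = "cat" if predict(weights, bias, features) >= 0.5 else "dog"
    print(features, "->", guess)
```

The human wrote the learning procedure and the labels. The final values of `weights` and `bias`, which decide every answer, were found by the program itself.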
Humans write learning algorithms that give the machine an objective -- try to come up with labels for these pictures ("cat" "dog") that match the ones that humans give them -- and some mathematical dials and switches to fiddle with to get to the solution. But then the machine does the rest. This type of autonomy already generates a bunch of issues, which we've grappled with in AI since the start: Since the machine wrote the software, and it's pretty darn complicated, it's not easy for humans to unpack what it's doing. That can make it hard to predict or understand or fix. But we can still decide whether to use it; we're still very much in control. Today, however, the holy grail that is drawing billions of AI investment dollars is autonomy that goes beyond a machine writing its own code to solve a problem set for it by humans. AI companies are now aiming at autonomous AI systems that can use their code to go out and do things in the real world: buy or sell shares on the stock exchange, agree to contracts, execute maintenance routines on equipment. They are giving them tools -- access to the internet to do a search today, access to your bank account tomorrow. Our AI developers and investors are looking to create digital economic actors, with the capacity to do just about anything. When Meta CEO Mark Zuckerberg recently said that he expected the future to be a world with hundreds of millions if not billions of AI agents -- "more AI agents than there are people" -- he was imagining this. Artificial agents helping businesses manage customer relations, supply chains, product development, marketing, pricing. AI agents helping consumers manage their finances and tax filings and purchases. AI agents helping governments manage their constituents. That's the "general" in artificial general intelligence, or AGI. The goal is to be able to give an AI system a "general" goal and then set it loose to make and execute a plan. Autonomously. 
Mustafa Suleyman, now head of AI at Microsoft, imagined it this way: In this new world, an AI agent would be able to "make $1 million on a retail web platform in a few months with just a $100,000 investment." A year ago, he thought such a generally capable AI agent could be two years away. Pause for a moment and think about all the things this would entail: a piece of software with control over a bank account and the capacity to enter into contracts, engage in marketing research, create an online storefront, evaluate product performance and sales, and accrue a commercial reputation. For starters. Maybe it has to hire human workers. Or create copies of itself to perform specialized tasks. Obtain regulatory approvals? Respond to legal claims of contract breach or copyright violation or anticompetitive conduct? Shouldn't we have some rules in place for these newcomers? Before they start building and selling products on our retail web platforms? Because if one of our AI companies announces they have these agents ready to unleash next year, we currently have nothing in place. If someone wants to work in our economy, they have to show their ID and demonstrate they're authorized to work. If someone wants to start a business, they have to register with the secretary of state and provide a unique legal name, a physical address, and the name of the person who can be sued if the business breaks the rules. But an AI agent? Currently they can set up shop wherever and whenever they like. Put it this way: If that AI agent sold you faulty goods, made off with your deposit, stole your IP, or failed to pay you, who are you going to sue? How are all the norms and rules and institutions that we rely on to make all those complex trades and transactions in the modern economy function going to apply? Who is going to be accountable for the actions of this autonomous AI agent? The naïve answer is: whoever unleashed the agent. But there are many problems here. 
First, how are you going to find the person or business who set the AI agent on its path, possibly, according to Suleyman, a few months ago? Maybe the human behind the agent has disclosed their identity and contact information at every step along the way. But maybe they haven't. We don't have any specific rules in place yet requiring humans to disclose like this. I'm sure smart lawyers will be looking for such rules in our existing law, but smart scammers, and aggressive entrepreneurs, will be looking for loopholes too. Even if you can trace every action by an AI agent back to a human you can sue, it's probably not cheap or easy to do so. And when legal rules are more expensive to enforce, they are less often enforced. We get less deterrence of bad behavior and distortion in our markets. And suppose you do find the human behind the machine. Then what? The vision, remember, is one of an AI agent capable of taking a general instruction -- go make a million bucks! -- and then performing autonomously with little to no further human input. Moseying around the internet, making deals, paying bills, producing stuff. What happens when the agent starts messing up or cheating? These are agents built on machine learning: Their code was written by a computer. That code is largely uninterpretable and unpredictable -- there are literally billions of mathematical dials and switches being used by the machine to decide what to do next. When Microsoft's Bing chatbot, built on OpenAI's technology, goes bananas and starts telling a New York Times journalist it's in love with him and he should leave his wife, we have no idea why. Or what it might do if it now has access to bank accounts and email systems and online platforms. This is known as the alignment problem, and it is still almost entirely unsolved. 
So, here's my prediction if you sue the human behind the AI agent when you find them: The legal system will have a very hard time holding them responsible for everything the agent does, however alien, weird, or unpredictable that behavior may be. Our legal systems hold people responsible for what they should have known better to avoid. They are rife with concepts like foreseeability and reasonableness. We just don't hold people or businesses responsible very often for stuff they had no way of anticipating or preventing. And if we do want to hold them responsible for stuff they never imagined, then we need clear laws to do that. That's why the existential risk that keeps me up at night is what seems to be the very real and present risk that we'll have a flood of AI agents joining our economic systems with essentially zero legal infrastructure in place. Effectively no rules of the road -- indeed, no roads -- to ensure we don't end up in chaos. And I do mean chaos -- in the sense of butterfly wings flapping in one region of the world and hurricanes happening elsewhere. AI agents won't just be interacting with humans; they'll be interacting with other AI agents. And creating their own sub-agents to interact with other sub-agents. This is the problem my lab works on -- multi-agent AI systems, with their own dynamics at a level of complexity beyond just the behavior of a Gemini or GPT-4 or Claude. Multi-agent dynamics are also a major gap in our efforts to plug the hole of risks from frontier models: Red-teaming efforts to make sure that our most powerful language models can't be prompted into helping someone build a bomb do not go near the question of what happens in multi-agent systems. What might happen when there are hundreds, thousands, millions of humans and AI agents interacting in transactions that can affect the stability of the global economy or geopolitical order? We have trouble predicting what human multi-agent systems -- economies, political regimes -- will produce. 
We know next to nothing about what happens when you introduce AI agents into our complex economic systems. But we do know something about how to set the ground rules for complex human groups. And we should be starting there with AI. Number one: Just as we require workers to have verifiable ID and a social security number to get a job, and just as we require companies to register a unique identity and legally effective address to do business, we should require registration and identification of AI agents set loose to autonomously participate in transactions online. And just as we require employers and banks to verify the registration of the people they hire and the businesses they open bank accounts for, we should require anyone transacting with an AI agent to verify their ID and registration. This is a minimal first step. It requires creating a registration scheme and some verification infrastructure. And answering some thorny questions about what counts as a distinct "instance" of an AI agent (since software can be freely replicated and effectively be in two -- multiple -- places at once and an agent could conceivably "disappear" and "reconstitute" itself). That's what law does well: create artificial boundaries around things so we can deal with them. (Like requiring a business to have a unique name that no one else has registered, and protecting trademarks that could otherwise be easily copied. Or requiring a business or partnership to formally dissolve to "disappear.") It's eminently doable. The next step can go one of two ways, or both. The reason to create legally recognizable and persistent identities is to enable accountability -- read: someone to sue when things go wrong. A first approach on this would be to require any AI agent to have an identified person or business who is legally responsible for its actions -- and for that person or business to be disclosed in any AI transaction. 
States usually require an out-of-state business to publicly designate an in-state person or entity who can be sued in local courts in the event the business breaches a contract or violates the law. We could establish a similar requirement for an AI agent "doing business" in the state. But we may have to go further and make AI agents directly legally responsible for their actions. Meaning you could sue the AI agent. This may sound strange at first. But we already make artificial "persons" legally responsible: Corporations are "legal persons" that can sue and be sued in their own "name." No need to track down the shareholders or directors or managers. And we have a whole legal apparatus around how these artificial persons must be constituted and governed. They have their own assets -- which can be used to pay fines and damages. They can be ordered by courts to do or not do things. They can be stopped from doing business altogether if they engage in illegal or fraudulent activity. Creating distinct legal status for AI agents -- including laws about requiring them to have sufficient assets to pay fines or damages as well as laws about how they are "owned" and "managed" -- is probably something we'd have to do in a world with "more AI agents than people." How far away is this world? To be honest, we -- the public -- really don't know. Only the companies who are furiously investing billions into building AI agents -- Google, OpenAI, Meta, Anthropic, Microsoft -- know. And our current laws allow them to keep this information to themselves. And that's why there's another basic legal step we should be taking, now. Even before we create registration schemes for AI agents, we should create registration requirements for our most powerful AI models, as I've proposed (in brief and in detail) with others. That's the only way for governments to gain the kind of visibility we need to even know where to start on making sure we have the right legal infrastructure in place. 
If we're getting a whole slew of new alien participants added to our markets, shouldn't we have the same capacity to know who they are and make them follow the rules as we do with humans? We shouldn't just be relying on p-doom fights on X between technologists and corporate insiders about whether we are a few years, or a few decades, or a few hundred years away from these possibilities.


An exploration of the complex landscape surrounding AI development, including political implications, economic impacts, and societal concerns, highlighting the need for responsible innovation and regulation.

The Political Landscape of AI

The intersection of artificial intelligence and politics has become increasingly prominent, with significant implications for future governance. The Republican Party's platform, whose crypto and AI planks help explain why venture capitalists Ben Horowitz and Marc Andreessen now back Donald Trump, signals a stark shift toward AI and cryptocurrency development with minimal regulation [1]. The AI plank, which Horowitz says Trump wrote himself, pledges to repeal President Biden's executive order on AI and to support development "rooted in Free Speech and Human Flourishing," in contrast to the current administration's approach.

Public Opinion vs. Corporate Interests

Despite this political positioning, public sentiment tells a different story. According to Ipsos surveys, more than 70% of Americans, across party lines, support establishing standards to ensure AI safety [1]. Furthermore, 83% say they do not trust the companies developing AI systems to do so responsibly. This gap between public concern and political action underscores the influence of corporate interests in shaping AI policy.

The Global AI Race

The narrative of AI development as a "geopolitical battlefield" against China has gained traction and serves as a justification for rapid, unregulated advancement [1]. This framing, however, may oversimplify the complex challenges and ethical considerations surrounding AI development.

The Evolution and Impact of AI

Artificial intelligence has moved from specialized applications to more general-purpose tools, exemplified by the release of ChatGPT in November 2022 [1]. While these advances offer remarkable capabilities, they also present significant risks, including misuse to create harmful content and the perpetuation of biases.

Economic and Labor Implications

The rise of generative AI has sparked concerns about its impact on various industries, particularly creative fields. The 2023 Writers Guild of America strike, partly motivated by concerns over AI-generated scripts, highlights the growing anxiety about AI's role in reshaping the job market [1].

The Debate on AI's Future Impact

The tech community remains divided on the potential long-term consequences of AI development. While some, like Eliezer Yudkowsky, warn of existential risks, others, such as Yann LeCun, downplay these concerns [2]. This divergence of opinion reflects the uncertainty surrounding AI's future trajectory.

Autonomous AI Agents and Economic Implications

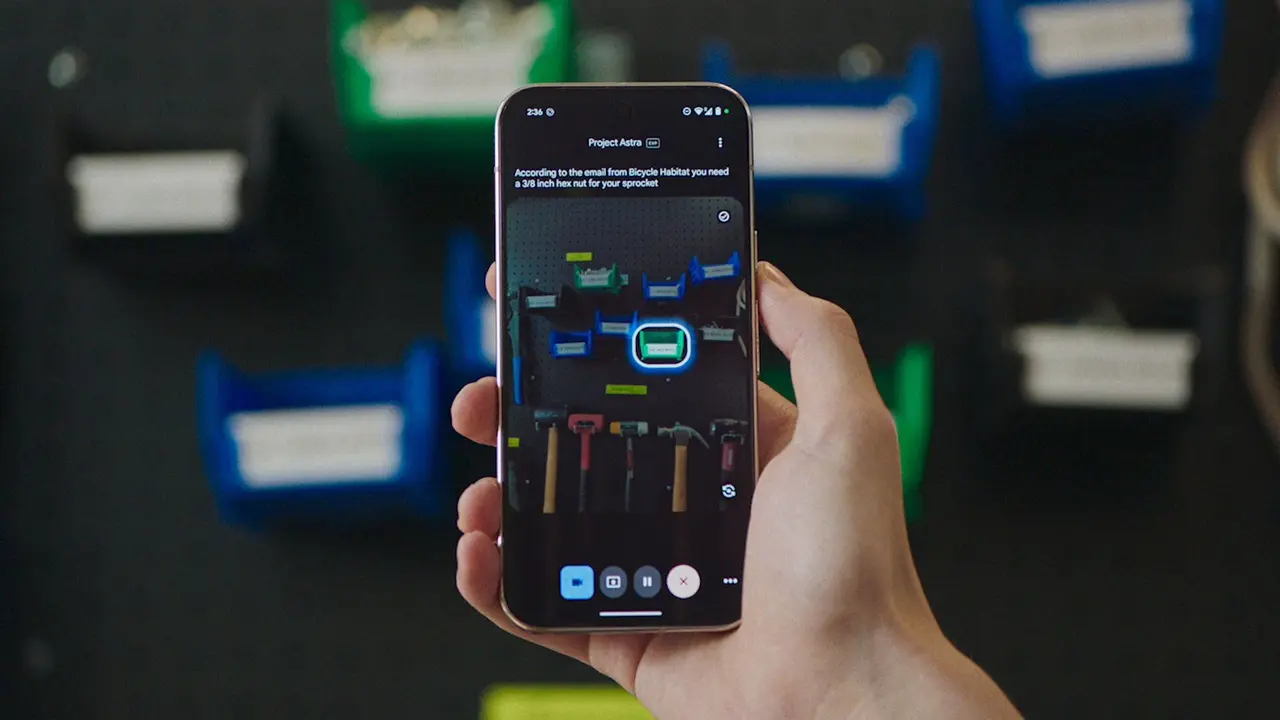

The development of autonomous AI agents capable of performing complex tasks independently is becoming a focal point for AI companies. Mark Zuckerberg's vision of a world with "more AI agents than there are people" underscores how dramatically AI could reshape economic interactions and decision-making [2].

The Need for Responsible Development

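As AI continues to advance, maintaining human control and oversight becomes paramount. The norms, rules, and institutions that underpin human cooperation do not yet account for autonomous AI agents; proposed first steps include registering and identifying AI agents that transact online, requiring a legally responsible person or business behind each agent, and registering the most powerful AI models so that governments can see what is being built [2].

Conclusion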

The rapid advancement of AI technology presents both unprecedented opportunities and significant challenges. Balancing innovation with responsible development and regulation will be crucial in harnessing AI's potential while mitigating its risks. As the debate continues, the need for informed public discourse and thoughtful policy-making becomes increasingly evident in shaping the future of AI and its impact on society.

References

Summarized by

Navi

[1]
