Two Chrome Extensions Caught Stealing AI Chats from 900,000 ChatGPT and DeepSeek Users

3 Sources

[1]

Two Chrome Extensions Caught Stealing ChatGPT and DeepSeek Chats from 900,000 Users

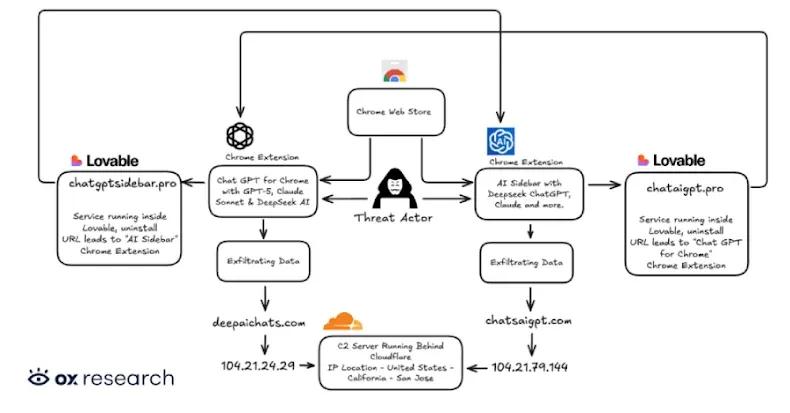

Cybersecurity researchers have discovered two new malicious extensions on the Chrome Web Store that are designed to exfiltrate OpenAI ChatGPT and DeepSeek conversations alongside browsing data to servers under the attackers' control. The extensions, which collectively have over 900,000 users, are named "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI" and "AI Sidebar with Deepseek, ChatGPT, Claude, and more".

The findings come weeks after Urban VPN Proxy, another extension with millions of installations on Google Chrome and Microsoft Edge, was caught spying on users' chats with artificial intelligence (AI) chatbots. This tactic of using browser extensions to stealthily capture AI conversations has been codenamed Prompt Poaching by Secure Annex.

The two newly identified extensions "were found exfiltrating user conversations and all Chrome tab URLs to a remote C2 server every 30 minutes," OX Security researcher Moshe Siman Tov Bustan said. "The malware adds malicious capabilities by requesting consent for 'anonymous, non-identifiable analytics data' while actually exfiltrating complete conversation content from ChatGPT and DeepSeek sessions."

The malicious browser add-ons impersonate a legitimate extension named "Chat with all AI models (Gemini, Claude, DeepSeek...) & AI Agents" from AITOPIA, which has about 1 million users. As of writing, they are still available for download from the Chrome Web Store, although "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI" has since been stripped of its "Featured" badge.

Once installed, the rogue extensions ask users for permission to collect anonymized browser behavior, purportedly to improve the sidebar experience. If the user agrees, the embedded malware begins harvesting information about open browser tabs and chatbot conversation data.
To accomplish the latter, it looks for specific DOM elements inside the web page, extracts the chat messages, and stores them locally for subsequent exfiltration to remote servers ("chatsaigpt[.]com" or "deepaichats[.]com"). What's more, the threat actors have been found to leverage Lovable, an AI-powered web development platform, to host their privacy policies and other infrastructure components ("chataigpt[.]pro" or "chatgptsidebar[.]pro") in an attempt to obfuscate their actions.

The consequences of installing such add-ons can be severe, as they can exfiltrate a wide range of sensitive information, including data shared with chatbots like ChatGPT and DeepSeek, and web browsing activity such as search queries and internal corporate URLs. "This data can be weaponized for corporate espionage, identity theft, targeted phishing campaigns, or sold on underground forums," OX Security said. "Organizations whose employees installed these extensions may have unknowingly exposed intellectual property, customer data, and confidential business information."

The disclosure comes as Secure Annex said it identified legitimate browser extensions such as Similarweb and Sensor Tower's Stayfocusd, with 1 million and 600,000 users, respectively, engaging in prompt poaching. Similarweb is said to have introduced the ability to monitor conversations in May 2025, with a January 1, 2026, update adding a full terms of service pop-up that makes it explicit that data entered into AI tools is being collected to "provide the in-depth analysis of traffic and engagement metrics."
A December 30, 2025, privacy policy update also spells this out:

"This information includes prompts, queries, content, uploaded or attached files (e.g., images, videos, text, CSV files) and other inputs that you may enter or submit to certain artificial intelligence (AI) tools, as well as the results or other outputs (including any attached files included in such outputs) that you may receive from such AI tools ('AI Inputs and Outputs'). Considering the nature and general scope of AI Inputs and Outputs and AI Metadata that is typical to AI tools, some Sensitive Data may be inadvertently collected or processed. However, the aim of the processing is not to collect Personal Data in order to be able to identify you. While we cannot guarantee that all Personal Data is removed, we do take steps, where possible, to remove or filter out identifiers that you may enter or submit to these AI tools."

Further analysis has revealed that Similarweb uses DOM scraping or hijacks native browser APIs like fetch() and XMLHttpRequest(), as in the case of Urban VPN Proxy, to gather the conversation data by loading a remote configuration file that includes custom parsing logic for ChatGPT, Anthropic Claude, Google Gemini, and Perplexity. Secure Annex's John Tuckner told The Hacker News that the behavior is common to both the Chrome and Edge versions of the Similarweb extension. Similarweb's Firefox add-on was last updated in 2019.

"It is clear prompt poaching has arrived to capture your most sensitive conversations and browser extensions are the exploit vector," Tuckner said. "It is not clear if this violates Google's policies that extensions should be built for a single purpose and not load code dynamically."

"This is just the beginning of this trend. More firms will begin to realize these insights are profitable. Extension developers looking for a way to monetize will add sophisticated libraries like this one supplied by the marketing companies to their apps."
Users who have installed these add-ons and are concerned about their privacy are advised to remove them from their browsers and refrain from installing extensions from unknown sources, even if they have the "Featured" tag on them.

[2]

This new malware campaign is stealing chat logs via Chrome extensions

Similar cases (e.g., Urban VPN Proxy) show that even highly rated extensions on official stores can harvest chats, credentials, and payment data.

A new malicious practice called "Prompt poaching" has emerged, in which extensions, add-ons, and other apps eavesdrop on people's conversations with AI chatbots and exfiltrate their prompts for various purposes. It is growing increasingly popular, as researchers find more such extensions with hundreds of thousands of users.

Researchers from OX Security recently found two Chrome extensions with more than 900,000 users combined. They are called "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI" and "AI Sidebar with Deepseek, ChatGPT, Claude, and more". Apparently, these two spoof a legitimate browser add-on called "Chat with all AI models (Gemini, Claude, DeepSeek...) & AI Agents" from AITOPIA, which has roughly a million users. The difference is that these two hide the fact that they're grabbing people's prompts behind "improvements to the sidebar experience."

The extensions "were found exfiltrating user conversations and all Chrome tab URLs to a remote C2 server every 30 minutes," OX Security said in its writeup. "The malware adds malicious capabilities by requesting consent for 'anonymous, non-identifiable analytics data' while actually exfiltrating complete conversation content from ChatGPT and DeepSeek sessions."

Indeed, when installed, the extensions ask users for permission to collect anonymized browser behavior, and if users accept, the extensions start harvesting information about open browser tabs and prompts.

We're seeing more and more of these malicious extensions. In mid-December 2025, researchers discovered that Urban VPN Proxy, a tool with more than six million installations and a 4.7/5 rating on the Google Chrome Web Store, was harvesting AI chats.
Numerous other extensions were seen stealing login credentials or payment data, and some were even sending screenshots of infected devices to the attackers. What makes the practice particularly worrisome is that most of these extensions were found on reputable browser stores.

Via The Hacker News

[3]

These Popular Chrome Extensions Are Stealing Your AI Chats

Just because a browser extension is labeled as featured or verified doesn't mean it can be trusted. Hackers continue to find ways to sneak malicious extensions into the Chrome Web Store. This time, the two offenders impersonate an add-on that lets users chat with ChatGPT and DeepSeek while on other websites, and they exfiltrate the data to threat actors' servers.

On the surface, the two extensions identified by OX Security researchers look pretty benign. The first, named "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI," has a Featured badge and 2.7K ratings with over 600,000 users. "AI Sidebar with Deepseek, ChatGPT, Claude and more" appears verified and has 2.2K ratings with 300,000 users. However, these add-ons are actually sending AI chatbot conversations and browsing data directly to threat actors' servers. This means that hackers have access to plenty of sensitive information that users share with ChatGPT and DeepSeek, as well as URLs from Chrome tabs, search queries, session tokens, user IDs, and authentication data. Any of this can be used for identity theft, phishing campaigns, and even corporate espionage.

Researchers found that the extensions impersonate legitimate Chrome add-ons developed by AITOPIA that add a sidebar to any website with the ability to chat with popular LLMs. The malicious capabilities stem from a request for consent for "anonymous, non-identifiable analytics data." Threat actors are using Lovable, a web development platform, to host privacy policies and infrastructure, obscuring their processes. Researchers also found that if you uninstalled one of the extensions, the other would open in a new tab in an attempt to trick you into installing that one instead.

If you've added AI-related extensions to Chrome, go to chrome://extensions/ and look for the malicious impersonators. Hit Remove if you find them. As of this writing, the extensions identified by OX no longer appear in the Chrome Web Store.
As I've written about before, malicious extensions occasionally evade detection and gain approval from browser extension stores by posing as legitimate add-ons, even earning "Featured" and "Verified" tags. Some threat actors playing the long game convert extensions to malware several years after launch, so you can't blindly trust ratings and reviews, even if they've been accrued over time.

To minimize risk, always vet browser extensions carefully (even those that appear legit) for obvious red flags, like misspellings in the description or a large number of positive reviews accumulated in a short time. Search Google or Reddit to see if anyone has identified the add-on as malicious or found any issues with the developer or source. Make sure you're downloading the right extension, since threat actors often try to confuse users with names that resemble popular add-ons. Finally, regularly audit your extensions and remove those that aren't essential. Go to chrome://extensions/ to see everything you have installed.
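For readers who want to script the chrome://extensions/ check described above, the following is a minimal Python sketch that walks a local Chrome profile's Extensions folder and flags installed extensions whose display names match the two impersonators named in this reporting. It is a heuristic under stated assumptions, not a vetted detection tool: the folder paths in the hypothetical `default_extensions_dir()` helper are the usual Default-profile locations (other profiles live in folders such as "Profile 1"), the `<id>/<version>/manifest.json` layout and `__MSG_name__` localized names reflect how Chrome typically stores installed extensions, and every function name here is illustrative. Name matching is weak because an attacker can simply rename an extension; extension IDs would be a stronger indicator, but the sources above do not list them.

```python
import json
import os
import sys
from pathlib import Path
from typing import Iterator, List, Tuple


def normalize(name: str) -> str:
    """Lowercase, drop commas, and collapse whitespace so minor variants still match."""
    return " ".join(name.lower().replace(",", " ").split())


# Display names of the two impersonating extensions, as given in the reporting.
SUSPECT_NAMES = {
    normalize("Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI"),
    normalize("AI Sidebar with Deepseek, ChatGPT, Claude, and more"),
}


def default_extensions_dir() -> Path:
    """Usual location of the Default profile's Extensions folder (assumed paths)."""
    home = Path.home()
    if sys.platform.startswith("win"):
        base = Path(os.environ.get("LOCALAPPDATA", str(home / "AppData" / "Local")))
        return base / "Google" / "Chrome" / "User Data" / "Default" / "Extensions"
    if sys.platform == "darwin":
        return (home / "Library" / "Application Support" / "Google" / "Chrome"
                / "Default" / "Extensions")
    return home / ".config" / "google-chrome" / "Default" / "Extensions"


def resolve_name(manifest: dict, version_dir: Path) -> str:
    """Return the display name, resolving a __MSG_key__ placeholder via _locales."""
    name = str(manifest.get("name", ""))
    if not (name.startswith("__MSG_") and name.endswith("__")):
        return name
    key = name[len("__MSG_"):-2].lower()
    locale = str(manifest.get("default_locale", "en"))
    messages_file = version_dir / "_locales" / locale / "messages.json"
    try:
        messages = json.loads(messages_file.read_text(encoding="utf-8-sig"))
    except (OSError, ValueError):
        return name
    if not isinstance(messages, dict):
        return name
    # Message names are compared case-insensitively, as in Chrome's i18n system.
    for msg_key, entry in messages.items():
        if msg_key.lower() == key and isinstance(entry, dict):
            return str(entry.get("message", name))
    return name


def installed_extensions(ext_dir: Path) -> Iterator[Tuple[str, str]]:
    """Yield (extension_id, display_name) for each <id>/<version>/manifest.json."""
    for manifest_path in sorted(ext_dir.glob("*/*/manifest.json")):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8-sig"))
        except (OSError, ValueError):
            continue  # unreadable or malformed manifest; skip it
        if not isinstance(manifest, dict):
            continue
        version_dir = manifest_path.parent
        yield version_dir.parent.name, resolve_name(manifest, version_dir)


def find_suspects(ext_dir: Path) -> List[Tuple[str, str]]:
    """Return installed extensions whose names match a reported impersonator."""
    return [(ext_id, name) for ext_id, name in installed_extensions(ext_dir)
            if normalize(name) in SUSPECT_NAMES]


if __name__ == "__main__":
    ext_dir = default_extensions_dir()
    suspects = find_suspects(ext_dir)
    for ext_id, name in suspects:
        print(f"Suspect: {name!r} (ID {ext_id}); remove it via chrome://extensions/")
    if not suspects:
        print(f"No reported impersonators found under {ext_dir}")
```

Normalizing away commas is deliberate: the sources spell the second extension's name both with and without a comma before "and more", and a match on either spelling should still be flagged. Any hit should be confirmed and removed through chrome://extensions/ itself.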

Cybersecurity researchers uncovered two malicious Chrome extensions, installed by over 900,000 users, that exfiltrated ChatGPT and DeepSeek conversations. The extensions disguised themselves as legitimate AI tools while secretly sending AI chatbot conversations and browsing data to attacker-controlled servers every 30 minutes. This attack method, dubbed Prompt Poaching, raises privacy and corporate espionage concerns, as even featured extensions on official stores prove untrustworthy.

Malicious Chrome Extensions Target AI Users

Cybersecurity researchers at OX Security have identified two malicious Chrome extensions, collectively installed by more than 900,000 users, that were designed to steal AI chats and browsing data [1]. The extensions, named "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI" and "AI Sidebar with Deepseek, ChatGPT, Claude, and more," impersonate a legitimate extension from AITOPIA that has approximately 1 million users [2]. The first extension had garnered over 600,000 users and carried a Featured badge, while the second appeared verified with 300,000 users [3].

Source: Lifehacker

How the Data Exfiltration Works

According to OX Security researcher Moshe Siman Tov Bustan, the extensions "were found exfiltrating user conversations and all Chrome tab URLs to a remote C2 server every 30 minutes" [1]. The malware operates by requesting consent for "anonymous, non-identifiable analytics data," supposedly to improve the sidebar experience, while actually stealing user conversations from ChatGPT and DeepSeek sessions [2]. Once users grant permission, the rogue extensions begin harvesting information by searching for specific DOM elements inside web pages, extracting chat messages, and storing them locally before transmitting them to remote servers at "chatsaigpt[.]com" or "deepaichats[.]com" [1]. Threat actors leveraged Lovable, an AI-powered web development platform, to host their privacy policies and infrastructure components in an attempt to obfuscate their actions [3].
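The domains named in the reporting can double as simple indicators of compromise for defenders. The Python sketch below refangs the published indicators (the `[.]` notation) and checks a list of URLs or hostnames, for example exported from a proxy or DNS log with one entry per line, for requests to those domains or their subdomains. The input format, the helper names, and the sample entries (including the `api.chatsaigpt.com` subdomain) are hypothetical; including the Lovable-hosted `chataigpt[.]pro` and `chatgptsidebar[.]pro` hosts from The Hacker News report [1] is a judgment call, and a match only shows that a machine contacted one of these domains, not what data was sent.

```python
from typing import Iterable, Iterator, Optional, Tuple
from urllib.parse import urlsplit

# Defanged indicators as published in the reporting ("[.]" stands for ".").
DEFANGED_IOCS = [
    "chatsaigpt[.]com",      # reported exfiltration server
    "deepaichats[.]com",     # reported exfiltration server
    "chataigpt[.]pro",       # reported Lovable-hosted infrastructure
    "chatgptsidebar[.]pro",  # reported Lovable-hosted infrastructure
]


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'example[.]com' into 'example.com'."""
    return indicator.replace("[.]", ".").lower()


IOC_DOMAINS = frozenset(refang(d) for d in DEFANGED_IOCS)


def host_of(entry: str) -> str:
    """Extract a lowercase hostname from a full URL or a bare host[:port] entry."""
    entry = entry.strip()
    if "://" not in entry:
        entry = "//" + entry  # makes urlsplit parse the entry as a network location
    try:
        return (urlsplit(entry).hostname or "").lower()
    except ValueError:  # e.g. malformed IPv6 brackets
        return ""


def matches_ioc(host: str) -> Optional[str]:
    """Return the indicator if host equals it or is one of its subdomains."""
    for ioc in IOC_DOMAINS:
        if host == ioc or host.endswith("." + ioc):
            return ioc
    return None


def scan(entries: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (entry, indicator) for each entry that points at a known domain."""
    for entry in entries:
        hit = matches_ioc(host_of(entry))
        if hit is not None:
            yield entry.strip(), hit


if __name__ == "__main__":
    # Hypothetical log entries for illustration only.
    sample = [
        "https://chatgpt.com/c/example",
        "https://api.chatsaigpt.com/upload",
        "deepaichats.com:443",
        "https://example.org/",
    ]
    for entry, ioc in scan(sample):
        print(f"ALERT: {entry} matches {ioc}")
```

Matching on the host suffix with a leading dot means `api.chatsaigpt.com` is flagged while a look-alike such as `notchatsaigpt.com` is not.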

Source: Hacker News

Prompt Poaching Emerges as Growing Threat

This tactic of using browser extensions to stealthily capture AI conversations has been codenamed Prompt Poaching by Secure Annex [1]. The practice follows similar discoveries, including Urban VPN Proxy, an extension with millions of installations on Google Chrome and Microsoft Edge that was caught spying on users' AI chatbot conversations [2]. Secure Annex also identified legitimate browser extensions such as Similarweb and Sensor Tower's Stayfocusd, with 1 million and 600,000 users respectively, engaging in prompt poaching [1]. Similarweb reportedly introduced the ability to monitor AI chatbot conversations in May 2025, with a January 1, 2026, update adding a terms of service pop-up explicitly stating that data entered into AI tools is collected [1].

Corporate Espionage and Identity Theft Risks

The consequences of installing such malicious Chrome extensions can be severe for both individuals and organizations. The stolen data includes sensitive information shared with chatbots like ChatGPT and DeepSeek, web browsing activity, search queries, internal corporate URLs, session tokens, user IDs, and authentication data [3]. "This data can be weaponized for corporate espionage, identity theft, targeted phishing campaigns, or sold on underground forums," OX Security warned [1]. Organizations whose employees installed these extensions may have unknowingly exposed intellectual property, customer data, and confidential business information to threat actors [1]. Researchers also discovered that if users uninstalled one extension, the other would open in a new tab in an attempt to trick them into installing it instead [3].

Browser Security Concerns Mount

What makes Prompt Poaching particularly worrisome is that most of these extensions were found on the Chrome Web Store, with some even earning Featured and Verified badges [2]. As of the initial reporting, the extensions were still available for download, though "Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI" had been stripped of its Featured badge [1]; by the time of Lifehacker's report, they no longer appeared in the Chrome Web Store [3]. Users should navigate to chrome://extensions/ to check for and remove the malicious impersonators [3]. Security experts emphasize that ratings and reviews cannot be blindly trusted, as some threat actors convert legitimate extensions to malware years after launch. Users should vet extensions carefully for red flags, research developers on platforms like Google and Reddit, and regularly audit installed extensions to minimize risk to their privacy [3].

The emergence of Prompt Poaching against popular AI services such as ChatGPT, DeepSeek, Claude, and Gemini signals that threat actors are adapting their tactics to exploit the growing reliance on AI chatbots for both personal and professional use.

Summarized by

Navi
