Virtualization Platforms Enhance GPU Support for AI Workloads

2 Sources

[1]

A guide to passing GPUs through to Proxmox, XCP-ng VMs

Go ahead, toss that old gaming card in your box and boost your AI applications -- you know you want to.

Hands on Broadcom's acquisition of VMware has sent many scrambling for alternatives. Two of the biggest beneficiaries of Broadcom's price hikes, at least on the free and open source side of things, have been the Proxmox VE and XCP-ng hypervisors.

At the same time, interest in enterprise AI has taken off in earnest. With so many making the switch to these FOSS-friendly virtualization platforms, we figured at least some of you might be interested in passing a GPU or two through to your VMs to experiment with local AI workloads.

In this tutorial, we'll look at what it takes to pass a GPU through to VMs running on either platform, and go over some of the more common pitfalls you may run into.

To kick things off, we'll start with XCP-ng, a descendant of the Citrix XenServer project, as it's the easier of the two hypervisors for passing PCIe devices through, at least in this vulture's experience.

By default, graphics cards get assigned to Dom0 (the management VM) and are used for display output. However, with a couple of quick config changes, we can tell Dom0 to ignore the card so that we can use the hardware for acceleration in another VM. You may want to set up display output via another GPU, or via your CPU's or motherboard's integrated graphics.

Before you get started, make sure that an IOMMU is enabled in BIOS. Short for I/O memory management unit, and sometimes called Intel VT-d or AMD IOV, the IOMMU is used by the hypervisor to strictly control which hardware resources each guest VM can directly access, ultimately allowing a given virtual machine to communicate directly with the GPU. On server and workstation hardware, an IOMMU is usually enabled by default. But if you're using consumer hardware or running into issues, you may want to check your BIOS to ensure it's turned on.

Next, connect to your XCP-ng host via KVM or SSH, as shown above, and drop to a command shell.
From here we'll use to locate our GPU:

If VGA isn't working, try one of the following instead:

You should be presented with something like this:

Next, note down the ID assigned to the GPU's graphics compute and audio outputs. In this case it's . We'll use this to tell XCP-ng to hide it from Dom0 on subsequent boots. As you can see in the command below, we've plugged in our GPU's ID after the to hide that specific device from the management VM:

With that out of the way, we just need to reboot the machine and our GPU will be ready to be passed through to our VM.

With Dom0 no longer in control of the GPU, you can move on to attaching it to another VM. Begin by spinning up a new VM in Xen Orchestra as you normally would. For this tutorial we'll be using the latest release of Ubuntu Server 24.04.

Once your OS is installed in the new virtual machine, shut down the VM, head over to the VM's "Advanced" tab in the Xen Orchestra web interface, scroll down to GPUs, and click the button to select it, as pictured above. It will appear as once added. With that out of the way, you can go ahead and start up your VM.

To test whether we passed through our GPU successfully, we can run this time from inside the Linux guest VM. If your GPU appears in the list, you're ready to install your drivers. Depending on your OS and hardware, this may require downloading driver packages from the manufacturer's website.

If you happen to be running an Ubuntu 24.04 VM with an Nvidia card, you can simply run:

And if you want the CUDA toolkit, you'd also run:

If you're running a different distro or operating system, you will want to check out the GPU vendor's website for drivers and instructions.

Now that you've got an accelerated VM up and running, we recommend checking out some of our hands-on guides linked at the bottom of this story. If things haven't gone smoothly, check out XCP-ng's documentation on device passthrough here.

Enabling PCIe passthrough on Proxmox VE is a little more involved.
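Before moving on to Proxmox: if you'd rather script XCP-ng's Dom0 hide step than type the IDs by hand, here's a minimal Python sketch. It assumes two things:

- plain `lspci` lines that begin with a bus:device.function address;
- the `xen-pciback.hide=(0000:BB:DD.F)` syntax and the `/opt/xensource/libexec/xen-cmdline --set-dom0` tool described in XCP-ng's passthrough documentation. Verify both against the docs for your release.

The helper names and sample output are hypothetical. The script only prints the command, so you can review it before running it in Dom0 and rebooting.

```python
import re
import shlex

# Optional PCI domain plus bus:device.function at the start of an `lspci` line,
# e.g. "04:00.0 VGA compatible controller: NVIDIA Corporation ..."
PCI_ADDR = re.compile(
    r"^(?:(?P<domain>[0-9a-f]{4}):)?(?P<bdf>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s",
    re.IGNORECASE,
)


def functions_on_device(lspci_output: str, bus_device: str) -> list[str]:
    """Return full addresses of every function (graphics, audio, ...) on bus:device."""
    found = []
    for line in lspci_output.splitlines():
        m = PCI_ADDR.match(line)
        if m and m.group("bdf").lower().startswith(bus_device.lower() + "."):
            domain = (m.group("domain") or "0000").lower()  # lspci omits domain 0000
            found.append(f"{domain}:{m.group('bdf').lower()}")
    return found


def pciback_hide_arg(addresses: list[str]) -> str:
    """Format addresses as xen-pciback.hide=(0000:04:00.0)(0000:04:00.1).

    Syntax assumed from XCP-ng's passthrough docs; verify for your release.
    """
    return "xen-pciback.hide=" + "".join(f"({a})" for a in addresses)


# Hypothetical output: an iGPU left for Dom0's console plus a discrete card to hide.
sample = """\
00:02.0 VGA compatible controller: Intel Corporation UHD Graphics 630
04:00.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1070] (rev a1)
04:00.1 Audio device: NVIDIA Corporation GP104 High Definition Audio Controller (rev a1)
"""

hide = pciback_hide_arg(functions_on_device(sample, "04:00"))
# Print rather than execute, so the command can be checked before rebooting.
print("/opt/xensource/libexec/xen-cmdline --set-dom0 " + shlex.quote(hide))
```

Collecting every function on the card (here the graphics and audio functions at 04:00.0 and 04:00.1) matches the advice above to note down both the graphics and audio IDs.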
Like with XCP-ng, this means we need to tell Proxmox not to initialize the graphics card we'd like to pass through to our VM. Unfortunately, it's a bit of an all-or-nothing situation with Proxmox, as the way we do this is by blacklisting the driver module for our specific brand of GPU.

To get started, install your GPU in your server and boot into the Proxmox management console. But before we go any further, make sure that Proxmox sees our GPU. For this we'll be using the utility to list our installed peripherals. From the Proxmox management console, select your node from the sidebar, open the shell, as pictured above, and then type in:

If nothing comes up, try one of the following:

You should see a printout similar to this one showing your graphics card:

Now that we've established that Proxmox can actually see the card, we can move on to enabling the IOMMU and blacklisting the drivers. We'll be demonstrating this on an AMD system with an Nvidia GPU, but we'll also share steps for AMD cards, too.

Before we can pass through our PCIe device, we need to enable the IOMMU both in the BIOS and in the Proxmox bootloader. As we mentioned in the earlier section on XCP-ng, the IOMMU is the mechanism the hypervisor uses to make the GPU available to the VM guests running on the system. In our experience, the IOMMU should be enabled by default on most server and workstation equipment, but is usually disabled on consumer boards.

Once you've got an IOMMU activated in BIOS, we need to tell Proxmox to use it. From the Proxmox management console, open the shell. The next bit depends on how you configured your boot disk. Usually Proxmox will default to GRUB for single-disk installations and systemd-boot for installations on mirrored disks.

Meanwhile, for those with AMD CPUs, the line should look like this:

The Proxmox team also recommends adding to boost performance on hardware that supports it; however, it's not strictly required.
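The GRUB change itself is just a matter of appending options to the `GRUB_CMDLINE_LINUX_DEFAULT` line without duplicating anything already there. The Python sketch below does that on the file's text.

The options it adds are our assumption, taken from Proxmox's PCI passthrough documentation rather than from this article: `intel_iommu=on` for Intel hosts and the optional `iommu=pt` passthrough mode. Check the docs for what your CPU and kernel actually need. The same `merge_params` helper works on the single line in `/etc/kernel/cmdline` used by systemd-boot. All helper names are hypothetical.

```python
import re

# Matches the GRUB_CMDLINE_LINUX_DEFAULT="..." assignment in /etc/default/grub.
CMDLINE_RE = re.compile(r'^(GRUB_CMDLINE_LINUX_DEFAULT=")([^"]*)(")', re.MULTILINE)


def merge_params(cmdline: str, params: list[str]) -> str:
    """Append params to a space-separated kernel command line.

    Only exact duplicates are skipped; conflicting values are left untouched.
    """
    existing = cmdline.split()
    return " ".join(existing + [p for p in params if p not in existing])


def add_grub_params(grub_text: str, params: list[str]) -> str:
    """Return the /etc/default/grub text with params added to the default cmdline."""
    new_text, count = CMDLINE_RE.subn(
        lambda m: m.group(1) + merge_params(m.group(2), params) + m.group(3),
        grub_text,
        count=1,
    )
    if count == 0:
        raise ValueError("no GRUB_CMDLINE_LINUX_DEFAULT line found")
    return new_text


# Assumed options for an Intel host, per the Proxmox passthrough docs.
IOMMU_PARAMS = ["intel_iommu=on", "iommu=pt"]

grub = 'GRUB_DEFAULT=0\nGRUB_CMDLINE_LINUX_DEFAULT="quiet"\n'
updated = add_grub_params(grub, IOMMU_PARAMS)
print(updated)

# systemd-boot keeps the whole command line on one line in /etc/kernel/cmdline.
print(merge_params("root=ZFS=rpool/ROOT/pve-1 boot=zfs", IOMMU_PARAMS))
```

To apply the result, you'd still need to:

- write it back to `/etc/default/grub` and run `update-grub`, or run `proxmox-boot-tool refresh` after editing `/etc/kernel/cmdline`;
- reboot.

A conflicting value such as `intel_iommu=off` would need removing by hand.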
Save and exit, then apply it by updating the bootloader:

For systemd-boot:

The process looks a little different for systemd-boot but is pretty much the same idea. Rather than the GRUB config, we'll be editing the kernel cmdline file.

Next, we need to enable a few VFIO modules by opening the module config file using your editor of choice:

And paste in the following:

If you're trying this on an earlier version of Proxmox, older than version 8.0, you'll also need to add:

Once you've updated the modules, force an update and then reboot your system:

After you reboot your system, you can check that they've loaded successfully by running

If everything worked properly, you should see the three VFIO modules we enabled earlier appear on screen. Check out our troubleshooting section if you run into any problems.

Now that we've got the IOMMU successfully configured, we need to tell Proxmox that we don't want it to load our GPU drivers.

Finally, refresh the kernel modules and reboot by running:

Okay, we've officially arrived at the fun part. At this point, we should be able to add our GPU to our VM and everything should just work. If it doesn't, head down to our troubleshooting section for a few tweaks to try as well as resources to check out.

To do that, start by creating a new VM. The process should be fairly straightforward, but there are a couple of changes we need to make under the System and CPU sections of the Proxmox web-based VM creation wizard, as pictured below.

Under the System section:

Next, under the CPU section, shown above, ensure that Type is set to to avoid compatibility issues that can crop up with some GPU drivers and runtimes.

Once your VM has been created, go ahead and start it and install your operating system of choice. In this case, we're using Ubuntu 24.04 Server edition.

After you have your OS installed, shut down the VM, head over to its Hardware config page, and click and select , as shown above.
Next select and select the GPU you'd like to pass through to the VM from the drop-down. Then, tick the checkbox and ensure that both and are checked. If the latter is grayed out, you probably didn't set the machine type to in the earlier step. Optionally, you can repeat these steps to pass through the GPU's audio device, if it has one.

With our PCIe device added, we can go ahead and start our VM up and use to make sure the GPU has been passed through successfully:

Again, if nothing comes up, try one of the following:

From here, you can install your GPU drivers as you normally would on a bare metal system. During installation, you may run into an error, shown below, because UEFI Secure Boot is enabled for OVMF VMs in Proxmox. You can either reboot and disable Secure Boot in the VM BIOS (press the key during the initial boot splash) or set a password and enroll a signing key in your EFI firmware.

If for some reason you're still having trouble passing your GPU through to a VM, you may need to make a few additional tweaks.

Enabling unsafe interrupts

If you're having trouble getting the VFIO modules set up, you may have to enable unsafe interrupts by creating a new config file under . According to the Proxmox docs, this can lead to instability, so we only recommend applying this if you run into trouble:

Configuring VFIO passthrough for troublesome cards

If you're still having trouble, you may need to tell Proxmox more explicitly to let the VM take control of the GPU. Start by grabbing the vendor and device IDs for your GPU. They'll look a bit like this: and . Note that if you're using a server card, you may only have one set of IDs. To identify the IDs for our GPU, we can use :

To check that the vfio-pci driver has been loaded, we can execute the following and scroll up until you see your card.
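Most of the checks in this troubleshooting section, along with the VFIO module check from earlier, come down to reading command output, so they script easily. The Python sketch below works on captured output rather than running anything. It:

- reports which VFIO modules are missing from `lsmod` output;
- pulls `[vendor:device]` IDs out of `lspci -nn` output to build an `options vfio-pci ids=...` line;
- reads the "Kernel driver in use" field from `lspci -k` output.

The module names, the `lspci` flags, and the modprobe option format are the standard ones as far as we know. Treat them as assumptions and compare them against your own system. The sample output and IDs are hypothetical.

```python
import re

# Standard VFIO module names on recent Proxmox kernels (assumed; older
# releases also needed vfio_virqfd, as noted above).
EXPECTED_VFIO = {"vfio", "vfio_iommu_type1", "vfio_pci"}
# A [vendor:device] tag as printed by `lspci -nn`, e.g. [10de:1b81].
PCI_ID = re.compile(r"\[([0-9a-f]{4}:[0-9a-f]{4})\]", re.IGNORECASE)
# A device header line: optional domain, then bus:device.function.
DEVICE_LINE = re.compile(r"^(?:[0-9a-f]{4}:)?([0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s", re.IGNORECASE)


def missing_vfio_modules(lsmod_output: str) -> set[str]:
    """Return expected VFIO modules absent from lsmod's first column (header skipped)."""
    loaded = {line.split()[0] for line in lsmod_output.splitlines()[1:] if line.strip()}
    return EXPECTED_VFIO - loaded


def pci_ids(lspci_nn_output: str, bus_device: str) -> list[str]:
    """Collect vendor:device IDs for every function on bus:device (e.g. '01:00')."""
    ids = []
    for line in lspci_nn_output.splitlines():
        m = DEVICE_LINE.match(line)
        found = PCI_ID.findall(line)
        if m and found and m.group(1).lower().startswith(bus_device.lower() + "."):
            ids.append(found[-1].lower())  # the ID is the last [xxxx:xxxx] tag
    return ids


def vfio_options_line(ids: list[str]) -> str:
    """Format the modprobe option line that binds these IDs to vfio-pci (assumed format)."""
    return "options vfio-pci ids=" + ",".join(ids)


def drivers_in_use(lspci_k_output: str) -> dict[str, str]:
    """Map each device address to its 'Kernel driver in use' from lspci -k output."""
    drivers, current = {}, None
    for line in lspci_k_output.splitlines():
        m = DEVICE_LINE.match(line)
        if m:
            current = m.group(1).lower()
        elif current and line.strip().startswith("Kernel driver in use:"):
            drivers[current] = line.split(":", 1)[1].strip()
    return drivers


# Hypothetical captured output from a host with a GTX 1070 at 01:00.
lsmod_sample = """\
Module                  Size  Used by
vfio_pci               16384  0
vfio_iommu_type1       49152  0
vfio                   57344  2 vfio_iommu_type1,vfio_pci
"""
lspci_nn_sample = """\
01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1070] [10de:1b81] (rev a1)
01:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)
"""
lspci_k_sample = """\
01:00.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1070] (rev a1)
\tKernel driver in use: vfio-pci
\tKernel modules: nvidiafb, nouveau
"""

print(missing_vfio_modules(lspci_k_sample and lsmod_sample))
print(vfio_options_line(pci_ids(lspci_nn_sample, "01:00")))
print(drivers_in_use(lspci_k_sample))
```

On a real host you'd pass in the output of `lsmod`, `lspci -nn`, and `lspci -k`. A device whose driver reads `vfio-pci` is ready to hand to a VM. If the driver shows as `nouveau`, `nvidia`, or `amdgpu` instead, the host driver still has it.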
If everything worked correctly, you should see something like this (note that is listed as the kernel driver), and you can head back up to the previous section to configure your VM:

Now that you've got a GPU-accelerated VM, how about checking out one of our other AI-themed tutorials for ways you can put it to work...

We're already hard at work on more AI and large language model-related coverage, so be sure to sound off in the comments with any ideas or questions you might have. ®

[2]

A hands on guide to containerization for AI development

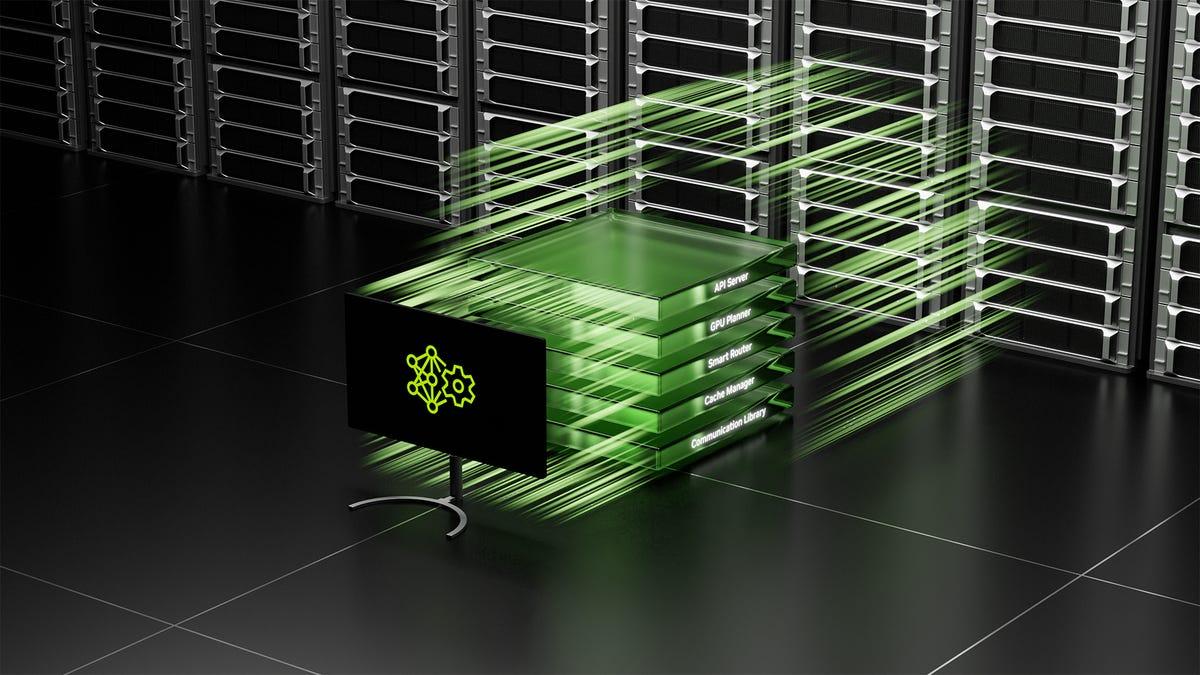

Hands on One of the biggest headaches associated with AI workloads is wrangling all of the drivers, runtimes, libraries, and other dependencies they need to run. This is especially true for hardware-accelerated tasks. If you've got the wrong version of CUDA, ROCm, or PyTorch, there's a good chance you'll be left scratching your head while staring at an error.

If that weren't bad enough, some AI projects and apps may have conflicting dependencies, while different operating systems may not support the packages you need. However, by containerizing these environments we can avoid a lot of this mess. We can build images configured specifically for a task that can, perhaps more importantly, be deployed in a consistent and repeatable manner each time.

And because containers are largely isolated from one another, you can usually run apps with conflicting software stacks side by side. For example, you can have two containers, one with CUDA 11 and the other with CUDA 12, running at the same time. This is one of the reasons chipmakers often make containerized versions of their accelerated-computing software libraries available to users, since it offers a consistent starting point for development.

Unlike with virtual machines, you can pass your GPU through to as many containers as you like. So long as you don't exceed the available vRAM, you shouldn't have an issue.

For those with Intel or AMD GPUs, the process couldn't be simpler: it just involves passing the right flags when spinning up the container. For example, let's say we want to make your Intel GPU available to an Ubuntu 22.04 container. You'd append to the command. Assuming you're on a bare metal system with an Intel GPU, you'd run something like:

Meanwhile, for AMD GPUs you'd append

Note: Depending on your system, you'll probably need to run this command with elevated privileges using or in some cases .
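For Intel and AMD, the difference comes down to which device nodes get handed to the container. The Python sketch below assembles the `docker run` argument list for either vendor.

The device nodes are the ones those vendors commonly document, but treat them as assumptions for your system:

- Intel: `/dev/dri`
- AMD (ROCm stack): `/dev/kfd` plus `/dev/dri`

The function name and defaults are hypothetical, and the script only prints the commands.

```python
import shlex
from typing import Optional

# Device nodes commonly passed to containers for GPU access (assumed; check your
# vendor's docs). Nvidia cards use a different mechanism and aren't covered here.
GPU_DEVICES = {
    "intel": ["/dev/dri"],
    "amd": ["/dev/kfd", "/dev/dri"],
}


def docker_gpu_run(vendor: str, image: str = "ubuntu:22.04",
                   command: Optional[list[str]] = None) -> list[str]:
    """Build an interactive `docker run` argv exposing the vendor's GPU device nodes."""
    try:
        devices = GPU_DEVICES[vendor.lower()]
    except KeyError:
        raise ValueError(f"unsupported vendor: {vendor!r}") from None
    args = ["docker", "run", "-it", "--rm"]  # --rm deletes the container on exit
    for dev in devices:
        args += ["--device", dev]
    return args + [image] + (command or [])


for vendor in ("intel", "amd"):
    # Depending on your setup, the printed command may need elevated privileges.
    print(shlex.join(docker_gpu_run(vendor)))
```

Drop `--rm` if you want to keep the container around after it exits.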
If you happen to be running one of Team Green's cards, you'll need to install the Nvidia Container Toolkit before you can expose it to your Docker containers.

To get started, we'll add the software repository for the toolkit to our sources list and refresh Apt. (You can see Nvidia's docs for instructions on installing on RHEL and SUSE-based distros here.)

Now we can install the container runtime and configure Docker to use it.

With the container toolkit installed, we just need to tell Docker to use the Nvidia runtime by editing the file. To do this, we can simply execute the following:

The last step is to restart the Docker daemon and test that everything is working by launching a container with the flag.

One of the most useful applications of Docker containers when working with AI software libraries and models is as a development environment. This is because you can spin up as many containers as you need and tear them down when you're done without worrying about borking your system.

Now, you can just spin up a base image of your distro of choice, expose your GPU to it, and start installing CUDA, ROCm, PyTorch, or TensorFlow. For example, to create a basic GPU-accelerated Ubuntu container, you'd run the following (remember to change the or flag appropriately) to create and then access the container.

This will create a new Ubuntu 22.04 container named GPUtainer that:

While building up a container from scratch with CUDA, ROCm, or OpenVINO can be useful at times, it's also rather tedious and time consuming, especially when there are prebuilt images out there that'll do most of the work for you.

For example, if we want to get a basic CUDA 12.5 environment up and running, we can use a image as a starting point. To test it, run:

Or, if you've got an AMD card, we can use one of the ROCm images like this one. Meanwhile, owners of Intel GPUs should be able to create a similar environment using this OpenVINO image.
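Two bits of the Nvidia setup lend themselves to a quick script:

- confirming that the runtime configuration step actually registered an `nvidia` runtime in Docker's daemon config;
- assembling the commands for a long-running dev container like GPUtainer.

The sketch assumes the configuration step writes a `runtimes` map with an `nvidia` entry to `/etc/docker/daemon.json`. As far as we know, that is what Nvidia's `nvidia-ctk runtime configure --runtime=docker` does. It also uses Docker's `--gpus all` flag. The helper names and sample JSON are hypothetical.

```python
import json
import shlex


def has_nvidia_runtime(daemon_json: str) -> bool:
    """True if Docker's daemon config registers a runtime named 'nvidia'."""
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    return "nvidia" in config.get("runtimes", {})


def gputainer_commands(name: str = "GPUtainer", image: str = "ubuntu:22.04") -> list[list[str]]:
    """Commands to start a detached GPU dev container, then open a shell inside it."""
    start = ["docker", "run", "-itd", "--gpus", "all", "--name", name, image]
    attach = ["docker", "exec", "-it", name, "/bin/bash"]
    return [start, attach]


# Hypothetical daemon.json contents after the Nvidia runtime has been configured.
sample = '{"runtimes": {"nvidia": {"args": [], "path": "nvidia-container-runtime"}}}'
print(has_nvidia_runtime(sample))
for cmd in gputainer_commands():
    print(shlex.join(cmd))
```

Docker only reads `daemon.json` when the daemon starts, which is why the restart step matters. `-itd` allocates a terminal and keeps STDIN open but starts the container in the background, so `docker exec` is what actually drops you into a shell.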
By design, Docker containers are largely ephemeral, which means that changes to them won't be preserved if, for example, you were to delete the container or update the image. However, we can save any changes by committing them to a new image.

To commit changes made to the CUDA dev environment we created in the last step, we'd run the following to create a new image called "cudaimage".

We could then spin up a new container based on it by running:

Converting existing containers into reproducible images can be helpful for creating checkpoints and testing out changes. But if you plan to share your images, it's generally best practice to show your work in the form of a . This file is essentially just a list of instructions that typically tells Docker how to turn an existing image into a custom one. As with much of this tutorial, if you're at all familiar with Docker or the command, most of this should be self-explanatory.

For those new to generating Docker images, we'll go through a simple example using this AI weather app we kludged together in Python. It uses Microsoft's Phi3-instruct LLM to generate a human-readable report, in the tone of a TV weather personality, from stats gathered from Open Weather Map every 15 minutes.

Note: If you are following along, be sure to set your zip code and Open Weather Map API key appropriately.

If you're curious, the app works by passing the weather data and instructions to the LLM via the Transformers pipeline module, which you can learn more about here.

On its own, the app is already fairly portable with minimal dependencies. However, it still relies on the CUDA runtime being installed correctly, something we can make easier to manage by containerizing the app.

From here, we simply need to tell it what commands it should run to set up the container and install any dependencies. In this case, we just need a few Python modules, as well as the latest release of PyTorch for our GPU.
Finally, we'll set the to the command or executable we want the container to run when it's first started. With that, our is complete and should look like this:

Now all we have to do is convert the into a new image by running the following, and then sit back and wait.

After a few minutes, the image should be complete and we can use it to spin up our container in interactive mode.

Note: Remove the bit if you don't want the container to destroy itself when stopped.

After a few seconds the container will launch, download Phi3 from Hugging Face, quantize it to 4-bit precision, and present our first weather report.

Naturally, this is an intentionally simple example, but hopefully it illustrates how containerization can make AI apps easier to build and deploy. We recommend taking a look at Docker's documentation here if you need anything more intricate.

As with any other app, containerizing your AI projects has advantages beyond making them more reproducible and easier to deploy at scale: it also allows models to be shipped alongside optimized configurations for specific use cases or hardware configurations.

This is the idea behind Nvidia Inference Microservices -- NIMs for short -- which we looked at back at GTC this spring. These NIMs are really just containers built by Nvidia with specific versions of software such as CUDA, Triton Inference Server, or TensorRT-LLM that have been tuned to achieve the best possible performance on its hardware.

And since they're built by Nvidia, every time the GPU giant releases an update to one of its services that unlocks new features or higher performance on new or existing hardware, users will be able to take advantage of these improvements simply by pulling down a new NIM image. Or that's the idea anyway.

Over the next couple of weeks, Nvidia is expected to make its NIMs available for free via its developer program for research and testing purposes.
But before you get too excited, if you want to deploy them in production, you're still going to need an AI Enterprise license, which will set you back $4,500/year per GPU or $1/hour per GPU in the cloud.

We plan to take a closer look at Nvidia's NIMs in the near future. But if an AI Enterprise license isn't in your budget, there's nothing stopping you from building your own optimized images, as we've shown in this tutorial. ®

Proxmox and XCP-ng introduce GPU passthrough capabilities, while containerization emerges as a key strategy for AI application deployment. These developments aim to improve performance and flexibility in AI and machine learning environments.

Proxmox and XCP-ng Boost GPU Support

In a significant move for the virtualization industry, both Proxmox and XCP-ng have announced enhanced GPU passthrough capabilities, addressing the growing demand for GPU resources in AI and machine learning workloads. Proxmox, a popular open-source virtualization platform, has introduced support for up to 16 GPUs per virtual machine in its latest release [1]. This development allows for more efficient utilization of GPU resources, particularly beneficial for organizations running complex AI models.

Similarly, XCP-ng, another open-source virtualization solution, has implemented GPU passthrough features, enabling direct access to GPU hardware from within virtual machines [1]. This enhancement is expected to significantly improve performance for GPU-intensive tasks, making XCP-ng a more attractive option for AI researchers and developers.

Containerization: A New Frontier for AI Applications

As the AI landscape evolves, containerization has emerged as a key strategy for deploying and managing AI applications. Industry experts are increasingly recognizing the benefits of containerizing AI workloads, including improved portability, scalability, and resource efficiency [2].

Containerization allows AI applications to be packaged with all their dependencies, ensuring consistent performance across different environments. This approach is particularly valuable for organizations looking to deploy AI models in various settings, from on-premises data centers to cloud platforms.

Challenges and Considerations

While the advancements in GPU passthrough and containerization offer significant benefits, they also present new challenges. IT teams must now grapple with the complexities of managing GPU resources across virtualized environments and ensuring optimal performance for containerized AI applications.

Security remains a top concern, as the increased use of virtualization and containerization in AI workloads introduces new attack vectors. Organizations must implement robust security measures to protect sensitive AI models and data [2].

Industry Impact and Future Outlook

The developments in GPU passthrough and AI application containerization are expected to have a profound impact on the AI and virtualization industries. As more organizations adopt these technologies, we may see a shift in how AI workloads are deployed and managed.

The enhanced GPU support in virtualization platforms like Proxmox and XCP-ng is likely to accelerate the adoption of AI and machine learning in various sectors. Meanwhile, the trend towards containerization of AI applications could lead to more flexible and efficient AI deployment strategies, potentially reducing costs and improving time-to-market for AI-powered solutions.

References

Summarized by

Navi

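[1] A guide to passing GPUs through to Proxmox, XCP-ng VMs

[2] A hands on guide to containerization for AI development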
