YouTube's AI Editing Controversy: The Battle for Content Control and Transparency

2 Sources

[1]

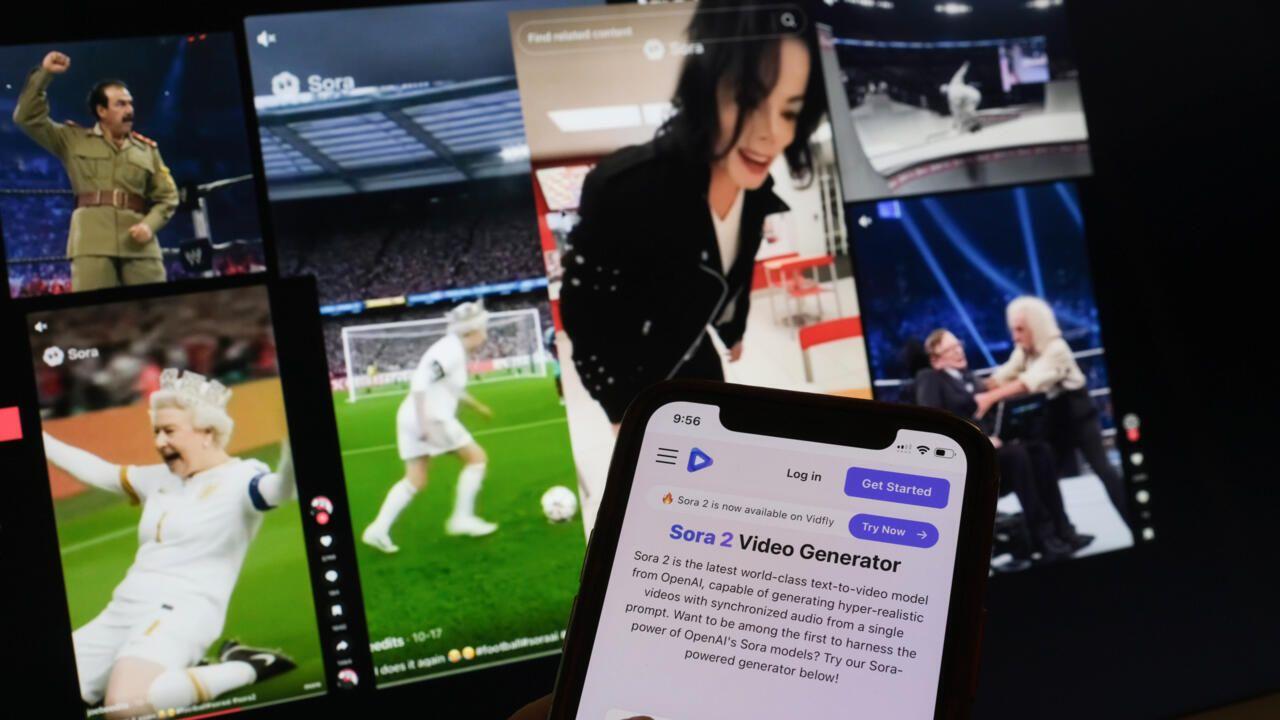

YouTube's AI editing scandal reveals how reality can be manipulated without our consent

University of Sydney provides funding as a member of The Conversation AU.

Disclosure, consent and platform power have become newly invigorated battlefields with the rise of AI. The issue came to the fore recently with YouTube's controversial decision to use AI-powered tools to "unblur, denoise and improve clarity" for some of the content uploaded to the platform. This was done without the consent, or even knowledge, of the relevant content creators. Viewers of the material knew nothing of YouTube's intervention either. Without transparency, users have limited recourse to identify, let alone respond to, AI-edited content. At the same time, such distortions have a history that significantly predates today's AI tools.

A new kind of invisible edit

Platforms such as YouTube aren't the first to engage in subtle image manipulation. For decades, lifestyle magazines have "airbrushed" photos to soften or sharpen certain features. Not only are readers not informed of the changes; often the celebrity in question isn't either. In 2003, actor Kate Winslet angrily decried British GQ's choice to alter her cover shot, which included narrowing her waist, without her consent.

The wider public has also shown an appetite for editing images before posting to social media. This makes sense: one 2021 study that looked at 7.6 million user-posted photos on Flickr found filtered photos were more likely to get views and engagement. However, YouTube's recent decision demonstrates the extent to which users may not be in the driver's seat. TikTok faced a similar scandal in 2021, when some Android users realised a "beauty filter" had been applied automatically to their posts without their consent or disclosure. This is especially concerning as recent research has found a link between the use of appearance-enhancing TikTok filters and self-image concerns.

Undisclosed alterations extend offline as well.
In 2018, new iPhone models were found to be automatically using a feature called Smart HDR (High Dynamic Range) to "smooth" users' skin. Apple later described this as a "bug" and reversed it. These issues also collided in the Australian political sphere last year, when Nine News published an AI-modified photo of Victorian MP Georgie Purcell that exposed her midriff, which had been covered in the original photo. The broadcaster did not tell viewers the image had been edited with AI.

The issue isn't limited to visual content, either. In 2023, author Jane Friedman found Amazon selling five AI-generated books under her name. Not only were they not her works, they also posed the risk of significant reputational harm. In each of these cases, the algorithmic alterations were presented without disclosure to those who viewed them.

The disappearing disclosure

Disclosure is one of the simplest tools we have to adapt to an increasingly altered, AI-mediated reality. Research suggests companies that are transparent about their use of AI algorithms are more likely to be trusted by users, with users' initial trust in the company and the AI system playing a significant role. While users have demonstrated diminishing trust in AI systems globally, they have also shown increasing trust in AI they have used themselves, including the belief that it will inevitably get better.

So why do companies still use AI without disclosing it? Perhaps because disclosures of AI use can be problematic. Research has found disclosing AI use consistently reduces trust in the relevant person or organisation, although not as much as being discovered to have used AI without disclosure.

Beyond trust, the impact of disclosures is complex. Research has found disclosures on AI-generated misinformation are unlikely to make that information any less persuasive to viewers. However, they can make people hesitate to share the content, for fear of spreading misinformation.

Sailing into the AI-generated unknown

With time, it will only get harder to identify confected and manipulated AI imagery. Even sophisticated AI detectors remain a step behind. Another big challenge in fighting misinformation (a problem made worse by the rise of AI) is confirmation bias: users' tendency to be less critical of media, AI-generated or otherwise, that confirms what they already believe.

Fortunately, there are resources at our disposal, provided we have the presence of mind to seek them out. Younger media consumers in particular have developed strategies that can push back against the tide of misinformation on the internet. One of these is simple triangulation, which involves seeking out multiple reliable sources to confirm a piece of news. Users can also curate their social media feeds by purposefully liking or following people and groups they trust, while excluding poorer-quality sources. But they may face an uphill battle, as platforms such as TikTok and YouTube are inclined towards an infinite scroll model that encourages passive consumption over tailored engagement.

While YouTube's decision to alter creators' videos without consent or disclosure is likely within its legal rights as a platform, it puts its users and contributors in a difficult position. And given previous cases from other major platforms, as well as the outsized power digital platforms enjoy, this probably won't be the last time this happens.

[2]

YouTube's Secret AI Edits: Are Creators Losing Control of Their Videos?

The issue isn't confined to social media. In Australia, MP Georgie Purcell's image was altered by AI on Nine News without any courtesy or disclosure. The problem extends to literature, too: Jane Friedman noted that books made with AI were being sold on Amazon under her name, putting her reputation at risk. As the use of modern AI becomes increasingly pervasive, experts believe disclosure remains the simplest safeguard. Open communication about algorithmic tools can preserve trust, even if acknowledging AI use may erode confidence temporarily; hiding such use tends to cause greater outrage when it is uncovered. However, as AI software becomes more advanced, manipulated content becomes harder to detect, exposing audiences to subtle manipulation. With so much content to consume, the burden of safeguarding oneself falls on users. Verifying facts against multiple authentic sources and prioritizing credible sources in social media feeds are among the appropriate methods. The YouTube controversy points to a much larger ethical problem: these platforms have overwhelming power over online content, yet both creators and users have limited control. The integration of AI into day-to-day media is going to raise even more questions about consent, transparency, and accountability, and audiences will have a harder time navigating a world where reality is filtered and manufactured.

YouTube's use of AI to edit user content without consent sparks debate on platform power, transparency, and the future of digital media manipulation.

YouTube's Controversial AI Editing Decision

YouTube recently made headlines by implementing AI-powered tools to "unblur, denoise and improve clarity" of some content uploaded to its platform. This decision was made without the consent or knowledge of content creators, raising significant concerns about platform power and transparency in the digital age [1].

The Broader Context of AI-Mediated Reality

Source: The Conversation

YouTube's actions are part of a larger trend of AI-driven content manipulation across various platforms and media:

- Social Media Filters: Platforms like TikTok have faced scrutiny for applying "beauty filters" without user consent [1].

- Print Media: For decades, lifestyle magazines have "airbrushed" photos without informing readers or subjects [1].

- Political Sphere: In Australia, an AI-modified photo of MP Georgie Purcell was published by Nine News without disclosure [2].

- Literature: Author Jane Friedman discovered AI-generated books being sold under her name on Amazon [1][2].

The Importance of Disclosure and Transparency

Experts argue that disclosure is one of the simplest tools for adapting to an increasingly AI-mediated reality. Research suggests that companies that are transparent about their AI use are more likely to be trusted by users [1].

However, the impact of disclosures is complex:

- Disclosing AI use can reduce trust in the relevant person or organization, though less than being discovered to have used AI without disclosure.

- Disclosures on AI-generated misinformation may not make the content less persuasive but can discourage sharing [1].

Challenges in Detecting AI-Generated Content

As AI technology advances, it becomes increasingly difficult to identify manipulated content:

- Sophisticated AI detectors struggle to keep pace with advancements.

- Confirmation bias makes users less critical of media that aligns with their beliefs [1].

User Strategies for Navigating AI-Altered Content

To combat misinformation and AI manipulation, users can employ several strategies:

- Triangulation: Seeking multiple reliable sources to confirm information.

- Curated Social Media Feeds: Following trusted sources and excluding low-quality content.

- Critical Consumption: Being aware of passive consumption encouraged by "infinite scroll" models [1][2].

The Power Dynamics of Digital Platforms

Source: Analytics Insight

YouTube's decision to alter creators' videos without consent highlights the significant power digital platforms wield over online content. While likely within their legal rights, such actions put users and contributors in a difficult position [1].

Future Implications and Ethical Concerns

The integration of AI technology in daily media raises crucial questions about:

- Consent

- Transparency

- Accountability

As reality becomes increasingly filtered and manufactured, audiences face greater challenges in navigating the digital landscape [2].

The YouTube controversy serves as a stark reminder of the ongoing battle for content control and the need for increased awareness and regulation in the age of AI-mediated reality.

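References

Summarized by Navi

[1] YouTube's AI editing scandal reveals how reality can be manipulated without our consent

[2] YouTube's Secret AI Edits: Are Creators Losing Control of Their Videos?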
