Ad Blockers May Expose Users to More Problematic Ads, NYU Study Reveals

2 Sources


[1]

Ad blockers may be showing users more problematic ads, study finds

Ad blockers, the digital shields that nearly one billion internet users deploy to protect themselves from intrusive advertising, may be inadvertently exposing their users to more problematic content, according to a new study from NYU Tandon School of Engineering. The study, which analyzed over 1,200 advertisements across the United States and Germany, found that users of Adblock Plus's "Acceptable Ads" feature encountered 13.6% more problematic advertisements compared to users browsing without any ad-blocking software. The finding challenges the widely held belief that such privacy tools uniformly improve the online experience.

"While programs like Acceptable Ads aim to balance user and advertiser interests by permitting less disruptive ads, their standards often fall short of addressing user concerns comprehensively," said Ritik Roongta, NYU Tandon Computer Science and Engineering (CSE) Ph.D. student and lead author of the study that will be presented at the 25th Privacy Enhancing Technologies Symposium on July 15, 2025. Rachel Greenstadt, CSE professor and faculty member of the NYU Center for Cybersecurity, oversaw the research.

The research team developed an automated system using artificial intelligence to identify problematic advertisements at scale. To define what constitutes "problematic," the researchers created a comprehensive taxonomy drawing from advertising industry policies, regulatory guidelines, and user feedback studies. Their framework identifies seven categories of concerning content: ads inappropriate for minors (such as alcohol or gambling promotions), offensive or explicit material, deceptive health or financial claims, manipulative design tactics like fake urgency timers, intrusive user experiences, fraudulent schemes, and political content without proper disclosure. Their AI system, powered by OpenAI's GPT-4o-mini model, matched human experts' judgments 79% of the time when identifying problematic content across these categories.

The study revealed particularly concerning patterns for younger internet users. Nearly 10% of advertisements shown to underage users in the study violated regulations designed to protect minors. This highlights systematic failures in preventing inappropriate advertising from reaching children, the very problem that drives many users to adopt ad blockers in the first place.

Adblock Plus's Acceptable Ads represents an attempt at compromise in the ongoing battle between advertisers and privacy advocates. The program, used by over 300 million people worldwide, works by maintaining curated lists of approved advertising exchanges (the automated platforms that connect advertisers with websites) and publishers (the websites and apps that display ads). The program allows certain advertisements to bypass ad blockers if they meet "non-intrusive" standards.

However, the NYU Tandon researchers discovered that advertising exchanges behave differently when serving ads to users with ad blockers enabled. While newly added exchanges in the Acceptable Ads program showed fewer problematic advertisements, existing approved exchanges that weren't blocked actually increased their delivery of problematic content to these privacy-conscious users. "This differential treatment of ad blocker users by ad exchanges raises serious questions," Roongta noted. "Do ad exchanges detect the presence of these privacy-preserving extensions and intentionally target their users with problematic content?"

The implications extend beyond user experience. The researchers warn that this differential treatment could enable a new form of digital fingerprinting, where privacy-conscious users become identifiable precisely because of their attempts to protect themselves. This creates what the study calls a "hidden cost" for privacy-aware users.

The $740 billion digital advertising industry has been locked in an escalating arms race with privacy tools. Publishers lose an estimated $54 billion annually to ad blockers, leading nearly one-third of websites to deploy scripts that detect and respond to ad-blocking software. "The misleading nomenclature of terms like 'acceptable' or 'better' ads creates a perception of enhanced user experience, which is not fully realized," said Greenstadt.

This study extends earlier research by Greenstadt and Roongta, which found that popular privacy-enhancing browser extensions often fail to meet user expectations across key performance and compatibility metrics. The current work reveals another dimension of how privacy technologies may inadvertently harm the users they aim to protect.

[2]

Ad Blockers May Be Showing Users More Problematic Ads, NYU Tandon Study Finds | Newswise

Newswise -- Ad blockers, the digital shields that nearly one billion internet users deploy to protect themselves from intrusive advertising, may be inadvertently exposing their users to more problematic content, according to a new study from NYU Tandon School of Engineering. The study, which analyzed over 1,200 advertisements across the United States and Germany, found that users of Adblock Plus's "Acceptable Ads" feature encountered 13.6% more problematic advertisements compared to users browsing without any ad blocking software. The finding challenges the widely held belief that such privacy tools uniformly improve the online experience.

"While programs like Acceptable Ads aim to balance user and advertiser interests by permitting less disruptive ads, their standards often fall short of addressing user concerns comprehensively," said Ritik Roongta, NYU Tandon Computer Science and Engineering (CSE) PhD student and lead author of the study that will be presented at the 25th Privacy Enhancing Technologies Symposium on July 15, 2025. Rachel Greenstadt, CSE professor and faculty member of the NYU Center for Cybersecurity, oversaw the research.

The research team developed an automated system using artificial intelligence to identify problematic advertisements at scale. To define what constitutes "problematic," the researchers created a comprehensive taxonomy drawing from advertising industry policies, regulatory guidelines, and user feedback studies. Their framework identifies seven categories of concerning content: ads inappropriate for minors (such as alcohol or gambling promotions), offensive or explicit material, deceptive health or financial claims, manipulative design tactics like fake urgency timers, intrusive user experiences, fraudulent schemes, and political content without proper disclosure. Their AI system, powered by OpenAI's GPT-4o-mini model, matched human experts' judgments 79% of the time when identifying problematic content across these categories.

The study revealed particularly concerning patterns for younger internet users. Nearly 10% of advertisements shown to underage users in the study violated regulations designed to protect minors. This highlights systematic failures in preventing inappropriate advertising from reaching children, the very problem that drives many users to adopt ad blockers in the first place.

Adblock Plus's Acceptable Ads represents an attempt at compromise in the ongoing battle between advertisers and privacy advocates. The program, used by over 300 million people worldwide, works by maintaining curated lists of approved advertising exchanges (the automated platforms that connect advertisers with websites) and publishers (the websites and apps that display ads). The program allows certain advertisements to bypass ad blockers if they meet "non-intrusive" standards.

However, the NYU Tandon researchers discovered that advertising exchanges behave differently when serving ads to users with ad blockers enabled. While newly added exchanges in the Acceptable Ads program showed fewer problematic advertisements, existing approved exchanges that weren't blocked actually increased their delivery of problematic content to these privacy-conscious users. "This differential treatment of ad blocker users by ad exchanges raises serious questions," Roongta noted. "Do ad exchanges detect the presence of these privacy-preserving extensions and intentionally target their users with problematic content?"

The implications extend beyond user experience. The researchers warn that this differential treatment could enable a new form of digital fingerprinting, where privacy-conscious users become identifiable precisely because of their attempts to protect themselves. This creates what the study calls a "hidden cost" for privacy-aware users.

The $740 billion digital advertising industry has been locked in an escalating arms race with privacy tools. Publishers lose an estimated $54 billion annually to ad blockers, leading nearly one-third of websites to deploy scripts that detect and respond to ad blocking software. "The misleading nomenclature of terms like 'acceptable' or 'better' ads creates a perception of enhanced user experience, which is not fully realized," said Greenstadt.

This study extends earlier research by Greenstadt and Roongta, which found that popular privacy-enhancing browser extensions often fail to meet user expectations across key performance and compatibility metrics. The current work reveals another dimension of how privacy technologies may inadvertently harm the users they aim to protect.

In addition to Greenstadt and Roongta, the current paper's authors are Julia Jose, an NYU Tandon CSE PhD candidate, and Hussam Habib, research associate at Greenstadt's PSAL lab.


A new study from NYU Tandon School of Engineering finds that ad blockers, particularly those using the "Acceptable Ads" feature, may inadvertently expose users to more problematic content, challenging the belief that these tools uniformly improve online experiences.

Ad Blockers: A Double-Edged Sword for Online Privacy

A study from the NYU Tandon School of Engineering has found an unexpected side effect of ad blocking. Researchers found that ad blockers, tools used by nearly one billion internet users to shield themselves from intrusive ads, may actually be exposing users to more problematic content [1].

The Unexpected Consequences of "Acceptable Ads"

Source: Tech Xplore

The study, led by PhD student Ritik Roongta and overseen by Professor Rachel Greenstadt, analyzed over 1,200 advertisements across the United States and Germany. Their findings revealed that users of Adblock Plus's "Acceptable Ads" feature encountered 13.6% more problematic advertisements compared to those browsing without any ad-blocking software [1].

This discovery challenges the widely held belief that privacy tools universally enhance the online experience. "While programs like Acceptable Ads aim to balance user and advertiser interests by permitting less disruptive ads, their standards often fall short of addressing user concerns comprehensively," Roongta explained [2].
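To make the "13.6% more" figure concrete, here is a minimal sketch of how a relative increase in problematic ads could be computed. The article does not say whether the figure is a relative increase or a percentage-point difference, so the sketch shows both. The function names and all counts are hypothetical and are not taken from the study.

```python
def problematic_rate(problematic: int, total: int) -> float:
    """Share of collected ads that were flagged as problematic."""
    if total <= 0:
        raise ValueError("total must be positive")
    return problematic / total


def relative_increase(baseline_rate: float, treatment_rate: float) -> float:
    """Relative change of the treatment rate with respect to the baseline rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (treatment_rate - baseline_rate) / baseline_rate


# Hypothetical counts, chosen only for illustration (NOT the study's data).
no_blocker = problematic_rate(problematic=50, total=500)      # browsing without an ad blocker
acceptable_ads = problematic_rate(problematic=60, total=500)  # Acceptable Ads enabled

rel = relative_increase(no_blocker, acceptable_ads)
points = (acceptable_ads - no_blocker) * 100

print(f"relative increase: {rel:.1%}")
print(f"percentage-point difference: {points:.1f}")
```

With these made-up counts the two measures differ by a factor of ten, which is why it matters which one a headline number reports.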

AI-Powered Analysis of Problematic Ads

To conduct their research, the team developed an AI system using OpenAI's GPT-4o-mini model. This system was designed to identify problematic advertisements at scale, matching human experts' judgments 79% of the time [1].

The researchers created a taxonomy of seven categories of concerning content:

- Ads inappropriate for minors

- Offensive or explicit material

- Deceptive health or financial claims

- Manipulative design tactics

- Intrusive user experiences

- Fraudulent schemes

- Political content without proper disclosure
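As a rough illustration of what "matching human experts' judgments 79% of the time" could mean, the sketch below encodes the seven categories as an enum and computes a simple agreement rate between model labels and human labels. This assumes plain percent agreement; the article does not specify the metric the researchers used. The identifier names and the five sample labels are hypothetical.

```python
from enum import Enum


class AdCategory(Enum):
    """The seven categories listed above; identifier names are illustrative."""
    INAPPROPRIATE_FOR_MINORS = "ads inappropriate for minors"
    OFFENSIVE_OR_EXPLICIT = "offensive or explicit material"
    DECEPTIVE_CLAIMS = "deceptive health or financial claims"
    MANIPULATIVE_DESIGN = "manipulative design tactics"
    INTRUSIVE_EXPERIENCE = "intrusive user experiences"
    FRAUDULENT_SCHEME = "fraudulent schemes"
    UNDISCLOSED_POLITICAL = "political content without proper disclosure"


def agreement_rate(model_labels, human_labels):
    """Fraction of ads where the model's label equals the human label."""
    if len(model_labels) != len(human_labels):
        raise ValueError("label lists must be the same length")
    if not model_labels:
        raise ValueError("need at least one labeled ad")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(model_labels)


# Hypothetical labels for five ads; None means "not problematic".
human = [
    AdCategory.FRAUDULENT_SCHEME,
    None,
    AdCategory.MANIPULATIVE_DESIGN,
    None,
    AdCategory.DECEPTIVE_CLAIMS,
]
model = [
    AdCategory.FRAUDULENT_SCHEME,
    None,
    AdCategory.INTRUSIVE_EXPERIENCE,  # disagrees with the human label
    None,
    AdCategory.DECEPTIVE_CLAIMS,
]

print(f"agreement: {agreement_rate(model, human):.0%}")
```

Plain agreement is the simplest reading of the reported figure; a chance-corrected measure would need a different calculation.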

Implications for Young Users and Privacy

The study uncovered alarming patterns for younger internet users, with nearly 10% of advertisements shown to underage users violating regulations designed to protect minors [2]. This highlights systematic failures in preventing inappropriate advertising from reaching children, ironically the very issue that drives many to adopt ad blockers.

The Hidden Costs of Privacy Protection

The researchers discovered that advertising exchanges behave differently when serving ads to users with ad blockers enabled. While newly added exchanges in the Acceptable Ads program showed fewer problematic advertisements, existing approved exchanges increased their delivery of problematic content to privacy-conscious users [1].

This differential treatment raises concerns about potential digital fingerprinting, where privacy-aware users become identifiable due to their attempts to protect themselves. This creates what the study calls a "hidden cost" for privacy-aware users [2].

The Ongoing Battle in Digital Advertising

The $740 billion digital advertising industry continues to engage in an escalating arms race with privacy tools. Publishers lose an estimated $54 billion annually to ad blockers, leading nearly one-third of websites to deploy scripts that detect and respond to ad-blocking software [1].

Professor Greenstadt cautioned, "The misleading nomenclature of terms like 'acceptable' or 'better' ads creates a perception of enhanced user experience, which is not fully realized" [2].

Summarized by Navi

Related Stories

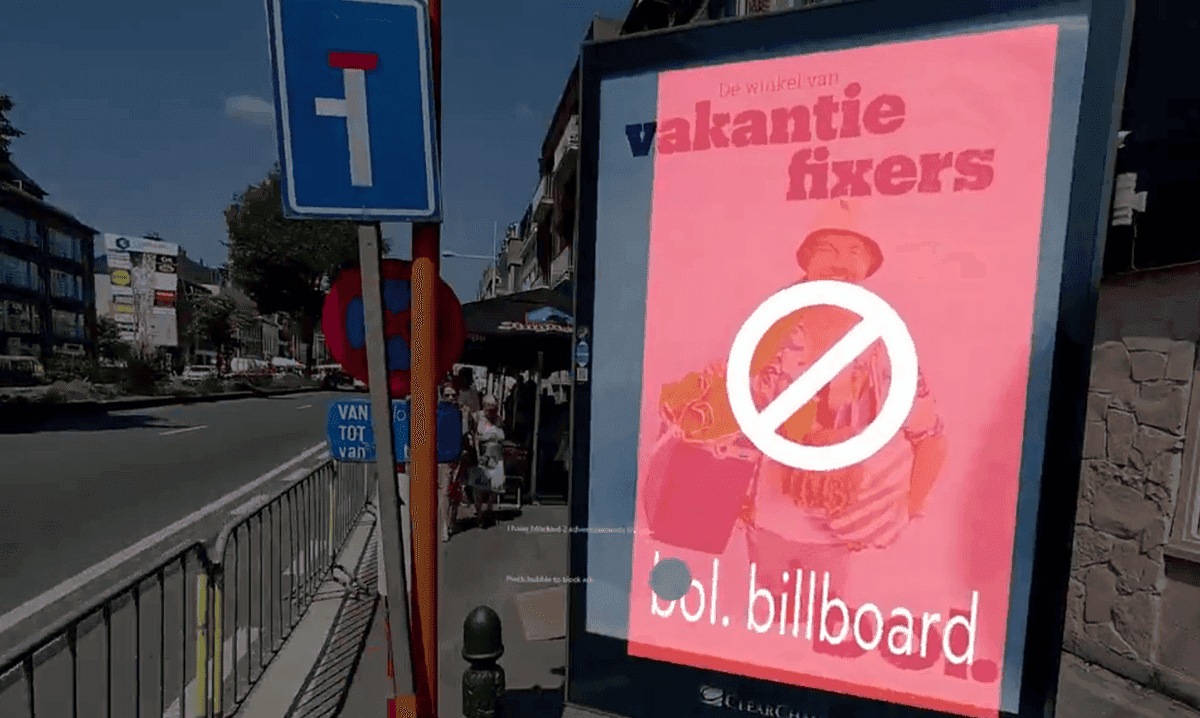

Engineer Develops Real-World Ad-Blocking App Using AR Glasses and AI

22 Jun 2025•Technology

AI Web Browser Assistants Raise Serious Privacy Concerns, Study Reveals

13 Aug 2025•Technology

OpenAI researcher quits over ChatGPT ads, warns of Facebook-like privacy erosion ahead

11 Feb 2026•Policy and Regulation

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology