Adobe Expands AI Arsenal with New Audio Generation Tools for Firefly

9 Sources

[1]

Adobe Now Lets You Generate Soundtracks and Speech in Firefly

Adobe is leaning heavily into artificial intelligence. At the company's annual MAX conference in Los Angeles, it announced a slew of new features for its creative apps, almost all of which include some kind of new AI capability. It even teased an integration with OpenAI's ChatGPT. Here's everything you need to know. Adobe's new darling app is Firefly, which launched in 2023 and offers the ability to create images and videos through generative AI. So it makes sense that the bulk of the announcements revolve around it. First, the company says it's opening up support for custom models, allowing creatives to train their own AI models to create specific characters and tones. Businesses have been able to leverage custom models in Firefly for some time, but Adobe is rolling out the feature to individual customers. Adobe says you need just six to 12 images to train the model on a character, and "slightly more" to train it on a tone. The basis of the model remains Adobe's own Firefly model, which means it's trained on proprietary data and commercially safe to use. Custom models will roll out at the end of the year, and you can join a waiting list in November for early access.

[2]

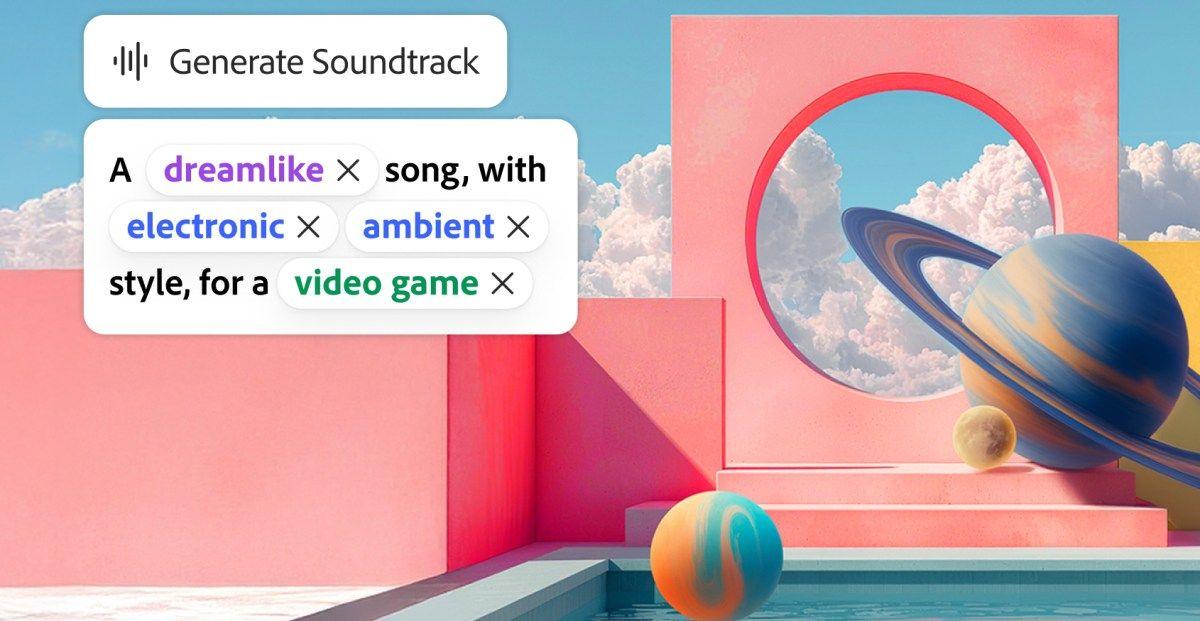

Adobe's New AI Is All About Audio. How to Create Music for Your Videos with Firefly

Much of the news and product updates Adobe dropped this week was, unsurprisingly, centered around generative AI. But while most of this year has seen massive leaps in image and video generation, Adobe is focusing on elevating its AI offerings in another area: AI audio. The two new features, generate soundtrack and generate speech, do exactly what their names suggest. You can create background music and record scripts for your video. But each comes with hands-on controls that make AI audio less of a gamble and more of a useful tool for creators of all skill levels. They're available in beta now. Adobe is also releasing a beta version of its latest, fifth-gen Firefly Image Model. It promises to be better at producing photorealistic images, and you can now use prompt-based editing. There's also a new beta Firefly video editor that comes with a multitrack timeline that's meant to help you compile AI-generated clips. Adobe is also expanding its partnerships with two new AI companies, ElevenLabs and Topaz Labs. For even more AI news, you can learn about the AI assistants coming to Photoshop and Express. Music licensing is complicated, especially for commercial use. So let me start with the part that matters most: Any music generated with Firefly's generate soundtrack is given a universal license, which means you can use it for any purpose, indefinitely. Adobe creates its AI tools by using content (in this case, audio) that it has permission to use for AI training. So in theory, you shouldn't have Firefly AI audio removed from YouTube or other platforms or get a dreaded copyright strike. "This is a unique time in the world where music licensing is on the top of everybody's mind and creators are just either frustrated because they're trying to do the best thing for their content, or they're confused," Jay LeBoeuf, Adobe's head of AI audio, said in an interview. "So we're just hoping to remove the confusion." 
In a demo, Firefly did reject a prompt with an artist's name in it as it violated its user guidelines due to copyright concerns. Because the model isn't trained on Taylor Swift's music, for example, it can't create music similar to hers. Now, the fun stuff: Generate soundtrack is the first AI music tool from Adobe, and it's designed to take the guesswork out of what you want. You upload your video, and the AI analyzes it. Based on its assessment, Firefly will write a prompt it thinks may work well for your video. It's a Mad Libs-style prompt, and you can swap out the descriptors as you see fit. The prompt has three parts: describing the general vibe, style (think genre) and purpose (commercial, experimental, etc.). You can also adjust the tempo and energy level. Once you're happy with your prompt, click generate and less than two minutes later, four instrumental-only variations will be ready for you to play. Your audio will be as long as your video, but you can edit that as needed. You can upload videos that are up to five minutes long. You can try your hand at creating AI instrumental music for your videos now. Generate soundtrack and generate speech are both available through Firefly, and they're in beta. Check to see if your Adobe plan includes access to Firefly, and if it doesn't, you can get a plan starting at $10 per month. Once you have a soundtrack you like, you can download the complete video (or just the soundtrack) to your computer. Generating speech in Firefly is simple, and it includes a lot of features that'll make it useful for nearly any project. It's a simple window where you can type in the words you want the AI voice to read. You can also upload a script of up to 7,500 characters -- roughly a 15- to 20-minute video. Once uploaded, you can choose from 50 voices, each tagged with an approximate age and gender, including nonbinary options. You can generate speech in 20 different languages. 
But the fun part is what you can do to fine-tune your prompt. Speech is more than just reading words on a page. When we read long passages or talk with others, we naturally add emphasis, emotion and rhythm to our speech. With the new program, you can do the same, adding pauses where you want the AI to take a breather and highlighting sections where the tone should shift. If you're like me and nobody pronounces your name right on the first try, you can use the "fix pronunciation" tool to ensure there aren't any flubs. Select the name or proper noun and then add a phonetic breakdown, and the AI will use that to smooth out the pronunciation. These tools, along with your hands-on ability to adjust specific sections, are meant to give you more control, something other text-to-speech programs don't always offer. "It's a way for us to provide lifelike speech to creators, to small business owners, to educators, to everybody that really just has a story to tell, and maybe they're not as comfortable as we are just pulling out a mic and talking," said LeBoeuf. Firefly audio is a brand-new AI model. But that's not your only option. Adobe has been steadily adding to its roster of third-party AI models this year, for both AI video and image. It's expanding those choices again by including ElevenLabs' Multilingual v2 model as an option for generating speech.

[3]

Adobe's new AI audio tools can add soundtracks and voice-overs to videos

Adobe is giving filmmakers new generative AI audio tools that can quickly add thematically appropriate backing tracks and narration to videos. Generate Soundtrack and Generate Speech are being introduced to a redesigned Adobe Firefly AI app, while Adobe is also developing a new web-based video production tool that combines multiple AI features with a simple editing timeline. The Generate Soundtrack tool is launching in public beta in the Firefly app, and works by assessing an uploaded video and then generating a selection of instrumental audio clips that automatically synchronize to the footage. Users can direct the style of the music by selecting from provided presets like lofi, hip-hop, classical, EDM, and more, or describe the desired vibe in the provided text prompt interface -- asking it to be more sentimental, aggressive, and so on. The tool will also suggest a prompt based on the uploaded video footage that can be used as a starting point.

[4]

Adobe Firefly's latest trick uses AI to make royalty-free soundtracks that sync to your videos

One of the toughest parts of content creation is getting a little easier thanks to a new feature from Adobe. As part of this year's Adobe Max conference, the company introduced several AI-powered initiatives for its suite of creative products. Among them is Generate Soundtrack, a new audio tool for Adobe Firefly. Adobe said the Generate Soundtrack feature runs on the Firefly Audio Model. The feature creates commercially safe, fully licensed, studio-quality instrumental tracks that sync with your video footage in seconds, even offering multiple track variations. The commercially safe element of the feature is important, as creators don't have to worry about copyright strikes or takedowns on YouTube or Instagram. We haven't seen Generate Soundtrack in action, but if it's as customizable as the company's sound effect feature, the tool should prove to be pretty useful. Adobe's Generate Sound Effects feature not only lets you describe what you want with words but also lets you imitate cadence, timing, or intensity with your own voice. Adobe Firefly has a free plan. However, to use all the technology's features, including longer outputs and other creative apps, you'll need a paid subscription. Those plans start at $9.99 a month and can rise to $69.99. To date, music has been a challenging part of the process for many content creators. They either have to select from the same generic, free libraries that everyone else is using or deal with sometimes tricky and expensive licensing. Now, with Generate Soundtrack, creators can find music with a single click that should match their content. Generate Soundtrack is available in public beta starting today.

[5]

Adobe Turns Up the Volume on AI With New Ways to Generate Soundtracks and Audio

Adobe's hub for all things AI, Firefly, is central to its latest innovations. The company announced a ton of AI-powered updates at its Max creative conference on Tuesday. While the rest of us have been obsessing (and worrying) over OpenAI's new Sora AI slop app, Adobe is headed in a different direction: Its newest features are for generating AI audio. Adobe was the second big tech company to introduce AI-generated audio to its AI video model, following Google's Veo 3. Its previous AI audio tool was primarily focused on sound effects. With that tool, you could record yourself roaring like a monster, and AI would keep the cadence of your recording but beef it up with AI. Now, Adobe is building on its audio tools and introducing new ones. Generate soundtrack and generate speech do exactly what they suggest: You can create background music and record scripts for your video. But each comes with industry-first perks that make them enticing for any creator. They're available in beta now. Adobe is also releasing its latest, fifth-generation Firefly Image Model. It's better at producing photorealistic images, and you can now use prompt-based editing. There's also a new Firefly video editor, a multitrack timeline that's meant to help you manage AI-generated clips. Adobe is expanding its partnerships with two new AI companies, ElevenLabs and Topaz Labs. And with Adobe, you'll also be able to create your own custom AI models. For even more AI news, you can learn about the AI assistants coming to Photoshop and Express. Generating speech in Firefly is simple, and it includes a lot of features that'll make it useful for nearly any project. It's a simple window where you can type in the words you want the AI voice to read. You can also upload a script of up to 7,500 characters -- roughly a 15- to 20-minute video. Once uploaded, you can choose from 50 voices, each tagged with an approximate age and gender, including nonbinary options. 
You can generate speech in 20 different languages. But the fun part is what you can do to fine-tune your prompt. Speech is more than just reading words on a page. When we read long passages or talk with others, we naturally add emphasis, emotion and rhythm to our speech. With the new program, you can do the same, adding pauses where you want the AI to take a breather and highlighting sections where the tone should shift. If you're like me and nobody pronounces your name right on the first try, you can use the "fix pronunciation" tool to ensure there aren't any flubs. Select the name or proper noun and then add a phonetic breakdown, and the AI will use that to smooth out the pronunciation. These tools, along with your hands-on ability to adjust specific sections, are meant to give you more control, something other text-to-speech programs don't always offer. "It's a way for us to provide lifelike speech to creators, to small business owners, to educators, to everybody that really just has a story to tell, and maybe they're not as comfortable as we are just pulling out a mic and talking," Jay LeBoeuf, Adobe's head of AI audio, said in an interview. Firefly audio is a brand-new AI model. But that's not your only option. Adobe has been steadily adding to its roster of third-party AI models this year, for both AI video and image. It's expanding those choices again by including ElevenLabs' Multilingual v2 model as an option for generating speech. Music licensing is complicated, especially for commercial use. So let me start with the part that matters most: Any music generated with Firefly's generate soundtrack is given a universal license, which means you can use it for any purpose, indefinitely. Adobe creates its AI tools by using content (in this case, audio) that it has permission to use for AI training. So in theory, you shouldn't have Firefly AI audio removed from YouTube or other platforms or get a dreaded copyright strike. 
"This is a unique time in the world where music licensing is on the top of everybody's mind and creators are just either frustrated because they're trying to do the best thing for their content, or they're confused," said LeBoeuf. "So we're just hoping to remove the confusion." In a demo, Firefly did reject a prompt with an artist's name in it as it violated its user guidelines due to copyright concerns. Because the model isn't trained on Taylor Swift's music, for example, it can't create music similar to hers. Now, the fun stuff: Generate soundtrack is the first AI music tool from Adobe, and it's designed to take the guesswork out of what you want. You upload your video, and the AI analyzes it. Based on its assessment, Firefly will write a prompt it thinks may work well for your video. It's a Mad Libs-style prompt, and you can swap out the descriptors as you see fit. The prompt has three parts: describing the general vibe, style (think genre) and purpose (commercial, experimental, etc.). You can also adjust the tempo and energy level. Once you're happy with your prompt, click generate and less than two minutes later, four music variations will be ready for you to play. Your audio will be as long as your video, but you can edit that as needed. You can upload videos that are up to five minutes long.

[6]

Adobe's Firefly can now use AI to generate soundtracks, speech and video

Adobe has released a new version of Firefly that leans heavily on AI for nearly every facet of video and image post-production. The updated app can now use AI to generate narration, music, images and video clips, while even helping you to brainstorm ideas and piece together clips. Many creators may find it distasteful to lean on AI for nearly every aspect of production, but Adobe calls it "a tool for, not a replacement of, human creativity." Firefly has mostly been a content generation tool until now, but Adobe has now introduced the Firefly video editor into private beta. It's a web-based multitrack timeline editor, not unlike Adobe Premiere Pro, that lets you generate, organize, trim and arrange clips, with tools to add voiceovers, soundtracks and titles. You can organize existing Firefly content or generate new clips inside the editor (with presets like claymation, anime and 2D), and combine that with captured media. All that can be edited with "frame-by-frame precision or through a built-in transcript," Adobe said. On top of video, Firefly eliminates the need for humans to make voiceovers and music, too. Adobe's new Generate Soundtrack (public beta) is a Firefly Audio Model-powered AI music generator that lets you select a style or will come up with one to match any clip you upload. It then syncs and times the track precisely to that footage. Generate Speech, meanwhile, does the same thing for voiceovers. It gives you a choice between Firefly's Speech Model and one from ElevenLabs, letting you generate "lifelike voices in multiple languages, and fine-tune emotion, pacing and emphasis for natural, expressive delivery." Adobe is also expanding access to its Firefly Creative Production tool directly in the Firefly app as a private beta to start with. It's a complete AI-powered batch image editing system that lets creators piece together clips, automatically replace backgrounds, apply uniform color grading and crop in via a prompt-driven, no code interface. 
Then there's Firefly Boards, an "AI-powered ideation surface" to brainstorm new concepts. A feature called "Rotate Object" helps you convert 2D images into 3D so you can position objects and people in different poses and rotate them to new perspectives. Two others, PDF exporting and bulk image downloading, speed the process of sharing visual concepts across projects. Finally, Prompt to Edit (available now on Firefly) is a conversational editing interface that allows you to use everyday language to describe the edits you want to make to an image, much as you'd use text-to-image tools like Midjourney to create new images. It's available with Adobe's latest Firefly Image Model 5, along with partner models from Black Forest Labs, Google and OpenAI. With Firefly's AI now able to handle every aspect of production, you may be wondering if this will result in a wave of unwatchable AI "slop" appearing on YouTube and elsewhere. The answer is "probably," but it won't necessarily be cheap. Standalone Firefly subscriptions are $10/month for the basic plan (20 five-second videos), $20/month for the Pro plan (40 five-second videos) and $199 for the Premium plan (unlimited videos). However, Adobe is throwing in free image and video generation (with some restrictions) for all Firefly and Creative Cloud Pro customers until December 1st. All the new tools are now available either as part of the update, in public beta or in private beta as mentioned above.

[7]

Adobe Adds AI Audio, Video and Imaging Tools to Firefly Platform | AIM

Adobe has expanded its Firefly creative AI suite with new tools and models spanning audio, video, and imaging, deepening its push to make generative AI a core part of professional creative workflows. Announced at the company's annual Adobe MAX conference, the updates introduce Generate Soundtrack for creating fully licensed instrumental tracks, Generate Speech for lifelike multilingual voiceovers, and a new timeline-based video editor for producing and sequencing clips with AI assistance. The new tools allow creators to edit directly within Firefly, mixing generative and uploaded content through both visual and text-based interfaces. Adobe also unveiled Firefly Image Model 5, which produces photorealistic 4MP images and supports natural-language editing through a new Prompt to Edit feature. The company said the model delivers improved lighting, texture, and anatomic accuracy, as well as more coherent multi-layered compositions. In addition, Firefly now integrates partner models from ElevenLabs, Google, OpenAI, Topaz Labs, Luma AI, and Runway, making it one of the broadest creative AI ecosystems available. Adobe is also expanding access to Firefly Custom Models, which let creators train private, personalised models to generate assets in their own style. A new experimental tool, Project Moonlight, introduces a conversational AI assistant that can draw insights from creators' social channels and projects to help them move from idea to finished content. Most new features, including Firefly Image Model 5, Generate Soundtrack, and Generate Speech, are available in public beta, while the Firefly video editor, Custom Models, and Project Moonlight remain in private beta.

[8]

Adobe Will Now Let You Generate Audio Tracks and Voiceovers in Firefly

* The new announcements were made at the Adobe Max 2025 event
* Adobe introduced the new Firefly Image Model 5
* The new AI model is focused on layered image editing

Adobe announced several new artificial intelligence (AI) features and tools at the Adobe Max 2025 event on Tuesday. Among them, the biggest highlights are the new AI assistants being added to the Photoshop, Express, and Firefly platforms. The company is also adding new AI-powered audio and video generation tools in Firefly. Apart from this, the San Jose, California-based software giant is also bringing a new Firefly model as well as integrating new partner models to give users more choice in their daily workflows.

Adobe Introduces New AI Tools and Features Across Platforms

Over the last couple of years, Adobe has invested heavily in integrating new AI tools and features into its platform. The company has also created a new Firefly platform that offers a range of AI capabilities, both from its native models as well as third-party models. Now, at the Adobe Max 2025 event, it introduced several new features across existing and newer modalities to bring new functionalities to its platforms. Starting with the core technology, Adobe introduced the new Firefly Image Model 5. The company says it combines native 4-Megapixel resolution, photorealistic quality, and prompt-based editing capabilities into a unified large language model (LLM). The main focus of the next generation of Firefly Image is multi-layered editing. Essentially, once an image has been generated, users will have the option to either touch and edit the elements for granular control or write a prompt and let AI handle the task. The AI model treats every asset in an image as a separate layer and can edit them separately. Apart from first-party models, Adobe is also integrating new third-party AI models into its existing catalogue. The biggest additions are ElevenLabs' audio generation models and Topaz's image generation models. 
Adobe said another new area the company is focusing on is custom Firefly models. Until now, these were only available to its enterprise clients, but they will now be available to individual users as well. Essentially, this allows users to bring their own images and train Firefly models on their style to ensure visual consistency. It is currently in beta, and early access can be gained by signing up for its waitlist. Coming to the new tools, the software giant said that it has focused on the audio space to bring new functionalities to users. There is now a new Generate Soundtrack tool that can generate studio-quality, fully-licensed music tracks, a Generate Speech tool for voice-over generation, and a Firefly video editor tool that can generate, organise, trim, and sequence clips. The first two are available in beta, while the video editor has a waitlist that interested users can sign up for. There is also the Prompt-to-Edit tool mentioned above, which supports Firefly Image Model 5, Google's Nano Banana, and Black Forest Labs' Flux model.

[9]

Adobe Firefly evolves into an all-in-one creative AI studio with new tools for audio, video, and imaging

There is also a Project Moonlight tool, which is a conversational AI built into Firefly.

At its annual MAX conference, Adobe took another step toward building a true all-in-one creative AI studio. The company announced major upgrades to Adobe Firefly, expanding it beyond image generation into a full multimedia creation space that now includes AI-powered audio, video, and voice tools -- all backed by a growing lineup of partner models and Adobe's own generative tech. Among the biggest updates is Generate Soundtrack, which lets creators instantly produce royalty-free music synced to their visuals, and Generate Speech, a text-to-voice tool with natural delivery and multilingual support. Firefly is also introducing a timeline-based AI video editor, combining traditional multitrack editing with prompt-driven generation, so users can mix recorded clips with new, AI-made visuals or scenes in the same workspace. Adobe also previewed Project Moonlight, a conversational AI interface designed to work across its creative apps. It can analyze a user's projects and social posts, then suggest new content ideas or automate repetitive creative tasks -- a move that signals Adobe's deeper investment in "agentic AI," where assistants don't just generate assets but help shape creative direction. The new Firefly Image Model 5 continues Adobe's focus on high-fidelity visual generation, now capable of 4MP native resolution and improved photorealism. The company has also opened Firefly to third-party models from ElevenLabs, Google, Runway, Luma AI, and Topaz Labs, among others, letting users pick the right model for each task. For creators who want more control, Firefly Custom Models are now in private beta, allowing individuals or teams to train their own personalized AI models that replicate their unique aesthetic or brand style -- all within Adobe's ecosystem. 
Firefly's new tools aim to streamline the creative process from brainstorming to publishing, letting users ideate, edit, and finish projects in one space. The updates are rolling out in phases, with most features -- including Generate Soundtrack and Firefly Image Model 5 -- already in public beta.

Adobe introduces Generate Soundtrack and Generate Speech features to its Firefly AI platform, enabling creators to produce royalty-free music and voice-overs that automatically sync with video content. The new tools address licensing concerns while offering extensive customization options.

Adobe Unveils AI Audio Generation Tools at MAX Conference

Adobe has significantly expanded its artificial intelligence capabilities with the introduction of two major audio generation features for its Firefly platform. At the company's annual MAX conference in Los Angeles, Adobe announced Generate Soundtrack and Generate Speech, marking the company's strategic push into AI-powered audio creation for content creators [1].

The new features represent Adobe's latest effort to address one of the most challenging aspects of content creation: finding appropriate, legally safe audio content. Both tools are now available in public beta through the redesigned Adobe Firefly AI app [3].

Generate Soundtrack: AI-Powered Music Creation

The Generate Soundtrack feature analyzes an uploaded video and automatically creates instrumental music tracks that synchronize with the footage. Based on its assessment of the video, Firefly also writes a suggested prompt that creators can use as a starting point [2].

Users can customize their music through a structured prompt with three components: general vibe, style or genre, and intended purpose. The tool offers presets for musical styles including lofi, hip-hop, classical, and EDM, and lets creators adjust tempo and energy levels to match their needs [3].

Generation produces four instrumental variations in under two minutes. Tracks match the length of the uploaded video by default, though the length can be edited, and uploaded videos can be up to five minutes long. The tool is designed to take the guesswork out of choosing music for video content [2].

Generate Speech: Advanced Voice Synthesis

The Generate Speech feature offers comprehensive text-to-speech capabilities with extensive customization options. Users can input scripts of up to 7,500 characters, roughly equivalent to a 15- to 20-minute video, and select from 50 different AI voices, each tagged with an approximate age and gender, including nonbinary options [5].

The tool supports speech generation in 20 different languages and includes features for fine-tuning pronunciation and delivery. Users can add pauses, highlight sections where the tone should shift, and use a "fix pronunciation" tool that takes a phonetic breakdown of proper nouns and names [2].

Jay LeBoeuf, Adobe's head of AI audio, emphasized the tool's accessibility: "It's a way for us to provide lifelike speech to creators, to small business owners, to educators, to everybody that really just has a story to tell, and maybe they're not as comfortable as we are just pulling out a mic and talking" [5].

Licensing and Commercial Safety

A critical advantage of Adobe's new audio tools lies in their licensing structure. All music generated through Generate Soundtrack receives a universal license, which means it can be used for any purpose, indefinitely. Adobe trains its AI models on content it has permission to use for AI training, which should spare creators copyright strikes or takedowns on platforms like YouTube and Instagram [4].

The system also guards against imitation by rejecting prompts that reference specific artists. In a demo, Firefly rejected a prompt containing an artist's name as a violation of its user guidelines; because the model isn't trained on Taylor Swift's music, for example, it can't create music similar to hers [2].

LeBoeuf noted the timing significance: "This is a unique time in the world where music licensing is on the top of everybody's mind and creators are just either frustrated because they're trying to do the best thing for their content, or they're confused. So we're just hoping to remove the confusion" [5].

Summarized by Navi
