Adobe Launches 'Project Indigo': A Revolutionary Computational Photography App for iPhones

8 Sources

[1]

I Supercharged My iPhone Camera With Adobe's New Indigo App

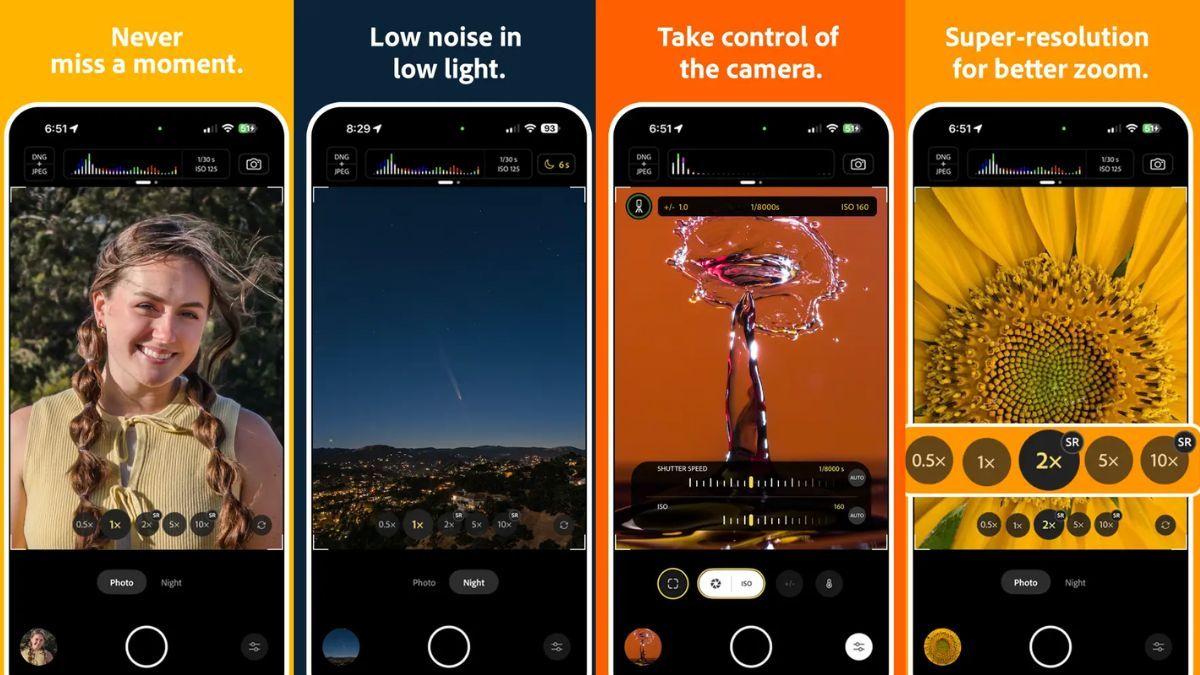

The iPhone 16 Pro has already impressed us with its amazing image quality, comfortably holding its own against other top-end Android phones including the Galaxy S25 Ultra and Pixel 9 Pro. And while the default camera app makes it easy to take quick snaps, it lacks features that enthusiastic photographers need. But where Apple left a gap, Adobe has rushed to fill in. Adobe's new camera app -- called Indigo -- offers granular control over camera settings like white balance and shutter speed while also packing AI-based features like resolution upscaling for 10x zoom, denoising and reflection removal tools. The app is available now for iPhone, so like the excitable photographer I am, I took it for a quick spin around Edinburgh. I love this first shot of a bird flying through Edinburgh's Royal Mile. I've taken advantage of two features here. Firstly, the manual white balance has allowed me to slightly warm the scene up, as I often find that the iPhone's default camera app tends to lean on the cool side. I love the tones captured here. Secondly, the app features a zero-lag shutter, which allowed me to quickly capture the moment the bird was perfectly in line with the church spire. It's a difficult shot to nail, but having no delay between pressing the shutter button and the image taking makes all the difference. Adobe says it achieves this by "constantly capturing raw images while the viewfinder is running," meaning that the image has technically already been captured when you press the button. For those of you wanting to snag high-drama shots of football games or your dog jumping for a frisbee, a zero-lag shutter is a boon. While the iPhone's base optical zoom maxes out at 5x, Adobe's Indigo app lets you digitally zoom in further with better quality. Using AI and combining multiple frames to upscale those images, they retain more detail than simply zooming in to 10x in the regular camera app. I used it here and I'm impressed at the overall clarity of the scene. 
I also ran the app's AI Denoise tool on the image. While there wasn't much image noise to begin with, the tool has the added benefit of sharpening up an image, which has really helped bring some extra fine detail to the blades of grass and tree bark. I'm impressed here, as the image doesn't look overly digitally sharpened, which can often be the case with these kinds of tools. Instead, the image looks natural and surprisingly clear for a zoomed-in shot. That said, it doesn't always seem to do a good job. The image from the iPhone's built-in camera app at 10x digital zoom (left) looks sharper here, with better contrast for a richer image. The same scene taken at 10x zoom using Indigo (right) looks quite low in contrast and flat by comparison. But that's not necessarily a bad thing overall. In fact, I found many of my test images had a natural look to them, with realistic shadow tones, highlights and colors. Phone software often makes images look too processed, especially on the various phones that try and lighten shadows too much (I'm looking at you, OnePlus 13), but the images Indigo produces have a great balance, even without any Lightroom editing after the capture. Speaking of which, it's no surprise that as an Adobe product, Indigo makes it easy to share the image directly to Adobe Lightroom for further editing. DNG raw files are generally easy to work with (you must have HDR editing enabled, and using profiles seems to immediately blow out any highlights), although the same file did not look as good when I opened it in Google's free Snapseed editor. It's likely there are simply early compatibility issues, and I expect this to improve in time. I've enjoyed using Indigo, and I'm looking forward to spending more time with it over the coming weeks. It definitely offers deeper functionality over Apple's default camera app, in particular the ability to adjust white balance and other settings. 
I also appreciate the natural look the images provide and the flexibility of editing in Lightroom. Then there are wider features like noise reduction, reflection reduction and a night mode which I've yet to try. Using Indigo as your camera does mean sacrificing Apple features like Live Photos and Photographic Styles, which are great for adding a filmic look to your images. I also don't love having to use a separate camera app, especially when I often flit between shooting still images and video, which is easy to do when using the default camera. In an ideal world I'd like to see Adobe work directly with Apple to implement these features into the core camera experience. But still, if you're a keen photographer and want to take more granular control over your images when you're out shooting, then Indigo is definitely worth installing and playing around with. Despite Adobe talking to CNET about its app back in 2022, it's still best considered as being in beta (the company calls it an "experimental camera app") with features like creative looks, portrait modes and even advanced tools like exposure and focus bracketing potentially on the cards for future updates. Also, an Android version is on the table "for sure." Given that it's currently free to use and requires no sign in with an Adobe subscription, it's well worth trying out.
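The zero-lag shutter described in this review, where frames are captured continuously while the viewfinder runs, can be illustrated with a small ring buffer. This is only a sketch of the general idea, not Adobe's implementation: the class name `ZeroLagBuffer`, its methods, and the buffer capacity are all hypothetical, and frames are stand-in strings rather than raw images.

```python
from collections import deque


class ZeroLagBuffer:
    """Toy model of a zero-shutter-lag capture loop (all names are hypothetical)."""

    def __init__(self, capacity=8):
        # A bounded deque drops the oldest frame once capacity is reached.
        self._frames = deque(maxlen=capacity)

    def on_viewfinder_frame(self, timestamp, frame):
        # Called for every frame produced while the viewfinder is running.
        self._frames.append((timestamp, frame))

    def on_shutter_press(self, press_time):
        # Pick the newest buffered frame captured at or before the press,
        # so the "photo" already exists when the button is tapped.
        candidates = [f for f in self._frames if f[0] <= press_time]
        if not candidates:
            return None
        return max(candidates, key=lambda f: f[0])


buf = ZeroLagBuffer(capacity=4)
for t in range(10):
    buf.on_viewfinder_frame(t, f"raw-{t}")

picked = buf.on_shutter_press(press_time=9)
print(picked)
```

Because the buffer is bounded, memory use stays constant no matter how long the viewfinder is open; the trade-off is that only the most recent few frames are ever available.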

[2]

Adobe gives the iPhone photo app a glow-up with SLR power - for free

Snapping photos through your mobile phone is certainly convenient. But it's not necessarily the best option, especially if you're looking to capture high-quality images. Now, Adobe has released a new camera app that aims to address some of the limitations of mobile photography. Available now in the App Store, the free Project Indigo app serves as a possible replacement for the built-in Camera app. But you'll need the right type of phone. The app works with all Pro and Pro Max iPhones starting with the iPhone 12, and with all non-Pro iPhones starting with the 14. Adobe stresses that Indigo performs some heavy-duty computing, so the newer your phone, the better. Besides being free, the app requires no Adobe account or sign-in. Indigo offers several features designed to improve the quality of your photos, Adobe said in its new research post. First, it offers manual controls so you can set the shutter speed, ISO, exposure, focus, temperature, and other elements. Second, it enhances your photos with a more natural SLR-like look. Third, it taps into computational photography. That third feature is the one worth exploring. By default, most mobile camera apps take a single shot of a scene or subject. That single resulting image often suffers from what Adobe refers to as the "smartphone look" -- one that's overly bright, low in contrast, high in color saturation, too smooth or soft, and sometimes overly sharp. That type of photo isn't necessarily what you want. Instead, computational photography typically underexposes the shot, and, more importantly, captures multiple images of the same scene. Those images are combined to reduce noise and other issues, producing a higher-quality image. Indigo even takes this process a couple of steps further. Here's how.
"First, we under-expose more strongly than most cameras," Adobe explained in its blog post. "Second, we capture, align, and combine more frames when producing each photo -- up to 32 frames... This means that our photos have fewer blown-out highlights and less noise in the shadows. Taking a photo with our app may require slightly more patience after pressing the shutter button than you're used to, but after a few seconds you'll be rewarded with a better picture." Indigo's computational process works no matter which format you use. Many experienced photographers like to shoot in RAW format rather than JPEG, as the former retains its high quality and offers more options to tweak the image. But RAW photos chew up a lot of storage. With Indigo, the computational method applies to both RAW and JPEG alike. The app offers two still-photo modes -- Photo and Night. Photo is the default. But if the scene is dark, the app will suggest that you switch to Night mode. Here, Indigo not only sets a longer exposure time to capture the darker scene, but it also takes more images each time you press the shutter. The challenge with longer exposures is that you need to avoid camera shake by keeping your hands steady or by using a tripod or other object to brace the phone. To learn more about Indigo, check out Adobe's research post. You can delve into all the finer details of the app, see how it works, and view a variety of captured photos. To use Indigo, make sure your iPhone is supported, then download the app from the App Store. Try the different modes, such as Photo and Night, and work with the manual controls. You might want to take the same photos with the built-in Camera app and with Indigo to compare the differences.
Looking to the future, Adobe said it would like to create an Android version of Indigo. Also on the drawing board are alternative "looks" for the app, including personalized ones. That means you'd be able to choose a specific look based on how you want to capture a scene. Other potential features include a portrait mode option with higher quality, as well as panoramic and video-recording modes. "This is the beginning of a journey for Adobe -- toward an integrated mobile camera and editing experience that takes advantage of the latest advances in computational photography and AI," Adobe said in its post. "Our hope is that Indigo will appeal to casual mobile photographers who want a natural SLR-like look for their photos, including when viewed on large screens; to advanced photographers who want manual control and the highest possible image quality; and to anyone, casual or serious, who enjoys playing with new photographic experiences."

[3]

Adobe launches a new 'computational photography' camera app for iPhones

Jay Peters is a news editor covering technology, gaming, and more. He joined The Verge in 2019 after nearly two years at Techmeme. Adobe has a new computational photography camera app for iPhones - and one of its creators, Marc Levoy, helped make the impressive computational photography features that made some of Google's earlier Pixel cameras shine. The new app, called Project Indigo, was released last week by Adobe Labs. It's free and available for the iPhone 12 Pro and Pro Max, iPhone 13 Pro and Pro Max, and all iPhone 14 models and above. (Though Adobe recommends using an iPhone 15 Pro or newer.) It also doesn't require logging into an Adobe account to use. "Instead of capturing a single photo, Indigo captures a burst of photos and combines them together to produce a high-quality photo with lower noise and higher dynamic range," according to the app's description. Indigo tries to produce a natural, "SLR-like" look for photos, and it also offers a bunch of manual controls like focus, shutter speed, ISO, and white balance. To really understand what's going on under the hood of Project Indigo, though, I highly recommend reading a detailed blog post from Levoy, now an Adobe Fellow who joined the company in 2020 to build a "universal camera app," and Florian Kainz, a senior scientist. The post covers things like why smartphone cameras are good, how its computational photography works, how it creates the natural look for its photos, and some details about its image processing pipeline. It is here I must confess that I am not a camera expert by any means. But even I found the post pretty interesting and informative. The photos in the post do look great, and Adobe has an album of photos you can browse, too. In the post, Levoy and Kainz say that Project Indigo will also be a testbed for technologies that might get added to other flagship products, like a button to remove reflections. 
And down the line, the team plans to build things like an Android version, a portrait mode, and even video recording. "This is the beginning of a journey for Adobe - towards an integrated mobile camera and editing experience that takes advantage of the latest advances in computational photography and AI," according to Levoy and Kainz. "Our hope is that Indigo will appeal to casual mobile photographers who want a natural SLR-like look for their photos, including when viewed on large screens; to advanced photographers who want manual control and the highest possible image quality; and to anyone - casual or serious - who enjoys playing with new photographic experiences."

[4]

Adobe Project Indigo is a new photo app from former Pixel camera engineers

Adobe launched its own take on how smartphone cameras should work this week with Project Indigo, a new iPhone camera app from some of the team behind the Pixel camera. The project combines the computational photography techniques that engineers Marc Levoy and Florian Kainz popularized at Google, with pro controls and new AI-powered features. In their announcement of the new app, Levoy and Kainz style Project Indigo as the better answer to typical smartphone camera complaints of limited controls and over-processing. Rather than using aggressive tone mapping and sharpening, Project Indigo is supposed to use "only mild tone mapping, boosting of color saturation, and sharpening." That's intentionally not the same as the "zero-processing" approach some third-party apps are taking. "Based on our conversations with photographers, what they really want is not zero-process but a more natural look -- more like what an SLR might produce," Levoy and Kainz write. The new app also has fully manual controls, "and the highest image quality that computational photography can provide," whether you want a JPEG or a RAW file at the end. Project Indigo achieves that by dramatically under-exposing the shots it combines together, and relying on a larger number of shots to combine -- up to 32 frames, according to Levoy and Kainz. The app also includes some of Adobe's more experimental photo features, like "Remove Reflections," which uses AI to eliminate reflections from photos. Levoy left Google in 2020, and joined Adobe a few months later to form a team with the express goal of building a "universal camera app". Based on his LinkedIn, Kainz joined Adobe that same year. At Google, Kainz and Levoy were often credited with popularizing the concept of computational photography, where camera apps rely more on software than hardware to produce quality smartphone photos. 
Google's success in that arena kicked off a camera arms race that's raised the bar everywhere, but also led to some pretty over-the-top photos. Project Indigo is a bit of a corrective, and also an interesting test of whether a third-party app that might produce better photos is enough to beat the default. Project Indigo is available to download for free now, and runs on either the iPhone 12 Pro and up, or the iPhone 14 and up. An Android version of the app is coming at some point in the future.

[5]

The brains that built the Pixel camera just launched 'Project Indigo,' not on Android yet [Gallery]

Adobe this week announced "Project Indigo," a new camera app that was built by the brains behind the Pixel camera, but it's not on Android just yet. To build the computational photography-focused camera found in its Pixel phones, Google enlisted the help of Marc Levoy and Florian Kainz. Both engineers worked with Google from 2014 through 2020, when they left following the Pixel 4. Kainz, in particular, was responsible for the impressive night-time photography on Pixel devices. After leaving Google, both Levoy and Kainz ended up at Adobe as detailed on their respective LinkedIn profiles, and now we're seeing the fruits of their efforts at the company. In a post, Marc Levoy says that his team at Adobe - called "Nextcam" - have launched "Project Indigo" (no, not that one) after five years of development. Levoy describes the project as a "computational photography camera app" that "offers a natural SLR-like look, full manual controls, the highest possible image quality, and new photographic experiences, including on-device removal of window reflections." A further blog post from Adobe dives into what sets "Project Indigo" apart. This starts, of course, with the computational photography that first made the Pixel camera so great. "Indigo" will capture and combine more frames than most cameras, while also "more strongly" under-exposing those photos. The result is an end picture that has "fewer blown-out highlights and less noise in the shadows" at the expense of taking a little bit longer to actually take the image. The app captures up to 32 frames, where Pixel Camera would only capture 15 frames as Android Authority points out. "Indigo" can also output both JPEG and raw images that still benefit from computational photography enhancements. The app also benefits from "local tone mapping" for improved HDR and, being an Adobe product, is "naturally compatible with Adobe Camera Raw and Lightroom." 
Users will also choose between "Photo" and "Night" modes, with the former benefitting from zero shutter lag, while the latter focuses on longer exposure times. "Night" mode is best used on a tripod and can detect when handshake is reduced to really expand its capture times for the best results. "Project Indigo" avoids AI in capturing more detail while zoomed in, using "multi-frame super-resolution" to increase detail. This means that the "extra detail in our super-resolution photos is real, not hallucinated." Finally, "Indigo" has pro controls over the focus, shutter speed, ISO, exposure, and white balance, as well as the number of frames captured in a burst. Computational photography is still employed with pro controls, but the user has additional control over it. The results, seen below, can clearly be absolutely stunning. There's also a "Removing Reflections" button that can remove glass and window reflections after an image is taken. While "Project Indigo" is just an "experimental camera app" for the time being, Adobe has made it freely available through the App Store for iPhone 14 and above (or iPhone 12 Pro and above). An Android version is "for sure" coming later, but there's no timeline there, nor any word on what devices will be able to use it. With Levoy and Kainz at the helm, it seems obvious that Pixel devices will be on that list, but it's impossible to say for certain just yet.

[6]

Adobe released an iPhone camera app with full manual controls - 9to5Mac

For photography enthusiasts, the prosumer camera app market has had no shortage of great options, with longtime favorites like Halide from Lux leading the pack. Now, Adobe has decided to enter the picture (get it?) with a free experimental app from the same team behind the original Google Pixel camera. If you remember the early days of the Google Pixel and its heavy focus on computational photography, you already have a sense of what this team, now at Adobe, cares about and is capable of. Its new app, Project Indigo, brings that same spirit to the iPhone, but with a few key differences. At its core, Project Indigo is Adobe's answer to the biggest complaints about smartphone photos today: "overly bright, low contrast, high color saturation, strong smoothing, and strong sharpening". Here's how the team behind Project Indigo presents the app: This is the beginning of a journey for Adobe - towards an integrated mobile camera and editing experience that takes advantage of the latest advances in computational photography and AI. Our hope is that Indigo will appeal to casual mobile photographers who want a natural SLR-like look for their photos, including when viewed on large screens; to advanced photographers who want manual control and the highest possible image quality; and to anyone - casual or serious - who enjoys playing with new photographic experiences. Project Indigo takes an interesting approach to multi-frame image capture, combining up to 32 underexposed frames for a single shot to reduce noise and preserve highlight detail. If that sounds like what your iPhone's default camera does with HDR or Night mode... it is. But Project Indigo pushes things further, with more control and more frames. The trade-off? You'll sometimes wait a few extra seconds after pressing the shutter, but the payoff is cleaner shadows, less noise, and more dynamic range. 
Another nice touch: Project Indigo applies this same multi-frame computational stack even when outputting RAW/DNG files, not just JPEGs. That's something most smartphone camera apps don't do. As you'd hope from an advanced camera app, Project Indigo brings manual control over focus, ISO, shutter speed, white balance (with temperature and tint), and exposure compensation. But it also "offers control over the number of frames in the burst", letting photographers have full control over capture time versus noise levels. There's even a "Long Exposure" mode, which is perfect for creative motion blur effects. Project Indigo also tackles digital zoom quality with multi-frame super-resolution. If you pinch zoom past 2× (or 10× on the telephoto lens of iPhone 16 Pro Max), the app automatically captures multiple slightly offset frames (using your natural handshake) and combines them to build a sharper final image. But unlike AI-processed super-res tools that sometimes invent detail, this technique uses real-world micro-shifts to reconstruct resolution, promising a much better result. Given that this is an Adobe project, it's no surprise that Project Indigo integrates tightly with Lightroom Mobile. After capture, you can send images straight into Lightroom for editing, whether you're working with the JPEG or the DNG. In fact, Adobe has even built in profile and metadata support that lets Lightroom know the difference between Project Indigo's SDR and HDR "looks", making it easy to toggle between them during editing. This is also Adobe Labs territory, meaning Project Indigo doubles as a testbed for features that may roll out more broadly across Adobe's ecosystem later. One cool early example: an AI-powered "Remove Reflections" mode that helps clean up photos shot through windows or glass. Project Indigo runs on all iPhone Pro and Pro Max models starting from iPhone 12, plus all non-Pro iPhones starting from iPhone 14. 
The app is free, requires no Adobe account, and is available now on the App Store. That said, given how CPU-intensive the app's image pipeline is, Adobe recommends running it on a newer iPhone for the best experience. Be sure to visit the Project Indigo website and check out the many lossless sample photos they made available to present the app. You can also download it from the App Store if you'd like to try it for yourself.
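The multi-frame super-resolution idea described in this article, where slightly offset frames are combined to rebuild detail, can be shown with a deliberately simplified one-dimensional toy. It assumes the offset of each frame is known exactly and is a whole pixel of the fine grid; a real handheld pipeline has to estimate sub-pixel shifts by aligning frames, and nothing here reflects how Adobe's code actually works. The function names are hypothetical.

```python
def capture_low_res(scene, offset, factor=2):
    # A low-resolution "frame": every `factor`-th sample of the scene,
    # starting at `offset` (standing in for a tiny hand movement).
    return scene[offset::factor]


def merge_frames(frames, factor=2):
    # Interleave the frames back onto the fine grid using their known offsets.
    length = sum(len(frame) for frame in frames.values())
    merged = [None] * length
    for offset, frame in frames.items():
        merged[offset::factor] = frame
    return merged


# A made-up row of pixel values at full resolution.
scene = [10, 40, 20, 80, 30, 60, 50, 90]

# Two half-resolution captures, shifted by one fine-grid pixel.
frames = {
    0: capture_low_res(scene, offset=0),
    1: capture_low_res(scene, offset=1),
}

merged = merge_frames(frames)
print(frames[0], frames[1], merged)
```

Each frame on its own holds only half the samples, but together they cover every position on the fine grid, which is why the recovered detail comes from measurements rather than from a model's guess.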

[7]

Adobe's New Computational iPhone Camera App Looks Incredible

Alongside new Photoshop and Lightroom updates, Adobe recently unveiled Project Indigo, a new computational photography camera app. As spotted by DPReview, Adobe quietly introduced Project Indigo last week with a blog post on its dedicated research website. Project Indigo is a new computational photography camera app that promises to leverage the significant advancements in computational photography made over the last decade-plus to help mobile photographers capture better-quality images. In their article, Adobe's Marc Levoy (Adobe Fellow) and Florian Kainz (Senior Scientist) explain how, despite giant leaps forward in smartphone image sensor and optical technologies, many hardcore photographers still lament phone photos because they cannot compete with the much larger sensors and lenses in dedicated cameras, embody a "smartphone look" that is overly processed, and generally don't provide dedicated photographers with all the manual controls they desire. Enter Project Indigo. The new app aims to "address some of these gaps" that photographers experience with their smartphone cameras. Project Indigo, which is available right now on the Apple App Store, offers full manual controls, a more natural "SLR-like" look to photos, and the best image quality that current computational photography technology can deliver, including in JPEG and RAW photo formats. Levoy and Kainz add that their new app also introduces "some new photographic experiences not available in other camera apps." Starting with the computational photography aspects of Project Indigo, Adobe researchers utilize intelligent processing to enhance smartphone image quality significantly. In the examples below of a low-light scene, the first photo was captured as a single image on an iPhone under 1/10 lux illumination. The second image is a handheld shot captured by Project Indigo. 
The app captured and merged 32 frames in quick succession, which means that each frame can push the sensor less, resulting in less noise while still achieving an appropriate final exposure. It's effectively like keeping the shutter open for much longer without having to hold the camera steady for a longer period. And yes, this also works with RAW photo output. "What's different about computational photography using Indigo? First, we under-expose more strongly than most cameras," the researchers write. "Second, we capture, align, and combine more frames when producing each photo -- up to 32 frames as in the example above. This means that our photos have fewer blown-out highlights and less noise in the shadows. Taking a photo with our app may require slightly more patience after pressing the shutter button than you're used to, but after a few seconds you'll be rewarded with a better picture." As for the "look" of smartphone photos that many photographers dislike -- something that some apps have worked hard to overcome -- many mobile camera apps use computational photography to excess. While high-dynamic range photos with clever tone mapping can expand the dynamic range that mobile shooters can capture, they can also result in distorted, unnatural-looking images. Adobe has already made considerable strides in the realm of more natural-looking HDR photos with its impressive Adaptive Color profile, which uses subtle semantically-aware mask-based adjustments to expand tonal range without making photos look weird. Project Indigo builds upon this work but can achieve even better results because, as a camera app itself, it can work alongside the specific camera settings in real-time. "Our look is similar to Adobe's Adaptive Color profile, making our camera app naturally compatible with Adobe Camera Raw and Lightroom. 
That said, we know which camera, exposure time, and ISO was used when capturing your photo, so our look can be more faithful than Adobe's profile to the brightness of the scene before you," Adobe writes. While Adobe has provided many Project Indigo sample photos that can be properly displayed in this article, many more are best viewed in HDR. To view these photos, visit Adobe's Project Indigo Lightroom album. Adobe recommends viewing this album on an HDR-compatible display using Google Chrome, but it will work in some other browsers. Adobe notes that the album may not display correctly in Safari, however. Many modern smartphones, like the iPhone 15/16 Pro and Pro Max models, feature several high-quality rear cameras with different focal lengths (fields of view). Across various focal length options, however, phones utilize digital crops, meaning they just use less of the image sensor and then, in some cases, digitally scale the images to be larger. In Project Indigo, when the user pinches to zoom, the app uses multi-frame super-resolution, which promises to "restore much of the image quality lost by digital scaling." It works similarly to a pixel-shift mode on a dedicated camera, taking advantage of natural hand movement to capture the same scene from a series of slightly different perspectives. The app then combines these different frames into one larger, sharper one that features more detail than a single photo. And unlike AI-based super-resolution, the extra detail is real -- pulled from actual images. Project Indigo's third key objective is to offer mobile photographers the professional controls they get on their dedicated camera systems. Project Indigo includes pro controls built "from the ground up for a natively computational camera," including controls over focus, shutter speed, ISO, exposure compensation, and white balance. 
However, since Project Indigo relies heavily on burst photography for some of its features, it also includes fine-grained control over the number of frames per burst. It also includes a "Long Exposure" button that replaces the app's merging method to capture photos with the same dreamy, smooth appearance as a long-exposure shot on a dedicated camera. This is great for taking pictures of moving water, for example, and can also be used for creative lighting effects and traditional single-frame night photography. The complete Project Indigo article offers much more in-depth technical information, including the app's image processing pipeline as it relates to photographic formats, demosaicing, and real-time image editing. It's an excellent read for photo technology enthusiasts. Project Indigo will be continually updated and may serve as a testbed for Adobe technologies in development for other apps. "This is the beginning of a journey for Adobe -- towards an integrated mobile camera and editing experience that takes advantage of the latest advances in computational photography and AI," Adobe writes. "Our hope is that Indigo will appeal to casual mobile photographers who want a natural SLR-like look for their photos, including when viewed on large screens; to advanced photographers who want manual control and the highest possible image quality; and to anyone -- casual or serious -- who enjoys playing with new photographic experiences." Project Indigo is available now for free on the Apple App Store. It works on all Pro and Pro Max iPhones starting from series 12 and on all non-Pro models from iPhone series 14 and newer. Since it is a work-in-progress, it does not require an Adobe account to use. An Android version and in-app presets are in development. The team also says it is working on solutions for exposure and focus bracketing, plus new multi-frame modes. Users are encouraged to download Project Indigo and try it for themselves. Adobe wants their feedback, too.

[8]

Adobe Launches a New Camera App for iPhone With Full Manual Controls

Adobe has launched yet another app for the iPhone, building upon its recent releases of Firefly and Photoshop on the App Store. The US-based company has introduced Project Indigo, a dedicated camera app developed by Adobe Labs which leverages computational photography to capture up to 32 frames and combine them into a single photo. It uses artificial intelligence (AI) to store images in both standard dynamic range (SDR) and high dynamic range (HDR). Adobe says Project Indigo is compatible with the Camera Raw and Lightroom apps. In a research article, Adobe detailed its new Project Indigo app. With Project Indigo, Adobe aims to tackle the issue of the "smartphone look" -- images which are overly bright, have low contrast, high colour saturation, high smoothing, and strongly sharpened details. While these appear fine on a small screen, seeing them on a bigger display can result in an "unrealistic look", as per the company. This is where Project Indigo comes in. It is available as a free-to-download experimental app on the App Store for iPhone. The app offers full manual controls, with tools like aperture, exposure time, ISO, focus, and white balance, with the latter also having separate control over temperature and tint. Opening the app brings up two modes -- Photo and Night -- meant for daylight and night photography, respectively. The latter is said to use longer exposure to minimise noise and capture more frames with each press of the shutter. It also improves stabilisation and reduces hand-shake when capturing long-exposure images at night. Adobe says Project Indigo brings a more natural "SLR-like" look to images and in the highest possible quality. The app is powered by computational photography and is claimed to under-expose images more strongly than most cameras. Further, it also captures, aligns, and combines up to 32 frames, resulting in photos with fewer blown-out highlights, less noise in shadows, and a better picture overall. 
Employing the aforementioned methods means less spatial denoising is required, explains Adobe. This is claimed to preserve more natural textures, even if it means leaving a bit of noise in the image. The benefits of computational photography are applied to both JPEG and raw photos.

As per the company, Project Indigo also improves pinch-zoom on iPhone by employing multi-frame super resolution. It is claimed to restore image quality which is usually lost to digital scaling when the camera crops into the centre part of the image while zooming in. The app captures multiple images of the same scene and combines them into a single image, known as a "super resolution photo". Adobe says it features more detail than what is present in a single image.

Project Indigo is compatible with Apple's Pro models starting with the iPhone 12 series. It is also available on iPhone 14 and later non-Pro models. At the moment, the app is completely free to use and does not require sign-in. Adobe says it will also introduce a similar app for Android devices in time.
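The idea behind multi-frame super resolution can be illustrated with a toy one-dimensional example. This is a deliberately idealised sketch, not Adobe's algorithm: it assumes each low-resolution frame samples the scene at a known sub-pixel offset, whereas a real pipeline has to estimate those offsets by aligning the frames. All names and values are hypothetical.

```python
def capture_shifted(scene, factor, offset):
    """Low-res frame: keep every `factor`-th sample, starting at `offset`."""
    return scene[offset::factor]

def shift_and_add(frames, offsets, factor):
    """Place each frame's samples back on the fine grid at its known offset."""
    fine = [None] * sum(len(f) for f in frames)
    for frame, offset in zip(frames, offsets):
        for i, value in enumerate(frame):
            fine[offset + i * factor] = value
    return fine

scene = [0, 1, 4, 9, 16, 25, 36, 49, 64, 81, 100, 121]  # "true" fine detail
factor = 3
offsets = [0, 1, 2]  # assumed known; real apps must estimate these

frames = [capture_shifted(scene, factor, o) for o in offsets]

# Digital zoom from one frame: each sample is simply repeated.
upscaled = [v for v in frames[0] for _ in range(factor)]

# Multi-frame merge: the three frames together cover every fine-grid position.
restored = shift_and_add(frames, offsets, factor)

print("single-frame upscale:", upscaled)
print("multi-frame merge:   ", restored)
```

In this toy setup the merged result reproduces the original samples exactly, while the single upscaled frame only repeats what it already had. Real scenes, sensor noise, and imperfect alignment make the gain smaller in practice.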


Adobe's new 'Project Indigo' app, developed by former Google Pixel camera engineers, brings advanced computational photography and AI features to iPhones, promising SLR-like image quality and manual controls.

Adobe Unveils 'Project Indigo': A Game-Changer in Mobile Photography

Adobe has launched a groundbreaking new camera app called 'Project Indigo', developed by former Google Pixel camera engineers Marc Levoy and Florian Kainz. This free app, currently available for select iPhone models, aims to revolutionize smartphone photography by combining advanced computational techniques with manual controls and AI-powered features [1].

Computational Photography at Its Core

Source: Gadgets 360

Project Indigo leverages sophisticated computational photography methods to produce high-quality images. Unlike traditional smartphone cameras that capture a single shot, Indigo takes up to 32 frames and combines them, resulting in photos with reduced noise and higher dynamic range [2]. This approach allows for better handling of challenging lighting conditions and produces images with fewer blown-out highlights and less noise in shadows [3].

Natural, SLR-like Image Quality

A key focus of Project Indigo is to address common complaints about smartphone photography, such as over-processing and unnatural looks. The app aims to produce images with a more natural, SLR-like appearance by using mild tone mapping, subtle color saturation boosting, and minimal sharpening [4]. This approach sets it apart from both default camera apps and other third-party apps that opt for zero processing.

Advanced Features and Manual Controls

Source: CNET

Project Indigo offers a range of features catering to both casual and advanced photographers:

- Manual controls for shutter speed, ISO, exposure, focus, and white balance [1]
- Zero-lag shutter for capturing fast-moving subjects [1]
- AI-based features like resolution upscaling for 10x zoom and reflection removal [1]
- Night mode for low-light photography [5]
- Compatibility with Adobe Camera Raw and Lightroom for seamless editing workflows [5]
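The zero-lag shutter in the list above relies, according to Adobe, on "constantly capturing raw images while the viewfinder is running". One common way to build that behaviour is a fixed-size ring buffer, sketched below. The class name, the frame rate, and the buffer capacity are illustrative assumptions, not details of Indigo's implementation.

```python
from collections import deque

class ZeroLagBuffer:
    """Keeps the most recent viewfinder frames so a shutter press can look back."""

    def __init__(self, capacity):
        # deque with maxlen drops the oldest frame automatically when full.
        self._frames = deque(maxlen=capacity)

    def on_viewfinder_frame(self, timestamp_ms, frame):
        self._frames.append((timestamp_ms, frame))

    def on_shutter_press(self, press_time_ms):
        """Return the newest buffered frame captured at or before the press."""
        candidates = [f for f in self._frames if f[0] <= press_time_ms]
        if not candidates:
            return None
        return max(candidates, key=lambda f: f[0])

buffer = ZeroLagBuffer(capacity=4)
for t in range(0, 281, 33):  # hypothetical viewfinder stream at about 30 fps
    buffer.on_viewfinder_frame(t, f"raw@{t}ms")

# The press arrives at 280 ms; the frame from 264 ms is already in memory.
shot = buffer.on_shutter_press(press_time_ms=280)
print(shot)
```

Because the frame already exists when the button is pressed, the saved image reflects the moment the photographer saw rather than a moment shortly afterwards.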


Availability and Future Plans

Currently, Project Indigo is available for free on the App Store for iPhone 12 Pro and newer Pro models, as well as non-Pro models from the iPhone 14 onwards, with no Adobe account required [2]. Adobe has confirmed plans for an Android version in the future, along with potential additions such as portrait mode, video recording, and personalized "looks" [3].

Source: Engadget

Impact on Mobile Photography Landscape

The launch of Project Indigo represents a significant development in the mobile photography space. By bringing together the expertise of renowned computational photography pioneers and Adobe's imaging prowess, the app has the potential to raise the bar for smartphone camera capabilities [4]. It challenges both default camera apps and existing third-party solutions, offering a compelling alternative for users seeking more control and higher image quality from their mobile devices.

Summarized by

Navi
