The Rise of the 'Cybernetic Teammate': How AI is Transforming Human Collaboration in the Workplace

3 Sources

[1]

Researchers Explore How AI Can Strengthen, Not Replace, Human Collaboration

Researchers from Carnegie Mellon University's Tepper School of Business are learning how AI can be used to support teamwork rather than replace teammates. Anita Williams Woolley is a professor of organizational behavior. She researches collective intelligence, or how well teams perform together, and how artificial intelligence could change workforce dynamics. Now, Woolley and her colleagues are helping to figure out exactly where and how AI can play a positive role. "I'm always interested in technology that can help us become a better version of ourselves individually," Woolley said, "but also collectively, how can we change the way we think about and structure work to be more effective?" Woolley collaborated with technologists and others in her field to develop Collective HUman-MAchine INtelligence (COHUMAIN), a framework that seeks to understand where AI fits within the established boundaries of organizational social psychology. The researchers behind COHUMAIN caution against treating AI like any other teammate. Instead, they see it as a partner that works under human direction, with the potential to strengthen existing capabilities or relationships. "AI agents could create the glue that is missing because of how our work environments have changed, and ultimately improve our relationships with one another," Woolley said. The research that makes up the COHUMAIN architecture emphasizes that while AI integration into the workplace may take shape in ways we don't yet understand, it won't change the fundamental principles behind organizational intelligence, and likely can't fill in all of the same roles as humans. For instance, while AI might be great at summarizing a meeting, it's still up to people to sense the mood in the room or pick up on the wider context of the discussion. 
Organizations have the same needs as before, including a structure that allows them to tap into each human team member's unique expertise. Woolley said that artificial intelligence systems may best serve in "partnership" or facilitation roles rather than managerial ones, like a tool that can nudge peers to check in with each other, or provide the user with an alternate perspective. With so much collaboration happening through screens, AI tools might help teams strengthen connections between coworkers. But those same tools also raise questions about what's being recorded and why. "People have a lot of sensitivity, rightly so, around privacy. Often you have to give something up to get something, and that is true here," Woolley said. The level of risk that users feel, both socially and professionally, can change depending on how they interact with AI, according to Allen Brown, a Ph.D. student who works closely with Woolley. Brown is exploring where this tension shows up and how teams can work through it. His research focuses on how comfortable people feel taking risks or speaking up in a group. Brown said that, in the best case, AI could help people feel more comfortable speaking up and sharing new ideas that might not be heard otherwise. "In a classroom, we can imagine someone saying, 'Oh, I'm a little worried. I don't know enough for my professor, or how my peers are going to judge my question,' or, 'I think this is a good idea, but maybe it isn't.' We don't know until we put it out there." Since AI relies on a digital record that might or might not be kept permanently, one concern is that a human might not know which interactions with an AI will be used for evaluation. "In our increasingly digitally mediated workspaces, so much of what we do is being tracked and documented," Brown said. 
"There's a digital record of things, and if I'm made aware that, 'Oh, all of a sudden our conversation might be used for evaluation,' we actually see this significant difference in interaction." Even when they thought their comments might be monitored or professionally judged, people still felt relatively secure talking to another human being. "We're talking together. We're working through something together, but we're both people. There's kind of this mutual assumption of risk," he explained. The study found that people felt more vulnerable when they thought an AI system was evaluating them. Brown wants to understand how AI can be used to create the opposite effect -- one that builds confidence and trust. "What are those contexts in which AI could be a partner, could be part of this conversational communicative practice within a pair of individuals at work, like a supervisor-supervisee relationship, or maybe within a team where they're working through some topic that might have task conflict or relationship conflict?" Brown said. "How does AI help resolve the decision-making process or enhance the resolution so that people actually feel increased psychological safety?" At the individual level, Tepper researchers are also learning how the way in which AI explains its reasoning affects how people use and trust it. Zhaohui (Zoey) Jiang and Linda Argote are studying how people react to different kinds of AI systems -- specifically, ones that explain their reasoning (transparent AI) versus ones that don't explain how they make decisions (black box AI). "We see a lot of people advocating for transparent AI," Jiang said, "but our research reveals an advantage of keeping the AI a black box, especially for a high ability participant." One of the reasons for this, she explained, is overconfidence and distrust in skilled decision-makers. 
"For a participant who is already doing a good job independently at the task, they are more prone to the well-documented tendency of AI aversion. They will penalize the AI's mistake far more than the humans making the same mistake, including themselves," Jiang said. "We find that this tendency is more salient if you tell them the inner workings of the AI, such as its logic or decision rules." People who struggle with decision-making actually improve their outcomes when using transparent AI models that show off a moderate amount of complexity in their decision-making process. "We find that telling them how the AI is thinking about this problem is actually better for less-skilled users, because they can learn from AI decision-making rules to help improve their own future independent decision-making." While transparency is proving to have its own use cases and benefits, Jiang said the most surprising findings are around how people perceive black box models. "When we're not telling these participants how the model arrived at its answer, participants judge the model as the most complex. Opacity seems to inflate the sense of sophistication, whereas transparency can make the very same system seem simpler and less 'magical,'" she said. Both kinds of models vary in their use cases. While it isn't yet cost‑effective to tailor an AI to each human partner, future systems may be able to self-adapt their representation to help people make better decisions, she said. "It can be dynamic in a way that it can recognize the decision-making inefficiencies of that particular individual that it is assigned to collaborate with, and maybe tweak itself so that it can help complement and offset some of the decision-making inefficiencies."

[2]

The 'cybernetic teammate': How AI is rewriting the rules of business collaboration | Fortune

A novel experiment at Procter & Gamble reveals that artificial intelligence isn't just a tool -- it's becoming a genuine teammate that can match human collaboration. For decades, the holy grail of business performance has been effective teamwork. We've organized entire companies around the premise that collaboration beats individual effort -- that two heads are better than one. But what happens when one of those "heads" is artificial intelligence? A remarkable field experiment involving 776 professionals at Procter & Gamble -- and led by Harvard's D^3 Institute, where I'm an executive fellow -- has fundamentally challenged our assumptions about teamwork, expertise, and the future of collaborative work. The results suggest we're witnessing the emergence of what researchers call the "cybernetic teammate": AI that doesn't just assist but actively participates in the collaborative process. The P&G experiment was elegantly simple yet profound. Cross-functional teams of commercial and R&D professionals were randomly assigned to work on real product innovation challenges in four different configurations: individuals working alone; traditional two-person teams; individuals with AI; and teams augmented with AI. The headline finding is striking: Individuals working with AI delivered measurable performance improvements -- nearly 40% gains -- that elevated them to the same level as traditional human teams. In other words, AI appears capable of replicating the fundamental benefits we've long attributed to humans in terms of collaboration, including the innovative power of multiple human perspectives. Think about what this means. For generations, we've structured organizations around teams because collaboration allows us to pool different expertise, catch blind spots, and generate better solutions than individuals working alone. The P&G study shows that AI can provide these same collaborative benefits to a single person. But here's where the story gets even more interesting. 
While AI-enabled individuals could match traditional teams, AI-augmented cross-functional teams delivered results that were in an entirely different league. When researchers examined the top 10% of solutions -- the breakthrough ideas that could drive real competitive advantage -- AI-enhanced cross-functional teams were three times more likely to produce them. This wasn't a marginal improvement. The combination of diverse human expertise working together with AI created a multiplicative effect that neither human-only teams nor AI-enabled individuals could achieve. It suggests that the future of high-stakes innovation lies not in replacing human collaboration with AI, but in supercharging cross-functional teams with artificial intelligence. The P&G experiment effectively shows that just as AI enhances the performance of individual workers, it can do the same for entire teams, with unmatched quality results. One of the significant advantages of working in teams is the access to complementary expertise provided by diverse team members. The experiment discovered that AI could fulfill this role quite well. Individuals, either technical or commercial, when working without AI predictably created solutions that favored their own expertise domain. However, those individuals working with AI produced solutions that were as balanced as cross-functional teams working with or without AI. R&D specialists began proposing more commercially viable solutions while commercial professionals developed technically sounder approaches. AI acted as a bridge, helping team members access and integrate perspectives outside their domain expertise. Few business commonplaces are as oft repeated as the need to "break down silos." Yet doing so remains difficult in practice for most organizations. Now, with the help of AI, businesses will be better able to fundamentally change how cross-functional teams operate. 
Instead of gathering experts who advocate for their functional perspective and then compromise, AI enables teams where each member can think holistically across functions. In the case of the P&G experiment, the result was more technically feasible and commercially attractive solutions from the ground up, rather than negotiated settlements between competing viewpoints -- just the tangible "breaking down of silos" that leaders so often seek and can only poorly approximate through reshuffling of reporting lines. For organizations struggling with the classic challenges of cross-functional collaboration -- territorial disputes, communication gaps, and suboptimal compromises -- AI offers a pathway to genuine integration. This experiment also challenges everything we thought we knew about technology's impact on workplace satisfaction. Far from creating a cold, mechanical work experience, AI collaboration enhanced positive emotions in ways that rival human teamwork itself. The data is striking. Individuals working with AI showed a 46% increase in positive emotions -- excitement, energy, and enthusiasm -- compared to those working alone. But AI-augmented teams experienced an even more dramatic 64% boost in positive emotions. Simultaneously, AI reduced negative emotions like anxiety and frustration by approximately 23% across both individual and team settings. What makes this finding interesting is that it reveals AI filling a role we never expected: that of the emotionally supportive and motivational partner. Traditionally, one of the key justifications for teamwork has been its psychological benefits -- the energy that comes from collaboration, the reduced stress of shared responsibility, the excitement of building on each other's ideas. The P&G experiment shows that AI can replicate many of these emotional benefits. Perhaps most tellingly, participants who experienced these positive emotional responses also reported significantly higher expectations for future AI use. 
This creates a virtuous cycle: Positive AI experiences drive greater adoption, which leads to more sophisticated AI interaction skills, which in turn generates even better outcomes and more positive experiences. This emotional dimension helps explain why AI adoption often exceeds initial expectations once people actually use it. It's not just about efficiency gains -- it's about AI making work more enjoyable and engaging. These findings reveal specific organizational design principles that forward-thinking companies should implement immediately: Reimagine your innovation architecture. The traditional model of assembling large, diverse teams for every innovation challenge is now obsolete. Instead, deploy AI-augmented cross-functional teams as your primary innovation unit. These teams combine the boundary-busting power of AI with diverse human expertise to consistently produce breakthrough solutions. This approach can reduce the overhead coordination of traditional innovation processes while maximizing the probability of generating top-tier ideas from the start. Transform cross-functional team dynamics. Stop tolerating the dysfunction that plagues most cross-functional teams -- the territorial battles, the compromised solutions, the endless coordination meetings. AI can eliminate these friction points by enabling each team member to think and contribute across functional boundaries. This isn't just about adding AI to existing teams; it's about redesigning how cross-functional teams operate from the ground up. AI as a cybernetic teammate can bridge silos and bring in missing expertise, enabling teams to work faster and better. Train 'T-shaped' AI collaborators. The P&G findings reveal that effective AI collaboration requires a new type of professional -- one who combines deep domain expertise with sophisticated AI interaction skills. 
Employees can no longer rely on broad, shallow knowledge; they need to deepen their functional expertise while developing the ability to "dance" with AI across domains. The vertical stroke of the "T" becomes even more critical as it provides the substantive knowledge needed to guide, challenge, and refine AI outputs. Meanwhile, the horizontal stroke expands dramatically as AI enables professionals to contribute meaningfully beyond their core expertise. This dual development -- deeper specialization paired with AI-enabled boundary spanning -- creates professionals who can extract maximum value from human-AI collaboration. Companies that build these competencies first will have sustainable advantages in innovation speed and quality. Restructure project economics and timelines. If one AI-enabled person can match a traditional team's output while working 16% faster, your resource allocation needs to be adjusted accordingly. Projects that previously required months of coordination between functions can now be completed by smaller, AI-augmented teams in weeks. This isn't about cost-cutting -- it's about dramatically accelerating time-to-market while maintaining or improving output quality. Design for the emotional multiplier effect. The emotional benefits of AI collaboration create a compounding advantage. Employees who have positive AI experiences become AI advocates, driving organic adoption throughout the organization. Conversely, poor initial AI experiences create resistance that's difficult to overcome. Invest heavily in the first 90 days of AI implementation -- training, support, and carefully curated use cases that ensure positive emotional responses from the start. In the training, have employees directly experience how AI enables them to perform better and develop new skills, rather than reinforcing fears of deskilling and replacement. We're witnessing the early stages of a fundamental shift in how knowledge work gets done. 
AI isn't just automating tasks completed by individual workers; it's also now capable of participating in the collaborative processes that drive innovation. This doesn't mean human collaboration becomes obsolete. Rather, it suggests we're entering an era where the most powerful problem-solving units will be human-AI ensembles that combine the best of both worlds: human intuition, creativity, and domain expertise with AI's vast knowledge base, pattern recognition, and ability to rapidly explore solution spaces. The companies that figure out how to orchestrate these cybernetic teams -- where AI truly functions as a teammate rather than just a tool -- will have a significant competitive advantage in the innovation economy. The future belongs to those who can master the art of human-machine collaboration.

[3]

The Cybernetic Teammate: When One Human Plus AI Equals Two | PYMNTS.com

The research from Johns Hopkins Carey Business School and MIT Sloan School of Management found that people working with AI produced 60% more output than those working without it, while maintaining the same quality. They also exchanged 23% fewer messages, showing less time spent coordinating and more time completing tasks. The pattern suggests that AI is reshaping collaboration. People focus on context, reasoning and judgment. Machines handle repetition, data and scale. The result is more work done in less time, with fewer steps. As PYMNTS reported, early adopters are finding that workers remain accountable for decisions and oversight while algorithms accelerate the mechanical parts of production. Many describe the shift as moving from delegation to partnership. The concept, known as "cybernetic teammate," was introduced by Harvard researchers studying how AI changes the division of labor inside teams. Unlike earlier waves of automation that replaced labor, this model expands it. Humans no longer use tools passively; they direct systems that learn and adapt alongside them. Procter & Gamble tested the model in its innovation labs. The company studied 776 professionals developing new product ideas. Individuals using AI performed as well as two-person teams without it. Teams with AI produced the most creative results, according to Harvard Business School data. Artificial intelligence also changed how people worked together, the research found. Engineers proposed more commercially viable concepts. Marketers created more technically informed solutions. Workers reported greater enthusiasm and less frustration, according to Harvard's Working Knowledge. The technology seemed to reduce barriers between creative and technical roles, improving cross-functional collaboration. If one person with AI can equal two without it, the meaning of productivity shifts. Coordination costs decline as algorithms handle drafting, analysis and scheduling. 
The most efficient team may soon be the human-AI pair rather than the traditional group. For executives, that changes how output is measured. Headcount no longer tracks capability. A single employee with AI can manage work that once required multiple people. Yet the transition is rarely smooth. The MIT Sloan Review cautions that companies adopting AI without redesigning roles often see a "productivity paradox." Workflows, incentives and reporting lines stay the same while technology changes how the work itself happens. Productivity dips before rising because employees must learn how to collaborate with algorithms rather than compete with them. AI adoption often produces a J-shaped performance curve, where output drops before it rises. Firms that introduce AI into existing roles without adjusting workflows or incentives often see efficiency stagnate. Studies also find that firms integrating AI into old structures rarely sustain gains. Those that rebuild jobs around human-AI pairings, assigning creative, interpretive and computational tasks to whichever side does them best, recover faster and outperform peers. The research concludes that productivity depends less on adopting AI than on reorganizing work around it. PYMNTS noted a similar pattern across industries. AI now handles drafting, summarization and data analysis, freeing employees for tasks that demand reasoning and originality. The strongest results occur when firms embed human supervision into every step, ensuring that speed does not come at the expense of accuracy or accountability. A Columbia University study found teams performed best when humans treated AI as a capable partner rather than a tool. When workers either distrusted or over-relied on the system, performance declined and stress indicators rose. The researchers concluded that success depends on calibrated trust: humans must understand both the strengths and limits of their AI partners.

New research from major universities and Fortune 500 companies reveals that AI is evolving from a simple tool to a genuine collaborative partner, with studies showing AI-human partnerships can match traditional team performance while enhancing cross-functional collaboration.

The Emergence of AI as a Collaborative Partner

A growing body of research is reshaping how we understand artificial intelligence's role in the workplace. Rather than simply automating tasks or replacing human workers, AI is emerging as what researchers call a "cybernetic teammate," a collaborative partner that can replicate benefits traditionally associated with human teamwork [1][2].

This paradigm shift is supported by extensive research from leading institutions including Carnegie Mellon University's Tepper School of Business, Harvard's D³ Institute, Johns Hopkins Carey Business School, and MIT Sloan School of Management. The findings suggest that AI isn't just enhancing individual productivity – it's fundamentally changing the nature of collaboration itself.

Landmark Research Reveals Transformative Results

The most compelling evidence comes from a field experiment involving 776 professionals at Procter & Gamble, led by Harvard's D³ Institute. The study tested four different work configurations: individuals working alone, traditional two-person teams, individuals with AI, and teams augmented with AI [2].

The results were striking. Individuals working with AI delivered nearly 40% performance improvements, elevating them to the same level as traditional human teams. This suggests that AI can provide the same collaborative benefits (including diverse perspectives, error-catching, and solution generation) that have long been attributed exclusively to human teamwork [2].

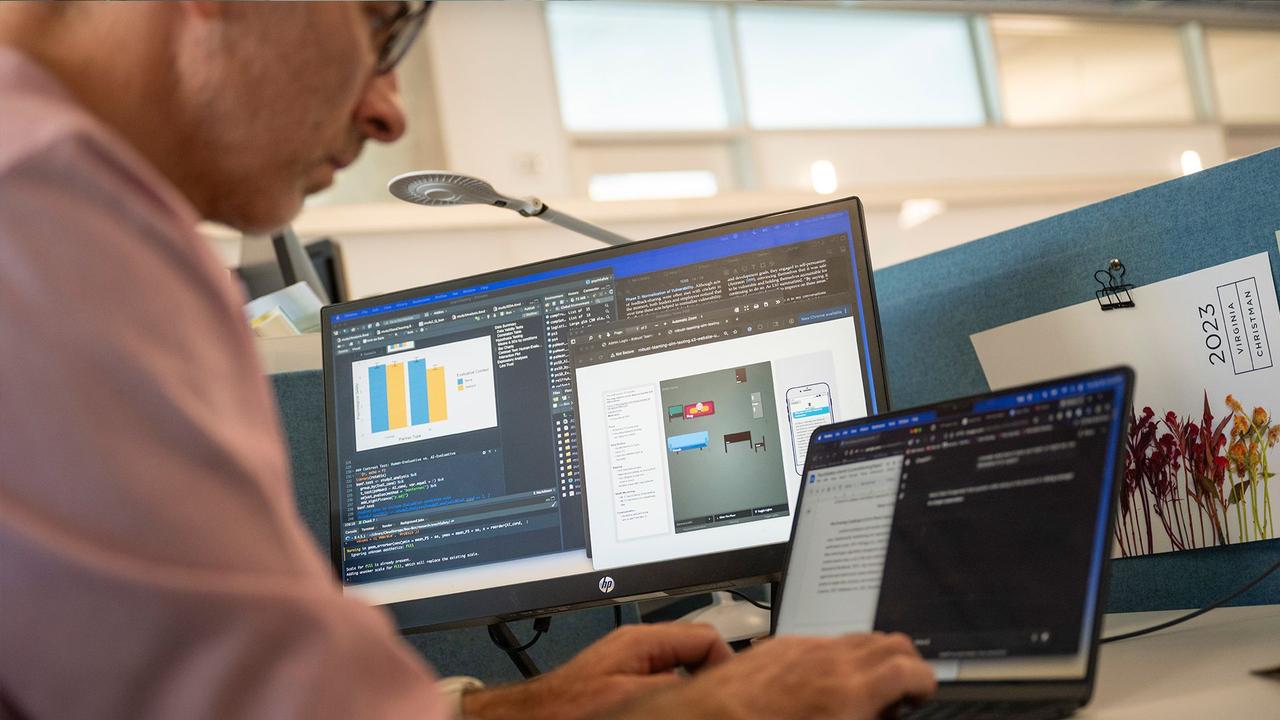

Source: CMU

Additional research from Johns Hopkins and MIT found that people working with AI produced 60% more output while maintaining quality, and exchanged 23% fewer messages, indicating more efficient task completion with less coordination overhead [3].

Breaking Down Organizational Silos

One of the most significant findings relates to cross-functional collaboration. In the P&G experiment, AI-augmented cross-functional teams were three times more likely to produce solutions ranked in the top 10%, the breakthrough ideas that could drive competitive advantage. AI also helped individuals access expertise outside their domain: R&D specialists began proposing more commercially viable solutions, while commercial professionals developed more technically sound approaches [2].

Anita Williams Woolley, a professor of organizational behavior at Carnegie Mellon, emphasizes that AI agents could "create the glue that is missing because of how our work environments have changed." Her research through the COHUMAIN (Collective HUman-MAchine INtelligence) framework suggests that AI works best in partnership or facilitation roles rather than managerial ones [1].

Addressing Privacy and Trust Concerns

The transition to AI collaboration isn't without challenges. Research by Allen Brown, a Ph.D. student working with Woolley, explores how people's comfort levels change when interacting with AI systems, particularly regarding privacy and evaluation concerns. The study found that people felt more vulnerable when they thought an AI system was evaluating them, compared to interactions with human colleagues [1].

A Columbia University study reinforced the importance of calibrated trust, finding that teams performed best when humans treated AI as a capable partner rather than a tool. Performance declined when workers either distrusted or over-relied on the system [3].

Organizational Transformation Required

The research consistently shows that simply introducing AI into existing workflows isn't sufficient. Companies must redesign roles and processes around human-AI partnerships to realize the full benefits. MIT Sloan Review warns of a "productivity paradox" where firms adopting AI without restructuring often see initial performance drops before improvements emerge [3].

Successful implementation requires rebuilding jobs around human-AI pairings, with creative, interpretive, and computational tasks assigned to whichever side does them best. Firms that take this approach recover faster and outperform peers that maintain traditional structures [3].

Summarized by Navi
