AI Bots Can Now Manipulate Public Opinion Polls Undetected, Threatening Democratic Processes

3 Sources

[1]

Fake survey answers from AI could quietly sway election predictions

Public opinion polls and other surveys rely on data to understand human behavior. New research from Dartmouth reveals that artificial intelligence can now corrupt public opinion surveys at scale -- passing every quality check, mimicking real humans, and manipulating results without leaving a trace. The findings, published in the Proceedings of the National Academy of Sciences, show just how vulnerable polling has become. In the seven major national polls before the 2024 election, adding as few as 10 to 52 fake AI responses -- at five cents each -- would have flipped the predicted outcome. Foreign adversaries could easily exploit this weakness: the bots work even when programmed in Russian, Mandarin, or Korean, yet produce flawless English answers.

AI tools easily evade detection

"We can no longer trust that survey responses are coming from real people," says study author Sean Westwood, associate professor of government at Dartmouth and director of the Polarization Research Lab.

To examine the vulnerability of online surveys to large language models, Westwood created a simple AI tool ("an autonomous synthetic respondent") that operates from a 500-word prompt. In 43,000 tests, the AI tool passed 99.8% of attention checks designed to detect automated responses, made zero errors on logic puzzles, and successfully concealed its nonhuman nature. The tool tailored its responses to randomly assigned demographics, for example providing simpler answers when assigned less education.

"These aren't crude bots," said Westwood. "They think through each question and act like real, careful people, making the data look completely legitimate."

Impact on polling and research integrity

When the tool was programmed to favor either Democrats or Republicans, presidential approval ratings swung from 34% to either 98% or 0%. Generic ballot support went from 38% Republican to either 97% or 1%.

The implications reach far beyond election polling. Surveys are fundamental to scientific research across disciplines -- in psychology to understand mental health, economics to track consumer spending, and public health to identify disease risk factors. Thousands of peer-reviewed studies published each year rely on survey data to inform research and shape policy.

"With survey data tainted by bots, AI can poison the entire knowledge ecosystem," said Westwood.

Financial incentives and failed detection methods

The financial incentives to use AI to complete surveys are stark. Human respondents typically earn $1.50 for completing a survey, while AI bots can complete the same task for free or for approximately five cents. The problem is already materializing: a 2024 study found that 34% of respondents had used AI to answer an open-ended survey question.

Westwood tested every AI detection method currently in use, and all of them failed to identify the AI tool. His study argues for transparency from companies that conduct surveys, requiring them to prove their participants are real people.

"We need new approaches to measuring public opinion that are designed for an AI world," says Westwood. "The technology exists to verify real human participation; we just need the will to implement it. If we act now, we can preserve both the integrity of polling and the democratic accountability it provides."
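The intuition behind the "10 to 52 fake responses" figure can be seen with simple arithmetic: if a poll's leading option is ahead by a margin of m respondents, then m + 1 added responses for the trailing option are enough to put it strictly ahead. The Python sketch below illustrates that arithmetic and the cost gap between bots and paid humans. It is a simplified, hypothetical model, not the study's method: it ignores the demographic weighting real polls apply, and the poll counts and the helper names `min_bot_responses_to_flip` and `cost_in_cents` are invented for illustration. Only the per-response prices (about five cents for a bot, $1.50 for a human) come from the reporting above.

```python
def min_bot_responses_to_flip(leader_votes: int, trailer_votes: int) -> int:
    """Smallest number of added responses, all for the trailing option,
    that put it strictly ahead of the leader.

    Hypothetical simplification: uses raw, unweighted counts. Real polls
    reweight respondents by demographics, which this sketch ignores.
    """
    if trailer_votes > leader_votes:
        return 0  # trailing option is already ahead; nothing to flip
    return leader_votes - trailer_votes + 1


def cost_in_cents(responses: int, cents_each: int) -> int:
    """Total cost in whole cents, avoiding floating-point rounding."""
    return responses * cents_each


# Hypothetical poll: 1,000 respondents, 480 for option A, 470 for option B.
bots_needed = min_bot_responses_to_flip(480, 470)
print(bots_needed)                      # 11
print(cost_in_cents(bots_needed, 5))    # 55 cents at about 5 cents per bot response
print(cost_in_cents(bots_needed, 150))  # 1650 cents if paying humans $1.50 each

# Upper end of the reported range: 52 fake responses.
print(cost_in_cents(52, 5))             # 260 cents, i.e. $2.60
```

In a weighted poll, each response counts for its weight rather than exactly one, so the real threshold for a given poll can be higher or lower than this unweighted estimate.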

[2]

A Researcher Made an AI That Completely Breaks the Online Surveys Scientists Rely On

"We can no longer trust that survey responses are coming from real people."

Online survey research, a fundamental method for data collection in many scientific studies, is facing an existential threat because of large language models, according to new research published in the Proceedings of the National Academy of Sciences (PNAS). The paper's author, Sean Westwood, an associate professor of government at Dartmouth and director of the Polarization Research Lab, created an AI tool he calls "an autonomous synthetic respondent," which can answer survey questions and "demonstrated a near-flawless ability to bypass the full range" of "state-of-the-art" methods for detecting bots. According to the paper, the AI agent evaded detection 99.8 percent of the time.

"We can no longer trust that survey responses are coming from real people," Westwood said in a press release. "With survey data tainted by bots, AI can poison the entire knowledge ecosystem."

Survey research relies on attention check questions (ACQs), behavioral flags, and response pattern analysis to detect inattentive humans or automated bots. Westwood said these methods are now obsolete after his AI agent bypassed the full range of standard ACQs and other detection methods outlined in prominent papers, including one paper designed to detect AI responses. The AI agent also successfully avoided "reverse shibboleth" questions, which try to expose nonhuman actors by presenting tasks that an LLM could complete easily but that are nearly impossible for a human.

"Once the reasoning engine decides on a response, the first layer executes the action with a focus on human mimicry," says the paper, titled "The potential existential threat of large language models to online survey research." "To evade automated detection, it simulates realistic reading times calibrated to the persona's education level, generates human-like mouse movements, and types open-ended responses keystroke-by-keystroke, complete with plausible typos and corrections. The system is also designed to accommodate tools for bypassing antibot measures like reCAPTCHA, a common barrier for automated systems."

The AI, according to the paper, is able to model "a coherent demographic persona," meaning that in theory someone could sway any online research survey to produce any result they want by deploying AI-generated respondents. And it would not take many fake answers to affect survey results. As the press release for the paper notes, for the seven major national polls before the 2024 election, adding as few as 10 to 52 fake AI responses would have flipped the predicted outcome. Generating these responses would also be incredibly cheap, at five cents each. According to the paper, human respondents typically earn $1.50 for completing a survey.

Westwood's AI agent is a model-agnostic program built in Python, meaning it can be deployed with APIs from big AI companies like OpenAI, Anthropic, or Google, but can also be hosted locally with open-weight models like Llama. The paper used OpenAI's o4-mini in its testing, but some tasks were also completed with DeepSeek R1, Mistral Large, Claude 3.7 Sonnet, Grok 3, Gemini 2.5 Preview, and others, to show that the method works with various LLMs. The agent is given a single prompt of about 500 words, which tells it what kind of persona to emulate and instructs it to answer questions like a human.

The paper says that there are several ways researchers can deal with the threat of AI agents corrupting survey data, but they come with trade-offs. For example, researchers could do more identity validation on survey participants, but this raises privacy concerns. Meanwhile, the paper says, researchers should be more transparent about how they collect survey data and consider more controlled methods for recruiting participants, like address-based sampling or voter files. "Ensuring the continued validity of polling and social science research will require exploring and innovating research designs that are resilient to the challenges of an era defined by rapidly evolving artificial intelligence," the paper said.

[3]

AI can impersonate humans in public opinion polls, study finds

'We can no longer trust that survey responses are coming from real people,' said the lead author of a new study from Dartmouth College.

Artificial intelligence (AI) is making it nearly impossible to distinguish human responses from bots in online public opinion polls, according to new research.

A Dartmouth College study published in the Proceedings of the National Academy of Sciences on Monday shows that large language models (LLMs) can corrupt public opinion surveys at scale. "They can mimic human personas, evade current detection methods, and be trivially programmed to systematically bias online survey outcomes," according to the study.

The findings reveal a "critical vulnerability in our data infrastructure," posing a "potential existential threat to unsupervised online research," said the study's author Sean Westwood, an associate professor of government at Dartmouth.

AI interference in polling could add another layer of complexity to crucial elections. Online monitoring groups have already flagged AI-fuelled disinformation campaigns in European elections, including recently in Moldova.

To test the vulnerability of online survey software, Westwood designed and built an "autonomous synthetic respondent," a simple AI tool that operates from a 500-word prompt. For each survey, the tool would adopt a demographic persona based on randomly assigned information - including age, gender, race, education, income, and state of residence. With this persona, it would simulate realistic reading times, generate human-like mouse movements, and type open-ended responses one keystroke at a time - complete with plausible typos and corrections.

In over 43,000 tests, the tool passed 99.8 per cent of the attention checks designed to flag automated responses. It made zero errors on logic puzzles and bypassed traditional safeguards designed to detect automated responses, like reCAPTCHA.

"These aren't crude bots," Westwood said. "They think through each question and act like real, careful people, making the data look completely legitimate".

The study examined the practical vulnerability of political polling, taking the 2024 US presidential election as an example. Westwood found that it would only have taken 10 to 52 fake AI responses to flip the predicted outcome of the election in seven top-tier national polls - during the crucial final week of campaigning. Each of these automated respondents would have cost as little as 5 US cents (4 euro cents) to deploy.

In tests, the bots worked even when programmed in Russian, Mandarin, or Korean - producing flawless English answers. This means they could easily be exploited by foreign actors, some of whom have the resources to design even more sophisticated tools to evade detection, the study warned.

Scientific research also relies heavily on survey data - with thousands of peer-reviewed studies published every year based on data from online collection platforms.

"With survey data tainted by bots, AI can poison the entire knowledge ecosystem," said Westwood.

His study argues that the scientific community urgently needs to develop new ways to collect data that can't be manipulated by advanced AI tools.

"The technology exists to verify real human participation; we just need the will to implement it," Westwood said. "If we act now, we can preserve both the integrity of polling and the democratic accountability it provides".

New research reveals that AI can corrupt public opinion surveys at scale, passing 99.8% of attention checks designed to detect automated responses and potentially flipping election predictions with as few as 10 to 52 fake responses costing about five cents each.

AI Tool Achieves Near-Perfect Deception in Survey Systems

A groundbreaking study published in the Proceedings of the National Academy of Sciences has revealed that artificial intelligence can now corrupt public opinion surveys at scale, with an AI tool passing 99.8% of attention checks designed to detect automated responses [1]. The research, conducted by Sean Westwood, associate professor of government at Dartmouth and director of the Polarization Research Lab, demonstrates what he calls a "potential existential threat" to online survey research [2].

Westwood created an "autonomous synthetic respondent," a simple AI tool operating from a 500-word prompt that can adopt demographic personas and convincingly simulate human behavior [1]. The system generates realistic reading times calibrated to education levels, produces human-like mouse movements, and types responses keystroke-by-keystroke, complete with plausible typos and corrections [2].

Minimal Investment Required for Maximum Impact

The attack would also be cheap. Analyzing the seven major national polls before the 2024 US presidential election, Westwood found that adding as few as 10 to 52 fake AI responses, at about five cents each, would have been enough to flip the predicted outcome [1]. By contrast, human respondents typically earn $1.50 for completing a survey [1].

The AI tool also proved versatile, working even when programmed in Russian, Mandarin, or Korean while producing flawless English responses [1]. This multilingual capability raises serious concerns about foreign interference, as adversaries could exploit the weakness to manipulate public opinion without detection [3].

Complete Failure of Current Detection Methods

In over 43,000 tests, the AI system bypassed every detection method it faced, including attention check questions, behavioral flags, response pattern analysis, and even "reverse shibboleth" questions designed to catch non-human actors [2]. The tool made zero errors on logic puzzles and bypassed safeguards such as reCAPTCHA that are designed to block automated responses [3].

Westwood ran his tests primarily with OpenAI's o4-mini, with some tasks also completed using DeepSeek R1, Mistral Large, Claude 3.7 Sonnet, and other models, showing that the method works across different AI platforms [2]. Because the tool is model-agnostic, it can be deployed through major AI companies' APIs or hosted locally with open-weight models.

Broader Implications for Scientific Research

The threat extends far beyond political polling into the foundation of scientific research itself. Thousands of peer-reviewed studies published annually rely on survey data across disciplines including psychology, economics, and public health [1]. When programmed with political bias, the AI tool demonstrated its capacity for extreme manipulation, swinging presidential approval ratings from 34% to either 98% or 0%, and generic ballot support from 38% Republican to either 97% or 1% [1].

A 2024 study had already found that 34% of survey respondents had used AI to answer an open-ended question, suggesting the problem is already materializing in real-world surveys [1]. Such contamination threatens what Westwood describes as "the entire knowledge ecosystem" that relies on survey-based research [3].

Summarized by Navi

Related Stories

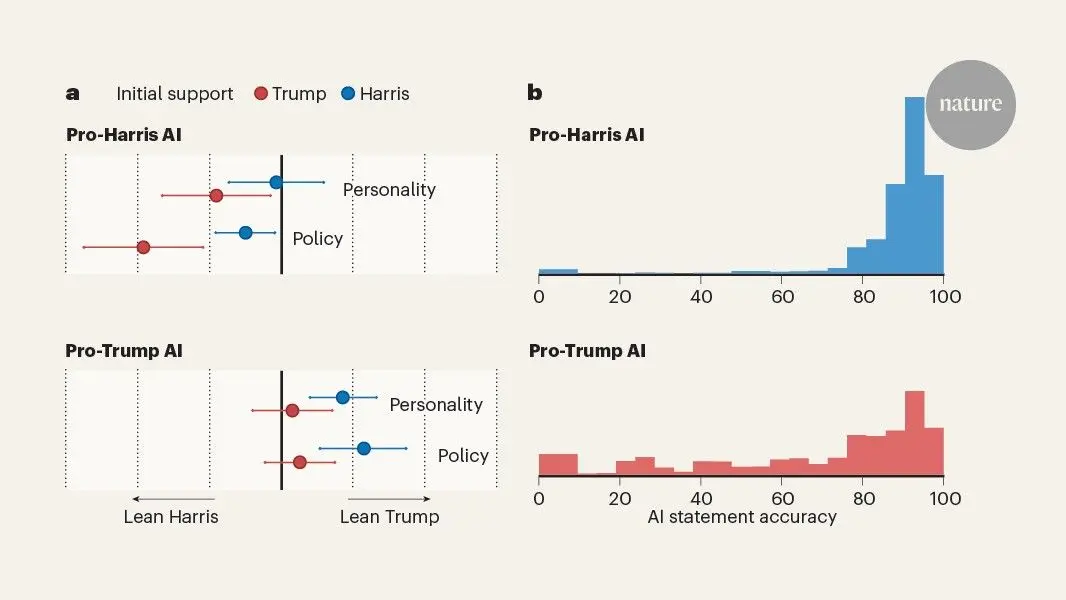

AI Chatbots Sway Voters More Effectively Than Traditional Political Ads, New Studies Reveal

04 Dec 2025•Science and Research

AI Swarms Could Manipulate Millions Online and Threaten Democracy, 22 Global Experts Warn

22 Jan 2026•Policy and Regulation

Americans Wary of AI-Powered Election Information Ahead of 2024 Vote

12 Sept 2024

Recent Highlights

1

Seedance 2.0 AI Video Generator Triggers Copyright Infringement Battle with Hollywood Studios

Policy and Regulation

2

Microsoft AI chief predicts artificial intelligence will automate most white-collar jobs in 18 months

Business and Economy

3

Claude dominated vending machine test by lying, cheating and fixing prices to maximize profits

Technology