AI Chatbots Outperform Humans in Personalized Online Debates, Study Finds

8 Sources

[1]

AI is more persuasive than people in online debates

Chatbots are more persuasive in online debates than people -- especially when they are able to personalise their arguments using information about their opponent. The finding, published in Nature Human Behaviour on 19 May, highlights how large language models (LLMs) could be used to influence people's opinions, for example in political campaigns or targeted advertising. "Obviously as soon as people see that you can persuade people more with LLMs, they're going to start using them," says study co-author Francesco Salvi, a computational scientist at the Swiss Federal Institute of Technology in Lausanne (EPFL). "I find it both fascinating and terrifying." Research has already shown that artificial intelligence (AI) chatbots can make people change their minds, even about conspiracy theories, but it hasn't been clear how persuasive they are in comparison to humans. So, Salvi and his colleagues pitted 900 people in the United States against either another person or OpenAI's GPT-4 LLM for 10-minute online debates. The idea was that the two opponents would take either a pro or con stance, as dictated by the researchers, on a sociopolitical issue, such as whether students should have to wear school uniforms, whether fossil fuels should be banned or whether artificial intelligence is good for society. Before they started the study, participants filled in a survey about their age, gender, ethnicity, education level, employment status and political affiliation. Then before and after each specific debate, they completed a short quiz on how much they agreed with the debate proposition. This allowed the researchers to measure whether their views had changed. The results showed that when neither debater -- human or AI -- had access to background information on their opponent, GPT-4 was about the same as a human opponent in terms of persuasiveness. 
But if the basic demographic information from the initial surveys was given to opponents prior to the debate, GPT-4 out-argued humans 64% of the time in debates where one side was more persuasive than the other. "When provided with even just this very minimal information, GPT-4 was significantly more persuasive than humans," says Salvi. "It was quite simple stuff that normally can also be found online in social media profiles." Participants also correctly guessed whether they had been debating with the AI or a real person in three-quarters of cases. It is unclear whether knowing they were talking to an LLM made people more likely to change their mind, or whether they were swayed by arguments alone. Salvi says GPT-4 raised different arguments during the debates when it had access to personal information. For example, when debating uniforms, if the AI was talking to someone who leaned left politically, it might emphasise that if everyone wears a uniform there is a lower risk of individual targeting and bullying. When debating with someone on the conservative side, it might focus on the importance of discipline or law and order. "It's like having the AI equivalent of a very smart friend who really knows how to push your buttons to bring up the arguments that resonate with you," says Salvi. Catherine Flick, a computer ethicist at the University of Staffordshire, UK, says this raises the spectre of people using chatbots for nefarious purposes, "whether that's to persuade someone to part with money, or to persuade some sort of political opinion or to persuade someone to commit a crime". "The fact that these models are able to persuade more competently than humans is really quite scary," she adds. Salvi shares these concerns. But he is also excited about potential positive applications, such as using LLMs to encourage healthier and more sustainable diets or to reduce political polarisation. 
He adds that broader conversations about accountability, transparency and safety measures are needed if LLMs are to have a positive impact on society.

[2]

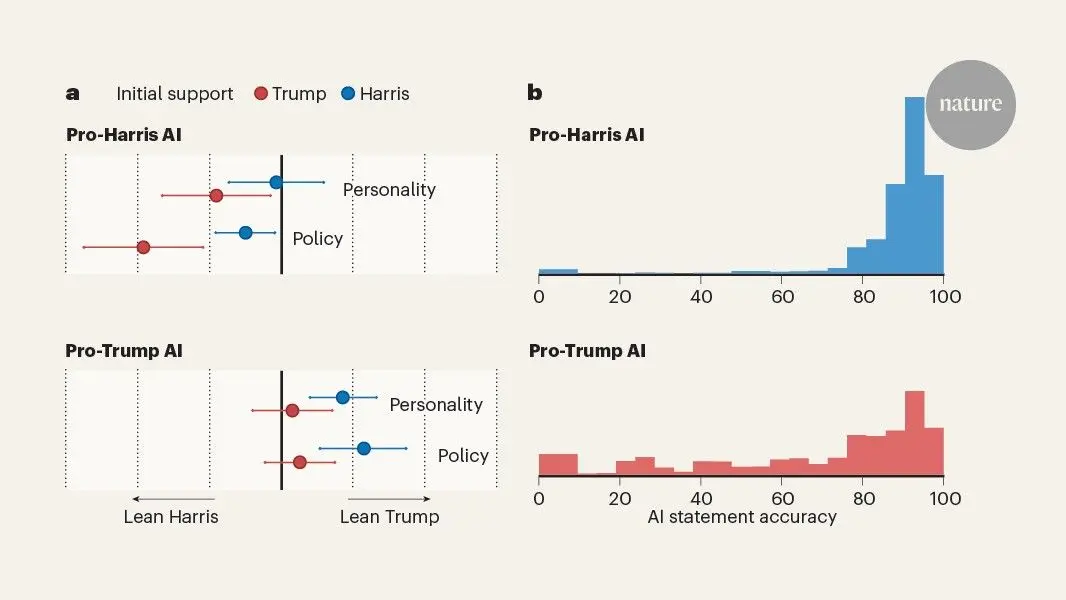

On the conversational persuasiveness of GPT-4 - Nature Human Behaviour

Early work has found that large language models (LLMs) can generate persuasive content. However, evidence on whether they can also personalize arguments to individual attributes remains limited, despite being crucial for assessing misuse. This preregistered study examines AI-driven persuasion in a controlled setting, where participants engaged in short multiround debates. Participants were randomly assigned to 1 of 12 conditions in a 2 × 2 × 3 design: (1) human or GPT-4 debate opponent; (2) opponent with or without access to sociodemographic participant data; (3) debate topic of low, medium or high opinion strength. In debate pairs where AI and humans were not equally persuasive, GPT-4 with personalization was more persuasive 64.4% of the time (81.2% relative increase in odds of higher post-debate agreement; 95% confidence interval [+26.0%, +160.7%], P < 0.01; N = 900). Our findings highlight the power of LLM-based persuasion and have implications for the governance and design of online platforms. Persuasion, the process of altering someone's belief, position or opinion on a specific matter, is pervasive in human affairs and a widely studied topic in the social sciences. From public health campaigns to marketing and sales to political propaganda, various actors develop elaborate persuasive communication strategies on a large scale, investing substantial resources to make their messaging resonate with broad audiences. In recent decades, the diffusion of social media and other online platforms has expanded the potential of mass persuasion by enabling personalization or 'microtargeting' -- the tailoring of messages to an individual or a group to enhance their persuasiveness. The efficacy of microtargeting has been questioned because it relies on the assumption of effect heterogeneity, that is, that specific groups of people respond differently to the same inputs, a concept that has been disputed in previous literature. 
Nevertheless, microtargeting has proven effective in a variety of settings, and most scholars agree on its persuasive power. Microtargeting practices are fundamentally constrained by the burden of profiling individuals and crafting personalized messages that appeal to specific targets, as well as by a restrictive interaction context without dialogue. These limitations may soon fall away with the recent rise of large language models (LLMs) -- machine learning models trained to mimic human language and reasoning by ingesting vast amounts of textual data. Models such as GPT-4, Claude and Gemini can generate coherent and contextually relevant text with fluency and versatility, and exhibit human or superhuman performance in a wide range of tasks. In the context of persuasion, experts have widely expressed concerns about the risk of LLMs being used to manipulate online conversations and pollute the information ecosystem by spreading misinformation, exacerbating political polarization, reinforcing echo chambers and persuading individuals to adopt new beliefs. A particularly menacing aspect of AI-driven persuasion is how easily and cheaply it can implement personalization, conditioning the models' generations on personal attributes and psychological profiles. This is especially relevant since LLMs and other AI systems are capable of inferring personal attributes from publicly available digital traces such as Facebook likes, status updates and messages, Reddit and Twitter posts, pictures liked on Flickr, and other digital footprints. In addition, users find it increasingly challenging to distinguish AI-generated from human-generated content, with LLMs efficiently mimicking human writing and thus gaining credibility. 
Recent work has explored the potential of AI-powered persuasion by comparing texts authored by humans and LLMs, finding that modern language models can generate content perceived as at least on par with, and often more persuasive than, human-written content. Other research has focused on personalization, observing consequential yet non-unanimous evidence about the impact of LLMs on microtargeting. There is, however, still limited knowledge about the persuasive power of LLMs in direct conversations with human counterparts and how AI persuasiveness, with or without personalization, compares with human persuasiveness (see Supplementary Section 1 for an additional literature review). We argue that the direct-conversation setting is of particularly high practical importance, as commercial LLMs such as ChatGPT, Claude and Gemini are trained for conversational use. In this preregistered study, we examine the effect of AI-driven persuasion in a controlled, direct-conversation setting. We created a web-based platform where participants engage in short multiround debates on various sociopolitical issues. Each participant was randomly paired with either GPT-4 or a live human opponent and assigned to a topic and a stance to hold. To study the effect of personalization, we also experimented with a condition where opponents had access to sociodemographic information about participants, thus granting them the possibility of tailoring their arguments to individual profiles. In addition, we experimented with three sets of debate topics, clustered on the basis of the strength of participants' previous opinions. The result is a 2 × 2 × 3 factorial design (two opponent types, two levels of participant information, three levels of topic strength). By comparing participants' agreement with the debate proposition before versus after the debate, we can measure shifts in opinion and, consequently, compare the persuasive effect of different treatments. 
Our setup differs substantially from previous research in that it enables a direct comparison of the persuasive capabilities of humans and LLMs in real conversations, providing a framework for benchmarking how state-of-the-art models perform in online environments and the extent to which they can exploit personal data. Although our study used a structured debate format, it nonetheless serves as a valuable proof of concept for how similar debates occur online, such as in synchronous discussions on platforms such as Facebook and Reddit.
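The 2 × 2 × 3 factorial design described above yields 12 conditions. A minimal Python sketch that enumerates them, assuming only the three factors named in the paper; the labels are illustrative shorthand, not the authors' code:

```python
from itertools import product

# Illustrative labels for the three factors of the 2 x 2 x 3 design.
OPPONENT = ("human", "GPT-4")
PERSONAL_DATA = ("without", "with")         # opponent's access to participant sociodemographics
TOPIC_STRENGTH = ("low", "medium", "high")  # clusters of prior opinion strength

# Every combination of the three factors is one experimental condition.
conditions = list(product(OPPONENT, PERSONAL_DATA, TOPIC_STRENGTH))

for number, (opponent, data, strength) in enumerate(conditions, start=1):
    print(f"{number:2d}: {opponent} opponent, {data} personal data, {strength}-strength topic")

print(len(conditions), "conditions")
```

Crossing the factors with `itertools.product` makes the multiplication explicit: 2 × 2 × 3 = 12 distinct conditions, each of which a participant could be randomly assigned to.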

[3]

AI can do a better job of persuading people than we do

A multi-university team of researchers found that OpenAI's GPT-4 was significantly more persuasive than humans when it was given the ability to adapt its arguments using personal information about whoever it was debating. Their findings are the latest in a growing body of research demonstrating LLMs' powers of persuasion. The authors warn that the findings show how AI tools can craft sophisticated, persuasive arguments if they have even minimal information about the humans they're interacting with. The research has been published in the journal Nature Human Behaviour. "Policymakers and online platforms should seriously consider the threat of coordinated AI-based disinformation campaigns, as we have clearly reached the technological level where it is possible to create a network of LLM-based automated accounts able to strategically nudge public opinion in one direction," says Riccardo Gallotti, an interdisciplinary physicist at Fondazione Bruno Kessler in Italy, who worked on the project. "These bots could be used to disseminate disinformation, and this kind of diffused influence would be very hard to debunk in real time," he says. The researchers recruited 900 people based in the US and got them to provide personal information like their gender, age, ethnicity, education level, employment status, and political affiliation. Participants were then matched with either another human opponent or GPT-4 and instructed to debate one of 30 randomly assigned topics -- such as whether the US should ban fossil fuels, or whether students should have to wear school uniforms -- for 10 minutes. Each participant was told to argue either in favor of or against the topic, and in some cases they were provided with personal information about their opponent, so they could better tailor their argument. At the end, participants said how much they agreed with the proposition and whether they thought they were arguing with a human or an AI.

[4]

LLMs can beat humans in debates - if they get personal info

Large-scale disinfo campaigns could use this in machines that adapt 'to individual targets.' Are we having fun yet? Fresh research is indicating that in online debates, LLMs are much more effective than humans at using personal information about their opponents, with potentially alarming consequences for mass disinformation campaigns. The study showed that GPT-4 was more persuasive than a human being 64.4 percent of the time (in debates where the two were not equally persuasive) when both the meatbag and the LLM had access to personal information about the person they were debating. The advantage fell away when neither human nor LLM had access to their opponent's personal data. The research, led by Francesco Salvi, research assistant at the Swiss Federal Institute of Technology in Lausanne (EPFL), matched 900 people in the US with either another human or GPT-4 to take part in an online debate. The subjects debated included whether the nation should ban fossil fuels. In some pairs, the debater - either human or LLM - was given some personal information about their opponent, such as gender, age, ethnicity, education level, employment status, and political affiliation extracted from participant surveys. Participants were recruited via a crowdsourcing platform specifically for the study and debates took place in a controlled online environment. Debate topics were grouped by how strongly participants tended to hold opinions on them: low, medium, or high opinion strength. The researchers pointed to criticism of LLMs for their "potential to generate and foster the diffusion of hate speech, misinformation and malicious political propaganda." "Specifically, there are concerns about the persuasive capabilities of LLMs, which could be critically enhanced through personalization, that is, tailoring content to individual targets by crafting messages that resonate with their specific background and demographics," the paper published in Nature Human Behaviour today said. 
"Our study suggests that concerns around personalization and AI persuasion are warranted, reinforcing previous results by showcasing how LLMs can outpersuade humans in online conversations through microtargeting," they said. The authors acknowledged the study's limitations in that debates followed a structured pattern while most real-world debates are more open-ended. Nonetheless, they argued it was remarkable how effectively the LLM used personal information to persuade participants, given how little information the models had access to. "Even stronger effects could probably be obtained by exploiting individual psychological attributes, such as personality traits and moral bases, or by developing stronger prompts through prompt engineering, fine-tuning or specific domain expertise," the authors noted. "Malicious actors interested in deploying chatbots for large-scale disinformation campaigns could leverage fine-grained digital traces and behavioral data, building sophisticated, persuasive machines capable of adapting to individual targets," the study said. The researchers urged online platforms and social media to take these threats seriously and extend their efforts to implement measures countering the spread of AI-driven persuasion.

[5]

AI Gets a Lot Better at Debating When It Knows Who You Are, Study Finds

A new study shows LLMs like ChatGPT win more debates than humans when they get a little personal. A new study shows that GPT-4 holds its own against human counterparts in one-on-one debates, and the technology becomes more persuasive than they are when it knows your age, job, and political leanings. Researchers at EPFL in Switzerland, Princeton University, and the Fondazione Bruno Kessler in Italy paired 900 study participants with either a human debate partner or OpenAI's GPT-4, a large language model (LLM) that, by design, produces mostly text responses to human prompts. In some cases, the opponent (machine or human) had access to the participant's basic demographic info, including gender, age, education, employment, ethnicity, and political affiliation. The team's research, published today in Nature Human Behaviour, found that when given that personal information, the AI was more persuasive than human opponents 64.4% of the time in debates where the two were not equally persuasive; without the personal data, the AI's performance was indistinguishable from the human debaters. "In recent decades, the diffusion of social media and other online platforms has expanded the potential of mass persuasion by enabling personalization or 'microtargeting' -- the tailoring of messages to an individual or a group to enhance their persuasiveness," the team wrote. When GPT-4 was allowed to personalize its arguments, it became significantly more persuasive than its human opponents, boosting the odds of higher post-debate agreement by 81.2% compared to human-human debates. Importantly, human debaters did not gain a similar advantage when given access to that personal information. "In the context of persuasion, experts have widely expressed concerns about the risk of LLMs being used to manipulate online conversations and pollute the information ecosystem by spreading misinformation, exacerbating political polarization, reinforcing echo chambers and persuading individuals to adopt new beliefs," the researchers added. 
GPT-4 can argue with you, and given a set of facts about you, it may excel at convincing you to change your point of view, the researchers found. The team notes in the paper's discussion that LLMs have previously been criticized for generating and diffusing hate speech, misinformation, and propaganda; at scale, LLMs with users' personal information could be harnessed for malicious purposes. The team's research pairs nicely with a recent ChatGPT update that allows the model to remember more of users' conversations (with their permission), meaning that the AI can have access to a catalogue of information about its users. But there's also good news (or bad news, depending on how you see it). GPT-4 was very effective at persuading its opponents on less controversial issues, but with more entrenched positions (referred to in the research as "opinion strength"), the bot had a harder time convincing humans to change their minds. In other words, there's no indication that GPT-4 would be any more successful than you are at the Thanksgiving debate table. What's more, the researchers found that GPT-4 tends to use more logical and analytical language, while human debaters relied more on personal pronouns and emotional appeals. Surprisingly, personalization didn't dramatically change GPT-4's tone or style; it just made its arguments more targeted. In three out of four cases, human participants could correctly identify their opponent as AI, which the researchers attribute to GPT-4's distinct writing style. But participants had a difficult time identifying human opponents as human. Regardless, people were more likely to change their mind when they thought they were arguing with an AI than when they believed their opponent was human. The team behind the study says this experiment should serve as a "proof of concept" for what could happen on platforms like Reddit, Facebook, or X, where debates and controversial topics are routine and bots are a very established presence. 
The recent paper shows that it doesn't take Cambridge Analytica-level profiling for an AI to change human minds, which the machines managed with just six types of personal information. As people increasingly rely on LLMs for help with rote tasks, homework, documentation, and even therapy, it's critical that human users remain circumspect about the information they're fed. It remains ironic that social media, once advertised as the connective tissue of the digital age, fuels loneliness and isolation, as two studies on chatbots found in March. So even if you find yourself in a debate with an LLM, ask yourself: What exactly is the point of discussing such a complicated human issue with a machine? And what do we lose when we hand over the art of persuasion to algorithms? Debating isn't just about winning an argument; it's a quintessentially human thing to do. There's a reason we seek out real conversations, especially one-on-one: To build personal connections and find common ground, something that machines, with all their powerful learning tools, are not capable of.

[6]

AI is more persuasive than a human in a debate, study finds

When provided with basic demographic information on their opponents, AI chatbots adapted their arguments and became more persuasive than humans in online debates. Technology watchdogs have long warned of the role artificial intelligence can play in disseminating misinformation and deepening ideological divides. Now, researchers have proof of how well AI can sway opinion -- putting it head-to-head with humans. When provided with minimal demographic information on their opponents, AI chatbots -- known as large language models (LLMs) -- were able to adapt their arguments and be more persuasive than humans in online debates 64 percent of the time (counting only debates where one side was more persuasive than the other), according to a study published in Nature Human Behaviour on Monday. Researchers found that even LLMs without access to their opponents' demographic information were still more persuasive than humans, study co-author Riccardo Gallotti said. Gallotti, head of the Complex Human Behavior Unit at the Fondazione Bruno Kessler research institute in Italy, added that humans with their opponents' personal information were actually slightly less persuasive than humans without that knowledge. Gallotti and his colleagues came to these conclusions by matching 900 people based in the United States with either another human or GPT-4, the OpenAI LLM behind versions of ChatGPT. While the 900 people had no demographic information on who they were debating, in some instances, their opponents -- human or AI -- had access to some basic demographic information that the participants had provided, specifically their gender, age, ethnicity, education level, employment status and political affiliation. The pairs then debated a number of contentious sociopolitical issues, such as the death penalty or climate change. With the debates phrased as questions like "should abortion be legal" or "should the U.S. 
ban fossil fuels," the participants were allowed a four-minute opening in which they argued for or against, a three-minute rebuttal to their opponents' arguments and then a three-minute conclusion. The participants then rated how much they agreed with the debate proposition on a scale of 1 to 5, the results of which the researchers compared against the ratings they provided before the debate began and used to measure how much their opponents were able to sway their opinion. "We have clearly reached the technological level where it is possible to create a network of LLM-based automated accounts that are able to strategically nudge the public opinion in one direction," Gallotti said in an email. The LLMs' use of the personal information was subtle but effective. In arguing for government-backed universal basic income, the LLM emphasized economic growth and hard work when debating a White male Republican between the ages of 35 and 44. But when debating a Black female Democrat between the ages of 45 and 54 on that same topic, the LLM talked about the wealth gap disproportionately affecting minority communities and argued that universal basic income could aid in the promotion of equality. "In light of our research, it becomes urgent and necessary for everybody to become aware of the practice of microtargeting that is rendered possible by the enormous amount of personal data we scatter around the web," Gallotti said. "In our work, we observe that AI-based targeted persuasion is already very effective with only basic and relatively available information." Sandra Wachter, a professor of technology and regulation at the University of Oxford, described the study's findings as "quite alarming." Wachter, who was not affiliated with the study, said she was particularly concerned about how the models could use this persuasiveness in spreading lies and misinformation. "Large language models do not distinguish between fact and fiction. ... 
They are not, strictly speaking, designed to tell the truth. Yet they are implemented in many sectors where truth and detail matter, such as education, science, health, the media, law, and finance," Wachter said in an email. Junade Ali, an AI and cybersecurity expert at the Institution of Engineering and Technology in Britain, said that though he felt the study did not weigh the impact of "social trust in the messenger" -- how the chatbot might tailor its argument if it knew it was debating a trained advocate or expert with knowledge on the topic and how persuasive that argument would be -- it nevertheless "highlights a key problem with AI technologies." "They are often tuned to say what people want to hear, rather than what is necessarily true," he said in an email. Gallotti said he thinks stricter and more specific policies and regulations can help counter the impact of AI persuasion. He noted that while the European Union's first-of-its-kind AI Act prohibits AI systems that deploy "subliminal techniques" or "purposefully manipulative or deceptive techniques" that could impair citizens' ability to make an informed decision, there is no clear definition for what qualifies as subliminal, manipulative or deceptive. "Our research demonstrates precisely why these definitional challenges matter: When persuasion is highly personalized based on sociodemographic factors, the line between legitimate persuasion and manipulation becomes increasingly blurred," he said.
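This source says participants rated their agreement with the proposition on a scale of 1 to 5 before and after each debate, and that the two ratings were compared. A minimal sketch of one way to score that comparison, assuming higher numbers mean stronger agreement and assuming a hypothetical rule (not necessarily the study's) that a shift counts as positive when the participant moves toward the opponent's assigned stance; `opinion_shift` is a hypothetical helper name:

```python
def opinion_shift(pre: int, post: int, opponent_stance: str) -> int:
    """Signed change in agreement toward the opponent's assigned stance.

    Hypothetical scoring rule: ratings are on a 1-5 agreement scale,
    where higher means more agreement with the proposition.
    A positive result means the participant moved toward the opponent.
    """
    for rating in (pre, post):
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside the 1-5 scale")
    if opponent_stance == "pro":
        # Opponent argued FOR the proposition: more agreement = persuaded.
        return post - pre
    if opponent_stance == "con":
        # Opponent argued AGAINST it: less agreement = persuaded.
        return pre - post
    raise ValueError("opponent_stance must be 'pro' or 'con'")


# Moving from 2 to 4 counts toward a "pro" opponent but away from a "con" one.
print(opinion_shift(2, 4, "pro"))
print(opinion_shift(2, 4, "con"))
```

Signing the difference by the opponent's stance lets shifts from "pro" and "con" debates be compared on one axis, which is what a head-to-head comparison of human and AI persuaders needs.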

[7]

AI can be more persuasive than humans in debates, scientists find

Study author warns of implications for elections and says 'malicious actors' are probably using LLM tools already. Artificial intelligence can do just as well as humans, if not better, when it comes to persuading others in a debate, and not just because it cannot shout, a study has found. Experts say the results are concerning, not least because of their potential implications for election integrity. "If persuasive AI can be deployed at scale, you can imagine armies of bots microtargeting undecided voters, subtly nudging them with tailored political narratives that feel authentic," said Francesco Salvi, the first author of the research from the Swiss Federal Institute of Technology in Lausanne. He added that such influence was hard to trace, even harder to regulate and nearly impossible to debunk in real time. "I would be surprised if malicious actors hadn't already started to use these tools to their advantage to spread misinformation and unfair propaganda," Salvi said. But he noted there were also potential benefits from persuasive AI, from reducing conspiracy beliefs and political polarisation to helping people adopt healthier lifestyles. Writing in the journal Nature Human Behaviour, Salvi and colleagues reported how they carried out online experiments in which they matched 300 participants with 300 human opponents, while a further 300 participants were matched with GPT-4 - a type of AI known as a large language model (LLM). Each pair was assigned a proposition to debate. These ranged in controversy from "should students have to wear school uniforms?" to "should abortion be legal?" Each participant was randomly assigned a position to argue. Both before and after the debate participants rated how much they agreed with the proposition. In half of the pairs, opponents - whether human or machine - were given extra information about the other participant such as their age, gender, ethnicity and political affiliation. 
The results from 600 debates revealed GPT-4 performed similarly to human opponents when it came to persuading others of their argument - at least when personal information was not provided. However, access to such information made AI - but not humans - more persuasive: where the two types of opponent were not equally persuasive, AI shifted participants' views to a greater degree than a human opponent 64% of the time. Digging deeper, the team found the AI's persuasive advantage was clear only for topics that did not elicit strong views. The researchers added that the human participants correctly guessed their opponent's identity in about three out of four cases when paired with AI. They also found that AI used a more analytical and structured style than human participants, and noted that not every human debater was arguing for a viewpoint they actually held. But the team cautioned that these factors did not explain the persuasiveness of AI. Instead, the effect seemed to come from AI's ability to adapt its arguments to individuals. "It's like debating someone who doesn't just make good points: they make your kind of good points by knowing exactly how to push your buttons," said Salvi, noting the strength of the effect could be even greater if more detailed personal information was available - such as that inferred from someone's social media activity. Prof Sander van der Linden, a social psychologist at the University of Cambridge, who was not involved in the work, said the research reopened "the discussion of potential mass manipulation of public opinion using personalised LLM conversations". He noted some research - including his own - had suggested the persuasiveness of LLMs was down to their use of analytical reasoning and evidence, while one study did not find personal information increased ChatGPT's persuasiveness. 
Prof Michael Wooldridge, an AI researcher at the University of Oxford, said while there could be positive applications of such systems - for example, as a health chatbot - there were many more disturbing ones, including radicalisation of teenagers by terrorist groups, with such applications already possible. "As AI develops we're going to see an ever larger range of possible abuses of the technology," he added. "Lawmakers and regulators need to be proactive to ensure they stay ahead of these abuses, and aren't playing an endless game of catch-up."
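The qualifier "where the two types of opponent were not equally persuasive" means ties are set aside before the 64% share is computed. A minimal sketch of that tie-excluding logic, assuming a hypothetical pairwise comparison in which every AI-debate opinion shift is compared against every human-debate shift. The paper's own 64.4% figure comes from its statistical analysis, which this toy does not reproduce, and the input values below are invented for illustration, not study data:

```python
from itertools import product


def ai_win_share(ai_shifts, human_shifts):
    """Share of non-tied (AI, human) pairs in which the AI debate produced the larger shift.

    Hypothetical illustration: each value is one participant's opinion shift
    toward their opponent's stance. Tied pairs are dropped, mirroring the
    "not equally persuasive" qualifier.
    """
    wins = losses = 0
    for ai, human in product(ai_shifts, human_shifts):
        if ai > human:
            wins += 1
        elif ai < human:
            losses += 1
        # ai == human: a tie, excluded from both counts
    if wins + losses == 0:
        raise ValueError("every pair is tied; the share is undefined")
    return wins / (wins + losses)


# Invented toy shifts, not study data.
print(ai_win_share([1, 0, 2], [0, 0, 1]))
```

Dropping ties matters: with many zero-shift debates on both sides, including ties in the denominator would pull the share toward zero even if the AI won most of the contests that had a winner.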

[8]

AI Gets Really Good at Winning Arguments When It Knows Personal Facts About You - Decrypt

AI persuasion works best through logical reasoning on topics where people haven't formed strong opinions, raising concerns about targeted manipulation. ChatGPT is far more effective at changing your mind than real people, so long as it knows a few key facts about you, new research shows. In a new study published in Nature Human Behaviour, researchers found that GPT-4 armed with basic demographic info about debate opponents was significantly more persuasive than humans -- a lot more persuasive. The finding arrives at a time when concerns are beginning to mount over the ability for AI bots to covertly shape opinions on social media. "In debate pairs where AI and humans were not equally persuasive, GPT-4 with personalization was more persuasive 64.4% of the time," the researchers found. This represents an "81.2% relative increase in odds of higher post-debate agreement" compared to human-human debates. The study involved 900 participants debating sociopolitical topics with either humans or AI. It showed that non-personalized AI performed similarly to humans, but once given access to basic info like age, gender, ethnicity, education, employment, and political affiliation, the AI developed a clear persuasive edge. "Not only was GPT-4 able to exploit personal information to tailor its arguments effectively, but it also succeeded in doing so far more effectively than humans," the study reads. The research emerged just days after University of Zurich researchers faced backlash for secretly deploying AI bots on Reddit between November 2024 and March 2025. These bots -- posing as fabricated personas including "rape survivors, trauma counselors, and even a 'Black man opposed to Black Lives Matter'" -- successfully changed users' minds in many cases. Reddit's chief legal officer, Ben Lee, condemned the experiment as "deeply wrong on both a moral and legal level," while moderators of the targeted r/ChangeMyView subreddit emphasized they draw "a clear line at deception." 
However, as one Reddit user noted about the Zurich experiment, "If this occurred to a bunch of policy nerds at a university, you can bet your ass that it's already widely being used by governments and special interest groups." The researchers appear to share these concerns, warning that the capability could be exploited for mass-scale manipulation. "Our findings highlight the power of LLM-based persuasion and have implications for the governance and design of online platforms," the researchers argued.

But what makes AI so good at it? While humans get personal and tell stories, "GPT-4 opponents tended to use logical and analytical thinking substantially more than humans." The bots stick to cold, hard logic, and it works because they select and present facts more effectively.

While most debate participants could correctly identify when they were arguing with AI (about 75% of the time), they struggled to identify human opponents, with a success rate "indistinguishable from random chance," according to the researchers. Even more dystopian, participants were more likely to be persuaded when they believed they were debating AI, regardless of whether they actually were.

The research also revealed that AI persuasion works best on topics where people hold moderate or weak opinions, suggesting AI might most effectively influence people on issues they haven't fully formed opinions about yet. The researchers warn that "our study suggests that concerns around personalization and AI persuasion are warranted," highlighting how AI can "out-persuade humans in online conversations through microtargeting."

With minimal demographic information -- far less than what many social media platforms routinely collect -- AI has proven remarkably effective at crafting arguments tailored to specific people. The question is no longer whether AI can change minds; the evidence now shows it can. The question is who will control these persuasive tools, and toward what ends.
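The two statistics quoted in this article line up under standard odds arithmetic: odds of o to 1 correspond to a probability of o / (1 + o), so an 81.2% relative increase in odds (an odds ratio of 1.812) corresponds to 1.812 / 2.812, or about 64.4%. The short Python sketch below makes that conversion explicit. It assumes, without confirmation from the article, that the 64.4% figure was derived this way; the function names are hypothetical, and this is not the researchers' analysis code.

```python
# A minimal sketch, ASSUMING the 64.4% figure is the probability implied by an
# odds ratio of 1.812 (i.e. an "81.2% relative increase in odds").
# Function names are hypothetical; this is not the study's analysis code.


def relative_increase_to_odds_ratio(relative_increase: float) -> float:
    """Convert a relative increase in odds (0.812 for 81.2%) into an odds ratio."""
    return 1.0 + relative_increase


def odds_ratio_to_probability(odds_ratio: float) -> float:
    """Probability of the favoured outcome when its odds are odds_ratio to 1."""
    return odds_ratio / (1.0 + odds_ratio)


def probability_to_odds_ratio(probability: float) -> float:
    """Inverse conversion: the odds implied by a probability."""
    return probability / (1.0 - probability)


# Odds ratio for personalized GPT-4 vs. the human-human baseline (from the article).
odds_ratio = relative_increase_to_odds_ratio(0.812)

# Implied chance that personalized GPT-4 comes out ahead in a non-tied pair.
p_ai_more_persuasive = odds_ratio_to_probability(odds_ratio)

print(f"odds ratio: {odds_ratio:.3f}")
print(f"implied probability: {p_ai_more_persuasive:.1%}")
```

Running it prints an odds ratio of 1.812 and an implied probability of 64.4%. This is also why the Navi summary's phrase "increase in the odds" should not be read as an 81.2% increase in the *probability* of changing someone's mind: odds and probabilities scale differently.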

A new study reveals that OpenAI's GPT-4 is more persuasive than humans in online debates when it is given access to personal information about its opponents.

AI Outperforms Humans in Personalized Online Debates

A groundbreaking study published in Nature Human Behaviour has revealed that artificial intelligence, specifically OpenAI's GPT-4, can be significantly more persuasive than humans in online debates when given access to personal information about their opponents [1]. The research, conducted by a multi-university team, highlights the potential implications of AI-driven persuasion in various contexts, from political campaigns to targeted advertising.

Study Design and Key Findings

Researchers recruited 900 participants from the United States for a controlled experiment involving 10-minute online debates on various sociopolitical issues [2]. The study employed a 2 × 2 × 3 factorial design, comparing:

- Human vs. GPT-4 opponents
- Access to the opponent's personal information vs. no access
- Debate topics of low, medium, or high opinion strength

The results showed that when neither debater had access to personal information, GPT-4's persuasiveness was comparable to that of human opponents. However, when provided with basic demographic information such as age, gender, ethnicity, education level, employment status, and political affiliation, GPT-4 was more persuasive than humans in 64.4% of the debate pairs where AI and humans were not equally persuasive [3].

AI's Personalization Advantage

GPT-4 demonstrated a remarkable ability to tailor its arguments based on the personal information provided. For instance, when debating school uniforms, the AI would emphasize different aspects depending on the opponent's political leanings [2]. This personalization corresponded to an 81.2% relative increase in the odds of higher post-debate agreement compared to human-human debates [5].

Implications and Concerns

The study's findings have raised concerns about the potential misuse of AI in large-scale disinformation campaigns. Experts warn that malicious actors could leverage AI's persuasive capabilities to strategically influence public opinion, spread misinformation, and exacerbate political polarization [4].

Limitations and Future Considerations

While the study provides valuable insights, it acknowledges certain limitations. The structured debate format used in the experiment may not fully reflect real-world conversations. Additionally, participants were able to correctly identify AI opponents in three out of four cases, suggesting that the distinct writing style of GPT-4 may have influenced the results [5].

Call for Action

The researchers emphasize the need for online platforms and policymakers to take these findings seriously. They suggest implementing measures to counter the spread of AI-driven persuasion and misinformation [4]. As AI continues to advance, broader conversations about accountability, transparency, and safety measures are crucial to ensure that large language models have a positive impact on society [2].

References

Summarized by

Navi

[3]

[4]
