AI-Induced Indifference: How Unfair AI Decisions May Desensitize Us to Human Misconduct

2 Sources

[1]

Unfair decisions by AI could make us indifferent to bad behaviour by humans

Bocconi University provides funding as a member of The Conversation UK.

Artificial intelligence (AI) makes important decisions that affect our everyday lives. These decisions are implemented by firms and institutions in the name of efficiency. They can help determine who gets into college, who lands a job, who receives medical treatment and who qualifies for government assistance.

As AI takes on these roles, there is a growing risk of unfair decisions - or the perception of them by those people affected. For example, in college admissions or hiring, these automated decisions can unintentionally favour certain groups of people or those with certain backgrounds, while equally qualified but underrepresented applicants get overlooked. Or, when used by governments in benefit systems, AI may allocate resources in ways that worsen social inequality, leaving some people with less than they deserve and a sense of unfair treatment.

Together with an international team of researchers, we examined how unfair resource distribution - whether handled by AI or a human - influences people's willingness to act against unfairness. The results have been published in the journal Cognition.

With AI becoming more embedded in daily life, governments are stepping in to protect citizens from biased or opaque AI systems. Examples of these efforts include the White House's AI Bill of Rights, and the European Parliament's AI Act. These reflect a shared concern: people may feel wronged by AI's decisions. So how does experiencing unfairness from an AI system affect how people treat one another afterwards?

AI-induced indifference

Our paper in Cognition looked at people's willingness to act against unfairness after experiencing unfair treatment by an AI. The behaviour we examined applied to subsequent, unrelated interactions by these individuals. A willingness to act in such situations, often called "prosocial punishment," is seen as crucial for upholding social norms. For example, whistleblowers may report unethical practices despite the risks, or consumers may boycott companies that they believe are acting in harmful ways. People who engage in these acts of prosocial punishment often do so to address injustices that affect others, which helps reinforce community standards.

We asked this question: could experiencing unfairness from AI, instead of a person, affect people's willingness to stand up to human wrongdoers later on? For instance, if an AI unfairly assigns a shift or denies a benefit, does it make people less likely to report unethical behaviour by a co-worker afterwards?

Across a series of experiments, we found that people treated unfairly by an AI were less likely to punish human wrongdoers afterwards than participants who had been treated unfairly by a human. They showed a kind of desensitisation to others' bad behaviour. We called this effect AI-induced indifference, to capture the idea that unfair treatment by AI can weaken people's sense of accountability to others. This makes them less likely to address injustices in their community.

Reasons for inaction

This may be because people place less blame on AI for unfair treatment, and thus they feel less driven to act against injustice. This effect was consistent whether participants encountered only unfair behaviour by others or both fair and unfair behaviour.

To look at whether the relationship we had uncovered was affected by familiarity with AI, we carried out the same experiments again, after the release of ChatGPT in 2022. We got the same results with the later series of tests as we had with the earlier ones.

These results suggest that people's responses to unfairness depend not only on whether they were treated fairly but also on who treated them unfairly - an AI or a human. In short, unfair treatment by an AI system can affect how people respond to each other, making them less attentive to each other's unfair actions.

This highlights AI's potential ripple effects in human society, extending beyond an individual's experience of a single unfair decision. When AI systems act unfairly, the consequences extend to future interactions, influencing how people treat each other, even in situations unrelated to AI.

We would suggest that developers of AI systems should focus on minimising biases in AI training data to prevent these important spillover effects. Policymakers should also establish standards for transparency, requiring companies to disclose where AI might make unfair decisions. This would help users understand the limitations of AI systems, and how to challenge unfair outcomes. Increased awareness of these effects could also encourage people to stay alert to unfairness, especially after interacting with AI.

Feelings of outrage and blame for unfair treatment are essential for spotting injustice and holding wrongdoers accountable. By addressing AI's unintended social effects, leaders can ensure AI supports rather than undermines the ethical and social standards needed for a society built on justice.

[2]

Unfair decisions by AI could make us indifferent to bad behavior by humans


A study reveals that experiencing unfair treatment from AI systems can make people less likely to act against human wrongdoing, potentially undermining social norms and accountability.

AI Fairness and Its Impact on Human Behavior

A study published in the journal Cognition has identified a concerning phenomenon dubbed "AI-induced indifference." This research, conducted by an international team, explores how unfair decisions made by artificial intelligence (AI) systems can influence human behavior in subsequent social interactions [1][2].

The Growing Influence of AI in Decision-Making

AI systems are increasingly being employed to make critical decisions in various aspects of our lives, including college admissions, job applications, medical treatment allocation, and government assistance eligibility. While these systems aim to improve efficiency, they also raise concerns about potential unfairness or bias in their decision-making processes [1][2].

The Concept of AI-Induced Indifference

The study's key finding is that individuals who experience unfair treatment from an AI system are less likely to engage in "prosocial punishment" of human wrongdoers in subsequent, unrelated interactions than individuals treated unfairly by a human. Prosocial punishment, which is seen as crucial for upholding social norms, involves actions such as whistleblowing or boycotting companies perceived as harmful [1][2].

Experimental Findings

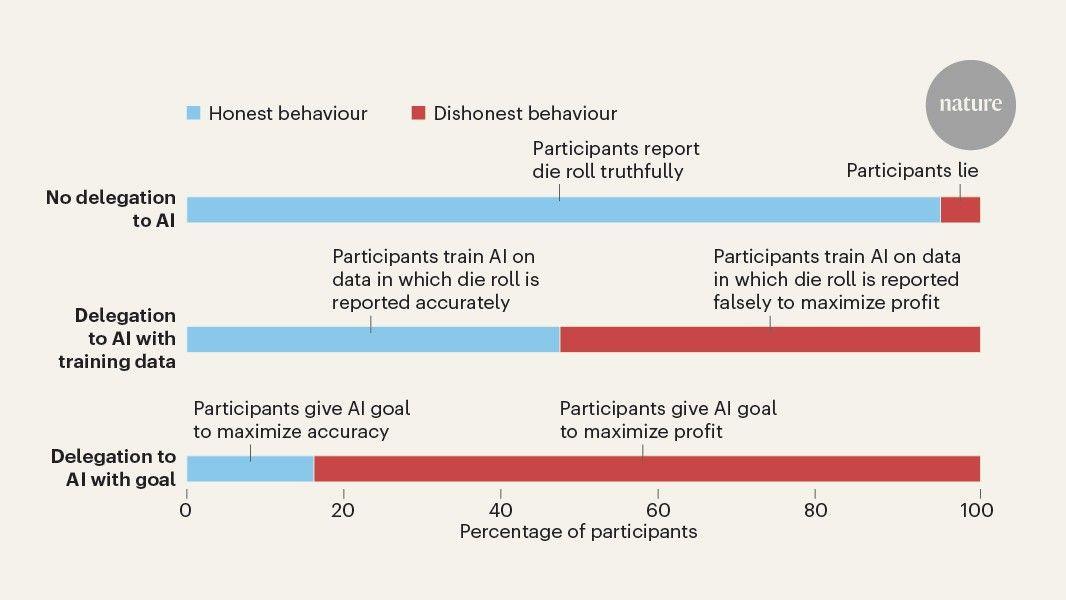

Across a series of experiments, researchers observed that:

- Participants treated unfairly by AI were less likely to punish human wrongdoers compared to those treated unfairly by humans.

- This effect persisted even when participants encountered only unfair behavior or a mix of fair and unfair behavior.

- The phenomenon remained consistent in experiments conducted before and after the release of ChatGPT, suggesting that increased familiarity with AI did not alter the results [1][2].

Implications and Concerns

The study highlights potential ripple effects of AI systems on human society:

- Unfair AI decisions may weaken people's sense of accountability to others.

- This desensitization could lead to a reduced likelihood of addressing injustices in communities.

- The consequences of unfair AI treatment may extend to future human interactions, even in situations unrelated to AI [1][2].

Recommendations for Mitigating AI-Induced Indifference

To address these concerns, the researchers suggest:

- AI developers should focus on minimizing biases in training data to prevent spillover effects.

- Policymakers should establish transparency standards, requiring companies to disclose potential areas of unfair AI decision-making.

- Increased awareness of these effects could encourage people to remain vigilant against unfairness, especially after interacting with AI systems [1][2].

The Importance of Addressing AI's Unintended Social Effects

The study emphasizes that feelings of outrage and blame for unfair treatment are essential for identifying injustice and holding wrongdoers accountable. By addressing the unintended social effects of AI, leaders can ensure that AI systems support rather than undermine the ethical and social standards necessary for a just society [1][2].

Summarized by Navi
