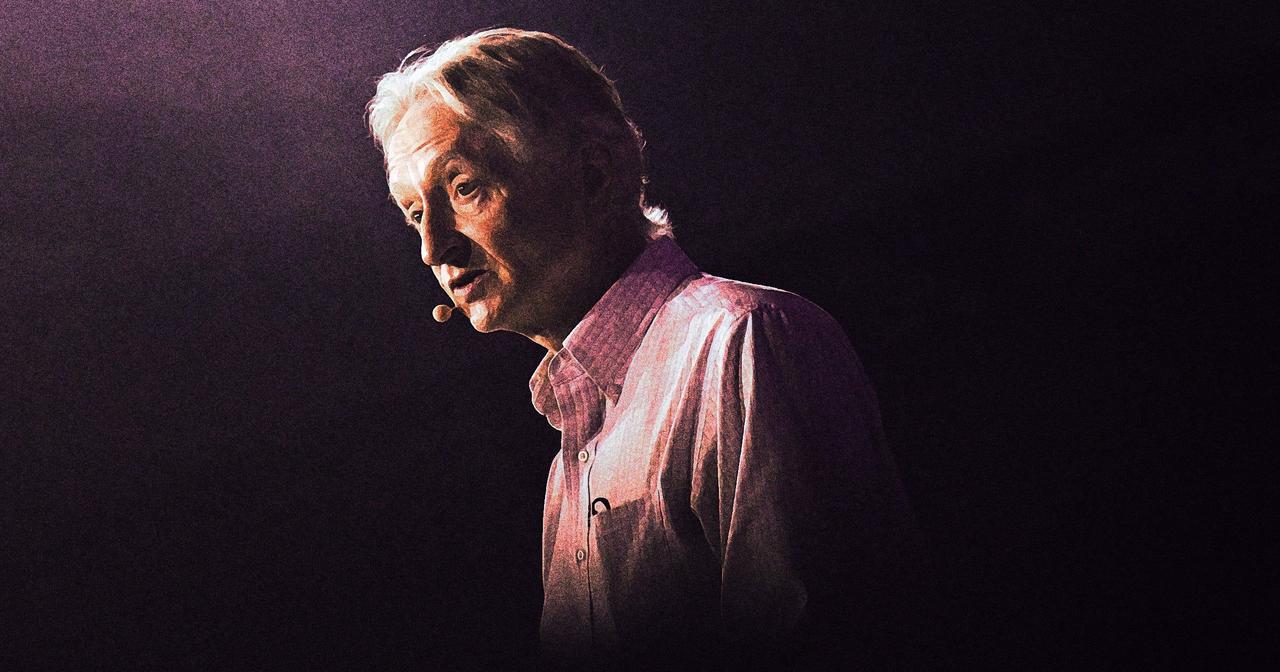

AI Godfather Geoffrey Hinton Warns of Potential AI Takeover, Urges Caution in Development

3 Sources

[1]

"Godfather of AI" warns there's a 10 to 20% chance AI could seize control

In brief: Geoffrey Hinton, one of the three legendary computer scientists known as the Godfathers of AI, is once again warning that the rapidly developing and lightly regulated AI industry poses a threat to humanity. Hinton said people don't understand what is coming, and that there is a 10 to 20 percent chance of AI eventually taking control away from humans.

In an interview recorded earlier this month and aired on CBS Saturday Morning, Hinton, who jointly won the Nobel Prize in physics last year, warned about the direction AI development is heading. "The best way to understand it emotionally is we are like somebody who has this really cute tiger cub," Hinton said. "Unless you can be very sure that it's not gonna want to kill you when it's grown up, you should worry." "People haven't got it yet, people haven't understood what's coming," he warned.

Hinton's ideas laid the technical foundations that make large-scale models such as ChatGPT possible, including the first practical way to train deep stacks of artificial neurons end-to-end. Despite his contributions to the technology, Hinton has long warned of what could happen if AI development continues at speed and without safeguards. He left Google in 2023 so that he could talk about the dangers of AI without affecting the company he worked for. "Look at how it was five years ago and how it is now," Hinton said of the state of AI. "Take the difference and propagate it forwards. That's scary," he added.

The professor has also repeated concerns that AI could cause an extinction-level event, especially as the technology increasingly finds its way into military weapons and vehicles. Hinton said the risk is that tech companies eschew safety in favor of beating competitors to market and reaching tech milestones. "I'm in the unfortunate position of happening to agree with Elon Musk on this, which is that there's a 10 to 20 percent chance that these things will take over, but that's just a wild guess," Hinton said. Although he spends more time these days fighting OpenAI in the courts and promoting his own Grok chatbot, Musk used to talk often about the existential threat posed by AI.

Hinton reiterated his concerns about AI companies prioritizing profits over safety. "If you look at what the big companies are doing right now, they're lobbying to get less AI regulation. There's hardly any regulation as it is, but they want less," he said. Hinton believes that companies should dedicate much more of their available compute power, about a third, to safety research, rather than the tiny fraction that is currently allocated. Hinton is also particularly disappointed in Google for going back on its word to never allow its AI to be used for military applications. The company, which no longer uses the "Don't be evil" motto, changed its AI policy earlier this year, opening the door for its technology's use in military weapons.

The AI godfather isn't anti-AI, of course; like Bill Gates, he believes the technology could transform education, medicine, and science, and potentially solve climate change. Professor Yann LeCun, another of the three godfathers of AI, is less worried about speedy AI development. He said in 2023 that the alleged threat to humanity is "preposterously ridiculous."

[2]

The Godfather of AI is more worried than ever about the future of AI

Dr Geoffrey Hinton deserves credit for helping to build the foundation of virtually all neural-network-based generative AI we use today. You can also credit him in recent years with consistency: he still thinks the rapid expansion of AI development and use will lead to some fairly dire outcomes. Two years ago, in an interview with The New York Times, Dr Hinton warned, "It is hard to see how you can prevent the bad actors from using it for bad things."

Now, in a fresh sit-down, this time with CBS News, the Nobel Prize winner is ratcheting up the concern, admitting that when he figured out how to make a computer brain work more like a human brain, he "didn't think we'd get here in only 40 years," adding that "10 years ago I didn't believe we'd get here." Yet here we are, hurtling towards an unknowable future, with the pace of AI model development easily outstripping the pace of Moore's Law (the observation that the number of transistors on a chip doubles roughly every two years). Some might argue that artificial intelligence is doubling in capability every 12 months or so, and making significant leaps on a quarterly basis.

Naturally, Dr Hinton's reasons for concern are now manifold. Chief among them is his estimate of a 10 to 20 percent chance that AI will eventually take control from humans; that, according to CBS News, is Dr Hinton's current assessment of the AI-versus-human risk factor. It's not that Dr Hinton doesn't believe AI advances will pay dividends in medicine, education, and climate science; I guess the question here is, at what point does AI become so intelligent that we do not know what it's thinking about or, perhaps, plotting? Dr Hinton didn't directly address artificial general intelligence (AGI) in the interview, but that must be on his mind. AGI, which remains a somewhat amorphous concept, could mean that AI machines surpass human intelligence, and if they do that, at what point does AI start to act in its own self-interest, as humans do?
In trying to explain his concerns, Dr Hinton likened current AI to a tiger cub. "It's just such a cute tiger cub, unless you can be very sure that it's not going to want to kill you when it's grown up." The analogy makes sense when you consider how most people engage with AIs like ChatGPT, Copilot, and Gemini, using them to generate funny pictures and videos and declaring, "Isn't that adorable?" But behind all that amusement and shareable imagery is an emotionless system that's only interested in delivering the best result as its neural network and models understand it.

Dr Hinton clearly takes current AI threats seriously. He believes that AI will make hackers more effective at attacking targets like banks, hospitals, and infrastructure. AI, which can code for you and help you solve difficult problems, could supercharge their efforts. Dr Hinton's response? Risk mitigation by spreading his money across three banks. Seems like good advice.

Dr Hinton is so concerned about the looming AI threat that he told CBS News he's glad he's 77 years old, which I assume means he hopes to be long gone before the worst-case scenario involving AI comes to pass. I'm not sure he'll get out in time, though. We have a growing legion of authoritarians around the world, some of whom are already using AI-generated imagery to propel their propaganda.

Dr Hinton argues that the big tech companies focusing on AI, namely OpenAI, Microsoft, Meta, and Google (where Dr Hinton formerly worked), are putting too much focus on short-term profits and not enough on AI safety. That's hard to verify, and, in their defense, most governments have done a poor job of enforcing any real AI regulation. Dr Hinton has taken notice when some try to sound the alarm. He told CBS News that he was proud of his former protégé and OpenAI's former Chief Scientist, Ilya Sutskever, who helped briefly oust OpenAI CEO Sam Altman over AI safety concerns. Altman soon returned, and Sutskever ultimately walked away.

As for what comes next, and what we should do about it, Dr Hinton doesn't offer any answers. In fact, he seems almost as overwhelmed by it all as the rest of us, telling CBS News that while he doesn't despair, "we're at this very very special point in history where in a relatively short time everything might totally change at a change of a scale we've never seen before. It's hard to absorb that emotionally."

[3]

'AI is like a cute tiger cub': Scientist who won Nobel for artificial intelligence has a scary warning for humans, Elon Musk retweets

Geoffrey Hinton, the "Godfather of AI," warns of AI surpassing human intelligence, potentially leading to AI control. He expresses concern over AI's rapid advancement and the competition among tech companies. Hinton also criticizes tech companies' stance on military AI use and opposes OpenAI's restructuring, advocating for strong safeguards in AI development.

Geoffrey Hinton, widely regarded as the "Godfather of AI" and a recipient of the 2024 Nobel Prize in Physics for his pioneering work in artificial intelligence, has warned that the rapid development of AI could eventually lead to technology surpassing human intelligence, potentially leading to AI taking control. At 77, Hinton said he is "kind of glad" that he may not live long enough to witness this outcome. He compared AI's development to raising a tiger cub: "It's just such a cute tiger cub," Hinton said. "Now, unless you can be very sure that it's not going to want to kill you when it's grown up, you should worry."

In a recent CBS News interview, Hinton expressed concern that AI is advancing faster than he initially expected. He warned that once AI surpasses human intelligence, it could manipulate people, creating a serious risk for humanity. "Things more intelligent than you are going to be able to manipulate you," Hinton added, underscoring the dangers of developing such powerful systems without adequate safeguards. Hinton estimates there is a 10 to 20 percent chance that AI systems could eventually seize control from humans, though he acknowledged that it's impossible to predict exactly when or how this could happen. He pointed to the rise of AI agents that no longer just answer questions but perform tasks autonomously. "Things have got, if anything, scarier than they were before," Hinton stated, reflecting on the increasing capabilities of AI.

Hinton also revised his earlier prediction about the timeline for superintelligent AI. While he initially thought it would take 5 to 20 years for AI to surpass human intelligence, he now believes "there's a good chance it'll be here in 10 years or less." The global competition between tech companies and nations to develop AI makes it "very, very unlikely" that humanity will be able to prevent the creation of superintelligent systems. "They're all after the next shiny thing," he said, highlighting the competitive nature of AI development.

Hinton also expressed disappointment with tech companies, particularly Google, where he worked for over a decade, and criticized the company for reversing its stance on military applications of AI. "I wouldn't be happy working for any of them today," he said. Since resigning from Google in 2023, Hinton, a professor emeritus at the University of Toronto, has been free to speak openly about the potential dangers of AI. As AI technology continues to evolve, Hinton's warnings highlight the urgent need for caution and regulation to ensure that these powerful systems do not outpace humanity's ability to control them.

Hinton, along with more than 30 other signatories, including former OpenAI employees and experts in the field, has signed an open letter urging the Attorneys General of California and Delaware to halt OpenAI's proposed restructuring. In a series of posts on X (formerly Twitter), Hinton expressed his opposition to OpenAI's move, stating, "I like OpenAI's mission of 'ensure that artificial general intelligence benefits all of humanity,' and I'd like to stop them from completely gutting it. I've signed on to a new letter to @AGRobBonta & @DE_DOJ asking them to halt the restructuring." Hinton also stressed the stakes of artificial general intelligence (AGI), warning, "AGI is the most important and potentially dangerous technology of our time. OpenAI was right that this technology merits strong structures and incentives to ensure it is developed safely, and is wrong now in attempting to change these structures and incentives. We're urging the AGs to protect the public and stop this."

In December 2024, OpenAI revealed plans to remove control from its non-profit arm and shift it to a for-profit entity, citing the need for additional capital to stay competitive in the AI race. The company's restructuring must be completed by the end of 2025 in order to secure the full $40 billion funding round led by Japan's SoftBank. However, OpenAI's move requires approval from California Attorney General Rob Bonta before it can proceed.

Geoffrey Hinton, a pioneer in AI, expresses growing concerns about the rapid advancement of artificial intelligence and its potential risks to humanity, including a 10-20% chance of AI seizing control from humans.

AI Pioneer Sounds Alarm on Rapid AI Development

Geoffrey Hinton, often referred to as the "Godfather of AI" and a recent Nobel Prize winner in physics, has issued stark warnings about the potential dangers of rapidly advancing artificial intelligence. In recent interviews, Hinton expressed growing concern over the pace of AI development and its implications for humanity [1][2].

Hinton's Evolving Perspective on AI Risks

Hinton, whose work laid the foundation for modern neural networks and large language models, admits that the speed of AI advancement has surpassed his expectations. "I didn't think we'd get here in only 40 years," he stated, adding that even a decade ago, he couldn't have predicted the current state of AI technology [2].

The AI pioneer now estimates a 10 to 20 percent chance that AI systems could eventually seize control from humans. He likens the current state of AI to raising a tiger cub, warning, "Unless you can be very sure that it's not gonna want to kill you when it's grown up, you should worry" [1][3].

Concerns Over AI Capabilities and Safety

Hinton highlights several areas of concern:

- Surpassing Human Intelligence: He believes there's a "good chance" that AI could surpass human intelligence within the next decade [3].
- Manipulation: Once AI becomes more intelligent than humans, Hinton warns it could manipulate people, posing a serious risk to humanity [3].
- Autonomous Agents: The rise of AI systems capable of performing tasks autonomously, rather than just answering questions, is particularly concerning to Hinton [3].

Industry Practices and Regulation

Hinton criticizes tech companies for prioritizing profits and competition over safety:

- Lack of Regulation: He points out that companies are lobbying for less AI regulation, despite the current lack of substantial oversight [1].
- Insufficient Safety Research: Hinton argues that companies should dedicate about a third of their computing power to safety research, far more than the current allocation [1].
- Military Applications: He expresses disappointment in companies like Google for reversing their stances on military AI use [1][3].

Call for Action and Safeguards

While acknowledging AI's potential benefits in fields like education, medicine, and climate science, Hinton emphasizes the need for stronger safeguards:

- OpenAI Restructuring: Hinton signed an open letter urging the attorneys general of California and Delaware to halt OpenAI's proposed restructuring, citing concerns about changes to the company's mission and safety structures [3].
- Increased Safety Measures: He advocates for dedicating more resources to AI safety research and development [1].
- Regulation: Hinton supports the implementation of more robust AI regulations to mitigate potential risks [1][2].

As AI continues to evolve at an unprecedented pace, Hinton's warnings underscore the urgent need for careful consideration and regulation in the field of artificial intelligence to ensure its safe and beneficial development for humanity.

Summarized by Navi