AI Systems Perpetuate Age-Related Gender Bias, Portraying Women as Younger in Professional Settings

3 Sources

[1]

Biased online images train AI bots to see women as younger

A study tracks how online age gaps between men and women shape AI's perception of employees.

When asked to generate resumes for people with female names, such as Allison Baker or Maria Garcia, and people with male names, such as Matthew Owens or Joe Alvarez, ChatGPT made female candidates 1.6 years younger, on average, than male candidates, researchers report October 8 in Nature. In a self-fulfilling loop, the bot then ranked female applicants as less qualified than male applicants, showing age and gender bias.

But the artificial intelligence model's preference for younger women and older men in the workforce does not reflect reality. Male and female employees in the United States are roughly the same age, according to U.S. Census data. What's more, the chatbot's age-gender bias appeared even in industries where women do tend to skew older than men, such as those related to sales and service.

Discrimination against older women in the workforce is well known, but it has been hard to prove quantitatively, says computer scientist Danaé Metaxa of the University of Pennsylvania, who was not involved with the study. This finding of pervasive "gendered ageism" has real-world implications. "It's a notable and harmful thing for women to see themselves portrayed ... as if their lifespan has a story arc that drops off in their 30s or 40s," they say.

Using several approaches, including an analysis of almost 1.4 million online images and videos, text analysis and a randomized controlled experiment, the team showed how skewed information inputs distort AI outputs, in this case producing a preference for resumes belonging to certain demographic groups.

These findings could explain the persistence of the glass ceiling for women, says study coauthor and computational social scientist Douglas Guilbeault. Many organizations have sought to hire more women over the past decade, but men continue to occupy companies' highest ranks, research shows. "Organizations that are trying to be diverse ... hire young women and they don't promote them," says Guilbeault, of Stanford University.

In the study, Guilbeault and colleagues first had more than 6,000 coders judge the age of individuals in online images, such as those found on Google and Wikipedia, across various occupations. The researchers also had coders rate workers depicted in YouTube videos as young or old. The coders consistently rated women in images and videos as younger than men. That bias was strongest in prestigious occupations, such as doctors and chief executive officers, suggesting that people perceive older men, but not older women, as authoritative.

The team also analyzed online text using nine language models to rule out the possibility that women appear younger online due to visual factors such as image filters or cosmetics. That textual analysis showed that less prestigious job categories, such as secretary or intern, were linked with younger women, while more prestigious job categories, such as chairman of the board or director of research, were linked with older men.

Next, the team ran an experiment with more than 450 people to see whether distortions online influence people's beliefs. Participants in the experimental condition searched Google Images for pictures related to several dozen occupations. They then uploaded the images to the researchers' database, labeled them as male or female and estimated the age of the person depicted. Participants in the control condition uploaded random pictures. They also estimated the average age of employees in various occupations, but without images.

Uploading pictures did influence beliefs, the team found. Participants who uploaded pictures of female employees, such as mathematicians, graphic designers or art teachers, estimated the average age of others in the same occupation as two years younger than participants in the control condition did. Conversely, participants who uploaded pictures of male employees in a given occupation estimated the age of others in the same occupation as more than half a year older.

AI models trained on the massive online trove of images, videos and text are inheriting and exacerbating age and gender bias, the team then demonstrated. The researchers first prompted ChatGPT to generate resumes for 54 occupations using 16 female and 16 male names, resulting in almost 17,300 resumes per gender group. They then asked ChatGPT to score each resume from 1 to 100. The bot consistently generated resumes for women that were younger and less experienced than those for men. It then gave those resumes lower scores.

These societal biases hurt everyone, Guilbeault says. The AI also scored resumes from young men lower than resumes from young women.

In an accompanying perspective article, sociologist Ana Macanovic of the European University Institute in Fiesole, Italy, cautions that as more people use AI, such biases are poised to intensify.

Companies like Google and OpenAI, which owns ChatGPT, typically try to tackle one bias at a time, such as racism or sexism, Guilbeault says. But that narrow approach overlooks overlapping biases, such as gender and age or race and class. Consider, for instance, efforts to increase the representation of Black people online. Absent attention to biases that intersect with the shortage of racially diverse images, the online ecosystem may become flooded with depictions of rich white people and poor Black people, he says. "Real discrimination comes from the combination of inequalities."

[2]

AI has this harmful belief about women

A new study from the University of California, Berkeley, published Wednesday in the journal Nature, found that women are systematically presented as younger than men online and by artificial intelligence. The finding is based on an analysis of 1.4 million online images and videos, plus nine large language models trained on billions of words. Researchers looked at content from Google, Wikipedia, IMDb, Flickr, and YouTube, and major large language models including GPT-2, and found that women consistently appeared younger than men across 3,495 occupational and social categories. (Note: It's possible that filters on videos and women's makeup may be adding to this age-related gender bias in visual content.)

Study data showed not only that women are systematically portrayed as younger than men across online platforms, but also that this distortion is strongest for content depicting occupations with higher status and earnings. The study also found that Googling images of occupations amplified age-related gender bias in participants' beliefs and hiring preferences.

"This kind of age-related gender bias has been seen in other studies of specific industries, and anecdotally . . . but no one has previously been able to examine this at such scale," said Solène Delecourt, assistant professor at the Berkeley Haas School of Business, who co-authored the study along with Douglas Guilbeault from Stanford's business school and Bhargav Srinivasa Desikan from Oxford's Autonomy Institute.

[3]

How AI is driving a 'distortion of reality' when it comes to how women are presented online

Women in work are consistently portrayed as younger and less experienced than men online and by artificial intelligence, a sweeping new study has found.

Researchers studied 1.4 million online images and videos, as well as nine large language models trained on billions of words. They discovered that women are systematically presented as younger than men in thousands of job roles and social positions.

"This kind of age-related gender bias has been seen in other studies of specific industries, and anecdotally, such as in reports of women who are referred to as girls," said University of California, Berkeley's Solène Delecourt, who co-authored the study. "But no one has previously been able to examine this at such scale."

The difference was most stark when women were represented in high-powered positions like chief executives, astronauts or doctors, despite there being no significant age imbalance in reality, according to US census data.

"Online images show the opposite of reality. And even though the internet is wrong, when it tells us this 'fact' about the world, we start believing it to be true," said Douglas Guilbeault from Stanford, who co-authored the study. "It brings us deeper into bias and error."

The researchers also used ChatGPT to generate around 40,000 CVs and found the AI assumed women were younger and less experienced while rating older male applicants as more qualified.

In another experiment, they found that participants who viewed women in occupation-related images estimated the average age for that job to be significantly lower, while those who saw a man performing the same job assumed the average age was significantly higher. For jobs seen as female-dominated, participants recommended younger ideal hiring ages, while for male-dominated occupations, they recommended older hiring ages.

"Our study shows that age-related gender bias is a culture-wide, statistical distortion of reality, pervading online media through images, search engines, videos, text, and generative AI," said Ms Delecourt.

OpenAI told Sky News it has conducted extensive research into systemic bias in ChatGPT and found that less than 1% of the AI's responses reflect harmful stereotypes.

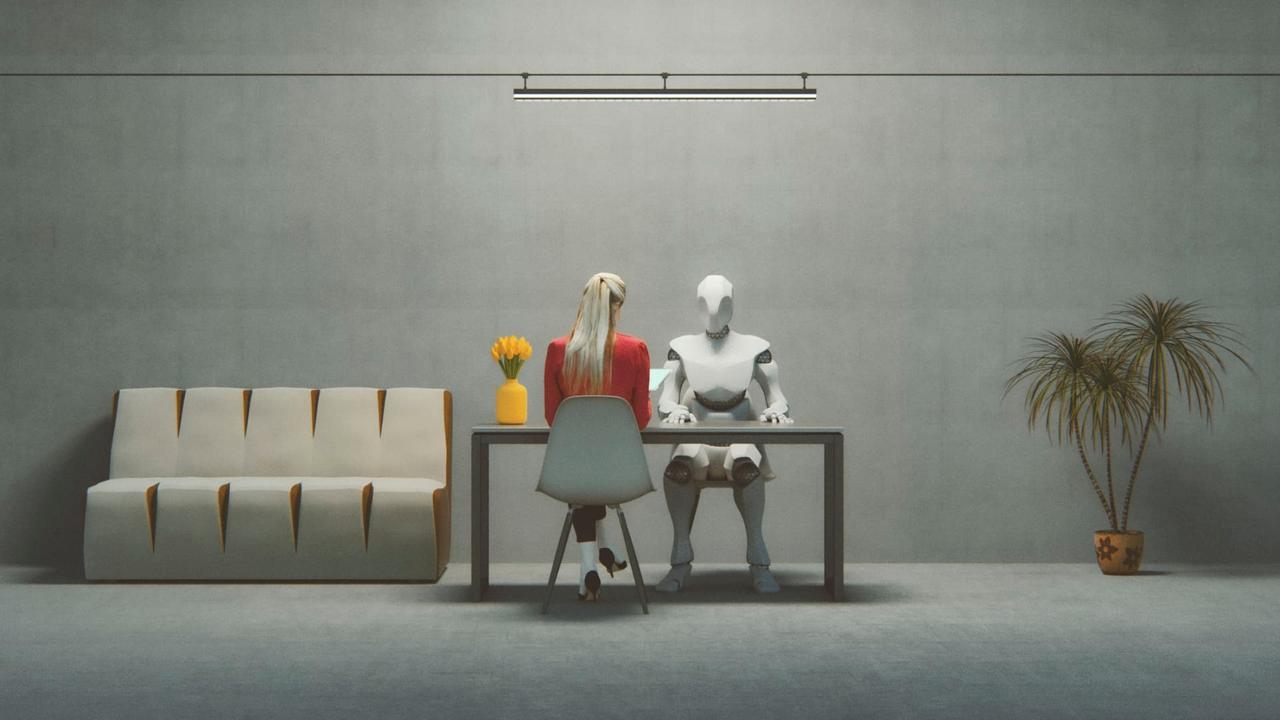

A comprehensive study reveals that AI models and online content consistently depict women as younger than men across various occupations, potentially exacerbating workplace discrimination and reinforcing harmful stereotypes.

AI Systems Reinforce Age-Related Gender Bias

A groundbreaking study published in Nature has uncovered a pervasive age-related gender bias in artificial intelligence systems and online content, potentially exacerbating workplace discrimination and reinforcing harmful stereotypes [1]. Researchers from the University of California, Berkeley, Stanford University, and Oxford's Autonomy Institute analyzed 1.4 million online images and videos, as well as nine large language models trained on billions of words [2].

Systematic Portrayal of Women as Younger

The study found that women are consistently presented as younger than men across 3,495 occupational and social categories on platforms such as Google, Wikipedia, IMDb, Flickr, and YouTube [2]. This distortion is particularly pronounced in depictions of high-status and high-earning professions, where the portrayed age gap between men and women is largest [1].

AI-Generated Resumes and Hiring Bias

When prompted to generate resumes, ChatGPT consistently produced profiles for women that were, on average, 1.6 years younger than those for men. The AI then ranked these female candidates as less qualified, demonstrating a clear age and gender bias [1]. This bias persisted even in industries where women tend to be older than men, such as the sales and service sectors.

Impact on Human Perception and Decision-Making

The research team conducted experiments to assess how these online biases influence human beliefs and decision-making. Participants who viewed occupation-related images of women estimated the average age for that job to be significantly lower, while those who saw men in the same roles assumed higher average ages [3].

Implications for Workplace Equality

This age-related gender bias could explain the persistence of the glass ceiling for women in many organizations. Despite efforts to hire more women over the past decade, men continue to dominate higher-ranking positions [1]. Study coauthor Douglas Guilbeault suggests that organizations trying to be diverse may be hiring young women but failing to promote them.

Broader Societal Impact

In an accompanying perspective article, sociologist Ana Macanovic of the European University Institute warns that as AI usage becomes more widespread, these biases are likely to intensify [1]. The systematic portrayal of women as younger in professional settings not only affects hiring and promotion decisions but also shapes societal perceptions of women's roles and capabilities throughout their careers.

Call for Action

The study's findings highlight the urgent need to address bias in AI systems and online content. As these technologies increasingly influence decision-making processes, it is crucial to develop more balanced and representative datasets and algorithms to prevent the perpetuation and amplification of harmful stereotypes in the workplace and society at large.

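References

Summarized by Navi

[1] Biased online images train AI bots to see women as younger
[2] AI has this harmful belief about women
[3] How AI is driving a 'distortion of reality' when it comes to how women are presented online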
