Google's Gemini 3 Launches with Immediate Search Integration, Signaling Strategic Shift in AI Race

10 Sources

[1]

Inside the making of Gemini 3 - how Google's slow and steady approach won the AI race (for now)

When I walked into a conference room in Google's San Francisco building last week, I expected to find the typical tech briefing setup with rows of chairs facing a wall of screens and a corporate voice managing a slide deck. Instead, I found myself in what looked more like group therapy with a large circle of cozy chairs arranged around the center of the room. About a dozen carefully selected testers and creators, including myself, sat down with the team behind Gemini 3, which had just gone public, and Nano Banana Pro, which would debut the next day.

That rapid release schedule couldn't have been more telling. The AI industry is in the midst of an unprecedented race, with OpenAI, Anthropic, Google, and others entrenched in a constant scramble to capture user attention and prove their models deliver more value than the rest. With Tulsee Doshi (senior director and head of product for Gemini Models), Logan Kilpatrick (group PM lead for Gemini API), and Nicole Brichtova (product lead for image & video) sitting across from me, I got a fascinating look at the decisions, tradeoffs, and challenges behind these high-profile launches. Here are three details that stood out during our 75-minute conversation.

The gap between Gemini 2.5 Pro's debut at Google I/O in May and Gemini 3's arrival in November felt significant, especially given the rapid pace of AI development across the industry. When the topic of timeline came up, Doshi explained that the delay came down to a two-pronged approach. On the pre-training side, the team set ambitious goals around reasoning performance and multimodality. They wanted "state-of-the-art reasoning" with real "nuance and depth." But the bigger factor was post-training work focused on usability improvements like better tool use and refining the model's persona based on extensive feedback they gathered from the 2.5 release.
The team had learned a hard lesson from their previous experimental model release strategy. "We had done this sort of experimental model release train multiple times," Doshi said, "and a lot of the feedback from the developer audience was [that] this caused a lot of churn for people." Developers were waking up every morning to find things drastically different. That required them to test new experimental Gemini models, which carried with them a "true cognitive and time cost."

This time, they took a different approach. "We spent a much longer iteration cycle of giving the model to folks, getting feedback, using that feedback to iterate on the models more, doing that round a few times," Doshi explained. The last few weeks became an intense sprint of triaging issues, identifying whether problems were in serving or the model itself, and fixing what they could.

Kilpatrick added that coordinating launches across multiple Google services created an extra layer of complexity. "It's really difficult to get all of Google on the same page, and spin up the infrastructure to support this model for hundreds of millions of customers," he said. The goal was to simultaneously ship across the Gemini app, Google Search, and AI Studio, which required far more coordination than previous launches.

The philosophy driving these decisions was clear: "We try not to be as date-driven as we try to be quality-driven," Doshi noted. The team wanted to avoid shipping an unpolished product and essentially testing and iterating in public. Instead, they opted to do it behind closed doors. Doshi went on to say, "The volume of feedback that came in was almost actually more than we even could properly manage."
As I sat there, it occurred to me that I had a sense of what might be helpful in that case, so I asked, "How much, if at all, do you use the Gemini model to analyze and understand the success of the Gemini model?" To my surprise, Doshi's response was immediate: "A lot, actually. It's been actually pretty awesome." The team uses Gemini extensively to cluster feedback and identify patterns from the massive influx of user reports.

But Doshi was careful to note an important balance. "I want a lot of our teams to build empathy, and some of that empathy goes away if you abstract too high." If Gemini fully abstracts the feedback, teams might lose touch with the actual pain points users are experiencing. So they use Gemini to help find the patterns, but keep the team reading real user feedback so they stay close to users' frustrations.

Beyond analyzing feedback, they're also using Gemini to write tools that speed up their testing process. Kilpatrick's team has taken this even further on the product side. "We're more so continuously coding using Gemini 3, which has been a huge accelerant of making the UI better," he said. Taking it one big step further, Kilpatrick added, "Gemini 4 is going to be created by Gemini 3. Maybe some of the product experiences of how you interact with Gemini 4 are being created right now by Gemini 3." Doshi was quick to add, "I don't know that I would go as far as to say Gemini built Gemini, but I think it's very close to how we take all of these various pieces and have Gemini accelerate."

One of Nano Banana Pro's most impressive improvements is something that has taken AI a long time to master. The text in AI-generated images actually looks accurate now. Nicole Brichtova walked us through examples of infographics created with remarkably simple prompts.
When looking at these examples on the big screen in the room, I found myself scrutinizing every word, searching for the obvious signs of AI-generated text: the misspellings, made-up words, and seemingly alien nonsense characters that have plagued image generation models to date. To my surprise, this incredibly complex infographic was spotless. The improvement in what Brichtova called the "cherry-pick rate" has been dramatic from the previous version of Nano Banana. "It used to be that you had to generate 10 of these and then maybe one of them actually had perfect text," she said. "And now you'll make 10 and maybe one or two of them you can't actually use."

What made the progress even more striking was how sophisticated the failures had become. Doshi mentioned looking at examples from a couple of months earlier where errors were obvious, but more recently, she questioned whether seemingly real words were real at all. "It looked legitimate, like it wasn't funny or anything -- but no, it was not a real word." The model had gotten so good that it could create convincing fake words that looked like they belonged in the English language.

One tester in the room shared their experience using Nano Banana Pro to generate an infographic from a research paper. The first attempt worked beautifully, and the first couple of iterations to refine it went well. But by the fifth round of edits, things fell apart, and the model started making up words and even dropping in fragments of other languages. Brichtova acknowledged this as a known limitation. "Multi-turn is something that we continue to get better at," she said. "After you get into turn three, you basically have to reset your conversation. The longer you have a conversation with this model, the more it can fall apart." She emphasized it's an area they're actively working on, though for single-shot generation, the quality has reached an impressive level.
After 75 minutes of candid conversation, I joined the group for a few hands-on demos of both Gemini 3 and Nano Banana Pro. One moment that stood out for me was seeing Nano Banana Pro generate images of my face with notable accuracy. I've tested plenty of image generators, but this was the first time I had trouble distinguishing the AI-generated version from a real photo. The adherence to my actual facial features was spot on, and the holiday sweater was a nice bonus, too. What struck me most, though, wasn't just the technology being shown off but the mood in the room. Despite Gemini 3's successful launch the day before and the obvious excitement around Nano Banana Pro's release to follow, there was a notable hesitation among the team to celebrate too early. Given how positively people had responded to both Gemini 3 and the viral success of the original Nano Banana, I thought Nano Banana Pro was a slam dunk. However, the team wasn't ready to give themselves high-fives just yet. They wanted to see the launch land successfully first. And even then, the celebration would be brief, because the breakneck pace of AI development meant they'd need to get right back on that treadmill to prepare for the next release. In an industry where companies race to ship the next big model, Google's approach stood out for its willingness to delay for quality, iterate based on concrete feedback, and use its own AI to build better AI. Perhaps most telling, however, was witnessing a team that, even after a major win, understood there's little time to rest.

[2]

Alphabet stock surges on Gemini 3 AI model optimism

The new model is an improvement on its predecessor, Gemini 2.5, which Google released about eight months ago. Google said Gemini 3 allows users to get better answers to more complex questions and doesn't need as much prompting to determine the context and intent behind their requests. Gemini 3 will be integrated into Google's search products, the Gemini app and enterprise services. Analysts at D.A. Davidson said in a Tuesday note that they were quickly impressed by Gemini 3, calling it a "genuinely strong model" and "the current state-of-the-art" based on preliminary testing and how it scored against AI benchmarks. "We'd go as far to say that this latest model from [Google DeepMind] meaningfully moves the frontier forward, with capabilities that in certain areas far exceed what we've typically come to expect from this generation of frontier models," the analysts wrote. The firm has a neutral rating on Alphabet shares.

[3]

Google Search AI Mode just got a big Gemini 3 upgrade -- here's how to try it right now

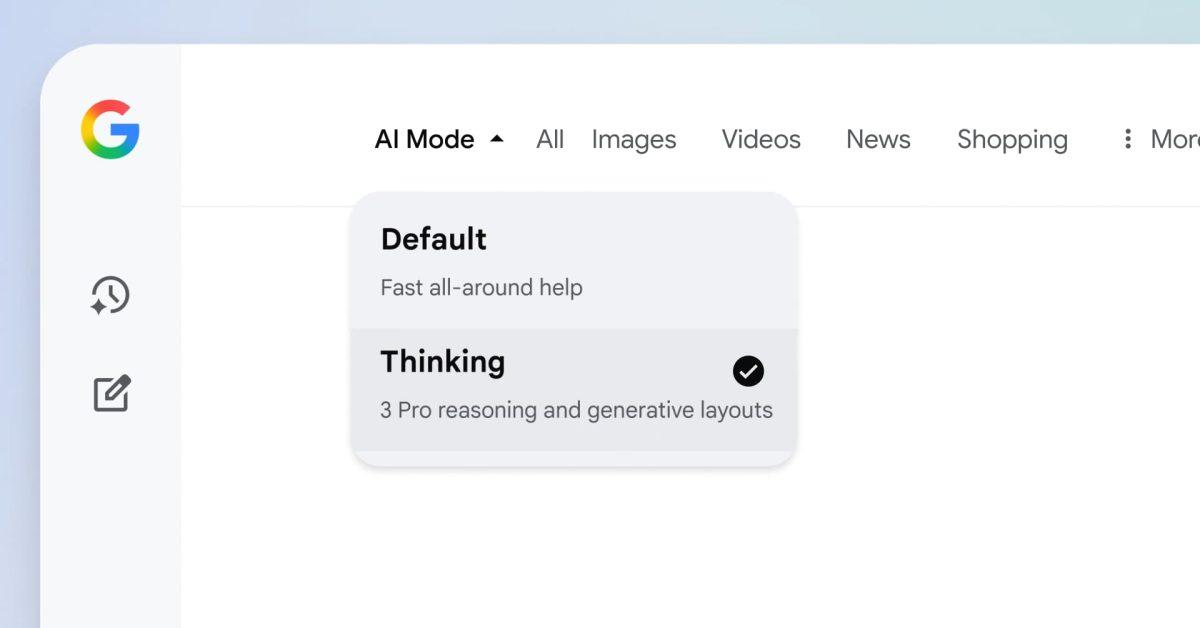

Gemini 3 has arrived, and with it come huge advancements for Google users. These show up in a variety of places, but most notably in search. Google's AI Mode, a feature built into Google Search, is now smarter and more capable thanks to the latest Gemini model. Specifically, I'm talking about the new 'Thinking' mode. Your more complicated Google searches can now be tackled by a powerful AI tool, scouring the depths of the internet and carefully analyzing your request. AI Mode can even code on the fly, creating interactive tools and games to help answer certain queries. So, if you're new to the world of Google AI Mode, here is how to access it and use the latest Gemini 3 upgrades. Try messing around with some different queries and see if you can trigger Google into generating one of these interactive tools.

Right now, Google is trialing a lot of Gemini 3's features for those in the US only. They are also available only on the two paid plans. However, Google has already stated that it plans on bringing the AI Mode changes to all plans, both free and paid, in the near future. As for other regions of the world, there is currently no news. Based on previous AI model launches, Google should start to make Gemini 3 available in more regions in the next couple of months.

[4]

Google's Gemini 3 unleashes a new era of AI search, build and think smarter

Google has launched Gemini 3, its most advanced artificial-intelligence model to date. Unlike previous model updates, Gemini 3 is embedded into Google Search from day one and available across multiple platforms. With benchmark-beating performance in reasoning, multimodal understanding and agentic coding, Google is signalling a pivot from pure research to revenue-driving AI applications. Here is what Gemini 3 brings, why it matters, and what the implications are for users, developers and the broader AI ecosystem.

In what is arguably a strategic pivot in the AI arms race, Google has rolled out Gemini 3, a model designed not just to elevate research metrics but to be product-ready at launch. According to Google's blog post, this is "our most intelligent model" yet, built for learning, building and planning anything. On the business side, Google also confirmed that Gemini 3 is embedded into Search from day one. According to the official launch page, Gemini 3 makes substantial gains across reasoning, multimodal capabilities and tool usage.

Embedding Gemini 3 directly into Search is a significant strategic move. Historically, model launches have been followed by product integration weeks or months later. Google's chief AI architect highlighted that this time the rollout is immediate. For users, this means that the AI layer is not just an optional add-on but part of the core experience. For Google's advertising and cloud business, the faster rollout into products suggests the company is accelerating monetisation. Google emphasised revenue-generating consumer and enterprise products at launch.

Gemini 3 is available through Google's suite of tools: the Gemini app, AI Studio, Vertex AI and the newly introduced developer platform Google Antigravity. Developers now have access to a model that is described as best-in-class for "vibe coding" and interactive web UI generation. 
In enterprise scenarios, the ability to plan, build and execute via agentic workflows opens new possibilities for automation, custom applications and productivity gains. From a strategic content or product-owner perspective, this means the barrier to developing sophisticated AI-driven tools is further lowered. While the advances are noteworthy, there are several practical considerations.

Gemini 3 is more than another incremental AI model release. It signals a shift in Google's strategy from pure capability leadership to product-driven, rapidly deployable AI across Search, developer tools and enterprise workflows. For content professionals, technologists and business strategists alike, the question is no longer if these models matter, but how they will transform the workflows, content ecosystems and competitive dynamics we operate within. The organisations that adapt fastest, integrate AI meaningfully and maintain human-centric value will be the ones that win.

[5]

Google Gemini 3 Gives You Superpowers : Here's How to Unlock Them

What if you could supercharge your creativity, streamline your workflow, and tackle challenges with the precision of a seasoned expert, all without breaking a sweat? Bold claim, right? But that's exactly what Google's latest AI marvels, Gemini 3 and Nano Banana, promise to deliver. These innovative tools aren't just upgrades, they're fantastic options, designed to amplify your capabilities in ways you might not have thought possible. Whether you're a developer crafting immersive simulations, a marketer designing standout campaigns, or an educator simplifying complex ideas, these AI models are your ticket to unlocking a new level of productivity and innovation. With their ability to interpret multimodal inputs and generate professional-grade visuals, they're not just tools, they're your creative partners. In this overview Riley Brown explains how to harness the multimodal brilliance of Gemini 3 and the visual mastery of Nano Banana to elevate your projects. You'll discover how these AI models can seamlessly integrate into your existing workflows, helping you create everything from interactive 3D environments to eye-catching infographics with ease. But this isn't just about tools, it's about transformation. By the end, you'll not only understand how to use these technologies but also how to think differently about what's possible in your field. So, if you're ready to unlock your superpowers and reimagine what you can achieve, let's take the first step together. Gemini 3 is a new AI model that excels in multimodal understanding, allowing it to process and interpret text, images, and other data types seamlessly. This versatility makes it an invaluable tool for creating content that is both contextually accurate and visually compelling. Whether your focus is on coding, designing, or generating visual content, Gemini 3 adapts to your specific needs, offering a tailored approach to problem-solving. 
For example, if you are working on an educational platform, Gemini 3 can assist in designing interactive simulations that simplify complex concepts. Its ability to interpret multimodal inputs ensures that your content is not only functional but also engaging and accessible to a diverse audience.

Nano Banana complements Gemini 3 by focusing on the generation of high-quality visual content. This AI model is particularly suited for marketers, educators, and content creators who require professional-grade visuals without the steep learning curve associated with traditional design tools. Its intuitive design and robust capabilities make it an essential resource for producing impactful visual materials. For instance, if you are launching a marketing campaign, Nano Banana can quickly produce eye-catching visuals that effectively communicate your message. Its user-friendly interface allows you to focus on strategic planning and creative execution, rather than the technical intricacies of design.

The combined strengths of Gemini 3 and Nano Banana unlock a wide array of possibilities across various industries. These tools are particularly valuable for professionals seeking to enhance their workflows and deliver high-quality results. For developers, platforms like the Vibe Code App enable seamless integration of these AI models into mobile app development. This allows for the creation of AI-powered applications that are both functional and visually appealing, providing users with a superior experience.

To fully harness the capabilities of Gemini 3 and Nano Banana, Google offers a suite of supporting platforms designed to enhance their functionality and ease of use. These platforms ensure that you can integrate AI into your existing workflows without disruption, saving time and boosting productivity. 
These tools work in harmony with Gemini 3 and Nano Banana, offering a streamlined approach to integrating AI into your projects. By using these platforms, you can enhance your productivity and focus on delivering innovative solutions. The introduction of Gemini 3 and Nano Banana marks a pivotal moment in the evolution of artificial intelligence. These tools have the potential to reshape industries such as education, marketing, and content creation by making advanced technologies more accessible to a broader audience. Developers, in particular, can use these models to create AI-powered applications that address specific user needs with precision and creativity. As competition in the AI sector intensifies, with companies like OpenAI and Anthropic driving innovation, the future promises even more powerful tools and capabilities. This ongoing evolution will provide you with greater opportunities to enhance your work and explore new possibilities in digital content and application development. By staying ahead of these advancements, you can position yourself at the forefront of AI-driven innovation.

[6]

Why Alphabet Stock Climbed to an All-Time High Today | The Motley Fool

In a blog post, CEO Sundar Pichai marveled at the staggering scale of the company's artificial intelligence (AI) initiatives. Google's AI Overviews search offering already has 2 billion monthly users. Its Gemini AI assistant app has over 650 million monthly users. Over 70% of Google's cloud computing customers use its AI services. And 13 million developers are building products with its generative AI models. "And that is just a snippet of the impact we're seeing," Pichai said. Judging by the impressive specs of its newest model, Alphabet's standing in the AI race is about to improve even further. Gemini 3 is Alphabet's most intelligent AI model. It's designed to quickly synthesize information across text, images, video, audio, and code. Gemini 3 offers state-of-the-art reasoning that's better at understanding the context around a user's request with less prompting. It significantly outperforms Alphabet's previous models on every benchmark, such as mathematics, scientific knowledge, coding, and factual accuracy. Alphabet's latest model is also designed to deliver responses more directly and concisely, without the clichés and flattery that have annoyed users of other leading models. Alphabet is making these new tools available today in its search browser's AI Mode, Gemini app, and developer platforms.

[7]

Gemini 3 is Google's 'most intelligent' AI model. Could it be a problem for its rival OpenAI's ChatGPT?

Google has launched its advanced Gemini 3 AI model, boasting superior reasoning and multimodal capabilities that outperform previous versions and rival OpenAI's offerings. Integrated directly into Google Search, Gemini 3 aims to enhance user experience with more intelligent search results and interactive visualizations, marking a significant move in the AI race.

Google's much-awaited Gemini 3 AI model has been launched, and the tech giant said the new model is both smarter and better for visual learning. In a blog post, Google described Gemini 3 as its "most intelligent model," one that can "help bring any idea to life." "Built on a foundation of state-of-the-art reasoning, Gemini 3 Pro delivers unparalleled results across every major AI benchmark compared to previous versions. It also surpasses 2.5 Pro at coding, mastering both agentic workflows and complex zero-shot tasks," the tech giant said.

Business analysts are calling Gemini 3 the most aggressive move by Google against its rival OpenAI's ChatGPT, according to Business Insider. After GPT-5's modest arrival earlier this year, the pressure has been on for Google to deliver something much better. The launch of Gemini 3 marks a turning point in the AI race, with Google integrating the model directly into Google Search from day one. Users can access it instantly through a new "AI mode" toggle -- no separate app or download needed.

Google says Gemini 3 is an improvement in both reasoning and multimodal abilities, adding that it significantly outperforms 2.5 Pro on every major AI benchmark. Google claims that Gemini 3 is its most advanced model and that it is better at coding. The new AI model is more creative and versatile than previous iterations. Gemini 3 Pro Image offers a significant leap forward from 2.5 Flash Image, transforming abstract image generation into functional assets. 
It excels in handling logic and language, and delivers state-of-the-art text rendering, producing clear, accurate text integrated in your images. "It doesn't just process text or images separately," said Tulsee Doshi, product lead for Gemini, on a roundtable with reporters this week. "It actually understands the nuances across them to convert information into the medium that actually makes most sense for you." Google said it has strengthened the model's coding abilities, meaning it can create a presentation or an interactive graphic to explain a complex idea.

ChatGPT remains one of the most widely used AI chatbots globally, but its ecosystem is relatively limited. It relies on partners for chips, cloud infrastructure, and distribution, while Google controls its entire pipeline. Highlighting this advantage, Koray Kavukcuoglu said, "One of the most important things for us at Google is this is possible because we have a very differentiated full-stack approach." With Gemini 3, Google is positioning itself not just as a rival, but as a full-fledged alternative to ChatGPT across everyday search functions and productivity tools.

According to Business Insider, any US user who pays for Google's Pro or Ultra Gemini tiers will see a new "Thinking" option in Google Search's AI Mode, which will use Gemini 3 if you choose it. Google says it will soon make Gemini 3 in search available to all. The new model should perform better searches by breaking down your query into even more pieces, Google said. Gemini 3 will also be capable of building more visualizations and interactive graphics right onto the AI Mode search page. Google claims that Gemini 3 is its "most factual" model to date. 
Bernstein analyst Mark Schmulik told BI, "There's no debate that Google has all the technical intangibles across the stack... but we need HARD evidence that they're putting this all together. With Gemini 3, we may have just gotten some."

[8]

Alphabet (GOOGL) stock jumps over 6% to hit $300 for the first time in history as Gemini 3 powers Alphabet's biggest breakout of the year

Alphabet (GOOGL) stock today: Alphabet (GOOGL) jumped more than 6% to $302.87 and crossed $300 for the first time ever after Google launched Gemini 3. The stock hit an intraday high near $304. Volume reached 23.7 million shares. The market cap stood around $2.94 trillion. Alphabet's 2025 gains are now above 50%. The company reported Q3 revenue of $102 billion, up 16%, with stronger margins. Gemini 3 delivers major advances in reasoning, multimodal inputs, and a 1 million-token window. The model integrates across Search, the Gemini app, Vertex AI, and Google's new Antigravity platform. Alphabet (GOOGL) stock today: Alphabet (GOOGL) surged more than 6% to $302.87, crossing the $300 mark for the first time in its history after Google launched its new Gemini 3 artificial intelligence model. The stock touched an intraday high near $304, supported by heavy trading volume above 23.7 million shares. Its market capitalization hovered around $2.94 trillion. Alphabet has gained over 50% in 2025, driven by strong earnings, rapid AI adoption, and momentum in cloud and autonomous driving divisions. The company recently posted record third-quarter revenue of $102 billion, up 16% year over year, with improved operating margins supported by stable advertising demand and expanding cloud services. Gemini 3 is described as Google's most advanced model, offering major improvements in reasoning, multimodal capabilities, and autonomous task handling, with a context window of up to one million tokens. The model outperforms earlier versions and integrates into Google Search, the Gemini app, developer tools, Vertex AI, and the new agentic platform Google Antigravity, enabling dynamic visualizations and real-time AI interactions. The launch is also backed by expanded safety evaluations designed to reduce misuse risks and strengthen reliability. 
Investors are watching how quickly Gemini 3 scales across consumer and enterprise products as it becomes available to Pro, Ultra, and enterprise users. Google introduced Gemini 3 with major upgrades in reasoning, multimodal intelligence, and autonomous task execution. The launch includes Gemini 3 Pro, which outperforms Gemini 2.5 Pro across critical AI benchmarks. Google also revealed Deep Think mode, designed for complex long-form reasoning and advanced decision-making. The model features a 1 million-token context window, enabling broader and more accurate understanding of text, images, and videos. Gemini 3 is now integrated across Google Search, the Gemini app, developer platforms, and Google's new agentic environment, Google Antigravity. Users can generate richer visual content, dynamic layouts, and interactive tools in real time. Google highlighted new safety systems, including stronger defenses against prompt injections and misuse, making Gemini 3 its "most secure AI release" yet. Alphabet's stock has gained more than 50% in 2025, supported by rapid advancements in AI and solid performance across advertising, cloud, and autonomous driving operations. The company recently reported record third-quarter revenue of $102 billion, up 16% year over year, with improved operating margins. Advertising demand remained strong while Google Cloud continued to expand, benefiting from rising enterprise AI adoption. Key fundamentals also remain healthy. Alphabet's trailing earnings per share stand at $10.13, and its price-to-earnings ratio sits near 23.66, signaling robust profitability relative to its historical range. The stock also moved above its previous 52-week high of $293.95, reinforcing strong momentum heading into the final quarter of the year. Shareholders are watching how quickly Google can scale Gemini 3 across its consumer and enterprise products. 
The model is now available to Google AI Pro and Ultra subscribers, developers through the Gemini API, and enterprise users on Vertex AI. Broader access through Google Search and the Gemini app is expected to accelerate adoption. However, analysts note the need to monitor regulatory developments in the U.S. and Europe, where AI governance and antitrust scrutiny are growing. Despite those risks, Alphabet's expanding AI ecosystem, strong earnings base, and leadership in cloud and digital advertising continue to support its position as one of the most influential technology companies in the world.

[9]

Gemini 3 launch could shift Alphabet sentiment toward AI-winner status: Mizuho By Investing.com

Investing.com -- Anxiety over OpenAI's competitive threat across the internet space has dominated investor debates recently, but Mizuho argues the narrative around Alphabet may be poised for a recalibration as Google prepares to roll out its next major AI model. The firm's analysts believe the "imminent roll-out of Gemini 3 could further tilt the sentiment for Alphabet shares towards AI-winner, at least near term," helped by a stabilizing trend in Google's search activity and rising engagement with its own AI tools.

Mizuho notes that browser-based usage data shows ChatGPT's momentum has cooled since the spring, with time spent per user decelerating across both desktop and mobile. App engagement has also moderated, while global downloads are on track for another month of decline, according to third-party analytics cited in the report. Against that backdrop, Gemini appears to be gaining ground, analysts said, with traffic and token-processing trends pointing to expanding usage across Google's ecosystem. They also highlight that AI-assistant market share has shifted meaningfully since late 2024, with the combined share of GPT and Gemini rising from 7% to 13% -- and more recent gains skewing toward Gemini as Google's tools attract increasing engagement.

"The trend indicates broad usage of Google AI products across Search, Gemini, and other channels, which has grown, and could continue growing exponentially driven by Google's product ecosystem and as a whole, compete effectively with OpenAI," Mizuho analysts led by Lloyd Walmsley wrote. Google's AI Mode in Search helped return search traffic growth to positive territory in July, supporting early signs that Alphabet's defensive strategy is working. Recent data also shows Gemini's browser share rising, even as ChatGPT's gains slow. Analysts said that "Google's AI product likely gets the most access through AI Mode in core search," making it a key component in stemming query losses to AI assistants.

Gemini 3 is expected to deliver significant upgrades in multimodality, reasoning, and automation. Mizuho points to anticipated real-time video understanding, deeper context windows, and a new default reasoning mode, alongside tighter Workspace integration and an "Agent Mode" capable of executing browser-level tasks. Developer chatter also hints at on-device AI and enhanced pairing with Google's Veo 3.1 for video creation. Analysts believe these improvements could help close the gap with OpenAI or even shift leadership in areas such as enterprise automation.

While uncertainty remains around long-term competitive dynamics, the team sees scope for overhangs to ease as Alphabet rolls out its own AI advancements. "In the near term, our bias is to think that Google continues to benefit on the core business fundamentals from AI and rolls out impressive AI product of its own - most imminently Gemini 3 - that continues to swing the pendulum more towards AI winner and less towards AI loser," the analysts wrote.

[10]

Alphabet stock up thanks to launch of its new AI model, Gemini 3

Alphabet stock has risen almost 4% today following the launch of Gemini 3, Google's latest artificial intelligence model. The successor to Gemini 2.5, which launched eight months ago, the new model is touted as more capable, able to understand complex queries with fewer prompts and provide more precise responses. It will be deployed across Google's search services, the Gemini app, and enterprise-oriented tools. Analysts praise Google DeepMind's progress while maintaining a cautious stance on the stock. Bank of America Securities, for example, speaks of meaningful progress that helps Google close the "perceived gap" with competitors such as OpenAI and Anthropic, while maintaining a Buy rating on Alphabet stock.

Google releases Gemini 3, its most advanced AI model, with immediate integration into Google Search and enterprise services. The launch represents a strategic pivot from pure research to revenue-driving AI applications, featuring enhanced reasoning capabilities and multimodal understanding.

Strategic Launch Marks New Phase in AI Competition

Google has unveiled Gemini 3, its most advanced artificial intelligence model to date, with immediate integration across Google Search, the Gemini app, and enterprise services. [1] The launch represents a significant strategic pivot from pure research capabilities to revenue-generating AI applications, signaling Google's intensified focus on monetizing its AI investments in a competitive landscape dominated by OpenAI, Anthropic, and other major players.

The model's immediate deployment across Google's ecosystem marks a departure from the company's previous approach of launching experimental models followed by gradual product integration weeks or months later. [4] This accelerated rollout strategy demonstrates Google's commitment to rapidly deploying AI capabilities that can drive business value across its advertising and cloud services.

Enhanced Capabilities Drive Market Confidence

Alphabet's stock experienced a notable surge following the Gemini 3 announcement, with analysts expressing strong optimism about the model's capabilities. [2] D.A. Davidson analysts described Gemini 3 as a "genuinely strong model" and "the current state-of-the-art," noting that it "meaningfully moves the frontier forward, with capabilities that in certain areas far exceed what we've typically come to expect from this generation of frontier models."

The model demonstrates substantial improvements over its predecessor, Gemini 2.5, which was released approximately eight months ago. Key enhancements include superior reasoning performance, advanced multimodal understanding, and improved tool usage capabilities. [4] Users can now receive better answers to complex questions with reduced prompting requirements, as the model more effectively determines context and intent behind requests.

Quality-Driven Development Philosophy

Google's development approach for Gemini 3 reflected a significant shift in methodology, prioritizing quality over speed in response to developer feedback about previous experimental releases. Tulsee Doshi, senior director and head of product for Gemini Models, explained that the team adopted a "much longer iteration cycle of giving the model to folks, getting feedback, using that feedback to iterate on the models more." [1]

The development team learned from previous releases that caused "churn" for developers who were constantly adapting to experimental model changes. This time, they invested heavily in post-training work focused on usability improvements, including better tool use and persona refinement based on extensive user feedback. The team's philosophy centered on being "quality-driven" rather than "date-driven," opting to conduct testing and iteration behind closed doors rather than in public.

Advanced Search Integration and New Features

Google Search has received significant enhancements through Gemini 3 integration, most notably the introduction of "Thinking" mode within AI Mode. [3] This feature enables the AI to tackle complex queries by thoroughly analyzing requests and even generating interactive tools and games on demand. The enhanced search capabilities allow for real-time coding and creation of interactive elements to better answer user queries.

Currently, these advanced features are primarily available to users in the United States, with both free and paid plan access. Google has indicated plans to expand availability to additional regions and user tiers in the coming months, following typical AI model rollout patterns observed across the industry.

Developer and Enterprise Applications

Gemini 3's capabilities extend significantly into developer and enterprise environments, with availability through Google's comprehensive suite of tools including the Gemini app, AI Studio, Vertex AI, and the newly introduced Google Antigravity platform. [4] The model excels in "vibe coding" and interactive web UI generation, lowering barriers for developing sophisticated AI-driven applications.

For enterprise scenarios, Gemini 3's ability to plan, build, and execute through agentic workflows opens new possibilities for automation, custom applications, and productivity improvements. [5] The model's multimodal understanding capabilities make it particularly valuable for creating contextually accurate and visually compelling content across various industries including education, marketing, and content creation.

Summarized by

Navi
