Amazon Launches Project Rainier: Massive AI Infrastructure to Power Anthropic's Claude Model

2 Sources


[1]

Amazon launches AI infrastructure project, to power Anthropic's Claude model

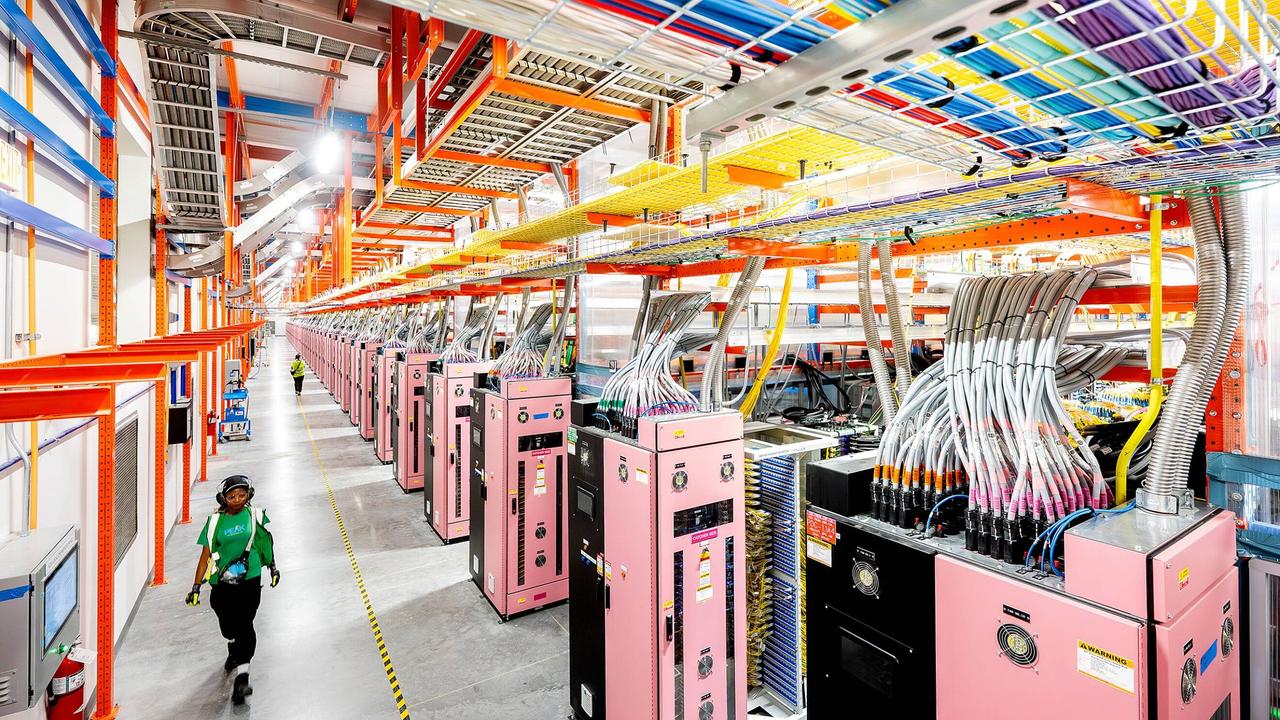

Amazon.com said on Wednesday it has launched its compute cluster project called Rainier, and added that artificial intelligence firm Anthropic will use more than a million chips of the infrastructure by the end of the year. The tech giant had started Project Rainier last year to build an AI compute cluster spread across multiple data centers in the U.S. The computer incorporates nearly half-a-million of Amazon's in-house Trainium2 chips. As AI models advance, cloud firms such as Amazon's Web Services are scaling up their data center plans to meet the growing need for massive compute capacity. Anthropic, backed by Amazon, is using Project Rainier's compute cluster to build and deploy its AI model Claude. The AI firm will scale to use more than 1 million Trainium2 chips across Amazon's Web Services by the end of this year. Rainier's compute power will also be used for future versions of Claude, Amazon said.

[2]

Amazon launches AI infrastructure project, to power Anthropic's Claude model

(Reuters) - Amazon.com said on Wednesday it has launched its compute cluster project called Rainier, and added that artificial intelligence firm Anthropic will use more than a million chips of the infrastructure by the end of the year. The tech giant had started Project Rainier last year to build an AI compute cluster spread across multiple data centers in the U.S. The computer incorporates nearly half-a-million of Amazon's in-house Trainium2 chips. As AI models advance, cloud firms such as Amazon's Web Services are scaling up their data center plans to meet the growing need for massive compute capacity. Anthropic, backed by Amazon, is using Project Rainier's compute cluster to build and deploy its AI model Claude. The AI firm will scale to use more than 1 million Trainium2 chips across Amazon's Web Services by the end of this year. Rainier's compute power will also be used for future versions of Claude, Amazon said. (Reporting by Zaheer Kachwala in Bengaluru; Editing by Leroy Leo)


Amazon has officially launched Project Rainier, a massive AI compute cluster incorporating nearly half-a-million Trainium2 chips across multiple U.S. data centers. Anthropic will scale to use over 1 million chips by year-end to power its Claude AI model.

Amazon Unveils Project Rainier Infrastructure

Amazon Web Services has officially launched Project Rainier, a massive artificial intelligence compute cluster that represents one of the largest AI infrastructure deployments to date. The project, which Amazon began developing last year, incorporates nearly half-a-million of the company's proprietary Trainium2 chips distributed across multiple data centers in the United States [1].

The project reflects Amazon's push to keep pace with the rapidly growing compute requirements of AI. As AI models become more sophisticated and resource-intensive, cloud providers are investing heavily in specialized hardware and distributed computing architectures to meet demand [2].

Anthropic Partnership and Scaling Plans

Anthropic, the AI company backed by Amazon, is using Project Rainier's compute cluster to build and deploy its Claude AI model, which competes in a crowded field of large language models [1].

The scale of Anthropic's planned usage is considerable: the company expects to use more than one million Trainium2 chips across Amazon Web Services by the end of this year. That is roughly double the nearly half-a-million chips in the Rainier cluster today, underscoring the computational demands of training and running advanced AI models [2].

Industry Implications and Future Development

The launch of Project Rainier reflects a broader trend in cloud computing, where major providers are rapidly scaling their data center capacity to accommodate AI workloads. Amazon's investment in custom silicon through its Trainium2 chips is a strategic move to reduce its dependence on third-party hardware while optimizing performance for AI applications [1].

Amazon said Project Rainier's computing power will also be used for future versions of Claude, not only the current model. This suggests the infrastructure was designed with scalability in mind, anticipating that AI models will keep growing in size and complexity [2].

Summarized by Navi
