Amazon's $11 Billion Project Rainier AI Megacluster Goes Live, Rivaling OpenAI's Stargate Initiative

3 Sources


[1]

AWS Stargate-smashing Rainier megacluster goes live

Half a million Trainium2 chips now running Anthropic workloads, with half a million more waiting in the wings

Never mind Sam Altman's Stargate, which is just beginning to open its portal to distant AI-fueled worlds: Amazon's competing mountain of AI compute power is already up and running. Amazon Web Services today announced that Project Rainier, its Stargate-rivaling AI "UltraCluster", is now up and running, with "nearly half a million" Trainium2 chips serving the massive machine across multiple datacenters.

Just how many datacenters and how much compute power Rainier actually offers wasn't shared, but AWS assured the public in its press release that the machine is "one of the world's largest AI compute clusters," and it came online in record time. "Project Rainier ... is now fully operational, less than one year after it was first announced," AWS said - and it's not stopping at that half-a-million Trainium2 chips, either. The cluster is already being used by Amazon's AI partners at Anthropic, who the company said will be scaling "to be on more than 1 million Trainium2 chips - for workloads including training and inference - by the end of the year."

From what we know based on earlier discussions with AWS staff in our preview of Project Rainier from the summer, each one of the datacenters housing the project will be massive. An AWS spokesperson told us in July that one site in Indiana that is now partially online as part of the Rainier cluster will eventually span 30 datacenter buildings, each measuring 200,000 square feet. We reached out to AWS for more information on the Rainier cluster, how many datacenters currently encompass it, and how large it'll be by year's end, but didn't hear back.

AWS is locked into the AI capacity battle versus the Stargate joint-venture project between OpenAI and partners like Oracle and SoftBank. There were around 200 megawatts of Stargate compute power online at the OpenAI-backed initiative's Abilene, Texas, datacenter as of earlier this month, and commitments from OpenAI's partners plan to expand the Texas Stargate DC to 1.2 GW of capacity by mid-2026. Oracle is supposed to help add 5.7 GW of capacity in the next four years.

Amazon's logistical expertise certainly helped it build fast, but it's also got a hardware advantage. "Unlike most other cloud providers, AWS builds its own hardware, and in doing so, can control every aspect of the technology stack, from a chip's tiniest components, to the software that runs on it, to the complete design of the datacenter itself," AWS said in a press release.

[2]

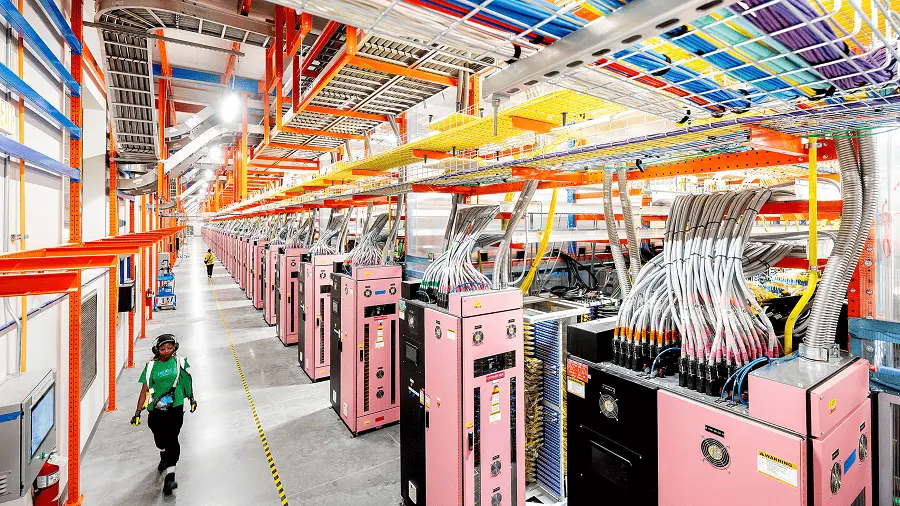

Amazon opens $11 billion AI data center in rural Indiana as rivals race to break ground

NEW CARLISLE, Indiana -- A year ago, it was farmland. Now, the 1,200-acre site near Lake Michigan is home to one of the largest operational AI data centers in the world. It's called Project Rainier, and it's the spot where Amazon is training frontier artificial intelligence models entirely on its own chips.

Amazon and its competitors have pledged more than $1 trillion towards AI data center projects that are so ambitious, skeptics wonder if there's enough money, energy and community support to get them off the ground. OpenAI has Stargate -- its name for a slate of mammoth AI data centers that it plans to develop. Rainier is Amazon's $11 billion answer. And it's not a concept, but a cluster that's already online.

The complex was built exclusively to train and run models from Anthropic, the AI startup behind Claude, and one of Amazon's largest cloud customers and AI partners. "This is not some future project that we've talked about that maybe comes alive," Matt Garman, CEO of Amazon Web Services, told CNBC in an interview at Amazon's Seattle headquarters. "This is running and training their models today."

Tech's megacaps are all racing to build supercomputing sites to meet an expected explosion in demand. Meta is planning a 2-gigawatt Hyperion site in Louisiana, while Google parent Alphabet just broke ground in West Memphis, Arkansas, across the Mississippi River from Elon Musk's Colossus data center for his startup xAI. In the span of a month, OpenAI committed to 33 gigawatts of new compute, a buildout CEO Sam Altman says represents $1.4 trillion in upcoming obligations, with partners including Nvidia, Advanced Micro Devices, Broadcom and Oracle.

Amazon is already delivering, thanks to decades of experience in large-scale logistics. From massive fulfillment centers and logistics hubs to AWS data centers and its HQ2 project, Amazon has deep and close relationships with state and local officials and a playbook that's now being used to get AI infrastructure set up in record time.

[3]

AWS opens $11B Project Rainier data center campus built for Anthropic - SiliconANGLE

Amazon Web Services Inc. has opened an $11 billion data center campus in Indiana that will run artificial intelligence models for Anthropic PBC. CNBC reported the milestone today. The campus, which is located near Lake Michigan, was built as part of an initiative called Project Rainier that AWS announced last year. It will host both training and inference workloads.

AWS parent Amazon.com Inc. has invested $8 billion in Anthropic since the start of 2024. The ChatGPT rival uses AWS infrastructure to run many of its most important workloads. Additionally, Anthropic has reportedly provided the cloud giant with technical input that helped improve the efficiency of its AI infrastructure.

The Project Rainier campus currently hosts 7 buildings that contain nearly 500,000 AWS Trainium2 chips. That number is expected to top one million by the end of the year. Further down the line, AWS will reportedly construct another 23 buildings that are expected to increase the site's data center capacity to over 2.2 gigawatts.

The Amazon unit made Trainium2 generally available to cloud customers last December. The processor comprises two compute tiles, or chiplets, and four stacks of HBM3 memory. Those components sit on a base layer called an interposer that enables them to exchange data with one another. The Trainium2's compute tiles host eight cores based on a custom design called the NeuronCore-v3. According to AWS, each NeuronCore-v3 core comprises four compute modules. One runs calculations on one piece of data at a time, while another can process multiple pieces of data at once. The two other modules are optimized to run linear algebra operations and custom code provided by developers.

Project Rainier's chips run in systems called UltraServers. According to AWS, each machine contains four servers that host 16 Trainium2 chips apiece. The chips exchange data via a custom interconnect dubbed NeuronLink.

AWS plans to launch a new machine learning accelerator, AWS Trainium3, by the end of the year. It will become available to not only Anthropic but also other customers. Last December, AWS disclosed that UltraServers equipped with Trainium3 are expected to provide four times the performance of current-generation machines.

Project Rainier should make it easier for Anthropic to remain competitive with OpenAI Group PBC. Over the past few months, the ChatGPT developer has announced plans to deploy 33 gigawatts of data center capacity. OpenAI will use graphics cards from Nvidia Corp. and Advanced Micro Devices Inc., as well as a custom AI chip set to enter mass production next year.


AWS launches Project Rainier, an $11 billion AI data center campus in Indiana featuring nearly 500,000 Trainium2 chips exclusively serving Anthropic's AI workloads. The facility represents Amazon's direct challenge to OpenAI's Stargate project in the race for AI infrastructure supremacy.

Amazon's AI Infrastructure Gambit Takes Center Stage

Amazon Web Services has officially launched Project Rainier, its $11 billion AI data center campus in Indiana, marking a significant milestone in the escalating competition for AI infrastructure supremacy [1]. The facility, built on what was farmland just a year ago near Lake Michigan, represents one of the world's largest operational AI compute clusters and serves as Amazon's direct answer to OpenAI's ambitious Stargate initiative [2].

Massive Scale and Exclusive Partnership

The Project Rainier campus currently houses nearly 500,000 Trainium2 chips across seven buildings, with plans to expand to over one million chips by the end of 2025 [3]. The facility was constructed exclusively to serve Anthropic, the AI startup behind Claude and one of Amazon's largest cloud customers. This partnership reflects Amazon's $8 billion investment in Anthropic since early 2024, positioning the companies as strategic allies in the AI race [3].

Matt Garman, CEO of Amazon Web Services, emphasized the operational nature of the project, stating that "this is not some future project that we've talked about that maybe comes alive. This is running and training their models today" [2].


Technical Architecture and Custom Hardware

Project Rainier showcases Amazon's vertical integration strategy in AI infrastructure. The facility uses AWS's custom Trainium2 chips. Each chip combines two compute tiles and four stacks of HBM3 memory on a base layer called an interposer, which lets the components exchange data [3]. Each chip contains eight NeuronCore-v3 cores, and each core has four compute modules:

- one processes a single piece of data at a time;
- one processes multiple pieces of data at once;
- the remaining two are optimized for linear algebra operations and for custom code supplied by developers.

The chips operate within UltraServers, each of which contains four servers hosting 16 Trainium2 chips apiece. The chips exchange data through AWS's proprietary NeuronLink interconnect [3].
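Four servers of 16 chips each works out to 64 Trainium2 chips per UltraServer. The short Python sketch below works through that arithmetic. It then estimates how many UltraServers the reported chip counts would fill, assuming every chip sits in a fully populated UltraServer. That assumption is for illustration only: AWS has not published an UltraServer count for Rainier. Because the current count is reported only as "nearly 500,000", treating it as exactly 500,000 gives an upper-bound estimate.

```python
# Back-of-the-envelope sizing for Project Rainier's UltraServer layout.
# Reported figures: 4 servers per UltraServer, 16 Trainium2 chips per
# server, 8 NeuronCore-v3 cores per chip. The chip totals used below are
# approximations ("nearly 500,000" today, "over one million" planned).
# Assumption: every chip lives in a fully populated UltraServer.

SERVERS_PER_ULTRASERVER = 4
CHIPS_PER_SERVER = 16
CORES_PER_CHIP = 8  # NeuronCore-v3 cores per Trainium2 chip


def chips_per_ultraserver() -> int:
    """Number of Trainium2 chips in one UltraServer."""
    return SERVERS_PER_ULTRASERVER * CHIPS_PER_SERVER


def ultraservers_needed(total_chips: int) -> int:
    """Smallest whole number of UltraServers that can hold total_chips."""
    per_box = chips_per_ultraserver()
    return -(-total_chips // per_box)  # ceiling division on integers


def total_cores(total_chips: int) -> int:
    """NeuronCore-v3 cores across total_chips Trainium2 chips."""
    return total_chips * CORES_PER_CHIP


if __name__ == "__main__":
    scenarios = [("current (approx.)", 500_000), ("year-end target", 1_000_000)]
    for label, chips in scenarios:
        print(
            f"{label}: {chips:,} chips -> ~{ultraservers_needed(chips):,} "
            f"UltraServers, {total_cores(chips):,} NeuronCore-v3 cores"
        )
```

Ceiling division is used because a partially filled UltraServer still counts as a whole machine. The result is therefore a rough upper bound rather than a precise inventory.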

Competitive Landscape and Future Expansion

The launch positions Amazon directly against Stargate, OpenAI's joint venture with partners including Oracle and SoftBank. Stargate currently operates around 200 megawatts of compute power at its Abilene, Texas, datacenter, with commitments to expand that site to 1.2 gigawatts by mid-2026 [1]. More broadly, OpenAI has committed to 33 gigawatts of new compute with partners including Nvidia, Advanced Micro Devices, Broadcom and Oracle. CEO Sam Altman says this buildout represents $1.4 trillion in upcoming obligations [2].

Amazon's advantage lies in its established logistics expertise and hardware control. The company's ability to manage "every aspect of the technology stack, from a chip's tiniest components, to the software that runs on it, to the complete design of the datacenter itself" has enabled rapid deployment [1].

Strategic Implications and Industry Impact

Project Rainier represents more than an infrastructure investment: it signals Amazon's intent to stay competitive in the AI market. The facility's exclusive focus on Anthropic workloads suggests a strategic bet on the startup's potential to challenge OpenAI's market leadership. An AWS spokesperson has said the Indiana site will eventually span 30 datacenter buildings of 200,000 square feet each [1]. The 23 buildings still to be constructed are expected to raise the site's capacity to over 2.2 gigawatts [3].

The project also highlights the broader industry trend toward massive AI infrastructure investments, with Amazon and its competitors collectively pledging more than $1 trillion toward AI data center projects. Meta's planned 2-gigawatt Hyperion site in Louisiana and the site where Alphabet just broke ground in West Memphis, Arkansas, show the scale of competition emerging across the sector [2].
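The reported build-out figures can be checked with simple arithmetic. The Python sketch below adds the seven existing buildings to the 23 planned ones and totals the floor area. It also divides the 2.2-gigawatt figure evenly across all 30 buildings. That even split is purely an illustrative assumption: none of the sources say how power is distributed across the campus. And because capacity is reported as "over" 2.2 gigawatts, the per-building figure is a floor rather than an estimate of the real value.

```python
# Rough build-out arithmetic for the Indiana Project Rainier campus.
# Reported figures: 7 buildings today, 23 more planned, 200,000 sq ft per
# building, and planned capacity of "over 2.2 gigawatts".
# Assumption (illustrative only): capacity is split evenly across buildings.

CURRENT_BUILDINGS = 7
PLANNED_ADDITIONAL_BUILDINGS = 23
SQ_FT_PER_BUILDING = 200_000
PLANNED_CAPACITY_MW = 2_200  # reported as a lower bound ("over 2.2 GW")


def total_buildings() -> int:
    """Buildings on the campus once the planned expansion is complete."""
    return CURRENT_BUILDINGS + PLANNED_ADDITIONAL_BUILDINGS


def total_floor_area_sq_ft() -> int:
    """Combined floor area of all buildings at full build-out."""
    return total_buildings() * SQ_FT_PER_BUILDING


def avg_mw_per_building() -> float:
    """Average power per building under the even-split assumption."""
    return PLANNED_CAPACITY_MW / total_buildings()


if __name__ == "__main__":
    print(f"Buildings at full build-out: {total_buildings()}")
    print(f"Total floor area: {total_floor_area_sq_ft():,} sq ft")
    print(f"Average power per building (even split): ~{avg_mw_per_building():.1f} MW")
```

The totals (30 buildings, 6,000,000 square feet) follow directly from the reported numbers. The per-building power figure depends entirely on the even-split assumption.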

Summarized by Navi
