AMD Unveils Instinct MI400 and MI500 AI Accelerators to Challenge NVIDIA's Market Dominance

2 Sources

[1]

AMD confirms Instinct MI400 series AI GPUs drop in 2026, next-gen Instinct MI500 in 2027

TL;DR: AMD announces its next-gen Instinct MI400 AI accelerators for 2026, featuring the CDNA 5 architecture, 432GB HBM4 memory, and 19.6TB/sec bandwidth. The MI450 series delivers up to 40 PFLOPs FP4 performance, competing directly with NVIDIA's Vera Rubin platform in memory capacity and scale-out bandwidth.

AMD confirms its next-gen Instinct MI400 AI accelerators for 2026 based on the new CDNA 5 architecture, with a huge 432GB of HBM4 memory, where it will compete against NVIDIA's next-gen Vera Rubin AI platform.

During its Financial Analyst Day event, the company confirmed its new MI400 series will come in multiple variants: the MI455X is for training and inference, while the MI430X will be available in HPC variants. AMD's new Instinct MI400 series features the new CDNA 5 architecture, huge amounts of ultra-fast HBM4 memory, more AI formats and higher throughput, and standard-based rack-scale networking (UALoE, UAL, and UEC).

AMD says that its new Instinct MI450 series is its "most advanced AI accelerator" with 40 PFLOPs of FP4 compute performance and 20 PFLOPs of FP8 compute performance, which works out to double the compute performance of the MI350 series.

The new Instinct MI450 series will offer a 50% memory capacity upgrade over the MI350 series, with 432GB of next-gen HBM4 memory versus the 288GB of HBM3E on MI350. The MI450 will also offer 19.6TB/sec of memory bandwidth, which is more than double the 8TB/sec on the MI350 series. There's also 300GB/sec of scale-out bandwidth per GPU, so expect some big things when these chips arrive in AMD's next-gen Mega Pod systems.

AMD is positioning its next-gen Instinct MI450 series GPUs directly against NVIDIA's next-gen Vera Rubin AI platform, comparing the two at rack scale. This is what that comparison looks like.

AMD Instinct MI450 series vs NVIDIA Vera Rubin rack-scale performance:

* 1.5x Memory Capacity vs Competition
* Same Memory Bandwidth vs Competition
* Same FP4 / FP8 FLOPs vs Competition
* Same Scale-Up Bandwidth vs Competition
* 1.5x Scale-Out Bandwidth vs Competition

However, AMD wasn't finished with its announcements and teases: it will launch its future-gen Instinct MI500 series in 2027, which will act like the "Ultra" versions of AI GPUs that NVIDIA has been releasing. NVIDIA has its current-gen Blackwell GB200 and Blackwell Ultra GB300 AI chips, followed by its Rubin and beefed-up Rubin Ultra AI GPUs, with the Rubin Ultra chip reportedly raising thermal concerns at 2300W of power per chip.

In recent rumors, AMD's next-gen Instinct MI450X AI accelerator "forced" NVIDIA to boost the TDP of its Rubin VR200 AI GPU. Rubin previously had an 1800W TGP but was reportedly upgraded to 2300W to better compete against the MI450X and its 2300W TGP design, with AMD also recently upgrading the MI450X to 2500W.

[2]

AMD All-Set To Battle NVIDIA's AI Dominance With Instinct MI400 "MI455X & MI430X" Accelerators In 2026, MI500 Is The Next Big Leap For 2027

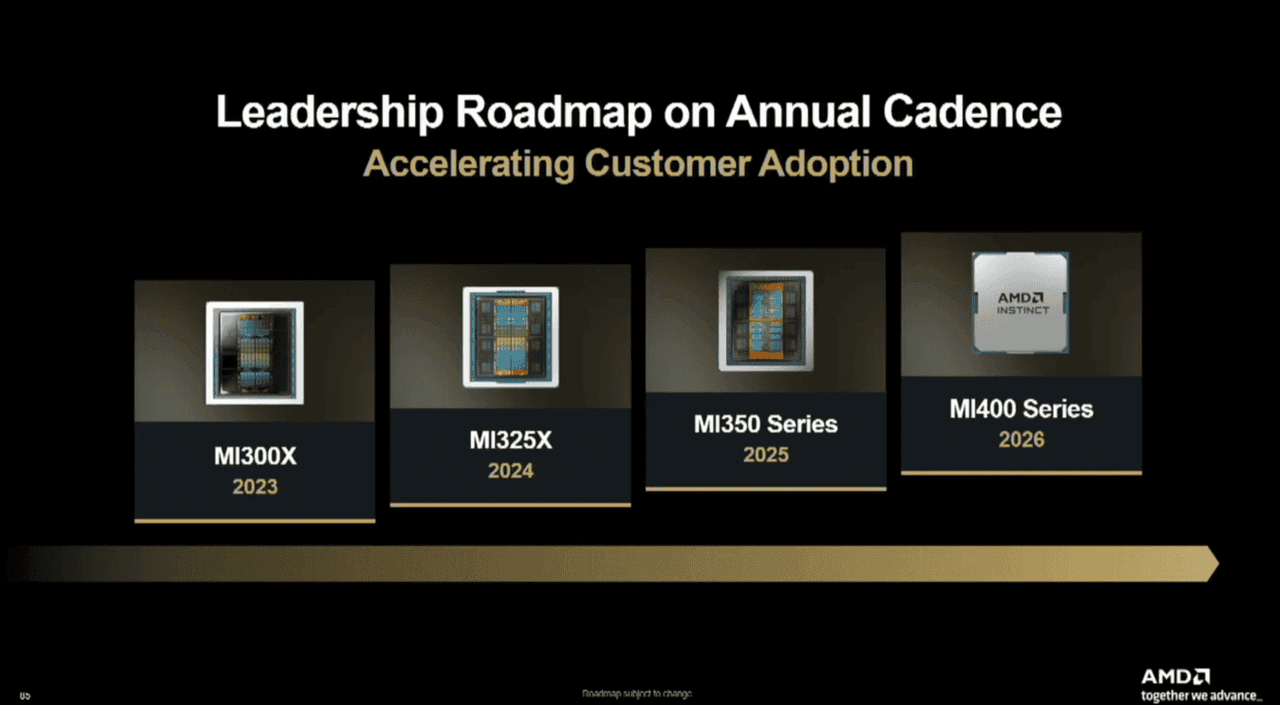

AMD's Instinct MI400 and Instinct MI500 accelerators will set the stage for an epic battle in the AI segment against NVIDIA.

AMD MI400 Series Comes In MI455X "Training/Inference" & MI430X "HPC" Variants, Instinct MI500 All Set For 2027

During its Financial Analyst Day 2025, AMD put its MI400 and MI500 series AI GPU accelerators front and center. These two Instinct families are central to AMD's AI strategy moving forward, and the company has reaffirmed its yearly launch cadence to strengthen its AI family against NVIDIA, which is dominating the segment right now.

Next year, AMD will launch its Instinct MI400 series AI accelerators. The new lineup will be based on the CDNA 5 architecture and offers:

* Increased HBM4 Capacity & Bandwidth
* Expanded AI Formats with Higher Throughput
* Standard-Based Rack-Scale Networking (UALoE, UAL, UEC)

The official metrics list the MI400 as a 40 PFLOP (FP4) & 20 PFLOP (FP8) product, which doubles the compute capability of the MI350 series, itself a hot product for AI data centers. In addition to the compute capability, AMD is also going to leverage HBM4 memory for its Instinct MI400 series. The new chip will offer a 50% memory capacity uplift, from 288GB of HBM3e to 432GB of HBM4. The HBM4 memory will offer 19.6 TB/s of bandwidth, more than double the 8 TB/s of the MI350 series. The GPU will also feature 300 GB/s of scale-out bandwidth per GPU, so some big things are coming in the next generation of Instinct.

AMD has positioned its Instinct MI400 GPUs against NVIDIA's Vera Rubin, and the high-level comparison looks something like the following:

* 1.5x Memory Capacity vs Competition
* Same Memory Bandwidth vs Competition
* Same FP4 / FP8 FLOPs vs Competition
* Same Scale-Up Bandwidth vs Competition
* 1.5x Scale-Out Bandwidth vs Competition

For the MI400 series, there will be two products. The first is the Instinct MI455X, which is aimed at scale AI Training & Inference workloads. The other is the MI430X, which is aimed at HPC & Sovereign AI workloads, featuring hardware-based FP64 capabilities, hybrid compute (CPU+GPU), and the same HBM4 memory as the MI455X.

In 2027, AMD will introduce its next-gen Instinct MI500 series AI accelerators. Since AMD is shifting to an annual cadence, we are going to see updates on the datacenter and AI front at a very rapid pace, similar to what NVIDIA is doing now with a standard and an "Ultra" offering. These will be used to power the next-gen AI racks and will offer a disruptive uplift in overall performance. According to AMD, the Instinct MI500 series will offer next-gen compute, memory, and interconnect capabilities.

AMD announces its next-generation Instinct MI400 series AI accelerators for 2026 featuring CDNA 5 architecture and 432GB HBM4 memory, followed by the MI500 series in 2027, positioning itself as a direct competitor to NVIDIA's Vera Rubin platform.

AMD Announces Next-Generation AI Accelerator Roadmap

AMD unveiled its roadmap for artificial intelligence accelerators during its Financial Analyst Day 2025, announcing the Instinct MI400 series for 2026 and the MI500 series for 2027. The move positions the company as a direct challenger to NVIDIA's dominance in the AI accelerator market [1][2].

Source: TweakTown

Instinct MI400 Series: Technical Specifications and Performance

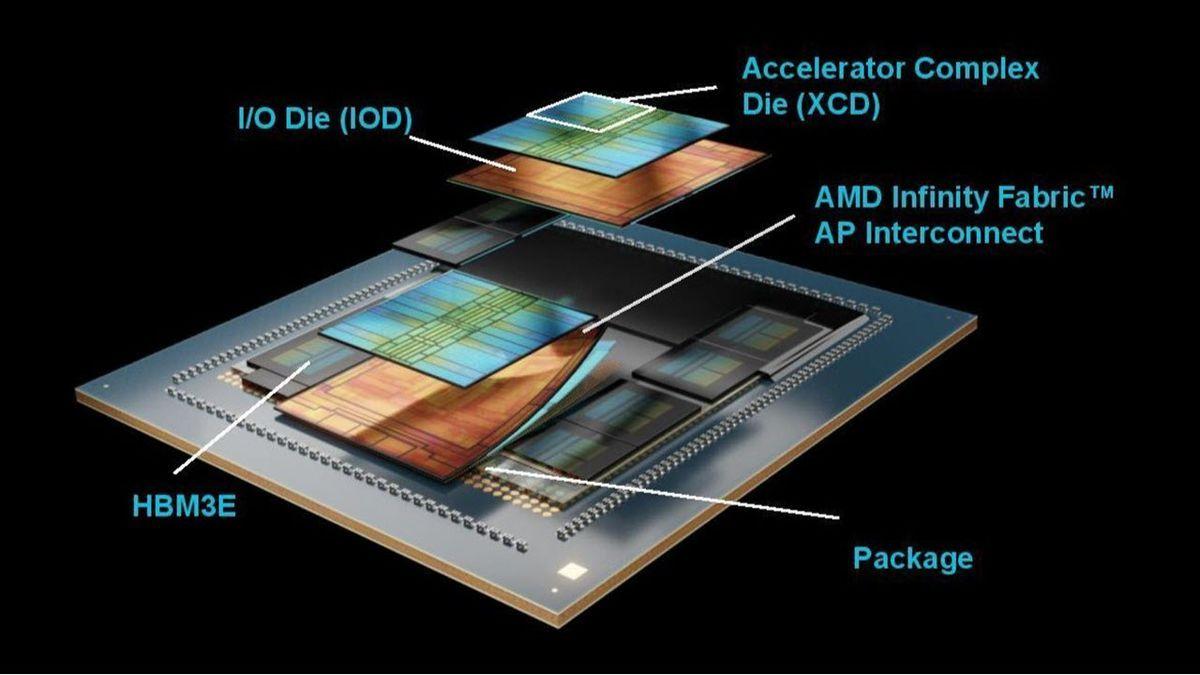

The Instinct MI400 series, built on AMD's new CDNA 5 architecture, represents a significant leap in AI computing capabilities. The flagship MI450 variant delivers 40 PFLOPs of FP4 compute performance and 20 PFLOPs of FP8 compute performance, effectively doubling the computational power of the current MI350 series [1].

Source: Wccftech

Memory specifications also improve substantially: the MI400 series features 432GB of next-generation HBM4 memory, a 50% capacity increase over the MI350's 288GB HBM3E configuration. Memory bandwidth receives an even larger upgrade, jumping from 8TB/sec on the MI350 to 19.6TB/sec on the MI400 series [2].

Product Variants and Target Markets

AMD will offer two distinct variants within the MI400 lineup. The MI455X targets scale AI training and inference workloads, while the MI430X focuses on high-performance computing (HPC) and sovereign AI applications. The MI430X variant includes hardware-based FP64 capabilities and hybrid compute functionality combining CPU and GPU processing power [2].

Both variants incorporate standard-based rack-scale networking technologies, including UALoE, UAL, and UEC protocols, with each GPU providing 300GB/sec of scale-out bandwidth for enhanced system-level performance [1].
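As a quick sanity check on the generational figures quoted above, the following minimal Python sketch recomputes the memory capacity and bandwidth uplift from the MI350 series to the MI450 series. The numbers come from the cited sources; the `AcceleratorSpec` class and `uplift` helper are illustrative names assumed here, not part of any AMD tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorSpec:
    """Headline memory figures as quoted in the cited articles."""
    name: str
    memory_gb: float       # on-package memory capacity in GB
    bandwidth_tb_s: float  # memory bandwidth in TB/sec


def uplift(new: float, old: float) -> float:
    """Return the ratio new/old for a generational comparison."""
    if old <= 0:
        raise ValueError("baseline must be positive")
    return new / old


# Figures quoted in the sources (hypothetical variable names).
MI350 = AcceleratorSpec("MI350 series", memory_gb=288, bandwidth_tb_s=8.0)
MI450 = AcceleratorSpec("MI450 series", memory_gb=432, bandwidth_tb_s=19.6)

capacity_ratio = uplift(MI450.memory_gb, MI350.memory_gb)
bandwidth_ratio = uplift(MI450.bandwidth_tb_s, MI350.bandwidth_tb_s)

print(f"Memory capacity:  {capacity_ratio:.2f}x "
      f"({(capacity_ratio - 1) * 100:.0f}% increase)")
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x")
```

Under these inputs the capacity ratio is 1.5x, matching the quoted 50% increase, and the bandwidth ratio is 2.45x, consistent with the "more than double" claim.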

Competitive Positioning Against NVIDIA

AMD has positioned the MI400 series as a direct competitor to NVIDIA's upcoming Vera Rubin AI platform. According to AMD's own rack-scale comparison, the MI450 series offers 1.5x the memory capacity and 1.5x the scale-out bandwidth of the competition, while matching it on memory bandwidth, FP4/FP8 FLOPs, and scale-up bandwidth [1].

The competition has also pushed power budgets upward. Recent rumors suggest that AMD's MI450X prompted NVIDIA to raise the thermal design power (TDP) of its Rubin VR200 AI GPU from 1800W to 2300W, and that AMD has in turn raised the MI450X to 2500W [1].

Future Roadmap: MI500 Series for 2027

Looking beyond 2026, AMD has confirmed the Instinct MI500 series for 2027. The sources liken it to the "Ultra" versions of NVIDIA's AI GPUs, where an enhanced variant follows the standard one, as with the Blackwell GB200 and Blackwell Ultra GB300 chips [2].

AMD says the MI500 series will offer next-generation compute, memory, and interconnect capabilities, though specific technical details remain undisclosed. The annual release cadence is intended to keep competitive pressure on NVIDIA's market position [2].

Summarized by Navi
