Anthropic's Claude Code Security sends cybersecurity stocks tumbling as AI reshapes the industry

4 Sources

[1]

Cyber Stocks Slide as Anthropic Unveils 'Claude Code Security'

Shares of cybersecurity software companies tumbled Friday after Anthropic PBC introduced a new security feature into its Claude AI model. CrowdStrike Holdings was among the biggest decliners, falling as much as 6.5%, while Cloudflare Inc. slumped more than 6%. Meanwhile, Zscaler dropped 3.5%, SailPoint shed 6.8%, and Okta declined 5.7%. The Global X Cybersecurity ETF fell as much as 3.8%, extending its losses on the year to 14%. Anthropic said the new tool "scans codebases for security vulnerabilities and suggests targeted software patches for human review." The firm said the update is available in a limited research preview for now. The drop in cybersecurity stocks was the latest example of software shares slumping on concerns over competition from AI-native companies. Anxiety has been building for weeks, with the iShares Expanded Tech-Software Sector ETF down more than 23% this year, putting it on track for its biggest quarterly percentage drop since the 2008 financial crisis. Much of the selling has come on new AI tools released by companies like Anthropic, OpenAI, and Alphabet Inc., with investors fretting that the ability to "vibe code" -- use AI to write software code -- will allow users to create their own applications, diminishing demand for legacy products and weighing on their growth, margins, and pricing power.

[2]

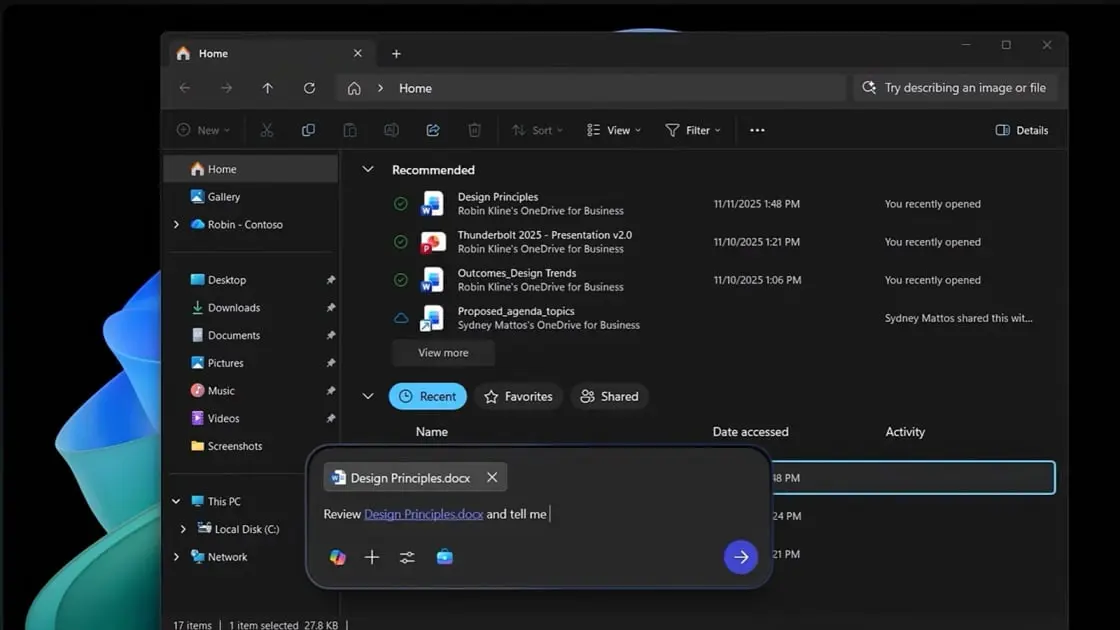

AI can now hunt software bugs on its own. Anthropic is turning that into a security tool. | Fortune

Now, instead of just scanning code for known problem patterns, Claude Code Security can review entire codebases, more like a human expert would -- looking at how different pieces of software interact and how data moves through a system. The AI double-checks its own findings, rates how severe each issue is, and suggests fixes. But while the system can investigate code on its own, it does not apply fixes automatically, which could be dangerous in its own right -- developers must review and approve every change. Claude Code Security builds on over a year of research by the company's Frontier Red Team, an internal group of about 15 researchers tasked with stress-testing the company's most advanced AI systems and probing how they might be misused in areas such as cybersecurity. The Frontier Red Team's most recent research found that Anthropic's new Opus 4.6 model has significantly improved at finding new, high-severity vulnerabilities -- software flaws that allow attackers to break into systems without permission, steal sensitive data, or disrupt critical services -- across vast amounts of code. In fact, in testing open source software that runs across enterprise systems and in critical infrastructure, Opus 4.6 found some of these vulnerabilities that had gone undetected for decades, and was able to do so without task-specific tooling, custom scaffolding, or specialized prompting. Frontier Red Team leader Logan Graham told Fortune that Claude Code Security is meant to put this power in the hands of security teams that need to boost their defensive capabilities. The tool is being released cautiously as a limited research preview for its Enterprise and Team customers. Anthropic is also giving free expedited access to maintainers of open-source repositories -- the often under-resourced developers responsible for keeping widely used public software running safely. "This is the next step as a company committed to powering the defense of cybersecurity," he said.
"We are now using [Opus 4.6] meaningfully ourselves, we have been doing lots of experimentation -- the models are meaningfully better." That is particularly true in terms of autonomy, he added, pointing out that Opus 4.6's agentic capabilities mean it can investigate security flaws and use various tools to test code. In practice, that means the AI can explore a codebase step by step, test how different components behave, and follow leads much like a junior security researcher would -- only much faster. "That makes a really big difference for security engineers and researchers," Graham said. "It's going to be a force multiplier for security teams. It's going to allow them to do more." Of course, it's not just defenders that look for security flaws -- attackers are also using AI to find exploitable weaknesses faster than ever, Graham said, so it's important to make sure that improvements favor the good guys. So in addition to the research preview, he said Anthropic is investing in safeguards to detect malicious use and to identify when attackers might be using the system. "It's really important to make sure that what is a dual-use capability gives defenders a leg up," he said.

[3]

Cybersecurity stocks drop after Anthropic debuts Claude Code Security - SiliconANGLE

Shares of several major cybersecurity providers dropped today after Anthropic PBC introduced a tool for finding software vulnerabilities. The offering is called Claude Code Security. It's available as a limited research preview in the Enterprise and Team editions of Anthropic's Claude artificial intelligence service. Additionally, the company plans to provide "expedited access" for open-source project maintainers. Software teams scan their code for vulnerabilities using so-called static analysis tools. Such programs are usually built around a database of rules, or definitions of common cybersecurity vulnerabilities. A static analysis tool works by checking each snippet of code in an application against its rules. Static vulnerability definitions can't cover every variation of every exploit. For example, a static analysis tool's rules might not support a niche programming language that a developer used to implement an interface module. As a result, rule-based static analysis tools often miss certain cybersecurity issues. Anthropic positions Claude Code Security as a more effective alternative. According to the company, the tool doesn't use static rules but instead "reasons about your code the way a human security researcher would." It maps out how an application's components interact with one another and the way data moves through them to find potential weak points. Developers can activate Claude Code Security by connecting it to a GitHub repository and asking it to scan the code inside. According to Anthropic, the tool can uncover a wide range of vulnerabilities. Many applications include filters that block malicious user input such as unauthorized SQL commands, and Claude Code Security can find code snippets that lack an effective input filter.
The tool also spots more sophisticated issues, such as vulnerabilities that make it possible to bypass an application's authentication mechanism. Claude Code Security ranks the security flaws that it finds based on severity. Additionally, it generates a natural language explanation of each one to expedite analysis. A "suggest fix" button below the explanation enables cybersecurity professionals to have Claude generate a patch. CrowdStrike Inc. closed the trading session down 7.56%, while Cloudflare Inc. declined 8.09%. Several other cybersecurity companies also logged share price declines. The selloff is the second that Anthropic has set off in the enterprise software ecosystem since the start of the month. The previous one was sparked by the company's launch of Claude Cowork plugins. Claude Code Security is rolling out about four months after OpenAI Group PBC introduced a cybersecurity automation tool of its own, Aardvark, which offers many of the same capabilities as Anthropic's new tool. According to OpenAI, Aardvark tests vulnerabilities in an isolated sandbox to estimate how difficult it would be for hackers to exploit them. There are several ways OpenAI and Anthropic could expand their cybersecurity offerings over time. Enterprise software teams use continuous integration and continuous delivery (CI/CD) pipelines to roll out software updates. The two AI providers could integrate their cybersecurity tools with popular CI/CD products to automatically block updates that contain vulnerable code. Many established cybersecurity companies already offer such a capability.
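SiliconANGLE's description of rule-based static analysis can be made concrete with a minimal Python sketch. Each hypothetical rule is a regular expression checked against one line at a time; the rule name and pattern are illustrative assumptions, not taken from any real product. The rule flags SQL built by string concatenation inside an `execute()` call, but it misses the same injection flaw when the query is assembled on an earlier line, which is the kind of variation static definitions fail to cover.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical rule set: each rule is a regex checked against a single line,
# mimicking a tool that matches snippets against known-bad patterns.
RULES = {
    # SQL built by string concatenation directly inside an execute() call.
    "sql-string-concat": re.compile(r"""\.execute\(\s*["'].*["']\s*\+"""),
}


@dataclass
class Finding:
    rule: str
    line_no: int
    text: str


def scan(source: str) -> list[Finding]:
    """Check every line of `source` against every rule."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(name, line_no, line.strip()))
    return findings


# Flagged: the concatenation happens inside the execute() call.
DIRECT = 'cursor.execute("SELECT * FROM users WHERE id = " + user_id)'

# Missed: the same injection flaw, but the query is built on an earlier line.
INDIRECT = """\
query = "SELECT * FROM users WHERE id = " + user_id
cursor.execute(query)
"""

if __name__ == "__main__":
    print(scan(DIRECT))    # one finding
    print(scan(INDIRECT))  # []
```

Adding a second rule for the indirect case is possible, but every new phrasing of the flaw needs yet another rule, which is the coverage gap the article describes.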

[4]

5 Things To Know On Anthropic's Claude Code Security

Anthropic announced Friday it is looking to compete with application security vendors by adding vulnerability scanning capabilities into its web-based Claude Code tool. The move is the latest by Anthropic to add LLM-powered functionality that will rival established software makers, and it is part of a wider trend that has shaken investor confidence in the software industry as a whole. Share prices for multiple major security vendors fell Friday after the Anthropic announcement, notably on a day when the broader stock market indices rose. What follows are five things to know about Anthropic's Claude Code Security. Claude Code Security marks the first dedicated security product from Anthropic and, for now, it's limited to the sphere of application security. In a blog post, Anthropic disclosed that Claude Code Security will provide codebase scanning for vulnerabilities. The tool will then make suggestions for "targeted software patches for human review, allowing teams to find and fix security issues that traditional methods often miss," the company said in the post. Claude Code Security is part of Anthropic's Claude Code offering on the web and is now rolling out in a limited research preview. Anthropic contended that its tool will enable massive security improvements compared with existing automated testing methods such as static analysis. Such methods are usually rule-based and can only compare code with known vulnerabilities, the company said. Claude Code Security, on the other hand, "reads and reasons about your code the way a human security researcher would," Anthropic said.
That means the tool can understand "how components interact, tracing how data moves through your application, and catching complex vulnerabilities that rule-based tools miss," the company said. The result is that Claude Code Security will be capable of uncovering "more complex vulnerabilities, like flaws in business logic or broken access control," compared with static analysis methods, Anthropic said. In addition, every finding made by the tool will be put through a "multistage verification process" before it's forwarded to an analyst, the company said. The findings are also given severity ratings to help with prioritization, according to Anthropic. With Anthropic's codebase scanning poised to accelerate the shift toward AI-assisted vulnerability discovery, the trend will clearly benefit attackers as well as defenders. Anthropic admitted as much in its post Friday, writing that "the same capabilities that help defenders find and fix vulnerabilities could help attackers exploit them." While Anthropic will no doubt include guardrails aimed at preventing misuse of its own tools, the dual-use nature of AI scanning suggests that attacks will only intensify as the technology continues to advance. Ultimately, threat actors "will use AI to find exploitable weaknesses faster than ever," the company said. "But defenders who move quickly can find those same weaknesses, patch them, and reduce the risk of an attack," the company said. "Claude Code Security is one step towards our goal of more secure codebases and a higher security baseline across the industry." Although Anthropic's initial effort is limited to application security, investors expressed worries about the broader security industry Friday following the announcement.
As of this writing, shares in CrowdStrike were down 6.5 percent to $394.50 a share, while Cloudflare's stock price fell 6.2 percent to $180.71 a share. Zscaler's stock dropped 3.1 percent to $163.76 a share, and Palo Alto Networks was down 0.6 percent to $150.14 a share. Earlier this week, Palo Alto Networks CEO Nikesh Arora said that investor fears that AI poses more of a risk than an opportunity for cybersecurity vendors are unfounded, saying LLMs are unlikely to rival the capabilities of security products in the foreseeable future. During Palo Alto Networks' quarterly call Tuesday, Arora told analysts that while GenAI and AI agents are already proving to be massively helpful for security products and teams, there are clear limitations on what LLMs can do. "I'm still confused why the market is treating AI as a threat" to the cybersecurity industry, he said, while adding that he "can't speak for all of software." LLMs aren't accurate enough to fully replace key segments such as security operations, and many security tools, including those from Palo Alto Networks and its broad platform, have a major advantage because their vendors can train their AI models on real-world customer data, Arora noted.

Anthropic launched Claude Code Security, an AI security tool that scans codebases for vulnerabilities and suggests software patches. The announcement triggered sharp drops in cybersecurity stocks, with CrowdStrike falling 7.56% and Cloudflare declining 8.09%. The tool uses AI to identify software vulnerabilities that traditional static analysis methods miss, intensifying investor anxiety about AI's impact on the cybersecurity industry.

Anthropic Unveils Claude Code Security, Triggering Market Turmoil

Anthropic launched Claude Code Security on Friday, marking the company's first dedicated AI security tool and sending shockwaves through the cybersecurity industry [1][4]. The announcement triggered a significant drop in stock prices across cybersecurity software companies: CrowdStrike closed down 7.56% and Cloudflare down 8.09%, while Zscaler fell 3.1% and Okta 5.7% during the session [1][3][4]. The Global X Cybersecurity ETF fell as much as 3.8%, extending its year-to-date losses to 14%, while the iShares Expanded Tech-Software Sector ETF is down more than 23% this year [1].

The new tool scans codebases for vulnerabilities and suggests targeted software patches for human review, allowing teams to identify security flaws that traditional methods often overlook [1][4]. It is available initially as a limited research preview for Enterprise and Team customers, and Anthropic is also providing expedited access to maintainers of open-source repositories, developers who are often under-resourced but responsible for keeping widely used public software secure [2].

How Claude Code Security Identifies Software Vulnerabilities Differently

Unlike conventional static analysis tools that rely on rule-based databases of known vulnerabilities, Claude Code Security reads and reasons about code the way a human security researcher would [3]. The AI-powered vulnerability scanning system maps out how application components interact with one another and traces how data moves through them to identify potential weak points [3]. This approach enables the tool to uncover more complex vulnerabilities, including flaws in business logic or broken access control, that rule-based systems typically miss [4].
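To make "tracing how data moves through an application" concrete, here is a minimal sketch of classical taint tracking built on Python's standard `ast` module. This is a conventional static-analysis technique chosen for illustration, not a description of how Claude Code Security works (Anthropic describes an AI model that reasons about code). The source and sink names (`input`, `execute`) are assumptions. Unlike a rule that inspects one line at a time, it follows untrusted input through an intermediate variable to the database call.

```python
from __future__ import annotations

import ast

# Illustrative assumptions: calls that introduce untrusted data (sources)
# and calls that must never receive it (sinks).
SOURCES = {"input"}
SINKS = {"execute"}


def _call_name(node: ast.Call) -> str | None:
    """input(...) -> 'input'; cursor.execute(...) -> 'execute'."""
    if isinstance(node.func, ast.Name):
        return node.func.id
    if isinstance(node.func, ast.Attribute):
        return node.func.attr
    return None


def _is_tainted(expr: ast.AST, tainted: set[str]) -> bool:
    """True if the expression reads a tainted variable or calls a source."""
    for node in ast.walk(expr):
        if isinstance(node, ast.Name) and node.id in tainted:
            return True
        if isinstance(node, ast.Call) and _call_name(node) in SOURCES:
            return True
    return False


def trace(source: str) -> list[int]:
    """Return line numbers where untrusted data reaches a sink.

    Handles straight-line, top-level code only: no branches, loops,
    functions, or aliasing.
    """
    tainted: set[str] = set()
    hits: list[int] = []
    for stmt in ast.parse(source).body:
        # 1. Does this statement pass tainted data into a sink?
        for node in ast.walk(stmt):
            if isinstance(node, ast.Call) and _call_name(node) in SINKS:
                if any(_is_tainted(arg, tainted) for arg in node.args):
                    hits.append(stmt.lineno)
        # 2. Propagate taint through simple assignments (x = expr).
        if isinstance(stmt, ast.Assign):
            value_is_tainted = _is_tainted(stmt.value, tainted)
            for target in stmt.targets:
                if isinstance(target, ast.Name):
                    if value_is_tainted:
                        tainted.add(target.id)
                    else:
                        tainted.discard(target.id)
    return hits


EXAMPLE = """\
user_id = input("id: ")
query = "SELECT * FROM users WHERE id = " + user_id
cursor.execute(query)
cursor.execute("SELECT 1")
"""

if __name__ == "__main__":
    print(trace(EXAMPLE))  # [3]
```

Real data-flow analyzers also model branches, function calls, and sanitizers; this sketch deliberately omits them to keep the propagation step visible.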

The system double-checks its own findings, assigns severity ratings to help with prioritization, and suggests fixes through a "suggest fix" button [2][3]. Every finding undergoes a multistage verification process before reaching an analyst [4]. Critically, while the system can investigate code autonomously, it does not apply fixes automatically: developers must review and approve every change [2].

Frontier Red Team Research Powers the New Application Security Tool

Claude Code Security builds on over a year of research by Anthropic's Frontier Red Team, an internal group of approximately 15 researchers tasked with stress-testing the company's most advanced AI systems and probing potential misuse in areas like cybersecurity [2]. The team's recent research found that Anthropic's Opus 4.6 model has significantly improved at finding new, high-severity vulnerabilities across vast amounts of code without requiring task-specific tooling, custom scaffolding, or specialized prompting [2].

In testing open-source software that runs across enterprise systems and critical infrastructure, Opus 4.6 discovered some vulnerabilities that had gone undetected for decades [2]. Frontier Red Team leader Logan Graham pointed to the model's agentic capabilities, which let it investigate security flaws and use various tools to test code; in practice, it can explore a codebase step by step, test how different components behave, and follow leads much like a junior security researcher would, only much faster. "It's going to be a force multiplier for security teams. It's going to allow them to do more," Graham told Fortune [2].

AI Impact on Cybersecurity Intensifies Investor Anxiety

The selloff marks the second time since the start of the month that Anthropic has triggered turbulence in the enterprise software ecosystem, following the company's launch of Claude Cowork plugins [3]. Investor anxiety has been building for weeks as companies like Anthropic, OpenAI, and Alphabet release new AI tools, fueling concerns over competition from AI-native companies and reduced demand for legacy software products [1].

This trend extends beyond application security. The ability to "vibe code" (using AI to write software code) has investors worried that users will create their own applications, potentially weighing on growth, margins, and pricing power for traditional software vendors [1]. The iShares Expanded Tech-Software Sector ETF is on track for its biggest quarterly percentage drop since the 2008 financial crisis [1].

However, not all industry leaders share this pessimistic outlook. Earlier this week, Palo Alto Networks CEO Nikesh Arora said that investor fears about AI posing more risk than opportunity for cybersecurity vendors are unfounded. "I'm still confused why the market is treating AI as a threat" to the cybersecurity industry, Arora said during the company's quarterly call, noting that LLMs aren't accurate enough to fully replace key segments such as security operations [4].

The Dual-Use Challenge and Competitive Landscape

Anthropic acknowledges that the same capabilities that help defenders find and fix vulnerabilities could help attackers exploit them, warning that threat actors "will use AI to find exploitable weaknesses faster than ever" [4]. Graham emphasized that it is critical to ensure improvements favor defenders. "It's really important to make sure that what is a dual-use capability gives defenders a leg up," he said, adding that Anthropic is investing in safeguards to detect malicious use [2].

Claude Code Security enters a competitive landscape that includes OpenAI's Aardvark, a cybersecurity automation tool launched about four months earlier that offers many of the same capabilities [3]. According to OpenAI, Aardvark tests vulnerabilities in an isolated sandbox to estimate how difficult they would be to exploit [3]. Looking ahead, both AI providers could expand their offerings by integrating with CI/CD pipelines to automatically block updates containing vulnerable code, a capability many established cybersecurity companies already offer [3].
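A CI/CD integration of this kind usually takes the form of a pipeline step that fails when a scan reports serious findings, which stops the update from shipping. A minimal Python sketch, assuming a hypothetical JSON report (a list of objects with `id`, `severity`, and `title` fields) and an assumed four-level severity scale; neither Anthropic nor OpenAI is described in the sources as publishing such a format. Findings are also sorted most severe first, mirroring the severity-based prioritization the sources describe.

```python
from __future__ import annotations

import json
import sys

# Assumed severity scale; real scanners define their own levels.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def blocking_findings(findings: list[dict], threshold: str = "high") -> list[dict]:
    """Findings at or above `threshold`, most severe first."""
    limit = SEVERITY_ORDER[threshold]
    blocking = [f for f in findings if SEVERITY_ORDER[f["severity"]] >= limit]
    return sorted(blocking, key=lambda f: SEVERITY_ORDER[f["severity"]], reverse=True)


def main(report_path: str, threshold: str = "high") -> int:
    """Return a CI exit status: 0 lets the update through, 1 blocks it."""
    with open(report_path, encoding="utf-8") as fh:
        # Hypothetical report format: [{"id": ..., "severity": ..., "title": ...}, ...]
        findings = json.load(fh)
    blocking = blocking_findings(findings, threshold)
    for f in blocking:
        print(f"[{f['severity'].upper()}] {f['id']}: {f['title']}")
    return 1 if blocking else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(*sys.argv[1:3]))
```

In a pipeline this might run as `python gate.py findings.json high` (file names hypothetical); most CI systems treat a non-zero exit status as a failed step, and that failure is what blocks the update.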

Summarized by Navi
