Anthropic Shifts Gears: User Data Now Fuels AI Training, Opt-Out Available

16 Sources

[1]

Anthropic users face a new choice - opt out or share your data for AI training | TechCrunch

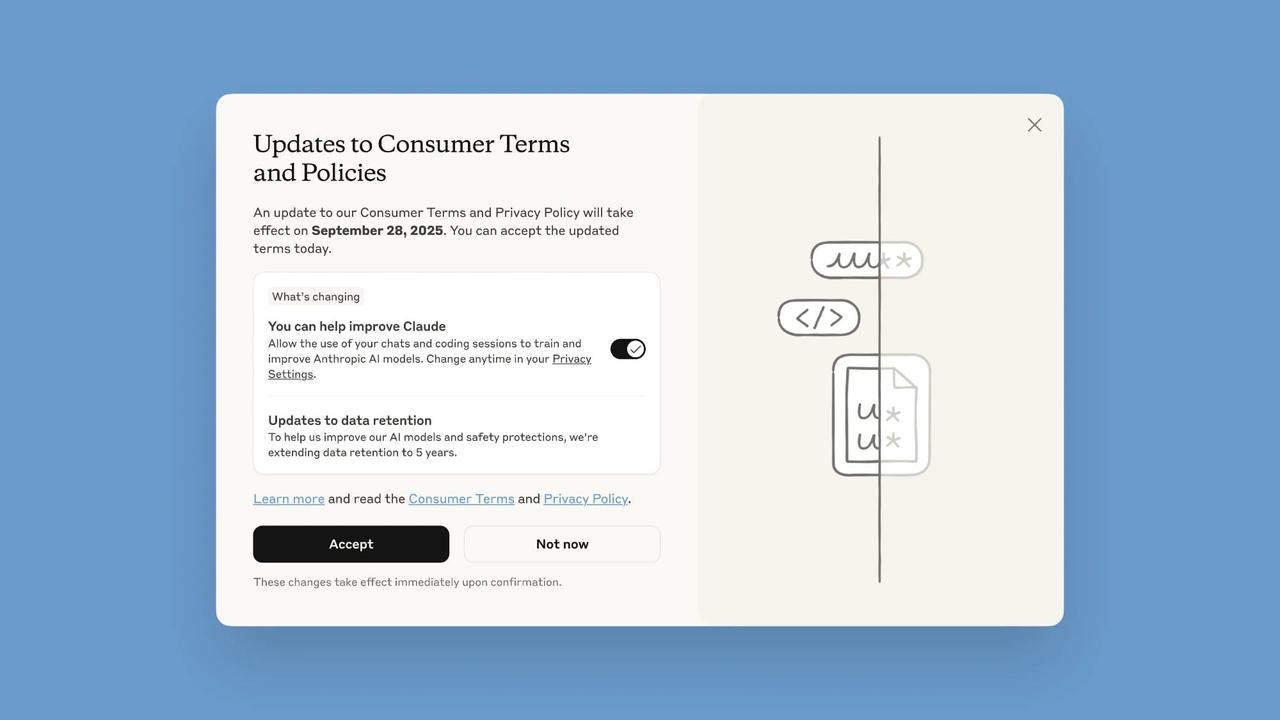

Anthropic is making some big changes to how it handles user data, requiring all Claude users to decide by September 28 whether they want their conversations used to train AI models. While the company directed us to its blog post on the policy changes when asked about what prompted the move, we've formed some theories of our own. But first, what's changing: previously, Anthropic didn't use consumer chat data for model training. Now, the company wants to train its AI systems on user conversations and coding sessions, and it said it's extending data retention to five years for those who don't opt out. That is a massive update. Previously, users of Anthropic's consumer products were told that their prompts and conversation outputs would be automatically deleted from Anthropic's back end within 30 days "unless legally or policy‑required to keep them longer" or their input was flagged as violating its policies, in which case a user's inputs and outputs might be retained for up to two years. By consumer, we mean the new policies apply to Claude Free, Pro, and Max users, including those using Claude Code. Business customers using Claude Gov, Claude for Work, Claude for Education, or API access will be unaffected, which is how OpenAI similarly protects enterprise customers from data training policies. So why is this happening? In that post about the update, Anthropic frames the changes around user choice, saying that by not opting out, users will "help us improve model safety, making our systems for detecting harmful content more accurate and less likely to flag harmless conversations." Users will "also help future Claude models improve at skills like coding, analysis, and reasoning, ultimately leading to better models for all users." In short, help us help you. But the full truth is probably a little less selfless. Like every other large language model company, Anthropic needs data more than it needs people to have fuzzy feelings about its brand. 
Training AI models requires vast amounts of high-quality conversational data, and accessing millions of Claude interactions should provide exactly the kind of real-world content that can improve Anthropic's competitive positioning against rivals like OpenAI and Google. Beyond the competitive pressures of AI development, the changes would also seem to reflect broader industry shifts in data policies, as companies like Anthropic and OpenAI face increasing scrutiny over their data retention practices. OpenAI, for instance, is currently fighting a court order that forces the company to retain all consumer ChatGPT conversations indefinitely, including deleted chats, because of a lawsuit filed by The New York Times and other publishers. In June, OpenAI COO Brad Lightcap called this "a sweeping and unnecessary demand" that "fundamentally conflicts with the privacy commitments we have made to our users." The court order affects ChatGPT Free, Plus, Pro, and Team users, though enterprise customers and those with Zero Data Retention agreements are still protected. What's alarming is how much confusion all of these changing usage policies are creating for users, many of whom remain oblivious to them. In fairness, everything is moving quickly now, so as the tech changes, privacy policies are bound to change. But many of these changes are fairly sweeping and mentioned only fleetingly amid the companies' other news. (You wouldn't think Tuesday's policy changes for Anthropic users were very big news based on where the company placed this update on its press page.) But many users don't realize the guidelines to which they've agreed have changed because the design practically guarantees it. Most ChatGPT users keep clicking on "delete" toggles that aren't technically deleting anything. Meanwhile, Anthropic's implementation of its new policy follows a familiar pattern. How so? 
New users will choose their preference during signup, but existing users face a pop-up with "Updates to Consumer Terms and Policies" in large text and a prominent black "Accept" button, with a much tinier toggle switch for training permissions below in smaller print, automatically set to "On." As observed earlier today by The Verge, the design raises concerns that users might quickly click "Accept" without noticing they're agreeing to data sharing. Meanwhile, the stakes for user awareness couldn't be higher. Privacy experts have long warned that the complexity surrounding AI makes meaningful user consent nearly unattainable. Under the Biden Administration, the Federal Trade Commission even stepped in, warning that AI companies risk enforcement action if they engage in "surreptitiously changing its terms of service or privacy policy, or burying a disclosure behind hyperlinks, in legalese, or in fine print." Whether the commission, now operating with just three of its five commissioners, still has its eye on these practices today is an open question, one we've put directly to the FTC.

[2]

Anthropic Wants to Use Your Chats With Claude for AI Training: Here's How to Opt Out

Anthropic will soon begin using your chat transcripts to train its popular chatbot, Claude. The announcement came on Thursday as an update to the company's Consumer Terms and Privacy Policy. New users will see an option to "Help improve Claude" that can be toggled on or off as part of the sign-up flow, while existing users will begin to see a notification explaining the change. Users have until Sept. 28 to opt out, as the option will be enabled by default. You can still turn the option off in Claude's privacy settings. A representative for Anthropic declined to comment. Individual users on the Claude Free, Pro, and Max plans, including Claude Code sessions under those plans, will be affected by this change when it goes into effect in late September. With the option enabled, AI training will only take place on new and resumed chat and coding sessions. Older chats that aren't revisited won't be affected, at least for now. There are exceptions to the updated policy. Claude for Work (Team and Enterprise plans), Claude Gov, and Claude for Education will not be affected. This also extends to application programming interface (API) use through third parties, including Amazon Bedrock and Google Cloud's Vertex AI. Until the deadline on Sept. 28, users can ignore the notification by closing it or choosing the "not now" option. After the deadline, users must make a choice in order to continue using Claude. Users who opt in to AI model training will also have their data held for significantly longer than the previous 30-day window. Opted-in users will have their data stored for five years, and, like the updated training policy, this will only apply to new or resumed chats. Anthropic says the extended data retention will allow the company to identify misuse and detect harmful usage patterns.
If you opted into the new changes by accident or changed your mind for any reason, you can always opt out. Here's how: go to Claude's Settings, open the Privacy tab, and toggle off "Help improve Claude." If you previously opted in and decide to opt out, your new and resumed chats will no longer be used for future AI model training. Any qualifying data will still be included in training that has already started and in models that have already been trained, but it won't be used in future training.

[3]

Anthropic will start training its AI models on chat transcripts

In smaller print below that, a few lines say, "Allow the use of your chats and coding sessions to train and improve Anthropic AI models," with an on/off toggle switch next to it. It's automatically set to "On." Presumably, many users will immediately click the large "Accept" button without changing the toggle switch, even if they haven't read it. If you want to opt out, you can toggle the switch to "Off" when you see the pop-up. If you already accepted without realizing and want to change your decision, navigate to your Settings, then the Privacy tab, then the Privacy Settings section, and, finally, toggle to "Off" under the "Help improve Claude" option. Consumers can change their decision anytime via their privacy settings, but that new decision will apply only to future data; you can't take back the data that the system has already been trained on.

[4]

Anthropic will start training Claude on user data - but you don't have to share yours

Anthropic updated its AI training policy. Users can now opt in to having their chats used for training. This deviates from Anthropic's previous stance. Anthropic has become a leading AI lab, with one of its biggest draws being its strict position on prioritizing consumer data privacy. From the outset of Claude, its chatbot, Anthropic took a stern stance against using user data to train its models, deviating from a common industry practice. That's now changing. Users can now opt in to having their data used to further train Anthropic's models, the company said in a blog post updating its consumer terms and privacy policy. The data collected is meant to help improve the models, making them safer and more intelligent, the company said in the post. While this change does mark a sharp pivot from the company's typical approach, users will still have the option to keep their chats out of training. Keep reading to find out how. Before I get into how to turn it off, it is worth noting that not all plans are impacted. Commercial plans, including Claude for Work, Claude Gov, Claude for Education, and API usage, remain unchanged, even when accessed by third parties through cloud services like Amazon Bedrock and Google Cloud's Vertex AI. The updates apply to Claude Free, Pro, and Max plans, meaning that if you are an individual user, you will now be subject to the Updates to Consumer Terms and Policies and will be given the option to opt in or out of training. If you are an existing user, you will be shown a pop-up asking you to opt in or out of having your chats and coding sessions used to train and improve Anthropic AI models. When the pop-up comes up, make sure to actually read it, because the bolded heading of the toggle isn't straightforward: it says "You can help improve Claude," which refers to the training feature.
Anthropic does clarify this underneath in a bolded statement. You have until Sept. 28 to make the selection, and once you do, it will automatically take effect on your account. If you choose to have your data used for training, Anthropic will only use new or resumed chats and coding sessions, not past ones. After Sept. 28, you will have to decide on the model training preferences to keep using Claude. The decision you make is always reversible via Privacy Settings at any time. New users will have the option to select the preference as they sign up. As mentioned before, it is worth paying close attention to the wording when signing up, as it is likely to be framed as whether you want to help improve the model or not, and could always be subject to change. While it is true that your data will be used to improve the model, it is worth highlighting that the training relies on Anthropic saving your data. Another change to the Consumer Terms and Policies is that if you opt in to having your data used, the company will retain that data for five years. Anthropic justifies the longer time period as necessary to allow the company to make better model developments and safety improvements. When you delete a conversation with Claude, Anthropic says it will not be used for model training. If you don't opt in to model training, the company's existing 30-day data retention period applies. Again, this doesn't apply to Commercial Terms. Anthropic also shared that users' data won't be sold to third parties, and that it uses tools to "filter or obfuscate sensitive data." Data is essential to how generative AI models are trained, and they only get smarter with additional data. As a result, companies are always vying for user data to improve their models. For example, Google just recently made a similar move, renaming "Gemini Apps Activity" to "Keep Activity."
When the setting is toggled on, the company says, a sample of your uploads, starting on Sept. 2, will be used to "help improve Google services for everyone."

[5]

Anthropic to Collect Your Chats, Coding Sessions to Train Claude AI

Anthropic's growing appetite for user data has prompted a privacy policy change that will give the company's AI a steady stream of the information you feed it. Previously, Anthropic did not train its Claude AI models on user chats and committed to auto-deleting the data after 30 days, TechCrunch reports. Now, it's asking you to "help improve Claude" by allowing your chats and coding sessions to train Anthropic's AI. It will also retain data for five years to remain "consistent" throughout the lengthy AI development cycle. "Models released today began development 18 to 24 months ago," Anthropic says. The next time you log in, you'll see a pop-up menu asking you to accept an update to the company's consumer terms and policies. The "you can help improve Claude" option will be toggled on by default; there's no toggle for the data retention option. Anthropic asks that you accept the terms by Sept. 28. If you accept the terms and change your mind, you can revoke Claude's access via Settings > Privacy > Help improve Claude. The changes will apply to new or resumed chats and coding sessions on Claude Free, Pro, and Max plans, covering both regular chats and coding tools like Claude Code. It will not apply to Claude for Work, Claude Gov, Claude for Education, or API use, including via third parties such as Amazon Bedrock and Google Cloud's Vertex AI. You can also opt in but keep certain chats private by deleting them, which will prevent them from being used for "future model training," Anthropic says. Unsurprisingly, Anthropic is positioning the move as beneficial to users. Having more information will boost the safety of the models, the company says, and make them better at accurately flagging harmful conversations. It will also help create better models in the future, with stronger "coding, analysis, and reasoning" skills.
"All large language models, like Claude, are trained using large amounts of data," Anthropic says. "Data from real-world interactions provide valuable insights on which responses are most useful and accurate for users." Starting today, existing users will see a pop-up asking whether they're willing to opt in. To Anthropic's credit, it's pretty clearly written, stating that the company will use your chats and coding sessions to improve its models. You can also make your choice within the Settings menu. "To protect users' privacy, we use a combination of tools and automated processes to filter or obfuscate sensitive data," Anthropic says. "We do not sell users' data to third parties." Chatbot privacy has come into focus this year. Due to an ongoing lawsuit brought by The New York Times, OpenAI is required to maintain records of all your deleted conversations. Meta, OpenAI, and Grok have also all made private conversations public -- by accident and on purpose.

[6]

Anthropic changing default storage for Claude chats to 5 yrs

Claude creator Anthropic has given customers using its Free, Pro, and Max plans one month to prevent the engine from storing their chats for five years by default and using them for training. A popup will show for existing users, asking if they want to opt out of a new "Help improve Claude" function, and Anthropic will prompt new users with a similar question during app setup. But if you opt in, the data retention window is being extended from 30 days to 1,826 days, give or take leap years. Even if customers opt out, they'll still have their convos stored for 30 days. After September 28, users will no longer see the popup, and will have to tweak these retention settings in Claude's privacy settings. Any conversations that the user deletes will not be retained, Anthropic said, but, if the chats are flagged for containing objectionable content, then they could be retained for seven years. For example, discussions about nuclear weapons would trigger the process. Pro and Max users currently pay $20 and $100 a month respectively to access Claude's AI engine, but that won't buy them out of Anthropic's data collection grab. However, the new data collection policy will not affect commercial, educational, or government customers, nor will API use with Amazon Bedrock, Google Cloud's Vertex AI, and other commercial partners. The exceptions for commercial or government contracts are notable. In the latter case, the AI biz is in the running for a potentially lucrative deal with the US General Services Administration to integrate AI into government systems and reduce the nation's reliance on humans to carry the workload of dealing with citizens. A spokesperson declined to expand on the original statement, other than to tell The Register that the "updated retention length will only apply to new or resumed chats and coding sessions, and will allow us to better support model development and safety improvements." 
It has been a busy week for Anthropic, as it launched a new Chrome extension to try and get more people using Claude for search investigations. Anthropic has limited the rollout to 1,000 users, but may enlarge the program once it has sorted out the technical issues. Anthropic also admitted that criminals online are harnessing the AI bot's capacity to help with computer intrusions and remote worker fraud. The California biz says it's blocked one North Korean attempt to turn its AI engine to malevolent ends and is on guard for more people trying to abuse its tech.

[7]

Anthropic Will Now Train Claude on Your Chats, Here's How to Opt Out

Anthropic announced today that it is changing its Consumer Terms and Privacy Policy, with plans to train its AI chatbot Claude with user data. New users will be able to opt out at signup. Existing users will receive a popup that allows them to opt out of Anthropic using their data for AI training purposes. The popup is labeled "Updates to Consumer Terms and Policies," and when it shows up, switching off the "You can help improve Claude" toggle will disallow the use of chats. Accepting the policy with the toggle left on will allow all new or resumed chats to be used by Anthropic. Users will need to opt in or opt out by September 28, 2025, to continue using Claude. Opting out can also be done by going to Claude's Settings, selecting the Privacy option, and toggling off "Help improve Claude." Anthropic says that the new training policy will allow it to deliver "even more capable, useful AI models" and strengthen safeguards against harmful usage like scams and abuse. The updated terms apply to all users on Claude Free, Pro, and Max plans, but not to services under commercial terms like Claude for Work or Claude for Education. In addition to using chat transcripts to train Claude, Anthropic is extending data retention to five years. So if you opt in to allowing Claude to be trained with your data, Anthropic will keep your information for a five-year period. Deleted conversations will not be used for future model training, and for those that do not opt in to sharing data for training, Anthropic will continue keeping information for 30 days as it does now. Anthropic says that a "combination of tools and automated processes" will be used to filter sensitive data, with no information provided to third parties. Prior to today, Anthropic did not use conversations and data from users to train or improve Claude, unless users submitted feedback.

[8]

Claude AI will start training on your data soon -- here's how to opt out before the deadline

Anthropic, the company behind Claude, is making a significant change to how it handles your data. Starting today, Claude users will be asked to either let Anthropic use their chats to train future AI models or opt out and keep their data private. If you don't make a choice by September 28, 2025, you'll lose access to Claude altogether. Previously, Anthropic had a privacy-first approach, meaning your chats and code were automatically deleted after 30 days unless required for legal reasons. Starting today, however, unless you opt out, your data will be stored for up to five years and fed into training cycles to help Claude get smarter. The new policy applies to all consumer plans, including Free, Pro, and Max, as well as Claude Code under those tiers. Business, government, education, and API users aren't affected. New users will see the choice during sign-up. Existing users will get a pop-up called "Updates to Consumer Terms and Policies." The big blue Accept button opts you in by default, while a smaller toggle lets you opt out. If you ignore the prompt, Claude will stop working after September 28. It's important to note that only future chats and code are impacted. Anything you wrote in the past won't be used unless you reopen those conversations. And if you delete a chat, it won't be used for training. You can change your decision later in Privacy Settings, but once data has been used for training, it can't be pulled back. The company says user data is key to making Claude better at vibe and traditional coding, reasoning, and staying safe. Anthropic also stresses that it doesn't sell data to third parties and uses automated filters to scrub sensitive information before training. Still, the change shifts the balance from automatic privacy to automatic data sharing unless you say otherwise.
Anthropic's decision essentially puts Claude on a faster track by giving it more real-world data to train on; the better it gets at answering complex questions, writing code, and avoiding mistakes, the more progress it can make. Yet this progress comes at a cost to users who may now be part of the training set, with their data stored for years instead of weeks. For casual users, this might not feel like a big deal. But for privacy-minded users, or anyone who discusses work projects, personal matters, or sensitive information in Claude, this update could be a red flag. With the default switched on, the responsibility is now on you to opt out if you want your data kept private.

[9]

Claude chats will now be used for AI training, but you can escape

Claude, one of the most popular AI chatbots out there and a potential candidate for Apple to enhance Siri, will soon start saving transcripts of your chats for AI training purposes unless you opt out. The policy change announced by Anthropic has already started appearing to users and gives them until September 28th to accept the terms. What's changing? Anthropic says the log of users' interactions with Claude and its developer-focused Claude Code tool will be used for training, model improvement, and strengthening the safety guardrails. So far, the AI company hasn't tapped into user data for AI training. "By participating, you'll help us improve model safety, making our systems for detecting harmful content more accurate and less likely to flag harmless conversations," says the company. The policy change is not mandatory, and users can choose to opt out of it. Users will see a notification alert about the data usage policy until September 28. The pop-up will let them easily disable the toggle that says "You can help improve Claude," and then save their preference by hitting the Accept button. After September 28, users will have to manually change their preference from the model training settings dashboard. What's the bottom line? The updated training policy only covers new chats with Claude, or those you resume, but not old chats. But why the reversal? The road to AI supremacy is pretty straightforward. The more training material you can get your hands on, the better the AI model's performance. The industry is already running into a data shortage, so Anthropic's move is not surprising. If you are an existing Claude user, you can opt out by following this path: Settings > Privacy > Help improve Claude. Anthropic's new user data policy covers the Claude Free, Pro, and Max plans. It, however, doesn't apply to Claude for Work, Claude Gov, Claude for Education, API use, or when connected to third-party platforms such as Google's Vertex AI and Amazon Bedrock.
In addition to this, Anthropic is also changing its data retention rules. Now, the company can keep a copy of user data for up to five years. Chats that have been manually deleted by users will not be used for AI training.

[10]

How to Stop Anthropic From Training Its AI Models on Your Conversations

You should never assume what you say to a chatbot is private. When you interact with one of these tools, the company behind it likely scrapes the data from the session, often using it to train the underlying AI models. Unless you explicitly opt out of this practice, you've probably unwittingly trained many models in your time using AI. Anthropic, the company behind Claude, has taken a different approach. The company's privacy policy has stated that Anthropic does not collect user inputs or outputs to train Claude, unless you either report the material to the company or opt in to training. While that doesn't mean Anthropic was abstaining from collecting data in general, you could rest easy knowing your conversations weren't feeding future versions of Claude. That's now changing. As reported by The Verge, Anthropic will now start training its AI models, Claude, on user data. That means new chats or coding sessions you engage in with Claude will be fed to Anthropic to adjust and improve the models' performance. This will not affect past sessions if you leave them be. However, if you re-engage with a past chat or coding session following the change, Anthropic will scrape any new data generated from the session for its training purposes. This won't just happen without your permission, at least not right away. Anthropic is giving users until Sept. 28 to make a decision. New users will see the option when they set up their accounts, while existing users will see a permission popup when they log in. However, it's reasonable to think that some of us will click through these menus and popups too quickly and accidentally agree to data collection that we might not otherwise mean to.
To Anthropic's credit, the company says it does try to hide sensitive user data through "a combination of tools and automated processes," and that it does not sell your data to third parties. Still, I certainly don't want my conversations with AI to train future models. If you feel the same, here's how to opt out. If you're an existing Claude user, you'll see a popup warning the next time you log into your account. This popup, titled "Updates to Consumer Terms and Policies," explains the new rules and, by default, opts you into the training. To opt out, make sure the toggle next to "You can help improve Claude" is turned off. (The toggle will be set to the left with an (X), rather than to the right with a checkmark.) Hit "Accept" to lock in your choice. If you've already accepted this popup and aren't sure whether you opted in to this data collection, you can still opt out. To check, open Claude and head to Settings > Privacy > Privacy Settings, then make sure the "Help improve Claude" toggle is turned off. Note that changing this setting will not claw back any data that Anthropic has already collected since you opted in.

[11]

Claude users must opt out of data training before Sept 28 deadline

Anthropic is implementing new data handling procedures, requiring all Claude users to decide by September 28 whether their conversations can be used for AI model training. The company directed inquiries to its blog post about the policy changes, but external analyses have attempted to determine the reasons for the revisions. The core change involves Anthropic now seeking to train its AI systems using user conversations and coding sessions. Previously, Anthropic did not utilize consumer chat data for model training. Now, data retention will be extended to five years for users who do not opt out of this data usage agreement. This represents a significant shift from previous practices. Previously, users of Anthropic's consumer products were informed that their prompts and the resulting conversation outputs would be automatically deleted from Anthropic's back-end systems within 30 days. The exception occurred if legal or policy requirements dictated a longer retention period or if a user's input was flagged for violating company policies. In such cases, user inputs and outputs could be retained for up to two years. The new policies apply specifically to users of Claude Free, Pro, and Max, including users of Claude Code. Business customers utilizing Claude Gov, Claude for Work, Claude for Education, or those accessing the platform through its API, are exempt from these changes. This mirrors a similar approach adopted by OpenAI, which shields enterprise customers from data training policies. Anthropic framed the change by stating that users who do not opt out will "help us improve model safety, making our systems for detecting harmful content more accurate and less likely to flag harmless conversations." The company added that this data will "also help future Claude models improve at skills like coding, analysis, and reasoning, ultimately leading to better models for all users." This framing presents the policy change as a collaborative effort to improve the AI model. 
However, external analysis suggests that the underlying motivation is more complex, rooted in competitive pressures within the AI industry. Namely, Anthropic, like other large language model companies, requires substantial data to train its AI models effectively. Access to millions of Claude interactions would provide the real-world content needed to enhance Anthropic's position against competitors such as OpenAI and Google. The policy revisions also reflect broader industry trends concerning data policies. Companies like Anthropic and OpenAI face increasing scrutiny regarding their data retention practices. OpenAI, for example, is currently contesting a court order that compels the company to indefinitely retain all consumer ChatGPT conversations, including deleted chats. This order stems from a lawsuit filed by The New York Times and other publishers. In June, OpenAI COO Brad Lightcap described the court order as "a sweeping and unnecessary demand" that "fundamentally conflicts with the privacy commitments we have made to our users." The court order impacts ChatGPT Free, Plus, Pro, and Team users. Enterprise customers and those with Zero Data Retention agreements remain unaffected. The frequent changes to usage policies have generated confusion among users. Many users remain unaware of these evolving policies. The rapid pace of technological advancements means that privacy policies are subject to change. Changes are often communicated briefly amid other company announcements. Anthropic's implementation of its new policy follows a pattern that raises concerns about user awareness. New users will be able to select their preference during the signup process. However, existing users are presented with a pop-up window labeled "Updates to Consumer Terms and Policies" in large text, accompanied by a prominent black "Accept" button. A smaller toggle switch for training permissions is located below, in smaller print, and is automatically set to the "On" position. 
The design raises concerns that users might quickly click "Accept" without realizing they are agreeing to data sharing, as The Verge first reported. The stakes for user awareness are high. Experts have long warned that the complexity of AI makes meaningful user consent difficult to obtain. The Federal Trade Commission (FTC) has intervened in these matters before, warning AI companies against "surreptitiously changing its terms of service or privacy policy, or burying a disclosure behind hyperlinks, in legalese, or in fine print." That warning suggests a potential conflict with practices that may not provide adequate user awareness or consent. How closely the FTC is currently overseeing such practices remains uncertain, since the commission is operating with only three of its five commissioners. An inquiry has been submitted to the FTC to determine whether these practices are under review.

[12]

Anthropic Wants Your Permission to Train Its AI Models on Your Chats

Anthropic is also extending its data retention to five years

Anthropic announced an update to its privacy policy on Thursday, stating that it will now train its existing and future artificial intelligence (AI) models on users' conversations. The San Francisco-based AI company is giving users the option to allow or deny permission to train on their chats. The revised privacy policy affects only consumers, not the company's enterprise customers. Alongside the revised privacy policy, Anthropic is extending its data retention period so that users' conversations can be stored for five years.

Anthropic's New Privacy Policy Goes Into Effect on September 28

Training AI models on user data is not a new practice among Silicon Valley AI companies. OpenAI, Google, and Meta have already created provisions under which users can consent to sharing their conversations to train the companies' AI models. Until now, Anthropic was an exception: it did not train its models even on free-tier users' data. Anthropic says it will now begin training its models on users' chats, and it is asking for consent via a new pop-up box that appears when users open Claude's website or app. Users have until September 28 to make their decision; after that, they will have to navigate the settings manually to make changes. The revised policy applies only to Claude's Free, Pro, and Max tiers. Users who consent to data sharing will also be sharing transcripts of their Claude Code sessions. The policy does not apply to Anthropic's enterprise services, including Claude for Work, Claude Gov, Claude for Education, or API use, nor to those accessing Claude models via third-party platforms.
For both AI training and data retention, only new chats and chats resumed after accepting the new policy will be used; older conversations are not affected. Conversations that a user deletes will also not be used for future model training. The five-year retention period applies only to users who consent to share their data; for everyone else, the existing 30-day retention rule remains in place.

[13]

What Are Risks Of Anthropic Using People's Chats For AI Training?

AI industry giant Anthropic is asking Claude users to consent to having their conversations used to train future AI models, through an update to its terms of service on August 28. The changes affect users on Claude Free, Pro, and Max plans, including those using Claude Code from these accounts. However, the new policies exclude commercial customers using Claude for Work, Claude Gov, Claude for Education, or API services, including those accessed through third-party platforms like Amazon Bedrock and Google Cloud. Anthropic is rolling out these updates through in-app notifications, giving existing users until September 28, 2025, to decide about data sharing. When users opt in, Anthropic will extend its data retention period from 30 days to five years for new and resumed conversations. The company stated that this extended timeframe allows for better model development and improved safety systems. Users who decline data sharing will continue under the existing 30-day retention policy. According to Anthropic, real-world interaction data provides crucial insights for developing more accurate and useful AI models. The company argues that when users collaborate with Claude on tasks like coding, these interactions generate valuable signals that help improve future models' performance on similar tasks. The extended retention period also enables the company to develop better classifiers for detecting harmful usage patterns, including abuse, spam, and misuse. Anthropic says it uses privacy protections, including automated processes to filter sensitive data, and that it does not sell user data to third parties. Users can also delete individual conversations to exclude them from training, and those who change their minds can opt out, though Anthropic may still use previously collected data in training cycles that have already begun. Other AI companies, such as OpenAI, already use user data and conversations to improve their models' performance.
However, users can opt out of having their conversations included in training datasets. Similarly, Google trains Gemini on user data by default unless Gemini Apps Activity is turned off. Google's privacy policy also states that human reviewers may annotate and process user conversations to prepare them for AI training. Meta in particular has faced heavy criticism over its data practices. In April this year, Meta announced that it would use users' public posts on platforms like Instagram, Facebook, and Threads to train its AI models. Notably, this announcement was specific to the EU. The decision drew widespread criticism, including a cease-and-desist notice from the privacy advocacy group NOYB (None of Your Business). In India, because publicly available personal data is not covered by the Digital Personal Data Protection Act (DPDPA), 2023, Meta can use Indian users' public social media posts to train its AI models. An important point here is that many users turn to AI chatbots to discuss sensitive personal matters. Multiple reports have noted that many people treat ChatGPT as a personal therapist, and some mental health apps have even replaced human volunteers with AI. MIT Technology Review reported that even some therapists use ChatGPT themselves. ChatGPT is free or inexpensive and available 24 hours a day, unlike human therapists. However, as Sam Altman himself has pointed out, conversations with ChatGPT carry no legal confidentiality. This means they can not only become part of AI training datasets but can also be handed over by OpenAI in response to legal requests. OpenAI eventually added mental-health-focused guardrails to ChatGPT to curb overly agreeable responses.
The chatbot now prompts users to take breaks, avoids giving guidance on high-stakes decisions, and points users toward evidence-based resources. Another problem is that elements of user conversations could be reproduced by later models trained on that data. This could lead to unintentional privacy violations, with a chatbot repeating information that a user shared with an earlier version of the model. Further, if many users discuss heavy or sensitive topics with AI chatbots, or engage in inappropriate language or conduct, that could affect future models. For example, OpenAI is facing a lawsuit in the US alleging that ChatGPT drove a teenager to suicide. The chatbot allegedly provided step-by-step instructions for self-harm, helped draft suicide notes, told the teen to drink alcohol beforehand to dull the pain, and discouraged him from speaking with his parents. OpenAI itself has acknowledged that its guardrails may not work in long conversations. The broader point is that if such conversations are common enough in a dataset, they will influence the responses of future models.

[14]

How to Stop Anthropic From Using Your Data to Train Claude AI

Taking action ensures greater control over personal information.

Anthropic is a prominent AI research company recognized for its focus on safety and reliability. Its flagship product, Claude, like other AI models, relies on vast amounts of text data to generate more accurate and contextually relevant responses. While this approach improves performance, it also raises valid concerns about data privacy and usage. Few people fully recognize how their digital footprints, including contacts and files, may contribute to training AI systems. For those who value privacy and want to prevent Anthropic from using their data to train Claude, taking proactive steps to safeguard personal information is essential.

[15]

Anthropic updates Claude AI, gives users control over data-sharing for the first time

The updates apply to all users on Claude Free, Pro and Max plans.

Anthropic, the company behind Claude, has announced updates to its Consumer Terms and Privacy Policy that give users more control over their data. The changes let users decide whether their data can be used to improve Claude and strengthen protections against harmful activity, such as scams or abusive content. The updates apply to all users on Claude Free, Pro and Max plans, including when they use Claude Code. However, they do not affect services under Anthropic's Commercial Terms, such as Claude for Work, Claude Gov, Claude for Education, or API use, including through third-party platforms like Amazon Bedrock and Google Cloud's Vertex AI. By opting in, users can help Anthropic make Claude safer and more capable. The company says the shared data will help improve systems that detect harmful content, reducing the chances of mistakenly flagging harmless conversations. It will also help future Claude models get better at tasks like coding, analysing information, and reasoning. Users have full control over this setting and can update their preferences at any time. New users will be asked to make their choice during signup, while existing users will receive a notification prompting them to review the updated terms and decide. Existing users have until September 28, 2025, to accept the new Consumer Terms and decide whether to allow their data to be used. If users accept the policies, they take effect immediately. After the September 28 deadline, users will need to make a choice in the model training setting to continue using Claude. This move comes as more AI companies try to balance safety, usability, and privacy while continuing to enhance the capabilities of their models.

[16]

Anthropic on using Claude user data for training AI: Privacy policy explained

Will your Claude chats train AI? Anthropic's five-year data retention rule explained

Anthropic, the AI safety startup behind the Claude chatbot, has quietly redrawn its privacy boundaries. Starting this month, all consumer users of Claude, from the free tier to premium subscriptions like Claude Pro and Max, are being asked to make a choice: either allow their conversations to be used to train future AI models, or opt out and continue under stricter limits. The change represents one of the most significant updates to Anthropic's data policies since Claude's launch. It also highlights a growing tension in the AI industry: companies need enormous volumes of conversational data to improve their models, but users are increasingly cautious about handing over their words, ideas, and code to corporate servers. Until now, Claude's consumer chats were automatically deleted after 30 days unless flagged for abuse, in which case they could be stored for up to two years. Under the new policy, opting in means your conversations, including coding sessions, may be retained for up to five years. When users log into Claude today, they see a large pop-up titled "Updates to Consumer Terms and Policies." At first glance, the layout looks straightforward: a bold "Accept" button dominates the screen. But the crucial toggle that determines whether your data feeds into Claude's training pipeline is smaller, placed beneath it, and switched on by default. New users face the same screen during signup. Anthropic insists that data use is optional and reversible in settings, but once conversations are included in training, they cannot be withdrawn retroactively. Notably, this policy shift applies only to consumer-facing products like Claude Free, Pro, and Max. Enterprise services such as Claude for Work, Claude Gov, Claude for Education, and API-based integrations on Amazon Bedrock or Google Cloud remain unaffected.
The company has framed this as a way to give users meaningful choice while advancing Claude's capabilities. In a blog post, Anthropic argued that user data is essential for improving reasoning, coding accuracy, and safety systems. Sensitive information, the company says, is automatically filtered out, conversations are encrypted, and no data is sold to third parties. For Anthropic, which has marketed itself as a "safety-first" lab, the update is also about credibility: making Claude better at understanding real-world use cases without compromising trust. Not everyone is convinced. Privacy advocates and user communities, especially on forums like Reddit, have raised alarms over what they call "dark patterns" in the interface design: a default-on toggle that nudges people into sharing data without truly informed consent. The debate has also drawn comparisons with Anthropic's competitors. Brad Lightcap, Chief Operating Officer at OpenAI, previously described a court order requiring OpenAI to retain consumer ChatGPT conversations indefinitely as "a sweeping and unnecessary demand" that "fundamentally conflicts with the privacy commitments we have made to our users." Although Lightcap was responding to a court-imposed obligation rather than a company policy, his comments resonate with concerns now directed at Anthropic: that AI firms, despite public pledges of safety and restraint, are inevitably pulled toward the same hunger for data that drives their rivals. For everyday Claude users, the implications are immediate. By September 28, 2025, users must either accept the new terms or risk losing access. Conversations that remain untouched won't be used, but if reopened, they fall under the new rules. Data sharing can be disabled at any time in settings, though only future conversations are protected: once data has been absorbed into Claude's models through training, it cannot be retrieved. Anthropic built its brand by promising to tread cautiously in the race toward advanced AI.
But this policy shift suggests the company is grappling with the same competitive pressures as its peers. Data is the fuel of large language models, and without enough of it, even a well-funded lab risks falling behind. The question is whether users will accept this trade-off: more powerful AI assistants at the cost of longer, deeper data retention. As one analyst put it, the optics may matter as much as the policy itself: "Anthropic sold itself as the company that wouldn't play by Silicon Valley's rules. This move makes it look like every other AI giant." The Claude privacy update is more than just fine print. It's a signal of how AI companies are converging on a new normal: opt-out systems, long retention windows, and user data at the core of product evolution. For users, the choice is clear but consequential. Opt in, and your words help shape the next generation of AI. Opt out, and you keep a measure of privacy but perhaps at the expense of slower progress for the tools you rely on. Either way, September 28 marks a new chapter for Anthropic and its users, one where transparency, trust, and technology collide in real time.

Anthropic announces a significant change in its data policy, allowing user conversations to be used for AI model training. Users have until September 28 to make their choice, with data retention extended to five years for those who opt in.

Anthropic's New Data Policy: A Shift in AI Training Approach

Anthropic, a leading AI company, has announced a significant change to its data policy, allowing user conversations to be used for training its AI models [1]. This marks a departure from the company's previous stance, which prioritized consumer data privacy by not using chat data for model training [4].

Key Changes and User Options

The new policy affects users of Claude Free, Pro, and Max plans, including those using Claude Code [1]. Users have until September 28 to decide whether they want their conversations used for AI training [2]. Those who opt in will have their data retained for five years, a significant increase from the previous 30-day retention period [1].

Existing users will see a pop-up notification explaining the change, with the option to "Help improve Claude" automatically set to "On" [3]. Users can opt out by toggling this switch off or by navigating to their privacy settings [3].

Implications and Industry Context

Anthropic frames this change as beneficial for users, stating that it will help improve model safety and enhance skills like coding, analysis, and reasoning [1]. However, the move also reflects the competitive pressures in AI development, where access to high-quality conversational data is crucial [1].

This policy shift aligns Anthropic more closely with industry practice. Other major players have made similar moves: Google, for example, recently renamed "Gemini Apps Activity" to "Keep Activity" and uses user data to improve its services [4].

Privacy Concerns and Regulatory Landscape

The change raises concerns about user privacy and meaningful consent in AI. Privacy experts have long warned that the complexity of AI makes it difficult for users to provide informed consent [1]. The Federal Trade Commission has previously warned AI companies about potential enforcement actions for unclear or deceptive privacy policies [1].

Enterprise and API Users Unaffected

It's important to note that business customers using Claude Gov, Claude for Work, Claude for Education, or API access will not be affected by these changes [1]. This approach mirrors OpenAI's policy of protecting enterprise customers from data training policies [1].

User Awareness and Control

Anthropic emphasizes that users can change their decision at any time via privacy settings [3]. However, once data has been used for training, it cannot be retroactively removed from the models [3]. The company also states that it will not sell user data to third parties and uses tools to filter or obfuscate sensitive information [5].

As AI continues to evolve rapidly, this policy change highlights the ongoing challenges in balancing technological advancement with user privacy and consent. It underscores the importance of user awareness and the need for clear, transparent communication from AI companies about their data practices.

Summarized by Navi
