Apple brings agentic coding to Xcode 26.3 with Claude Agent and OpenAI's Codex integration

15 Sources

[1]

Xcode 26.3 adds support for Claude, Codex, and other agentic tools via MCP

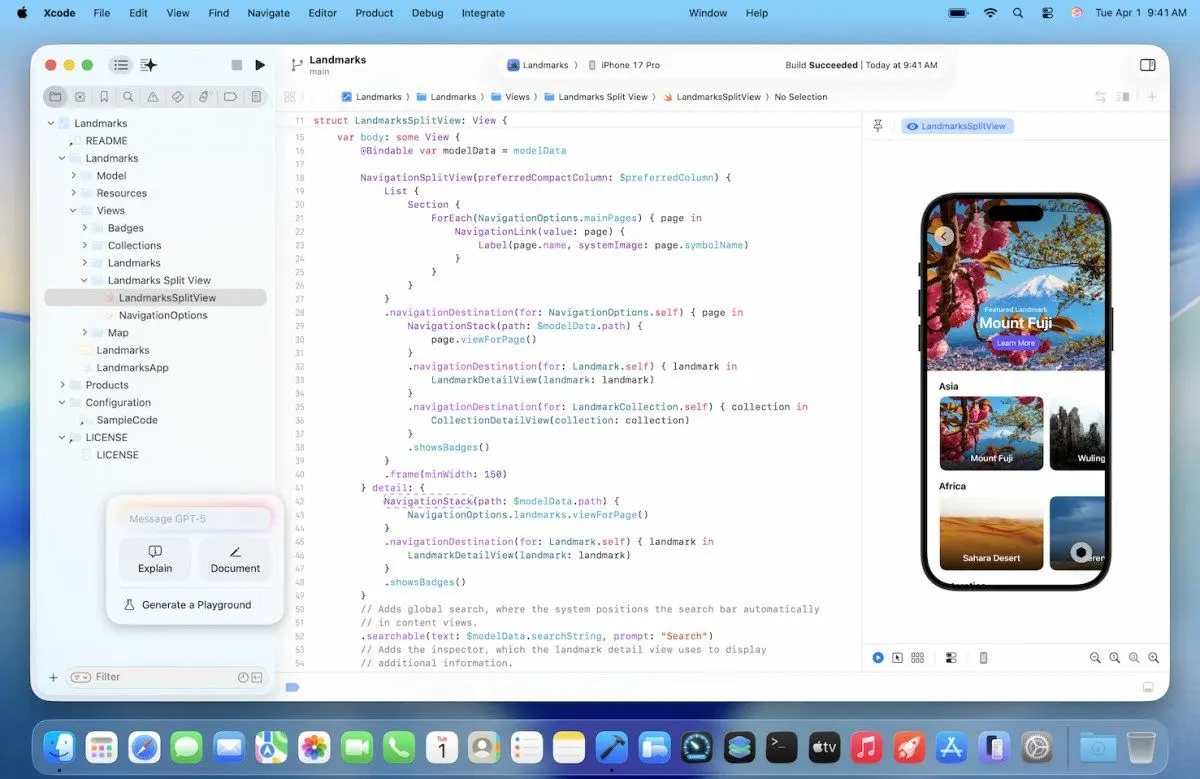

Apple has announced a new version of Xcode, the latest version of its integrated development environment (IDE) for building software for its own platforms, like the iPhone and Mac. The key feature of 26.3 is support for full-fledged agentic coding tools, like OpenAI's Codex or Claude Agent, with a side panel interface for assigning tasks to agents with prompts and tracking their progress and changes. This is achieved via Model Context Protocol (MCP), an open protocol that lets AI agents work with external tools and structured resources. Xcode acts as an MCP endpoint that exposes a bunch of machine-invocable interfaces and gives AI tools like Codex or Claude Agent access to a wide range of IDE primitives like file graph, docs search, project settings, and so on. While AI chat and workflows were supported in Xcode before, this release gives them much deeper access to the features and capabilities of Xcode. This approach is notable because it means that even though OpenAI and Anthropic's model integrations are privileged with a dedicated spot in Xcode's settings, it's actually possible to connect other tooling that supports MCP, which also allows doing some of this with models running locally. Apple began its big AI features push with the release of Xcode 26, expanding on code completion using a local model trained by Apple that was introduced in the previous major release, and fully supporting a chat interface for talking with OpenAI's ChatGPT and Anthropic's Claude. Users who wanted more agent-like behavior and capabilities had to use third-party tools, which sometimes had limitations due to a lack of deep IDE access. Xcode 26.3's release candidate (the final beta, essentially) rolls out imminently, with the final release coming a little further down the line.
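
To make the MCP mechanism described above a little more concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client sends to discover and invoke a server's tools. The `tools/list` and `tools/call` method names come from the MCP specification. The tool name `build_project` and its `scheme` argument are hypothetical placeholders and are not taken from Apple's documentation.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def mcp_request(method, params=None):
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask the server which tools it exposes.
list_tools = mcp_request("tools/list")

# Invoke one tool by name. "build_project" and "scheme" are hypothetical
# placeholders, not names from Apple's Xcode documentation.
call_tool = mcp_request(
    "tools/call",
    {"name": "build_project", "arguments": {"scheme": "MyApp"}},
)

print(json.dumps(call_tool, indent=2))
```

Because every tool is reached through the same two methods, any client that speaks the protocol can in principle use whatever tools a server advertises, which is consistent with the article's point that agents other than Claude Agent and Codex can connect.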

[2]

Agentic coding comes to Apple's Xcode with agents from Anthropic and OpenAI | TechCrunch

Apple is bringing agentic coding to Xcode. On Tuesday, the company announced the release of Xcode 26.3, which will allow developers to use agentic tools, including Anthropic's Claude Agent and OpenAI's Codex, directly in Apple's official app development suite. The Xcode 26.3 Release Candidate is available to all Apple Developers today from the developer website and will hit the App Store a bit later. This latest update comes on the heels of the Xcode 26 release last year, which first introduced support for ChatGPT and Claude within Apple's integrated development environment (IDE) used by those building apps for iPhone, iPad, Mac, Apple Watch, and Apple's other hardware platforms. The integration of agentic coding tools allows AI models to tap into more of Xcode's features to perform their tasks and perform more complex automation. The models will also have access to Apple's current developer documentation to ensure they use the latest APIs and follow the best practices as they build. At launch, the agents can help developers explore their project, understand its structure and metadata, then build the project and run tests to see if there are any errors and fix them, if so. To prepare for this launch, Apple said it worked closely with both Anthropic and OpenAI to design the new experience. Specifically, the company said it did a lot of work to optimize token usage and tool calling, so the agents would run efficiently in Xcode. Xcode leverages MCP (Model Context Protocol) to expose its capabilities to the agents and connect them with its tools. That means that Xcode can now work with any outside MCP-compatible agent for things like project discovery, changes, file management, previews and snippets, and accessing the latest documentation. Developers who want to try the agentic coding feature should first download the agents they want to use from Xcode's settings. They can also connect their accounts with the AI providers by signing in or adding their API key. 
A drop-down menu within the app allows developers to choose which version of the model they want to use (e.g. GPT-5.2 vs. GPT-5.1-mini). In a prompt box on the left side of the screen, developers can tell the agent what sort of project they want to build or change to the code they want to make using natural language commands. For instance, they could direct Xcode to add a feature to their app that uses one of Apple's provided frameworks, and how it should appear and function. As the agent starts working, it breaks down tasks into smaller steps, so it's easy to see what's happening and how the code is changing. It will also look for the documentation it needs before it begins coding. The changes are highlighted visually within the code, and the project transcript on the side of the screen allows developers to learn what's happening under the hood. This transparency could particularly help new developers who are learning to code, Apple believes. To that end, the company is hosting a "code-along" workshop on Thursday on its developer site, where users can watch and learn how to use agentic coding tools as they code along in real-time with their own copy of Xcode. At the end of its process, the AI agent verifies that the code it created works as expected. Armed with the results of its tests on this front, the agent can iterate further on the project if need be to fix errors or other problems. (Apple noted that asking the agent to think through its plans before writing code can sometimes help to improve the process, as it forces the agent to do some pre-planning.) Plus, if developers are not happy with the results, they can easily revert their code back to its original at any point in time, as Xcode creates milestones every time the agent makes a change.
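
The verify-and-iterate loop and the revertible milestones described above can be sketched as a toy model. This is only an illustration under stated assumptions, not Xcode's implementation: the `Project` class, `run_agent`, and the stand-in `build` and `propose_fix` functions are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Toy stand-in for a project: a mapping of file names to source text."""
    files: dict = field(default_factory=dict)
    checkpoints: list = field(default_factory=list)

    def checkpoint(self):
        # Snapshot the files before each agent change so it can be undone.
        self.checkpoints.append(dict(self.files))

    def revert(self, index=0):
        # Restore the snapshot taken at `index` and discard later snapshots.
        self.files = dict(self.checkpoints[index])
        del self.checkpoints[index:]


def run_agent(project, propose_fix, build, max_rounds=5):
    """Build; while the build reports errors, apply one fix and rebuild."""
    for _ in range(max_rounds):
        errors = build(project)
        if not errors:
            return True
        project.checkpoint()
        name, text = propose_fix(project, errors)
        project.files[name] = text
    return not build(project)


# Hypothetical stand-ins: a "build" that flags files containing "BUG",
# and a "fix" that rewrites the first failing file.
def build(p):
    return [n for n, t in p.files.items() if "BUG" in t]


def propose_fix(p, errors):
    name = errors[0]
    return name, p.files[name].replace("BUG", "ok")


proj = Project({"a.swift": "BUG", "b.swift": "BUG"})
succeeded = run_agent(proj, propose_fix, build)
checkpoints_taken = len(proj.checkpoints)

proj.revert(0)  # roll back to the state before the agent's first change
```

Taking a snapshot before every change is what lets a developer return to any earlier state, at the cost of storing one copy per step in this simplified version.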

[3]

Apple's Xcode adds OpenAI and Anthropic's coding agents

Apple is building OpenAI and Anthropic's AI-powered coding agents directly into Xcode. New integrations in Xcode 26.3 will give developers the ability to call upon Anthropic's Claude Agent and OpenAI's Codex to write and edit code, update project settings, search documentation, and more. Xcode is the software developers can use to create and test apps for the iPhone, Mac, iPad, Apple Watch, and Apple TV. Both Claude and ChatGPT were previously available inside Xcode, but this latest update will allow AI agents to take action inside the app, rather than just provide coding assistance. Apple is also making Xcode available through the Model Context Protocol, an open-source standard that will enable developers to plug other AI tools into the app. The news of Apple's Xcode update comes just one day after OpenAI launched its AI-powered Codex app on Mac, which lets developers write code alongside a team of AI agents. Apple says Xcode 26.3 will be available to members of the Apple Developer Program starting today, "with a release coming soon on the App Store."

[4]

Xcode 26.3 finally brings agentic coding to Apple's developer tools

Xcode 26.3 adds autonomous AI agents inside the IDE. Agents can build, test, and fix compile errors on their own. New visual checks use screenshots, but device limits remain. Apple today introduced a major update to its Xcode development environment, the tool most developers use to build apps for iPhone, iPad, Mac, Apple Watch, and even the Vision Pro. Xcode 26.3 is now available as a release candidate. This newest version substantially increases Apple's coding intelligence features with built-in support for agentic coding. This will allow autonomous coding agents to work directly inside the IDE. Allowing coding agents to work inside Xcode is not new. I demonstrated an earlier version of this capability back in November. The version 26.3 difference? Apple says that agents like Claude Code and OpenAI Codex can now handle complex development tasks. Apple is positioning this update as a major expansion of the basic intelligence features first introduced in Xcode 26. Here's why that matters: Back in November, I was able to get "Hello, world" to work inside of Xcode. However, once I tried coding a complex iPhone app, the Xcode integration crashed and burned. The IDE regularly hung and crashed, rendering it unusable. I also found that Xcode was unable to fill out the wide range of IDE-based forms required to create an app. At that point, I turned to Claude Code, which I ran entirely in the terminal. Claude Code did everything that Xcode didn't, which allowed me to first build a powerful iPhone app, then a Mac app, and later a Watch app. The Xcode I originally used had AI features focused on code suggestions, editing, and conversational help, which is probably why the agentic requests I gave it caused it to cry. This new release promises to be better at that kind of workload.
In a pre-launch briefing, I asked Apple's Xcode team leaders about whether this release would overcome the previous Xcode AI limitations I had encountered. Apple's experts confidently stated that, with this Xcode 26.3 release, the IDE moves beyond prompts and responses, giving agents broader autonomy and deeper access to project context. Apple says this release supports faster iteration, reduced manual steps, and tighter integration with Apple's unique coding environment requirements. According to Susan Prescott, vice president of Worldwide Developer Relations, "Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation." Apple is now actively positioning agentic coding as a foundation for future development workflows. Apple's view is that its new coding intelligence features put advanced technologies directly into developers' hands, with an emphasis on reducing friction for solo developers and small teams. As with the previous Xcode release, Xcode 26.3 includes native integrations with Claude Code and ChatGPT Codex. These are both agents that come with fairly hefty monthly fees. When I last tested Xcode, it did allow for adding additional providers, so it's possible that local and free coding models might also be usable. I'll be testing that soon and will report back on how that works. Xcode 26.3 is also opening up capabilities through the Model Context Protocol, an open standard originated by Anthropic that allows models and apps to communicate. This should allow any compatible agent or external tool to connect with Xcode's features. Once you choose an agent model, that agent can dig into file structures, fully understand the overall architecture of a project, and smartly identify which files need changes.
The agent can build projects, run tests, and identify compile errors without direct user intervention. Agents can also run complex, lengthy tasks, proceeding autonomously until they complete or until user input is required. I'm really curious about how this works in practice, because the previous Xcode would hang whenever an assignment was too long or complex. I was running Xcode on a very powerful computer, an M4 Max Mac Studio with 128GB RAM, so the performance friction wasn't caused by the machine. It was all in Xcode. It would be very nice if that problem were now solved. As of this new release, internal project settings and configurations can also be updated within the Xcode AI workflow. I can already do this via Claude Code's terminal interface, but it would be nice to be able to do it from within Xcode. When I built my iPhone, Mac, and Watch apps, the only time I needed to go into Xcode was to actually cause the AI-generated programs to run. But with Xcode 26.3, Apple says AI agents can launch apps autonomously from within the IDE. In a brand-new feature that shows serious promise, agents can also capture screenshots of the programs they launch to perform visual verification of UI output. If that works, that could provide a powerful boost to project turnaround and autonomy. It would allow the AIs to check whether changes actually reach the app, rather than requiring constant by-hand tests that take human time. On the other hand, there are limits. The Xcode simulators can't take photos, scan NFC tags, or share data via iCloud. Since my programs do all three of these things, they must be tested on-device. For such on-device operations, the visual verification feature is not available. Xcode 26.3 is available as a release candidate for Apple Developer Program members. A full public release will be available soon through the Mac App Store.
If you already have Xcode installed, you'll be able to update it the same way you update all your other Mac App Store apps. Apple is also offering an online developer training event in the new Xcode on February 5.

[5]

You Can Now Vibe Code Apple Apps With Claude, OpenAI's Codex

The latest version of Apple's Xcode, a developer toolkit for creating apps across its devices, has added support for Anthropic's Claude Code and OpenAI's Codex. Both are among the most popular vibe coding tools, or "agentic coding" tools, as Apple puts it. (The definition is a bit loose.) Integrating with Xcode aims to "supercharge productivity and creativity, streamlining the development workflow," says Susan Prescott, Apple's VP of Worldwide Developer Relations. Xcode 26.3 is now available as a release candidate for all members of the Apple Developer Program, with a release on the App Store "coming soon." It's also another subtle admission from Apple that its homegrown AI tools are not on par with the rest of the industry, following its decision last month to use Google Gemini to power its future AI strategy -- not its own models. Apple teased its own coding assistant for its Swift programming language at WWDC 2024, but developers "grew frustrated" when by March 2025 it was still nowhere to be found, MacDailyNews reports. Apple never shipped it to developers, and in May 2025, Bloomberg reported that the company had pivoted and teamed up with Anthropic to develop a new AI coding tool within Xcode. That appears to be the version launched this week, along with a Codex option. Also this week, OpenAI released a Codex app for macOS (a Windows version is in the works). It aims to compete with Anthropic's Claude Code, offering a standalone program for developers outside ChatGPT. In a bid to court developers, OpenAI is offering it for free with the ChatGPT Free and Go plans "for a limited time." It's also doubling the rate limits for Plus, Pro, Business, Enterprise, and Edu users. "The Codex app changes how software gets built and who can build it -- from pairing with a single coding agent on targeted edits to supervising coordinated teams of agents across the full lifecycle of designing, building, shipping, and maintaining software," OpenAI says.

[6]

Apple just made Xcode better for vibe coding

Apple has just released Xcode 26.3, and it's a big step forward in terms of the company's support of coding agents. The new release expands on the AI features the company introduced with Xcode 26 at WWDC 2025 to give systems like Claude and ChatGPT more robust access to its in-house IDE. With the update, Apple says Claude and OpenAI's Codex "can search documentation, explore file structures, update project settings, and verify their work visually by capturing Xcode Previews and iterating through builds and fixes." This is in contrast to earlier releases of Xcode 26 where those same agents were limited in what they could see of a developer's Xcode environment, restricting their utility. According to Apple, the change will give users tools they can use to streamline their processes and work more efficiently than before. Developers can add Claude and Codex to their Xcode terminal from the Intelligence section of the app's settings menu. Once a provider is selected, the interface allows users to also pick their preferred model. So if you like the outputs of, say, GPT 5.1 over GPT 5.2, you can use the older system. The tighter integration with Claude and Codex was made possible by Model Context Protocol (MCP) servers Apple has deployed. MCP is a technology Anthropic debuted in fall 2024 to make it easier for large language models like Claude to share data with third-party tools and systems. Since its introduction, MCP has become an industry standard -- with OpenAI, for instance, adopting the protocol last year to facilitate its own set of connections. Apple says it worked directly with Anthropic and OpenAI to optimize token usage through Xcode, but the company's adoption of MCP means developers will be able to add any coding agent that supports the protocol to their terminal in the future. Xcode 26.3 is available to download for all members of the Apple Developer Program starting today, with Mac App Store availability "coming soon."

[7]

Apple adds agents from Anthropic and OpenAI to its coding tool

Apple said it's introducing agentic coding into its flagship coding tool called Xcode, throwing its weight behind one of the hottest trends in Silicon Valley. With agent-powered coding, programmers have artificial intelligence software write code independently. Apple said its tool will support Anthropic's Claude Agent and OpenAI's Codex. "Xcode and coding agents can now work together to handle complex multi-step tasks on your behalf," an Apple representative said in a demo video on Tuesday, alongside the announcement. Over the summer, Apple updated Xcode with support for OpenAI's ChatGPT and Claude, but the latest update extends the relationship to agentic coding tools. In an email sent to developers, Apple said the agents will be able to build and test projects, search Apple documentation, and fix issues. Individual programmers and companies have embraced agentic coding as a faster way to build software through a practice known as "vibe coding," where humans prompt an AI and review the code it generates. Apple Intelligence, the company's consumer AI suite, has faced delays and management turnover. But in recent months, AI coding has gained traction among iPhone developers. Users will have to connect their OpenAI or Anthropic accounts to Xcode via an API key. Apple said it uses an open standard that gives programmers the ability to use other compatible agents and AI tools with Xcode, in addition to the built-in Anthropic and OpenAI integrations. Apple's Xcode is the company's developer environment for coders, and is used to build nearly every iPhone app. The new software, Xcode 26.3, is available in a beta version now for registered Apple developers, and will eventually be released on the App Store. OpenAI released a version of its agentic coding application called Codex for Mac computers on Monday.

[8]

Apple announces agentic coding in Xcode with Claude Agent and Codex integration - 9to5Mac

Apple has announced a major upgrade for Xcode: support for agentic coding. Apple says that developers can now build apps with coding agents such as Anthropic's Claude Agent and OpenAI's Codex directly inside Xcode. In a post on its Newsroom site today, Apple explains that agents like Claude Agent and Codex can "now collaborate throughout the entire development life cycle." This includes searching documentation, analyzing file structures, and updating project settings. The agents can even verify work visually using Xcode Previews, then iterate through multiple builds to fix problems. Developers can sign in with their respective accounts for those platforms or enter an API key. Apple is also making Xcode's capabilities available with Model Context Protocol, which is the industry's standard interface between AI systems and traditional platforms. This means that developers have the flexibility to integrate any compatible agent or tool with Xcode, beyond Anthropic's Claude Agent and OpenAI's Codex. Here's Susan Prescott, Apple's vice president of Worldwide Developer Relations: "At Apple, our goal is to make tools that put industry-leading technologies directly in developers' hands so they can build the very best apps. Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation." "This connection combines the power of these agents with Xcode's native capabilities to provide the best results when developing for Apple platforms, giving developers the flexibility to work with the model that best fits their project," Apple says. When Apple announced Xcode 26 at WWDC, the company touted the ability for developers to tap into ChatGPT and other providers to write code, fix bugs, access documentation, and more. Support for agentic coding is a major step beyond that initial integration. The new features are available starting today with the Xcode 26.3 Release Candidate.
Learn more on Apple's developer website.

[9]

Xcode 26.3 Lets AI Agents From Anthropic and OpenAI Build Apps Autonomously

With Xcode 26.3, Apple is adding support for agentic coding, allowing developers to use tools like Anthropic's Claude Agent and OpenAI's Codex right in Xcode for app creation. Agentic coding will allow Xcode to complete more complex app development tasks autonomously. Claude, ChatGPT, and other AI models have been available for use in Xcode since Apple added intelligence features in Xcode 26, but until now, AI was limited and was not able to take action on its own. That will change with the option to use an AI coding assistant. AI models can access more of Xcode's features to work toward a project goal, and Apple worked directly with Anthropic and OpenAI to configure their agents for use in Xcode. Agents can create new files, examine the structure of a project in Xcode, build a project directly and run tests, take image snapshots to double-check work, and access full Apple developer documentation that has been designed for AI agents. Adding an agent to Xcode can be done with a single click in the Xcode settings, with agents able to be updated automatically as AI companies release updates. Developers will need to set up an Anthropic or OpenAI account to use those coding tools in Xcode, paying fees based on API usage. Apple says that it aimed to ensure that Claude Agent and Codex run efficiently, with reduced token usage. It is simple to swap between agents in the same project, giving developers the flexibility to choose the agent best suited for a particular task. While Apple worked with OpenAI and Anthropic for Xcode integration, the Xcode 26.3 features can be used with any agent or tool that uses the open standard Model Context Protocol. Apple is releasing documentation so that developers can configure and connect MCP agents to Xcode. Using natural language commands, developers are able to instruct AI agents to complete a project, such as adding a new feature to an app. 
Xcode then works with the agent to break down the instructions into small tasks, and the agent is able to work on its own from there. Here's how the process works: In the sidebar of a project, developers can follow along with what the agent is doing using the transcript, and can click to see where code is added to keep track of what the agent is doing. At any point, developers can go back to before an agent or model made a modification, so there are options to undo unwanted results or try out multiple options for introducing a new feature. Apple says that agentic coding will allow developers to simplify workflows, make changes quicker, and bring new ideas to life. Apple also sees it as a learning tool that provides developers with the opportunity to learn new ways to build something or to implement an API in an app. "At Apple, our goal is to make tools that put industry-leading technologies directly in developers' hands so they can build the very best apps," said Susan Prescott, Apple's vice president of Worldwide Developer Relations. "Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation." The release candidate of Xcode 26.3 is available for developers as of today, and a launch will likely follow in the next week or so.

[10]

Apple launches Xcode 26.3, brings even more AI power to coding on Mac

Anthropic Claude Agent and OpenAI Codex are fully integrated. Apple has announced a major upgrade as part of Xcode 26.3 which brings more powerful AI coding to the platform, including agentic AI to automate some of the tasks needing less human oversight. The IDE, which was built by Apple specifically for developing apps for Apple products, now supports Anthropic's Claude Agent and OpenAI's Codex directly within the interface. Xcode already had some limited ChatGPT and Claude integration, but version 26.3 deepens this by giving the agents access to more of Xcode's tools natively. In an announcement, Apple outlined how AI agents can be used for writing and modifying code, building projects, running tests, detecting errors and searching Apple's latest developer documentation, which could make it easier to jump through the hoops that are already in place to make sure apps are compliant with the iPhone maker's guidance and standards. The company even worked with the third parties to optimize token usage to ensure both efficient performance and lower running costs. And for maximum interoperability (a new and very welcome trend in Big Tech), Apple is also making Xcode's capabilities available via the industry standard MCP. "Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation," Worldwide Developer Relations VP Susan Prescott wrote. For the end user, a drop-down menu lets them choose their preferred model version from the two AI companies. An initial setup to link accounts is necessary. Xcode 26.3 is currently available as a release candidate for Apple Developer Program members, with general availability set "soon."

[11]

Apple integrates Anthropic's Claude and OpenAI's Codex into Xcode 26.3 in push for 'agentic coding'

Apple on Tuesday announced a major update to its flagship developer tool that gives artificial intelligence agents unprecedented control over the app-building process, a move that signals the iPhone maker's aggressive push into an emerging and controversial practice known as "agentic coding." Xcode 26.3, available immediately as a release candidate, integrates Anthropic's Claude Agent and OpenAI's Codex directly into Apple's development environment, allowing the AI systems to autonomously write code, build projects, run tests, and visually verify their own work -- all with minimal human oversight. The update is Apple's most significant embrace of AI-assisted software development since introducing intelligence features in Xcode 26 last year, and arrives as "vibe coding" -- the practice of delegating software creation to large language models -- has become one of the most debated topics in technology. "Integrating intelligence into the Xcode developer workflow is powerful, but the model itself still has a somewhat limited aperture," Tim Sneath, an Apple executive, said during a press conference Tuesday morning. "It answers questions based on what the developer provides, but it doesn't have access to the full context of the project, and it's not able today to take action on its own. And so that changes today." The key innovation in Xcode 26.3 is the depth of integration between AI agents and Apple's development tools. Unlike previous iterations that offered code suggestions and autocomplete features, the new system grants AI agents access to nearly every aspect of the development process. 
During a live demonstration, Jerome Bouvard, an Apple engineer, showed how the Claude agent could receive a simple prompt -- "add a new feature to show the weather at a landmark" -- and then independently analyze the project's file structure, consult Apple's documentation, write the necessary code, build the project, and take screenshots of the running application to verify its work matched the requested design. "The agent is able to use the tools like build or, you know, grabbing a preview of the screenshots to verify its work, visually analyze the image and confirm that everything has been built accordingly," Bouvard explained. "Before that, when you're interacting with a model, the model will provide you an answer and it will just stop there." The system creates automatic checkpoints as developers interact with the AI, allowing them to roll back changes if results prove unsatisfactory -- a safeguard that acknowledges the unpredictable nature of AI-generated code. Apple worked directly with Anthropic and OpenAI to optimize the experience, Sneath said, with particular attention paid to reducing token usage -- the computational units that determine costs when using cloud-based AI models -- and improving the efficiency of tool calling. "Developers can download new agents with a single click, and they update automatically," Sneath noted. Underlying the integration is the Model Context Protocol, or MCP, an open standard that Anthropic developed for connecting AI agents with external tools. Apple's adoption of MCP means that any compatible agent -- not just Claude or Codex -- can now interact with Xcode's capabilities. "This also works for agents that are running outside of Xcode," Sneath explained. "Any agent that is compatible with MCP can now work with Xcode to do all the same things -- Project Discovery and change management, building and testing apps, working with previews and code snippets, and accessing the latest documentation." 
The decision to embrace an open protocol, rather than building a proprietary system, represents a notable departure for Apple, which has historically favored closed ecosystems. It also positions Xcode as a potential hub for a growing universe of AI development tools. The announcement comes against a backdrop of mixed experiences with AI-assisted coding in Apple's tools. During the press conference, one developer described previous attempts to use AI agents with Xcode as "horrible," citing constant crashes and an inability to complete basic tasks. Sneath acknowledged the concerns while arguing that the new integration addresses fundamental limitations of earlier approaches. "The big shift here is that Claude and Codex have so much more visibility into the breadth of the project," he said. "If they hallucinate and write code that doesn't work, they can now build. They can see the compile errors, and they can iterate in real time to fix those issues, and we'll do so in this case before you even, you know, presented it as a finished work." The power of IDE integration, Sneath argued, extends beyond error correction. Agents can now automatically add entitlements to projects when needed to access protected APIs -- a task that would be "otherwise very difficult to do" for an AI operating outside the development environment and "dealing with binary file that it may not have the file format for." Apple's announcement arrives at a crucial moment in the evolution of AI-assisted development. The term "vibe coding," coined by AI researcher Andrej Karpathy in early 2025, has transformed from a curiosity into a genuine cultural phenomenon that is reshaping how software gets built. LinkedIn announced last week that it will begin offering official certifications in AI coding skills, drawing on usage data from platforms like Lovable and Replit. 
Job postings requiring AI proficiency doubled in the past year, according to edX research, with Indeed's Hiring Lab reporting that 4.2% of U.S. job listings now mention AI-related keywords. The enthusiasm is driven by genuine productivity gains. Casey Newton, the technology journalist, recently described building a complete personal website using Claude Code in about an hour -- a task that previously required expensive Squarespace subscriptions and years of frustrated attempts with various website builders. More dramatically, Jaana Dogan, a principal engineer at Google, posted that she gave Claude Code "a description of the problem" and "it generated what we built last year in an hour." Her post, which accumulated more than 8 million views, began with the disclaimer: "I'm not joking and this isn't funny." But the rapid adoption of agentic coding has also sparked significant concerns among security researchers and software engineers. David Mytton, founder and CEO of developer security provider Arcjet, warned last month that the proliferation of vibe-coded applications "into production will lead to catastrophic problems for organizations that don't properly review AI-developed software." "In 2026, I expect more and more vibe-coded applications hitting production in a big way," Mytton wrote. "That's going to be great for velocity... but you've still got to pay attention. There's going to be some big explosions coming!" Simon Willison, co-creator of the Django web framework, drew an even starker comparison. "I think we're due a Challenger disaster with respect to coding agent security," he said, referring to the 1986 space shuttle explosion that killed all seven crew members. "So many people, myself included, are running these coding agents practically as root. We're letting them do all of this stuff." A pre-print paper from researchers this week warned that vibe coding could pose existential risks to the open-source software ecosystem. 
The study found that AI-assisted development pulls user interaction away from community projects, reduces visits to documentation websites and forums, and makes launching new open-source initiatives significantly harder. Stack Overflow usage has plummeted as developers increasingly turn to AI chatbots for answers -- a shift that could ultimately starve the very knowledge bases that trained the AI models in the first place. Previous research painted an even more troubling picture: a 2024 report found that vibe coding using tools like GitHub Copilot "offered no real benefits unless adding 41% more bugs is a measure of success." Even enthusiastic adopters have begun acknowledging the darker aspects of AI-assisted development. Peter Steinberger, creator of the viral AI agent originally known as Clawdbot (now OpenClaw), recently revealed that he had to step back from vibe coding after it consumed his life. "I was out with my friends and instead of joining the conversation in the restaurant, I was just like, vibe coding on my phone," Steinberger said in a recent podcast interview. "I decided, OK, I have to stop this more for my mental health than for anything else." Steinberger warned that the constant building of increasingly powerful AI tools creates the "illusion of making you more productive" without necessarily advancing real goals. "If you don't have a vision of what you're going to build, it's still going to be slop," he added. Google CEO Sundar Pichai has expressed similar reservations, saying he won't vibe code on "large codebases where you really have to get it right." "The security has to be there," Pichai said in a November podcast interview. Boris Cherny, the Anthropic engineer who created Claude Code, acknowledged that vibe coding works best for "prototypes or throwaway code, not software that sits at the core of a business." "You want maintainable code sometimes. You want to be very thoughtful about every line sometimes," Cherny said. 
Apple appears to be betting that the benefits of deep IDE integration can mitigate many of these concerns. By giving AI agents access to build systems, test suites, and visual verification tools, the company is essentially arguing that Xcode can serve as a quality control mechanism for AI-generated code. Susan Prescott, Apple's vice president of Worldwide Developer Relations, framed the update as part of Apple's broader mission. "At Apple, our goal is to make tools that put industry-leading technologies directly in developers' hands so they can build the very best apps," Prescott said in a statement. "Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation." But the question remains whether the safeguards will prove sufficient as AI agents grow more autonomous. Asked about debugging capabilities, Bouvard noted that while Xcode has "a very powerful debugger built in," there is "no direct MCP tool for debugging." Developers can run the debugger and manually relay information to the agent, but the AI cannot yet independently investigate runtime issues -- a limitation that could prove significant as the complexity of AI-generated code increases. The update also does not currently support running multiple agents simultaneously on the same project, though Sneath noted that developers can open projects in multiple Xcode windows using Git worktrees as a workaround. Xcode 26.3 is available immediately as a release candidate for members of the Apple Developer Program, with a general release expected soon on the App Store. The release candidate designation -- Apple's final beta before production -- means developers who download today will automatically receive the finished version when it ships. The integration supports both API keys and direct account credentials from OpenAI and Anthropic, offering developers flexibility in managing their AI subscriptions. 
But those conveniences belie the magnitude of what Apple is attempting: nothing less than a fundamental reimagining of how software comes into existence. For the world's most valuable company, the calculus is straightforward. Apple's ability to attract and retain developers has always underpinned its platform dominance. If agentic coding delivers on its promise of radical productivity gains, early and deep integration could cement Apple's position for another generation. If it doesn't -- if the security disasters and "catastrophic explosions" that critics predict come to pass -- Cupertino could find itself at the epicenter of a very different kind of transformation. The technology industry has spent decades building systems to catch human errors before they reach users. Now it must answer a more unsettling question: What happens when the errors aren't human at all? As Sneath conceded during Tuesday's press conference, with what may prove to be unintentional understatement: "Large language models, as agents sometimes do, sometimes hallucinate." Millions of lines of code are about to find out how often.

[12]

Xcode's new AI agents don't just suggest code, they get things done for you

Apple's Xcode 26.3 adds agentic AI from OpenAI and Anthropic, pushing Apple's IDE from a helpful assistant into something closer to a junior developer.

Apple has quietly turned Xcode, its venerable app-building machine, into AI-driven software that can now harness agentic coding. Last year, the Cupertino giant added basic AI-based features, such as code completion and suggestions, to Xcode 26, but the new update changes everything. Xcode 26.3 includes powerful AI agents such as Anthropic's Claude and OpenAI's Codex, both of which can analyze your current project, update settings, search for relevant information, run tests, and interact with previews, all via text-based commands.

What does agentic coding actually do?

In simpler terms, the app's AI won't just assist coders and developers; it will also help them automate tasks. It works by reading the entire program, understanding what it does and how it performs specific tasks, and then turning your text-based commands into relevant changes or additions.

But what exactly does agentic coding solve?

For developers, Xcode 26.3 addresses the never-ending problem of writing similar code across projects and helps debug errors by navigating hundreds of lines of code. The idea isn't to replace programmers, but to help them outsource the boring and tedious parts of their job to AI (debugging, rebuilding, adding specific functions, redesigning a piece of code, etc.), while they work on exploring creative ideas, managing their schedule, or taking some time off.

Apple's open-ended AI strategy

What sets Apple's approach apart is how it integrates AI into Xcode. It uses the open Model Context Protocol (MCP), allowing developers to plug in other compatible agents for greater flexibility. For now, Xcode 26.3 with agentic coding is available as a release candidate, and only to registered members of the Apple Developer Program (via the developer site). However, a full public release on the Mac App Store is coming soon.

[13]

Apple introduces agentic coding support in Xcode 26.3

Apple introduced support for agentic coding in Xcode 26.3 on Tuesday, enabling developers to integrate autonomous AI agents including Anthropic's Claude Agent and OpenAI's Codex directly into the development environment. The update expands Xcode's existing intelligence features by granting third-party AI models greater autonomy to perform complex development tasks, such as modifying project architectures and verifying code via visual previews. By integrating these agents, Apple aims to reduce manual workflows and allow developers to automate the technical execution of app builds. Coding agents in Xcode 26.3 can now access internal system tools to search documentation, explore file structures, and update project settings. The agents are designed to iterate through builds and fixes autonomously, using Xcode Previews to verify their work before developer review. The new version also implements the Model Context Protocol, an open standard that allows developers to connect any compatible AI agent or tool to Xcode. This integration allows for flexibility in model selection while leveraging Xcode's native capabilities for Apple platform development. "Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation," said Susan Prescott, Apple's vice president of Worldwide Developer Relations, in a company statement. The release builds upon the coding assistant features first introduced in Xcode 26, which focused on writing and editing in the Swift programming language. The current version shifts from assisted completion to agent-led task management across the entire development life cycle. Apple, based in Cupertino, California, develops the Xcode integrated development environment (IDE) as the primary toolset for creating software for iOS, macOS, watchOS, and tvOS. 
Anthropic and OpenAI are San Francisco-based artificial intelligence research companies that provide the underlying models for the new agentic features.

[14]

Apple's Xcode Upgraded With Agentic Coding Powers From OpenAI, Anthropic

Currently, agentic coding is available via Codex and Claude Agent

Apple is adding more artificial intelligence (AI) capabilities in Xcode, its integrated development environment (IDE) for building apps across its platforms. The Release Candidate of Xcode 26.3 began rolling out for developers on Tuesday, and one of its biggest highlights is the support for agentic coding. The Cupertino-based tech giant is now letting AI agents access the platform and perform certain tasks, increasing the scope of task automation within the IDE. Currently, the platform supports OpenAI's Codex and Anthropic's Claude Agent.

Xcode 26.3 Brings AI Agents to Developers

In a newsroom post, Apple announced the new agentic capabilities within Xcode. The new feature is part of the Xcode 26.3 update, which was rolled out to developers via the company's developer portal. The global stable version is also expected to be available via the Mac App Store soon.

Last year, at the Worldwide Developers Conference (WWDC), Apple announced AI integration in Xcode for the first time, allowing users to access ChatGPT or a model of their choice for assistance with coding, debugging, and other tasks. Now, with Xcode 26.3, the tech giant is finally adding support for Anthropic's Model Context Protocol, the open standard that allows a platform to connect with AI agents. In terms of options, developers currently have the choice between OpenAI's Codex and Anthropic's Claude Agent.

The company claims that these agents can now collaborate throughout the entire development cycle and perform tasks such as searching documentation, exploring file structures, updating project settings, and verifying their work by capturing Xcode Previews. Additionally, the AI agents can iterate and build on existing codebases and automatically fix bugs.

According to a TechCrunch report, Apple worked closely with both AI companies to design the new capability. Two particular areas the tech giant had to address were optimising token utilisation and tool calling. Fixing these issues would mean a more efficient agentic coding experience while keeping developer costs manageable.

The report adds that before developers can begin using the agentic coding features, they will have to download their choice of agent from Xcode's settings. They can then connect their account in one of two ways: by signing in to their OpenAI or Anthropic account, or by supplying an application programming interface (API) key. Interestingly, users will have the option to choose the AI model they want to work with, just like they would within Codex or Claude Agent.

[15]

Apple Says Hello to Agentic Coding; Announces Xcode Version with Claude and Codex

Having lagged behind other big tech giants on AI adoption, Apple has suddenly sped forward with AI integration in Siri and now agentic AI options

All this while, the market was questioning Apple for being slow in its AI implementation. Suddenly, the company seems to have found its mojo. First came Siri, which is now powered by Gemini. Now the company is bringing agentic coding to Xcode with both Anthropic's Claude Agent and OpenAI's Codex.

Xcode 26.3, the latest release of Apple's official app development suite, lets developers use coding agents, including Anthropic's Claude Agent and OpenAI's Codex, to tackle complex tasks autonomously, helping them develop apps faster than ever, the company said.

"Xcode 26.3 introduces support for agentic coding, a new way in Xcode for developers to build apps using coding agents such as Anthropic's Claude Agent and OpenAI's Codex. With agentic coding, Xcode can work with greater autonomy toward a developer's goals -- from breaking down tasks to making decisions based on the project architecture and using built-in tools," Apple says in a statement on its website.

The new release is available to all Apple developers from today on the developer website. It will hit the App Store sometime in the near future.

Last year, the company released Xcode 26 with support for both ChatGPT and Claude within Apple's integrated development environment, or IDE. Developers building apps for the iPhone, iPad, Mac, Apple Watch and other hardware platforms use this platform. With the integration of agentic coding tools, developers will be able to use AI models to perform more complex automation tasks. In addition, the models get access to Apple's current developer documentation so that they can use the latest APIs and follow best practices as they build.

For starters, the agents help developers explore their project, understand its structure and metadata, build it, run tests to check for errors, and then fix them. Apple notes that it worked closely with Anthropic and OpenAI to design the new experience on the IDE, especially in the areas of optimising token usage and tool calling. This was to ensure that these agents run efficiently on Xcode.

"At Apple, our goal is to make tools that put industry-leading technologies directly in developers' hands so they can build the very best apps," said Susan Prescott, Apple's vice president of Worldwide Developer Relations. "Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation," she noted.

Xcode uses the Model Context Protocol, or MCP, to open up its capabilities to the agents and connect them with available tools. This allows Xcode to work with any outside MCP-compatible agent for tasks related to project discovery, changes, file management, previews and snippets, among other things.

Apple said developers seeking to try the agentic coding features need to download the agents they want to use from Xcode settings. They can also connect their accounts with AI providers by either signing in or adding the relevant API key. The company also noted that if developers are unhappy with the outcomes, they can revert their code to its original state at any time, as Xcode creates milestones each time the agent makes a change.

Apple has released Xcode 26.3, integrating Anthropic's Claude Agent and OpenAI's Codex directly into its development environment. Using Model Context Protocol, these AI-powered coding agents can autonomously build projects, run tests, and fix errors while accessing Apple's developer documentation. The update marks a shift from basic AI assistance to full agentic coding capabilities for iOS, Mac, and other Apple platform developers.

Apple Expands IDE Capabilities with Full Agent Integration

Apple announced Xcode 26.3, introducing agentic coding capabilities that allow autonomous AI agents to work directly within its integrated development environment. The release candidate is now available to members of the Apple Developer Program, with a full App Store release coming soon [1][2]. This update enables developers building apps for iPhone, iPad, Mac, Apple Watch, and Vision Pro to leverage Anthropic's Claude Agent and OpenAI's Codex for complex automation tasks that go far beyond the chat interface and code completion features introduced in previous releases.

Source: MacRumors

Model Context Protocol Opens Xcode to Broader AI Ecosystem

The integration relies on Model Context Protocol (MCP), an open standard that exposes Xcode's capabilities to external AI-powered coding agents. Through MCP, Xcode acts as an endpoint that provides machine-invocable interfaces, giving agents access to IDE primitives including file graphs, project settings, developer documentation, and code previews [1][4]. While OpenAI and Anthropic receive privileged placement in Xcode's settings, the MCP architecture means any compatible agent or tool can connect with the platform, potentially including models running locally. Apple worked closely with both companies to optimize token usage and tool calling to ensure agents run efficiently within the development workflow [2].
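
To make "machine-invocable interfaces" concrete, here is a minimal sketch of the kind of message an MCP client sends. MCP messages are JSON-RPC 2.0, and tools are discovered and invoked with the `tools/list` and `tools/call` methods. The tool name `build_project` and its `scheme` argument are hypothetical placeholders chosen for illustration; the source does not list the names of the tools Xcode actually exposes.

```python
import json
from itertools import count

# Monotonic request ids, as JSON-RPC expects each request to carry its own id.
_ids = count(1)


def mcp_request(method, params=None):
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Step 1: ask the server which tools it offers.
list_tools = mcp_request("tools/list")

# Step 2: invoke one tool by name.
# "build_project" and "scheme" are hypothetical, not Xcode's real tool names.
call_build = mcp_request(
    "tools/call",
    {"name": "build_project", "arguments": {"scheme": "MyApp"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_build, indent=2))
```

Because every capability is reached through the same request shape, an agent that can speak this protocol does not need Xcode-specific integration code, which is why any MCP-compatible tool can connect.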

Autonomous AI Agents Handle Complex Development Tasks

The agentic coding capabilities in Xcode 26.3 allow autonomous AI agents to explore project structures, understand metadata, build projects, run tests, and fix errors without constant user intervention [2][4]. Developers can assign tasks through a side panel interface using natural language commands in a prompt box. The agents break down complex requests into smaller steps, making it easy to track progress and understand code changes. As agents work, they access Apple's current developer documentation to ensure they use the latest APIs and follow best practices. Changes are highlighted visually within the code, while a project transcript shows what's happening under the hood. At the end of each process, agents verify that code works as expected and can iterate further to fix errors if needed [2].
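
The build, verify, and fix cycle described above can be sketched as a simple loop. This is an illustrative model only, not Apple's implementation: `BuildResult`, `iterate_until_green`, and the fake build and fix functions are all hypothetical names, and a real agent would call the IDE's build tooling rather than a stub.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class BuildResult:
    ok: bool
    errors: List[str]


def iterate_until_green(
    build: Callable[[], BuildResult],
    fix: Callable[[List[str]], None],
    max_rounds: int = 3,
) -> List[BuildResult]:
    """Build, and while the build fails, hand the errors to `fix` and retry.

    Stops at the first successful build or after `max_rounds` builds.
    """
    history: List[BuildResult] = []
    for _ in range(max_rounds):
        result = build()
        history.append(result)
        if result.ok:
            break
        fix(result.errors)
    return history


# Stand-ins for a real build system and a real agent (both hypothetical).
pending = ["missing import", "type mismatch"]


def fake_build() -> BuildResult:
    return BuildResult(ok=not pending, errors=list(pending))


def fake_fix(errors: List[str]) -> None:
    pending.pop(0)  # pretend the agent resolves one error per round


history = iterate_until_green(fake_build, fake_fix, max_rounds=5)
for round_number, result in enumerate(history, start=1):
    print(round_number, "ok" if result.ok else result.errors)
```

The `max_rounds` cap is a design choice in this sketch: it keeps a stuck agent from looping indefinitely, which matters when each round consumes tokens.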

Source: TechCrunch

Implications for Developer Productivity and Apple's AI Strategy

Susan Prescott, Apple's vice president of Worldwide Developer Relations, stated that "agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation" [4]. The update addresses previous limitations where earlier Xcode AI features would crash or hang when handling complex app development tasks. Apple is positioning agentic coding as a foundation for future development workflows, particularly beneficial for solo developers and small teams. The release also represents another acknowledgment that Apple's homegrown AI tools lag behind industry leaders, following its decision to integrate Google Gemini and pivot from developing its own Swift coding assistant [5]. To help developers adopt these AI-driven workflows, Apple is hosting a code-along workshop where users can learn to use agentic coding tools in real time. Developers can easily revert code to its original state at any point, as Xcode creates milestones every time an agent makes a change [2].

Source: VentureBeat

Summarized by Navi
