Apple's iOS 26 Update: A Leap Forward in AI Features and User Experience

7 Sources

[1]

The 2 biggest AI features that you should try on your iPhone 17 and AirPods ASAP

While we didn't hear much about Siri or Apple Intelligence during the 2025 Apple Event that launched new iPhones, AirPods, and Apple Watches, two huge AI features were announced that have largely slipped under the radar. That's mostly because they were presented as great new features rather than wrapped in overhyped AI marketing language. Nevertheless, I got to demo both features at Apple Park earlier this month, and my first impression was that both are nearly fully baked and ready to start improving daily life -- and those are my favorite kinds of features to talk about.

Here's what they are:

Apple has implemented a new kind of front-facing camera. It uses a square sensor and increases the resolution from 12 megapixels on previous models to 24MP. However, because the sensor is square, it actually outputs 18MP images. The real trick is that it can output in either vertical or horizontal format. In fact, you no longer have to turn the phone to switch between vertical and horizontal mode. You can simply hold the phone in one hand, in whichever orientation you prefer, and tap the rotate button to flip from vertical to horizontal and vice versa. And because it has an ultrawide sensor with double the megapixels, it can take equally crisp photos in either orientation.

Now, here's where the AI comes in. You can set the front-facing camera to Auto Zoom and Auto Rotate. It will then automatically find faces in your shot, widen or tighten the framing, and decide whether a vertical or horizontal orientation works best to fit everyone in the picture. Apple calls this "Center Stage," the same term it uses for keeping you centered in the frame during video calls on the iPad and the Mac.
The feature technically uses machine learning, but it's still fair to call it an AI feature. The Center Stage branding is a little confusing, though, because the selfie camera on iPhone 17 uses it for photos, while the feature on iPad and Mac is for video calls. The selfie camera feature is also aimed at photos with multiple people, while the iPad/Mac feature is primarily used with just you in the frame. Still, after trying it on various demo iPhones at Apple Park after the keynote on Tuesday, I can easily call this the smartest and best selfie camera I've seen. I have no doubt that other phone makers will start copying this feature in 2026. And the best part is that Apple didn't limit it to this year's high-end iPhone 17 Pro and Pro Max, but put it on the standard iPhone 17 and the iPhone Air as well. That's great news for consumers, who took 500 billion selfies on iPhones last year, according to Apple.

I've said this many times before, but language translation is one of the best and most accurate uses for large language models. In fact, it's one of the best things you can do with generative AI. It has enabled companies like Apple, which lags far behind Google and others in language translation, to take big strides in building translation features into key products. While Google Translate supports 249 different languages, Apple's Translate app supports 20. Google Translate has been around since 2006, while Apple's Translate app launched in 2020.

Nevertheless, while Google has been showing demos of real-time translation in its phones and earbuds for years, none of those features have ever worked very well in the real world. Google again made a big deal about real-time translation during its Pixel 10 launch event in August, but even during the onstage demo the feature hiccuped a bit. Enter Apple.
Alongside the AirPods Pro 3, Apple launched its own live translation feature. And while the number of languages isn't as broad, the implementation is a lot smoother and more user-friendly. I got an in-person demo with a group of other journalists at Apple Park on Tuesday after the keynote. A Spanish speaker came into the room and started speaking while we had AirPods Pro 3 in our ears and an iPhone 17 Pro in our hands with the Live Translation feature turned on. The AirPods Pro 3 immediately switched into ANC mode and started translating the Spanish into English. That meant we could look at the speaker's face during the conversation and hear their words in our own language, without the distraction of hearing both languages at once. And I know enough Spanish to say that the translation was pretty accurate -- with no hiccups in this case. It was only one brief demo, but Apple traded the tricks of Google's Pixel 10 demo (rendering the words and intonation in an AI-cloned voice of the speaker) for more practical usability. Apple's version is a beta feature that will be limited to English, Spanish, French, German, and Portuguese to start.

To be clear, the iPhone does most of the processing work, while the AirPods make the experience better by automatically invoking noise cancellation. Because of that, the feature isn't limited to AirPods Pro 3. It will also work with AirPods Pro 2 and AirPods 4 with Active Noise Cancellation, as long as they are connected to a phone that can run Apple Intelligence (iPhone 15 Pro or newer). That's another win for consumers. Since I'm planning to test both the Pixel 10 Pro XL with Pixel Buds Pro 2 and the iPhone 17 Pro Max with AirPods Pro 3, I'll put their new translation features head-to-head to see how well they perform.
Every week, I'm in contact with friends and community members who speak one or more of the supported languages, so I'll have ample opportunities to put it to work in the real world and then share what I learn.

[2]

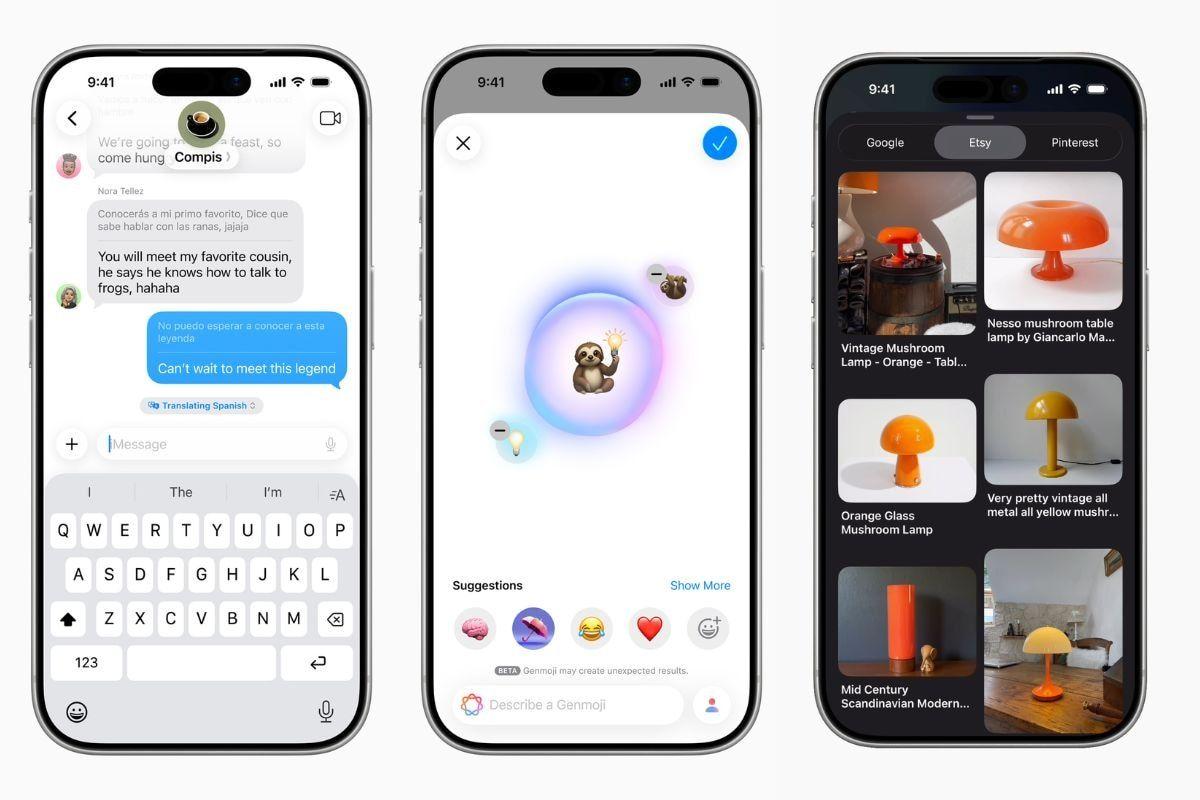

The best new Apple Intelligence features from today's updates - 9to5Mac

While this is not the day Apple will release a revamped, LLM-powered Siri, today's updates bring multiple welcome additions to the Apple Intelligence feature set. Here are some highlights. During WWDC25, Apple made sure to (almost passive-aggressively) highlight all the Apple Intelligence features it had already released, as it tried to counter the fact that it is behind on AI. Then it announced new Apple Intelligence features, making sure to showcase only those it was confident it could deliver in the first wave of betas or shortly after. Here are some of the coolest Apple Intelligence features you can use as soon as you update your Apple Intelligence-compatible devices, starting today.

Developer access to Apple's on-device model is by far the most significant change in today's updates. Apps can now plug directly into Apple's on-device model, which means they can offer AI features without having to rely on external APIs or even the web. For users, this means more private AI-based features, since prompts never leave the device. For developers, it means a faster and more streamlined experience, since they can leverage the same on-device model Apple uses for its own AI offerings. For now, developers won't have access to Apple's cloud-based model; this is on-device only. Still, the fact that any developer can adopt AI features at no extra cost should make for extremely interesting features and use cases.

Shortcuts and automation play a big part in today's updates, particularly on macOS Tahoe 26. You can now bake Apple Intelligence right into your shortcuts. In practice, that means including Writing Tools, Image Playground, and even Apple's own models or ChatGPT as steps in your workflow to get things done faster. If you've never dabbled in automation before, this may feel overwhelming. But I highly recommend you poke around and try to find ways to bring AI-powered automation into your workflow.
Depending on your line of work, this may truly change how you get things done.

Although the headline feature is Live Translation with AirPods, which automatically translates what a person is telling you in a different language, Live Translation also extends across Messages, Phone, and FaceTime. In Messages, Live Translation automatically translates incoming and outgoing text messages, while during a FaceTime call it displays live translated captions on screen. On phone calls, you'll hear spoken translations, much like with Live Translation with AirPods. Here's Apple's fine print on which languages are compatible with which features:

Live Translation with AirPods works on AirPods 4 with Active Noise Cancellation or AirPods Pro 2 and later with the latest firmware when paired with an Apple Intelligence-enabled iPhone, and supports English (U.S., UK), French (France), German, Portuguese (Brazil), and Spanish (Spain).

Live Translation in Messages is available in Chinese (Simplified), English (UK, U.S.), French (France), German, Italian, Japanese, Korean, Portuguese (Brazil), and Spanish (Spain) when Apple Intelligence is enabled on a compatible iPhone, iPad, or Mac, as well as on Apple Watch Series 9 and later and Apple Watch Ultra 2 and later when paired with an Apple Intelligence-enabled iPhone.

Live Translation in Phone and FaceTime is available for one-on-one calls in English (UK, U.S.), French (France), German, Portuguese (Brazil), and Spanish (Spain) when Apple Intelligence is enabled on a compatible iPhone, iPad, or Mac.

And speaking of phone calls, Apple Intelligence now provides voicemail summaries, which appear inline with your missed calls.

Apple has extended its visual search feature beyond the camera, so it now also works with what is on the screen.
This means that when you take a screenshot, iOS will recognize the content and let you highlight a specific portion, search for similar images, or even ask ChatGPT about what appears in the screenshot. Visual Intelligence also extracts the date, time, and location from screenshots and proactively suggests actions such as adding events to your calendar, provided the image is in English.

You can now combine two different emoji (and, optionally, a text description) to create a new Genmoji. You can also add expressions or modify personal attributes, such as hairstyle and facial hair, for Genmoji based on people from your Photos library. In Image Playground, the biggest news is that you can now use OpenAI's image generator, either by picking from preset styles such as Watercolor and Oil or by sending a text prompt describing what you'd like created. You can also use Image Playground to create Messages backgrounds, as well as Genmoji, without leaving the app.

It is very obvious that Apple is struggling to get a handle on Siri, and on AI in general. From multiple high-profile departures to Tim Cook's pep talk following Apple's financial results, the company is clearly in trouble. That said, some of today's additions to its Apple Intelligence offerings are truly well thought out and well implemented, and may really help users get things done faster and better. I've been using Apple Intelligence with my shortcuts, and it has really improved parts of my workflow, which until very recently felt out of reach if I wanted to keep things native. Apple still has a long, long way to go, but the set of AI-powered features it is releasing today is a good one, and it may be worth checking out, even if you've dismissed Apple Intelligence in the past.

[3]

This one iOS 26 feature gives me hope for the future of Apple Intelligence

Apple Intelligence isn't a focus in iOS 26, but one improvement stands out.

I don't think many people would dispute the idea that iOS 26 didn't introduce a lot of new Apple Intelligence features, especially when stacked up against the all-out focus on AI that marked 2024's iOS 18 rollout. Oh, there are some new capabilities, like on-the-fly translations in phone calls, FaceTime sessions, and texts. For the most part, though, Apple Intelligence receives a few updates to existing features while taking a backseat to iOS 26's Liquid Glass overhaul. Certainly, that's been one of the biggest criticisms you can find from my colleagues in their iPhone 17 reviews. Apple already trails the likes of Google and Samsung when it comes to AI features, and shifting the attention to interface improvements in iOS 26 runs the risk of the iPhone falling further behind.

It's a legitimate complaint. And yet, I'm not especially worried, as one of the AI features Apple did focus on with iOS 26 shows how Apple Intelligence can progress when Apple really puts its mind to it. Forget about improvements to Image Playground or Genmoji. If you like those AI-powered features, more power to you, but they don't really figure into how I use my iPhone. Instead, it's the Visual Intelligence update in iOS 26 that leaves me hopeful that Apple is on the right path to better AI capabilities, even if that journey is going to take longer than any of us would like.

Introduced with last year's iPhone 16 launch, Visual Intelligence was one of the best Apple Intelligence additions to the iPhone's bag of tricks, in my opinion. By summoning your phone's camera -- either with the Camera Control button on the iPhone 16 models or with a lock screen shortcut that became available for the iPhone 15 Pro -- you could capture an image and then look up more information about it with the help of either Google or ChatGPT.
My colleague Richard Priday used Apple Intelligence as a tour guide to get more out of an art museum visit. Another colleague, Amanda Carswell, turned to Apple's AI for guidance through a corn maze. Those are certainly fun uses, but there are practical ones as well. Say you see a poster for an event you want to attend. Rather than manually creating an entry in your Calendar app, you can summon Visual Intelligence and have it do the work for you. Visual Intelligence can also translate text in other languages, which makes it a helpful travel tool.

Pretty helpful stuff, like I said, but there's a modest barrier to entry with Visual Intelligence. If I remember to whip out my phone and take a picture, it can come in very handy, but that's not something I'm always going to take the time to do. And what if I come across information that I can't easily photograph for Visual Intelligence to work its magic on, like when I read something online?

Enter the updated Visual Intelligence for iOS 26. It can still do everything it could before with the camera, but now those powers extend to screenshots, too. All I have to do is capture a screenshot like always -- press the side and volume up buttons -- and a series of Visual Intelligence commands pops up on my screen. If there's something in another language in the screenshot, I can have Visual Intelligence translate it. If there's a movie poster on my local Cineplex's website advertising a limited-time showing, I can have Visual Intelligence create a calendar event. And I can use the feature to look up alternative ingredients or suggested cook times when I'm perusing recipes.

It's not a perfect implementation. As Mark Spoonauer observes in his iPhone 17 Pro Max review, Apple's implementation falls far short of what you can do on a Pixel phone.
Google's Gemini Live can analyze what's on your screen and talk back to you, whereas Apple Intelligence requires you to actually take a screenshot and then type in whatever questions you have. Siri isn't even involved in the process. I'm not going to pretend that Apple is in the same league as Google when it comes to on-device AI. (Our AI phone face-off from earlier this year proved that, and that was before Google raised the bar further with this year's Pixel 10 release.) But at the same time, we need to acknowledge that iOS 26 makes Visual Intelligence better, and even incremental progress is still progress.

If Apple is ever going to kick its AI efforts into high gear, it's going to take work like the iOS 26 version of Visual Intelligence. The improvements will need to be thoughtful and well implemented, extending the capabilities of your phone. The fact that Apple can do that with one feature in its suite of AI tools suggests that it can repeat the trick with others. And with an AI-infused version of Siri apparently proving to be a tough nut for Apple to crack, I'll take any sign of progress I can get.

[4]

Live Translation in Messages is the best AI feature in iOS 26, and Apple isn't even talking about it

When Apple announced the new Apple Intelligence features in iOS 26 earlier this year, one in particular really piqued my interest. You see, I'm Scottish with Italian grandparents and an Italian wife, and I moved to France when I was 10 years old. Combined, this makes for lots of different languages on my iPhone, and a lot of dialect shuffling in my brain to keep conversations easy. I speak fluent French and understand most Italian. I can also make myself understood in Italian thanks to a mix of my upbringing, regularly speaking the language with my partner, and adding Os and As to French words. So while I don't necessarily need the AI feature I'm about to talk about, using it for the last week or so has significantly improved my communication experience on iPhone, and I think this might be one of the best iOS 26 features, full stop.

If you haven't guessed yet, or aren't up to speed with the new Apple Intelligence features available in iOS 26 (there are a lot; check out this article), I'm of course talking about Live Translation. Now, before you click away from this article because a translation tool sounds boring, hear me out: I swear this is the kind of technology that will transform the way we communicate. Just keep reading.

I've been trying Live Translation for the last few months now, and I think it will transform how smartphone users communicate. It's a pretty seamless tool. In iOS 26, you can open any conversation in Messages and select a primary language to translate from. The language will be downloaded, and then any incoming messages will be translated seamlessly from the language of the message into your preferred one. I tested this with my Italian in-laws as well as my wife, and I was thoroughly impressed with how seamless the translation was. There's no waiting; someone just messages you, and it arrives in two languages, so you understand exactly what is being written while still seeing the original message.
Live Translation doesn't only work in Messages; it also removes language barriers from phone calls and FaceTime. And if you have a pair of AirPods Pro 3 (or AirPods Pro 2 or AirPods 4 with ANC), the feature is even better. It's the voice side of Live Translation that I'm most excited about, as it now allows me to take part in conversations with my Italian family without any struggle. Live Translation translates in real time as the person on the other end of the call speaks, and can do the same for them, so one of you can speak in English and the other in Italian without any hiccups. Sound cool? This is the kind of built-in feature I've been waiting years for, and I'm so excited to finally have my hands on it.

iOS 26 is now available, and Apple Intelligence-compatible iPhones get access to everything Live Translation has to offer. Live Translation and the other new Apple Intelligence features, such as Visual Intelligence for screenshots and ChatGPT styles in Image Playground, are some of the best AI features we've seen on iPhone to date, and you don't need an iPhone 17 to use them. In my brief time testing Live Translation, I've been thoroughly impressed with its accuracy and just how quickly it works. I've written messages in French and English and received replies in Italian, all absolutely seamlessly. Live Translation might not have as much of an impact on your life if you rarely converse in other languages. Even then, the ability to eliminate language barriers across Apple devices is a first step toward making the world more connected, and that's an incredibly exciting and powerful thought.

[5]

iOS 26 is here - 5 of the best Apple Intelligence features to try right now

iOS 26 is finally here, and your iPhone software just got a gorgeous redesign. While the headline feature is Liquid Glass, installing iOS 26 will also give you access to some new Apple Intelligence features that might improve your life in subtle yet meaningful ways. I've whittled down the list of new AI-powered features available in iOS 26 (and iPadOS 26) and selected my five favorites. From in-app translation to new Apple Intelligence abilities in Shortcuts, there are plenty of reasons to install the update right away rather than wait for the iPhone 17 to launch later this week.

After using iOS 26 for a few months, I've found Live Translation to be my personal favorite new Apple Intelligence feature. Live Translation is built into Messages, FaceTime, and the Phone app. It lets you automatically translate messages, add translated live captions to FaceTime calls, and hear translations spoken aloud throughout a phone call, completely removing language barriers with AI. I've written about how Live Translation has drastically improved my ability to communicate with my Italian in-laws, and trust me, if you regularly speak multiple languages, you'll also find this new Apple Intelligence feature to be a game-changer.

Apple launched Genmoji and Image Playground as part of the first wave of Apple Intelligence features, and now the company has improved its generative AI image tools. Users can now turn text descriptions into emoji, as well as mix emoji and combine them with descriptions to create something new. You can also change expressions and adjust personal attributes of Genmoji made from photos of friends and family members. Image Playground has also gained ChatGPT support, giving users access to new styles such as oil painting and vector art. Apple says, "users are always in control, and nothing is shared with ChatGPT without their permission." I've enjoyed my time using ChatGPT in Image Playground.
While it's still not as good as some of the best AI image generators out there, it improves the Image Playground experience and is a step in the right direction for Apple's creative AI tool.

Visual Intelligence might already have been the best Apple Intelligence feature, but now it's even better. In iOS 26, Visual Intelligence can scan your screen, allowing users to search and take action on anything they're viewing across apps. You can ask ChatGPT questions about content on your screen via Apple Intelligence, and this new feature is accessed by taking a screenshot. When you press the screenshot buttons in iOS 26, you can now save or share the screenshot, or explore more with Visual Intelligence. Visual Intelligence can even pull information from a screenshot and add it to your calendar; it's super useful. If you're like me and take screenshots regularly to remember information, Visual Intelligence in iOS 26 could be the Apple Intelligence feature you've been waiting for.

While this entry isn't a feature per se, this iOS 26 addition is a big one for the future of Apple Intelligence: developers now have access to Apple's Foundation Models. What does that mean exactly? Well, app developers can now "build on Apple Intelligence to bring users new experiences that are intelligent, available when they're offline, and that protect their privacy, using AI inference that is free of cost." At WWDC 2025, Apple showcased an example of an education app using the Apple Intelligence model to generate a quiz from your notes, without any API costs. This framework could completely change the way we as users interact with our favorite third-party apps, since those apps can now tap into Apple's AI models to make the user experience even more intuitive. At the moment, I've not seen many apps take advantage of this new feature, but give it a few weeks, and we'll be sure to list the best examples of Apple Intelligence in third-party applications.
Last but not least, Apple Intelligence is now available in the Shortcuts app. This is a major upgrade to one of the best apps on Apple devices, allowing users to "tap into intelligent actions, a whole new set of shortcuts enabled by Apple Intelligence." I've tried Apple Intelligence-powered shortcuts, and just as with the Shortcuts app in general, the true power here will come down to user creations and how people tap into this new ability. As someone who uses Shortcuts daily, I'm incredibly excited to see how the fantastic community of people who create powerful shortcuts and share them online will use Apple Intelligence's capabilities. This is not an AI improvement everyone is going to use. But if you choose to delve into the world of the Shortcuts app and learn how to get the most from it, this new iOS 26 addition might be the best of the lot.

iOS 26 is now available as a free update for iPhone. Only Apple Intelligence-compatible devices (iPhone 15 Pro and newer) have access to the new AI features, but there's plenty here to be excited about. Whether you want a fresh coat of paint and a lock screen redesigned with Liquid Glass, or the idea of Live Translation makes you excited to use your smartphone on vacation, iOS 26 is a huge step forward for iPhone and well worth the upgrade.

[6]

New Apple Intelligence Features Focus on Daily Use Over AI Gimmicks - Phandroid

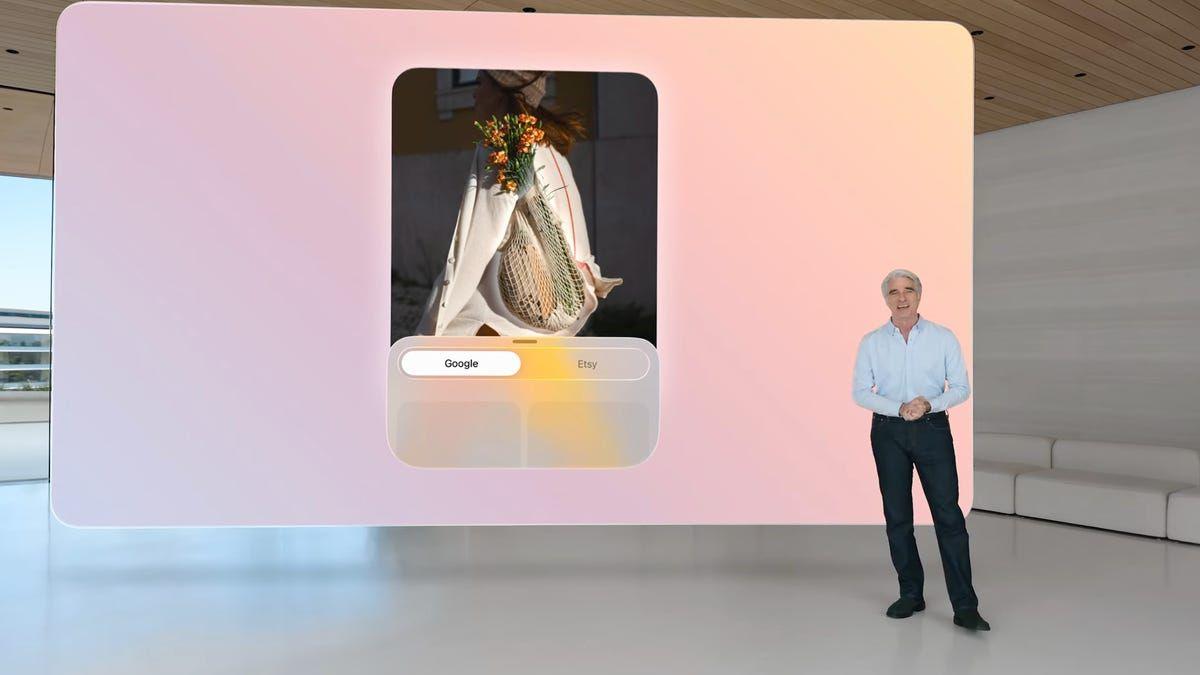

Apple's taking a measured approach to rolling out new Apple Intelligence features, and the September 15 update shows exactly what that looks like. Instead of dumping a dozen half-baked AI tools on users all at once, Apple is selectively adding Live Translation, Visual Intelligence, and Workout Buddy to the mix. These aren't flashy party tricks; they're designed to solve real problems you might run into during your day.

The standout feature has to be Live Translation. Apple isn't just throwing translation into one app and calling it a day. This thing works across Messages, FaceTime calls, regular phone calls, and even with your AirPods for in-person conversations. In Messages, it translates as you type. During FaceTime calls, you get live captions while still hearing the original voice. The AirPods integration lets you trigger translation by pressing both stems, asking Siri, or using the Action button.

Visual Intelligence lets you search and interact with whatever's on your iPhone screen. See something you want to buy? Press the screenshot buttons, highlight the item, and search across Google, eBay, or Etsy. You can translate text directly from your screen, summarize articles, or add events from flyers to your calendar with a single tap.

Workout Buddy analyzes your fitness data and delivers personalized spoken motivation during workouts. It looks at your heart rate, pace, and Activity rings to generate real-time encouragement using voices from Fitness+ trainers.

Apple has also opened up its on-device AI models to developers. Apps like Streaks now suggest tasks automatically, while CARROT Weather offers unlimited AI-powered weather conversations. The new Apple Intelligence features are available now with iOS 26 on supported Apple devices, with support for nine languages and eight more coming soon.

[7]

These New AI Features Are Coming to Your Updated iPhone, iPad and Mac

With Visual Intelligence, users can search their iPhone screen

Apple released its major software update for compatible iPhone, iPad, Apple Watch, Mac, and Vision Pro devices on Monday. The new updates come with multiple new features, customisation options, and the new Liquid Glass design language. Continuing its push into artificial intelligence (AI), the Cupertino-based tech giant has also added several new Apple Intelligence features and upgraded a few existing ones. Among them is the new Live Translation feature, which allows two-way translation of conversations in supported languages.

All the New Apple Intelligence Features Available to Users

On Monday, the tech giant released iOS 26, iPadOS 26, watchOS 26, macOS Tahoe 26, and visionOS 26 updates for all eligible devices, bringing new features and upgrades. The Apple Intelligence suite received special attention from the company, even though it was not mentioned much during the "Awe Dropping" event.

Live Translation, as mentioned above, is now available across the Messages, FaceTime, and Phone apps. The feature instantly translates text and voice conversations in supported languages. It can also be used with AirPods Pro 3 to translate in-person conversations, as long as the earbuds are paired with an Apple Intelligence-enabled iPhone model. While using AirPods, users can activate Live Translation by pressing both earbuds' stems together, saying "Siri, start Live Translation," or pressing the Action Button on the paired iPhone. Active noise cancellation (ANC) lowers the volume of the speaker so that the user can easily hear the translation. Apple says the AI feature will support Chinese (Mandarin, simplified and traditional), Italian, Japanese, and Korean by the end of the year.

Visual Intelligence is also getting an upgrade. Users can now use the feature to search, take action, and answer questions about the content on their iPhone screen.
Apple has partnered with several services, such as Google, eBay, Poshmark, Etsy, and others, to let users quickly look up information online. Additionally, Visual Intelligence supports ChatGPT, enabling it to answer user queries.

A quirky new feature is also coming to Genmoji. Users can now mix emoji and combine them to create a new Genmoji. While making these custom emoji, users can also add a text description to control the output. Apple is also letting users change expressions and modify attributes such as hairstyle when generating Genmoji or images via Image Playground. Additionally, Image Playground now supports ChatGPT, which can be used to explore new art styles such as watercolour and oil painting.

Workout Buddy is another new Apple Intelligence experience, which analyses users' workout data and fitness history to offer personalised, spoken motivation throughout their session. It is available on Apple Watch with paired Bluetooth headphones, with an Apple Intelligence-enabled iPhone nearby. The feature is available in English for multiple workout types.

Finally, the Shortcuts app is being integrated with Apple Intelligence, allowing users to create actions that use the on-device AI features. Users can summarise text with Writing Tools or create images with Image Playground. Alternatively, they can opt for Private Cloud Compute (PCC)-based AI models to access more advanced shortcuts. "For example, users can create powerful Shortcuts like comparing an audio transcription to typed notes, summarising documents by their contents, extracting information from a PDF and adding key details to a spreadsheet, and more," the company said in a post.

Apple's latest iOS 26 update introduces significant AI-powered features, including Live Translation, Visual Intelligence improvements, and developer access to Apple Intelligence models, marking a substantial step in the company's AI strategy.

Apple's AI Revolution: iOS 26 Brings Intelligent Features to iPhones

Apple's latest iOS 26 update marks a significant milestone in the company's artificial intelligence journey, introducing a range of AI-powered features that enhance user experience and productivity. While the update's headline feature is the Liquid Glass interface overhaul, the new Apple Intelligence capabilities are stealing the spotlight for their practical applications and potential to transform how users interact with their devices [1][2][3].

Source: Gadgets 360

Live Translation: Breaking Language Barriers

One of the standout features in iOS 26 is Live Translation, which has been integrated into Messages, FaceTime, and the Phone app. This feature allows for real-time translation of text messages and spoken conversations, effectively removing language barriers in digital communication [4][5].

Live Translation supports multiple languages, including English, French, German, Portuguese, and Spanish. Its integration with AirPods Pro 3, AirPods Pro 2, and AirPods 4 with Active Noise Cancellation is particularly noteworthy, as it lets users hear translations directly in their ears during in-person conversations, creating a more natural conversational experience [1][4].

Source: Phandroid

Visual Intelligence: Smarter Screen Analysis

Apple has significantly enhanced its Visual Intelligence feature in iOS 26. Previously limited to camera-captured images, Visual Intelligence can now analyze screenshots and on-screen content across various apps. This upgrade allows users to interact with information more intuitively, such as automatically adding events to calendars from screenshots or asking ChatGPT questions about on-screen content [3][5].

Source: Tom's Guide

Developer Access to Apple Intelligence Models

In a move that could dramatically expand the AI capabilities of third-party apps, Apple is now granting developers access to its Foundation Models. This allows app creators to integrate Apple Intelligence into their products, potentially leading to a new wave of AI-enhanced applications that can perform tasks offline while maintaining user privacy [5].

AI-Powered Shortcuts and Creative Tools

The Shortcuts app in iOS 26 now incorporates Apple Intelligence, enabling users to create more sophisticated automated workflows. Additionally, improvements to Genmoji and Image Playground introduce new creative possibilities, including emoji generation from text descriptions and ChatGPT-powered image styles [2][5].

Apple's AI Strategy: Measured Progress

While some critics argue that Apple lags behind competitors like Google and Samsung in AI implementation, the company's approach appears to be one of measured, thoughtful progress. The improvements in iOS 26, particularly in Visual Intelligence and Live Translation, demonstrate Apple's commitment to enhancing practical, everyday features rather than chasing headline-grabbing AI demonstrations [3].

As the AI landscape continues to evolve rapidly, Apple's strategy of focusing on user privacy, on-device processing, and gradual feature enhancement may prove to be a sustainable approach in the long run. With the release of iOS 26 and the upcoming iPhone 17 series, Apple is positioning itself to make significant strides in the AI arena while maintaining its core principles of user-centric design and data protection [1][3][5].

Summarized by Navi
