Artists Take Action to Protect Their Work from Generative AI

4 Sources

[1]

Artists are taking things into their own hands to protect their work from generative AI

[2]

Artists are taking things into their own hands to protect their work from generative AI

[3]

Artists are taking things into their own hands to protect their work from generative AI

LOS ANGELES (AP) -- The oil painting depicts a woman standing on a podium, her arm aloft as she grasps a laurel crown in her hand. A scarlet cloak drapes across her chest as she stares at the viewer. To the naked eye, the painting looks like a normal piece in an online portfolio.

But the version of the painting uploaded online conceals a hidden defense system -- a tool called Glaze that masks the artist's style and cloaks the art from use by generative AI.

As image-generating AI continues to evolve, artists have increasingly fought against what they see as an existential threat to their craft on multiple fronts: through lawsuits, in public statements calling for regulations and now, with programs aimed at protecting their art from being scraped and emulated without their permission.

Created by researchers from the University of Chicago, Glaze and a second program, Nightshade, essentially poison the well of art uploaded online in an attempt to scramble what AI sees. Where Glaze subtly changes an image so AI perceives it as a different art style, Nightshade is a more "offensive" tool that attempts to confuse an AI training model about what is in an image.

The idea, university researchers said, is to provide a technical solution to stop the "malicious" use of AI models while also protecting creators. The team working on Glaze and Nightshade noted that their tools serve as a safeguard in a space that lacks regulation rather than as a comprehensive solution.

Where lawsuits and government regulations might force tech giants like Microsoft or OpenAI to change how they operate, smaller AI players outside of the United States might not follow suit, researchers said. In those instances, the AI scrambling tools will still be useful.

"Regulations, strikes are very important and arguably launch a much more profound, long lasting effect on this entire landscape," said Shawn Shan, the lead student working on Glaze and Nightshade. "But I think we see Glaze, and specifically Nightshade, as more leverage."

For Karla Ortiz, the San Francisco-based artist behind the oil painting and the first person to publicly use Glaze, the intersection of art and AI "all boils down to consent."

"It's just deeply unfair to work your whole life to train, to learn to be able to do the things that we do to find our own voice as artists, and then to have someone take that voice, make a mimic of it, and say, 'Oh, actually, this is ours,'" she said. "Artists need a way to be able to exist online."

Ortiz is one of three artists seeking to protect their copyrights and careers by suing makers of AI tools that can generate new imagery on command. The suit against Stability AI, the London-based maker of text-to-image generator Stable Diffusion, alleges that the AI image-generators violate the rights of millions of artists by ingesting huge troves of digital images and then producing derivative works that compete against the originals.

Ortiz, a concept artist and illustrator in the entertainment industry who has worked on movies including "Rogue One: A Star Wars Story" and "Doctor Strange," said technology like Glaze is key to protecting artists.

"It's this disgusting cycle of tech saying we own what is yours, but you don't get to have a say in how you use your work," said Ortiz. "What you post online, that's ours. And we're also going to compete in your markets. And we're gunning for your job, which is essentially what's happening."

Experts say anti-AI tools do provide some protection by making it harder for people trying to use AI to mimic an artist's style, but they don't eradicate the problem. As AI models evolve, they will likely become harder to attack or throw off.

"When AI becomes stronger and stronger, these anti-AI tools will become weaker and weaker," said Jinghui Chen, an assistant professor at Pennsylvania State University who co-authored a study on the effectiveness of tools like Glaze. "But I recognize that as a first step."

It's important, Chen said, to raise questions about the efficacy of these types of tools in order to improve them.

Shan, the University of Chicago researcher, agreed that the anti-AI tools are "far from future-proof."

"But this is the case for most of the security mechanisms that we see in the digital age," he said. "Firewalls, they are not perfect. There are many ways to bypass them. But most people still use firewalls to stop a good amount of these attacks, these types of problems. So we see Glaze or Nightshade similarly."

Artists shouldn't "blindly trust" that all their problems will be solved by those tools, he added.

Renato Roldan, an artist who uses Glaze before uploading his work to his portfolio, said that he's hesitant to update as much as he used to because "we are super exposed now" with AI. He worries about how generated art will shift the paradigm on how art is consumed and created, he said, likening an image created by AI to a diluted version of art created by a person.

"If you do a photocopy of something, it starts to deteriorate every time you copy it," said Roldan, a former video game art director who now works in narrative and storyboarding.

One of the biggest consequences of unregulated AI, he said, is that it has made it harder for new artists who are just finishing school to break into the field because they have to compete with generated art.

"They'd usually find a learning curve that was a little bit hard," he said. "But now they are finding that curve is not a curve. It's just a wall."

[4]

Artists are taking things into their own hands to protect their work from generative AI

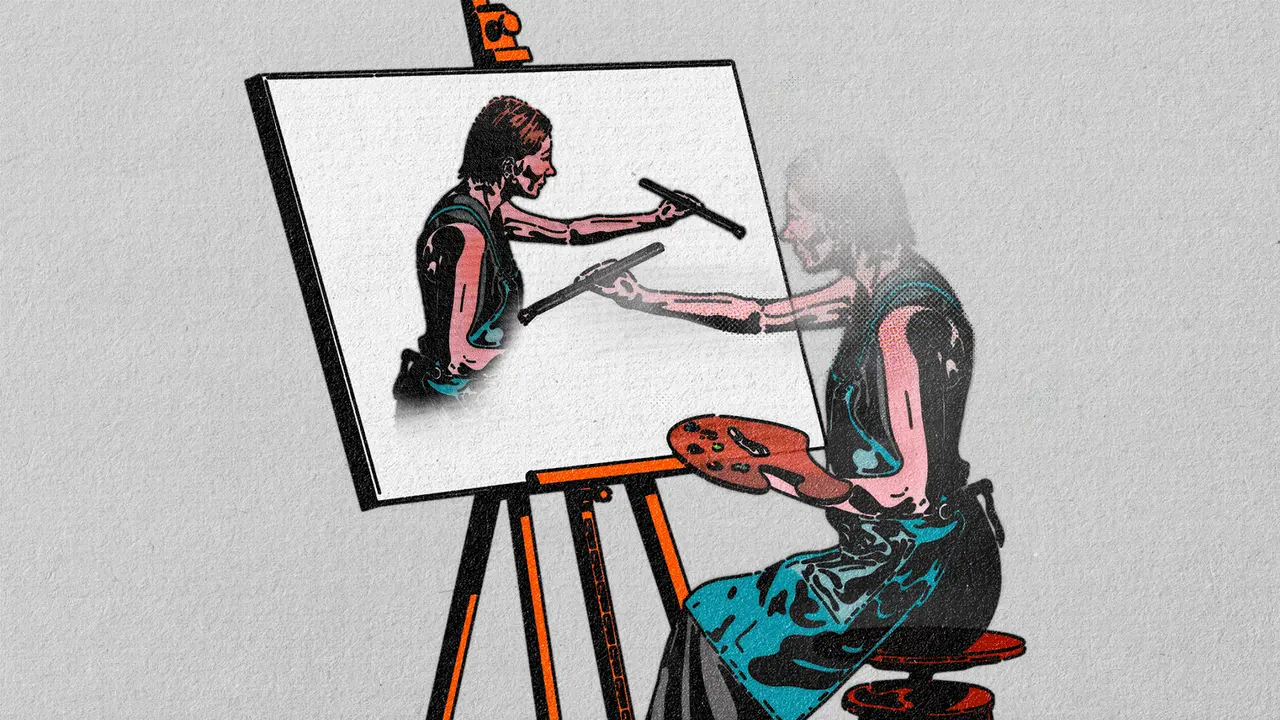

As generative AI threatens artists' livelihoods, artists are turning to tools built to safeguard their work. These digital defenses aim to keep AI models from replicating an artist's style without permission.

The Rise of AI-Generated Art and Its Impact

The rapid advancement of generative artificial intelligence (AI) has sparked concern among artists worldwide. These AI systems, capable of creating images based on text prompts, have been trained on vast amounts of online data, including copyrighted artworks. This has led to a growing fear among artists that their unique styles and creations could be replicated without permission or compensation [1].

Artists' Innovative Responses

In response to this threat, artists are adopting technical tools to protect their work. Two notable tools, both created by researchers at the University of Chicago, have emerged:

- Glaze: Developed by researchers at the University of Chicago, Glaze subtly alters images in ways imperceptible to the human eye but confusing to AI systems. When AI attempts to mimic a "glazed" image, it produces drastically different results [2].

- Nightshade: A more aggressive tool, Nightshade not only protects artists' work but can potentially "poison" AI datasets. It alters images so that AI models interpret them incorrectly, potentially causing the AI to produce corrupted outputs [3].

The Ethical Debate

The development of these tools has sparked a debate about the ethics of AI art generation. While some argue that using publicly available images for AI training is fair use, others contend that it infringes on artists' rights and livelihoods. Ben Zhao, a professor of computer science at the University of Chicago, likens the situation to "going to war," emphasizing the need for artists to protect their interests [4].

Legal and Industry Responses

The controversy has also prompted legal action. A group of artists filed a lawsuit against Stability AI and Midjourney, alleging copyright infringement. Meanwhile, some companies are taking proactive steps. Adobe, for instance, has implemented an opt-out system for its generative AI tools and is exploring ways to compensate artists whose work contributes to AI training [1].

The Future of AI and Art

As the battle between artists and AI developers continues, the future remains uncertain. Some artists view AI as a potential tool for creativity, while others see it as a threat to their profession. The development of protective tools like Glaze and Nightshade represents a significant step in this ongoing struggle, potentially reshaping the landscape of digital art and AI development [3].

Summarized by Navi
